K8S: Collecting Pod Application Logs in Kubernetes with Filebeat

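1. Environment

Before we had a Kubernetes cluster, application logs were collected and displayed through the pipeline filebeat -> redis -> logstash -> es -> kibana. After moving to Kubernetes, log collection has to be solved again. This article only covers the info and error text logs produced by Java applications running in pods.

Log collection approach in the Kubernetes cluster

We use the sidecar pattern: Filebeat is deployed in the same pod as the application. The application writes its logs to a shared volume (an emptyDir in this setup), and the Filebeat container mounts the same volume to read them.

The collection pipeline stays the same: filebeat -> redis -> logstash -> es -> kibana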

2. Building the Filebeat Image with a Dockerfile

Note: the installation and deployment of logstash, es, and kibana are omitted here.

(1) Write the Dockerfile

The Dockerfile is as follows:

FROM centos:centos7.4.1708
WORKDIR /usr/share/filebeat
COPY filebeat-7.10.2-linux-x86_64.tar.gz /usr/share
RUN cd /usr/share && \
    tar -xzf filebeat-7.10.2-linux-x86_64.tar.gz -C /usr/share/filebeat --strip-components=1 && \
    rm -f filebeat-7.10.2-linux-x86_64.tar.gz && \
    chmod +x /usr/share/filebeat
ADD ./docker-entrypoint.sh /usr/bin/
RUN chmod +x /usr/bin/docker-entrypoint.sh
ENTRYPOINT ["docker-entrypoint.sh"]
CMD ["/usr/share/filebeat/filebeat","-e","-c","/etc/filebeat/filebeat.yml"]

(2) Create docker-entrypoint.sh in the same directory

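#!/bin/bash
set -e

# PATHS is an optional environment variable holding a log path
TMP=${PATHS}
# Config file that the CMD in the Dockerfile actually loads
config=/etc/filebeat/filebeat.yml

# Quote the path when it is absolute; the quoted test avoids a
# "unary operator expected" error when PATHS is unset
if [ "${TMP:0:1}" = '/' ]; then
    tmp='"'${PATHS}'"'
fi

# Print the environment for troubleshooting (this may expose secrets in the container log)
env
echo 'Filebeat init process done. Ready for start up.'
echo "Using the following configuration:"
cat "$config"
exec "$@"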
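
The absolute-path check in the entrypoint can be isolated into a small function, which makes it easy to try outside the container. This is only a sketch: `quote_if_absolute` is a hypothetical name that does not exist in the image, and it uses a POSIX `case` pattern instead of the bash substring expansion in the script above.

```shell
#!/bin/sh
# quote_if_absolute: hypothetical helper, not part of the original image.
# Prints the argument wrapped in double quotes when it starts with "/",
# otherwise prints it unchanged.
quote_if_absolute() {
    case "$1" in
        /*) printf '"%s"\n' "$1" ;;
        *)  printf '%s\n' "$1" ;;
    esac
}
```

Calling `quote_if_absolute "$PATHS"` with `PATHS=/logs/app.log` prints the path in double quotes; an empty or relative value passes through untouched.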

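(3) Download the Filebeat binary package into the same directory

Download Filebeat from the official site:

https://www.elastic.co/cn/downloads/beats/filebeat

The directory should end up containing the following files:

[root@k8s01 filebeat]# ls
docker-entrypoint.sh Dockerfile filebeat-7.10.2-linux-x86_64.tar.gz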

(4) Build and push the Filebeat image

[root@k8s01 filebeat]# docker build -t harbor.yishoe.com/ops/filebeat-7.10.2:1.0 .
[root@k8s01 filebeat]# docker push harbor.yishoe.com/ops/filebeat-7.10.2:1.0
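
Before deploying, it can be worth confirming that the Filebeat binary inside the image actually runs. The sketch below assumes Docker is available locally; `smoke_test_image` and the `DOCKER` override variable are hypothetical names introduced here. It bypasses docker-entrypoint.sh with `--entrypoint` and asks the binary for its version.

```shell
#!/bin/sh
# DOCKER can be overridden, for example DOCKER=echo for a dry run.
DOCKER=${DOCKER:-docker}

# smoke_test_image: hypothetical helper; runs "filebeat version" in a
# throwaway container created from the given image reference.
smoke_test_image() {
    "$DOCKER" run --rm --entrypoint /usr/share/filebeat/filebeat "$1" version
}
```

For example, `smoke_test_image harbor.yishoe.com/ops/filebeat-7.10.2:1.0` should print the Filebeat version line if the tarball was unpacked correctly.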

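3. Deploying the Filebeat Configuration

The ConfigMap below collects the logs of the FileSchedule pod application and ships them to Redis.

For the Filebeat configuration options, see the official documentation:

https://www.elastic.co/guide/en/beats/filebeat/7.10/configuration-filebeat-options.html

[root@k8s01 filebeat]# vim file_schedule_configmap.yaml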

apiVersion: v1
kind: ConfigMap
metadata:
  # Must match the configMap name referenced by the Deployment
  name: file-schedule-filebeat-config
data:
  filebeat.yml: |-
    filebeat.inputs:
    - type: log
      enabled: true
      exclude_lines: ['^$']
      # Lines that do not start with a timestamp are appended to the previous event
      multiline:
        pattern: '^\d{4}-\d{1,2}-\d{1,2}\s\d{1,2}:\d{1,2}:\d{1,2}'
        negate: true
        match: after
      paths:
        - "/logs/saas/YT.CreditProject.FileSchedule/log_info.log"
      fields:
        log_source: fileschedule_info
    - type: log
      enabled: true
      exclude_lines: ['^$']
      multiline:
        pattern: '^\d{4}-\d{1,2}-\d{1,2}\s\d{1,2}:\d{1,2}:\d{1,2}'
        negate: true
        match: after
      paths:
        - "/logs/saas/YT.CreditProject.FileSchedule/log_error.log"
      fields:
        log_source: fileschedule_error
    output.redis:
      hosts: ["172.16.1.11:30004"]
      password: "******"
      key: "sjyt_elk_redis"
      # Filebeat's Redis output names this option datatype (Logstash uses data_type)
      datatype: "list"
      db: 4
    processors:
      - drop_fields:
          fields:
            - agent.ephemeral_id
            - agent.hostname
            - agent.id
            - agent.type
            - agent.version
            - ecs.version
            - input.type
            - log.offset
            - version
      - decode_json_fields:
          fields:
            - message
          max_depth: 1
          overwrite_keys: true
    # The template and ILM settings only take effect with the Elasticsearch output
    setup.ilm.enabled: false
    setup.template.name: smc-gateway-log
    setup.template.pattern: smc-gateway-*
    setup.template.overwrite: true
    setup.template.enabled: true
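
With `negate: true` and `match: after`, every line that does not start with a timestamp is appended to the preceding event, so a Java stack trace stays attached to its log line. A rough way to check the pattern against a sample log is to count timestamp-led lines with `grep`. This is a sketch under assumptions: `count_events` is a hypothetical helper, the pattern is rewritten in POSIX ERE because `grep -E` does not understand `\d` or `\s`, and the count only equals the number of Filebeat events when the input begins with a timestamped line.

```shell
#!/bin/sh
# POSIX ERE translation of the multiline pattern (\d -> [0-9], \s -> [[:space:]]).
EVENT_START='^[0-9]{4}-[0-9]{1,2}-[0-9]{1,2}[[:space:]][0-9]{1,2}:[0-9]{1,2}:[0-9]{1,2}'

# count_events: hypothetical helper; reads log text on stdin and prints the
# number of lines that would start a new multiline event.
count_events() {
    # grep -c exits non-zero when the count is 0, so mask the exit status
    grep -cE "$EVENT_START" || true
}
```

Running `count_events < log_error.log` on a copy of the application log gives a quick estimate of how many events to expect in Redis.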

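4. Writing the Application YAML

app-logs: an emptyDir volume shared by both containers. The application writes its logs into it (mounted at /home/logs), and the Filebeat container mounts the same volume at /logs to collect them.

filebeat-config: the Filebeat configuration from the ConfigMap, mounted at /etc/filebeat. The CMD in the Dockerfile starts Filebeat with /etc/filebeat/filebeat.yml.

[root@k8s01 pipeline_test]# vim file_schedule.yaml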

# apps/v1beta1 was removed in Kubernetes 1.16; use apps/v1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: file-schedule
spec:
  replicas: 1
  # apps/v1 requires a selector that matches the pod template labels
  selector:
    matchLabels:
      app: file-schedule
  template:
    metadata:
      labels:
        app: file-schedule
    spec:
      terminationGracePeriodSeconds: 10
      containers:
      - image: harbor.yishoe.com/ops/filebeat-7.10.2:1.0
        imagePullPolicy: Always
        name: filebeat
        volumeMounts:
        - name: app-logs
          mountPath: /logs
        - name: filebeat-config
          mountPath: /etc/filebeat
      - name: file-schedule-app
        image: harbor.yishoe.com/jar/file_schedule:11
        ports:
        - containerPort: 8080
          name: web
        volumeMounts:
        - name: app-logs
          mountPath: /home/logs
      volumes:
      - name: app-logs
        emptyDir: {}
      - name: filebeat-config
        configMap:
          name: file-schedule-filebeat-config
---
apiVersion: v1
kind: Service
metadata:
  name: file-schedule
spec:
  type: NodePort
  ports:
  - port: 8080
    targetPort: 8080
    nodePort: 30024
  selector:
    app: file-schedule
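
Applying the manifests and checking the sidecar could look like the sketch below. It assumes `kubectl` is configured for the cluster and that both YAML files sit in the current directory (in this article they were edited in different directories). The function names and the `KUBECTL` override variable are hypothetical.

```shell
#!/bin/sh
# KUBECTL can be overridden, for example KUBECTL=echo for a dry run.
KUBECTL=${KUBECTL:-kubectl}

# deploy_file_schedule: hypothetical helper; applies the ConfigMap first so
# the volume can be mounted, then the Deployment and Service, then waits
# for the rollout to finish.
deploy_file_schedule() {
    "$KUBECTL" apply -f file_schedule_configmap.yaml &&
    "$KUBECTL" apply -f file_schedule.yaml &&
    "$KUBECTL" rollout status deployment/file-schedule
}

# filebeat_logs: shows the sidecar's output; -c selects the filebeat
# container inside the pod, and the optional argument limits the line count.
filebeat_logs() {
    "$KUBECTL" logs deployment/file-schedule -c filebeat --tail="${1:-50}"
}
```

After `deploy_file_schedule` succeeds, `filebeat_logs 100` should show the configuration printed by docker-entrypoint.sh followed by Filebeat's own startup messages.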

5. Verifying Log Collection

(1) Configure Logstash to read from Redis and write to ES

input {
  redis {
    host => "172.16.1.11"
    port => 30004
    password => "*********"
    # Must match the key set in Filebeat's output.redis
    key => "sjyt_elk_redis"
    data_type => "list"
    db => 4
  }
}
output {
  # Route each event by the log_source field that Filebeat attached to it
  if [fields][log_source] == 'fileschedule_info' {
    elasticsearch {
      hosts => ["172.16.1.15:6200"]
      index => "fileschedule_info-%{+YYYY.MM.dd}"
      user => "elastic"
      password => "*********"
    }
  }
  if [fields][log_source] == 'fileschedule_error' {
    elasticsearch {
      hosts => ["172.16.1.15:6200"]
      index => "fileschedule_error-%{+YYYY.MM.dd}"
      user => "elastic"
      password => "********"
    }
  }
}

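Note: index names must be entirely lowercase. If the name contains an uppercase letter, Elasticsearch rejects it and the Logstash log shows an error.

The error looks like this: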

"error"=>{"type"=>"invalid_index_name_exception", "reason"=>"Invalid index name [fileSchedule_info-2021.03.02], must be lowercase", "index_uuid"=>"_na_", "index"=>"fileSchedule_info-2021.03.02"}}}}

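
Since the index name is derived from the log source, one way to avoid this error is to lowercase the prefix before using it. The sketch below shows the idea in shell; `to_index_name` is a hypothetical helper, and the date suffix mimics the `YYYY.MM.dd` layout of the Logstash index setting.

```shell
#!/bin/sh
# to_index_name: hypothetical helper; lowercases the given prefix and appends
# a date suffix (second argument, or today's date when omitted).
to_index_name() {
    prefix=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
    printf '%s-%s\n' "$prefix" "${2:-$(date +%Y.%m.%d)}"
}
```

For example, `to_index_name fileSchedule_info 2021.03.02` turns the rejected name from the error above into a valid lowercase one.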
If Filebeat reports no errors, Redis contains data, and Logstash consumes the Redis data without errors, continue to the next step.

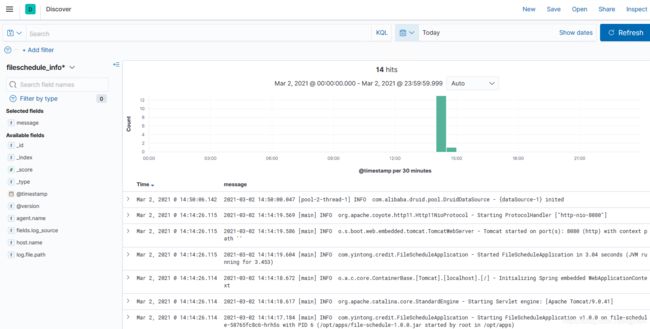
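
To check whether events are reaching Redis, the length of the list that Filebeat writes to can be queried with `redis-cli`. The following is a sketch, not a required step: `redis_queue_len` and the `REDIS_CLI` override variable are hypothetical, the defaults are taken from the Filebeat configuration in this article, and the password is assumed to be supplied through the `REDISCLI_AUTH` environment variable, which recent `redis-cli` versions read.

```shell
#!/bin/sh
# REDIS_CLI can be overridden, for example REDIS_CLI=echo for a dry run.
REDIS_CLI=${REDIS_CLI:-redis-cli}

# redis_queue_len: hypothetical helper; prints the number of events waiting
# in the list. Arguments (all optional): host, port, db number, key.
redis_queue_len() {
    "$REDIS_CLI" -h "${1:-172.16.1.11}" -p "${2:-30004}" -n "${3:-4}" \
        LLEN "${4:-sjyt_elk_redis}"
}
```

A value that keeps growing suggests Logstash is not consuming the list; a value that stays near zero while logs are being written usually means Logstash is keeping up.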

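(2) Add the index pattern in Kibana

Index patterns -> Create index pattern

The collected logs are then displayed in Kibana.

With that, collecting application logs with Filebeat in the K8S cluster is fully configured.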