K8S Cluster Deployment: Prometheus Monitoring with DingTalk Alerts


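I. Background

This article builds on the earlier post "K8S Cluster Binary Deployment: Prometheus Monitoring and Alerting".

That post implemented DingTalk email alerting. Because at work we use DingTalk alerts integrated with Zabbix, this article likewise has Prometheus send its alerts through dingtalk.

The DingTalk webhook uses the open-source project: https://github.com/timonwong/prometheus-webhook-dingtalk.git

II. Deploying dingtalk-hook

Note: create the DingTalk robot yourself first.

You end up with a webhook URL that carries a token, for example: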

https://oapi.dingtalk.com/robot/send?access_token=****************
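Before wiring anything into Kubernetes, it can help to see what a request to this URL looks like. The following is a minimal sketch of the JSON body for a DingTalk robot text message; the function name `build_text_message` is hypothetical, and the sketch only builds the body without sending it.

```python
import json


def build_text_message(content: str, at_all: bool = False) -> dict:
    """Build the JSON body for a DingTalk robot text message (illustrative helper)."""
    return {
        "msgtype": "text",
        "text": {"content": content},
        "at": {"isAtAll": at_all},
    }


if __name__ == "__main__":
    body = build_text_message("Prometheus webhook test")
    # Print the body; to send it, POST this JSON to the robot URL
    # with the header Content-Type: application/json.
    print(json.dumps(body, ensure_ascii=False))
```

If the robot is configured with a keyword or signature check, a plain test message like this one may be rejected until that check is satisfied.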

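①. Write the dingtalk-hook.yaml file

Note: you can build the Docker image yourself from the Dockerfile, or use a prebuilt image.

1.1 Building the image from the Dockerfile

Download the source: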

git clone https://github.com/timonwong/prometheus-webhook-dingtalk.git

Dockerfile

ARG ARCH="amd64"
ARG OS="linux"
FROM quay.io/prometheus/busybox-${OS}-${ARCH}:latest
LABEL maintainer="Timon Wong "

ARG ARCH="amd64"
ARG OS="linux"
COPY .build/${OS}-${ARCH}/prometheus-webhook-dingtalk /bin/prometheus-webhook-dingtalk
COPY config.example.yml /etc/prometheus-webhook-dingtalk/config.yml
COPY contrib /etc/prometheus-webhook-dingtalk/
COPY template/default.tmpl /etc/prometheus-webhook-dingtalk/templates/default.tmpl

RUN mkdir -p /prometheus-webhook-dingtalk && \
    chown -R nobody:nobody /etc/prometheus-webhook-dingtalk /prometheus-webhook-dingtalk

USER nobody
EXPOSE 8060
VOLUME [ "/prometheus-webhook-dingtalk" ]
WORKDIR /prometheus-webhook-dingtalk
ENTRYPOINT [ "/bin/prometheus-webhook-dingtalk" ]
CMD [ "--config.file=/etc/prometheus-webhook-dingtalk/config.yml" ]

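Build the image and push it to Harbor, and it is ready to use.

1.2 Using a prebuilt Docker image

Download it from Baidu Netdisk:

Link: https://pan.baidu.com/s/1Zj9toi7wjvqNUwon4z4Q-g

Extraction code: trc5

Load the image: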

[root@k8s01 dingtalk-webhook]# docker load -i prometheus_dingtalk.tar
[root@k8s01 dingtalk-webhook]# docker tag registry.cn-hangzhou.aliyuncs.com/shooer/by_docker_shooter:prometheus_dingtalk_v0.2 harbor.example.com/ops/prometheus_dingtalk

The deployment YAML for dingtalk-hook:

[root@k8s01 dingtalk-webhook]# cat dingtalk-hook.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: webhook-dingtalk
  name: webhook-dingtalk
  namespace: monitoring
  # must be in the same namespace as alertmanager
spec:
  replicas: 1
  selector:
    matchLabels:
      app: webhook-dingtalk
  template:
    metadata:
      labels:
        app: webhook-dingtalk
    spec:
      containers:
      - image: harbor.example.com/ops/prometheus_dingtalk
        name: webhook-dingtalk
        # IfNotPresent is a pull policy, not a pull-secret name
        imagePullPolicy: IfNotPresent
        args:
        - --web.listen-address=:8060
        - --config.file=/etc/prometheus-webhook-dingtalk/config.yml
        volumeMounts:
        - name: webdingtalk-configmap
          mountPath: /etc/prometheus-webhook-dingtalk/
        - name: webdingtalk-template
          mountPath: /etc/prometheus-webhook-dingtalk/templates/
        ports:
        - containerPort: 8060
          protocol: TCP
      volumes:
      - name: webdingtalk-configmap
        configMap:
          name: dingtalk-config
      - name: webdingtalk-template
        configMap:
          name: dingtalk-template
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: webhook-dingtalk
  name: webhook-dingtalk
  namespace: monitoring
  # must be in the same namespace as alertmanager
spec:
  ports:
  - name: http
    port: 8060
    protocol: TCP
    targetPort: 8060
  selector:
    app: webhook-dingtalk
  type: ClusterIP

②. DingTalk robot configuration file, read by dingtalk-webhook

[root@k8s01 dingtalk-webhook]# cat dingtalk-configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: dingtalk-config
  namespace: monitoring
  labels:
    app: dingtalk-config
data:
  config.yml: |
    templates:
      - /etc/prometheus-webhook-dingtalk/templates/default.tmpl
    targets:
      webhook:
        url: https://oapi.dingtalk.com/robot/send?access_token=**********
        secret: SECcef7ffa8990cdd29b9d0cbe5c08b121cf7db # no need to modify
        message:
          title: '{{ template "ding.link.title" . }}'
          text: '{{ template "ding.link.content" . }}'
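The `secret` field is what the webhook uses to sign requests when the robot has signature verification enabled. As a rough sketch of that scheme, assuming DingTalk's documented approach (HMAC-SHA256 over the timestamp, a newline, and the secret, then Base64 and URL encoding), the signed URL can be derived as follows. The function name `sign_webhook_url` and the sample token and secret are hypothetical.

```python
import base64
import hashlib
import hmac
import time
import urllib.parse
from typing import Optional


def sign_webhook_url(url: str, secret: str, timestamp_ms: Optional[int] = None) -> str:
    """Append timestamp and sign query parameters to a DingTalk robot URL."""
    if timestamp_ms is None:
        timestamp_ms = int(time.time() * 1000)  # milliseconds since epoch
    # The string to sign is "<timestamp>\n<secret>", keyed with the secret itself.
    string_to_sign = f"{timestamp_ms}\n{secret}"
    digest = hmac.new(
        secret.encode("utf-8"), string_to_sign.encode("utf-8"), hashlib.sha256
    ).digest()
    sign = urllib.parse.quote_plus(base64.b64encode(digest))
    return f"{url}&timestamp={timestamp_ms}&sign={sign}"


if __name__ == "__main__":
    # Placeholder token and secret, for illustration only.
    base = "https://oapi.dingtalk.com/robot/send?access_token=TOKEN"
    print(sign_webhook_url(base, "SECexample"))
```

With prometheus-webhook-dingtalk you do not need to run this yourself; it only illustrates why the secret in the ConfigMap should match the one shown in the robot's security settings.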

③. DingTalk alert template

[root@k8s01 dingtalk-webhook]# cat dingtalk-template.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: dingtalk-template
  namespace: monitoring
  labels:
    app: dingtalk-template
data:
  default.tmpl: |
    {{ define "__subject" }}[{{ .Status | toUpper }}{{ if eq .Status "firing" }}:{{ .Alerts.Firing | len }}{{ end }}] {{ .GroupLabels.SortedPairs.Values | join " " }} {{ if gt (len .CommonLabels) (len .GroupLabels) }}({{ with .CommonLabels.Remove .GroupLabels.Names }}{{ .Values | join " " }}{{ end }}){{ end }}{{ end }}
    {{ define "__alertmanagerURL" }}{{ .ExternalURL }}/#/alerts?receiver={{ .Receiver }}{{ end }}

    {{ define "__text_alert_list" }}{{ range . }}
    **Labels**
    {{ range .Labels.SortedPairs }}> - {{ .Name }}: {{ .Value | markdown | html }}
    {{ end }}
    **Annotations**
    {{ range .Annotations.SortedPairs }}> - {{ .Name }}: {{ .Value | markdown | html }}
    {{ end }}
    **Source:** [{{ .GeneratorURL }}]({{ .GeneratorURL }})
    {{ end }}{{ end }}

    {{ define "default.__text_alert_list" }}{{ range . }}
    ---
    **Severity:** {{ .Labels.severity | upper }}

    **Team:** {{ .Labels.team | upper }}

    **Started at:** {{ dateInZone "2006.01.02 15:04:05" (.StartsAt) "Asia/Shanghai" }}

    **Event details:**
    {{ range .Annotations.SortedPairs }}> - {{ .Name }}: {{ .Value | markdown | html }}
    {{ end }}

    **Event labels:**
    {{ range .Labels.SortedPairs }}{{ if and (ne (.Name) "severity") (ne (.Name) "summary") (ne (.Name) "team") }}> - {{ .Name }}: {{ .Value | markdown | html }}
    {{ end }}{{ end }}
    {{ end }}
    {{ end }}

    {{ define "default.__text_alertresovle_list" }}{{ range . }}
    ---
    **Severity:** {{ .Labels.severity | upper }}

    **Team:** {{ .Labels.team | upper }}

    **Started at:** {{ dateInZone "2006.01.02 15:04:05" (.StartsAt) "Asia/Shanghai" }}

    **Ended at:** {{ dateInZone "2006.01.02 15:04:05" (.EndsAt) "Asia/Shanghai" }}

    **Event details:**
    {{ range .Annotations.SortedPairs }}> - {{ .Name }}: {{ .Value | markdown | html }}
    {{ end }}

    **Event labels:**
    {{ range .Labels.SortedPairs }}{{ if and (ne (.Name) "severity") (ne (.Name) "summary") (ne (.Name) "team") }}> - {{ .Name }}: {{ .Value | markdown | html }}
    {{ end }}{{ end }}
    {{ end }}
    {{ end }}

    {{/* Default */}}
    {{ define "default.title" }}{{ template "__subject" . }}{{ end }}
    {{ define "default.content" }}#### \[{{ .Status | toUpper }}{{ if eq .Status "firing" }}:{{ .Alerts.Firing | len }}{{ end }}\] **[{{ index .GroupLabels "alertname" }}]({{ template "__alertmanagerURL" . }})**
    {{ if gt (len .Alerts.Firing) 0 -}}

    **====Fault detected====**
    {{ template "default.__text_alert_list" .Alerts.Firing }}
    {{- end }}
    {{ if gt (len .Alerts.Resolved) 0 -}}
    {{ template "default.__text_alertresovle_list" .Alerts.Resolved }}
    {{- end }}
    {{- end }}

    {{/* Legacy */}}
    {{ define "legacy.title" }}{{ template "__subject" . }}{{ end }}
    {{ define "legacy.content" }}#### \[{{ .Status | toUpper }}{{ if eq .Status "firing" }}:{{ .Alerts.Firing | len }}{{ end }}\] **[{{ index .GroupLabels "alertname" }}]({{ template "__alertmanagerURL" . }})**
    {{ template "__text_alert_list" .Alerts.Firing }}
    {{- end }}

    {{/* Following names for compatibility */}}
    {{ define "ding.link.title" }}{{ template "default.title" . }}{{ end }}
    {{ define "ding.link.content" }}{{ template "default.content" . }}{{ end }}

④. Deploy dingtalk-hook

[root@k8s01 dingtalk-webhook]# ls -l
total 12
-rw-r--r-- 1 root root  525 Mar 18 14:14 dingtalk-configmap.yaml
-rw-r--r-- 1 root root 1394 Mar 18 14:21 dingtalk-hook.yaml
-rw-r--r-- 1 root root 3696 Mar 18 14:21 dingtalk-template.yaml
[root@k8s01 dingtalk-webhook]# kubectl apply -f dingtalk-configmap.yaml
configmap/dingtalk-config created
[root@k8s01 dingtalk-webhook]# kubectl apply -f dingtalk-template.yaml
configmap/dingtalk-template created
[root@k8s01 dingtalk-webhook]# kubectl apply -f dingtalk-hook.yaml
deployment.apps/webhook-dingtalk created
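Once the pod is running, the webhook can be smoke-tested without waiting for a real alert by posting a hand-made payload in the Alertmanager webhook format. The sketch below only builds such a payload; `build_test_alert` and the two `*.example` URLs are hypothetical, and the field set is an assumption based on version 4 of the Alertmanager webhook format.

```python
import json
from datetime import datetime, timezone


def build_test_alert(alertname: str, severity: str = "warning",
                     message: str = "test alert") -> dict:
    """Build a synthetic Alertmanager webhook payload with one firing alert."""
    now = datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    labels = {"alertname": alertname, "severity": severity}
    annotations = {"message": message}
    return {
        "version": "4",
        "groupKey": '{}:{alertname="%s"}' % alertname,
        "status": "firing",
        "receiver": "webhook",
        "groupLabels": {"alertname": alertname},
        "commonLabels": labels,
        "commonAnnotations": annotations,
        "externalURL": "http://alertmanager.example:9093",  # placeholder
        "alerts": [{
            "status": "firing",
            "labels": labels,
            "annotations": annotations,
            "startsAt": now,
            "endsAt": "0001-01-01T00:00:00Z",  # zero time: not resolved yet
            "generatorURL": "http://prometheus.example:9090/graph",  # placeholder
        }],
    }


if __name__ == "__main__":
    print(json.dumps(build_test_alert("pod-status"), indent=2))
```

From a pod inside the monitoring namespace, posting this JSON to http://webhook-dingtalk:8060/dingtalk/webhook/send should produce a message in the DingTalk group if the ConfigMaps and the robot are set up correctly.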

III. Configuring Alertmanager

Set DingTalk as the notification channel for Prometheus alerts:

[root@k8s01 manifests]# kubectl apply -f alertmanager-secret.yaml

secret/alertmanager-main configured

[root@k8s01 manifests]# cat alertmanager-secret.yaml

apiVersion: v1
data: {}
kind: Secret
metadata:
  name: alertmanager-main
  namespace: monitoring
stringData:
  alertmanager.yaml: |-
    global:
      resolve_timeout: 5m
    route:
      group_by: ['alertname']
      group_wait: 10s
      group_interval: 10s
      repeat_interval: 5m
      receiver: 'webhook'
    receivers:
    - name: 'webhook'
      webhook_configs:
      - send_resolved: true
        url: 'http://webhook-dingtalk:8060/dingtalk/webhook/send'
    inhibit_rules:
    - source_match:
        severity: 'critical'
      target_match:
        severity: 'warning'
      equal: ['alertname', 'dev', 'instance']
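The `inhibit_rules` entry is the least obvious part of this configuration: a warning alert is muted while a critical alert with the same `alertname`, `dev` and `instance` values is firing. The following is a simplified sketch of that matching logic, not Alertmanager's actual implementation; the function name `is_inhibited` is hypothetical, and a label missing on both sides is treated as equal (an empty value).

```python
def is_inhibited(target, sources, source_match, target_match, equal):
    """Return True if `target` would be muted by any alert in `sources`."""

    def matches(labels, matcher):
        # Every label in the matcher must be present with the same value.
        return all(labels.get(k) == v for k, v in matcher.items())

    if not matches(target, target_match):
        return False
    for src in sources:
        same = all(src.get(name, "") == target.get(name, "") for name in equal)
        if matches(src, source_match) and same:
            return True
    return False


if __name__ == "__main__":
    critical = {"alertname": "HostStatus", "instance": "node1", "severity": "critical"}
    warning = {"alertname": "HostStatus", "instance": "node1", "severity": "warning"}
    rule = dict(
        source_match={"severity": "critical"},
        target_match={"severity": "warning"},
        equal=["alertname", "dev", "instance"],
    )
    print(is_inhibited(warning, [critical], **rule))
```

In the usage example the warning is inhibited because both alerts share `alertname` and `instance`, and neither carries a `dev` label.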

IV. Testing alerts

①. Deploy a pod that cannot run

[root@k8s01 temp]# cat test.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: vv
spec:
  replicas: 1
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 0
  selector:
    matchLabels:
      app: vv
  template:
    metadata:
      labels:
        app: vv
    spec:
      imagePullSecrets:
      - name: registry-pull-secret
      containers:
      - name: vv
        image: ccr.ccs.tencentyun.com/lanvv/test-jdk-1-8.0.181-bak:latest
        imagePullPolicy: IfNotPresent

For the Prometheus rules, refer to the previous article.

Edit prometheus-rules.yaml, append the following at the end, and apply it:

#### the rule below is newly added
- alert: pod-status
  annotations:
    message: pod is down pod-status !
  expr: |
    kube_pod_container_status_running != 1
  for: 1m
  labels:
    severity: warning
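The `for: 1m` clause means the alert does not fire the moment the expression becomes true; it stays pending until the condition has held for a full minute. A small simulation of that behaviour, as a sketch with a hypothetical `alert_states` helper and made-up evaluation timestamps:

```python
def alert_states(samples, for_seconds):
    """Map (timestamp_seconds, condition_true) samples to alert states."""
    states = []
    active_since = None
    for ts, condition in samples:
        if not condition:
            active_since = None  # condition cleared: the timer resets
            states.append("inactive")
            continue
        if active_since is None:
            active_since = ts  # first evaluation where the condition holds
        held = ts - active_since
        states.append("firing" if held >= for_seconds else "pending")
    return states


if __name__ == "__main__":
    # Evaluations every 30 seconds; the container is not running for the first three.
    samples = [(0, True), (30, True), (60, True), (90, False)]
    print(alert_states(samples, for_seconds=60))
    # prints ['pending', 'pending', 'firing', 'inactive']
```

This is why a pod that crashes and recovers within a minute never reaches DingTalk with this rule.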

Deploy the pod that cannot reach the Running state:

[root@k8s01 temp]# kubectl apply -f test.yaml

deployment.apps/vv created

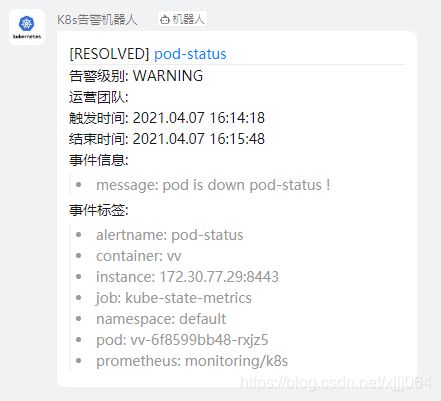

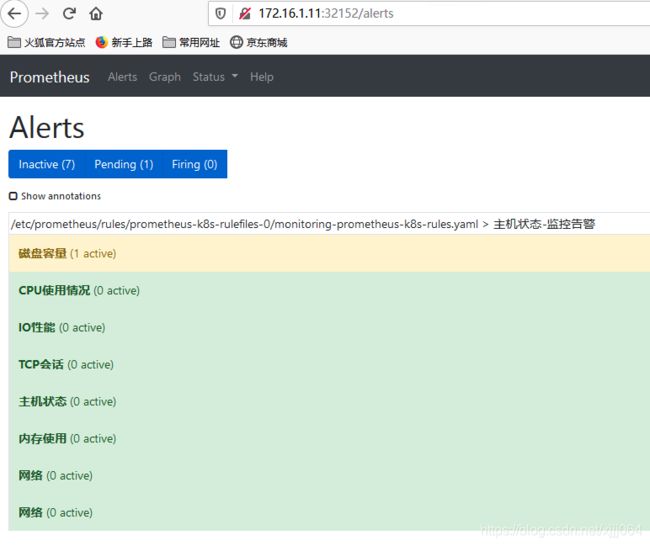

②. DingTalk alerts

groups:
- name: host-status-monitoring-alerts
  rules:
  - alert: HostStatus
    expr: up == 0
    for: 1m
    labels:
      status: very severe
    annotations:
      summary: "{{$labels.instance}}: server is down"
      description: "{{$labels.instance}}: server has been unreachable for more than 1 minute"
  - alert: CPUUsage
    expr: 100-(avg(irate(node_cpu_seconds_total{mode="idle"}[5m])) by(instance)* 100) > 60
    for: 1m
    labels:
      status: general alert
    annotations:
      summary: "{{$labels.instance}} CPU usage is too high!"
      description: "{{$labels.instance}} CPU usage is above 60% (current: {{$value}}%)"
  - alert: MemoryUsage
    # used = total - (free + buffers + cached)
    expr: (node_memory_MemTotal_bytes - (node_memory_MemFree_bytes + node_memory_Buffers_bytes + node_memory_Cached_bytes)) / node_memory_MemTotal_bytes * 100 > 80
    for: 1m
    labels:
      status: severe alert
    annotations:
      summary: "{{$labels.instance}} memory usage is too high!"
      description: "{{$labels.instance}} memory usage is above 80% (current: {{$value}}%)"
  - alert: IOPerformance
    expr: avg(irate(node_disk_io_time_seconds_total[1m])) by(instance) * 100 > 60
    for: 1m
    labels:
      status: severe alert
    annotations:
      summary: "{{$labels.instance}} disk IO utilization is too high!"
      description: "{{$labels.instance}} disk IO utilization is above 60% (current: {{$value}})"
  - alert: NetworkInbound
    expr: ((sum(rate (node_network_receive_bytes_total{device!~'tap.*|veth.*|br.*|docker.*|virbr.*|lo'}[5m])) by (instance)) / 100) > 102400
    for: 1m
    labels:
      status: severe alert
    annotations:
      summary: "{{$labels.instance}} inbound network bandwidth is too high!"
      description: "{{$labels.instance}} inbound network bandwidth has stayed above 100M for more than 1 minute. RX bandwidth usage: {{$value}}"
  - alert: NetworkOutbound
    expr: ((sum(rate (node_network_transmit_bytes_total{device!~'tap.*|veth.*|br.*|docker.*|virbr.*|lo'}[5m])) by (instance)) / 100) > 102400
    for: 1m
    labels:
      status: severe alert
    annotations:
      summary: "{{$labels.instance}} outbound network bandwidth is too high!"
      description: "{{$labels.instance}} outbound network bandwidth has stayed above 100M for more than 1 minute. TX bandwidth usage: {{$value}}"
  - alert: TCPSessions
    expr: node_netstat_Tcp_CurrEstab > 1000
    for: 1m
    labels:
      status: severe alert
    annotations:
      summary: "{{$labels.instance}} TCP_ESTABLISHED count is too high!"
      description: "{{$labels.instance}} TCP_ESTABLISHED is above 1000 (current: {{$value}})"
  - alert: DiskCapacity
    expr: 100-(node_filesystem_free_bytes{fstype=~"ext4|xfs"}/node_filesystem_size_bytes {fstype=~"ext4|xfs"}*100) > 80
    for: 1m
    labels:
      status: severe alert
    annotations:
      summary: "{{$labels.mountpoint}} disk partition usage is too high!"
      description: "{{$labels.mountpoint}} disk partition usage is above 80% (current: {{$value}}%)"
  - alert: pod-status
    annotations:
      message: pod is down pod-status !
    expr: |
      kube_pod_container_status_running != 1
    for: 1m
    labels:
      severity: warning
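The thresholds in the two network rules are easy to misread, because the expression divides the byte rate by 100 before comparing it with 102400. A quick arithmetic sketch of what rate that actually corresponds to; the helper names are hypothetical, and the conversion assumes decimal megabits.

```python
def threshold_bytes_per_second(limit: int, divisor: int) -> int:
    """Byte rate at which `rate / divisor > limit` starts to hold."""
    return limit * divisor


def to_mbit_per_second(bytes_per_second: float) -> float:
    """Convert bytes per second to decimal megabits per second."""
    return bytes_per_second * 8 / 1_000_000


if __name__ == "__main__":
    rate = threshold_bytes_per_second(102400, 100)
    print(rate)                      # prints 10240000
    print(to_mbit_per_second(rate))  # roughly 81.92
```

In other words, as written the rules fire at roughly 10 MB/s per instance, so it is worth adjusting the divisor or the limit if a different bandwidth ceiling is intended.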

Writing Prometheus rules: https://blog.csdn.net/inrgihc/article/details/107636371

Commonly used Prometheus alerting rules: https://blog.csdn.net/xiegh2014/article/details/91598728

Reference: http://www.yoyoask.com/?p=2462