公众号历史文章采集

公众号历史文章采集

前言:采集公众号历史文章,且链接永久有效,亲测2年多无压力。

1.先在 https://mp.weixin.qq.com/ 注册一个个人版使用公众号,供后续使用。

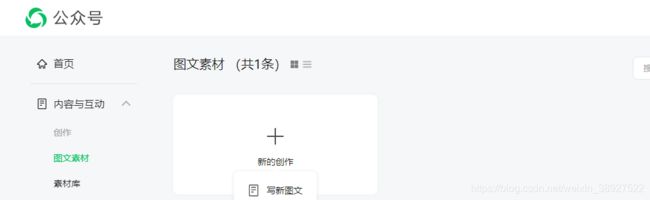

2.点击左侧图文素材,新的创作,写新图文。

3.点击超链接后,填入要查询的公众号。

4.一个小知识点,通过xpath拿到html源码,并提取正文。

def get_html_code(parseHtml, url, codeXpath):

code_html = parseHtml.xpath(codeXpath)

html = ''

for i in code_html:

# etree.tostring() #输出修正后的html代码,byte格式

# 转成utf-8格式,然后decode进行encoding 指定的编码格式解码字符串

html += etree.tostring(i, encoding='utf-8').decode()

return html

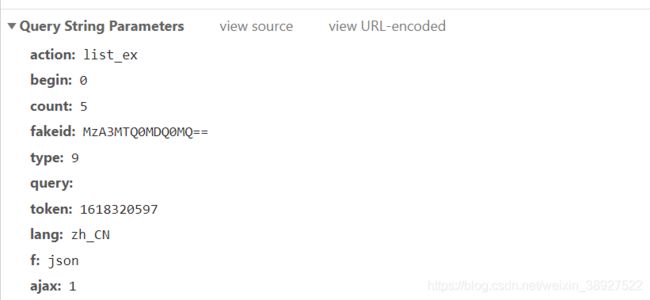

5.F12抓包分析,发现token和cookie是绑定用户的,生成代码。

搜索公众号接口:query= xxxx

文章链接及翻页接口: fakeid= 搜索公众号对应的id base64编码

文章链接及翻页接口: fakeid= 搜索公众号对应的id base64编码

若未固定公众号,可将该公众号id做映射表存放,减少请求

# encoding:utf-8

import json

import math

import time

from spiders.BaseSpider import BaseSpider

from mysqldb.mysql_util import select_link

from parse.requests_parse import requestsParse

from WX.WeChat_util import parse_bjnews, parseWechat

class WeChatSpider(BaseSpider):

"""

定义url和请求头

"""

token = "你的token"

def __init__(self):

super().__init__()

self.cookies = "你的cookie"

self.params = {

'action': 'search_biz',

'begin': '0',

'count': '50',

'query': '',

'fakeid': None,

'type': 9,

'token': self.token,

'lang': 'zh_CN',

'f': 'json',

'ajax': '1'

}

def next_type(self, token, source, apartment, province, city, district, label):

begin, count = 0, 1

self.params["action"] = 'list_ex'

self.params["query"] = ''

content = self.get_information('https://mp.weixin.qq.com/cgi-bin/appmsg')

page = int(math.ceil(json.loads(content).get("app_msg_cnt") / 5))

#print(page)

for index in range(page):

if index % 3 == 0:

time.sleep(60)

try:

if index:

begin += 5

self.params["begin"] = begin

print(f"正在获取第{begin}页数")

content = self.get_information('https://mp.weixin.qq.com/cgi-bin/appmsg')

nextLink = parse_bjnews(content)

for nextUrl in nextLink:

if not token:

# select_link 为查询数据库是否存在 增量采集

if not select_link(link=nextUrl):

break

self.nextUrl_q.put(nextUrl)

if self.nextUrl_q.empty():

return

#日常采集 requestsParse()为解析方法

t = requestsParse(self.nextUrl_q, source, apartment, province, city, district,

titleXpath='//h2[@id="activity-name"]/text()',

codeXpath='//*[@id="js_content"]', labeel=label)

if t :

return

except Exception as e:

print("Spider WeChat Main Error= " + str(e) + " Spider WeChat success page= " + str(

count) + ' ' + source)

return

def main(self, source, apartment, province, city, district, label):

token = 0 #token为0为增量 1为全量 与select_link()做关联

self.params["query"] = source

html = self.get_information('https://mp.weixin.qq.com/cgi-bin/searchbiz')

print(html)

self.params["fakeid"] = parseWechat(html)

self.next_type(token, source, apartment, province, city, district, label)

if __name__ == '__main__':

WeChatSpider().main("公众号名称", "wechat", "省份", "市", "区",label="industrial_economy_policy")

6.贴出工具类中两个方法.

def parse_bjnews(content):

content = json.loads(content)

nextLink = []

for link in content.get("app_msg_list"):

# if titleParse(link.get("title")) and title_Wechat(link.get("title")):

nextLink.append(link.get("link"))

return nextLink

def parseWechat(html):

try:

html = json.loads(html)

fakeid = html.get("list")[0].get("fakeid")

return fakeid

except:

return