TensorFlow Study Notes

1. Building the data-processing structure

Example code:

```python
import numpy as np
import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()

# create data: the true relationship is y = 0.1 * x + 0.3
x_data = np.random.rand(100).astype(np.float32)
y_data = x_data * 0.1 + 0.3

# build the structure (one-dimensional linear model)
Weights = tf.Variable(tf.random.uniform([1], -1.0, 1.0))
biases = tf.Variable(tf.zeros([1]))
y = Weights * x_data + biases

# compute the loss (mean squared error)
loss = tf.reduce_mean(tf.square(y - y_data))
optimizer = tf.train.GradientDescentOptimizer(0.5)
train = optimizer.minimize(loss)

# op that initializes all variables
init = tf.global_variables_initializer()

sess = tf.Session()
sess.run(init)  # initialize (activate) the variables

for step in range(201):
    sess.run(train)
    if step % 20 == 0:
        print(step, sess.run(Weights), sess.run(biases))
```
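Each `sess.run(train)` call above asks `GradientDescentOptimizer(0.5)` to move `Weights` and `biases` against the gradient of the loss. A minimal plain-Python sketch of that update follows. It uses Python's `random` module instead of NumPy, and `fit_line` is a hypothetical helper name. It computes the mean-squared-error gradients by hand, and after 201 steps `w` and `b` should approach 0.1 and 0.3.

```python
import random


def fit_line(xs, ys, lr=0.5, steps=201):
    """Plain gradient descent on the mean squared error of y = w*x + b."""
    w = random.uniform(-1.0, 1.0)  # same range as tf.random.uniform([1], -1.0, 1.0)
    b = 0.0                        # same start as tf.zeros([1])
    n = len(xs)
    for step in range(steps):
        # residuals of the current prediction
        errs = [w * x + b - y for x, y in zip(xs, ys)]
        # gradients of loss = mean(err ** 2) with respect to w and b
        grad_w = 2.0 * sum(e * x for e, x in zip(errs, xs)) / n
        grad_b = 2.0 * sum(errs) / n
        w -= lr * grad_w
        b -= lr * grad_b
        if step % 20 == 0:
            print(step, round(w, 4), round(b, 4))
    return w, b


random.seed(0)
x_data = [random.random() for _ in range(100)]
y_data = [x * 0.1 + 0.3 for x in x_data]
w, b = fit_line(x_data, y_data)
```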

2. Session control

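Example code:

```python
import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()

matrix1 = tf.constant([[3, 3]])    # 1x2 matrix
matrix2 = tf.constant([[2], [2]])  # 2x1 matrix

# matrix multiplication (this only builds the op; nothing is computed yet)
product = tf.matmul(matrix1, matrix2)

# session control: the op is evaluated when the session runs it
sess = tf.Session()
result = sess.run(product)
print(result)
sess.close()
```

Output: [[12]]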
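In this example, `tf.matmul` only adds a node to a graph. The multiplication happens when `sess.run` evaluates that node. The toy model below illustrates this define-then-run idea in plain Python. The classes `Const` and `MatMul` and the function `run` are hypothetical and are not TensorFlow APIs.

```python
class Const:
    """Hypothetical graph node holding a fixed matrix (like tf.constant)."""

    def __init__(self, value):
        self.value = value

    def evaluate(self):
        return self.value


class MatMul:
    """Hypothetical graph node that multiplies its inputs only when evaluated."""

    def __init__(self, a, b):
        self.a, self.b = a, b

    def evaluate(self):
        a, b = self.a.evaluate(), self.b.evaluate()
        # result[i][j] = sum over k of a[i][k] * b[k][j]
        return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
                 for j in range(len(b[0]))]
                for i in range(len(a))]


def run(node):
    """Stand-in for sess.run: arithmetic happens only at this point."""
    return node.evaluate()


matrix1 = Const([[3, 3]])
matrix2 = Const([[2], [2]])
product = MatMul(matrix1, matrix2)  # builds the node, computes nothing yet
result = run(product)
print(result)  # [[12]]
```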

3. Variable
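Unlike a constant, a `tf.Variable` keeps its value across `sess.run` calls. It must be initialized before it is read, and it changes only when an assign op runs. The following is a minimal plain-Python analogy of the counter example. It assumes a hypothetical `Variable` class that only mimics this behaviour and is not the TensorFlow API.

```python
class Variable:
    """Hypothetical stand-in for tf.Variable: a named, mutable value."""

    def __init__(self, initial, name):
        self.initial = initial
        self.name = name
        self.value = None  # uninitialized until the init step runs

    def initialize(self):
        self.value = self.initial

    def assign(self, new_value):
        self.value = new_value
        return self.value


state = Variable(0, name="counter")
one = 1
state.initialize()  # plays the role of sess.run(init)

seen = []
for _ in range(3):
    state.assign(state.value + one)  # plays the role of sess.run(update)
    seen.append(state.value)
print(seen)  # [1, 2, 3]
```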

```python
import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()

state = tf.Variable(0, name='counter')
# print(state.name)

one = tf.constant(1)
new_value = tf.add(state, one)
update = tf.assign(state, new_value)  # write new_value back into state

init = tf.global_variables_initializer()

# variables must be initialized inside a Session before they are used
with tf.Session() as sess:
    sess.run(init)
    for _ in range(3):
        sess.run(update)
        print(sess.run(state))  # prints 1, then 2, then 3
```

4. Passing values with placeholder

```python
import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()

# placeholders: their values are supplied at run time through feed_dict
input1 = tf.placeholder(tf.float32)
input2 = tf.placeholder(tf.float32)
output = tf.multiply(input1, input2)

with tf.Session() as sess:
    print(sess.run(output, feed_dict={input1: [7.], input2: [2.]}))
```

Output: [14.]
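A placeholder reserves a slot in the graph without giving it a value. The value is supplied through `feed_dict` each time `sess.run` is called, so the same graph can be run on different inputs. The toy classes below show that lookup in plain Python. `Placeholder`, `Multiply`, and `run` are hypothetical and are not TensorFlow APIs.

```python
class Placeholder:
    """Hypothetical stand-in for tf.placeholder: an empty slot filled at run time."""


class Multiply:
    """Hypothetical element-wise multiply node (like tf.multiply)."""

    def __init__(self, a, b):
        self.a, self.b = a, b


def run(node, feed_dict):
    """Evaluate node, looking placeholders up in feed_dict (like sess.run)."""
    if isinstance(node, Placeholder):
        if node not in feed_dict:
            raise ValueError("placeholder was not fed a value")
        return feed_dict[node]
    if isinstance(node, Multiply):
        left = run(node.a, feed_dict)
        right = run(node.b, feed_dict)
        return [x * y for x, y in zip(left, right)]
    raise TypeError(f"unknown node type: {type(node).__name__}")


input1 = Placeholder()
input2 = Placeholder()
output = Multiply(input1, input2)
print(run(output, feed_dict={input1: [7.0], input2: [2.0]}))  # [14.0]
```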

5. Activation functions

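An activation function is applied to a layer's output to bend an otherwise linear function into a nonlinear one. This lets stacked layers model nonlinear relationships; common choices are ReLU, sigmoid, and tanh.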
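The plain-Python sketch below shows why the nonlinearity matters. The helper names are illustrative; in TensorFlow the corresponding ops are `tf.nn.relu`, `tf.nn.sigmoid`, and `tf.nn.tanh`. Stacking two linear functions still gives a linear function, while putting ReLU between them introduces a bend.

```python
import math


def relu(x):
    return max(0.0, x)


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x):
    return math.tanh(x)


def linear(x, w, b):
    return w * x + b


xs = [-2.0, -1.0, 0.0, 1.0, 2.0]

# Two stacked linear maps collapse into one: 2 * (3x + 1) + 4 = 6x + 6
stacked = [linear(linear(x, 3.0, 1.0), 2.0, 4.0) for x in xs]
print(stacked)  # [-6.0, 0.0, 6.0, 12.0, 18.0]

# With ReLU in between, every x below -1/3 maps to the same value: a bend
bent = [linear(relu(linear(x, 3.0, 1.0)), 2.0, 4.0) for x in xs]
print(bent)  # [4.0, 4.0, 6.0, 12.0, 18.0]
```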

6. Adding a neural layer
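Without the TensorFlow bookkeeping, a layer computes `outputs = activation_function(inputs · Weights + biases)`. Here `inputs` has shape `[batch, in_size]` and `Weights` has shape `[in_size, out_size]`. The `[1, out_size]` bias row is broadcast to every sample. The following is a minimal plain-Python sketch of that forward pass only. It assumes hypothetical fixed weights in place of `tf.Variable`s and does no training; `add_layer_forward` is an illustrative name.

```python
def add_layer_forward(inputs, weights, biases, activation_function=None):
    """Forward pass of one dense layer on plain lists.

    inputs:  batch of rows, shape [batch, in_size]
    weights: shape [in_size, out_size]
    biases:  shape [out_size], added to every row (broadcast)
    """
    in_size, out_size = len(weights), len(weights[0])
    outputs = []
    for row in inputs:
        assert len(row) == in_size, "row length must equal in_size"
        # Wx_plus_b for this row: one value per output neuron
        wx_plus_b = [sum(row[i] * weights[i][j] for i in range(in_size)) + biases[j]
                     for j in range(out_size)]
        if activation_function is not None:
            wx_plus_b = [activation_function(v) for v in wx_plus_b]
        outputs.append(wx_plus_b)
    return outputs


def relu(v):
    return max(0.0, v)


# Hypothetical fixed parameters: 2 inputs -> 3 neurons, biases of 0.1
W = [[1.0, -1.0, 0.5],
     [2.0, 0.0, -0.5]]
b = [0.1, 0.1, 0.1]
x = [[1.0, 1.0],
     [0.0, -1.0]]
out = add_layer_forward(x, W, b, activation_function=relu)
print(out)
```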

```python
import tensorflow.compat.v1 as tf


def add_layer(inputs, in_size, out_size, activation_function=None):
    Weights = tf.Variable(tf.random.uniform([in_size, out_size]))
    # biases start at a small positive value of 0.1
    biases = tf.Variable(tf.zeros([1, out_size]) + 0.1)
    # multiply: inputs x Weights, then add the biases
    Wx_plus_b = tf.matmul(inputs, Weights) + biases
    # activate
    if activation_function is None:
        outputs = Wx_plus_b
    else:
        outputs = activation_function(Wx_plus_b)
    return outputs
```

7. Building a neural network

Example code:

```python
import numpy as np
import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()


def add_layer(inputs, in_size, out_size, activation_function=None):
    Weights = tf.Variable(tf.random.uniform([in_size, out_size]))
    biases = tf.Variable(tf.zeros([1, out_size]) + 0.1)
    # multiply
    Wx_plus_b = tf.matmul(inputs, Weights) + biases
    # activate
    if activation_function is None:
        outputs = Wx_plus_b
    else:
        outputs = activation_function(Wx_plus_b)
    return outputs


# define the data
x_data = np.linspace(-1, 1, 300)[:, np.newaxis]  # add a dimension: (300,) -> (300, 1)
noise = np.random.normal(0, 0.05, x_data.shape)
y_data = np.square(x_data) - 0.5 + noise

xs = tf.placeholder(tf.float32, [None, 1])
ys = tf.placeholder(tf.float32, [None, 1])

# hidden layer: 1 input -> 10 neurons, ReLU activation
l1 = add_layer(xs, 1, 10, activation_function=tf.nn.relu)
# output layer: 10 neurons -> 1 output, no activation
prediction = add_layer(l1, 10, 1, activation_function=None)

# compute the error: squared error summed over axis 1, averaged over the batch
loss = tf.reduce_mean(tf.reduce_sum(tf.square(ys - prediction), axis=1))

# correct the error with gradient descent
train_step = tf.train.GradientDescentOptimizer(0.1).minimize(loss)

init = tf.global_variables_initializer()
sess = tf.Session()
sess.run(init)

for i in range(1000):
    sess.run(train_step, feed_dict={xs: x_data, ys: y_data})
    if i % 50 == 0:
        print(sess.run(loss, feed_dict={xs: x_data, ys: y_data}))
```

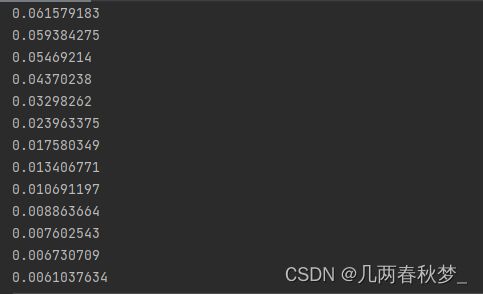

运行结果如下:

可观察到误差不断减小 ,说明预测准确性在不断增加
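The printed number is the loss defined in the code: for each sample, the squared errors are summed across the output dimension (`axis=1`), and the results are averaged over the batch. With a single output, as in this network, the inner sum has one term, so the result is the ordinary mean squared error. The following plain-Python sketch performs the same reduction; `mse_loss` is a hypothetical helper name.

```python
def mse_loss(ys, predictions):
    """Mirror of tf.reduce_mean(tf.reduce_sum(tf.square(ys - prediction), axis=1)).

    ys, predictions: lists of rows, shape [batch, n_outputs].
    """
    # reduce_sum over axis 1: one summed squared error per sample
    per_sample = [sum((y - p) ** 2 for y, p in zip(y_row, p_row))
                  for y_row, p_row in zip(ys, predictions)]
    # reduce_mean over the batch
    return sum(per_sample) / len(per_sample)


ys = [[1.0], [0.0], [-0.5]]
predictions = [[0.5], [0.0], [0.5]]
loss = mse_loss(ys, predictions)
print(loss)  # equals (0.25 + 0.0 + 1.0) / 3
```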