Using sealer to build, deliver, and run Kubernetes: a demo

Kubernetes is an open-source container orchestration engine for automating the deployment, scaling, and management of containerized applications.

sealer

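sealer treats the whole cluster as a single server and Kubernetes as a cloud operating system. It borrows the core ideas of Docker's design to build, deliver, and run distributed software as images.

- Build: use a Kubefile to define everything the cluster depends on, packaging Kubernetes, middleware, databases, and SaaS software together with all their dependencies into a cluster image

- Deliver: deliver the whole cluster, and the distributed software in it, the same way you would deliver a Docker image

- Run: start the whole cluster with one command; consistency is guaranteed at the cluster level, and mainstream Linux distributions and multiple architectures are supported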
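To make the build step more concrete, here is a minimal sketch of what a Kubefile might look like. It is an assumption modelled on Dockerfile syntax rather than something taken from this walkthrough: the `FROM`/`COPY`/`CMD` lines and the manifest name `app.yaml` are placeholders, and the script only writes and prints the file without building anything.

```shell
#!/bin/sh
# Write an illustrative Kubefile into a scratch directory and print it.
# The instructions below are assumed, Dockerfile-style syntax; app.yaml is
# a placeholder manifest name. Nothing is built or applied here.
workdir=$(mktemp -d)
cat > "$workdir/Kubefile" <<'EOF'
FROM kubernetes:v1.19.8
COPY app.yaml .
CMD kubectl apply -f app.yaml
EOF
cat "$workdir/Kubefile"
# The image would then be built with the `sealer build` subcommand;
# check `sealer build --help` for the exact flags in your version.
```

The base image name is the same `kubernetes:v1.19.8` used later in this demo.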

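This document is a brief hands-on with sealer. No application is deployed yet; the goal is simply to take a look at Kubernetes through sealer.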

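References

sealer

Kubernetes documentation

How sealer wipes the floor with sealos

sealos-gitee

sealos-github

sealer-gitee

sealer-github

The history of Containerd and a step-by-step beginner tutorial

Why is sealer still being developed after sealos took off?

Kubernetes high availability with Sealos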

Demo: walkthrough

Create a Kubernetes cluster with sealer

Main reference:

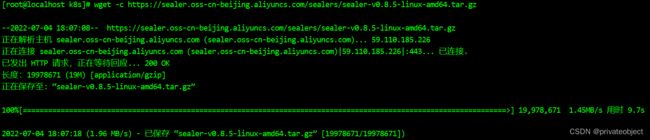

Download

wget -c https://sealer.oss-cn-beijing.aliyuncs.com/sealers/sealer-v0.8.5-linux-amd64.tar.gz

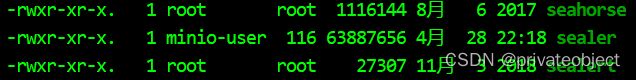

Extract into /usr/bin

tar -xvf sealer-v0.8.5-linux-amd64.tar.gz -C /usr/bin/

Fix the file ownership (skip this if you are already root)

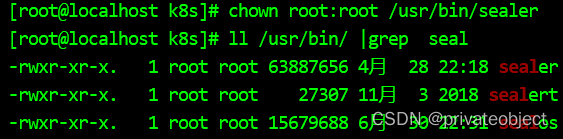

chown root:root /usr/bin/sealer

ll /usr/bin/ |grep seal
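Before going further, it can help to confirm that the binary actually landed on the PATH. A minimal sketch, assuming a POSIX shell; the helper name `check_binary` is made up for this example and is not part of sealer:

```shell
#!/bin/sh
# check_binary NAME: succeed if NAME resolves to a command on PATH.
# (Hypothetical helper for illustration only.)
check_binary() {
    if path=$(command -v "$1" 2>/dev/null); then
        echo "$1 found at $path"
        return 0
    fi
    echo "$1 not found on PATH" >&2
    return 1
}

# Report on sealer without aborting the script if it is missing.
check_binary sealer || echo "install sealer into /usr/bin first"
```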

Commands

Version

sealer version

{

"gitVersion": "v0.8.5",

"gitCommit": "f9c3d99",

"buildDate": "2022-04-28 14:16:58",

"goVersion": "go1.16.15",

"compiler": "gc",

"platform": "linux/amd64"

}
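If a script needs the version string, the JSON above can be parsed without extra tools. This is a sketch that assumes the output keeps the `"gitVersion": "..."` shape shown here; `extract_git_version` is a hypothetical helper name, and `jq` would likely be the sturdier choice where it is installed.

```shell
#!/bin/sh
# extract_git_version: read `sealer version` JSON on stdin, print gitVersion.
extract_git_version() {
    sed -n 's/.*"gitVersion"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# Sample copied from the output above; with sealer installed you would
# pipe `sealer version` in instead.
sample='{ "gitVersion": "v0.8.5", "gitCommit": "f9c3d99" }'
printf '%s\n' "$sample" | extract_git_version
```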

Commands available in this version

Available Commands:

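apply apply a Kubernetes cluster

build build a cloud image from a Kubefile

cert update the Kubernetes API server certificate

check check the state of the cluster

completion generate an autocompletion script for bash

debug create debugging sessions for pods and nodes

delete delete a cluster

exec execute a shell command or script on all nodes

gen generate a Clusterfile to take over a normal cluster that was not deployed by sealer

gen-doc generate Markdown documentation for the sealer CLI

help help about any command

images list all cluster images

inspect print image information or a Clusterfile

join join nodes to the cluster

load load an image from a tar file

login log in to an image registry

merge merge multiple images into one

prune prune the sealer data directory

pull pull a cloud image to the local machine

push push a cloud image to a registry

rmi remove a local image by name

run run a cluster with an image and arguments

save save an image to a tar file

search sealer search kubernetes

tag tag an image as a new image

upgrade upgrade a Kubernetes cluster

version show the sealer version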

Flags:

--config string config file (default is $HOME/.sealer.json)

-d, --debug turn on debug mode

-h, --help help for sealer

--hide-path hide the log path

--hide-time hide the log time

-t, --toggle help message for toggle

Deploy a whole Kubernetes cluster with one command

Flags:

--cmd-args strings set args for image cmd instruction

-e, --env strings set custom environment variables

-h, --help help for run

-m, --masters string set Count or IPList to masters

-n, --nodes string set Count or IPList to nodes

-p, --passwd string set cloud provider or baremetal server password

--pk string set baremetal server private key (default "/root/.ssh/id_rsa")

--pk-passwd string set baremetal server private key password

--port string set the sshd service port number for the server (default port: 22) (default "22")

--provider ALI_CLOUD set infra provider, example ALI_CLOUD, the local server need ignore this

-u, --user string set baremetal server username (default "root")
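The flags above can be combined into a command line programmatically. The sketch below only prints the command (a dry run) and uses just `-m`, `-n`, and `-p` from the help text. The function name `build_run_cmd`, the variable `SEALER_PASSWD`, and the address `192.168.0.10` are made up for illustration.

```shell
#!/bin/sh
# build_run_cmd IMAGE MASTERS [NODES]: print a `sealer run` command line.
# MASTERS and NODES are passed through untouched (a count or an IP list,
# per the help text). The password is left as a reference to
# $SEALER_PASSWD (a variable name invented here) so the secret itself is
# never echoed.
build_run_cmd() {
    image=$1 masters=$2 nodes=${3:-}
    cmd="sealer run $image -m $masters"
    [ -n "$nodes" ] && cmd="$cmd -n $nodes"
    printf '%s -p "$SEALER_PASSWD"\n' "$cmd"
}

# Placeholder address; prints the command without running it.
build_run_cmd kubernetes:v1.19.8 192.168.0.10
```

Keeping the password in an environment variable rather than typing it inline is a design choice to keep it out of shell history; it is not something sealer requires.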

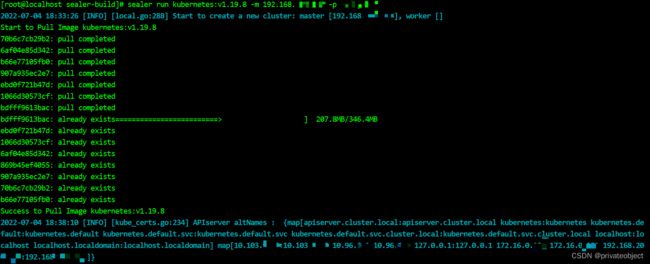

Running sealer from the command line

# Uses the root user by default

sealer run kubernetes:v1.19.8 -m 192.168.xxx.xxx -p {root password}

2022-07-04 18:33:26 [INFO] [local.go:288] Start to create a new cluster: master [192.168.xxx.xxx], worker []

Start to Pull Image kubernetes:v1.19.8

70b6c7cb29b2: pull completed

6af04e85d342: pull completed

b66e77105fb0: pull completed

907a935ec2e7: pull completed

ebd0f721b47d: pull completed

1066d30573cf: pull completed

bdfff9613bac: pull completed

bdfff9613bac: already exists

ebd0f721b47d: already exists

1066d30573cf: already exists

6af04e85d342: already exists

869b45ef4055: already exists

907a935ec2e7: already exists

70b6c7cb29b2: already exists

b66e77105fb0: already exists

Success to Pull Image kubernetes:v1.19.8

2022-07-04 18:38:10 [INFO] [kube_certs.go:234] APIserver altNames : {map[apiserver.cluster.local:apiserver.cluster.local kubernetes:kubernetes kubernetes.default:kubernetes.default kubernetes.default.svc:kubernetes.default.svc kubernetes.default.svc.cluster.local:kubernetes.default.svc.cluster.local localhost:localhost localhost.localdomain:localhost.localdomain] map[10.103.xxx.xxx:10.103.xxx.xxx 10.96.xxx.xxx:10.96.xxx.xxx 127.0.0.1:127.0.0.1 172.16.xxx.xxx:172.16.xxx.xxx 192.168.xxx.xxx:192.168.xxx.xxx]}

2022-07-04 18:38:10 [INFO] [kube_certs.go:254] Etcd altnames : {map[localhost:localhost localhost.localdomain:localhost.localdomain] map[127.0.0.1:127.0.0.1 192.168.xxx.xxx:192.168.xxx.xxx ::1:::1]}, commonName : localhost.localdomain

2022-07-04 18:38:17 [INFO] [kubeconfig.go:267] [kubeconfig] Writing "admin.conf" kubeconfig file

2022-07-04 18:38:19 [INFO] [kubeconfig.go:267] [kubeconfig] Writing "controller-manager.conf" kubeconfig file

2022-07-04 18:38:19 [INFO] [kubeconfig.go:267] [kubeconfig] Writing "scheduler.conf" kubeconfig file

2022-07-04 18:38:20 [INFO] [kubeconfig.go:267] [kubeconfig] Writing "kubelet.conf" kubeconfig file

++ dirname init-registry.sh

+ cd .

+ REGISTRY_PORT=5000

+ VOLUME=/var/lib/sealer/data/my-cluster/rootfs/registry

+ REGISTRY_DOMAIN=sea.hub

+ container=sealer-registry

+++ pwd

++ dirname /var/lib/sealer/data/my-cluster/rootfs/scripts

+ rootfs=/var/lib/sealer/data/my-cluster/rootfs

+ config=/var/lib/sealer/data/my-cluster/rootfs/etc/registry_config.yml

+ htpasswd=/var/lib/sealer/data/my-cluster/rootfs/etc/registry_htpasswd

+ certs_dir=/var/lib/sealer/data/my-cluster/rootfs/certs

+ image_dir=/var/lib/sealer/data/my-cluster/rootfs/images

+ mkdir -p /var/lib/sealer/data/my-cluster/rootfs/registry

+ load_images

+ for image in '"$image_dir"/*'

+ '[' -f /var/lib/sealer/data/my-cluster/rootfs/images/registry.tar ']'

+ docker load -q -i /var/lib/sealer/data/my-cluster/rootfs/images/registry.tar

Loaded image: registry:2.7.1

++ docker ps -aq -f name=sealer-registry

+ '[' '' ']'

+ regArgs='-d --restart=always --net=host --name sealer-registry -v /var/lib/sealer/data/my-cluster/rootfs/certs:/certs -v /var/lib/sealer/data/my-cluster/rootfs/registry:/var/lib/registry -e REGISTRY_HTTP_TLS_CERTIFICATE=/certs/sea.hub.crt -e REGISTRY_HTTP_TLS_KEY=/certs/sea.hub.key'

+ '[' -f /var/lib/sealer/data/my-cluster/rootfs/etc/registry_config.yml ']'

+ sed -i s/5000/5000/g /var/lib/sealer/data/my-cluster/rootfs/etc/registry_config.yml

+ regArgs='-d --restart=always --net=host --name sealer-registry -v /var/lib/sealer/data/my-cluster/rootfs/certs:/certs -v /var/lib/sealer/data/my-cluster/rootfs/registry:/var/lib/registry -e REGISTRY_HTTP_TLS_CERTIFICATE=/certs/sea.hub.crt -e REGISTRY_HTTP_TLS_KEY=/certs/sea.hub.key -v /var/lib/sealer/data/my-cluster/rootfs/etc/registry_config.yml:/etc/docker/registry/config.yml'

+ '[' -f /var/lib/sealer/data/my-cluster/rootfs/etc/registry_htpasswd ']'

+ docker run -d --restart=always --net=host --name sealer-registry -v /var/lib/sealer/data/my-cluster/rootfs/certs:/certs -v /var/lib/sealer/data/my-cluster/rootfs/registry:/var/lib/registry -e REGISTRY_HTTP_TLS_CERTIFICATE=/certs/sea.hub.crt -e REGISTRY_HTTP_TLS_KEY=/certs/sea.hub.key -v /var/lib/sealer/data/my-cluster/rootfs/etc/registry_config.yml:/etc/docker/registry/config.yml registry:2.7.1

6d81axxx.xxx

+ check_registry

+ n=1

+ (( n <= 3 ))

++ docker inspect --format '{{json .State.Status}}' sealer-registry

+ registry_status='"running"'

+ [[ "running" == \"running\" ]]

+ break

2022-07-04 18:40:30 [INFO] [init.go:251] start to init master0...

2022-07-04 18:44:38 [INFO] [init.go:206] join command is: kubeadm join apiserver.cluster.local:6443 --token 2uywik.xxx.xxx \

--discovery-token-ca-cert-hash sha256:f8efxxx.xxx \

--control-plane --certificate-key 0830xxx.xxx

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/apiservers.operator.tigera.io created

customresourcedefinition.apiextensions.k8s.io/imagesets.operator.tigera.io created

customresourcedefinition.apiextensions.k8s.io/installations.operator.tigera.io created

customresourcedefinition.apiextensions.k8s.io/tigerastatuses.operator.tigera.io created

namespace/tigera-operator created

podsecuritypolicy.policy/tigera-operator created

serviceaccount/tigera-operator created

clusterrole.rbac.authorization.k8s.io/tigera-operator created

clusterrolebinding.rbac.authorization.k8s.io/tigera-operator created

deployment.apps/tigera-operator created

installation.operator.tigera.io/default created

apiserver.operator.tigera.io/default created

2022-07-04 18:44:48 [INFO] [local.go:298] Succeeded in creating a new cluster, enjoy it!

kubectl --help

kubectl controls the Kubernetes cluster manager.

Find more information at: https://kubernetes.io/docs/reference/kubectl/overview/

Basic Commands (Beginner):

create Create a resource from a file or from stdin.

expose Take a replication controller, service, deployment or pod and expose it as a new Kubernetes Service

run Run a particular image on the cluster

set Set specific features on objects

Basic Commands (Intermediate):

explain Documentation of resources

get Display one or many resources

edit Edit a resource on the server

delete Delete resources by filenames, stdin, resources and names, or by resources and label selector

Deploy Commands:

rollout Manage the rollout of a resource

scale Set a new size for a Deployment, ReplicaSet or Replication Controller

autoscale Auto-scale a Deployment, ReplicaSet, or ReplicationController

Cluster Management Commands:

certificate Modify certificate resources.

cluster-info Display cluster info

top Display Resource (CPU/Memory/Storage) usage.

cordon Mark node as unschedulable

uncordon Mark node as schedulable

drain Drain node in preparation for maintenance

taint Update the taints on one or more nodes

Troubleshooting and Debugging Commands:

describe Show details of a specific resource or group of resources

logs Print the logs for a container in a pod

attach Attach to a running container

exec Execute a command in a container

port-forward Forward one or more local ports to a pod

proxy Run a proxy to the Kubernetes API server

cp Copy files and directories to and from containers.

auth Inspect authorization

Advanced Commands:

diff Diff live version against would-be applied version

apply Apply a configuration to a resource by filename or stdin

patch Update field(s) of a resource using strategic merge patch

replace Replace a resource by filename or stdin

wait Experimental: Wait for a specific condition on one or many resources.

convert Convert config files between different API versions

kustomize Build a kustomization target from a directory or a remote url.

Settings Commands:

label Update the labels on a resource

annotate Update the annotations on a resource

completion Output shell completion code for the specified shell (bash or zsh)

Other Commands:

alpha Commands for features in alpha

api-resources Print the supported API resources on the server

api-versions Print the supported API versions on the server, in the form of "group/version"

config Modify kubeconfig files

plugin Provides utilities for interacting with plugins.

version Print the client and server version information

Usage:

kubectl [flags] [options]

Use "kubectl <command> --help" for more information about a given command.

Use "kubectl options" for a list of global command-line options (applies to all commands).

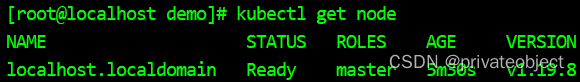

Check the cluster

# While sealer is still bringing the cluster up, no information can be retrieved

kubectl get node

#The connection to the server localhost:8080 was refused - did you specify the right host or port?

# Once sealer has finished successfully, the command works

kubectl get node

#NAME STATUS ROLES AGE VERSION

#localhost.localdomain Ready master 5m30s v1.19.8
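Rather than rerunning `kubectl get node` by hand until it stops failing, the check can be wrapped in a retry loop. This is a sketch under the assumption that the output has the column layout shown above (NAME, then STATUS); the helper names `all_nodes_ready`, `retry`, and `cluster_ready` are invented for this example.

```shell
#!/bin/sh
# all_nodes_ready: read `kubectl get node` output on stdin; succeed only if
# there is at least one node and every STATUS column is exactly "Ready".
all_nodes_ready() {
    awk 'NR > 1 { n++; if ($2 != "Ready") bad++ }
         END { exit !(n > 0 && bad == 0) }'
}

# retry ATTEMPTS DELAY CMD...: rerun CMD until it succeeds or attempts run out.
retry() {
    attempts=$1 delay=$2
    shift 2
    i=1
    while [ "$i" -le "$attempts" ]; do
        "$@" && return 0
        i=$((i + 1))
        sleep "$delay"
    done
    return 1
}

# cluster_ready: one probe. A refused connection yields no stdout, so the
# awk check fails and the probe counts as "not ready yet".
cluster_ready() {
    kubectl get node 2>/dev/null | all_nodes_ready
}

# Intended use on the master (not executed here):
#   retry 60 5 cluster_ready && echo "cluster is up"
```

Note that a node reported as `Ready,SchedulingDisabled` would count as not ready under this strict comparison.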

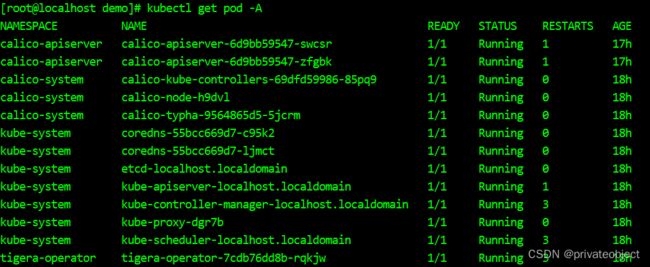

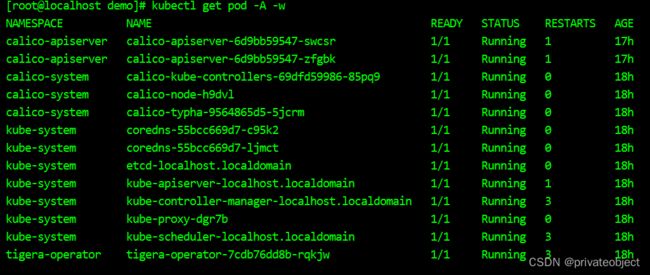

Pod status

A Pod is the smallest and simplest unit that Kubernetes creates or deploys; a Pod represents a process running on the cluster.

kubectl get pod -A

kubectl get pod -A -w
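When the pod list is long, it can be quicker to filter for pods that are not yet healthy than to watch everything. A small sketch, assuming the usual column order of `kubectl get pod -A` (NAMESPACE, NAME, READY, STATUS, RESTARTS, AGE); the function name `pods_not_running` and the sample rows are made up for illustration.

```shell
#!/bin/sh
# pods_not_running: read `kubectl get pod -A` output on stdin and print
# "namespace/name status" for every pod whose STATUS is neither Running
# nor Completed.
pods_not_running() {
    awk 'NR > 1 && $4 != "Running" && $4 != "Completed" { print $1 "/" $2, $4 }'
}

# Made-up sample rows; on the cluster you would run:
#   kubectl get pod -A | pods_not_running
pods_not_running <<'EOF'
NAMESPACE     NAME            READY   STATUS    RESTARTS   AGE
kube-system   example-pod-a   1/1     Running   0          5m
kube-system   example-pod-b   0/1     Pending   0          5m
EOF
```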

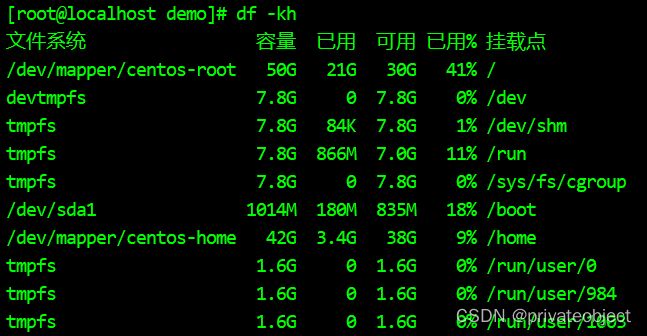

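Mounts

You can see that a large number of container filesystems are mounted

df -kh

While sealer is starting, the amount of container data keeps growing. The listing below was taken after a successful start, and the paths have been abbreviated.

Filesystem Size Used Avail Use% Mounted on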

/dev/mapper/centos-root 50G 23G 28G 45% /

devtmpfs 7.8G 0 7.8G 0% /dev

tmpfs 7.8G 84K 7.8G 1% /dev/shm

tmpfs 7.8G 876M 7.0G 12% /run

tmpfs 7.8G 0 7.8G 0% /sys/fs/cgroup

/dev/sda1 1014M 180M 835M 18% /boot

/dev/mapper/centos-home 42G 3.4G 38G 9% /home

tmpfs 1.6G 0 1.6G 0% /run/user/0

tmpfs 1.6G 0 1.6G 0% /run/user/984

tmpfs 1.6G 0 1.6G 0% /run/user/1003

overlay 50G 23G 28G 45% /var/lib/docker/overlay2/a5bc...e65e/merged

overlay 50G 23G 28G 45% /var/lib/docker/overlay2/5a3a...d865/merged

shm 64M 0 64M 0% /var/lib/docker/containers/d510...0a1e/mounts/shm

overlay 50G 23G 28G 45% /var/lib/docker/overlay2/a21d...8e9b/merged

shm 64M 0 64M 0% /var/lib/docker/containers/e4b6...1173/mounts/shm

overlay 50G 23G 28G 45% /var/lib/docker/overlay2/cc59...c72c/merged

shm 64M 0 64M 0% /var/lib/docker/containers/e567...6685/mounts/shm

overlay 50G 23G 28G 45% /var/lib/docker/overlay2/5b6e...98d2/merged

shm 64M 0 64M 0% /var/lib/docker/containers/3f19...78ec/mounts/shm

overlay 50G 23G 28G 45% /var/lib/docker/overlay2/446c...754e/merged

overlay 50G 23G 28G 45% /var/lib/docker/overlay2/98a0...3657/merged

tmpfs 7.8G 12K 7.8G 1% /var/lib/kubelet/pods/ba6a...c6f/volumes/kubernetes.io~secret/kube-proxy-token-zd26q

overlay 50G 23G 28G 45% /var/lib/docker/overlay2/84afc435a3ab38ab2421f6faf9cffdcb3acaeb7a18313ae1a34889357a46945c/merged

tmpfs 7.8G 12K 7.8G 1% /var/lib/kubelet/pods/d039...b5c0/volumes/kubernetes.io~secret/tigera-operator-token-crqnv

shm 64M 0 64M 0% /var/lib/docker/containers/1667...028a/mounts/shm

overlay 50G 23G 28G 45% /var/lib/docker/overlay2/496d...8cde/merged

shm 64M 0 64M 0% /var/lib/docker/containers/e64c...6364/mounts/shm

overlay 50G 23G 28G 45% /var/lib/docker/overlay2/a36a...85cd/merged

tmpfs 7.8G 12K 7.8G 1% /var/lib/kubelet/pods/d19d...3ba2/volumes/kubernetes.io~secret/calico-typha-token-k476g

tmpfs 7.8G 12K 7.8G 1% /var/lib/kubelet/pods/d19d...3ba2/volumes/kubernetes.io~secret/typha-certs

tmpfs 7.8G 12K 7.8G 1% /var/lib/kubelet/pods/36bd...a6e9/volumes/kubernetes.io~secret/felix-certs

tmpfs 7.8G 12K 7.8G 1% /var/lib/kubelet/pods/36bd...a6e9/volumes/kubernetes.io~secret/calico-node-token-qh4s6

overlay 50G 23G 28G 45% /var/lib/docker/overlay2/0529...5257/merged

overlay 50G 23G 28G 45% /var/lib/docker/overlay2/9f3d...4d93/merged

shm 64M 0 64M 0% /var/lib/docker/containers/f578...c67e/mounts/shm

shm 64M 0 64M 0% /var/lib/docker/containers/8db2...773c/mounts/shm

overlay 50G 23G 28G 45% /var/lib/docker/overlay2/758f...afde/merged

overlay 50G 23G 28G 45% /var/lib/docker/overlay2/0173...b9af/merged

tmpfs 7.8G 12K 7.8G 1% /var/lib/kubelet/pods/64f0...441e/volumes/kubernetes.io~secret/coredns-token-7sxtt

tmpfs 7.8G 12K 7.8G 1% /var/lib/kubelet/pods/f660...eb6d/volumes/kubernetes.io~secret/coredns-token-7sxtt

tmpfs 7.8G 12K 7.8G 1% /var/lib/kubelet/pods/918c...582c/volumes/kubernetes.io~secret/calico-kube-controllers-token-zx262

overlay 50G 23G 28G 45% /var/lib/docker/overlay2/c5f2a...fb58/merged

overlay 50G 23G 28G 45% /var/lib/docker/overlay2/b808...e85a/merged

overlay 50G 23G 28G 45% /var/lib/docker/overlay2/2688...acfd/merged

shm 64M 0 64M 0% /var/lib/docker/containers/1b75...8e73/mounts/shm

shm 64M 0 64M 0% /var/lib/docker/containers/4c1b...c7e4/mounts/shm

shm 64M 0 64M 0% /var/lib/docker/containers/38e2...90b2/mounts/shm

overlay 50G 23G 28G 45% /var/lib/docker/overlay2/1ed...cce6/merged

overlay 50G 23G 28G 45% /var/lib/docker/overlay2/56d6...14d4/merged

overlay 50G 23G 28G 45% /var/lib/docker/overlay2/4084...88ee/merged

overlay 50G 23G 28G 45% /var/lib/docker/overlay2/27b2...3bb2/merged

overlay 50G 23G 28G 45% /var/lib/docker/overlay2/3983...e1a5/merged

overlay 50G 23G 28G 45% /var/lib/docker/overlay2/1b6b...a007/merged

tmpfs 7.8G 12K 7.8G 1% /var/lib/kubelet/pods/fbfc...882f/volumes/kubernetes.io~secret/calico-apiserver-token-h468b

tmpfs 7.8G 8.0K 7.8G 1% /var/lib/kubelet/pods/fbfc...882f/volumes/kubernetes.io~secret/calico-apiserver-certs

tmpfs 7.8G 8.0K 7.8G 1% /var/lib/kubelet/pods/6dab...d8f5/volumes/kubernetes.io~secret/calico-apiserver-certs

tmpfs 7.8G 12K 7.8G 1% /var/lib/kubelet/pods/6dab...d8f5/volumes/kubernetes.io~secret/calico-apiserver-token-h468b

overlay 50G 23G 28G 45% /var/lib/docker/overlay2/4476...b167/merged

overlay 50G 23G 28G 45% /var/lib/docker/overlay2/a7c9...67b2/merged

shm 64M 0 64M 0% /var/lib/docker/containers/b0d7...3b1a/mounts/shm

shm 64M 0 64M 0% /var/lib/docker/containers/3770...d3f6/mounts/shm

overlay 50G 23G 28G 45% /var/lib/docker/overlay2/4122...ca4b/merged

overlay 50G 23G 28G 45% /var/lib/docker/overlay2/ab2e...6cd8/merged
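A listing this long is easier to read as a summary. The sketch below counts mounts by the first column of `df` output; `count_by_fs` is a hypothetical helper name, and the sample rows are copied from the listing above.

```shell
#!/bin/sh
# count_by_fs: read `df` output on stdin, print "<count> <filesystem>" for
# each distinct value of the first column, most frequent first.
count_by_fs() {
    awk 'NR > 1 { c[$1]++ } END { for (k in c) print c[k], k }' |
        sort -k1,1nr -k2,2
}

# A few rows from the listing above; on the host: df -kh | count_by_fs
count_by_fs <<'EOF'
Filesystem Size Used Avail Use% Mounted on
overlay 50G 23G 28G 45% /var/lib/docker/overlay2/a5bc...e65e/merged
overlay 50G 23G 28G 45% /var/lib/docker/overlay2/5a3a...d865/merged
shm 64M 0 64M 0% /var/lib/docker/containers/d510...0a1e/mounts/shm
EOF
```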

Scaling out and in (only one server available, so not tested)

Skipped.

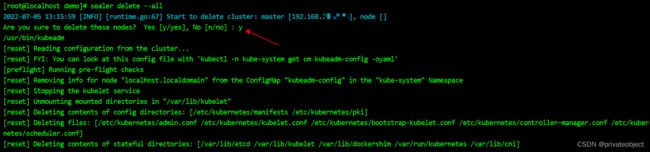

Clean up the cluster

sealer delete --help

if provider is Bare Metal Server will delete kubernetes nodes

Usage:

sealer delete [flags]

Examples:

delete to default cluster:

sealer delete --masters x.x.x.x --nodes x.x.x.x

sealer delete --masters x.x.x.x-x.x.x.y --nodes x.x.x.x-x.x.x.y

delete all:

sealer delete --all [--force]

sealer delete -f /root/.sealer/mycluster/Clusterfile [--force]

sealer delete -c my-cluster [--force]

Flags:

-f, --Clusterfile string delete a kubernetes cluster with Clusterfile Annotations

-a, --all this flags is for delete nodes, if this is true, empty all node ip

-c, --cluster string delete a kubernetes cluster with cluster name

--force We also can input an --force flag to delete cluster by force

-h, --help help for delete

-m, --masters string reduce Count or IPList to masters

-n, --nodes string reduce Count or IPList to nodes

Global Flags:

--config string config file (default is $HOME/.sealer.json)

-d, --debug turn on debug mode

--hide-path hide the log path

--hide-time hide the log time

Run the cleanup

sealer delete --all

2022-07-05 13:15:59 [INFO] [runtime.go:67] Start to delete cluster: master [192.168.xxx.xxx], node []

Are you sure to delete these nodes? Yes [y/yes], No [n/no] : y

/usr/bin/kubeadm

[reset] Reading configuration from the cluster...

[reset] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[preflight] Running pre-flight checks

[reset] Removing info for node "localhost.localdomain" from the ConfigMap "kubeadm-config" in the "kube-system" Namespace

[reset] Stopping the kubelet service

[reset] Unmounting mounted directories in "/var/lib/kubelet"

[reset] Deleting contents of config directories: [/etc/kubernetes/manifests /etc/kubernetes/pki]

[reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

[reset] Deleting contents of stateful directories: [/var/lib/etcd /var/lib/kubelet /var/lib/dockershim /var/run/kubernetes /var/lib/cni]

The reset process does not clean CNI configuration. To do so, you must remove /etc/cni/net.d

The reset process does not reset or clean up iptables rules or IPVS tables.

If you wish to reset iptables, you must do so manually by using the "iptables" command.

If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)

to reset your system's IPVS tables.

The reset process does not clean your kubeconfig files and you must remove them manually.

Please, check the contents of the $HOME/.kube/config file.

Module Size Used by

vxlan 49678 0

ip6_udp_tunnel 12755 1 vxlan

udp_tunnel 14423 1 vxlan

xt_multiport 12798 11

ipt_rpfilter 12606 1

iptable_raw 12678 1

ip_set_hash_ip 31658 1

ip_set_hash_net 36021 3

xt_set 18141 10

ip_set_hash_ipportnet 36255 1

ip_set_bitmap_port 17150 4

ip_set_hash_ipportip 31880 2

ip_set_hash_ipport 31806 8

dummy 12960 0

iptable_mangle 12695 1

xt_mark 12563 97

ip_vs_sh 12688 0

ip_vs_wrr 12697 0

ip_vs_rr 12600 7

ip_vs 145497 13 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack_netlink 36354 0

xt_addrtype 12676 8

br_netfilter 22256 0

ip6table_nat 12864 0

nf_conntrack_ipv6 18935 1

nf_defrag_ipv6 35104 1 nf_conntrack_ipv6

nf_nat_ipv6 14131 1 ip6table_nat

ip6_tables 26912 1 ip6table_nat

xt_conntrack 12760 28

iptable_filter 12810 1

ipt_MASQUERADE 12678 5

nf_nat_masquerade_ipv4 13412 1 ipt_MASQUERADE

xt_comment 12504 315

iptable_nat 12875 1

nf_conntrack_ipv4 15053 29

nf_defrag_ipv4 12729 1 nf_conntrack_ipv4

nf_nat_ipv4 14115 1 iptable_nat

nf_nat 26787 3 nf_nat_ipv4,nf_nat_ipv6,nf_nat_masquerade_ipv4

nf_conntrack 133095 9 ip_vs,nf_nat,nf_nat_ipv4,nf_nat_ipv6,xt_conntrack,nf_nat_masquerade_ipv4,nf_conntrack_netlink,nf_conntrack_ipv4,nf_conntrack_ipv6

ip_tables 27126 4 iptable_filter,iptable_mangle,iptable_nat,iptable_raw

veth 13410 0

overlay 71964 2

tun 31740 1

devlink 48345 0

ip_set 45644 7 ip_set_hash_ipportnet,ip_set_hash_net,ip_set_bitmap_port,ip_set_hash_ipportip,ip_set_hash_ip,xt_set,ip_set_hash_ipport

nfnetlink 14490 3 ip_set,nf_conntrack_netlink

bridge 151336 1 br_netfilter

stp 12976 1 bridge

llc 14552 2 stp,bridge

vmw_vsock_vmci_transport 30577 1

vsock 36526 2 vmw_vsock_vmci_transport

sunrpc 353352 1

nfit 55016 0

libnvdimm 147731 1 nfit

iosf_mbi 15582 0

crc32_pclmul 13133 0

ghash_clmulni_intel 13273 0

aesni_intel 189414 1

lrw 13286 1 aesni_intel

gf128mul 15139 1 lrw

glue_helper 13990 1 aesni_intel

ablk_helper 13597 1 aesni_intel

ppdev 17671 0

cryptd 21190 3 ghash_clmulni_intel,aesni_intel,ablk_helper

vmw_balloon 18094 0

pcspkr 12718 0

sg 40721 0

joydev 17389 0

parport_pc 28205 0

i2c_piix4 22401 0

parport 46395 2 ppdev,parport_pc

vmw_vmci 67127 1 vmw_vsock_vmci_transport

sch_fq_codel 17571 6

xfs 997127 3

libcrc32c 12644 4 xfs,ip_vs,nf_nat,nf_conntrack

sd_mod 46281 3

sr_mod 22416 0

cdrom 42556 1 sr_mod

crc_t10dif 12912 1 sd_mod

crct10dif_generic 12647 0

crct10dif_pclmul 14307 1

crct10dif_common 12595 3 crct10dif_pclmul,crct10dif_generic,crc_t10dif

crc32c_intel 22094 1

serio_raw 13434 0

ata_generic 12923 0

pata_acpi 13053 0

vmwgfx 276430 2

floppy 69432 0

drm_kms_helper 179394 1 vmwgfx

syscopyarea 12529 1 drm_kms_helper

sysfillrect 12701 1 drm_kms_helper

sysimgblt 12640 1 drm_kms_helper

fb_sys_fops 12703 1 drm_kms_helper

ttm 114635 1 vmwgfx

ata_piix 35052 0

drm 429744 5 ttm,drm_kms_helper,vmwgfx

libata 243133 3 pata_acpi,ata_generic,ata_piix

vmxnet3 58059 0

mptspi 22628 2

scsi_transport_spi 30732 1 mptspi

mptscsih 40150 1 mptspi

mptbase 106036 2 mptspi,mptscsih

drm_panel_orientation_quirks 12957 1 drm

dm_mirror 22289 0

dm_region_hash 20813 1 dm_mirror

dm_log 18411 2 dm_region_hash,dm_mirror

dm_mod 124407 10 dm_log,dm_mirror

[

{

"Id": "6d81...9aff",

"Created": "2022-07-04T10:40:28.068520236Z",

"Path": "registry",

"Args": [

"serve",

"/etc/docker/registry/config.yml"

],

"State": {

"Status": "running",

"Running": true,

"Paused": false,

"Restarting": false,

"OOMKilled": false,

"Dead": false,

"Pid": 767,

"ExitCode": 0,

"Error": "",

"StartedAt": "2022-07-04T10:40:30.304277835Z",

"FinishedAt": "0001-01-01T00:00:00Z"

},

"Image": "sha256:0d01...4fda",

"ResolvConfPath": "/var/lib/docker/containers/6d81...9aff/resolv.conf",

"HostnamePath": "/var/lib/docker/containers/6d81...9aff/hostname",

"HostsPath": "/var/lib/docker/containers/6d81...9aff/hosts",

"LogPath": "/var/lib/docker/containers/6d81...9aff/6d81...9aff-json.log",

"Name": "/sealer-registry",

"RestartCount": 0,

"Driver": "overlay2",

"Platform": "linux",

"MountLabel": "",

"ProcessLabel": "",

"AppArmorProfile": "",

"ExecIDs": null,

"HostConfig": {

"Binds": [

"/var/lib/sealer/data/my-cluster/rootfs/certs:/certs",

"/var/lib/sealer/data/my-cluster/rootfs/registry:/var/lib/registry",

"/var/lib/sealer/data/my-cluster/rootfs/etc/registry_config.yml:/etc/docker/registry/config.yml"

],

"ContainerIDFile": "",

"LogConfig": {

"Type": "json-file",

"Config": {

"max-file": "3",

"max-size": "10m"

}

},

"NetworkMode": "host",

"PortBindings": {},

"RestartPolicy": {

"Name": "always",

"MaximumRetryCount": 0

},

"AutoRemove": false,

"VolumeDriver": "",

"VolumesFrom": null,

"CapAdd": null,

"CapDrop": null,

"Capabilities": null,

"Dns": [],

"DnsOptions": [],

"DnsSearch": [],

"ExtraHosts": null,

"GroupAdd": null,

"IpcMode": "private",

"Cgroup": "",

"Links": null,

"OomScoreAdj": 0,

"PidMode": "",

"Privileged": false,

"PublishAllPorts": false,

"ReadonlyRootfs": false,

"SecurityOpt": null,

"UTSMode": "",

"UsernsMode": "",

"ShmSize": 67108864,

"Runtime": "runc",

"ConsoleSize": [

0,

0

],

"Isolation": "",

"CpuShares": 0,

"Memory": 0,

"NanoCpus": 0,

"CgroupParent": "",

"BlkioWeight": 0,

"BlkioWeightDevice": [],

"BlkioDeviceReadBps": null,

"BlkioDeviceWriteBps": null,

"BlkioDeviceReadIOps": null,

"BlkioDeviceWriteIOps": null,

"CpuPeriod": 0,

"CpuQuota": 0,

"CpuRealtimePeriod": 0,

"CpuRealtimeRuntime": 0,

"CpusetCpus": "",

"CpusetMems": "",

"Devices": [],

"DeviceCgroupRules": null,

"DeviceRequests": null,

"KernelMemory": 0,

"KernelMemoryTCP": 0,

"MemoryReservation": 0,

"MemorySwap": 0,

"MemorySwappiness": null,

"OomKillDisable": false,

"PidsLimit": null,

"Ulimits": null,

"CpuCount": 0,

"CpuPercent": 0,

"IOMaximumIOps": 0,

"IOMaximumBandwidth": 0,

"MaskedPaths": [

"/proc/asound",

"/proc/acpi",

"/proc/kcore",

"/proc/keys",

"/proc/latency_stats",

"/proc/timer_list",

"/proc/timer_stats",

"/proc/sched_debug",

"/proc/scsi",

"/sys/firmware"

],

"ReadonlyPaths": [

"/proc/bus",

"/proc/fs",

"/proc/irq",

"/proc/sys",

"/proc/sysrq-trigger"

]

},

"GraphDriver": {

"Data": {

"LowerDir": "/var/lib/docker/overlay2/a5bc...e65e-init/diff:/var/lib/docker/overlay2/362e...7587f2d449685a3a6/diff:/var/lib/docker/overlay2/b907...c3f7/diff:/var/lib/docker/overlay2/8f53...ae07/diff",

"MergedDir": "/var/lib/docker/overlay2/a5bc12b6f24d74fb23c72e5b051cde8262853d0fdf612ccd060384663ec5e65e/merged",

"UpperDir": "/var/lib/docker/overlay2/a5bc...3ec5e65e/diff",

"WorkDir": "/var/lib/docker/overlay2/a5bc...e65e/work"

},

"Name": "overlay2"

},

"Mounts": [

{

"Type": "bind",

"Source": "/var/lib/sealer/data/my-cluster/rootfs/registry",

"Destination": "/var/lib/registry",

"Mode": "",

"RW": true,

"Propagation": "rprivate"

},

{

"Type": "bind",

"Source": "/var/lib/sealer/data/my-cluster/rootfs/etc/registry_config.yml",

"Destination": "/etc/docker/registry/config.yml",

"Mode": "",

"RW": true,

"Propagation": "rprivate"

},

{

"Type": "bind",

"Source": "/var/lib/sealer/data/my-cluster/rootfs/certs",

"Destination": "/certs",

"Mode": "",

"RW": true,

"Propagation": "rprivate"

}

],

"Config": {

"Hostname": "localhost.localdomain",

"Domainname": "",

"User": "",

"AttachStdin": false,

"AttachStdout": false,

"AttachStderr": false,

"ExposedPorts": {

"5000/tcp": {}

},

"Tty": false,

"OpenStdin": false,

"StdinOnce": false,

"Env": [

"REGISTRY_HTTP_TLS_CERTIFICATE=/certs/sea.hub.crt",

"REGISTRY_HTTP_TLS_KEY=/certs/sea.hub.key",

"PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin"

],

"Cmd": [

"serve",

"/etc/docker/registry/config.yml"

],

"Image": "registry:2.7.1",

"Volumes": {

"/var/lib/registry": {}

},

"WorkingDir": "",

"Entrypoint": [

"registry"

],

"OnBuild": null,

"Labels": {}

},

"NetworkSettings": {

"Bridge": "",

"SandboxID": "ae1d...ac1b",

"HairpinMode": false,

"LinkLocalIPv6Address": "",

"LinkLocalIPv6PrefixLen": 0,

"Ports": {},

"SandboxKey": "/var/run/docker/netns/default",

"SecondaryIPAddresses": null,

"SecondaryIPv6Addresses": null,

"EndpointID": "",

"Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"IPAddress": "",

"IPPrefixLen": 0,

"IPv6Gateway": "",

"MacAddress": "",

"Networks": {

"host": {

"IPAMConfig": null,

"Links": null,

"Aliases": null,

"NetworkID": "3ed7...98c3e",

"EndpointID": "ebaa...1ef4",

"Gateway": "",

"IPAddress": "",

"IPPrefixLen": 0,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"MacAddress": "",

"DriverOpts": null

}

}

}

}

]

sealer-registry

2022-07-05 13:16:28 [INFO] [local.go:316] Succeeded in deleting current cluster
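The kubeadm reset messages in the output above point out that the CNI configuration and kubeconfig files are not removed automatically. A small sketch for checking what is still on disk; `list_existing` is a made-up helper name, and the two paths are the ones named in those messages.

```shell
#!/bin/sh
# list_existing PATH...: print each argument that still exists on disk.
list_existing() {
    for p in "$@"; do
        [ -e "$p" ] && echo "$p"
    done
    return 0
}

# Paths named in the kubeadm reset messages; prints nothing if both are gone.
list_existing /etc/cni/net.d "${HOME:-/root}/.kube/config"
```

The script only reports; whether to delete what it finds is left to the operator.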

Remove the image

sealer rmi kubernetes:v1.19.8

Mounts after cleanup

df -kh

You can see that some disk space has been reclaimed

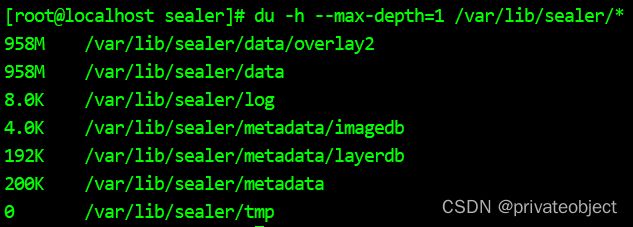

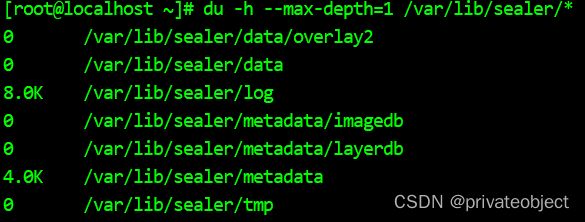

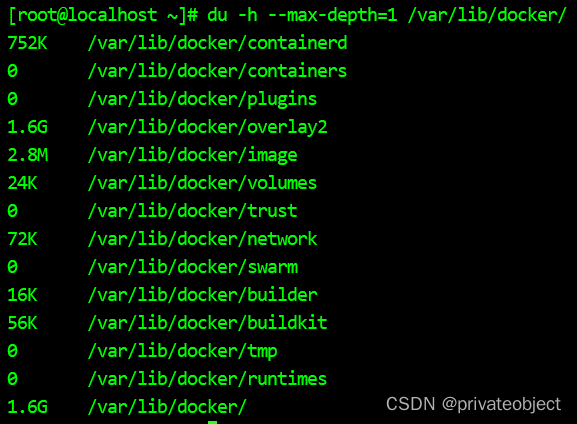

Check the size of the sealer and docker data directories

du -h --max-depth=1 /var/lib/sealer/*

du -h --max-depth=1 /var/lib/docker/

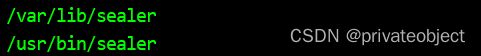

find / -name docker

find / -name sealer

Other

sealer images

| IMAGE NAME | IMAGE ID | ARCH | VARIANT | CREATE | SIZE |

| kubernetes:v1.19.8 | 46f8...7618 | amd64 | | 2022-07-04 18:37:53 | 956.60MB |

# Clean up docker: no running docker was found, so this is how I handled it for now; the consequences are not yet clear

rm -rf /var/lib/docker
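Since the consequences of deleting the directory outright are unclear, a more cautious option is to rename it first and delete the copy only once nothing is missed. This is a sketch of that idea and not what was run in this demo; `set_aside` is a hypothetical helper, and it assumes the docker daemon is not running.

```shell
#!/bin/sh
# set_aside DIR: rename DIR to DIR.bak-<timestamp> and print the new name,
# so the data can be restored if removing it turns out to break something.
set_aside() {
    dir=${1%/}
    [ -d "$dir" ] || { echo "no such directory: $dir" >&2; return 1; }
    backup="$dir.bak-$(date +%Y%m%d%H%M%S)"
    mv -- "$dir" "$backup" && echo "$backup"
}

# On the host, with the docker daemon stopped (not executed here):
#   set_aside /var/lib/docker
```

The renamed directory still occupies disk space until it is removed, so this trades immediate space reclamation for the ability to roll back.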