Using common Kubernetes (k8s) resource objects

目录

一、kubernetes内置资源对象

1.1、kubernetes内置资源对象介绍

1.2、kubernetes资源对象操作命令

二、job与cronjob计划任务

2.1、job计划任务

2.2、cronjob计划任务

三、RC/RS副本控制器

3.1、RC副本控制器

3.2、RS副本控制器

3.3、RS更新pod

四、Deployment副本控制器

4.1、Deployment副本控制器

五、Kubernetes之Service

5.1、Kubernetes Service介绍

5.2、service类型

六、Kubernetes之configmap

七、Kubernetes之Secret

7.1、Secret简介

7.2、Secret简介类型

7.3、Secret类型-Opaque格式

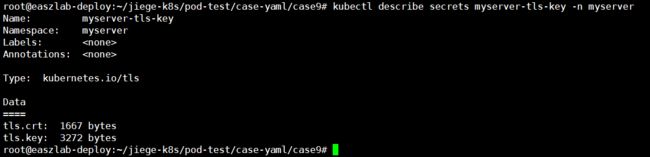

7.4、Secret类型-kubernetes.io/tls-为nginx提供证书

7.5、Secret-kubernetes.io/dockerconfigjson类型

1. Kubernetes built-in resource objects

1.1 Introduction to Kubernetes built-in resource objects

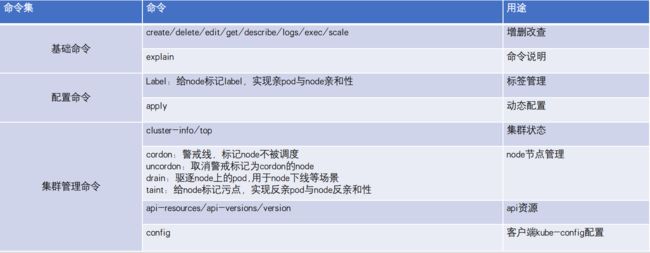

1.2 Commands for operating on Kubernetes resource objects

Official documentation: https://kubernetes.io/zh-cn/docs/concepts/workloads/controllers/deployment/

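2. Job and CronJob scheduled tasks

2.1 Job tasks

A Job is a one-off task. Jobs are commonly used for environment initialization, for example preparing initial data for MySQL or Elasticsearch.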

```
root@easzlab-deploy:~/jiege-k8s/pod-test# cat 1.job.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: job-mysql-init
spec:
  template:
    spec:
      containers:
      - name: job-mysql-init-container
        image: centos:7.9.2009
        command: ["/bin/sh"]
        args: ["-c", "echo data init job at `date +%Y-%m-%d_%H-%M-%S` >> /cache/data.log"]
        volumeMounts:
        - mountPath: /cache
          name: cache-volume
      volumes:
      - name: cache-volume
        hostPath:
          path: /tmp/jobdata
      restartPolicy: Never
```

```
root@easzlab-deploy:~/pod-test# kubectl apply -f 1.job.yaml
job.batch/job-mysql-init created
root@easzlab-deploy:~/pod-test# kubectl get pod -A
NAMESPACE NAME READY STATUS RESTARTS AGE
default job-mysql-init-n29g9 0/1 ContainerCreating 0 14s
kube-system calico-kube-controllers-5c8bb696bb-fxbmr 1/1 Running 1 (3d7h ago) 7d18h
kube-system calico-node-2qtfm 1/1 Running 1 (3d7h ago) 7d18h
kube-system calico-node-8l78t 1/1 Running 1 (3d7h ago) 7d18h
kube-system calico-node-9b75m 1/1 Running 1 (3d7h ago) 7d18h
kube-system calico-node-k75jh 1/1 Running 1 (3d7h ago) 7d18h
kube-system calico-node-kmbhs 1/1 Running 1 (3d7h ago) 7d18h
kube-system calico-node-lxfk9 1/1 Running 1 (3d7h ago) 7d18h
kube-system coredns-69548bdd5f-6df7j 1/1 Running 1 (3d7h ago) 7d6h
kube-system coredns-69548bdd5f-nl5qc 1/1 Running 1 (3d7h ago) 7d6h
kubernetes-dashboard dashboard-metrics-scraper-8c47d4b5d-2d275 1/1 Running 1 (3d7h ago) 7d6h
kubernetes-dashboard kubernetes-dashboard-5676d8b865-6l8n8 1/1 Running 1 (3d7h ago) 7d6h
linux70 linux70-tomcat-app1-deployment-5d666575cc-kbjhk 1/1 Running 1 (3d7h ago) 5d7h
myserver linux70-nginx-deployment-55dc5fdcf9-58ll2 1/1 Running 0 20h
myserver linux70-nginx-deployment-55dc5fdcf9-6xcjk 1/1 Running 0 20h
myserver linux70-nginx-deployment-55dc5fdcf9-cxg5m 1/1 Running 0 20h
myserver linux70-nginx-deployment-55dc5fdcf9-gv2gk 1/1 Running 0 20h
velero-system velero-858b9459f9-5mxxx 1/1 Running 0 21h
```
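The Job above relies on the default retry and cleanup behaviour. The minimal sketch below is a variant of `1.job.yaml` and is not part of the original lab. It sets the standard `batch/v1` JobSpec fields that bound retries and runtime, and it lets Kubernetes delete the finished Job automatically (`ttlSecondsAfterFinished` has been GA since Kubernetes 1.23). The name `job-mysql-init-sketch` and all numeric values are illustrative assumptions.

```yaml
# Sketch only: 1.job.yaml with explicit retry, deadline and cleanup settings.
# The name and all numeric values are illustrative assumptions.
apiVersion: batch/v1
kind: Job
metadata:
  name: job-mysql-init-sketch      # hypothetical name
spec:
  completions: 1                   # Pods that must finish successfully
  parallelism: 1                   # Pods allowed to run at the same time
  backoffLimit: 3                  # retries before the Job is marked Failed
  activeDeadlineSeconds: 300       # hard runtime limit for the whole Job
  ttlSecondsAfterFinished: 3600    # delete the finished Job and its Pods after 1 hour
  template:
    spec:
      containers:
      - name: job-mysql-init-container
        image: centos:7.9.2009
        command: ["/bin/sh"]
        args: ["-c", "echo data init job at `date +%Y-%m-%d_%H-%M-%S` >> /cache/data.log"]
        volumeMounts:
        - mountPath: /cache
          name: cache-volume
      volumes:
      - name: cache-volume
        hostPath:
          path: /tmp/jobdata
      restartPolicy: Never         # a Job's Pods must use Never or OnFailure
```

With `restartPolicy: Never`, each failed attempt creates a new Pod, and each one counts against `backoffLimit`.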

2.2 CronJob scheduled tasks

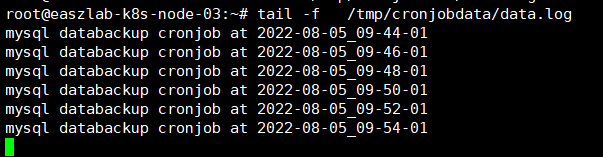

A CronJob is a periodic task. CronJobs are widely used for scheduled database backups.

```
root@easzlab-deploy:~/jiege-k8s/pod-test# cat 2.cronjob.yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: cronjob-mysql-databackup
spec:
  schedule: "*/2 * * * *"
  jobTemplate:
    spec:
      template:
        spec:
          containers:
          - name: cronjob-mysql-databackup-pod
            image: centos:7.9.2009
            command: ["/bin/sh"]
            args: ["-c", "echo mysql databackup cronjob at `date +%Y-%m-%d_%H-%M-%S` >> /cache/data.log"]
            volumeMounts:
            - mountPath: /cache
              name: cache-volume
          volumes:
          - name: cache-volume
            hostPath:
              path: /tmp/cronjobdata
          restartPolicy: OnFailure
```

```
root@easzlab-deploy:~/pod-test# kubectl apply -f 2.cronjob.yaml
root@easzlab-deploy:~/pod-test# kubectl get pod -A -owide
NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
default cronjob-mysql-databackup-27661544-wntbb 0/1 Completed 0 4m3s 10.200.2.13 172.16.88.159 <none> <none>
default cronjob-mysql-databackup-27661546-lbf2t 0/1 Completed 0 2m3s 10.200.2.14 172.16.88.159 <none> <none>
default cronjob-mysql-databackup-27661548-8p9j6 0/1 Completed 0 3s 10.200.2.15 172.16.88.159 <none> <none>
kube-system calico-kube-controllers-5c8bb696bb-fxbmr 1/1 Running 1 (3d7h ago) 7d18h 172.16.88.159 172.16.88.159 <none> <none>
kube-system calico-node-2qtfm 1/1 Running 1 (3d7h ago) 7d18h 172.16.88.158 172.16.88.158 <none> <none>
kube-system calico-node-8l78t 1/1 Running 1 (3d7h ago) 7d18h 172.16.88.154 172.16.88.154 <none> <none>
kube-system calico-node-9b75m 1/1 Running 1 (3d7h ago) 7d18h 172.16.88.156 172.16.88.156 <none> <none>
kube-system calico-node-k75jh 1/1 Running 1 (3d7h ago) 7d18h 172.16.88.157 172.16.88.157 <none> <none>
kube-system calico-node-kmbhs 1/1 Running 1 (3d7h ago) 7d18h 172.16.88.159 172.16.88.159 <none> <none>
kube-system calico-node-lxfk9 1/1 Running 1 (3d7h ago) 7d18h 172.16.88.155 172.16.88.155 <none> <none>
kube-system coredns-69548bdd5f-6df7j 1/1 Running 1 (3d7h ago) 7d6h 10.200.2.6 172.16.88.159 <none> <none>
kube-system coredns-69548bdd5f-nl5qc 1/1 Running 1 (3d7h ago) 7d6h 10.200.40.199 172.16.88.157 <none> <none>
kubernetes-dashboard dashboard-metrics-scraper-8c47d4b5d-2d275 1/1 Running 1 (3d7h ago) 7d6h 10.200.40.197 172.16.88.157 <none> <none>
kubernetes-dashboard kubernetes-dashboard-5676d8b865-6l8n8 1/1 Running 1 (3d7h ago) 7d6h 10.200.40.198 172.16.88.157 <none> <none>
linux70 linux70-tomcat-app1-deployment-5d666575cc-kbjhk 1/1 Running 1 (3d7h ago) 5d7h 10.200.233.67 172.16.88.158 <none> <none>
myserver linux70-nginx-deployment-55dc5fdcf9-58ll2 1/1 Running 0 21h 10.200.2.10 172.16.88.159 <none> <none>
myserver linux70-nginx-deployment-55dc5fdcf9-6xcjk 1/1 Running 0 21h 10.200.2.9 172.16.88.159 <none> <none>
myserver linux70-nginx-deployment-55dc5fdcf9-cxg5m 1/1 Running 0 21h 10.200.2.11 172.16.88.159 <none> <none>
myserver linux70-nginx-deployment-55dc5fdcf9-gv2gk 1/1 Running 0 21h 10.200.233.69 172.16.88.158 <none> <none>
velero-system velero-858b9459f9-5mxxx 1/1 Running 0 21h 10.200.40.202 172.16.88.157 <none> <none>
```
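The schedule `*/2 * * * *` fires every two minutes. That matches the three Completed Pods above, whose Job name suffixes (27661544, 27661546, 27661548) step by 2 and appear to encode the scheduled time in minutes. Only three finished runs are shown because, by default, a CronJob keeps the last 3 successful Jobs and the last 1 failed Job.

For a real backup job, the sketch below makes overlap handling and history retention explicit, using standard `batch/v1` CronJob fields. The name, the daily 02:00 schedule, the deadline and the `backoffLimit` are illustrative assumptions, not values from the original lab.

```yaml
# Sketch only: 2.cronjob.yaml with explicit concurrency and history settings.
apiVersion: batch/v1
kind: CronJob
metadata:
  name: cronjob-mysql-databackup-sketch   # hypothetical name
spec:
  schedule: "0 2 * * *"                   # minute hour day-of-month month day-of-week: daily at 02:00
  concurrencyPolicy: Forbid               # skip a run while the previous Job is still running
  startingDeadlineSeconds: 120            # give up on a run that could not start within 2 minutes
  successfulJobsHistoryLimit: 3           # same as the default
  failedJobsHistoryLimit: 1               # same as the default
  jobTemplate:
    spec:
      backoffLimit: 2                     # assumed retry budget per run
      template:
        spec:
          containers:
          - name: cronjob-mysql-databackup-pod
            image: centos:7.9.2009
            command: ["/bin/sh"]
            args: ["-c", "echo mysql databackup cronjob at `date +%Y-%m-%d_%H-%M-%S` >> /cache/data.log"]
            volumeMounts:
            - mountPath: /cache
              name: cache-volume
          volumes:
          - name: cache-volume
            hostPath:
              path: /tmp/cronjobdata
          restartPolicy: OnFailure
```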

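3. RC/RS replica controllers

3.1 The RC (ReplicationController) replica controller

ReplicationController is the first-generation Pod replica controller. Its selector supports only equality-based matching (`=`, `!=`).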

https://kubernetes.io/zh/docs/concepts/workloads/controllers/replicationcontroller/

https://kubernetes.io/zh/docs/concepts/overview/working-with-objects/labels/

```
root@easzlab-deploy:~/jiege-k8s/pod-test# cat 1.rc.yaml
apiVersion: v1
kind: ReplicationController
metadata:
  name: ng-rc
spec:
  replicas: 2
  selector:
    app: ng-rc-80
  template:
    metadata:
      labels:
        app: ng-rc-80
    spec:
      containers:
      - name: ng-rc-80
        image: nginx
        ports:
        - containerPort: 80
```

```
root@easzlab-deploy:~/pod-test# kubectl apply -f 1.rc.yaml
replicationcontroller/ng-rc created
root@easzlab-deploy:~/pod-test# kubectl get pod -A
NAMESPACE NAME READY STATUS RESTARTS AGE
default ng-rc-528fl 1/1 Running 0 2m8s
default ng-rc-d6zqx 1/1 Running 0 2m8s
kube-system calico-kube-controllers-5c8bb696bb-fxbmr 1/1 Running 1 (3d10h ago) 7d21h
kube-system calico-node-2qtfm 1/1 Running 1 (3d10h ago) 7d21h
kube-system calico-node-8l78t 1/1 Running 1 (3d10h ago) 7d21h
kube-system calico-node-9b75m 1/1 Running 1 (3d10h ago) 7d21h
kube-system calico-node-k75jh 1/1 Running 1 (3d10h ago) 7d21h
kube-system calico-node-kmbhs 1/1 Running 1 (3d10h ago) 7d21h
kube-system calico-node-lxfk9 1/1 Running 1 (3d10h ago) 7d21h
kube-system coredns-69548bdd5f-6df7j 1/1 Running 1 (3d10h ago) 7d9h
kube-system coredns-69548bdd5f-nl5qc 1/1 Running 1 (3d10h ago) 7d9h
kubernetes-dashboard dashboard-metrics-scraper-8c47d4b5d-2d275 1/1 Running 1 (3d10h ago) 7d9h
kubernetes-dashboard kubernetes-dashboard-5676d8b865-6l8n8 1/1 Running 1 (3d10h ago) 7d9h
linux70 linux70-tomcat-app1-deployment-5d666575cc-kbjhk 1/1 Running 1 (3d10h ago) 5d9h
myserver linux70-nginx-deployment-55dc5fdcf9-58ll2 1/1 Running 0 23h
myserver linux70-nginx-deployment-55dc5fdcf9-6xcjk 1/1 Running 0 23h
myserver linux70-nginx-deployment-55dc5fdcf9-cxg5m 1/1 Running 0 23h
myserver linux70-nginx-deployment-55dc5fdcf9-gv2gk 1/1 Running 0 23h
velero-system velero-858b9459f9-5mxxx 1/1 Running 0 24h
```

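3.2 The RS (ReplicaSet) replica controller

ReplicaSet is the second-generation Pod replica controller. It differs from the ReplicationController in its selector support: in addition to equality-based matching, its selector also supports set-based operators such as `In` and `NotIn`.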

https://kubernetes.io/zh/docs/concepts/workloads/controllers/replicaset/

```
root@easzlab-deploy:~/jiege-k8s/pod-test# cat 2.rs.yaml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: frontend
spec:
  replicas: 2
  selector:
    matchExpressions:
    - {key: app, operator: In, values: [ng-rs-80,ng-rs-81]}
  template:
    metadata:
      labels:
        app: ng-rs-80
    spec:
      containers:
      - name: ng-rs-80
        image: nginx
        ports:
        - containerPort: 80
```

```
root@easzlab-deploy:~/pod-test# kubectl apply -f 2.rs.yaml
replicaset.apps/frontend created
root@easzlab-deploy:~/pod-test# kubectl get pod -A
NAMESPACE NAME READY STATUS RESTARTS AGE
default frontend-jl67s 1/1 Running 0 97s
default frontend-w7rb5 1/1 Running 0 97s
kube-system calico-kube-controllers-5c8bb696bb-fxbmr 1/1 Running 1 (3d10h ago) 7d21h
kube-system calico-node-2qtfm 1/1 Running 1 (3d10h ago) 7d21h
kube-system calico-node-8l78t 1/1 Running 1 (3d10h ago) 7d21h
kube-system calico-node-9b75m 1/1 Running 1 (3d10h ago) 7d21h
kube-system calico-node-k75jh 1/1 Running 1 (3d10h ago) 7d21h
kube-system calico-node-kmbhs 1/1 Running 1 (3d10h ago) 7d21h
kube-system calico-node-lxfk9 1/1 Running 1 (3d10h ago) 7d21h
kube-system coredns-69548bdd5f-6df7j 1/1 Running 1 (3d10h ago) 7d10h
kube-system coredns-69548bdd5f-nl5qc 1/1 Running 1 (3d10h ago) 7d10h
kubernetes-dashboard dashboard-metrics-scraper-8c47d4b5d-2d275 1/1 Running 1 (3d10h ago) 7d10h
kubernetes-dashboard kubernetes-dashboard-5676d8b865-6l8n8 1/1 Running 1 (3d10h ago) 7d10h
linux70 linux70-tomcat-app1-deployment-5d666575cc-kbjhk 1/1 Running 1 (3d10h ago) 5d10h
myserver linux70-nginx-deployment-55dc5fdcf9-58ll2 1/1 Running 0 24h
myserver linux70-nginx-deployment-55dc5fdcf9-6xcjk 1/1 Running 0 24h
myserver linux70-nginx-deployment-55dc5fdcf9-cxg5m 1/1 Running 0 24h
myserver linux70-nginx-deployment-55dc5fdcf9-gv2gk 1/1 Running 0 24h
velero-system velero-858b9459f9-5mxxx 1/1 Running 0 24h
```
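The ReplicaSet above uses only the `In` operator. The hedged sketch below combines `matchLabels` with the other set-based operators: `NotIn`, `Exists`, and `DoesNotExist`, which also exists. All requirements are ANDed, and the Pod template's labels must satisfy every one of them, otherwise the API server rejects the object. `NotIn` is also satisfied when the label is absent, which is why the template carries no `env` label. The name `frontend-sketch` and the labels `tier`, `env` and `release` are hypothetical.

```yaml
# Sketch only: hypothetical ReplicaSet showing equality- and set-based selectors together.
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: frontend-sketch            # hypothetical name
spec:
  replicas: 2
  selector:
    matchLabels:
      tier: web                    # equality-based: label tier must equal web
    matchExpressions:              # set-based; ANDed with matchLabels
    - {key: app, operator: In, values: [ng-rs-80, ng-rs-81]}
    - {key: env, operator: NotIn, values: [test]}
    - {key: release, operator: Exists}
  template:
    metadata:
      labels:                      # must satisfy every requirement above
        tier: web
        app: ng-rs-80
        release: stable
    spec:
      containers:
      - name: ng-rs-80
        image: nginx
        ports:
        - containerPort: 80
```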

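3.3 Updating Pods through an RS

To update the image manually, target the ReplicaSet `frontend` and its container `ng-rs-80` created above:

```
kubectl set image replicaset/frontend ng-rs-80=nginx:1.18.0
```

A ReplicaSet does not perform a rolling update. This command only changes the Pod template, so existing Pods keep the old image. Only Pods created afterwards, for example after the old ones are deleted, use the new image.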

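4. The Deployment replica controller

4.1 The Deployment replica controller

A Deployment provides declarative updates for Pods and ReplicaSets. It is a higher-level controller than a ReplicaSet: it does everything an RS does and adds rolling updates, rollbacks, cleanup of old revisions, canary-style releases and more.

Official documentation: https://kubernetes.io/zh-cn/docs/concepts/workloads/controllers/deployment/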

```
root@easzlab-deploy:~/jiege-k8s/pod-test# cat 1.deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3   # number of replicas
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.14.2
        ports:
        - containerPort: 80
root@easzlab-deploy:~/jiege-k8s/pod-test# kubectl apply -f 1.deployment.yaml
deployment.apps/nginx-deployment created
root@easzlab-deploy:~/jiege-k8s/pod-test# kubectl get pod
NAME READY STATUS RESTARTS AGE
mysql-77d55bfdd8-cbtcz 1/1 Running 2 (16h ago) 39h
nginx-deployment-6595874d85-hm5gx 1/1 Running 0 19m
nginx-deployment-6595874d85-wdwx9 1/1 Running 0 19m
nginx-deployment-6595874d85-z8dsf 1/1 Running 0 19m
```
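Rolling updates are the main thing a Deployment adds on top of a ReplicaSet. The sketch below makes the update strategy of the same `nginx-deployment` explicit. The strategy values are illustrative assumptions; the defaults are `maxSurge: 25%`, `maxUnavailable: 25%` and `revisionHistoryLimit: 10`. Re-applying the Deployment with a different image triggers a rolling update.

```yaml
# Sketch only: 1.deployment.yaml with an explicit rolling-update strategy.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3
  revisionHistoryLimit: 10         # old ReplicaSets kept for rollback
  strategy:
    type: RollingUpdate            # the default; the alternative is Recreate
    rollingUpdate:
      maxSurge: 1                  # at most 1 Pod above replicas during the update
      maxUnavailable: 0            # never go below replicas ready Pods
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.16.1        # changed from 1.14.2, which starts a rollout
        ports:
        - containerPort: 80
```

After applying it, `kubectl rollout status deployment/nginx-deployment` follows the rollout, and `kubectl rollout undo deployment/nginx-deployment` returns to the previous revision.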

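5. Kubernetes Service

5.1 Introduction to Kubernetes Services

A Pod gets a new IP address when it is recreated, so Pods that reach each other directly by Pod IP will eventually fail to connect. A Service decouples clients from the application: it dynamically selects its backend endpoints through label matching.

kube-proxy watches the kube-apiserver. Whenever a Service changes (its definition is modified through the Kubernetes API), kube-proxy adjusts the corresponding load-balancing rules, so forwarding always reflects the Service's latest state.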

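kube-proxy has three proxy modes:

- userspace: before Kubernetes 1.1.

- iptables: Kubernetes 1.2 up to 1.11; on Linux it is still the default mode unless another mode is configured.

- ipvs: Kubernetes 1.11 and later. If IPVS is not enabled on the node, kube-proxy automatically falls back to iptables.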
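How the mode is selected depends on the installer. With kubeadm, for example, kube-proxy reads a `KubeProxyConfiguration` stored in the `kube-proxy` ConfigMap in `kube-system`. kubeasz, the tool used in this lab, generates its own kube-proxy configuration file, so where this fragment goes in your cluster is an assumption. The minimal fragment below selects IPVS mode with round-robin scheduling. The IPVS kernel modules must be available on each node.

```yaml
# Sketch only: fragment of a kube-proxy configuration file (passed to kube-proxy via --config).
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: "ipvs"          # when left empty, Linux uses iptables
ipvs:
  scheduler: "rr"     # round robin; other IPVS schedulers include lc, sh and wrr
```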

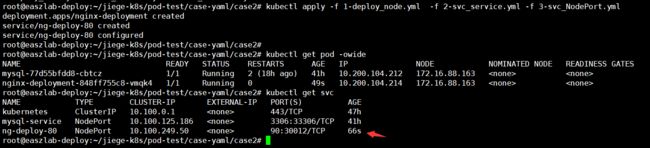

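5.2 Service types

- ClusterIP: for access from inside the cluster by Service name.

- NodePort: lets clients outside the Kubernetes cluster actively reach services running inside the cluster.

- LoadBalancer: exposes services in public-cloud environments.

- ExternalName: maps a service outside the k8s cluster into the cluster, so Pods inside the cluster can reach the external service through a fixed Service name. It is sometimes also used to let Pods in different namespaces reach each other.

Example. In these manifests, `port` is the port the Service listens on and `targetPort` is the container port traffic is forwarded to. For a NodePort Service, `nodePort` is the port opened on every node.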

```
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case2# cat 1-deploy_node.yml
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    #matchLabels: #rs or deployment
    #  app: ng-deploy3-80
    matchExpressions:
    - {key: app, operator: In, values: [ng-deploy-80,ng-rs-81]}
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx:1.16.1
        ports:
        - containerPort: 80
      #nodeSelector:
      #  env: group1
```

```
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case2# cat 2-svc_service.yml
apiVersion: v1
kind: Service
metadata:
  name: ng-deploy-80
spec:
  ports:
  - name: http
    port: 88
    targetPort: 80
    protocol: TCP
  type: ClusterIP
  selector:
    app: ng-deploy-80
```

```
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case2# cat 3-svc_NodePort.yml
apiVersion: v1
kind: Service
metadata:
  name: ng-deploy-80
spec:
  ports:
  - name: http
    port: 90
    targetPort: 80
    nodePort: 30012
    protocol: TCP
  type: NodePort
  selector:
    app: ng-deploy-80
```
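ExternalName is described above but has no example. The minimal sketch below fills that gap; both Services are hypothetical.

- The first maps an assumed external host `mysql.example.com` to an in-cluster name.
- The second lets Pods in the `myserver` namespace reach the `mysql-service` Service in `default` under the short name `mysql`. It assumes the default cluster domain `cluster.local`.

An ExternalName Service only returns a DNS CNAME record. It does not proxy traffic or remap ports.

```yaml
# Sketch only: hypothetical ExternalName Services.
apiVersion: v1
kind: Service
metadata:
  name: mysql-external             # hypothetical name
  namespace: default
spec:
  type: ExternalName
  externalName: mysql.example.com  # hypothetical external DNS name
---
apiVersion: v1
kind: Service
metadata:
  name: mysql                      # Pods in myserver can now connect to "mysql"
  namespace: myserver
spec:
  type: ExternalName
  externalName: mysql-service.default.svc.cluster.local   # assumes cluster domain cluster.local
```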

6. Kubernetes ConfigMap

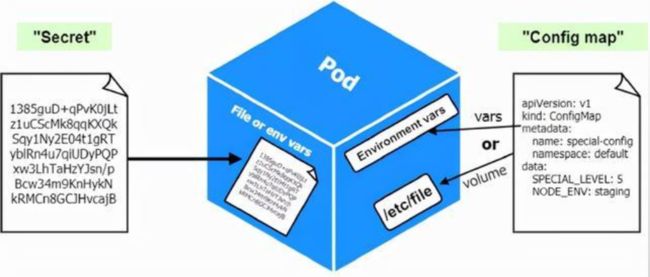

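A ConfigMap decouples configuration from the image. The configuration is stored in a ConfigMap object, which is then mounted into the Pod as a volume (or injected as environment variables). The Pod imports the configuration without the image being rebuilt.

Use cases:

- Define environment variables for a Pod through a ConfigMap.

- Pass command-line arguments to a Pod through a ConfigMap, for example the account name and password in `mysql -u ... -p ...`. For passwords, a Secret (section 7) is the more appropriate object.

- Provide configuration files to the services in a Pod's containers through a ConfigMap; the files are mounted into the container.

Notes:

- A ConfigMap must be created before the Pod that uses it.

- A Pod can only use ConfigMaps in its own namespace; ConfigMaps cannot be used across namespaces.

- ConfigMaps are normally used for configuration that is not sensitive and needs no encryption.

- A ConfigMap is meant for small amounts of configuration: the data it stores cannot exceed 1 MiB.

Example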

```
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case6# cat deploy_configmap.yml
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-config
data:
  default: |
    server {
      listen 80;
      server_name www.mysite.com;
      index index.html;

      location / {
        root /data/nginx/html;
        if (!-e $request_filename) {
          rewrite ^/(.*) /index.html last;
        }
      }
    }
---
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-8080
        image: tomcat
        ports:
        - containerPort: 8080
        volumeMounts:
        - name: nginx-config
          mountPath: /data
      - name: ng-deploy-80
        image: nginx
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /data/nginx/html
          name: nginx-static-dir
        - name: nginx-config
          mountPath: /etc/nginx/conf.d
      volumes:
      - name: nginx-static-dir
        hostPath:
          path: /data/nginx/linux70
      - name: nginx-config
        configMap:
          name: nginx-config
          items:
          - key: default
            path: mysite.conf
---
apiVersion: v1
kind: Service
metadata:
  name: ng-deploy-80
spec:
  ports:
  - name: http
    port: 81
    targetPort: 80
    nodePort: 30019
    protocol: TCP
  type: NodePort
  selector:
    app: ng-deploy-80
```

Install and verify:

```
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case6# kubectl get pod
NAME READY STATUS RESTARTS AGE
mysql-77d55bfdd8-cbtcz 1/1 Running 2 (18h ago) 41h
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case6# kubectl get configmap
NAME DATA AGE
istio-ca-root-cert 1 40h
kube-root-ca.crt 1 47h
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case6# kubectl apply -f deploy_configmap.yml
configmap/nginx-config created
deployment.apps/nginx-deployment created
service/ng-deploy-80 created
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case6# kubectl get pod -owide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
mysql-77d55bfdd8-cbtcz 1/1 Running 2 (18h ago) 41h 10.200.104.212 172.16.88.163 <none> <none>
nginx-deployment-5699c4696d-gr4gm 2/2 Running 0 27s 10.200.104.216 172.16.88.163 <none> <none>
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case6# kubectl get configmap
NAME DATA AGE
istio-ca-root-cert 1 40h
kube-root-ca.crt 1 47h
nginx-config 1 32s
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case6# kubectl get configmap nginx-config -oyaml
apiVersion: v1
data:
  default: |
    server {
      listen 80;
      server_name www.mysite.com;
      index index.html;

      location / {
        root /data/nginx/html;
        if (!-e $request_filename) {
          rewrite ^/(.*) /index.html last;
        }
      }
    }
kind: ConfigMap
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","data":{"default":"server {\n listen 80;\n server_name www.mysite.com;\n index index.html;\n\n location / {\n root /data/nginx/html;\n if (!-e $request_filename) {\n rewrite ^/(.*) /index.html last;\n }\n }\n}\n"},"kind":"ConfigMap","metadata":{"annotations":{},"name":"nginx-config","namespace":"default"}}
  creationTimestamp: "2022-10-20T08:29:50Z"
  name: nginx-config
  namespace: default
  resourceVersion: "388823"
  uid: 1a04f3c2-bc33-4ddc-ac0a-f726c9fa33f6

root@easzlab-deploy:~# kubectl get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.100.0.1 <none> 443/TCP 47h
mysql-service NodePort 10.100.125.186 <none> 3306:33306/TCP 41h
ng-deploy-80 NodePort 10.100.80.101 <none> 81:30019/TCP 2m16s
```

Passing ConfigMap values to a container as environment variables (with literal values as well):

```
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case6# cat deploy_configmapenv.yml
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-config
data:
  username: user1
---
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ng-deploy-80
  template:
    metadata:
      labels:
        app: ng-deploy-80
    spec:
      containers:
      - name: ng-deploy-80
        image: nginx
        env:
        - name: "magedu"
          value: "n70"
        - name: MY_USERNAME
          valueFrom:
            configMapKeyRef:
              name: nginx-config
              key: username
        ports:
        - containerPort: 80
```

Install and verify:

```
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case6# kubectl apply -f deploy_configmapenv.yml
configmap/nginx-config configured
deployment.apps/nginx-deployment configured
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case6# kubectl get configmap -oyaml
apiVersion: v1
items:
- apiVersion: v1
  data:
    root-cert.pem: |
      -----BEGIN CERTIFICATE-----
      MIIC/DCCAeSgAwIBAgIQOeHImLiidfxNM+2MuCKFMDANBgkqhkiG9w0BAQsFADAY
      MRYwFAYDVQQKEw1jbHVzdGVyLmxvY2FsMB4XDTIyMTAxODE2MjIzN1oXDTMyMTAx
      NTE2MjIzN1owGDEWMBQGA1UEChMNY2x1c3Rlci5sb2NhbDCCASIwDQYJKoZIhvcN
      AQEBBQADggEPADCCAQoCggEBALJL3P9+3f3SnYE8fFuitxosDPobOAkTy4kuGIMq
      68SzumFalYz5LjlBQpTfo0Hv/OXWWctiJuUm/oJs4jVLhruALQ1JjV5EK82iiwQo
      KypBaUHL1ql5AHBMKmmwqLSo/yd/zNqmU/iwasVN7G/ykAfqaapEvFbnJJhJT0Dz
      0amhRs/oPB1umgfwmiRYrCTZu9iKihBaYjbkmJ6o4/oUCw1Pse1PZLt4MkctTSiZ
      WXvtTF9YyQCqSAe62mVQkmYRBjf4x7QkmfZnvCnHvhJ86RfTOcIMYK8l5xgiaZyG
      1EUrOfMgJ/DQFdC7DKzIbbktTJ2YvA33VTb9gpIQKrCAHhECAwEAAaNCMEAwDgYD
      VR0PAQH/BAQDAgIEMA8GA1UdEwEB/wQFMAMBAf8wHQYDVR0OBBYEFA2bWsIMmCNm
      cgQJFjZrUwtYWf0gMA0GCSqGSIb3DQEBCwUAA4IBAQCIVbuVBrRigwzrF08/v2yc
      qhjunL/QrLh6nzRmfHlKn4dNlKMczReMc0yrxcl6V6rdzXpDpVb663Q36hhmmvwe
      WwmnJMZUUsFrYiTt1KYQg9o0dNcRFzYx/W9Dpi9YPwmS2Xqqc94rUDIkBMIOGnc9
      H99gvMOJbfK5BnzXko3A+dCVwUngdmxQpRePjzWSDhU1pWkyZp+hKxZff/1ieFqF
      Joh3bHInmEsWqZRWRhkmzwwjnlvVy3h90TKUizidYfXPz4xgXf/FVp++0mp09U4T
      tnFjivOFyXH/jwpRbZJq8uXsV+joxMEYy/JPbgywYoynvwejcEHksact/3FTQLd5
      -----END CERTIFICATE-----
  kind: ConfigMap
  metadata:
    creationTimestamp: "2022-10-18T16:22:39Z"
    labels:
      istio.io/config: "true"
    name: istio-ca-root-cert
    namespace: default
    resourceVersion: "65285"
    uid: 76575e18-c8b2-4dd9-b1d7-ffef0f43c640
- apiVersion: v1
  data:
    ca.crt: |
      -----BEGIN CERTIFICATE-----
      MIIDlDCCAnygAwIBAgIUXgL7CLqvFf9DxZvFt+UAzbLlYMUwDQYJKoZIhvcNAQEL
      BQAwYTELMAkGA1UEBhMCQ04xETAPBgNVBAgTCEhhbmdaaG91MQswCQYDVQQHEwJY
      UzEMMAoGA1UEChMDazhzMQ8wDQYDVQQLEwZTeXN0ZW0xEzARBgNVBAMTCmt1YmVy
      bmV0ZXMwIBcNMjIxMDEzMTIyMTAwWhgPMjEyMjA5MTkxMjIxMDBaMGExCzAJBgNV
      BAYTAkNOMREwDwYDVQQIEwhIYW5nWmhvdTELMAkGA1UEBxMCWFMxDDAKBgNVBAoT
      A2s4czEPMA0GA1UECxMGU3lzdGVtMRMwEQYDVQQDEwprdWJlcm5ldGVzMIIBIjAN
      BgkqhkiG9w0BAQEFAAOCAQ8AMIIBCgKCAQEApw+3h+j5I/2exVVSvxL/j70XZ5ep
      XW5tclKag7Qf/x5oZe8O1yMxZXiPKgzqGGS68morpG5vD2hVPEsqICOhHiFl2AD3
      ZgMCDWMGeOyk6zGgDbnTUsFO7R/v7kNTnBV6BqgKKlG9NqTtrDSPLoeakTB2qBtV
      Wjhv+YrXXsMVcEaiuEQ4wLD87Kmy8r7xRtEttELKHwdI8iS4Caq+qxtm/EosyTiT
      bQbUB4mkGZ6sFFwKSKaLUGz8Nq1yHkJYbI77YDhUBnaNEQBemPmEfkBeHCajbzx1
      CKPIairrAZNaoMPK9stuK+YLk9Z/gLUYrZe2S8S+k6DPlvuj327bLwKWCwIDAQAB
      o0IwQDAOBgNVHQ8BAf8EBAMCAQYwDwYDVR0TAQH/BAUwAwEB/zAdBgNVHQ4EFgQU
      XUwALoYNGxfIG/8BrPlezZd3uaQwDQYJKoZIhvcNAQELBQADggEBAIhIDLiS0R1M
      bq2RZMQROrEzKs02CclxYjwcr8hrXm/YlB6a8bHG2v3HASi+7QZ89+agz/Oeo+Cp
      6abDTiXHolUkUuyddd14KBwanC7ubwDBsqxr4iteNz5H4ml1uxaZ8G94uVyBgC2U
      qjkWGtXbw6RuY+YTuqYzX3S621U+hwLWN1cXmRcydDZwnMuI+rCwEKLXqLESDMbG
      jiQ1sbLI12oQa07fe+rffnGAWe7P2fMAu/MQxm9Mm8+pX+2WgKauDwpG/v2oZxAO
      iQqICEaYBecgLRBTj868LHVli1CnqUDVjJt59vD2/LZ8I5WnqnGFfONluYSgFiFQ
      m/7XupOph3k=
      -----END CERTIFICATE-----
  kind: ConfigMap
  metadata:
    annotations:
      kubernetes.io/description: Contains a CA bundle that can be used to verify the
        kube-apiserver when using internal endpoints such as the internal service
        IP or kubernetes.default.svc. No other usage is guaranteed across distributions
        of Kubernetes clusters.
    creationTimestamp: "2022-10-18T09:07:42Z"
    name: kube-root-ca.crt
    namespace: default
    resourceVersion: "271"
    uid: f63b2e93-d94f-4c2c-831f-49863f82e3e5
- apiVersion: v1
  data:
    username: user1
  kind: ConfigMap
  metadata:
    annotations:
      kubectl.kubernetes.io/last-applied-configuration: |
        {"apiVersion":"v1","data":{"username":"user1"},"kind":"ConfigMap","metadata":{"annotations":{},"name":"nginx-config","namespace":"default"}}
    creationTimestamp: "2022-10-20T08:37:17Z"
    name: nginx-config
    namespace: default
    resourceVersion: "390419"
    uid: 0136af36-4a7f-407a-a61c-bea7ef19497c
kind: List
metadata:
  resourceVersion: ""
```
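The example above injects a single key with `configMapKeyRef`. The sketch below shows two further mechanisms. First, `envFrom` turns every key of the ConfigMap into an environment variable. Second, it covers the command-line-argument use case listed earlier: Kubernetes expands `$(VAR_NAME)` references in `command`/`args` from the container's environment before the process starts. The Pod name `configmap-env-sketch` and the container name `demo` are hypothetical. The Pod reuses the `nginx-config` ConfigMap with its `username` key.

```yaml
# Sketch only: hypothetical Pod consuming the nginx-config ConfigMap (key: username).
apiVersion: v1
kind: Pod
metadata:
  name: configmap-env-sketch       # hypothetical name
spec:
  restartPolicy: Never
  containers:
  - name: demo                     # hypothetical container name
    image: centos:7.9.2009
    envFrom:
    - configMapRef:
        name: nginx-config         # each key (here: username) becomes an env var
    env:
    - name: MY_USERNAME
      valueFrom:
        configMapKeyRef:
          name: nginx-config
          key: username
    command: ["/bin/sh", "-c"]
    # $(MY_USERNAME) is substituted by Kubernetes before the shell runs
    args: ["echo login user is $(MY_USERNAME); env | grep -i username"]
```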

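7. Kubernetes Secret

7.1 Introduction to Secrets

- Like a ConfigMap, a Secret supplies extra configuration to Pods, but a Secret is an object meant for small amounts of sensitive data such as passwords, tokens or keys.

- The name of a Secret must be a valid DNS subdomain name.

- Each Secret is limited to 1 MiB. This mainly keeps users from creating very large Secrets that could exhaust the memory of the API server and the kubelet. Creating many small Secrets can also exhaust memory, so resource quotas can be used to limit the number of Secrets in each namespace.

- When creating a Secret from a YAML file, you can set the `data` field, the `stringData` field, or both; each is optional. Every value in `data` must be a base64-encoded string. If you do not want to do the base64 conversion yourself, use `stringData` instead, which accepts any plain (unencoded) string as a value.

A Pod can use a Secret in any of three ways:

- As files in a volume mounted into one or more containers (for example crt and key files).

- As container environment variables.

- By the kubelet when pulling images for the Pod (authentication to the image registry).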

7.2 Secret types

Kubernetes supports several built-in Secret types for different use cases, and each type expects different fields.
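The built-in types are:

- `Opaque`
- `kubernetes.io/service-account-token`
- `kubernetes.io/dockercfg`
- `kubernetes.io/dockerconfigjson`
- `kubernetes.io/basic-auth`
- `kubernetes.io/ssh-auth`
- `kubernetes.io/tls`
- `bootstrap.kubernetes.io/token`

As an illustration of a type with required keys, here is a hedged sketch of a `kubernetes.io/basic-auth` Secret. This type expects a `username` key, a `password` key, or both. The Secret name and the values are hypothetical.

```yaml
# Sketch only: hypothetical basic-auth Secret; stringData avoids manual base64 encoding.
apiVersion: v1
kind: Secret
metadata:
  name: mysecret-basic-auth        # hypothetical name
  namespace: myserver
type: kubernetes.io/basic-auth
stringData:
  username: admin                  # key name required by this type
  password: "123456"               # quoted so YAML keeps it a string
```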

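7.3 Secret type: Opaque

Opaque type with the `data` field: values must be base64-encoded beforehand. Base64 is an encoding, not encryption; anyone who can read the Secret can decode it. Also note that `echo` appends a trailing newline. `echo admin | base64` therefore encodes `admin\n` (`YWRtaW4K`), whereas `echo -n admin | base64` encodes only `admin` (`YWRtaW4=`), which matches what the `stringData` example below stores.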

```
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case8# echo admin |base64
YWRtaW4K
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case8# echo 123456 |base64
MTIzNDU2Cg==
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case8# cat 1-secret-Opaque-data.yaml
apiVersion: v1
kind: Secret
metadata:
  name: mysecret-data
  namespace: myserver
type: Opaque
data:
  user: YWRtaW4K
  password: MTIzNDU2Cg==
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case8# kubectl apply -f 1-secret-Opaque-data.yaml
secret/mysecret-data created
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case8# kubectl get secrets mysecret-data -n myserver -o yaml
apiVersion: v1
data:
  password: MTIzNDU2Cg==
  user: YWRtaW4K
kind: Secret
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","data":{"password":"MTIzNDU2Cg==","user":"YWRtaW4K"},"kind":"Secret","metadata":{"annotations":{},"name":"mysecret-data","namespace":"myserver"},"type":"Opaque"}
  creationTimestamp: "2022-10-20T09:03:33Z"
  name: mysecret-data
  namespace: myserver
  resourceVersion: "394995"
  uid: b0788df4-0195-429f-bda5-eafb5d51bd6a
type: Opaque
```

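Opaque type with the `stringData` field: values do not need to be base64-encoded beforehand. The API server base64-encodes them and stores them in the `data` field. This is still encoding, not encryption.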

```
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case8# cat 2-secret-Opaque-stringData.yaml
apiVersion: v1
kind: Secret
metadata:
  name: mysecret-stringdata
  namespace: myserver
type: Opaque
stringData:
  superuser: 'admin'
  password: '123456'
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case8# kubectl apply -f 2-secret-Opaque-stringData.yaml
secret/mysecret-stringdata created
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case8# kubectl get secrets mysecret-stringdata -n myserver -o yaml
apiVersion: v1
data:
  password: MTIzNDU2
  superuser: YWRtaW4=
kind: Secret
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","kind":"Secret","metadata":{"annotations":{},"name":"mysecret-stringdata","namespace":"myserver"},"stringData":{"password":"123456","superuser":"admin"},"type":"Opaque"}
  creationTimestamp: "2022-10-20T09:07:15Z"
  name: mysecret-stringdata
  namespace: myserver
  resourceVersion: "395636"
  uid: 4134fe69-389d-47d0-b870-f83dd34fa537
type: Opaque
```
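The two Secrets above are only useful once a Pod consumes them. The minimal sketch below reads `mysecret-stringdata` into environment variables and mounts `mysecret-data` as files. The Pod must be in the same `myserver` namespace as the Secrets. The Pod name, container name, environment variable names and mount path are hypothetical. Because `mysecret-data` was encoded with plain `echo`, the mounted files `user` and `password` will contain a trailing newline.

```yaml
# Sketch only: hypothetical Pod consuming the two Opaque Secrets above.
apiVersion: v1
kind: Pod
metadata:
  name: secret-consumer-sketch     # hypothetical name
  namespace: myserver              # must match the Secrets' namespace
spec:
  containers:
  - name: demo                     # hypothetical container name
    image: nginx:1.20.2-alpine
    env:
    - name: DB_USER                # hypothetical variable name
      valueFrom:
        secretKeyRef:
          name: mysecret-stringdata
          key: superuser
    - name: DB_PASSWORD            # hypothetical variable name
      valueFrom:
        secretKeyRef:
          name: mysecret-stringdata
          key: password
    volumeMounts:
    - name: secret-data
      mountPath: /etc/secret-data  # files: /etc/secret-data/user and /etc/secret-data/password
      readOnly: true
  volumes:
  - name: secret-data
    secret:
      secretName: mysecret-data
```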

7.4 Secret type: kubernetes.io/tls (providing a certificate for nginx)

Self-signed certificates: create a CA, then a server certificate signed by it.

```
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case9# mkdir certs
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case9# ls
4-secret-tls.yaml  certs
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case9# cd certs/
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case9/certs# ls
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case9/certs# openssl req -x509 -sha256 -newkey rsa:4096 -keyout ca.key -out ca.crt -days 3560 -nodes -subj '/CN=www.ca.com'
Generating a RSA private key
..............................................++++
....................................................................++++
writing new private key to 'ca.key'
-----
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case9/certs# openssl req -new -newkey rsa:4096 -keyout server.key -out server.csr -nodes -subj '/CN=www.mysite.com'
Generating a RSA private key
.......................................................................................................................................................................................++++
................................................++++
writing new private key to 'server.key'
-----
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case9/certs# openssl x509 -req -sha256 -days 3650 -in server.csr -CA ca.crt -CAkey ca.key -set_serial 01 -out server.crt
Signature ok
subject=CN = www.mysite.com
Getting CA Private Key
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case9/certs# ll -h
total 28K
drwxr-xr-x 2 root root 4.0K Oct 20 20:09 ./
drwxr-xr-x 3 root root 4.0K Oct 20 20:06 ../
-rw-r--r-- 1 root root 1.8K Oct 20 20:08 ca.crt
-rw------- 1 root root 3.2K Oct 20 20:08 ca.key
-rw-r--r-- 1 root root 1.7K Oct 20 20:09 server.crt
-rw-r--r-- 1 root root 1.6K Oct 20 20:09 server.csr
-rw------- 1 root root 3.2K Oct 20 20:09 server.key
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case9/certs# kubectl create secret tls myserver-tls-key --cert=./server.crt --key=./server.key -n myserver
secret/myserver-tls-key created
```
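`kubectl create secret tls` builds a Secret of type `kubernetes.io/tls` whose two keys are `tls.crt` and `tls.key`. When such a Secret is mounted as a volume, these keys become the file names. A declarative equivalent is sketched below with placeholder values, which are not real data. The placeholders would hold the base64 of `server.crt` and `server.key`, for example from `base64 -w0 server.crt` with GNU coreutils. Alternatively, `kubectl create secret tls ... --dry-run=client -o yaml` generates the complete manifest.

```yaml
# Sketch only: declarative form of the myserver-tls-key Secret; values are placeholders.
apiVersion: v1
kind: Secret
metadata:
  name: myserver-tls-key
  namespace: myserver
type: kubernetes.io/tls
data:
  tls.crt: <base64 of server.crt>   # placeholder, replace before applying
  tls.key: <base64 of server.key>   # placeholder, replace before applying
```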

Create an nginx web service that uses the certificate:

```
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case9# cat 4-secret-tls.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-config
  namespace: myserver
data:
  default: |
    server {
      listen 80;
      listen 443 ssl;
      server_name www.mysite.com;
      ssl_certificate /etc/nginx/conf.d/certs/tls.crt;
      ssl_certificate_key /etc/nginx/conf.d/certs/tls.key;
      location / {
        root /usr/share/nginx/html;
        index index.html;
        if ($scheme = http){
          rewrite / https://www.mysite.com permanent;
        }
        if (!-e $request_filename){
          rewrite ^/(.*) /index.html last;
        }
      }
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myserver-myapp-frontend-deployment
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: myserver-myapp-frontend
  template:
    metadata:
      labels:
        app: myserver-myapp-frontend
    spec:
      containers:
      - name: myserver-myapp-frontend
        image: nginx:1.20.2-alpine
        ports:
        - containerPort: 80
        volumeMounts:
        - name: nginx-config
          mountPath: /etc/nginx/conf.d/myserver
        - name: myserver-tls-key
          mountPath: /etc/nginx/conf.d/certs
      volumes:
      - name: nginx-config
        configMap:
          name: nginx-config
          items:
          - key: default
            path: mysite.conf
      - name: myserver-tls-key
        secret:
          secretName: myserver-tls-key
---
apiVersion: v1
kind: Service
metadata:
  name: myserver-myapp-frontend
  namespace: myserver
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    targetPort: 80
    nodePort: 30018
    protocol: TCP
  - name: https
    port: 443
    targetPort: 443
    nodePort: 30019
    protocol: TCP
  selector:
    app: myserver-myapp-frontend
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case9# kubectl apply -f 4-secret-tls.yaml
configmap/nginx-config created
deployment.apps/myserver-myapp-frontend-deployment created
service/myserver-myapp-frontend created
```

Verify the nginx Pod and the Secret:

```
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case9# kubectl get pod -n myserver
NAME READY STATUS RESTARTS AGE
myserver-myapp-frontend-deployment-7694cb4fcb-j9hcq 1/1 Running 0 54m
root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case9# kubectl get secret -n myserver
NAME TYPE DATA AGE
myserver-tls-key kubernetes.io/tls 2 71m
```

root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case9# kubectl get secret -n myserver -oyaml

apiVersion: v1

items:

- apiVersion: v1

data:

tls.crt: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUVvakNDQW9vQ0FRRXdEUVlKS29aSWh2Y05BUUVMQlFBd0ZURVRNQkVHQTFVRUF3d0tkM2QzTG1OaExtTnYKYlRBZUZ3MHlNakV3TWpBeE1qQTVNakJhRncwek1qRXdNVGN4TWpBNU1qQmFNQmt4RnpBVkJnTlZCQU1NRG5kMwpkeTV0ZVhOcGRHVXVZMjl0TUlJQ0lqQU5CZ2txaGtpRzl3MEJBUUVGQUFPQ0FnOEFNSUlDQ2dLQ0FnRUF5RE9VCmt5UHJMbC9adFRLMk1ZOWxQWU8zQXVXRnExcEJFZG9PT0R2dndtZ3FabUV1VjVNeXNTdnJLcmJBeW1pQnd1d1gKd2JYZU1MZGlxOW1GK0NzTWFkR21tUW9aY0VXNW54MGZZSzNLbVdIY2hrc0JITnlKNnhXV2ZCQk0yRDlzSnM1cQpXVWJnbUFDdGNINW1iSXo5TlV3MGwwZ2pTa3oyNDM2RVNPNjdPOFo5WEVEVGlFUTN5YnM0RTV2azNiVGJ3ZzBYCmthK2Q5Z3RCQTFmQmZFOGFEZkRweWhkZTZ5L0YxTjBlWmladFlNdUp1QTIzYkVxcWR5d1hJemJCUDBjTHdyUEIKUG5vTXY5OUdTWTVzZEZZMkRrdHMxVHdUUjRqdHVWdExTTTY1MXNXeVpVckVUcm53U3RNcXJRT1Q0QUdpcXc1MQpHZTZoQXJxZ0Y1VS96U3BiL29nQjZ1T0IvWThiNDBvazFNNGRKWXRETVhvZFFtYnNtOU9pa3VOc2lRWTVIZUlnCmVpVUdnVVRoRFRGYlB6SW5acWo3TDM5bmMvUlREeWxicGRsRVRNamVTS0o5MHBpMERNM1VOVkxzTEg0WitrWnEKZ282N0hneHFCVlBYb1dTTm54UFFheEI5TkFnTUl3aVVXZ0NoM2pHVlpwMTE5VGpsQXRqYTV0OHZVeFhCWDdUMApkSDVCYUZjTTRGTmwzYmYzMmJRck9vcWlkREFMQ2JrcVZmNjNxNUVsUERNV2p0UGdhd0JtUlZ0V0Vlb2hIV0t3CitKTFVod1o4UUNPYjhBcTYwVHk3bFdvc0JyUlZZRWgwOTJjbFU4clJmOFF1VC9venl5N3BkWHlYVzNYUGZDbHAKVXo1NGF5eVdYOVZXa3pxZHRIby8xRXRtUHpCV1FzcnhSeVNjOTg4Q0F3RUFBVEFOQmdrcWhraUc5dzBCQVFzRgpBQU9DQWdFQXRFUkdPbENCQnFmRTZzODdGcmFReXc5Q0pocUs4TndrajFVa3YwMzFsaFIwakhKSUE1TU1lYlFNCkU2YnBPdm5mS1pOWk82OXJtaVV6S3pJd2IrZjV5THZaWHJzVzdYUU5VQitiRlFJRG14cVp1dGJNbHR5YjdrcWIKaTA1eVpqUWpJNHNSYVFrUnRpZ1JBdkFWRFk4RWl3WSsvb1BFUjg4N0QrbXk2ZlZJZFhFTzNSQUloT1FhNWF1bQphV1o3bVBjL2xkd1ZoNFVicG0yTGZCNDhvb3BvS05pZ1hsZWloNWg5VWc2Mms3NHFLdVB2cnVvdVBvWWtoWGlXCmFuQzBtTXFWalk5bFMzSi9CdXpKdFpwUExlcllLdjJIQ1RiSklYdmhCazNQU3MwbmZlUFoyUDkrNHMzQXhMcSsKVGxhMHlwcXZueWtHMXRyd0g4dXMzZEM1ZEg3ZWNzWC9SRm8wM2NXbUNGZUM2Yzk4YjhiVDBXd0x6Ym1HWUl6bgpWaVk3UTRVSTNya2wrdjBySC91aDZ5OVFkU1FRZS9vaERCeEJtelQxWXVqdDMyMUpiSTIzMklrTEFQSUpWbDBnClo5SktFaCtSRko0djVRK1N6U1BSZyt5ZWJCQjExZUVvc1l3N2lQd2J4a0U1UVpaUzBLY1N4TDB3UGF3R3NXK0MKYkFmZUFpMVhFdG51MFlVMzlOTHkrOWluRFBEcjlyM3Y2N1d
0UkxFeldWSzcwS05RUTY1R3VTOUsxbHFMdzdqUApwWE1RclpuQnpDelVDb0VmYkFIN0tmSXd3OGgxWFZhTnJFeXZDWnJRRlNiTjJTUzFwYjZidXFSVmtjald2U2Y3Clp0ejUzYXBDQkFXd3JxUGFSbW1VVHd0RnpZZUIvVmVsNEdJVzlEbkIwRTF1NWdXaHpaQT0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

tls.key: LS0tLS1CRUdJTiBQUklWQVRFIEtFWS0tLS0tCk1JSUpRd0lCQURBTkJna3Foa2lHOXcwQkFRRUZBQVNDQ1Mwd2dna3BBZ0VBQW9JQ0FRRElNNVNUSStzdVg5bTEKTXJZeGoyVTlnN2NDNVlXcldrRVIyZzQ0TysvQ2FDcG1ZUzVYa3pLeEsrc3F0c0RLYUlIQzdCZkJ0ZDR3dDJLcgoyWVg0S3d4cDBhYVpDaGx3UmJtZkhSOWdyY3FaWWR5R1N3RWMzSW5yRlpaOEVFellQMndtem1wWlJ1Q1lBSzF3CmZtWnNqUDAxVERTWFNDTktUUGJqZm9SSTdyczd4bjFjUU5PSVJEZkp1emdUbStUZHROdkNEUmVScjUzMkMwRUQKVjhGOFR4b044T25LRjE3ckw4WFUzUjVtSm0xZ3k0bTREYmRzU3FwM0xCY2pOc0UvUnd2Q3M4RStlZ3kvMzBaSgpqbXgwVmpZT1MyelZQQk5IaU8yNVcwdEl6cm5XeGJKbFNzUk91ZkJLMHlxdEE1UGdBYUtyRG5VWjdxRUN1cUFYCmxUL05LbHYraUFIcTQ0SDlqeHZqU2lUVXpoMGxpME14ZWgxQ1p1eWIwNktTNDJ5SkJqa2Q0aUI2SlFhQlJPRU4KTVZzL01pZG1xUHN2ZjJkejlGTVBLVnVsMlVSTXlONUlvbjNTbUxRTXpkUTFVdXdzZmhuNlJtcUNqcnNlREdvRgpVOWVoWkkyZkU5QnJFSDAwQ0F3akNKUmFBS0hlTVpWbW5YWDFPT1VDMk5ybTN5OVRGY0ZmdFBSMGZrRm9Wd3pnClUyWGR0L2ZadENzNmlxSjBNQXNKdVNwVi9yZXJrU1U4TXhhTzArQnJBR1pGVzFZUjZpRWRZckQ0a3RTSEJueEEKSTV2d0NyclJQTHVWYWl3R3RGVmdTSFQzWnlWVHl0Ri94QzVQK2pQTEx1bDFmSmRiZGM5OEtXbFRQbmhyTEpaZgoxVmFUT3AyMGVqL1VTMlkvTUZaQ3l2RkhKSnozendJREFRQUJBb0lDQVFEQ21WaW0rYmdGdU1ldW1KOStad3NhCmt5aFdXWEhuMEhBRmdUWm5OT05semNqQkFWK0JZcVJZa1A4aTRzZGROOTVCOFNsYWNvU0tTQWRTVWJzbU1mbjcKOWZ5Qkw4N3dVZVlQSXNpNE9kWC81NTdxcm9kalhYOTJFZUxYcnlSeTRwc20wV2VRWmhPenpKektCeU5hQ21XcAo0K3dPek9ENHZQMFN2b3lwTTl5dFNzL1oxMjJHUEFFYVJyQklaelU4eUNzQVlhZHlSZ2s5KzB4emlsNlpqVzRlCjlQamJKb0p1QzE2NS9VRXFPOW4veDNpVGZrbTNxcEF1REo1azdUbEVYN092eXZoZzJWUUJRVzlaMm1YVFkyVmgKMmJEdFNGclpJdUVvVmZSRXppVFgvZ3pjNXFNUWZ5NXlIUGFUZkRIR0FQRDBZcll5d2NDaUhYTzEySzVPcUFrSQpGV0FIUnZYQTNmMHo3TGtWNDQ1OXg4aFE4WDZFSHV5Ykx6REY5dGprT1ZUUGJ4Q0poS1FMRWZlVStQbi83ZjB5CkVteXpmODRNWU9BWHNpbk1TakVsaUZnWHFrYVFLNXdUZDhaN1R4STcxSjJ1UXBMV2VyTXlBb3BKc0FDYWJjZFcKcEVXUEJhdDZHZ0FnM1NGQUE1SUFGZk9BMFdWbUxuL3UzUjdMekM2dklucUtSYW1qZUlKY0paeWkranlEVzNrQgpzWTd1ZTRMZGYyNC9IMVlCeUhISmpsSnZRWjBWYnVuVWMvZ0c2UFUzNTZ0OUhlaGwwM1lxZXVXUE5ySW9maktECjBlQWFIc3NzY2laeXdKOUFqNUZsTEJIVS9xeUhWL1RjZTQxNEQxN2NuMit3azloUmJITUo2RFh5WFdORFFWZXAKbHBKaHUyS1hoQmNZZVFrb3pEZkJRUUtDQVFFQTQyKzhsdE8
3eXRld3Y1OHVwWkdBa3BJKy84NElqWWZtaGhoYgpHMlRXU0FpQmxseWxTU1YzejNtd2hzRE4yTWY1QXU4L3d2ZnpxZ3g5OHhhUW9pQjBsbnBDTzJ6OXFaSkE5YVc1CnpTQkdQS095YkhBNkpZQ0ZZSjBrUHNiVlRzY2IzTVhvSUEveHg2Wkg0QWtreGtPYTBMQmpJMW11anNVRlI3akUKMmhweUVUenZPRlNXaUNpSnA2RzFDeXBzRWozdzVQR2dGb0lCdFpwblVCM1ZDVXViNEhLVTkzT3pvaXhad05mVQpTaGdYbHZqOW5OWkdpN1NJRzJxY2xvOTI5ZlRESnV6bnUvdjBndlJnRytwbUxPSHZjM09EMzBOTzA4alhQbkNjCnJzU2sxTHVCQkNyektzUjl0RHNHTEtyWW9McFlCMUxYcTdJQXNGRFU5aUN1UlBwaW9RS0NBUUVBNFZnM25tdDkKNUFWL3NnaUx5aGtBeU5BSVUvTWFvR1RiUVgzL0hyUnlsWlRQL2d0ZWZ6WDB5Rm1lVWlRUTJHYlp1MUY0c1pFaAo2WmZWaGlOMkJsSFkyNkkwblpjQkw5Q3hDM0NNZTY3eUNmdUhDekNNN0Q4R3JieHhLV2duSWxHT1hrcFhyMzdYClg2aDBKSzV3VjlJaVlLYXVaZ2xUWm1vT2g0aTl5M252Um5ESkFrREIwMzlNYjBVUjVaaTAwOElrbUw3bDBsU2MKL0lJenBGajJTeHIvUWUySVBLYkpTWDBjWW44am5yamVZUzBjczNaKzJNMVRDVTRZVU5rTnVMVFV2ZFBPWnBNRApaUmx1MWRLbElmZDMrb0lZWkhhNmxLVDlDeitlYmdQS0Jxb0tsa1hJM0RNTldGWTZhSlF4Y0N6RkkwZStKWmVVCld4Uk96WU94Wk5PMGJ3S0NBUUVBbVVTaWZhNGdmcmpPRnNScVJnK0E2c1Y5aVJ2S3JiNG92ck5KS25QUTUrZzcKbEIzSkVUc2J1NGpSU201Q0NsWHczR1pvdkxZbDBiSHJhdGNKRHdqNktMSXBVaXpINE85N3NVOUdvQktnNHBxYQpVZk5yYS94cFpjdGdNcUlCKzcyNGJCWStzT1N0MWhLYm0wSHVNMkk1d1dzczFCVEt5dEhCRmkxUkUzNEE0dGNDCml4Nk45eUlDYWlKU2hEekphWjJ1YWtyZXpHdytSS2pSK0s2eDh6cXR5QnJQZ3RiSTlvQVcyQnRhcDdnR3Bhb1UKRnc1YnFpZzJGT3ZLckxmdnZoNTlLUTA3dVhZNHQ4dUJ2UzVBUHZ6ZlJobFJoREt5dTR3OGFZcXdQQ0t1eGVHNgpOeG5PbDBLbFI4RUREelR2R1ptYVd3MGI1RXZucE9wRUtiMnFVemU5SVFLQ0FRQmhzeHU2SmFTWlBnRVZNVHRhClRlalhKOHJVaXV3YWFsL2RUMEZURUswMVNTVzhZVFdCTmVXQkQ4bmlseHh1bG5rRUM5aW1NK1JlSUtSRTJnOEwKd21TaEpQeG03dGRtNGJaQTNYVXJFcmlCdDNuZlVoZG5QaFFwTXpCazRYRkdJZEgxODRsODN5T0ZwOFZqT2ZZZgpQVTRHVlgzN1kwT3pmWHY3SzBBT2ZqbE5jd3pUV3p3dDlGMHhTT0x2aG51djY5WnVHeVlOUVA0blJGUWJoeTZSCmRZMENDbmdzdzZzMW4zYTFCYVp0NUgwVjZMY3UzOHN6T0NJdVFKdXVRY3ovTGZlbXJiUXBLTWdxQnhMVXhkVXUKbXRwNzAvZTdadmFTQjg1bUdCa2FYYTR6b1htaG1YUHlkSGZ1dXNQc0g0UW52R0ZrWUhDQ1grdkVhVk9aS3VXNApiMGtsQW9JQkFDNmVZdlhzYUVITW5CUUQ5cDdXaGVNZitmOEtHMlAwcFFEYnFmM0x4bGtMWlIxV3l1SnMyOSttCkgrQm15OEM5blJDcGpzT0VJT3pCTW9seFdlN3F2aUhDeGsreG9SdkN
FVlZvNklMd3gyQU0xV3MvTnJBTEE5Q0QKd1QyTjBQdkdnR01jZmIwS3RMeGtJbXVDaW1nSEdnak5hWkJhNjYxeHpWNVh1cnZNTndHaUw1R2lwMlA1R1pUUwpQSEdkamg5SFVTQUtibkNqcG9CL2Z5MHBiNk9YRkJHT1JvNVkvcFV4cHYrQ3JHdEQreHkrS2UzcWd6UjIrdkQxCmNnNmU2Vk1jWHVGUk45YUl5UHdpZHZJL2hwTFdNNGtiZjNlOFJ6ZGRjWUlBQjlwZ2E3dDFyWmVJVFJtNUVqMlIKd1BZRTg3b3hRWVdTNmorUjBSWWNIb2pIK0lPZWhaMD0KLS0tLS1FTkQgUFJJVkFURSBLRVktLS0tLQo=

kind: Secret

metadata:

creationTimestamp: "2022-10-20T12:09:46Z"

name: myserver-tls-key

namespace: myserver

resourceVersion: "427221"

uid: cef4b425-8572-44f2-9097-5a1040c9bd03

type: kubernetes.io/tls

kind: List

metadata:

resourceVersion: ""
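The `data` values in the Secret above are only base64-encoded, not encrypted. Anyone who can read the Secret can recover the certificate and private key; encryption at rest is a separate API-server setting. Below is a minimal Python sketch that decodes and re-encodes a Secret's `data` map. The helper names are hypothetical, and the sample uses the first 36 characters of the `tls.key` value shown above:

```python
import base64


def decode_secret_data(data: dict[str, str]) -> dict[str, bytes]:
    """Decode the base64 values of a Secret's data map, as printed by kubectl get secret -o yaml."""
    return {key: base64.b64decode(value, validate=True) for key, value in data.items()}


def encode_secret_data(data: dict[str, bytes]) -> dict[str, str]:
    """Encode raw bytes into the base64 strings that a Secret's data map expects."""
    return {key: base64.b64encode(value).decode("ascii") for key, value in data.items()}


# First 36 characters of the tls.key value above (a complete base64 block).
sample = {"tls.key": "LS0tLS1CRUdJTiBQUklWQVRFIEtFWS0tLS0t"}
decoded = decode_secret_data(sample)
print(decoded["tls.key"].decode())  # -----BEGIN PRIVATE KEY-----

# Encoding the decoded bytes again reproduces the stored value exactly.
assert encode_secret_data(decoded) == sample
```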

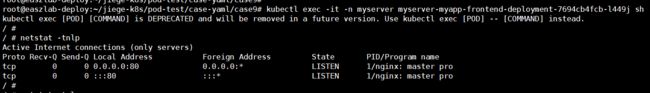

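root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case9#

At this point the Pod is not listening on port 443. The site config at /etc/nginx/conf.d/myserver/mysite.conf is never loaded because the stock nginx.conf only includes /etc/nginx/conf.d/*.conf.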

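Fix: exec into the Pod, add an include for /etc/nginx/conf.d/myserver/*.conf to nginx.conf, then test and reload nginx. This edit exists only in the running container and is lost when the Pod is recreated. A persistent fix is to mount the site config where the default include pattern already matches, or to mount a corrected nginx.conf into the Pod as well.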

root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case9# kubectl exec -it -n myserver myserver-myapp-frontend-deployment-7694cb4fcb-l449j -- sh

/ #

/etc/nginx/conf.d/myserver # vi /etc/nginx/nginx.conf

/etc/nginx/conf.d/myserver # cat /etc/nginx/nginx.conf

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log notice;

pid /var/run/nginx.pid;

events {

worker_connections 1024;

}

http {

include /etc/nginx/mime.types;

default_type application/octet-stream;

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

#tcp_nopush on;

keepalive_timeout 65;

#gzip on;

include /etc/nginx/conf.d/*.conf;

include /etc/nginx/conf.d/myserver/*.conf;

}

/etc/nginx/conf.d/myserver # ls /etc/nginx/conf.d/myserver/*.conf

/etc/nginx/conf.d/myserver/mysite.conf

/etc/nginx/conf.d/myserver #

/etc/nginx/conf.d/myserver # nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

/etc/nginx/conf.d/myserver # nginx -s reload

2022/10/20 13:56:20 [notice] 52#52: signal process started

/etc/nginx/conf.d/myserver # netstat -tnlp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:443 0.0.0.0:* LISTEN 1/nginx: master pro

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 1/nginx: master pro

tcp 0 0 :::80 :::* LISTEN 1/nginx: master pro
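nginx expands the argument of `include` as a file glob. In a glob, `*` does not match `/`, so `include /etc/nginx/conf.d/*.conf;` cannot reach files in the myserver/ subdirectory. The sketch below approximates that behavior with Python's `pathlib.PurePosixPath.match`, which also matches one path component at a time. It is a stand-in for nginx's own glob expansion, not nginx code; the helper name `included_by` is hypothetical:

```python
from pathlib import PurePosixPath

# Location of the site config inside the Pod (from the ls output above).
site_conf = PurePosixPath("/etc/nginx/conf.d/myserver/mysite.conf")

# The two include patterns present in nginx.conf after the fix.
default_include = "/etc/nginx/conf.d/*.conf"
added_include = "/etc/nginx/conf.d/myserver/*.conf"


def included_by(path: PurePosixPath, pattern: str) -> bool:
    """True if an absolute glob pattern matches the whole path; '*' never crosses a '/'."""
    return path.match(pattern)


print(included_by(site_conf, default_include))  # False: '*' stops at the myserver/ directory
print(included_by(site_conf, added_include))    # True: the added include reaches mysite.conf
```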

/etc/nginx/conf.d/myserver #

Configure the load balancer to forward requests to the NodePorts (30018 for HTTP, 30019 for HTTPS):

root@easzlab-haproxy-keepalive-01:~# vi /etc/haproxy/haproxy.cfg

listen myserver-nginx-80

bind 172.16.88.200:80

mode tcp

server easzlab-k8s-master-01 172.16.88.157:30018 check inter 2000 fall 3 rise 5

server easzlab-k8s-master-02 172.16.88.158:30018 check inter 2000 fall 3 rise 5

server easzlab-k8s-master-03 172.16.88.159:30018 check inter 2000 fall 3 rise 5

listen myserver-nginx-443

bind 172.16.88.200:443

mode tcp

server easzlab-k8s-master-01 172.16.88.157:30019 check inter 2000 fall 3 rise 5

server easzlab-k8s-master-02 172.16.88.158:30019 check inter 2000 fall 3 rise 5

server easzlab-k8s-master-03 172.16.88.159:30019 check inter 2000 fall 3 rise 5

root@easzlab-haproxy-keepalive-01:~# systemctl restart haproxy
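Each `listen` block above is a plain TCP proxy to a NodePort on the three masters, with health checks set by `check inter 2000 fall 3 rise 5`. The HAProxy documentation defines these as follows: checks run every `inter` milliseconds, a server is marked down after `fall` consecutive failed checks, and it is marked up again after `rise` consecutive successful ones. The sketch below is a simplified model of those documented semantics, not HAProxy's internal health counter. It shows that a dead node is removed after roughly 3 × 2 s = 6 s and is re-added only after roughly 5 × 2 s = 10 s of passing checks:

```python
# Simplified model of HAProxy's documented rise/fall semantics for one backend server.
# Values mirror "check inter 2000 fall 3 rise 5" from the config above.
INTER_MS = 2000  # interval between health checks, in milliseconds
FALL = 3         # consecutive failed checks before an UP server is marked DOWN
RISE = 5         # consecutive successful checks before a DOWN server is marked UP


def simulate(results: list[bool], up: bool = True) -> list[tuple[int, bool]]:
    """Return (elapsed_ms, is_up) after each check; results[i] is True if check i passed."""
    streak = 0  # consecutive checks that disagree with the current state
    timeline = []
    for i, ok in enumerate(results, start=1):
        if ok == up:
            streak = 0  # result agrees with the current state: reset the counter
        else:
            streak += 1
            threshold = FALL if up else RISE
            if streak >= threshold:
                up, streak = not up, 0
        timeline.append((i * INTER_MS, up))
    return timeline


# A NodePort backend that fails 3 checks, then recovers.
for elapsed, state in simulate([False] * 3 + [True] * 5):
    print(f"{elapsed / 1000:>4.0f}s {'UP' if state else 'DOWN'}")
# DOWN from the 3rd check (6 s); UP again only at the 8th check (16 s)
```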

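Configure hosts resolution so that www.mysite.com resolves to the HAProxy VIP 172.16.88.200 (for example, add "172.16.88.200 www.mysite.com" to /etc/hosts).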

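Use curl to check which certificate the site presents. HAProxy runs in mode tcp and does not terminate TLS, so the certificate comes from nginx in the Pod (CN=www.mysite.com, issued by CN=www.ca.com). The -k flag makes curl continue even though it cannot verify that certificate. The -l flag is an FTP list-only option and has no effect on HTTPS.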

root@easzlab-haproxy-keepalive-01:~# curl -lvk https://www.mysite.com

* Trying 172.16.88.200:443...

* TCP_NODELAY set

* Connected to www.mysite.com (172.16.88.200) port 443 (#0)

* ALPN, offering h2

* ALPN, offering http/1.1

* successfully set certificate verify locations:

* CAfile: /etc/ssl/certs/ca-certificates.crt

CApath: /etc/ssl/certs

* TLSv1.3 (OUT), TLS handshake, Client hello (1):

* TLSv1.3 (IN), TLS handshake, Server hello (2):

* TLSv1.2 (IN), TLS handshake, Certificate (11):

* TLSv1.2 (IN), TLS handshake, Server key exchange (12):

* TLSv1.2 (IN), TLS handshake, Server finished (14):

* TLSv1.2 (OUT), TLS handshake, Client key exchange (16):

* TLSv1.2 (OUT), TLS change cipher, Change cipher spec (1):

* TLSv1.2 (OUT), TLS handshake, Finished (20):

* TLSv1.2 (IN), TLS handshake, Finished (20):

* SSL connection using TLSv1.2 / ECDHE-RSA-AES256-GCM-SHA384

* ALPN, server accepted to use http/1.1

* Server certificate:

* subject: CN=www.mysite.com

* start date: Oct 20 12:09:20 2022 GMT

* expire date: Oct 17 12:09:20 2032 GMT

* issuer: CN=www.ca.com

* SSL certificate verify result: unable to get local issuer certificate (20), continuing anyway.

> GET / HTTP/1.1

> Host: www.mysite.com

> User-Agent: curl/7.68.0

> Accept: */*

>

* Mark bundle as not supporting multiuse

< HTTP/1.1 200 OK

< Server: nginx/1.20.2

< Date: Thu, 20 Oct 2022 14:06:47 GMT

< Content-Type: text/html

< Content-Length: 612

< Last-Modified: Tue, 16 Nov 2021 15:04:23 GMT

< Connection: keep-alive

< ETag: "6193c877-264"

< Accept-Ranges: bytes

<

Welcome to nginx!

Welcome to nginx!

If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.

For online documentation and support please refer to

nginx.org.

Commercial support is available at

nginx.com.

Thank you for using nginx.

* Connection #0 to host www.mysite.com left intact

root@easzlab-haproxy-keepalive-01:~#
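The verbose output also reports the certificate's validity window. The command that generated the certificate is not shown in this section, but a quick arithmetic check confirms that the two dates are 3650 days apart, roughly ten years. The helper below is a hypothetical sketch that parses curl's date format:

```python
from datetime import datetime

# Date format used by curl -v for "start date" / "expire date" (C locale month names assumed).
CURL_DATE_FMT = "%b %d %H:%M:%S %Y GMT"


def validity_days(start: str, expire: str) -> int:
    """Return the number of whole days between two curl-formatted certificate dates."""
    not_before = datetime.strptime(start, CURL_DATE_FMT)
    not_after = datetime.strptime(expire, CURL_DATE_FMT)
    return (not_after - not_before).days


# Dates copied from the curl output above.
print(validity_days("Oct 20 12:09:20 2022 GMT", "Oct 17 12:09:20 2032 GMT"))  # 3650
```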

root@easzlab-haproxy-keepalive-01:~# curl -vvi https://www.mysite.com

* Trying 172.16.88.200:443...

* TCP_NODELAY set

* Connected to www.mysite.com (172.16.88.200) port 443 (#0)

* ALPN, offering h2

* ALPN, offering http/1.1

* successfully set certificate verify locations:

* CAfile: /etc/ssl/certs/ca-certificates.crt

CApath: /etc/ssl/certs

* TLSv1.3 (OUT), TLS handshake, Client hello (1):

* TLSv1.3 (IN), TLS handshake, Server hello (2):

* TLSv1.2 (IN), TLS handshake, Certificate (11):

* TLSv1.2 (OUT), TLS alert, unknown CA (560):

* SSL certificate problem: unable to get local issuer certificate

* Closing connection 0

curl: (60) SSL certificate problem: unable to get local issuer certificate

More details here: https://curl.haxx.se/docs/sslcerts.html

curl failed to verify the legitimacy of the server and therefore could not

establish a secure connection to it. To learn more about this situation and

how to fix it, please visit the web page mentioned above.

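root@easzlab-haproxy-keepalive-01:~#

Without -k, curl verifies the server certificate against the system CA bundle (/etc/ssl/certs/ca-certificates.crt). The certificate is issued by CN=www.ca.com, a private CA that is not in that bundle, so curl aborts the handshake with error 60. Passing that CA's certificate with --cacert would allow verification to succeed.

7.5、Secret type: kubernetes.io/dockerconfigjson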

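This type stores Docker registry credentials for use when pulling images. Pods that reference the Secret through imagePullSecrets can pull private images without anyone having to run docker login on each node.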
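docker login writes a base64 "username:password" string into the `auth` field of each `auths` entry in config.json. docker login itself warns that this is stored unencrypted. A `kubernetes.io/dockerconfigjson` Secret then stores the whole file, base64-encoded again, under the `.dockerconfigjson` key.

Below is a minimal Python sketch of both encoding layers. The registry, credentials, and Secret name (registry.example.com, demo-user, demo-pass, example-pull-key) are hypothetical. Real config.json files may contain additional fields, and `kubectl create secret docker-registry` can generate an equivalent Secret from command-line flags.

```python
import base64
import json


def docker_config_json(registry: str, username: str, password: str) -> str:
    """Build a minimal config.json body with one registry entry; auth = base64("user:pass")."""
    auth = base64.b64encode(f"{username}:{password}".encode()).decode("ascii")
    return json.dumps({"auths": {registry: {"auth": auth}}})


def secret_manifest(name: str, namespace: str, config_json: str) -> dict:
    """Wrap the config in a kubernetes.io/dockerconfigjson Secret; data values are base64 again."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "kubernetes.io/dockerconfigjson",
        "data": {".dockerconfigjson": base64.b64encode(config_json.encode()).decode("ascii")},
    }


# Hypothetical credentials for illustration only.
cfg = docker_config_json("registry.example.com", "demo-user", "demo-pass")
secret = secret_manifest("example-pull-key", "myserver", cfg)

# Decoding twice recovers the plain username:password.
inner = json.loads(base64.b64decode(secret["data"][".dockerconfigjson"]))
print(base64.b64decode(inner["auths"]["registry.example.com"]["auth"]).decode())  # demo-user:demo-pass
```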

root@easzlab-deploy:~# docker login --username=c******2 registry.cn-shenzhen.aliyuncs.com

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

root@easzlab-deploy:~# cat /root/.docker/config.json

{

"auths": {

"harbor.magedu.net": {

"auth": "YWRtaW46SGFyYm9yMTIzNDU="

},

"registry.cn-shenzhen.aliyuncs.com": {

"auth": "Y*********************==" # redacted here

}

}

}

root@easzlab-deploy:~#

root@easzlab-deploy:~# kubectl create secret generic aliyun-registry-image-pull-key \

> --from-file=.dockerconfigjson=/root/.docker/config.json \

> --type=kubernetes.io/dockerconfigjson \

> -n myserver # store the local login for the Aliyun private registry in a Secret so kubelet can use it when pulling images for Pods

secret/aliyun-registry-image-pull-key created

root@easzlab-deploy:~#

root@easzlab-deploy:~# kubectl get secret -n myserver

NAME TYPE DATA AGE

aliyun-registry-image-pull-key kubernetes.io/dockerconfigjson 1 9m24s

myserver-tls-key kubernetes.io/tls 2 150m

root@easzlab-deploy:~#

root@easzlab-deploy:~# kubectl get secret -n myserver aliyun-registry-image-pull-key -oyaml

apiVersion: v1

data:

.dockerconfigjson: ewoJImF1dGhzIjogewoJCSJoYXJib3IubWFnZWR1Lm5ldCI6IHsKCQkJImF1dGgiOiAiWVdSdGFXNDZTR0Z5WW05eU1USXpORFU9IgoJCX0sCgkJInJlZ2lzdHJ5LmNuLXNoZW56aGVuLmFsaXl1bmNzLmNvbSI6IHsKCQkJImF1d*************************n0=

kind: Secret

metadata:

creationTimestamp: "2022-10-20T14:30:23Z"

name: aliyun-registry-image-pull-key

namespace: myserver

resourceVersion: "451590"

uid: f084175a-6260-4435-acfb-bcec9095e5a6

type: kubernetes.io/dockerconfigjson

root@easzlab-deploy:~#

root@easzlab-deploy:~# cd jiege-k8s/pod-test/case-yaml/case9/

root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case9# vi 6-secret-imagePull.yaml

root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case9# cat 6-secret-imagePull.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: myserver-myapp-frontend-deployment-2
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: myserver-myapp-frontend-2
  template:
    metadata:
      labels:
        app: myserver-myapp-frontend-2
    spec:
      containers:
      - name: myserver-myapp-frontend-2
        image: registry.cn-shenzhen.aliyuncs.com/cyh01/nginx:1.22.0 # image in a private repository on Alibaba Cloud Container Registry
        ports:
        - containerPort: 80
      imagePullSecrets:
      - name: aliyun-registry-image-pull-key
---
apiVersion: v1
kind: Service
metadata:
  name: myserver-myapp-frontend-2
  namespace: myserver
spec:
  ports:
  - name: http
    port: 80
    targetPort: 80
    nodePort: 30033
    protocol: TCP
  type: NodePort
  selector:
    app: myserver-myapp-frontend-2
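The Deployment selector, the Pod template labels, and the Service selector above all use `app: myserver-myapp-frontend-2`, and they must agree. The API server rejects a Deployment whose selector does not match its own template, and a Service only routes to Pods whose labels satisfy its selector. Below is a minimal sketch of equality-based label matching. It covers matchLabels only (matchExpressions are not modeled), and the label value `other` in the last check is hypothetical:

```python
# Labels and selectors copied from the Deployment and Service manifests above.
pod_template_labels = {"app": "myserver-myapp-frontend-2"}
deployment_selector = {"app": "myserver-myapp-frontend-2"}
service_selector = {"app": "myserver-myapp-frontend-2"}


def selects(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """Equality-based matching: every selector key must be present with the same value."""
    return all(labels.get(key) == value for key, value in selector.items())


# A Deployment whose selector does not match its own template is rejected by the API server.
assert selects(deployment_selector, pod_template_labels)

print(selects(service_selector, pod_template_labels))  # True: the Service routes to these Pods
print(selects(service_selector, {"app": "other"}))     # False: hypothetical Pod with a different label
```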

root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case9# kubectl apply -f 6-secret-imagePull.yaml

deployment.apps/myserver-myapp-frontend-deployment-2 created

service/myserver-myapp-frontend-2 created

root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case9#

root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case9# kubectl get pod -n myserver -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

myserver-myapp-frontend-deployment-2-6d96b76bb-bgmzf 1/1 Running 0 30s 10.200.104.226 172.16.88.163 <none> <none>

myserver-myapp-frontend-deployment-6f48755cbd-k2dbs 1/1 Running 0 28m 10.200.105.158 172.16.88.164 <none> <none>

root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case9#

# Verify the Pod details

root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case9# kubectl describe pod -n myserver myserver-myapp-frontend-deployment-2-6d96b76bb-bgmzf

Name: myserver-myapp-frontend-deployment-2-6d96b76bb-bgmzf

Namespace: myserver

Priority: 0

Node: 172.16.88.163/172.16.88.163

Start Time: Thu, 20 Oct 2022 23:01:25 +0800

Labels: app=myserver-myapp-frontend-2

pod-template-hash=6d96b76bb

Annotations: <none>

Status: Running

IP: 10.200.104.226

IPs:

IP: 10.200.104.226

Controlled By: ReplicaSet/myserver-myapp-frontend-deployment-2-6d96b76bb

Containers:

myserver-myapp-frontend-2:

Container ID: containerd://20d2061b0eaa8e21748fed2559ba0fe35e7271730097809f210e50d650ad20f9

Image: registry.cn-shenzhen.aliyuncs.com/cyh01/nginx:1.22.0

Image ID: registry.cn-shenzhen.aliyuncs.com/cyh01/nginx@sha256:b3a676a9145dc005062d5e79b92d90574fb3bf2396f4913dc1732f9065f55c4b

Port: 80/TCP

Host Port: 0/TCP

State: Running

Started: Thu, 20 Oct 2022 23:01:27 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-j7wtn (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

kube-api-access-j7wtn:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 105s default-scheduler Successfully assigned myserver/myserver-myapp-frontend-deployment-2-6d96b76bb-bgmzf to 172.16.88.163

Normal Pulled 103s kubelet Container image "registry.cn-shenzhen.aliyuncs.com/cyh01/nginx:1.22.0" already present on machine

Normal Created 103s kubelet Created container myserver-myapp-frontend-2

Normal Started 103s kubelet Started container myserver-myapp-frontend-2

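root@easzlab-deploy:~/jiege-k8s/pod-test/case-yaml/case9#

The Pulled event shows that the image was already present on 172.16.88.163. This Pod therefore started from the cached image, and the pull secret was not actually used. To confirm that the secret works, remove the cached image on that node (for example with crictl rmi) or set imagePullPolicy: Always. Then recreate the Pod and check that the image is pulled successfully.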
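The manifest above does not set imagePullPolicy, so Kubernetes fills in a default derived from the image reference. The documented rule is:

- an explicit `:latest` tag, or no tag and no digest, gives `Always`;
- any other tag, or a digest without a tag, gives `IfNotPresent`.

For `nginx:1.22.0` the result is `IfNotPresent`, which is why the kubelet reused the cached image. Below is a sketch of that rule, an approximation for illustration rather than the API server's own code; the function name is hypothetical:

```python
def default_pull_policy(image: str) -> str:
    """Approximate the imagePullPolicy Kubernetes assigns when the field is omitted."""
    name, _, digest = image.partition("@")   # split off an "@sha256:..." digest, if any
    last_segment = name.rsplit("/", 1)[-1]   # ignore a "host:port/" registry prefix
    tag = last_segment.split(":", 1)[1] if ":" in last_segment else ""
    if not tag and not digest:
        tag = "latest"                       # no tag and no digest means an implicit :latest
    return "Always" if tag == "latest" else "IfNotPresent"


print(default_pull_policy("registry.cn-shenzhen.aliyuncs.com/cyh01/nginx:1.22.0"))  # IfNotPresent
print(default_pull_policy("nginx"))                                                  # Always
print(default_pull_policy("localhost:5000/nginx:latest"))                            # Always
print(default_pull_policy("nginx@sha256:" + "0" * 64))                              # IfNotPresent
```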