【TensorRT】Running Inference with a Dynamic Batch

I. Introduction

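During training, each iteration can consume data with a different batch size. Inference is similar: the shape of the input tensor is sometimes not known in advance, and the most common case is a dynamic batch.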

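A dynamic batch, as the name suggests, means the batch size is not fixed. For example, one inference call may process a single image and the next may need to process two images at once.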

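In TensorFlow (1.x), you define a tensor with a dynamic batch by leaving the batch dimension unspecified, written as `None`:

```python
tf.placeholder(tf.float32, shape=(None, 1024))
```

So how are dynamic dimensions defined in TensorRT?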

This post records the methods and tips I have used for dynamic batch in TensorRT. Corrections are welcome.

II. Loading the model online with TensorRT and serializing an engine that supports dynamic batch

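The workflow is similar to building an engine with a fixed batch and goes through the following steps:

1. Create a builder
2. Create a network
3. Create a parser
4. Build the engine
5. Create a context

The difference is that when you build the engine, you must set an OptimizationProfile on the builder config.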

Step 1: Preparation

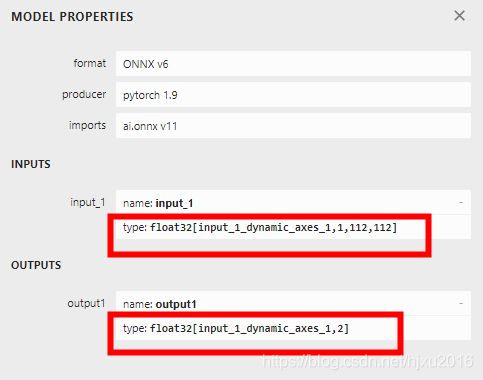

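First, prepare an ONNX model with a dynamic batch dimension.

I described how to export a dynamic-batch ONNX model from PyTorch in another post:

The OnnxRunTime Deployment Workflow

After exporting, open the ONNX model in Netron to check whether it actually has dynamic dimensions.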

Step 2: A function that serializes and saves the engine

```cpp
#include <cstdio>
#include <fstream>
#include <iostream>
#include <string>
#include <vector>

#include "NvInfer.h"
#include "NvOnnxParser.h"
#include "logging.h"
#include "opencv2/opencv.hpp"
#include "cuda_runtime_api.h"

static Logger gLogger;

using namespace nvinfer1;

// Serialize the engine and write the resulting byte blob to fileName.
bool saveEngine(const ICudaEngine& engine, const std::string& fileName)
{
    std::ofstream engineFile(fileName, std::ios::binary);
    if (!engineFile)
    {
        std::cout << "Cannot open engine file: " << fileName << std::endl;
        return false;
    }

    IHostMemory* serializedEngine = engine.serialize();
    if (serializedEngine == nullptr)
    {
        std::cout << "Engine serialization failed" << std::endl;
        return false;
    }

    engineFile.write(static_cast<const char*>(serializedEngine->data()), serializedEngine->size());
    return !engineFile.fail();
}
```

Step 3: Load the ONNX model and build a dynamic TensorRT engine

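```cpp
void print_dims(const nvinfer1::Dims& dim); // defined below

// Wrapper function so the steps compile as one unit; the name build_engine is illustrative.
bool build_engine()
{
    const int maxBatchSize = 4;

    // 1. Create a builder
    IBuilder* pBuilder = createInferBuilder(gLogger);

    // 2. Create a network with explicit batch: the batch dimension is part of every tensor shape, not hidden
    INetworkDefinition* pNetwork = pBuilder->createNetworkV2(
        1U << static_cast<uint32_t>(NetworkDefinitionCreationFlag::kEXPLICIT_BATCH));

    // 3. Create a builder config
    nvinfer1::IBuilderConfig* config = pBuilder->createBuilderConfig();

    // 4. Set up an optimization profile; this part is specific to dynamic batch.
    // OptProfileSelector chooses which bound (min / opt / max) of the dynamic shape is being set.
    // "input_1" must match the input name in the ONNX model.
    IOptimizationProfile* profile = pBuilder->createOptimizationProfile();
    profile->setDimensions("input_1", OptProfileSelector::kMIN, Dims4(1, 1, 112, 112));
    profile->setDimensions("input_1", OptProfileSelector::kOPT, Dims4(2, 1, 112, 112));
    profile->setDimensions("input_1", OptProfileSelector::kMAX, Dims4(maxBatchSize, 1, 112, 112));
    config->addOptimizationProfile(profile);

    auto parser = nvonnxparser::createParser(*pNetwork, gLogger.getTRTLogger());
    const char* pchModelPth = "./res_hjxu_temp_dynamic.onnx";
    if (!parser->parseFromFile(pchModelPth, static_cast<int>(gLogger.getReportableSeverity())))
    {
        printf("Failed to parse the ONNX model\n");
        return false;
    }

    // The engine is built with buildEngineWithConfig, so the workspace limit is set on the config.
    // setMaxBatchSize is not used: it has no effect on explicit-batch networks,
    // where the maximum batch comes from the kMAX dimensions of the profile.
    config->setMaxWorkspaceSize(1 << 30);

    // Set the precision mode: allow FP16 kernels (replaces the deprecated setFp16Mode(true))
    config->setFlag(BuilderFlag::kFP16);

    ICudaEngine* engine = pBuilder->buildEngineWithConfig(*pNetwork, *config);
    if (engine == nullptr)
    {
        printf("Failed to build the engine\n");
        return false;
    }

    // Serialize and save the engine
    std::string strTrtSavedPath = "./res_hjxu_temp_dynamic.trt";
    saveEngine(*engine, strTrtSavedPath);

    // Print the dimensions of binding 0 (the input)
    nvinfer1::Dims dim = engine->getBindingDimensions(0);
    print_dims(dim);
    return true;
}
```

Here, print_dims simply prints each dimension: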

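```cpp
// Print every dimension of a Dims object
void print_dims(const nvinfer1::Dims& dim)
{
    for (int nIdxShape = 0; nIdxShape < dim.nbDims; ++nIdxShape)
    {
        printf("dim %d=%d\n", nIdxShape, dim.d[nIdxShape]);
    }
}
```

The output shows dim 0 as -1, which means the batch dimension is dynamic.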
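A -1 in dim 0 means the binding shape is only known at runtime. You therefore cannot compute the element count of a buffer from engine->getBindingDimensions() alone. The sketch below shows the usual volume computation. It assumes a simplified stand-in for nvinfer1::Dims so that it compiles without TensorRT. The struct SimpleDims and the helpers hasDynamicDim, volume and withBatch are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for nvinfer1::Dims (which has nbDims and d[]); -1 marks a dynamic dimension.
struct SimpleDims
{
    std::vector<int32_t> d;
};

// True if any dimension is still dynamic (-1).
bool hasDynamicDim(const SimpleDims& dims)
{
    for (int32_t v : dims.d)
    {
        if (v == -1)
            return true;
    }
    return false;
}

// Number of elements of a fully specified shape; returns -1 while the shape is still dynamic.
int64_t volume(const SimpleDims& dims)
{
    if (hasDynamicDim(dims))
        return -1;
    int64_t n = 1;
    for (int32_t v : dims.d)
        n *= v;
    return n;
}

// Replace the dynamic batch dimension (index 0) with a concrete batch size.
SimpleDims withBatch(SimpleDims dims, int32_t batch)
{
    assert(!dims.d.empty() && batch > 0);
    dims.d[0] = batch;
    return dims;
}
```

Take the engine shape {-1, 1, 112, 112}: volume() cannot give a size until a batch is chosen. With batch 4 it becomes 4 × 1 × 112 × 112 = 50176 floats. This is why the inference code sizes its device buffers with the maximum batch from the profile.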

Step 4: A brief look at the IOptimizationProfile class and the OptProfileSelector enum

Compared with building a fixed-batch engine, the only difference is an extra IOptimizationProfile on the build config. Its dimensions are set as follows:

```cpp
nvinfer1::IOptimizationProfile* profile = pBuilder->createOptimizationProfile();
profile->setDimensions("input_1", OptProfileSelector::kMIN, Dims4(1, 1, 112, 112));
profile->setDimensions("input_1", OptProfileSelector::kOPT, Dims4(2, 1, 112, 112));
profile->setDimensions("input_1", OptProfileSelector::kMAX, Dims4(4, 1, 112, 112));
```

Let's look at the IOptimizationProfile class:

```cpp
class IOptimizationProfile
{
public:
    //! Set the minimum / optimum / maximum dimensions for a dynamic input tensor.
    //! This function must be called three times (for the minimum, optimum, and maximum)
    //! for every network input tensor that has dynamic dimensions.
    //! The following conditions must hold:
    //! (1) minDims.nbDims == optDims.nbDims == maxDims.nbDims == networkDims.nbDims
    //! (2) 0 <= minDims.d[i] <= optDims.d[i] <= maxDims.d[i] for i = 0, ..., networkDims.nbDims-1
    //! (3) if networkDims.d[i] != -1, then minDims.d[i] == optDims.d[i] == maxDims.d[i] == networkDims.d[i]
    //! If DLA is used, the three values must be identical.
    //!
    virtual bool setDimensions(const char* inputName, OptProfileSelector select, Dims dims) noexcept = 0;

    //! \brief Get the minimum / optimum / maximum dimensions for a dynamic input tensor.
    virtual Dims getDimensions(const char* inputName, OptProfileSelector select) const noexcept = 0;

    //! \brief Set the minimum / optimum / maximum values for an input shape tensor.
    virtual bool setShapeValues(
        const char* inputName, OptProfileSelector select, const int32_t* values, int32_t nbValues) noexcept = 0;

    //! \brief Get the number of values for an input shape tensor.
    virtual int32_t getNbShapeValues(const char* inputName) const noexcept = 0;

    //! \brief Get the minimum / optimum / maximum values for an input shape tensor.
    virtual const int32_t* getShapeValues(const char* inputName, OptProfileSelector select) const noexcept = 0;

    //! \brief Set a target for extra GPU memory that may be used by this profile.
    //! \return true if the input is in the valid range (between 0 and 1 inclusive), else false
    //!
    virtual bool setExtraMemoryTarget(float target) noexcept = 0;

    //! \brief Get the extra memory target that has been defined for this profile.
    virtual float getExtraMemoryTarget() const noexcept = 0;

    //! \brief Check whether the optimization profile can be passed to an IBuilderConfig object.
    //! \return true if the optimization profile is valid and may be passed to an IBuilderConfig, else false
    virtual bool isValid() const noexcept = 0;

protected:
    ~IOptimizationProfile() noexcept = default;
};
```

Next, let's look at the OptProfileSelector enum.

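This enum has just three values: minimum, optimum, and maximum.

```cpp
// kMIN and kMAX are the smallest and largest shapes allowed at runtime.
// kOPT is used for kernel selection; set it to the size expected most often at runtime.
// For example, if the model usually serves two input streams, the batch is typically 2,
// while batches of 1 or 4 are rare. In that case choose kOPT = 2 and kMIN = 1,
// and if there are at most four streams, kMAX = 4.
enum class OptProfileSelector : int32_t
{
    kMIN = 0, //!< This is used to set or get the minimum permitted value for dynamic dimensions etc.
    kOPT = 1, //!< This is used to set or get the value that is used in the optimization (kernel selection).
    kMAX = 2  //!< This is used to set or get the maximum permitted value for dynamic dimensions etc.
};
```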
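You can check the three conditions listed for setDimensions on the host before building. setDimensions itself returns a bool, and IOptimizationProfile::isValid() exists as well. The sketch below is a minimal host-side version. It assumes shapes are held as plain std::vector<int>, with -1 marking dynamic network dimensions. The name isValidProfile is hypothetical and not part of TensorRT.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Shape = std::vector<int>;

// Hypothetical check mirroring conditions (1)-(3) documented for IOptimizationProfile::setDimensions.
bool isValidProfile(const Shape& networkDims, const Shape& minDims, const Shape& optDims, const Shape& maxDims)
{
    // (1) all four shapes must have the same rank
    const std::size_t rank = networkDims.size();
    if (minDims.size() != rank || optDims.size() != rank || maxDims.size() != rank)
        return false;

    for (std::size_t i = 0; i < rank; ++i)
    {
        // (2) 0 <= min <= opt <= max in every dimension
        if (!(0 <= minDims[i] && minDims[i] <= optDims[i] && optDims[i] <= maxDims[i]))
            return false;

        // (3) a static network dimension must be pinned to the network's own value
        if (networkDims[i] != -1 &&
            !(minDims[i] == networkDims[i] && optDims[i] == networkDims[i] && maxDims[i] == networkDims[i]))
            return false;
    }
    return true;
}
```

The profile used in Step 3 passes this check: min, opt and max batch of 1, 2 and 4 over a network shape of {-1, 1, 112, 112}. A profile that also varies the channel dimension fails condition (3), because that dimension is fixed to 1 in the network.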

III. Deserializing the dynamic-batch engine, creating a context, and running dynamic inference

Define a function that loads the engine. It is the same as in the fixed-batch case:

```cpp
// Read a serialized engine from disk and deserialize it.
// Pass DLACore = -1 to run on the GPU without a DLA core.
ICudaEngine* loadEngine(const std::string& engine, int DLACore)
{
    std::ifstream engineFile(engine, std::ios::binary);
    if (!engineFile)
    {
        std::cout << "Error opening engine file: " << engine << std::endl;
        return nullptr;
    }

    // Determine the file size, then read the whole blob into memory
    engineFile.seekg(0, engineFile.end);
    long int fsize = engineFile.tellg();
    engineFile.seekg(0, engineFile.beg);

    std::vector<char> engineData(fsize);
    engineFile.read(engineData.data(), fsize);
    if (!engineFile)
    {
        std::cout << "Error loading engine file: " << engine << std::endl;
        return nullptr;
    }

    IRuntime* runtime = createInferRuntime(gLogger);
    if (DLACore != -1)
    {
        runtime->setDLACore(DLACore);
    }
    return runtime->deserializeCudaEngine(engineData.data(), fsize, nullptr);
}
```

Then run inference with the following steps:

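1. Create the engine.
2. Create the context.
3. Allocate GPU memory for the maximum batch size. Allocating it once avoids the cost of reallocating on every inference call.
4. Copy the input data of the dynamic tensor into that memory.
5. Set the dynamic dimensions by calling context->setBindingDimensions().
6. Run inference with the context.
7. Check the output.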
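Steps 3 and 4 come down to offset arithmetic. The input buffer is sized once for maxBatchSize samples, and sample i is written at byte offset i × nInputSize. The sketch below is host-only. It assumes a std::vector<float> stands in for the cudaMalloc'd device buffer and std::memcpy stands in for cudaMemcpy. The class name BatchBuffer is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <stdexcept>
#include <vector>

// Hypothetical host-side model of the input binding: one allocation sized for
// maxBatch samples, reused for every inference call whatever the current batch is.
class BatchBuffer
{
public:
    BatchBuffer(std::size_t maxBatch, std::size_t sampleElems)
        : maxBatch_(maxBatch), sampleElems_(sampleElems), data_(maxBatch * sampleElems, 0.0f)
    {
    }

    // Copy one sample (sampleElems floats) into slot i, analogous to
    // cudaMemcpy(base + nInputSize * i, src, nInputSize, cudaMemcpyHostToDevice).
    void setSample(std::size_t i, const float* src)
    {
        if (i >= maxBatch_)
            throw std::out_of_range("slot index exceeds the max batch size");
        std::memcpy(data_.data() + i * sampleElems_, src, sampleElems_ * sizeof(float));
    }

    // Byte offset of slot i (nInputSize * i in the inference code).
    std::size_t byteOffset(std::size_t i) const { return i * sampleElems_ * sizeof(float); }

    const float* data() const { return data_.data(); }
    std::size_t capacityBytes() const { return data_.size() * sizeof(float); }

private:
    std::size_t maxBatch_;
    std::size_t sampleElems_;
    std::vector<float> data_;
};
```

For a contiguous NCHW input, an inference call with batch b only uses the first b slots. The remaining slots can hold anything, so you never need to reallocate or clear the buffer when the batch changes.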

void test_engine()

{

std::string strTrtSavedPath = "./res_hjxu_temp_dynamic.trt";

int maxBatchSize = 4;

// 1、反序列化加载引擎

ICudaEngine* engine = loadEngine(strTrtSavedPath, 0);

// 2、创建context

IExecutionContext* context = engine->createExecutionContext();

int nNumBindings = engine->getNbBindings();

std::vector vecBuffers;

vecBuffers.resize(nNumBindings);

int nInputIdx = 0;

int nOutputIndex = 1;

int nInputSize = 1 * 112 * 112 * sizeof(float);

// 4、在cuda上创建一个最大的内存空间

(cudaMalloc(&vecBuffers[nInputIdx], nInputSize * maxBatchSize));

(cudaMalloc(&vecBuffers[nOutputIndex], maxBatchSize * 2 * sizeof(float)));

char* pchImgPath = "./img.bmp";

cv::Mat matImg = cv::imread(pchImgPath, -1);

std::cout << matImg.rows << std::endl;

cv::Mat matRzImg;

cv::resize(matImg, matRzImg, cv::Size(112, 112));

cv::Mat matF32Img;

matRzImg.convertTo(matF32Img, CV_32FC1);

matF32Img = matF32Img / 255.;

for (int i = 0; i < maxBatchSize; ++i)

{

cudaMemcpy((unsigned char *)vecBuffers[nInputIdx] + nInputSize * i, matF32Img.data, nInputSize, cudaMemcpyHostToDevice);

}

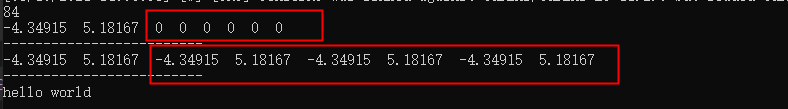

// 动态维度,设置batch = 1 0指第0个tensor的维度

context->setBindingDimensions(0, Dims4(1, 1, 112, 112));

nvinfer1::Dims dim = context->getBindingDimensions(0);

context->executeV2(vecBuffers.data());

//context->execute(1, vecBuffers.data());

float prob[8];

(cudaMemcpy(prob, vecBuffers[nOutputIndex], maxBatchSize * 2 * sizeof(float), cudaMemcpyDeviceToHost));

for (int i = 0; i < 8; ++i)

{

std::cout << prob[i] << " ";

}

std::cout <<"\n-------------------------" << std::endl;

// 动态维度,设置batch = 4

context->setBindingDimensions(0, Dims4(4, 1, 112, 112));

context->executeV2(vecBuffers.data());

//context->execute(1, vecBuffers.data());

(cudaMemcpy(prob, vecBuffers[nOutputIndex], maxBatchSize * 2 * sizeof(float), cudaMemcpyDeviceToHost));

for (int i = 0; i < 8; ++i)

{

std::cout << prob[i] << " ";

}

std::cout << "\n-------------------------" << std::endl;

//std::cout << prob[0] << " " << prob[1] << std::endl;

// call api to release memory

/// ...

return ;

} 结果打印中,可以看到,当batch为1时,输出后面6个值为0,当batch为4是,输出后面六个值都有正确的结果。咱们这里调用的是二分类的resnet50网络.
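This result has two practical consequences. First, the batch passed to setBindingDimensions must lie within the profile's [kMIN, kMAX] range, here 1 to 4, and its bool return value is worth checking. Second, only the first batch × 2 output floats belong to the current call. The host-only sketch below implements both checks. It assumes the output is a contiguous [batch, numClasses] float array, as in this two-class example. The helper names batchInProfile and argmaxPerSample are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical guard: a runtime batch must lie within the profile's [kMIN, kMAX] range.
bool batchInProfile(int batch, int minBatch, int maxBatch)
{
    return batch >= minBatch && batch <= maxBatch;
}

// Per-sample argmax over the valid part of a [batch, numClasses] output buffer.
// Entries beyond batch * numClasses are ignored: the current call did not write them.
std::vector<int> argmaxPerSample(const std::vector<float>& output, int batch, int numClasses)
{
    if (batch <= 0 || numClasses <= 0 ||
        static_cast<std::size_t>(batch) * static_cast<std::size_t>(numClasses) > output.size())
        throw std::invalid_argument("output buffer too small for this batch");

    std::vector<int> labels(static_cast<std::size_t>(batch));
    for (int b = 0; b < batch; ++b)
    {
        const float* row = output.data() + static_cast<std::size_t>(b) * numClasses;
        int best = 0;
        for (int c = 1; c < numClasses; ++c)
        {
            if (row[c] > row[best])
                best = c;
        }
        labels[static_cast<std::size_t>(b)] = best;
    }
    return labels;
}
```

In test_engine, you would apply this to the eight-float prob array after each cudaMemcpy, with batch = 1 or 4 and numClasses = 2. Because only the valid prefix is read, stale values from earlier calls can never leak into the labels.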

————————————————

Thanks to:https://blog.csdn.net/hjxu2016/article/details/119796206