Installing and Using Nacos (Spring Cloud)

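I. Overview

Nacos /nɑ:kəʊs/ is short for Dynamic Naming and Configuration Service. It is a platform for dynamic service discovery, configuration management, and service management that makes cloud-native applications easier to build.

Nacos helps you discover, configure, and manage microservices. It provides a simple, easy-to-use feature set for dynamic service discovery, service configuration, service metadata, and traffic management.

Nacos makes it faster and easier to build, deliver, and manage microservice platforms. It is service infrastructure for modern, service-centric application architectures such as the microservice and cloud-native paradigms.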

- Versions: this tutorial is based on the following versions

| Component | Version |
|---|---|
| spring boot | 2.6.7 |
| spring-cloud | 2021.0.0 |
| spring-cloud-alibaba | 2021.0.1.0 |
| nacos | 2.1.1 |

- Nacos official website: https://nacos.io

II. Installation

1. Standalone installation on Windows

1.1 Download

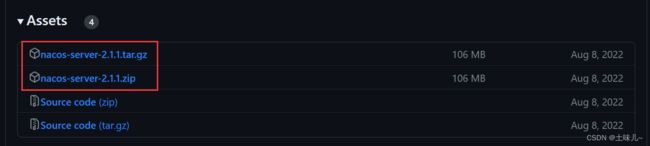

- Download: https://github.com/alibaba/nacos/releases (the recommended stable version is 2.1.1)

Either of the two archives (.zip or .tar.gz) works.

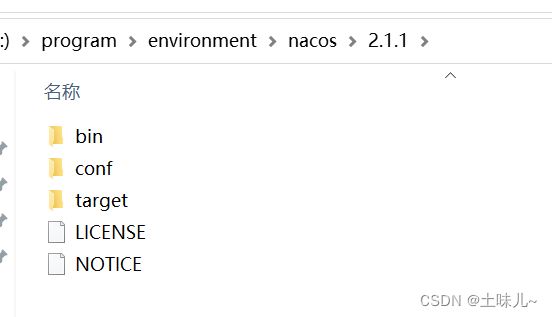

1.2 Extract

Extract the archive into the installation directory.

1.3 Start

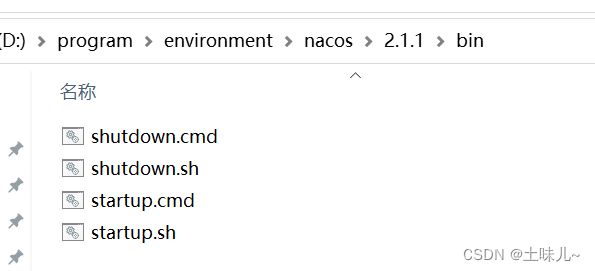

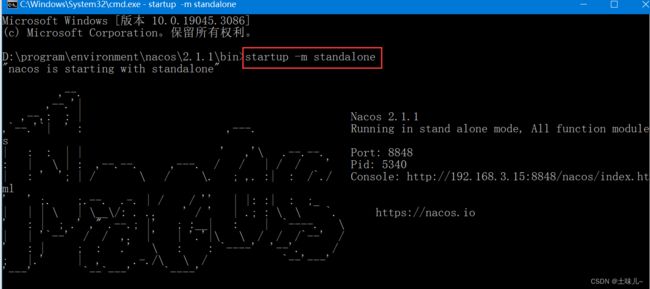

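Open the bin directory in a cmd window and run startup.

Nacos starts in cluster mode by default. To start a single node, add the parameter -m standalone, for example: startup.cmd -m standalone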

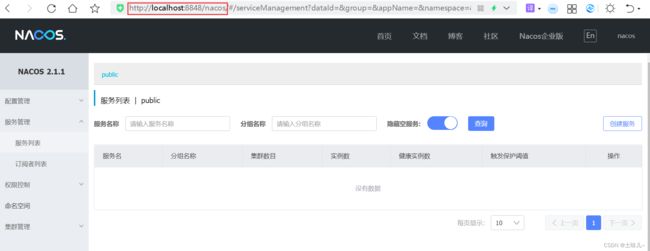

1.4 Access

Default port: 8848. Console URL: http://localhost:8848/nacos. Default username: nacos. Default password: nacos.

2. Cluster installation on Windows

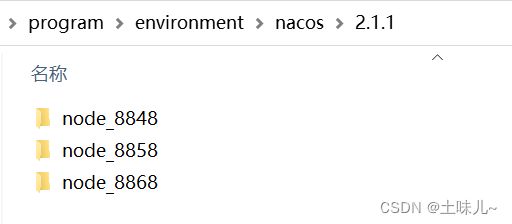

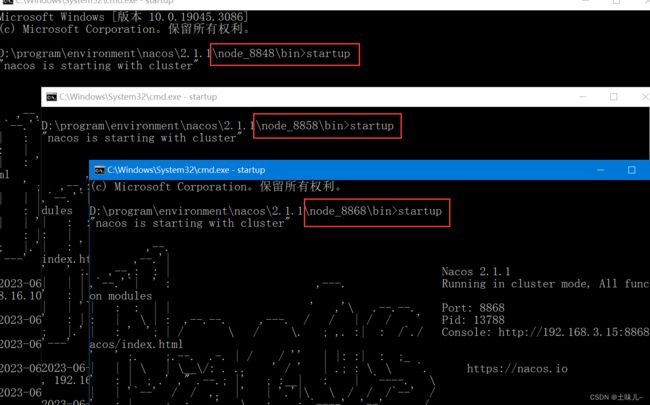

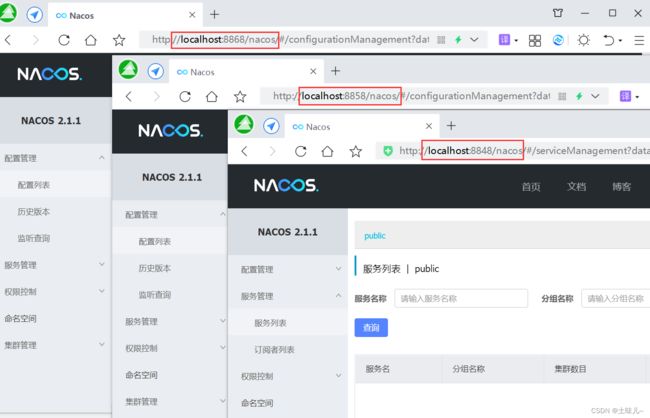

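Deploy three nodes on a single Windows machine.

2.1 Node directories

Extract the Nacos archive into three installation directories, one per node: node_8848, node_8858, and node_8868.

Each directory contains a complete Nacos installation.

2.2 Cluster configuration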

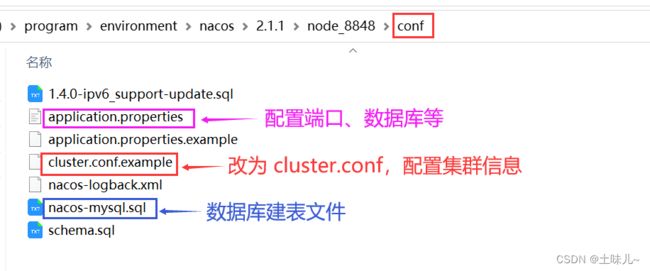

Go to the conf directory of each node.

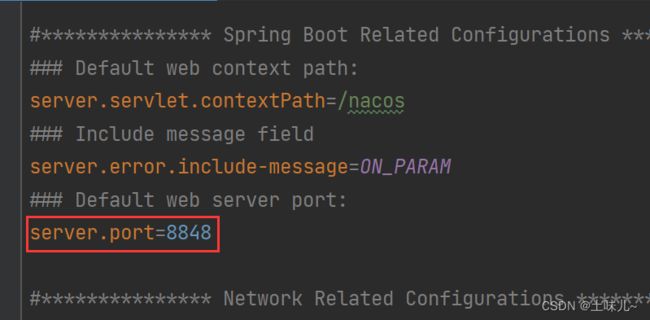

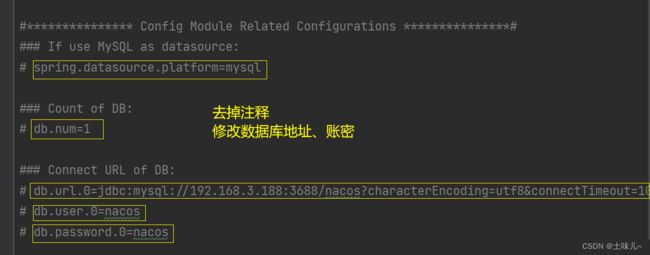

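- application.properties

Open application.properties and change each node's port, database settings, and so on.

Each node uses a different port: 8848, 8858, and 8868.

All three nodes share the same database configuration and connect to the same database.

- cluster.conf

Rename cluster.conf.example to cluster.conf, then edit the node addresses and ports in it.

Across the three nodes, only the port in application.properties differs; all other configuration is identical.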
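
As a rough illustration of what cluster.conf ends up containing, the sketch below prints a member list with one ip:port entry per line for the three ports used in this tutorial. It is plain Java, not part of Nacos; the class name ClusterConfSketch and the address 192.168.1.10 are placeholders assumed for the example, so substitute the real IP of your machine.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch only: renders a cluster member list, one "ip:port" entry per line.
class ClusterConfSketch {

    // Builds the member list for nodes that share one host but listen on different ports.
    static String render(String host, int[] ports) {
        List<String> lines = new ArrayList<>();
        for (int port : ports) {
            lines.add(host + ":" + port);
        }
        return String.join("\n", lines);
    }

    public static void main(String[] args) {
        // 192.168.1.10 is a made-up placeholder address.
        System.out.println(render("192.168.1.10", new int[] {8848, 8858, 8868}));
    }
}
```

The same file content would be copied into the conf directory of every node, which matches the note above that only the port in application.properties differs.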

2.3 Create the database

Create the tables with the nacos-mysql.sql script in the conf directory.

2.4 Start and test

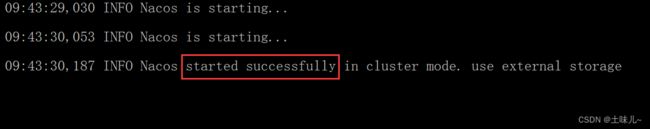

In each node's bin directory, open a cmd window and run startup without any parameters.

3. Deployment with Docker Compose

Prerequisite: a working Docker Compose environment.

3.1 docker-compose.yml

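    version: "3.9"
    services:
      # Nacos service
      nacos-server:
        # Image
        image: nacos/nacos-server:v2.1.1
        # Container name
        container_name: c-nacos-server
        # Environment variables
        environment:
          # Standalone mode
          - MODE=standalone
          # JVM memory
          - JVM_XMS=512m
          - JVM_XMX=512m
          - JVM_XMN=256m
          # Time zone
          - TZ=Asia/Shanghai
        # Volume mounts
        # host directory : directory inside the Nacos container
        volumes:
          # Logs
          - ../services/nacos-server/volumes/log:/home/nacos/logs
          # Configuration
          - ../services/nacos-server/volumes/conf:/home/nacos/conf
        ports:
          - 8848:8848
          # Nacos 2.x clients also connect over gRPC on port 9848 (main port + 1000);
          # publish it as well if clients run outside this Docker network
          # - 9848:9848
        # Health check
        healthcheck:
          test: ["CMD", "curl", "-f", "localhost:8848/nacos"]
          # Interval between health checks (default: 30 seconds)
          interval: 30s
          # Timeout for the health check command; a check that runs longer counts as failed
          timeout: 3s
          # Number of consecutive failures after which the container is considered unhealthy
          retries: 5
          # The first 20s after start count as the startup period:
          # checks still run during it, but failures do not count toward retries
          start_period: 20s
        # Dependencies
        #depends_on:
          # Depends on the database service
          #db-server:
            # Health condition: this service starts only once the dependency is healthy
            #condition: service_healthy
            # condition: service_started
        # Gives the container root-level privileges
        privileged: true
        # Restart policy (container start-up is left to Compose's own start-on-boot handling)
        # restart: always
        # A container that is idle after start exits on its own;
        # tty + stdin_open are equivalent to -it and keep the container running
        tty: true
        stdin_open: true
        # Join a network
        #networks:
          # IP is assigned automatically
          #- inner-net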

3.2 application.properties

Place this file in the mounted conf directory.

    #
    # Copyright 1999-2021 Alibaba Group Holding Ltd.
    #
    # Licensed under the Apache License, Version 2.0 (the "License");
    # you may not use this file except in compliance with the License.
    # You may obtain a copy of the License at
    #
    # http://www.apache.org/licenses/LICENSE-2.0
    #
    # Unless required by applicable law or agreed to in writing, software
    # distributed under the License is distributed on an "AS IS" BASIS,
    # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    # See the License for the specific language governing permissions and
    # limitations under the License.
    #

    #*************** Spring Boot Related Configurations ***************#
    ### Default web context path:
    server.servlet.contextPath=/nacos
    ### Include message field
    server.error.include-message=ON_PARAM
    ### Default web server port:
    server.port=8848
    # Number of housekeeper threads
    nacos.core.thread.count=5

    #*************** Network Related Configurations ***************#
    ### If prefer hostname over ip for Nacos server addresses in cluster.conf:
    # nacos.inetutils.prefer-hostname-over-ip=false
    ### Specify local server's IP:
    # nacos.inetutils.ip-address=

    #*************** Config Module Related Configurations ***************#
    ### If use MySQL as datasource:
    spring.datasource.platform=mysql
    ### Count of DB:
    db.num=1
    ### Connect URL of DB:
    db.url.0=jdbc:mysql://localhost:3306/nacos_config?characterEncoding=utf8&connectTimeout=1000&socketTimeout=3000&autoReconnect=true&useUnicode=true&useSSL=false&serverTimezone=UTC
    db.user.0=nacos
    db.password.0=nacos
    ### Connection pool configuration: hikariCP
    db.pool.config.connectionTimeout=30000
    db.pool.config.validationTimeout=10000
    db.pool.config.maximumPoolSize=20
    db.pool.config.minimumIdle=2

    #*************** Naming Module Related Configurations ***************#
    ### Data dispatch task execution period in milliseconds: Will removed on v2.1.X, replace with nacos.core.protocol.distro.data.sync.delayMs
    # nacos.naming.distro.taskDispatchPeriod=200
    ### Data count of batch sync task: Will removed on v2.1.X. Deprecated
    # nacos.naming.distro.batchSyncKeyCount=1000
    ### Retry delay in milliseconds if sync task failed: Will removed on v2.1.X, replace with nacos.core.protocol.distro.data.sync.retryDelayMs
    # nacos.naming.distro.syncRetryDelay=5000
    ### If enable data warmup. If set to false, the server would accept request without local data preparation:
    # nacos.naming.data.warmup=true
    ### If enable the instance auto expiration, kind like of health check of instance:
    # nacos.naming.expireInstance=true
    ### will be removed and replaced by `nacos.naming.clean` properties
    nacos.naming.empty-service.auto-clean=true
    nacos.naming.empty-service.clean.initial-delay-ms=50000
    nacos.naming.empty-service.clean.period-time-ms=30000
    ### Add in 2.0.0
    ### The interval to clean empty service, unit: milliseconds.
    # nacos.naming.clean.empty-service.interval=60000
    ### The expired time to clean empty service, unit: milliseconds.
    # nacos.naming.clean.empty-service.expired-time=60000
    ### The interval to clean expired metadata, unit: milliseconds.
    # nacos.naming.clean.expired-metadata.interval=5000
    ### The expired time to clean metadata, unit: milliseconds.
    # nacos.naming.clean.expired-metadata.expired-time=60000
    ### The delay time before push task to execute from service changed, unit: milliseconds.
    # nacos.naming.push.pushTaskDelay=500
    ### The timeout for push task execute, unit: milliseconds.
    # nacos.naming.push.pushTaskTimeout=5000
    ### The delay time for retrying failed push task, unit: milliseconds.
    # nacos.naming.push.pushTaskRetryDelay=1000
    ### Since 2.0.3
    ### The expired time for inactive client, unit: milliseconds.
    # nacos.naming.client.expired.time=180000

    #*************** CMDB Module Related Configurations ***************#
    ### The interval to dump external CMDB in seconds:
    # nacos.cmdb.dumpTaskInterval=3600
    ### The interval of polling data change event in seconds:
    # nacos.cmdb.eventTaskInterval=10
    ### The interval of loading labels in seconds:
    # nacos.cmdb.labelTaskInterval=300
    ### If turn on data loading task:
    # nacos.cmdb.loadDataAtStart=false

    #*************** Metrics Related Configurations ***************#
    ### Metrics for prometheus
    #management.endpoints.web.exposure.include=*
    ### Metrics for elastic search
    management.metrics.export.elastic.enabled=false
    #management.metrics.export.elastic.host=http://localhost:9200
    ### Metrics for influx
    management.metrics.export.influx.enabled=false
    #management.metrics.export.influx.db=springboot
    #management.metrics.export.influx.uri=http://localhost:8086
    #management.metrics.export.influx.auto-create-db=true
    #management.metrics.export.influx.consistency=one
    #management.metrics.export.influx.compressed=true

    #*************** Access Log Related Configurations ***************#
    ### If turn on the access log:
    server.tomcat.accesslog.enabled=true
    ### The access log pattern:
    server.tomcat.accesslog.pattern=%h %l %u %t "%r" %s %b %D %{User-Agent}i %{Request-Source}i
    ### The directory of access log:
    server.tomcat.basedir=file:.

    #*************** Access Control Related Configurations ***************#
    ### If enable spring security, this option is deprecated in 1.2.0:
    #spring.security.enabled=false
    ### The ignore urls of auth, is deprecated in 1.2.0:
    nacos.security.ignore.urls=/,/error,/**/*.css,/**/*.js,/**/*.html,/**/*.map,/**/*.svg,/**/*.png,/**/*.ico,/console-ui/public/**,/v1/auth/**,/v1/console/health/**,/actuator/**,/v1/console/server/**
    ### The auth system to use, currently only 'nacos' and 'ldap' is supported:
    nacos.core.auth.system.type=nacos
    ### If turn on auth system:
    nacos.core.auth.enabled=false
    ### Turn on/off caching of auth information. By turning on this switch, the update of auth information would have a 15 seconds delay.
    nacos.core.auth.caching.enabled=true
    ### Since 1.4.1, Turn on/off white auth for user-agent: nacos-server, only for upgrade from old version.
    nacos.core.auth.enable.userAgentAuthWhite=false
    ### Since 1.4.1, worked when nacos.core.auth.enabled=true and nacos.core.auth.enable.userAgentAuthWhite=false.
    ### The two properties is the white list for auth and used by identity the request from other server.
    nacos.core.auth.server.identity.key=serverIdentity
    nacos.core.auth.server.identity.value=security
    ### worked when nacos.core.auth.system.type=nacos
    ### The token expiration in seconds:
    nacos.core.auth.plugin.nacos.token.expire.seconds=18000
    ### The default token:
    nacos.core.auth.plugin.nacos.token.secret.key=SecretKey012345678901234567890123456789012345678901234567890123456789
    ### worked when nacos.core.auth.system.type=ldap,{0} is Placeholder,replace login username
    #nacos.core.auth.ldap.url=ldap://localhost:389
    #nacos.core.auth.ldap.basedc=dc=example,dc=org
    #nacos.core.auth.ldap.userDn=cn=admin,${nacos.core.auth.ldap.basedc}
    #nacos.core.auth.ldap.password=admin
    #nacos.core.auth.ldap.userdn=cn={0},dc=example,dc=org

    #*************** Istio Related Configurations ***************#
    ### If turn on the MCP server:
    nacos.istio.mcp.server.enabled=false

    #*************** Core Related Configurations ***************#
    ### set the WorkerID manually
    # nacos.core.snowflake.worker-id=
    ### Member-MetaData
    # nacos.core.member.meta.site=
    # nacos.core.member.meta.adweight=
    # nacos.core.member.meta.weight=
    ### MemberLookup
    ### Addressing pattern category, If set, the priority is highest
    # nacos.core.member.lookup.type=[file,address-server]
    ## Set the cluster list with a configuration file or command-line argument
    # nacos.member.list=192.168.16.101:8847?raft_port=8807,192.168.16.101?raft_port=8808,192.168.16.101:8849?raft_port=8809
    ## for AddressServerMemberLookup
    # Maximum number of retries to query the address server upon initialization
    # nacos.core.address-server.retry=5
    ## Server domain name address of [address-server] mode
    # address.server.domain=jmenv.tbsite.net
    ## Server port of [address-server] mode
    # address.server.port=8080
    ## Request address of [address-server] mode
    # address.server.url=/nacos/serverlist

    #*************** JRaft Related Configurations ***************#
    ### Sets the Raft cluster election timeout, default value is 5 second
    # nacos.core.protocol.raft.data.election_timeout_ms=5000
    ### Sets the amount of time the Raft snapshot will execute periodically, default is 30 minute
    # nacos.core.protocol.raft.data.snapshot_interval_secs=30
    ### raft internal worker threads
    # nacos.core.protocol.raft.data.core_thread_num=8
    ### Number of threads required for raft business request processing
    # nacos.core.protocol.raft.data.cli_service_thread_num=4
    ### raft linear read strategy. Safe linear reads are used by default, that is, the Leader tenure is confirmed by heartbeat
    # nacos.core.protocol.raft.data.read_index_type=ReadOnlySafe
    ### rpc request timeout, default 5 seconds
    # nacos.core.protocol.raft.data.rpc_request_timeout_ms=5000

    #*************** Distro Related Configurations ***************#
    ### Distro data sync delay time, when sync task delayed, task will be merged for same data key. Default 1 second.
    # nacos.core.protocol.distro.data.sync.delayMs=1000
    ### Distro data sync timeout for one sync data, default 3 seconds.
    # nacos.core.protocol.distro.data.sync.timeoutMs=3000
    ### Distro data sync retry delay time when sync data failed or timeout, same behavior with delayMs, default 3 seconds.
    # nacos.core.protocol.distro.data.sync.retryDelayMs=3000
    ### Distro data verify interval time, verify synced data whether expired for a interval. Default 5 seconds.
    # nacos.core.protocol.distro.data.verify.intervalMs=5000
    ### Distro data verify timeout for one verify, default 3 seconds.
    # nacos.core.protocol.distro.data.verify.timeoutMs=3000
    ### Distro data load retry delay when load snapshot data failed, default 30 seconds.
    # nacos.core.protocol.distro.data.load.retryDelayMs=30000

3.3 nacos-logback.xml

Place this file in the mounted conf directory.

    <configuration debug="false" scan="true" scanPeriod="1 seconds">
        <contextName>logback</contextName>
        <property name="logPath" value="/home/nacos/logs/"/>
        <property name="logName" value="nacos_log"/>

        <appender name="console" class="ch.qos.logback.core.ConsoleAppender">
            <filter class="ch.qos.logback.classic.filter.ThresholdFilter">
                <level>ERROR</level>
            </filter>
            <encoder>
                <pattern>%d{HH:mm:ss.SSS} %contextName [%thread] %-5level %logger{36} - %msg%n</pattern>
            </encoder>
        </appender>

        <appender name="file" class="ch.qos.logback.core.rolling.RollingFileAppender">
            <file>${logPath}${logName}.log</file>
            <encoder>
                <pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} %-5level --- [%thread] %logger Line:%-3L - %msg%n</pattern>
                <charset>UTF-8</charset>
            </encoder>
            <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
                <fileNamePattern>${logPath}/%d{yyyy-MM-dd}/${logName}_%i.log</fileNamePattern>
                <timeBasedFileNamingAndTriggeringPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedFNATP">
                    <maxFileSize>10MB</maxFileSize>
                </timeBasedFileNamingAndTriggeringPolicy>
                <maxHistory>30</maxHistory>
            </rollingPolicy>
        </appender>

        <root level="debug">
            <appender-ref ref="console" />
            <appender-ref ref="file" />
        </root>
        <logger name="com.example.logback" level="warn" />
    </configuration>

III. Configuration management

1. Parent project POM

    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <parent>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-parent</artifactId>
            <version>2.6.7</version>
        </parent>
        <modelVersion>4.0.0</modelVersion>
        <groupId>com.tuwer</groupId>
        <artifactId>nacos</artifactId>
        <version>1.0-SNAPSHOT</version>
        <modules>
            <module>config</module>
        </modules>
        <packaging>pom</packaging>
        <properties>
            <maven.compiler.source>8</maven.compiler.source>
            <maven.compiler.target>8</maven.compiler.target>
            <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        </properties>
        <dependencyManagement>
            <dependencies>
                <dependency>
                    <groupId>org.springframework.cloud</groupId>
                    <artifactId>spring-cloud-dependencies</artifactId>
                    <version>2021.0.0</version>
                    <type>pom</type>
                    <scope>import</scope>
                </dependency>
                <dependency>
                    <groupId>com.alibaba.cloud</groupId>
                    <artifactId>spring-cloud-alibaba-dependencies</artifactId>
                    <version>2021.0.1.0</version>
                    <type>pom</type>
                    <scope>import</scope>
                </dependency>
            </dependencies>
        </dependencyManagement>
    </project>

2. Child module POM

This is the module that loads its configuration from Nacos.

- Add the spring-cloud-starter-alibaba-nacos-config dependency.

    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <parent>
            <artifactId>nacos</artifactId>
            <groupId>com.tuwer</groupId>
            <version>1.0-SNAPSHOT</version>
        </parent>
        <modelVersion>4.0.0</modelVersion>
        <artifactId>config</artifactId>
        <description>Configuration management</description>
        <properties>
            <maven.compiler.source>8</maven.compiler.source>
            <maven.compiler.target>8</maven.compiler.target>
        </properties>
        <dependencies>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-starter-web</artifactId>
            </dependency>
            <dependency>
                <groupId>org.springframework.cloud</groupId>
                <artifactId>spring-cloud-starter-bootstrap</artifactId>
            </dependency>
            <dependency>
                <groupId>com.alibaba.cloud</groupId>
                <artifactId>spring-cloud-starter-alibaba-nacos-config</artifactId>
            </dependency>
        </dependencies>
    </project>

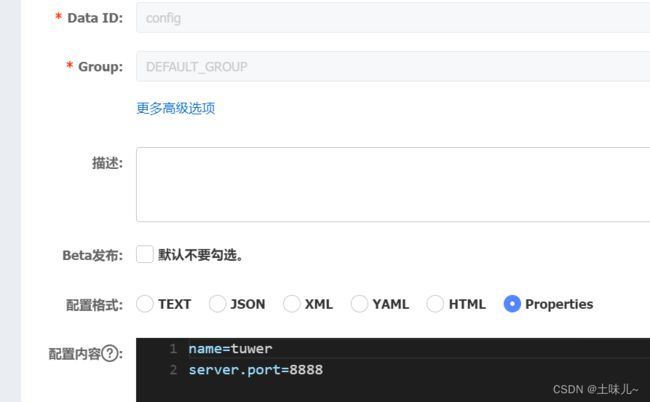

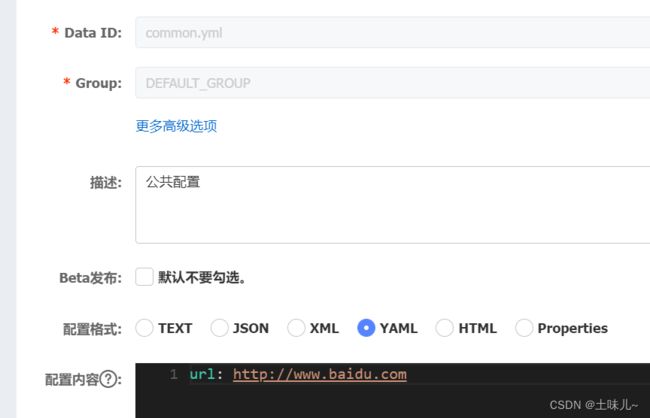

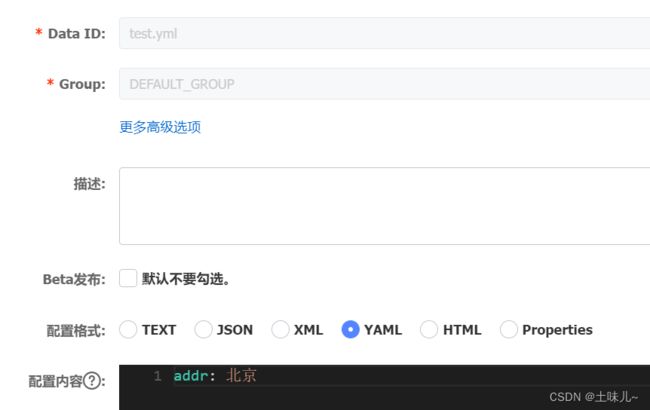

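3. Nacos configuration files

These hold settings that are not stable and are likely to change,

such as parameters a service needs at runtime, object properties, and system constants.

Three are added: one main configuration file and two shared configuration files (common.yml and test.yml, as referenced in bootstrap.properties in the next step).

Between them they must define the name, url, and addr keys that the controller in step 5 reads.

4. bootstrap.properties

This file holds settings that are relatively stable and rarely change,

such as the module name, the port, service addresses, and the names of other configuration files.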

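    # Nacos server address
    spring.cloud.nacos.server-addr=localhost:8848

    # ############ Application name (main configuration file) ############
    # By default the configuration whose name matches the application name is loaded (the main configuration file)
    # ### Priority, highest first (shown for an application named test):
    # ### test-dev.properties
    # ### test.properties
    # ### test
    spring.application.name=config

    # ################ Other configuration files ################
    # shared and extension have the same effect: both import additional configuration
    # shared: configuration that other services may use as well
    # extension: configuration specific to this service
    # Indexes start at 0
    # data-id must match the configuration's name in Nacos exactly (including the extension, if it has one)
    spring.cloud.nacos.config.shared-configs[0].data-id=common.yml
    spring.cloud.nacos.config.shared-configs[0].refresh=true
    spring.cloud.nacos.config.shared-configs[1].data-id=test.yml
    spring.cloud.nacos.config.shared-configs[1].refresh=true
    #spring.cloud.nacos.config.extension-configs[0].data-id=common.yml
    #spring.cloud.nacos.config.extension-configs[1].data-id=test.yml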
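
The priority list in the comments of bootstrap.properties can be read as a simple naming rule. The sketch below is a plain-Java illustration of that rule only: it mirrors the three names listed in the comment and is not the actual Spring Cloud Alibaba lookup code. The class name DataIdSketch and its method are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch only: lists the candidate data IDs of the main configuration, highest priority first.
class DataIdSketch {

    static List<String> candidates(String appName, String profile, String fileExtension) {
        List<String> ids = new ArrayList<>();
        // The profile-specific name exists only when a profile is active.
        if (profile != null && !profile.isEmpty()) {
            ids.add(appName + "-" + profile + "." + fileExtension);
        }
        ids.add(appName + "." + fileExtension);
        ids.add(appName);
        return ids;
    }

    public static void main(String[] args) {
        // Prints: [test-dev.properties, test.properties, test]
        System.out.println(candidates("test", "dev", "properties"));
    }
}
```

For the module in this tutorial the application name is config, so the same rule would yield names starting with config instead of test.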

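5. Application class and controller

- Application class

    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;

    // Entry point of the config module
    @SpringBootApplication
    public class BootApp {
        public static void main(String[] args) {
            SpringApplication.run(BootApp.class, args);
        }
    }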

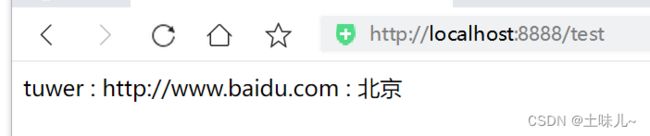

- Controller

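    import org.springframework.beans.factory.annotation.Value;
    import org.springframework.cloud.context.config.annotation.RefreshScope;
    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.RestController;

    // @RefreshScope re-creates the bean when the configuration changes in Nacos
    @RestController
    @RefreshScope
    public class TestController {

        // The three values come from the configuration files stored in Nacos
        @Value("${name}")
        private String name;

        @Value("${url}")
        private String url;

        @Value("${addr}")
        private String addr;

        @GetMapping("/test")
        public String name() {
            return name + " : " + url + " : " + addr;
        }
    }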

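6. Automatic refresh

- @RefreshScope: add this annotation to every class that reads values from the configuration.
- If the module imports other configuration files besides the main one, also set refresh to true for each of them.
- Best practice: collect all of the module's configuration items in a single class (a bean) annotated with @RefreshScope, provide a getter for each item, inject that bean wherever configuration is needed, and read the values through the getters.

7. Test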

IV. Service registration

Create a service provider module named provider under the parent project.

1. Add dependencies

- Service registration and discovery: spring-cloud-starter-alibaba-nacos-discovery

    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.cloud</groupId>
            <artifactId>spring-cloud-starter-bootstrap</artifactId>
        </dependency>
        <dependency>
            <groupId>com.alibaba.cloud</groupId>
            <artifactId>spring-cloud-starter-alibaba-nacos-discovery</artifactId>
        </dependency>
    </dependencies>

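2. bootstrap.properties

For a Nacos cluster, server-addr should list every node (comma-separated), or point to an nginx address that load-balances across the nodes. Service discovery is configured the same way.

    # Nacos server address (either property works)
    #spring.cloud.nacos.server-addr=localhost:8848
    spring.cloud.nacos.discovery.server-addr=localhost:8848

    # ############ Application name ############
    spring.application.name=provider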

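3. Service endpoint

    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.RestController;

    @RestController
    public class TestController {

        // Returns a fixed string ("测试" means "test")
        @GetMapping("/test")
        public String test() {
            return "测试";
        }
    }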

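4. Application class

    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;

    @SpringBootApplication
    public class ProviderApp {
        public static void main(String[] args) {
            SpringApplication.run(ProviderApp.class, args);
        }
    }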

5. Test

After the service starts, the registered service is visible in the Nacos console.

V. Service discovery

Create a service consumer module named consumer under the parent project.

1. Add dependencies

- Service registration and discovery: spring-cloud-starter-alibaba-nacos-discovery
- Load balancing: spring-cloud-starter-loadbalancer. Spring Cloud 2021.0.0 no longer includes the Netflix components (Ribbon was removed in the 2020.0 release train), so Spring Cloud LoadBalancer replaces Ribbon for load balancing.

    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.cloud</groupId>
            <artifactId>spring-cloud-starter-bootstrap</artifactId>
        </dependency>
        <dependency>
            <groupId>com.alibaba.cloud</groupId>
            <artifactId>spring-cloud-starter-alibaba-nacos-discovery</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.cloud</groupId>
            <artifactId>spring-cloud-starter-loadbalancer</artifactId>
        </dependency>
    </dependencies>

2. bootstrap.properties

    # Set the port explicitly to avoid a conflict with the provider
    server.port=8000

    # Nacos server address
    spring.cloud.nacos.server-addr=localhost:8848
    #spring.cloud.nacos.discovery.server-addr=localhost:8848

    # ############ Application name ############
    spring.application.name=consumer

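3. Application class

    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;

    @SpringBootApplication
    public class ConsumerApp {
        public static void main(String[] args) {
            SpringApplication.run(ConsumerApp.class, args);
        }
    }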

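4. Configure RestTemplate

The RestTemplate bean must be annotated with @LoadBalanced.

    import org.springframework.cloud.client.loadbalancer.LoadBalanced;
    import org.springframework.context.annotation.Bean;
    import org.springframework.context.annotation.Configuration;
    import org.springframework.web.client.RestTemplate;

    @Configuration
    public class MyConfig {

        // @LoadBalanced lets the RestTemplate resolve service names through the load balancer
        @Bean
        @LoadBalanced
        public RestTemplate getRestTemplate() {
            return new RestTemplate();
        }
    }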

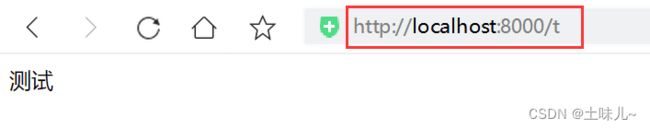

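5. Call the service

    import javax.annotation.Resource;
    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.RestController;
    import org.springframework.web.client.RestTemplate;

    @RestController
    public class ConsumerController {

        @Resource
        private RestTemplate restTemplate;

        // "provider" is the service name registered in Nacos, not a real host name
        @GetMapping("/t")
        public String test() {
            return restTemplate.getForObject("http://provider/test", String.class);
        }
    }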
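
To make the role of the load balancer more concrete, here is a minimal plain-Java sketch of what a round-robin strategy roughly does when a URL such as http://provider/test is called: it takes the next instance in rotation and substitutes it for the service name. This is an illustration under assumptions, not the Spring Cloud LoadBalancer implementation; the class RoundRobinSketch and the instance addresses are made up for the example.

```java
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Sketch only: round-robin choice among the instances of one service.
class RoundRobinSketch {

    private final AtomicInteger position = new AtomicInteger();

    // Picks the next instance in rotation; in a real setup the list would come from the registry.
    String choose(List<String> instances) {
        if (instances.isEmpty()) {
            throw new IllegalStateException("no instance available");
        }
        int index = Math.floorMod(position.getAndIncrement(), instances.size());
        return instances.get(index);
    }

    // Replaces the logical service name in the URL with a concrete host:port.
    String resolve(String url, String serviceName, List<String> instances) {
        return url.replace("//" + serviceName + "/", "//" + choose(instances) + "/");
    }

    public static void main(String[] args) {
        RoundRobinSketch lb = new RoundRobinSketch();
        // Hypothetical provider instances.
        List<String> providers = Arrays.asList("192.168.1.10:8080", "192.168.1.11:8080");
        for (int i = 0; i < 3; i++) {
            System.out.println(lb.resolve("http://provider/test", "provider", providers));
        }
    }
}
```

With two instances, three consecutive calls alternate between them and return to the first one on the third call.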

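6. Test

VI. Advanced features

1. Ephemeral and persistent instances

1> Ephemeral instances

By default, every instance registered with Nacos is ephemeral.

An ephemeral instance is kept alive by heartbeats between the client and the server.

By default the client sends a heartbeat every 5 seconds. If the server receives no heartbeat for more than 15 seconds, it marks the instance as unhealthy; after more than 30 seconds without a heartbeat, it removes the instance.

An ephemeral instance is removed once the service goes offline.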
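
The timing rule above can be written down as a small decision function. The following plain-Java sketch only restates the 15-second and 30-second limits from the text; it is not Nacos server code, and the class name HeartbeatSketch and the status strings are chosen for the example.

```java
// Sketch only: classifies an ephemeral instance by the time since its last heartbeat.
class HeartbeatSketch {

    static final long UNHEALTHY_AFTER_MS = 15_000; // no heartbeat for more than 15 s
    static final long REMOVE_AFTER_MS = 30_000;    // no heartbeat for more than 30 s

    static String status(long millisSinceLastHeartbeat) {
        if (millisSinceLastHeartbeat > REMOVE_AFTER_MS) {
            return "REMOVED";
        }
        if (millisSinceLastHeartbeat > UNHEALTHY_AFTER_MS) {
            return "UNHEALTHY";
        }
        return "HEALTHY";
    }

    public static void main(String[] args) {
        System.out.println(status(5_000));  // within the normal 5 s heartbeat interval
        System.out.println(status(20_000)); // past the 15 s limit
        System.out.println(status(31_000)); // past the 30 s limit
    }
}
```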

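2> Persistent instances

To register a persistent instance, set spring.cloud.nacos.discovery.ephemeral=false

ephemeral: temporary, short-lived.

A persistent instance is not removed when the service goes offline; it is marked unhealthy instead.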

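2. Protection threshold

Protection threshold: a floating-point number between 0 and 1. It is a ratio: the number of healthy instances of a service divided by its total number of instances.

In the normal flow, Nacos is the service registry and a consumer asks it for the available instances of a service. Instances are either healthy or unhealthy, and Nacos returns only the healthy ones. Under high concurrency and heavy traffic this can cause problems.

For example, service A has 100 instances, 98 of which are unhealthy and only 2 healthy. If Nacos returns only these 2 healthy instances, all subsequent consumer requests are routed to them. When a traffic peak arrives, the 2 healthy instances are overwhelmed, service A as a whole fails, and the upstream microservices fail in turn, producing an avalanche effect.

The protection threshold exists for this case. When healthy instances / total instances < protection threshold for service A, healthy instances are genuinely scarce and the protection is triggered (state true). Nacos then returns all instances of the service, healthy and unhealthy alike. Consumers may reach unhealthy instances and some requests will fail, but that is better than an avalanche: a few requests are sacrificed to keep the system as a whole available.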
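
The rule can be sketched in a few lines of plain Java. This follows the description above literally (healthy ratio strictly below the threshold triggers the protection) and is an illustration rather than the Nacos implementation; the class ProtectThresholdSketch and the instance names are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Sketch only: decides which instances are returned to a consumer.
class ProtectThresholdSketch {

    // instances maps an instance name to its health flag (true = healthy).
    static List<String> select(Map<String, Boolean> instances, double threshold) {
        List<String> healthy = new ArrayList<>();
        for (Map.Entry<String, Boolean> entry : instances.entrySet()) {
            if (entry.getValue()) {
                healthy.add(entry.getKey());
            }
        }
        double ratio = instances.isEmpty() ? 0.0 : (double) healthy.size() / instances.size();
        if (ratio < threshold) {
            // Protection triggered: return every instance, healthy or not.
            return new ArrayList<>(instances.keySet());
        }
        return healthy;
    }

    public static void main(String[] args) {
        // The example from the text: 100 instances, only 2 of them healthy.
        Map<String, Boolean> serviceA = new LinkedHashMap<>();
        for (int i = 0; i < 100; i++) {
            serviceA.put("instance-" + i, i < 2);
        }
        System.out.println(select(serviceA, 0.5).size()); // ratio 0.02 < 0.5, all instances
        System.out.println(select(serviceA, 0.0).size()); // threshold 0 never triggers
    }
}
```

With a threshold of 0.5 the consumer receives all 100 instances; with a threshold of 0 it receives only the 2 healthy ones.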

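3. Weights (NacosRule)

Spring Cloud 2021.0.0 no longer includes the Netflix components, so Ribbon is not used for load balancing. NacosRule is an extension of Ribbon and is therefore not available either, which means instance weights have no effect in this version.

4. Same-cluster-first access

Has no effect in version 2021.0.1.0.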

VII. Common problems

1. NacosException

Could not find leader

com.alibaba.nacos.api.exception.NacosException: failed to req API:/nacos/v1/ns/instance after all servers([docker-nacos-server:8848]) tried: ErrCode:500, ErrMsg:caused: java.util.concurrent.ExecutionException: com.alibaba.nacos.consistency.exception.ConsistencyException: Could not find leader : naming_persistent_service_v2;caused: com.alibaba.nacos.consistency.exception.ConsistencyException: Could not find leader : naming_persistent_service_v2;caused: Could not find leader : naming_persistent_service_v2;

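Solution:

- Delete the data folder under the Nacos directory and restart.

- If Nacos is installed in Docker, delete the image (together with its container) and start it again.

2. Integrating gateway with Nacos

When a gateway routes requests to services registered in Nacos, the gateway module needs the spring-cloud-starter-loadbalancer dependency.

Reference: https://www.bilibili.com/video/BV1q3411Z79z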