Lesson 5: Shell Script Programming - Using awk

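Table of Contents

- Lesson 5: Shell Script Programming - Using awk

- Section 1: Introduction to awk

- Section 2: awk Built-in Variables

- Section 3: Formatted Output with printf

- Section 4: Pattern Matching in awk

- Section 5: Expressions in awk Actions

- Section 6: Conditionals and Loops in awk Actions

- Section 7: String Functions in awk

- Section 8: Common awk Options

- Section 9: Arrays in awk

- Section 10: Log Processing Examples with awk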

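Section 1: Introduction to awk

- awk is a text-processing tool, typically used to process data and generate reports.

- The name awk is formed from the initials of its creators' surnames: Alfred Aho, Peter Weinberger, and Brian Kernighan.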

- Syntax

- Form 1 (read input from a file):

awk 'BEGIN{}pattern{commands}END{}' file_name

- Form 2 (read the standard output of another command through a pipe):

command | awk 'BEGIN{}pattern{commands}END{}'
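Here is a minimal sketch of how the three parts of the syntax work together. The input is made-up data fed through a pipe, not a real file. `BEGIN` runs once before any input is read. The `pattern{commands}` pair runs for every line the pattern matches. `END` runs once after the last line.

```shell
#!/bin/sh
# BEGIN prints a header once, the pattern $2 > 1 selects lines whose second
# field is greater than 1, and END prints a footer after all input is read.
out=$(printf 'alpha 1\nbeta 2\ngamma 3\n' |
  awk 'BEGIN { print "start" }
       $2 > 1 { print $1 }
       END { print "done" }')
printf '%s\n' "$out"
```

All three parts are optional. A program with only `BEGIN` never reads its input. A program with only a pattern prints the matching lines, because the default action is `{print $0}`.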

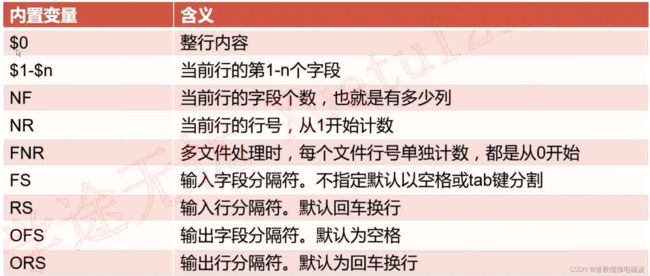

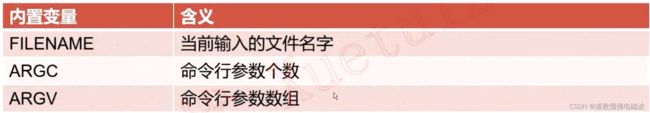

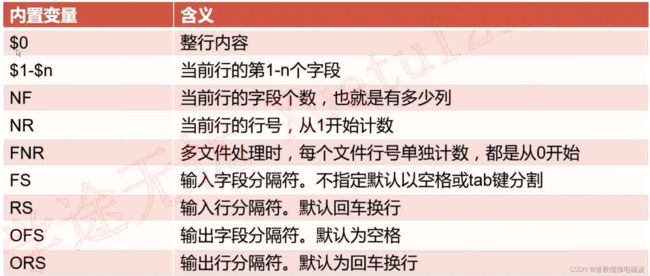

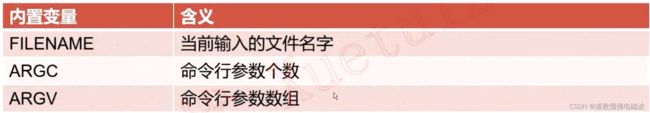

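Section 2: awk Built-in Variables

- Built-in variables:

- $0: the entire current line (record)

- $1 through $n: the 1st through nth fields of the current line

- NF (Number of Fields): the number of fields in the current line

- NR (Number of Records): the current line number, counted cumulatively across all input files

- FNR (File Number of Records): the line number within the current file; it restarts at 1 for each file when several files are processed

- FS (Field Separator): the input field separator; by default fields are split on runs of spaces or tabs

- RS (Record Separator): the input record separator; by default a newline

- OFS (Output Field Separator): the separator print places between comma-separated arguments; by default a single space

- ORS (Output Record Separator): the string print writes after each record; by default a newline

- FILENAME: the name of the file being processed

- ARGC: the number of command-line arguments, counting ARGV[0] (the program name)

- ARGV: the array of command-line arguments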

Sample file `list`:

Hadoop Spark Flume

Java Python scala

Allen Mike Meggie

awk '{print $0}' /etc/passwd

awk 'BEGIN{FS=":"}{print $1}' /etc/passwd

awk '{print $1}' list

awk '{print NF}' list

awk '{print NR}' list /etc/passwd

awk '{print FNR}' list /etc/passwd
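The sketch below shows the difference between NR and FNR without relying on /etc/passwd. It creates two throwaway files in a temporary directory. The names a.txt and b.txt are hypothetical.

```shell
#!/bin/sh
# Two throwaway input files in a temporary directory (hypothetical names).
dir=$(mktemp -d)
printf 'x\ny\n' > "$dir/a.txt"
printf 'z\n' > "$dir/b.txt"
# NR keeps counting across both files; FNR restarts at 1 for each new file.
out=$(awk '{ print NR, FNR }' "$dir/a.txt" "$dir/b.txt")
printf '%s\n' "$out"
rm -r "$dir"
```

The third line prints `3 1`. It is the third record overall but the first record of b.txt.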

`list` modified to use `|` and `:` as separators:

Hadoop|Spark:Flume

Java|Python:scala:go

Allen|Mike:Meggie

awk '{print $1}' list

awk 'BEGIN{FS=":"}{print $1}' list

awk 'BEGIN{FS="|"}{print $1}' list

`list` rewritten as a single line, with `--` separating the records:

Hadoop|Spark:Flume--Java|Python:scala:go--Allen|Mike:Meggie

awk 'BEGIN{RS="--"}{print $0}' list

awk 'BEGIN{RS="--";FS="|"}{print $2}' list

awk 'BEGIN{RS="--";FS="|";ORS="@"}{print $2}' list

awk 'BEGIN{RS="--";FS="|";ORS="@";OFS="*"}{print $1,$2}' list
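Here is a self-contained sketch of the four separator variables working together, using made-up inline data. POSIX leaves the result unspecified when RS holds more than one character, as `"--"` does above. gawk and mawk treat such a value as a regular expression. This sketch therefore uses the single character `;` to stay portable.

```shell
#!/bin/sh
# Input: records separated by ";" (RS), fields separated by "|" (FS).
# Output: fields joined with "*" (OFS), each record followed by "@" (ORS).
out=$(printf 'a|b;c|d;e|f' |
  awk 'BEGIN { RS = ";"; FS = "|"; OFS = "*"; ORS = "@" } { print $1, $2 }')
printf '%s\n' "$out"
```

OFS is only inserted where `print` receives comma-separated arguments. `print $1 $2` concatenates the two fields with no separator.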

awk '{print FILENAME}' list

awk '{print ARGC}' list

awk '{print ARGC}' list /etc/passwd

awk 'BEGIN{FS=":"}{print $NF}' /etc/passwd
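This sketch lists ARGV explicitly, which explains why `awk '{print ARGC}' list /etc/passwd` prints 3. It also shows that `$NF` is the last field, using a made-up passwd-style line.

```shell
#!/bin/sh
# ARGV[0] holds the program name; ARGV[1] .. ARGV[ARGC-1] hold the operands.
# The program has only a BEGIN block, so awk never opens the named files.
args=$(awk 'BEGIN { for (i = 1; i < ARGC; i++) print i, ARGV[i]; print "ARGC=" ARGC }' list /etc/passwd)
# NF holds the number of fields, so $NF is the last field.
last=$(echo 'root:x:0:0:root:/root:/bin/bash' | awk 'BEGIN{FS=":"} { print $NF, NF }')
printf '%s\n' "$args" "$last"
```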

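Section 3: Formatted Output with printf

- Format specifiers

- %s: print a string

- %d: print a decimal integer

- %f: print a floating-point number

- %x: print a hexadecimal number

- %o: print an octal number

- %e: print a number in scientific notation

- %c: print a single character (a numeric argument is printed as the character with that ASCII code)

- Modifiers

- `-`: left-justify within the field width (output is right-justified by default)

- `+`: always print the sign of a number

- `#`: prefix octal output with 0 and hexadecimal output with 0x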

awk 'BEGIN{FS=":"}{print $1}' /etc/passwd

awk 'BEGIN{FS=":"}{printf $1}' /etc/passwd

awk 'BEGIN{FS=":"}{printf "%s\n", $1}' /etc/passwd

awk 'BEGIN{FS=":"}{printf "%s %s\n", $1, $2}' /etc/passwd

awk 'BEGIN{FS=":"}{printf "%20s %20s\n", $1, $2}' /etc/passwd

awk 'BEGIN{FS=":"}{printf "%-20s %-20s\n", $1, $2}' /etc/passwd
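The specifiers and modifiers listed above can be checked on literal values, without reading /etc/passwd. This is a sketch, and the brackets around each result only make the padding visible.

```shell
#!/bin/sh
# Each printf exercises one specifier or modifier; brackets make padding visible.
out=$(awk 'BEGIN {
  printf "[%s]\n", "awk"                  # string
  printf "[%d]\n", 42                     # decimal integer
  printf "[%.2f]\n", 3.14159              # floating point, 2 decimal places
  printf "[%x] [%#x]\n", 255, 255         # hexadecimal, without and with 0x
  printf "[%o] [%#o]\n", 8, 8             # octal, without and with a leading 0
  printf "[%e]\n", 12345.678              # scientific notation
  printf "[%c]\n", 65                     # number 65 printed as the character A
  printf "[%5s] [%-5s]\n", "ab", "ab"     # right- vs left-justified, width 5
  printf "[%+d]\n", 7                     # + always prints the sign
}')
printf '%s\n' "$out"
```

Unlike `print`, `printf` never appends a newline on its own. This is why `{printf $1}` above runs all the user names together on one line.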

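Section 4: Pattern Matching in awk

- Two kinds of pattern matching

- Type 1: regular expressions (RegExp)

- Type 2: relational-operator matching

- RegExp

- Match all lines in /etc/passwd that contain the string root

- Match all lines in /etc/passwd that start with yarn

- Relational operators:

- `<` less than
- `>` greater than
- `<=` less than or equal to
- `>=` greater than or equal to
- `==` equal to
- `!=` not equal to
- `~` matches a regular expression
- `!~` does not match a regular expression

- Boolean operators:

- `||` logical OR
- `&&` logical AND
- `!` logical NOT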

awk 'BEGIN{FS=":"}/root/{print $0}' /etc/passwd

awk 'BEGIN{FS=":"}/^yarn/{print $0}' /etc/passwd

awk 'BEGIN{FS=":"}$3<50{print $0}' /etc/passwd

awk 'BEGIN{FS=":"}$3>5{print $0}' /etc/passwd

awk 'BEGIN{FS=":"}$7=="/bin/bash"{print $0}' /etc/passwd

awk 'BEGIN{FS=":"}$7!="/bin/bash"{print $0}' /etc/passwd

awk 'BEGIN{FS=":"}$3~/[0-9]{3,}/{print $0}' /etc/passwd

awk 'BEGIN{FS=":"}$1~/^root/{print $0}' /etc/passwd

awk 'BEGIN{FS=":"}$1=="hdfs" || $1=="yarn"{print $0}' /etc/passwd

awk 'BEGIN{FS=":"}$3<50 && $4>50 {print $0}' /etc/passwd
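The following sketch applies each kind of pattern to three made-up passwd-style lines. It uses inline data because the users hdfs and yarn may not exist on every system.

```shell
#!/bin/sh
# Made-up passwd-style lines (name:password:UID:GID:comment:home:shell).
data='root:x:0:0:root:/root:/bin/bash
daemon:x:2:2:daemon:/sbin:/sbin/nologin
alice:x:1000:1000::/home/alice:/bin/bash'
# Regular-expression pattern: any line containing "root".
regex=$(printf '%s\n' "$data" | awk 'BEGIN{FS=":"} /root/ { print $1 }')
# Relational pattern on a field: UID (field 3) below 50.
low=$(printf '%s\n' "$data" | awk 'BEGIN{FS=":"} $3 < 50 { print $1 }')
# Field match (~) combined with && (both conditions must hold).
combo=$(printf '%s\n' "$data" | awk 'BEGIN{FS=":"} $7 ~ /bash$/ && $3 >= 1000 { print $1 }')
# ! negates a pattern: lines that do not contain "nologin".
login=$(printf '%s\n' "$data" | awk 'BEGIN{FS=":"} !/nologin/ { print $1 }')
printf '%s\n' "$regex" "$low" "$combo" "$login"
```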

Section 5: Expressions in awk Actions

awk 'BEGIN{var=20;var1="hello";print var,var1}'

awk 'BEGIN{num1=20;num2+=num1;print num1,num2}'

awk 'BEGIN{num1=20;num2=30;print num1/num2}'

awk 'BEGIN{num1=20;num2=30;printf "%0.2f\n", num1/num2}'

awk 'BEGIN{num1=20;num2=3;printf "%0.2f\n", num1**num2}'

awk 'BEGIN{num1=20;num2=3;printf "%0.2f\n", num1^num2}'

awk 'BEGIN{x=20;y=x++;print x, y}'

awk 'BEGIN{x=20;y=++x;print x, y}'

awk '/^$/{sum++}END{print sum}' /etc/services
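This sketch collects the expression rules shown above in one runnable block. It covers post- versus pre-increment, floating-point division, exponentiation, and the fact that an unset variable acts as 0 (which is why `num2+=num1` works without initializing num2). `^` is the POSIX exponent operator. The `**` form is an extension that gawk accepts, but portable scripts should not rely on it.

```shell
#!/bin/sh
out=$(awk 'BEGIN {
  x = 20; y = x++; print x, y     # post-increment: y receives the old value
  x = 20; y = ++x; print x, y     # pre-increment: y receives the new value
  printf "%.2f\n", 20 / 30        # division is always floating point
  print 2 ^ 10                    # ^ is the POSIX exponent operator
  print total + 5                 # an unset variable acts as 0
}')
printf '%s\n' "$out"
```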

Section 6: Conditionals and Loops in awk Actions

awk 'BEGIN{FS=":"}{if($3>50 && $3 < 100) print $0}' /etc/passwd

awk 'BEGIN{FS=":"}{if($3<50) printf "%-15s%-5d\n", "UID below 50", $3}' /etc/passwd


awk 'BEGIN{FS=":"}{if($3<50) {printf "%-15s%-10s%-5d\n", "UID below 50", $1, $3} else if ($3 > 100) {printf "%-15s%-10s%-5d\n", "UID above 100", $1, $3}}' /etc/passwd

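Contents of script.awk (UIDs of exactly 50 or 100 fall in the middle branch, not the "above 100" branch):

    BEGIN{
        FS=":"
    }
    {
        if($3<50){
            printf "%-20s%-10s%-5d\n", "UID below 50", $1, $3
        }else if ($3>=50 && $3<=100){
            printf "%-20s%-10s%-5d\n", "UID from 50 to 100", $1, $3
        }else{
            printf "%-20s%-10s%-5d\n", "UID above 100", $1, $3
        }
    }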

awk -f script.awk /etc/passwd
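This sketch runs the same if / else if / else chain on a few made-up UIDs, including the boundary values 50 and 100, so every branch can be checked directly.

```shell
#!/bin/sh
# Made-up UIDs, including the boundary values 50 and 100.
out=$(printf '10\n50\n75\n100\n500\n' | awk '{
  if ($1 < 50)
    print $1, "low"
  else if ($1 <= 100)
    print $1, "mid"      # 50 through 100 inclusive
  else
    print $1, "high"
}')
printf '%s\n' "$out"
```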

Contents of while.awk (i starts out unset, which awk treats as 0):

    BEGIN{
        while(i<=100)
        {
            sum+=i
            i++
        }
        print sum
    }

awk -f while.awk

Contents of for.awk (the same sum written as a for loop):

    BEGIN{
        for(i=0;i<=100;i++)
        {
            sum+=i
        }
        print sum
    }

awk -f for.awk

Contents of dowhile.awk (the body runs once before the condition is first tested):

    BEGIN{
        do
        {
            sum+=i
            i++
        }while(i<=100)
        print sum
    }

awk -f dowhile.awk
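The three loop scripts can be compared in a single sketch. Each loop adds 1 through 100. The final do-while shows that the body runs once even when the condition is false from the start.

```shell
#!/bin/sh
out=$(awk 'BEGIN {
  i = 1; while (i <= 100) { s1 += i; i++ }
  for (i = 1; i <= 100; i++) s2 += i
  i = 1; do { s3 += i; i++ } while (i <= 100)
  print s1, s2, s3
  j = 5; do { n++ } while (j < 0)   # condition is false, yet the body ran once
  print n
}')
printf '%s\n' "$out"
```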

Sample score data (each line: name, then Chinese, English, Math, and Physics scores):

Allen 80 90 96 98

Mike 93 98 92 91

Zhang 78 76 87 92

Jerry 86 89 68 92

Han 85 95 75 90

Li 78 88 98 100

Script that prints the students whose average score is above 90 and, in the END block, the column totals for those students:

    BEGIN{
        printf "%-10s%-10s%-10s%-10s%-10s%-10s\n", "Name", "Chinese", "English", "Math", "Physical", "Average"
    }
    {
        total=$2+$3+$4+$5
        avg=total/4
        if(avg>90){
            printf "%-10s%-10d%-10d%-10d%-10d%-0.2f\n", $1, $2, $3, $4, $5, avg
            score_chinese+=$2
            score_english+=$3
            score_math+=$4
            score_physical+=$5
            score_avg+=avg
        }
    }
    END{
        printf "%-10s%-10s%-10s%-10s%-10s%-10s\n", "ALL", score_chinese, score_english, score_math, score_physical, score_avg
    }

Output:

Name Chinese English Math Physical Average

Allen 80 90 96 98 91.00

Mike 93 98 92 91 93.50

Li 78 88 98 100 91.00

ALL 251 276 286 289 275.5

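Section 7: String Functions in awk

- String functions in awk

- length(str): return the length of str
- index(str1, str2): return the position of str2 within str1 (0 if it is not found)
- tolower(str): convert to lowercase
- toupper(str): convert to uppercase
- split(str, arr, fs): split str on fs and store the pieces in the array arr, returning the number of pieces; fs defaults to FS (whitespace unless changed)
- match(str, RE): return the position of the first substring that matches the regular expression RE (0 if none); it also sets RSTART and RLENGTH
- substr(str, m, n): return the substring of str that starts at character m and is n characters long; if n is omitted, the substring runs to the end of str
- sub(RE, Repstr, str): replace the first substring matching RE with Repstr and return the number of replacements; str defaults to $0
- gsub(RE, Repstr, str): replace every substring matching RE with Repstr and return the number of replacements; str defaults to $0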

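Contents of example1.awk, which prints the length of every field of each line, separated by colons:

    BEGIN{
        FS=":"
    }
    {
        i=1
        while (i<=NF) {
            if (i==NF)
                printf "%d", length($i)
            else
                printf "%d:", length($i)
            i++
        }
        print ""
    }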

awk -f example1.awk /etc/passwd

awk 'BEGIN{str="I have a dream";location=index(str,"ea");print location}'

awk 'BEGIN{str="I have a dream";location=match(str,"ea");print location}'

awk 'BEGIN{str="Hadoop is a bigdata Framework";print tolower(str)}'

awk 'BEGIN{str="Hadoop is a bigdata Framework";print toupper(str)}'

awk 'BEGIN{str="Hadoop Kafka Spark Storm HDFS YARN Zookeeper";split(str, array, " "); for(a in array) print array[a]}'

awk 'BEGIN{str="Transaction 2345 Start:Select * from master";location=match(str,/[0-9]/);print location}'

awk 'BEGIN{str="transaction start";print substr(str, 4, 5)}'

awk 'BEGIN{str="Transaction 243 start,Event ID:9002";print sub(/[0-9]+/, "$", str);print str}'
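The sketch below runs each string function on literal values, so every return value can be checked by hand. In "I have a dream", the substring "ea" starts at character 12. Both index() and match() report that position.

```shell
#!/bin/sh
out=$(awk 'BEGIN {
  s = "I have a dream"
  print length(s)                  # number of characters
  print index(s, "ea")             # position of the first "ea"
  p = match(s, /ea/)               # match also sets RSTART and RLENGTH
  print p, RSTART, RLENGTH
  print substr(s, 3, 4)            # 4 characters starting at position 3
  print substr(s, 10)              # no length given: runs to the end
  t = "a1b22c333"
  n = sub(/[0-9]+/, "#", t)        # replaces only the first run of digits
  print n, t
  n = gsub(/[0-9]+/, "#", t)       # replaces every remaining run of digits
  print n, t
  n = split("x:y:z", parts, ":")   # returns the number of pieces
  print n, parts[1], parts[3]
  print toupper("awk"), tolower("AWK")
}')
printf '%s\n' "$out"
```

sub() and gsub() modify their third argument in place. For that reason it must be a variable, array element, or field, not a literal string.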

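Section 8: Common awk Options

- Common awk options

- -v: define a variable, for example to pass in the value of a shell variable
- -f: read the awk program from a file
- -F: specify the field separator
- -V: print the awk version (in gawk, which also accepts --version)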

num1=20

var1="qnhyn"

awk -v num1="$num1" -v var1="$var1" 'BEGIN{print num1, var1}'
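Here is a sketch that combines `-F`, `-v`, and `-f` on made-up input. A temporary file stands in for a program file such as script.awk.

```shell
#!/bin/sh
limit=50
# -F: sets FS to ":"; -v copies the shell value of $limit into the awk
# variable limit before the program starts. Adding 0 forces a numeric comparison.
out=$(printf 'root:0\nalice:1000\n' | awk -F: -v limit="$limit" '$2 < limit + 0 { print $1 }')
# -f reads the program from a file; a temporary file stands in for script.awk.
prog=$(mktemp)
printf '%s\n' '{ print NR ": " $0 }' > "$prog"
numbered=$(printf 'a\nb\n' | awk -f "$prog")
rm -f "$prog"
printf '%s\n' "$out" "$numbered"
```

`-v` is safer than splicing `$limit` into the quoted program text. The value arrives as data and is never parsed as awk code.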

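Section 9: Arrays in awk

- Arrays in the shell (bash):

array=("Allen" "Mike" "Messi" "Jerry" "Hanmeimei" "wang")   # define an array

echo ${array[@]}        # print all elements

echo ${array[2]}        # print the element at index 2 (indexes start at 0)

echo ${#array[@]}       # number of elements

echo ${#array[*]}       # number of elements (same as above)

echo ${#array[3]}       # length of the string stored at index 3

array[3]="Li"           # assign to index 3

unset array[2]          # delete the element at index 2

unset array             # delete the whole array

echo ${array[@]:1:3}    # slice: 3 elements starting at index 1

# Replace text inside the elements:

echo ${array[@]/e/E}    # replace the first "e" in each element

echo ${array[@]//e/E}   # replace every "e" in each element

for a in ${array[@]}    # loop over the elements

do

echo $a

done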

- Arrays in awk:

- In awk, array subscripts can be the numbers 1, 2, …, n, and they can also be strings (awk arrays are associative).

str="Allen Jerry Mike Tracy Jordan Kobe Garnet"

split(str,array)

for(i=1;i<=length(array);i++)

print array[i]

awk 'BEGIN{str="Allen Jerry Mike Tracy Jordan Kobe Garnet";split(str,array);for(i=1;i<=length(array);i++) print array[i]}'

netstat -an | grep tcp | awk '{arr[$6]++}END{for (i in arr) print i,arr[i]}'

array["var1"]="Jin"

array["var2"]="Hao"

array["var3"]="Fang"

for(a in array)

print array[a]

awk 'BEGIN{array["var1"]="Jin";array["var2"]="Hao";array["var3"]="Fang";for(a in array) print array[a]}'
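The sketch below reproduces the counting pattern of the netstat example above with made-up connection states, so it runs on any machine. `for (k in arr)` visits keys in an unspecified order, so the output is piped through `sort` to make it stable. It also shows the `in` membership test and `delete`.

```shell
#!/bin/sh
# Count how often each value occurs (made-up connection states as input).
counts=$(printf 'ESTABLISHED\nLISTEN\nESTABLISHED\nTIME_WAIT\nESTABLISHED\n' |
  awk '{ count[$1]++ } END { for (state in count) print state, count[state] }' | sort)
# "key in array" tests membership without creating the element; delete removes it.
member=$(awk 'BEGIN {
  a["x"] = 1
  if ("x" in a) print "x present"
  delete a["x"]
  if (!("x" in a)) print "x removed"
}')
printf '%s\n' "$counts" "$member"
```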

Section 10: Log Processing Examples with awk

- Sample data file db.log

2019-06-08 10:31:40 15459 Batches: user Jerry insert 5504 records into database:product table:detail, insert 5253 records successfully,failed 251 records

2019-06-08 10:31:40 15460 Batches: user Tracy insert 25114 records into database:product table:detail, insert 13340 records successfully,failed 11774 records

2019-06-08 10:31:40 15461 Batches: user Hanmeimei insert 13840 records into database:product table:detail, insert 5108 records successfully,failed 8732 records

2019-06-08 10:31:40 15462 Batches: user Lilei insert 32691 records into database:product table:detail, insert 5780 records successfully,failed 26911 records

2019-06-08 10:31:40 15463 Batches: user Allen insert 25902 records into database:product table:detail, insert 14027 records successfully,failed 11875 records

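- Processing the data file (with the default whitespace FS, $6 is the user name, $8 the records in the batch, $14 the successful records, and $17 the failed records)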

Total records inserted per user:

    BEGIN{
        printf "%-20s%-20s\n","User","Total records"
    }
    {
        USER[$6]+=$8
    }
    END{
        for(u in USER)
            printf "%-20s%-20d\n",u,USER[u]
    }

Successful and failed records per user:

    BEGIN{
        printf "%-30s%-30s%-30s\n","User","Success records","Failed records"
    }
    {
        SUCCESS[$6]+=$14
        FAILED[$6]+=$17
    }
    END{
        for(u in SUCCESS)
            printf "%-30s%-30d%-30d\n",u,SUCCESS[u],FAILED[u]
    }

Total, successful, and failed records per user:

    BEGIN{
        printf "%-30s%-30s%-30s%-30s\n","Name","total records","success records","failed records"
    }
    {
        TOTAL_RECORDS[$6]+=$8
        SUCCESS[$6]+=$14
        FAILED[$6]+=$17
    }
    END{
        for(u in TOTAL_RECORDS)
            printf "%-30s%-30d%-30d%-30d\n",u,TOTAL_RECORDS[u],SUCCESS[u],FAILED[u]
    }

The same report followed by a totals row:

    BEGIN{
        printf "%-30s%-30s%-30s%-30s\n","Name","total records","success records","failed records"
    }
    {
        TOTAL_RECORDS[$6]+=$8
        SUCCESS[$6]+=$14
        FAILED[$6]+=$17
    }
    END{
        for(u in TOTAL_RECORDS)
        {
            records_sum+=TOTAL_RECORDS[u]
            success_sum+=SUCCESS[u]
            failed_sum+=FAILED[u]
            printf "%-30s%-30d%-30d%-30d\n",u,TOTAL_RECORDS[u],SUCCESS[u],FAILED[u]
        }
        printf "%-30s%-30d%-30d%-30d\n","",records_sum,success_sum,failed_sum
    }

Print every line, prefixed with its line number, whose total does not equal successful plus failed records:

    {
        if($8!=$14+$17)
            print NR,$0
    }

awk -f exam.awk db.log
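The sketch below combines the per-user report and the consistency check on a small log. It is written to a temporary file. The first two lines are the Jerry and Tracy entries from db.log. The third line (user Demo, batch 15464) is a hypothetical entry, invented so that the check has something to report. Its 90 successful plus 5 failed records do not add up to 100.

```shell
#!/bin/sh
log=$(mktemp)
# Lines 1-2 come from db.log; line 3 (user Demo) is hypothetical test data.
cat > "$log" <<'EOF'
2019-06-08 10:31:40 15459 Batches: user Jerry insert 5504 records into database:product table:detail, insert 5253 records successfully,failed 251 records
2019-06-08 10:31:40 15460 Batches: user Tracy insert 25114 records into database:product table:detail, insert 13340 records successfully,failed 11774 records
2019-06-08 10:31:40 15464 Batches: user Demo insert 100 records into database:product table:detail, insert 90 records successfully,failed 5 records
EOF
# Per-user totals; sort fixes the otherwise unspecified "for (u in t)" order.
totals=$(awk '{ t[$6] += $8; s[$6] += $14; f[$6] += $17 }
  END { for (u in t) print u, t[u], s[u], f[u] }' "$log" | sort)
# Line number and user of every line whose total != success + failed.
bad=$(awk '$8 != $14 + $17 { print NR, $6 }' "$log")
rm -f "$log"
printf '%s\n' "$totals" "$bad"
```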