Deploying a k8s Cluster with kubeadm

Environment Preparation

This guide uses VMware virtual machines running CentOS 8.

Node Information

| Server | IP address |
| --- | --- |
| master | 192.168.31.80 |
| node1 | 192.168.31.8 |
| node2 | 192.168.31.9 |

Switch the Package Mirror

sudo sed -i 's/mirrorlist/#mirrorlist/g' /etc/yum.repos.d/CentOS-*

sudo sed -i 's|#baseurl=http://mirror.centos.org|baseurl=http://vault.centos.org|g' /etc/yum.repos.d/CentOS-*

Change the Locale

dnf install glibc-langpack-zh.x86_64

echo LANG=zh_CN.UTF-8 > /etc/locale.conf

source /etc/locale.conf

Change the Time Zone

timedatectl list-timezones

timedatectl set-timezone Asia/Shanghai

timedatectl

Disable the Firewall

systemctl disable --now firewalld

systemctl stop iptables

iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

Disable SELinux

Disable temporarily

setenforce 0

Disable permanently

# Edit /etc/selinux/config and set SELINUX=disabled

vim /etc/selinux/config

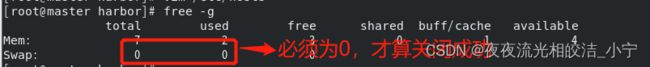
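The same change can be scripted rather than made by hand in vim. The following is a minimal sketch, assuming GNU sed; the function name `disable_selinux_in` is invented for illustration, and the config path is passed as an argument so the edit can be tried on a copy first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set SELINUX=disabled in the given SELinux config file.
# Only the uncommented SELINUX= line is rewritten; SELINUXTYPE= is left alone.
disable_selinux_in() {
    local config_file
    config_file="$1"
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$config_file"
}

# Example (as root): disable_selinux_in /etc/selinux/config
```

The permanent setting applies from the next boot; `getenforce` shows the mode currently in effect.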

Disable Swap

swapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab

Set the Hostname

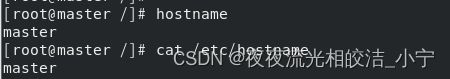

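# Show the current hostname

hostname

# Set the hostname: use master on the master node, node1 on node1, and node2 on node2

hostnamectl set-hostname master

Update Local Hostname Resolution

# Edit the hosts file and add the IPs of master, node1, and node2; do this on all three nodes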

vim /etc/hosts
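For reference, the entries can also be appended from a script. This is only a sketch: the function names `add_host_entry` and `add_all_nodes` are made up here, the addresses come from the node table at the top of this guide, and the file path is a parameter so the helper can be tested on a scratch file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME already
# appears in it as a whole field.
add_host_entry() {
    local hosts_file
    local ip
    local name
    hosts_file="$1"
    ip="$2"
    name="$3"
    if grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$hosts_file"; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}

# Adds the three nodes used in this guide; safe to run more than once.
add_all_nodes() {
    local hosts_file
    hosts_file="$1"
    add_host_entry "$hosts_file" 192.168.31.80 master
    add_host_entry "$hosts_file" 192.168.31.8 node1
    add_host_entry "$hosts_file" 192.168.31.9 node2
}

# Example (as root, on every node): add_all_nodes /etc/hosts
```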

Tune Kernel Parameters

cd /etc/sysctl.d/

# Write the kubernetes.conf file

cat > kubernetes.conf << EOF
# Enable bridge mode so that bridged traffic is passed to the iptables chains
net.bridge.bridge-nf-call-ip6tables=1
net.bridge.bridge-nf-call-iptables=1
# Disable IPv6
net.ipv6.conf.all.disable_ipv6=1
net.ipv4.ip_forward=1
EOF
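One thing to watch for: the `net.bridge.*` keys only exist while the `br_netfilter` kernel module is loaded, so on a fresh system they may be reported as missing. A sketch of loading the module at every boot follows, assuming the standard systemd `modules-load.d` mechanism; the function name and the file name `k8s.conf` are arbitrary choices made for this example.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a modules-load.d file so br_netfilter is loaded at boot.
# The directory is a parameter so the function can be tried outside /etc.
write_k8s_modules_conf() {
    local modules_dir
    modules_dir="$1"
    mkdir -p "$modules_dir"
    printf '%s\n' br_netfilter > "$modules_dir/k8s.conf"
}

# Example (as root):
#   write_k8s_modules_conf /etc/modules-load.d
#   modprobe br_netfilter    # load it immediately, without rebooting
```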

# Load the parameters

sysctl --system

Install Docker

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install -y docker-ce docker-ce-cli containerd.io

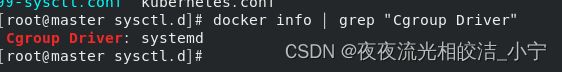

Configure Docker

cd /etc/docker/

cat > daemon.json << EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "registry-mirrors": [
    "https://ot2k4d59.mirror.aliyuncs.com/"
  ]
}
EOF

systemctl daemon-reload

systemctl restart docker.service

systemctl enable docker.service

docker info | grep "Cgroup Driver"

Docker is now installed.

Configure the k8s Repository

cd /etc/yum.repos.d/

cat > kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

Install kubeadm, kubelet, and kubectl

yum install -y kubelet-1.21.3 kubeadm-1.21.3 kubectl-1.21.3

Enable kubelet at Boot

systemctl enable kubelet.service

systemctl start kubelet

kubeadm, kubelet, and kubectl are now installed. Every step up to this point must be run on master, node1, and node2.

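Deploy the Master Node

Initialize with kubeadm

# --apiserver-advertise-address: the address the master listens on; change it to your own master node's IP

# --image-repository: pull images from the Aliyun registry

# --kubernetes-version: the k8s version to deploy

# --service-cidr: the cluster-internal Service network

# --pod-network-cidr: the Pod network

# Keep these service-cidr and pod-network-cidr values if possible; otherwise kube-flannel.yml has to be edited when kube-flannel is installed later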

kubeadm init --apiserver-advertise-address=192.168.31.80 --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.21.14 --service-cidr=10.96.0.0/12 --pod-network-cidr=10.244.0.0/16
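Because the advertise address is the value most likely to differ between environments, it can help to assemble the command from variables and review it before running it. The helper below is a sketch only: `kubeadm_init_command` is a hypothetical name, its defaults simply repeat the values used in this guide, and it prints the command rather than executing it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the kubeadm init command for a given master IP.
# The defaults mirror the values used in this guide; pass arguments to override.
kubeadm_init_command() {
    local master_ip
    local k8s_version
    local service_cidr
    local pod_cidr
    master_ip="$1"
    k8s_version="${2:-v1.21.14}"
    service_cidr="${3:-10.96.0.0/12}"
    pod_cidr="${4:-10.244.0.0/16}"
    printf 'kubeadm init --apiserver-advertise-address=%s --image-repository registry.aliyuncs.com/google_containers --kubernetes-version %s --service-cidr=%s --pod-network-cidr=%s\n' \
        "$master_ip" "$k8s_version" "$service_cidr" "$pod_cidr"
}

# Print the command for the master address from the node table.
kubeadm_init_command 192.168.31.80
```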

# Go to the Kubernetes static Pod manifest directory

cd /etc/kubernetes/manifests

# Comment out the --port=0 line (around line 26)

vim kube-controller-manager.yaml

# Comment out the --port=0 line (around line 19)

vim kube-scheduler.yaml
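The two manual edits above could also be made with sed. This is a sketch under the assumption that the flag appears on its own line as `- --port=0`, as it does in a list of container arguments; `comment_out_port_zero` is a name invented for this example.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out the "- --port=0" argument in a static Pod manifest.
# Indentation is preserved so the YAML stays valid; lines that are already
# commented out do not match and are left untouched.
comment_out_port_zero() {
    local manifest
    manifest="$1"
    sed -i -E 's/^([[:space:]]*)(- --port=0)[[:space:]]*$/\1# \2/' "$manifest"
}

# Example (as root, on the master node):
#   comment_out_port_zero /etc/kubernetes/manifests/kube-controller-manager.yaml
#   comment_out_port_zero /etc/kubernetes/manifests/kube-scheduler.yaml
```

kubelet watches the manifest directory and recreates the static Pods when these files change, so no manual restart should be needed.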

# Label the worker nodes (run this once kubectl is configured and node1 and node2 have joined the cluster)

kubectl label node node1 node-role.kubernetes.io/node=node1

kubectl label node node2 node-role.kubernetes.io/node=node2

Set Up the kubectl Management Tool

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Create a Token

# node1 and node2 need this to join the master node

kubeadm token create --print-join-command

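Join the Worker Nodes to the Cluster

# Paste the join command generated in the previous step and run it on node1 and node2; your own output will contain your master's address, token, and hash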

kubeadm join 192.168.48.130:6443 --token jgijaq.wpzj5oco3j03u1nb --discovery-token-ca-cert-hash sha256:c7c9a9e411fecb16807ea6bace2ce4a22828f2505167049ab20000c1cb5360b4

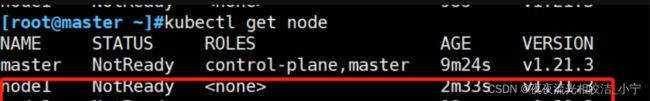

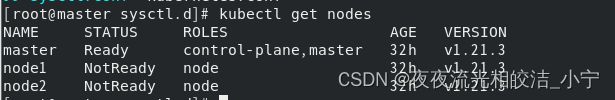
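When the join command is copied by hand between machines, a quick format check can catch a truncated token or hash before `kubeadm join` is run. The helpers below are a sketch with made-up names; they only check the shape of the values (a bootstrap token is 6 characters, a dot, then 16 characters, and the CA hash is `sha256:` followed by 64 hex digits), not whether the cluster will accept them.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: sanity-check the pieces of a kubeadm join command.

# Bootstrap tokens look like <6 chars>.<16 chars>, lowercase letters and digits only.
is_valid_bootstrap_token() {
    printf '%s\n' "$1" | grep -Eqx '[a-z0-9]{6}\.[a-z0-9]{16}'
}

# The CA cert hash is "sha256:" followed by 64 lowercase hexadecimal digits.
is_valid_ca_cert_hash() {
    printf '%s\n' "$1" | grep -Eqx 'sha256:[0-9a-f]{64}'
}

# Example using the values from the join command above.
if is_valid_bootstrap_token "jgijaq.wpzj5oco3j03u1nb" &&
   is_valid_ca_cert_hash "sha256:c7c9a9e411fecb16807ea6bace2ce4a22828f2505167049ab20000c1cb5360b4"; then
    echo "join parameters look well-formed"
fi
```

Tokens expire after 24 hours by default, so if a well-formed join command is rejected, generating a fresh one with `kubeadm token create --print-join-command` is a reasonable first step.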

# List the cluster nodes

kubectl get nodes

All nodes report NotReady because no network plugin has been installed yet. The next step is to install the kube-flannel plugin.

Install the kube-flannel Plugin (needed on all nodes)

# Create kube-flannel.yml

touch kube-flannel.yml

# Then copy the contents of kube-flannel.yml from GitHub into the local kube-flannel.yml file: https://github.com/flannel-io/flannel/blob/master/Documentation/kube-flannel.yml

vim kube-flannel.yml
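As an alternative to copying and pasting, the file can be downloaded directly. GitHub serves the raw contents of a file at `raw.githubusercontent.com`; the helper below (a hypothetical name, shown as a sketch) converts the page URL given above into that form so it can be handed to curl.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn a GitHub "blob" page URL into its raw-content URL.
github_raw_url() {
    printf '%s\n' "$1" |
        sed -E 's#^https://github\.com/([^/]+)/([^/]+)/blob/#https://raw.githubusercontent.com/\1/\2/#'
}

# Example: download the manifest into the current directory.
#   curl -fsSLo kube-flannel.yml "$(github_raw_url https://github.com/flannel-io/flannel/blob/master/Documentation/kube-flannel.yml)"
```

Note that the `master` branch changes over time, so a freshly downloaded file may differ from the copy reproduced in this guide.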

# Contents of kube-flannel.yml; it can be used as-is, without modification

---
kind: Namespace
apiVersion: v1
metadata:
  name: kube-flannel
  labels:
    k8s-app: flannel
    pod-security.kubernetes.io/enforce: privileged
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: flannel
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
- apiGroups:
  - networking.k8s.io
  resources:
  - clustercidrs
  verbs:
  - list
  - watch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: flannel
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-flannel
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: flannel
  name: flannel
  namespace: kube-flannel
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-flannel
  labels:
    tier: node
    k8s-app: flannel
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
    k8s-app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni-plugin
        image: docker.io/flannel/flannel-cni-plugin:v1.1.2
        #image: docker.io/rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.2
        command:
        - cp
        args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        volumeMounts:
        - name: cni-plugin
          mountPath: /opt/cni/bin
      - name: install-cni
        image: docker.io/flannel/flannel:v0.22.0
        #image: docker.io/rancher/mirrored-flannelcni-flannel:v0.22.0
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: docker.io/flannel/flannel:v0.22.0
        #image: docker.io/rancher/mirrored-flannelcni-flannel:v0.22.0
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
        - name: xtables-lock
          mountPath: /run/xtables.lock
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni-plugin
        hostPath:
          path: /opt/cni/bin
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
      - name: xtables-lock
        hostPath:
          path: /run/xtables.lock
          type: FileOrCreate

# Apply the manifest

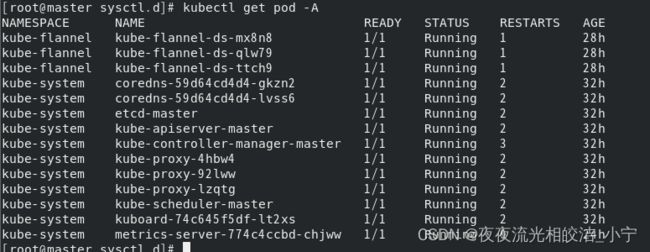
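Since the manifest only works unmodified when its `Network` value equals the `--pod-network-cidr` passed to `kubeadm init`, a small check before applying may save some debugging. This is a sketch with hypothetical function names; it simply greps the first `"Network"` entry out of the file rather than parsing the YAML.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the "Network" value from net-conf.json in a flannel manifest.
flannel_network() {
    local manifest
    manifest="$1"
    grep -oE '"Network":[[:space:]]*"[^"]+"' "$manifest" | head -n 1 | cut -d'"' -f4
}

# Hypothetical check: succeed only if the manifest matches the expected Pod CIDR.
flannel_matches_pod_cidr() {
    local manifest
    local pod_cidr
    manifest="$1"
    pod_cidr="$2"
    [ "$(flannel_network "$manifest")" = "$pod_cidr" ]
}

# Example:
#   flannel_matches_pod_cidr kube-flannel.yml 10.244.0.0/16 && echo "CIDR matches"
```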

kubectl apply -f kube-flannel.yml

Verify the Services Are Running

# List the nodes

kubectl get nodes

# List the pods in all namespaces

kubectl get pod -A

# Check the health of the cluster components

kubectl get cs
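It can take a little while after the network plugin is installed for every node to become Ready. The filter below is a sketch (the name `not_ready_nodes` is invented here) that reads the output of `kubectl get nodes` and prints only the nodes that still need attention, assuming the default column layout with NAME first and STATUS second.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get nodes` output on stdin and print the
# names of nodes whose STATUS column is not exactly "Ready".
# Note: a cordoned node ("Ready,SchedulingDisabled") is also printed.
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Example: kubectl get nodes | not_ready_nodes
```

To block until all nodes are ready instead of polling by hand, `kubectl wait --for=condition=Ready nodes --all --timeout=300s` is another option.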

The k8s cluster is now deployed. The remaining step is to deploy the Kuboard UI.

Install the Kuboard UI

# This guide uses the online installation method

kubectl apply -f https://kuboard.cn/install-script/kuboard.yaml

kubectl apply -f https://addons.kuboard.cn/metrics-server/0.3.6/metrics-server.yaml

# Check that Kuboard is running

kubectl get pods -l k8s.kuboard.cn/name=kuboard -n kube-system

Get the Administrator Token

echo $(kubectl -n kube-system get secret $(kubectl -n kube-system get secret | grep kuboard-user | awk '{print $1}') -o go-template='{{.data.token}}' | base64 -d)

Access the Kuboard UI

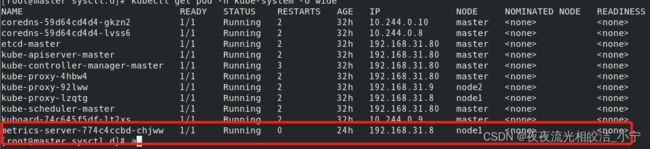

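# List the pods to find the IP address of the Kuboard service, so the Kuboard UI can be opened in a browser

kubectl get pod -n kube-system -o wide

The output shows that the Kuboard UI is served from 192.168.31.8. The default port is 32567, so it can be opened directly in a browser: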

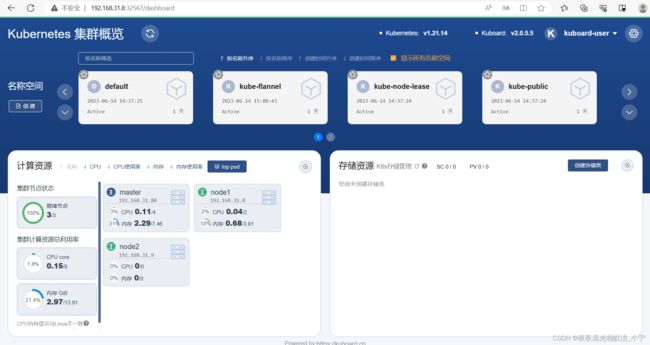

http://192.168.31.8:32567/login

Paste the token obtained earlier to log in.

The k8s cluster setup is now complete!
