Web scraping: resolving 403 blocks on ordinary HTTP clients from sites that check browser TLS signatures or JA3 fingerprints

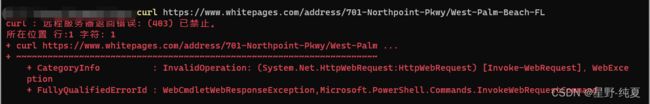

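Why this post: a friend was using an RPA automation script to request a website's resources and scrape its data. The requests kept coming back with status code 403, and he asked me how to fix it.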

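At first I had no idea how to deal with this. By chance, though, while playing with gpt4free I had come across a Python library that sends requests while impersonating a browser's fingerprint: curl_cffi.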

Library description:

Unlike other pure python http clients like httpx or requests, this package can impersonate browsers’ TLS signatures or JA3 fingerprints. If you are blocked by some website for no obvious reason, you can give this package a try.

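The gist should be clear: if a site blocks requests sent with an ordinary HTTP client, a likely reason is that it checks the browser's TLS signature or JA3 fingerprint. A JA3 fingerprint is derived from the TLS ClientHello that the client sends when opening the connection, and that message is shaped by the client's TLS library rather than by HTTP headers, so copying a browser's headers into requests or httpx does not change it. In that case we can use this library to impersonate a real browser's request.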
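To make the idea concrete, here is a small sketch of how a JA3 fingerprint is derived, following the published JA3 method: five ClientHello fields (TLS version, cipher suites, extensions, elliptic curves, point formats) written as dash-joined decimal values, GREASE placeholder values dropped, and the resulting string hashed with MD5. It assumes the ClientHello has already been parsed into integers (it does not read packets), and the values in the usage line are made up for illustration, not captured from a real browser.

```python
import hashlib

# GREASE values (RFC 8701: 0x0A0A, 0x1A1A, ..., 0xFAFA) are random placeholders that
# browsers insert into the ClientHello; JA3 ignores them so the fingerprint stays stable.
GREASE_VALUES = {0x0A0A + 0x1010 * i for i in range(16)}


def ja3_string(version, ciphers, extensions, curves, point_formats):
    """Build the JA3 string: five comma-separated fields, each a dash-joined list of decimals."""
    def join(values):
        return '-'.join(str(v) for v in values if v not in GREASE_VALUES)

    return ','.join([str(version), join(ciphers), join(extensions),
                     join(curves), join(point_formats)])


def ja3_hash(version, ciphers, extensions, curves, point_formats):
    """The JA3 fingerprint is the MD5 hex digest of the JA3 string."""
    s = ja3_string(version, ciphers, extensions, curves, point_formats)
    return hashlib.md5(s.encode()).hexdigest()


if __name__ == '__main__':
    # Hypothetical ClientHello values; 0x1A1A and 0x2A2A are GREASE and get dropped
    print(ja3_string(771, [0x1A1A, 4865, 4866], [0x2A2A, 0, 23, 65281], [29, 23, 24], [0]))
    # → 771,4865-4866,0-23-65281,29-23-24,0
```

Because the cipher list, extension order and curves come from the TLS library (OpenSSL behind requests, BoringSSL inside Chrome), two clients sending identical HTTP headers can still produce different hashes, which is the gap curl_cffi's `impersonate` option is meant to close.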

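Based on my friend's description, the script scrapes address data from the Whitepages site and writes it to an Excel file.

This was my first time writing a scraper, and I found that the language is not what matters most. What matters most is analyzing the requirements and expressing the logic clearly in code; get those right and you can meet the requirements one way or another. The implementation follows.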

```python
import os
import time

import pandas as pd
from bs4 import BeautifulSoup
from curl_cffi import requests  # requests-like API that can impersonate browser TLS fingerprints

home_page_url = 'https://www.whitepages.com'
excel_file_path = r'./address_list.xlsx'

# On the first run, create an empty workbook with the expected columns
if not os.path.exists(excel_file_path):
    data = {'name': [], 'city': [], 'state': [], 'address': [], 'zip': []}
    pd.DataFrame(data).to_excel(excel_file_path, index=True, index_label='No.')

# Load the existing rows (columns: No., name, city, state, address, zip) so new results are appended
df = pd.read_excel(excel_file_path)

# Browser-like request headers.
# Note: user-agent and sec-ch-ua claim Chrome 112, while impersonate="chrome99" sends a
# Chrome 99 TLS fingerprint; a site that cross-checks the two may still flag the request.
headers = {
    'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7',
    'accept-encoding': 'gzip, deflate, br',
    'accept-language': 'en,fr-FR;q=0.9,fr;q=0.8,es-ES;q=0.7,es;q=0.6,en-US;q=0.5,am;q=0.4,de;q=0.3',
    'sec-ch-ua': '"Chromium";v="112", "Google Chrome";v="112", "Not:A-Brand";v="99"',
    'sec-ch-ua-mobile': '?0',
    'sec-fetch-dest': 'document',
    'sec-fetch-mode': 'navigate',
    'sec-ch-ua-platform': '"Windows"',
    'sec-fetch-site': 'none',
    'sec-fetch-user': '?1',
    'upgrade-insecure-requests': '1',
    'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/112.0.0.0 Safari/537.36'
}


def search():
    try:
        # The address URL redirects; response.url holds the final URL after redirects
        response = requests.get(
            f'{home_page_url}/address/701-Northpoint-Pkwy/West-Palm-Beach-FL',
            headers=headers, impersonate="chrome99")
        # print(f'Response headers: {response.headers}')
        print(f'Response status code: {response.status_code}')
        print(f'Redirected to: {response.url}')
        get_address_data(response.url)
    except Exception as e:
        print(f'search: {e}')


def get_address_data(url):
    try:
        response = requests.get(url, headers=headers, impersonate="chrome99")
        # Save the raw HTML for inspection/debugging
        with open('./address.html', 'w', encoding='utf-8') as f:
            f.write(response.text)
        print('File written')

        soup = BeautifulSoup(response.text, features='lxml')
        # Each resident at this address is an <a> tag with the class "residents-list-item"
        for link in soup.find_all('a'):
            link_classes = link.get('class')
            if link_classes and 'residents-list-item' in link_classes:
                time.sleep(5)  # throttle requests between detail pages
                get_address_detail(link.get('href').strip())
    except Exception as e:
        print(f'get_address_data: {e}')


def get_address_detail(url):
    try:
        response = requests.get(f'{home_page_url}{url}', headers=headers, impersonate="chrome99")
        # The second path segment of the resident URL is used as the name
        name = url.split('/')[2]
        with open(f'./address_detail_{name}.html', 'w', encoding='utf-8') as f:
            f.write(response.text)
        print('File written')

        soup = BeautifulSoup(response.text, features='lxml')
        city = ''
        state = ''
        address = ''
        zip_code = ''

        # Find the address and ZIP code: an <a> whose class is exactly "mb-1 raven--text td-n"
        for tag in soup.find_all('a'):
            if tag.get('class') == ['mb-1', 'raven--text', 'td-n']:
                address_contents = tag.contents
                # First text node: street address; last text node: "City, ST ZIP"
                address = address_contents[0].replace('\n', '').strip()
                city_info = address_contents[-1].replace('\n', '').lstrip().split(',')
                city = city_info[0]
                state_and_zip = city_info[1].strip().split(' ')
                state = state_and_zip[0]
                zip_code = state_and_zip[1]

        print(f'{name} {address} {city} {state} {zip_code}')
        # Append a row: running number, then the same columns as the workbook header
        df.loc[len(df.index)] = [len(df.index) + 1, name, city, state, address, zip_code]
        # Rewrite the whole workbook (single sheet) after each record so progress is kept
        df.to_excel(excel_file_path, index=False, header=True)
    except Exception as e:
        print(f'get_address_detail: {e}')


search()
```
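The most fragile step in `get_address_detail` is splitting the last text node of the address link, which the code assumes looks like `City, ST ZIP`; if the comma or the ZIP is missing, indexing `[1]` raises an IndexError and the whole record is lost. Below is a minimal sketch of a more defensive version of that split as a standalone function. The name `parse_city_state_zip` is hypothetical, the input format is inferred from the code above rather than checked against the live site, and the sample strings are made up.

```python
def parse_city_state_zip(line):
    """Split a 'City, ST ZIP' line into (city, state, zip_code).

    Missing parts come back as empty strings instead of raising IndexError.
    """
    line = ' '.join(line.split())  # collapse newlines and repeated whitespace
    city, _, rest = line.rpartition(',')  # split on the LAST comma
    if not city:  # no comma at all: treat the whole line as the city
        return rest.strip(), '', ''
    parts = rest.split()
    state = parts[0] if parts else ''
    zip_code = parts[1] if len(parts) > 1 else ''
    return city.strip(), state, zip_code


if __name__ == '__main__':
    print(parse_city_state_zip('\n  West Palm Beach,\n   FL 33407 \n'))
    # → ('West Palm Beach', 'FL', '33407')
```

Splitting on the last comma with `rpartition` keeps the state and ZIP as the tail of the string, and normalizing whitespace first replaces the separate `replace("\n", "")` and `strip()` calls. Inside `get_address_detail`, the lines that build `city_info` and `state_and_zip` could then be replaced by a single call to `parse_city_state_zip(address_contents[-1])`.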

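All right: once again, helping a friend with a tricky problem answered a question I had been puzzling over, and the problem got solved along the way.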