Deploying ELK for Spring Boot in Practice

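- 1. Set up Docker and docker-compose
  - Install Docker
  - Install docker-compose
- 2. Build the ELK stack
  - 1. Create the directories and configuration files
    - 1. docker-compose.yml
    - 2. kibana.yml
    - 3. log-config.conf
  - 2. Add the ES analyzer plugin
  - 3. Start the stack
- 3. Add the ES and Logstash configuration to the Spring Boot project
  - 1. Add the dependencies
  - 2. Update the application.yml configuration file
  - 3. Adjust the logback.properties configuration file
- 4. Usage
  - 1. Check that the services are running
  - 2. Manage indices in Kibana
  - 3. Log search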

1. Set up Docker and docker-compose

Install Docker

    # Install Docker
    curl -fsSL get.docker.com -o get-docker.sh
    sudo sh get-docker.sh --mirror Aliyun
    # Start Docker on boot
    systemctl enable docker
    # Start Docker now
    systemctl start docker
    # Create the docker user group
    groupadd docker
    # Add the current user to the docker group
    usermod -aG docker $USER
    # Verify the installation
    docker info
    docker run hello-world
    # Configure a registry mirror
    mkdir -p /etc/docker
    tee /etc/docker/daemon.json <<-'EOF'
    {
      "registry-mirrors": ["https://6xe6xbbk.mirror.aliyuncs.com"]
    }
    EOF
    # Reload the systemd configuration
    systemctl daemon-reload
    # Restart Docker
    systemctl restart docker

Install docker-compose

    # Download the docker-compose binary
    curl -L https://github.com/docker/compose/releases/download/1.25.5/docker-compose-`uname -s`-`uname -m` > /usr/local/bin/docker-compose
    # Make it executable
    chmod +x /usr/local/bin/docker-compose
    # Verify the installation (the standalone binary is invoked as docker-compose)
    docker-compose --version

2. Build the ELK stack

1. Create the directories and configuration files

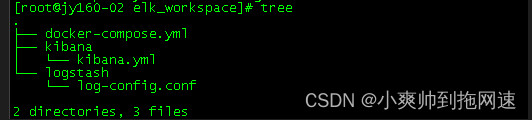

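Create the directories and files following the hierarchy shown in the figure.

In total there are 2 directories (kibana and logstash) and 3 files (docker-compose.yml, kibana.yml, and log-config.conf).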
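Where the figure is not available, the same layout can be created from the shell. The following is a minimal sketch rather than the author's exact steps: `create_elk_layout` is a hypothetical helper, the file locations are taken from the volume mounts in docker-compose.yml, and the root directory name `elk_workspace` is an assumption inferred from the volume name `elk_workspace_plugin` that appears later.

```shell
# Sketch: create the working directory for the ELK stack.
# create_elk_layout is a hypothetical helper; the default root name
# "elk_workspace" is an assumption, not something the tutorial mandates.
create_elk_layout() {
    local root="${1:-elk_workspace}"
    # Two directories: kibana and logstash
    mkdir -p "$root/kibana" "$root/logstash"
    # Three (initially empty) files, to be filled in by the next steps
    touch "$root/docker-compose.yml" \
          "$root/kibana/kibana.yml" \
          "$root/logstash/log-config.conf"
}
```

Calling `create_elk_layout` without arguments creates `elk_workspace` in the current directory; pass a path to create it elsewhere.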

1. docker-compose.yml

    version: "3.3"
    volumes:
      data:
      config:
      plugin:
    networks:
      es:
    services:
      elasticsearch:
        image: elasticsearch:7.14.0
        ports:
          - "9200:9200"
        networks:
          - "es"
        environment:
          # Single-node mode
          - "discovery.type=single-node"
          - "ES_JAVA_OPTS=-Xms1024m -Xmx1024m"
        # Volume mounts
        volumes:
          - data:/usr/share/elasticsearch/data
          - config:/usr/share/elasticsearch/config
          - plugin:/usr/share/elasticsearch/plugins
      kibana:
        image: kibana:7.14.0
        ports:
          - "5601:5601"
        networks:
          - "es"
        volumes:
          - ./kibana/kibana.yml:/usr/share/kibana/config/kibana.yml
      logstash:
        image: logstash:7.14.0
        ports:
          - "4560:4560"
          - "4561:4561"
        networks:
          - "es"
        volumes:
          - ./logstash/log-config.conf:/usr/share/logstash/pipeline/logstash.conf

2. kibana.yml

    server.host: "0"
    server.shutdownTimeout: "5s"
    elasticsearch.hosts: [ "http://elasticsearch:9200" ]
    monitoring.ui.container.elasticsearch.enabled: true
    i18n.locale: "zh-CN" # Displays the Kibana UI in Chinese

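3. log-config.conf

    input {
      # logback sends log events over TCP; redis, kafka, etc. could be used instead
      tcp {
        # Address and port on which Logstash receives data
        # Port 4560 collects info-level logs
        mode => "server"
        host => "0.0.0.0"
        port => 4560
        codec => json_lines
        type => "info"
      }
      tcp {
        # Port 4561 collects error-level logs
        mode => "server"
        host => "0.0.0.0"
        port => 4561
        codec => json_lines
        type => "error"
      }
    }
    output {
      elasticsearch {
        hosts => "elasticsearch:9200"
        # The index name is customizable; placeholders are filled from event fields,
        # and an index that does not exist yet is created before the event is written
        # One index per day
        index => "logutils-%{type}-%{+YYYY-MM-dd}"
      }
      # Also print events to the console
      stdout { codec => rubydebug }
    }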
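To make the index naming concrete, the pattern in the output block can be mirrored in a few lines of shell. This is only a sketch: `logstash_index` is a hypothetical helper, and it assumes the date part comes from the event's `@timestamp` rendered in UTC, which is how Logstash resolves `%{+...}` references.

```shell
# Sketch: reproduce the index name produced by
#   index => "logutils-%{type}-%{+YYYY-MM-dd}"
# Usage: logstash_index <type> [YYYY-MM-DD]
# logstash_index is a hypothetical helper, not part of Logstash.
logstash_index() {
    local type="$1"
    # Default to today's date in UTC when no date is given.
    local day="${2:-$(date -u +%Y-%m-%d)}"
    printf 'logutils-%s-%s\n' "$type" "$day"
}
```

For example, `logstash_index error 2024-01-02` prints `logutils-error-2024-01-02`, so events arriving on port 4561 on that day would land in that index.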

2. Add the ES analyzer plugin

TODO: add the plugin

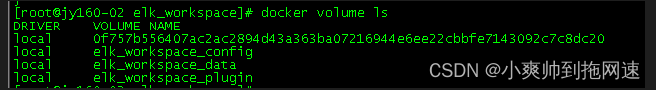

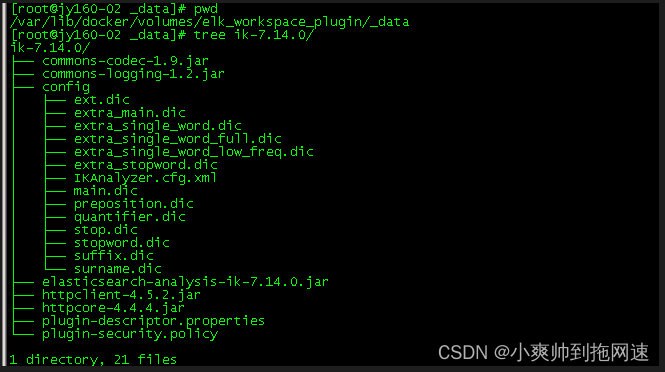

    # Put the analyzer into the plugin directory mounted for the ES container,
    # i.e. the volume declared as plugin:/usr/share/elasticsearch/plugins
    # List all volumes
    docker volume ls

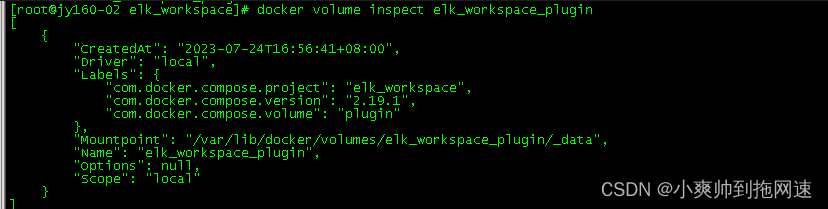

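    # Find the absolute path of the ES plugin volume
    docker volume inspect elk_workspace_plugin
    # Unzip ik-7.14.0.zip into that absolute path
    # (Elasticsearch loads each plugin from its own subdirectory under plugins/,
    # so check the extracted layout)
    unzip -d /var/lib/docker/volumes/elk_workspace_plugin/_data ik-7.14.0.zip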
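Once the stack has been started (see the next step), one way to confirm that the plugin was picked up is to list the installed plugins and run a sample text through an IK analyzer. The sketch below makes a few assumptions: `ES_URL` and the helper names are placeholders, Elasticsearch is reachable without authentication, and `ik_max_word` (like `ik_smart`) is an analyzer registered by the IK plugin.

```shell
# Assumption: Elasticsearch is reachable here; override ES_URL as needed.
ES_URL="${ES_URL:-http://localhost:9200}"

# Build the JSON body for the _analyze API.
# Note: the text must not contain double quotes or backslashes.
analyze_body() {
    printf '{"analyzer":"%s","text":"%s"}' "$1" "$2"
}

# List the plugins that each node has loaded.
list_plugins() {
    curl -s "$ES_URL/_cat/plugins?v"
}

# Tokenize a text with the given analyzer and print the raw JSON response.
ik_analyze() {
    curl -s -X POST "$ES_URL/_analyze" \
        -H 'Content-Type: application/json' \
        -d "$(analyze_body "$1" "$2")"
}
```

A typical check would be `list_plugins` followed by `ik_analyze ik_max_word "中华人民共和国"`. If the analyzer is unknown, Elasticsearch answers with an error instead of a token list.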

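3. Start the stack

    # Run from the directory that contains docker-compose.yml
    # -d runs the containers in the background
    docker-compose up -d
    # Check container status
    docker ps -a
    # Follow the logs of a container
    docker logs -f <container-id-or-name>
    # Stop the stack
    docker-compose down
    # Recreate the containers so that updated configuration is picked up
    docker-compose up --force-recreate -d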
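Before wiring up the Spring Boot application, the Logstash TCP inputs can be smoke-tested by hand. The following is a rough sketch under assumptions: `make_event` and `send_event` are hypothetical helpers, `nc` (netcat) is assumed to be installed, and the event contains only two illustrative fields. The `json_lines` codec expects one JSON document per line, which is why the event ends with a newline.

```shell
# Build a single-line JSON event terminated by a newline.
# Note: values must not contain double quotes or backslashes.
make_event() {
    printf '{"level":"%s","message":"%s"}\n' "$1" "$2"
}

# Send one event to a Logstash TCP input.
# Usage: send_event <host> <port> <level> <message>
send_event() {
    # -w 2 makes nc give up after 2 seconds of inactivity
    make_event "$3" "$4" | nc -w 2 "$1" "$2"
}
```

For example, `send_event localhost 4560 INFO "hello from nc"` should show up in the rubydebug output of `docker-compose logs -f logstash` and create an index for the info type.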

3. Add the ES and Logstash configuration to the Spring Boot project

1. Add the dependencies

    <dependency>
        <groupId>net.logstash.logback</groupId>
        <artifactId>logstash-logback-encoder</artifactId>
        <version>5.3</version>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-data-elasticsearch</artifactId>
        <version>2.3.10.RELEASE</version>
    </dependency>

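2. Update the application.yml configuration file

    spring:
      elasticsearch:
        # Replace ip with the Elasticsearch host
        # (Spring Boot versions before 2.6 read this setting from spring.elasticsearch.rest.uris)
        uris: ip:9200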

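3. Adjust the logback.properties configuration file

Adjust the message pattern as needed. sessionId and traceId are read from the MDC through %X{...}, so they are only available once the application is configured to put them there.

    "message": "[%X{sessionId}] [%X{traceId}] %class:%line "

The two appenders follow the usual logstash-logback-encoder layout. The appender names are illustrative, and ip stands for the Logstash host. Both appenders still need to be referenced from a logger (for example the root logger) to take effect.

    <!-- Appender names are illustrative. ERROR-level events go to port 4561. -->
    <appender name="LOGSTASH_ERROR" class="net.logstash.logback.appender.LogstashTcpSocketAppender">
        <filter class="ch.qos.logback.classic.filter.LevelFilter">
            <level>ERROR</level>
            <onMatch>ACCEPT</onMatch>
            <onMismatch>DENY</onMismatch>
        </filter>
        <destination>ip:4561</destination>
        <encoder class="net.logstash.logback.encoder.LoggingEventCompositeJsonEncoder">
            <providers>
                <timestamp><timeZone>Asia/Shanghai</timeZone></timestamp>
                <pattern>
                    <pattern>
                        {
                        "project": "logutils-error",
                        "level": "%level",
                        "service": "${APP_NAME:-}",
                        "thread": "%thread",
                        "class": "%logger",
                        "message": "[%X{sessionId}] [%X{traceId}] %class:%line ",
                        "stack_trace": "%exception{20}",
                        "classLine":"%class:%line"
                        }
                    </pattern>
                </pattern>
            </providers>
        </encoder>
    </appender>
    <!-- Events sent to port 4560 are indexed as info. -->
    <appender name="LOGSTASH_INFO" class="net.logstash.logback.appender.LogstashTcpSocketAppender">
        <destination>ip:4560</destination>
        <encoder class="net.logstash.logback.encoder.LoggingEventCompositeJsonEncoder">
            <providers>
                <timestamp><timeZone>Asia/Shanghai</timeZone></timestamp>
                <pattern>
                    <pattern>
                        {
                        "project": "logutils-info",
                        "level": "%level",
                        "service": "${APP_NAME:-}",
                        "class": "%logger",
                        "message": "[%X{sessionId}] [%X{traceId}] %class:%line %message",
                        "thread": "%thread",
                        "classLine":"%class:%line"
                        }
                    </pattern>
                </pattern>
            </providers>
        </encoder>
    </appender>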

4. Usage

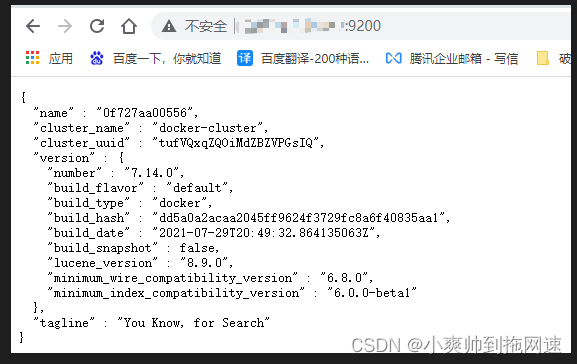

1. Check that the services are running

Elasticsearch: http://ip:9200

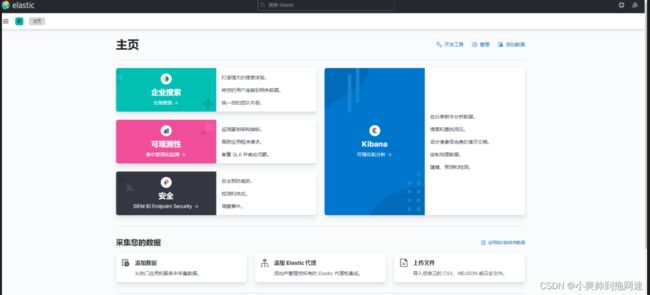

Kibana: http://ip:5601

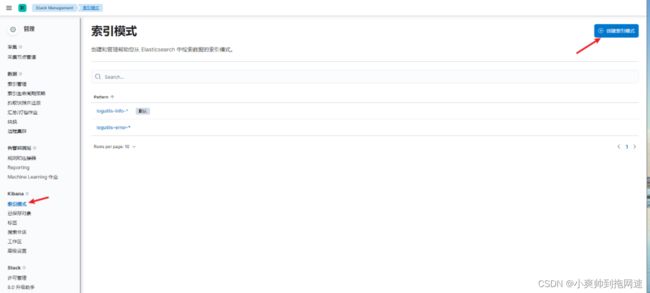
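The same two checks can be scripted instead of opened in a browser. This is a minimal sketch, assuming `curl` is installed; `http_status`, `is_up`, and `check_service` are hypothetical helper names. Any 2xx or 3xx response is treated as "up", since Kibana typically answers its root URL with a redirect and returns 503 while it is still starting.

```shell
# Print the HTTP status code for a URL ("000" if no response was received).
http_status() {
    curl -s -o /dev/null -m 5 -w '%{http_code}' "$1"
}

# Succeed for 2xx and 3xx status codes, fail for everything else.
is_up() {
    case "$1" in
        2??|3??) return 0 ;;
        *)       return 1 ;;
    esac
}

# Report whether the service behind a URL is answering.
check_service() {
    local code
    code="$(http_status "$1")" || true
    if is_up "$code"; then
        echo "$1 is up ($code)"
    else
        echo "$1 is not ready ($code)"
        return 1
    fi
}
```

Usage would look like `check_service http://ip:9200` and `check_service http://ip:5601`, with ip replaced by the server address.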

2. Manage indices in Kibana

Search for Stack Management

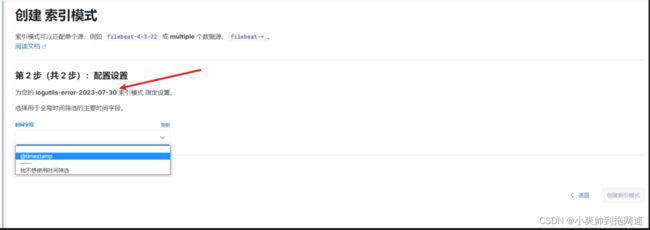

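Create an index pattern

After the stack has started and logs have been received, the corresponding indices are generated automatically and offered for selection.

Select @timestamp as the global time filter field.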
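If no index is offered when creating the index pattern, it can help to ask Elasticsearch directly which log indices exist. A small sketch, assuming `curl` is available and Elasticsearch is reachable without authentication; `ES_URL`, `indices_url`, and `list_indices` are placeholder names.

```shell
# Assumption: Elasticsearch is reachable here; override ES_URL as needed.
ES_URL="${ES_URL:-http://localhost:9200}"

# Build the _cat/indices URL for an index name pattern
# (v adds a header row, s=index sorts by index name).
indices_url() {
    printf '%s/_cat/indices/%s?v&s=index\n' "$ES_URL" "$1"
}

# Print the matching indices as a table.
list_indices() {
    curl -s "$(indices_url "$1")"
}
```

Running `list_indices 'logutils-*'` should list one index per type and day, for example names starting with `logutils-info-` and `logutils-error-`. An index pattern such as `logutils-*` in Kibana would then match them.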