Kubernetes Deployment

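Contents

- 1 Kubernetes Quick Deployment
  - 1.1 Kubernetes installation requirements
  - 1.2 Installation steps
  - 1.3 Preparing the environment
  - 1.4 Install Docker/kubeadm/kubelet on all nodes
    - 1.4.1 Install Docker
    - 1.4.2 Add the Alibaba Cloud Kubernetes YUM repository
    - 1.4.3 Install kubeadm, kubelet and kubectl
  - 1.5 Deploy the Kubernetes Master
  - 1.6 Install the Pod network plugin (CNI)
  - 1.7 Join the Kubernetes Nodes
- 2 Test the Kubernetes cluster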

1 Kubernetes Quick Deployment

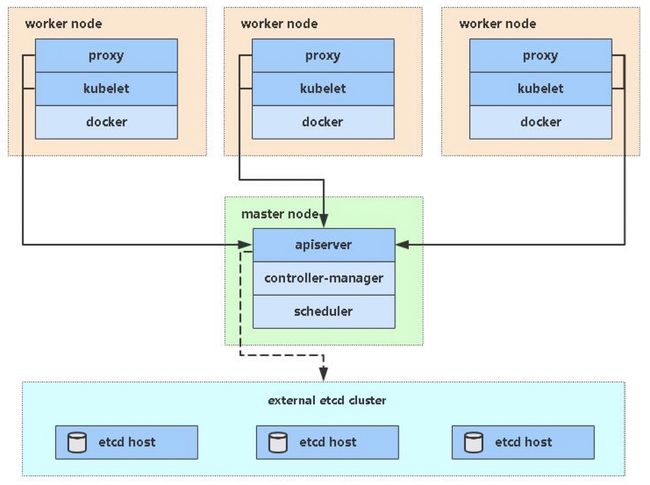

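kubeadm is a tool released by the official Kubernetes community for quickly deploying a Kubernetes cluster.

It can bring up a cluster with two commands:

# Create a Master node

$ kubeadm init

# Join a Node to the current cluster

$ kubeadm join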

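1.1 Kubernetes installation requirements

Before starting, the machines for the Kubernetes cluster must meet the following requirements:

- At least 3 machines running CentOS 7 or later

- Hardware: 2 GB or more of RAM, 2 or more CPUs, 20 GB or more of disk

- Full network connectivity between all machines in the cluster

- Internet access, which is needed to pull images

- Swap disabled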
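
As a rough sketch, the CPU, memory and swap requirements above can be checked on each machine before anything is installed. `meets_minimum` and `preflight` are hypothetical helpers, not part of kubeadm, and the 1700 MiB memory threshold is an assumption chosen because a VM sized at 2 GB usually reports slightly less than 2048 MiB. Disk size and network reachability are not covered here.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight helpers for the requirements listed above.

# meets_minimum <label> <actual> <required>: print OK/FAIL, return 1 on FAIL.
meets_minimum() {
    local label=$1 actual=$2 required=$3
    if [ "$actual" -ge "$required" ]; then
        echo "OK   $label: $actual (need >= $required)"
    else
        echo "FAIL $label: $actual (need >= $required)"
        return 1
    fi
}

# preflight: read the local CPU count, memory and swap size and report on each.
preflight() {
    local cpus mem_mb swap_kb
    cpus=$(nproc)
    mem_mb=$(awk '/^MemTotal:/ {print int($2 / 1024)}' /proc/meminfo)
    swap_kb=$(awk '/^SwapTotal:/ {print $2}' /proc/meminfo)

    meets_minimum "CPU cores" "$cpus" 2
    meets_minimum "RAM (MiB)" "$mem_mb" 1700   # assumed threshold, see note above
    if [ "$swap_kb" -eq 0 ]; then
        echo "OK   swap is off"
    else
        echo "FAIL swap is on (${swap_kb} kB)"
    fi
}

# Run on each machine:
#   preflight
```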

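1.2 Installation steps

- Install Docker and kubeadm on all nodes

- Deploy the Kubernetes Master

- Deploy the container network plugin

- Deploy the Kubernetes Nodes and join them to the cluster

- Deploy the Dashboard web UI to view Kubernetes resources visually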

1.3 Preparing the environment

| Host | IP |
|---|---|
| master | 192.168.25.146 |
| node1 | 192.168.25.147 |
| node2 | 192.168.25.148 |

Deployment

// Set the hostname on each machine

[root@localhost ~]# hostnamectl set-hostname master.example.com

[root@localhost ~]# bash

[root@master ~]# hostname

master.example.com

[root@node ~]# hostnamectl set-hostname node1.example.com

[root@node ~]# bash

[root@node1 ~]# hostname

node1.example.com

[root@localhost ~]# hostnamectl set-hostname node2.example.com

[root@localhost ~]# bash

[root@node2 ~]# hostname

node2.example.com

# Run the following steps on all three hosts

// Disable the firewall and SELinux

[root@master ~]# systemctl disable --now firewalld

Removed /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@master ~]# sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

// Disable swap

# vim /etc/fstab

Comment out the swap entry so that swap stays off after the reboot at the end of this section.
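
The fstab edit can also be scripted instead of done by hand in vim. This is a minimal sketch: `comment_out_swap` is a hypothetical helper that assumes GNU sed and that the swap entry is an ordinary fstab line with `swap` as a whitespace-separated field. It keeps a `.bak` copy of the file it edits.

```shell
# comment_out_swap [fstab]: prefix every active fstab line that has "swap"
# as a whitespace-separated field with '#'. Defaults to /etc/fstab.
comment_out_swap() {
    local fstab=${1:-/etc/fstab}
    sed -i.bak '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# On each node, as root:
#   swapoff -a          # turn swap off immediately
#   comment_out_swap    # keep it off after the next reboot
#   free -m             # the Swap row should now show 0
```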

// Add entries to /etc/hosts

[root@master ~]# cat >> /etc/hosts << EOF

192.168.25.146 master master.example.com

192.168.25.147 node1 node1.example.com

192.168.25.148 node2 node2.example.com

EOF
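
Because `cat >>` appends unconditionally, running the step above twice leaves duplicate lines in /etc/hosts. A possible idempotent variant is sketched below. `add_host_entry` is a hypothetical helper that appends a line only when no existing line starts with the same IP.

```shell
# add_host_entry <ip> <names> [file]: append "<ip> <names>" unless the file
# already has a line whose first field is exactly <ip>.
add_host_entry() {
    local ip=$1 names=$2 file=${3:-/etc/hosts}
    if ! awk -v ip="$ip" '$1 == ip {found = 1} END {exit !found}' "$file"; then
        echo "${ip} ${names}" >> "$file"
    fi
}

# On each node, as root:
#   add_host_entry 192.168.25.146 "master master.example.com"
#   add_host_entry 192.168.25.147 "node1 node1.example.com"
#   add_host_entry 192.168.25.148 "node2 node2.example.com"
```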

// Pass bridged IPv4 traffic to the iptables chains (shown on master; these kernel settings are needed on every node)

[root@master ~]# cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

[root@master ~]# sysctl --system # apply the settings
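
The `net.bridge.*` keys only exist while the `br_netfilter` kernel module is loaded, so `sysctl --system` may report them as missing on a fresh host. The sketch below loads the module and makes that persistent. `persist_br_netfilter` is a hypothetical helper, and the file name `k8s.conf` under /etc/modules-load.d is an assumption chosen to mirror the sysctl file above.

```shell
# persist_br_netfilter [root]: write a modules-load.d entry so systemd loads
# br_netfilter at boot. The optional argument is a directory prefix, which
# allows a dry run against a scratch directory instead of the real /etc.
persist_br_netfilter() {
    local root=${1:-}
    mkdir -p "${root}/etc/modules-load.d"
    echo br_netfilter > "${root}/etc/modules-load.d/k8s.conf"
}

# On each node, as root:
#   modprobe br_netfilter                       # load the module now
#   persist_br_netfilter                        # load it again on every boot
#   sysctl --system                             # re-apply /etc/sysctl.d/k8s.conf
#   sysctl net.bridge.bridge-nf-call-iptables   # should report "= 1"
```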

// Configure time synchronization

[root@master ~]# yum -y install chrony

[root@master ~]# vi /etc/chrony.conf

pool time1.aliyun.com iburst

[root@master ~]# systemctl enable --now chronyd

[root@master ~]# for i in master node1 node2 ;do ssh $i 'date' ;done

2021年 12月 18日 星期六 04:59:41 EST

2021年 12月 18日 星期六 04:59:42 EST

2021年 12月 18日 星期六 04:59:42 EST

// Passwordless SSH (only needed on master)

[root@master ~]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:3DYIG2AYzaeUUWN8asgfqScnXoRyn+tYGOexy92qhD4 root@master.example.com

The key's randomart image is:

+---[RSA 3072]----+

| .=+=+ |

| ..=oo.. |

| o =o+ |

| . * *= o |

| o.*+oS + |

| +*Bo . . |

| .o*=. |

| .E=.o . |

| oo=.o.. |

+----[SHA256]-----+

[root@master ~]# ssh-copy-id master

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@master's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'master'"

and check to make sure that only the key(s) you wanted were added.

[root@master ~]# ssh-copy-id node1

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@node1's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'node1'"

and check to make sure that only the key(s) you wanted were added.

[root@master ~]# ssh-copy-id node2

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@node2's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'node2'"

and check to make sure that only the key(s) you wanted were added.

// Reboot all three hosts so that the configuration changes above take effect

[root@master ~]# reboot

1.4 Install Docker/kubeadm/kubelet on all nodes

Kubernetes v1.20, the version installed here, uses Docker as its default container runtime, so install Docker first.

1.4.1 Install Docker

[root@master ~]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

[root@master ~]# yum -y install docker-ce

[root@master ~]# systemctl enable --now docker

[root@master ~]# systemctl status docker

● docker.service - Docker Application Container Engine

Loaded: loaded (/usr/lib/systemd/system/docker.service; enabled; vendor preset: disab>

Active: active (running) since Sat 2021-12-18 05:10:41 EST; 9s ago

Docs: https://docs.docker.com

Main PID: 7143 (dockerd)

Tasks: 9

Memory: 36.3M

CGroup: /system.slice/docker.service

└─7143 /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

12月 18 05:10:40 master.example.com dockerd[7143]: time="2021-12-18T05:10:40.840348434-0>

12月 18 05:10:40 master.example.com dockerd[7143]: time="2021-12-18T05:10:40.865784971-0>

12月 18 05:10:40 master.example.com dockerd[7143]: time="2021-12-18T05:10:40.865832834-0>

12月 18 05:10:40 master.example.com dockerd[7143]: time="2021-12-18T05:10:40.866033860-0>

12月 18 05:10:41 master.example.com dockerd[7143]: time="2021-12-18T05:10:41.040791665-0>

12月 18 05:10:41 master.example.com dockerd[7143]: time="2021-12-18T05:10:41.093668762-0>

12月 18 05:10:41 master.example.com dockerd[7143]: time="2021-12-18T05:10:41.109693941-0>

[root@master ~]# docker --version

Docker version 20.10.12, build e91ed57

[root@master ~]# cat > /etc/docker/daemon.json << EOF

{

"registry-mirrors": ["https://xj3hc284.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2"

}

EOF
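
Docker was already running when daemon.json was written, so the daemon has to be restarted before the systemd cgroup driver and the other settings apply. The sketch below validates the file first, because a syntax error in daemon.json prevents Docker from starting. `check_json` is a hypothetical helper and assumes `python3` is installed on the node.

```shell
# check_json <file>: return 0 if the file parses as JSON, non-zero otherwise.
check_json() {
    python3 -m json.tool "$1" > /dev/null
}

# On each node, as root:
#   check_json /etc/docker/daemon.json && systemctl restart docker
#   docker info --format '{{.CgroupDriver}}'   # should print: systemd
```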

1.4.2 Add the Alibaba Cloud Kubernetes YUM repository

[root@master ~]# cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

1.4.3 Install kubeadm, kubelet and kubectl

Because new versions are released frequently, a specific version is pinned here.

[root@node2 ~]# yum install -y kubelet-1.20.0 kubeadm-1.20.0 kubectl-1.20.0

[root@master ~]# systemctl enable kubelet

Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /usr/lib/systemd/system/kubelet.service.

1.5 Deploy the Kubernetes Master

Run the following on 192.168.25.146 (Master):

[root@master ~]# kubeadm init \

--apiserver-advertise-address=192.168.25.146 \

--image-repository registry.aliyuncs.com/google_containers \

--kubernetes-version v1.20.0 \

--service-cidr=10.96.0.0/12 \

--pod-network-cidr=10.244.0.0/16

// Save the following output to a file; it is needed later when joining the nodes

kubeadm join 192.168.25.146:6443 --token 481ink.zzl4pouej6vq82bs \

--discovery-token-ca-cert-hash sha256:94dc5e83208b662f4a9f7f133a1da3ed6052ead72922de5aee750ab498d44031

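The default image registry, k8s.gcr.io, is not reachable from mainland China, so the kubeadm init command above points to the Alibaba Cloud mirror registry instead.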

[root@master ~]# echo 'export KUBECONFIG=/etc/kubernetes/admin.conf' > /etc/profile.d/k8s.sh

[root@master ~]# source /etc/profile.d/k8s.sh

1.6 Install the Pod network plugin (CNI)

// Install directly with this command

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

// Here I downloaded the manifest first and applied the local copy

[root@master ~]# ls

anaconda-ks.cfg kube-flannel.yml

[root@master ~]# kubectl apply -f /root/kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

Make sure the nodes can reach the quay.io registry.

1.7 Join the Kubernetes Nodes

Run the saved command on 192.168.25.147 and 192.168.25.148 (the Nodes).

To add a new node to the cluster, run the kubeadm join command printed by kubeadm init:

[root@node1 ~]# kubeadm join 192.168.25.146:6443 --token 481ink.zzl4pouej6vq82bs --discovery-token-ca-cert-hash sha256:94dc5e83208b662f4a9f7f133a1da3ed6052ead72922de5aee750ab498d44031

[root@node2 ~]# kubeadm join 192.168.25.146:6443 --token 481ink.zzl4pouej6vq82bs --discovery-token-ca-cert-hash sha256:94dc5e83208b662f4a9f7f133a1da3ed6052ead72922de5aee750ab498d44031
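
Bootstrap tokens created by kubeadm init expire after 24 hours by default, so the saved join command stops working for nodes added later. A sketch of how to get fresh values on the master follows. `ca_cert_hash` is a hypothetical wrapper around the openssl pipeline described in the kubeadm documentation, and it assumes the cluster CA uses an RSA key, which is kubeadm's default.

```shell
# ca_cert_hash [ca.crt]: print the SHA-256 hash of the CA public key, i.e. the
# value that goes after "sha256:" in --discovery-token-ca-cert-hash.
ca_cert_hash() {
    local ca=${1:-/etc/kubernetes/pki/ca.crt}
    openssl x509 -pubkey -noout -in "$ca" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}

# On master, as root:
#   kubeadm token create --print-join-command   # prints a complete, fresh join command
#   echo "sha256:$(ca_cert_hash)"               # or recompute only the hash
```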

2 Test the Kubernetes cluster

Create a pod in the Kubernetes cluster and verify that it runs correctly.

[root@master ~]# kubectl get nodes

NAME                 STATUS   ROLES                  AGE     VERSION

master.example.com   Ready    control-plane,master   15m     v1.20.0

node1.example.com    Ready    <none>                 5m58s   v1.20.0

node2.example.com    Ready    <none>                 5m53s   v1.20.0
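
Nodes report NotReady until the network plugin is running on them, so it can be handy to check the STATUS column from a script instead of by eye. A minimal sketch, where `not_ready_nodes` is a hypothetical helper that reads `kubectl get nodes --no-headers` output on stdin:

```shell
# not_ready_nodes: print the name of every node whose STATUS column is not
# exactly "Ready". Cordoned nodes ("Ready,SchedulingDisabled") are listed too.
not_ready_nodes() {
    awk '$2 != "Ready" {print $1}'
}

# On master:
#   kubectl get nodes --no-headers | not_ready_nodes
# Empty output means all nodes are Ready.
```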

[root@master ~]# kubectl create deployment nginx --image=nginx

deployment.apps/nginx created

[root@master ~]# kubectl expose deployment nginx --port=80 --type=NodePort

service/nginx exposed

[root@master ~]# kubectl get pod,svc

NAME                         READY   STATUS    RESTARTS   AGE

pod/nginx-6799fc88d8-sk9zs   1/1     Running   0          4m56s

NAME                 TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE

service/kubernetes   ClusterIP   10.96.0.1      <none>        443/TCP        21m

service/nginx        NodePort    10.110.176.8   <none>        80:32279/TCP   4m36s

// Access the container running in the pod

[root@master ~]# curl 10.110.176.8

Welcome to nginx!

Welcome to nginx!

If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.

For online documentation and support please refer to

nginx.org.

Commercial support is available at

nginx.com.

Thank you for using nginx.
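
The curl above goes to the service's ClusterIP, which is only reachable from inside the cluster. Because the service type is NodePort, the same page should also be served on the node port (32279 in the output above) of any node's IP. A small sketch follows. `node_port_of` is a hypothetical helper that pulls the node port out of a PORT(S) value.

```shell
# node_port_of <ports>: extract the node port from a PORT(S) value such as
# "80:32279/TCP" (service port, then node port, then protocol).
node_port_of() {
    local ports=$1
    ports=${ports#*:}      # drop the service port and the colon
    echo "${ports%%/*}"    # drop the protocol suffix
}

node_port_of "80:32279/TCP"   # prints 32279

# From any machine that can reach the nodes:
#   curl -s -o /dev/null -w '%{http_code}\n' "http://192.168.25.147:$(node_port_of 80:32279/TCP)"
```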