Installing a Highly Available Kubernetes Cluster from Binaries


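Contents

- Preface
- I. Preparation
- 1.1 Configure host names
- 1.2 Passwordless SSH login
- 1.3 Run the following script
- 1.5 Create a load balancer for kube-apiserver with keepalived and haproxy
- Install haproxy and keepalived on the three prepared masters
- keepalived 2.0.17 configuration
- haproxy configuration
- Start the services and verify
- Replacing haproxy with nginx
- II. Using stacked control-plane and etcd nodes
- Set up the etcd cluster
- Create the etcd working directories
- Create the etcd certificates with openssl
- Generate the CA root certificate
- Generate the etcd server certificate
- Distribute the certificates
- Deploy the etcd cluster
- Create the configuration files
- Start the etcd cluster
- Deploy the Kubernetes components
- Deploy kube-apiserver
- Generate the kube-apiserver server certificate
- Generate the token file
- Create the configuration file
- Create the systemd service file
- Deploy the kubectl command-line tool on the control-plane nodes
- Create the certificate signing request
- Generate the admin client certificate
- For certificate issues, see: https://blog.csdn.net/huang714/article/details/109091615
- Create the admin.conf file for kubectl
- Check the cluster component status
- Deploy kube-controller-manager
- Generate the kube-controller-manager certificate
- Create the kubeconfig for kube-controller-manager
- Deploy kube-scheduler
- Generate the kube-scheduler certificate
- Create the kubeconfig for kube-scheduler
- Create the configuration file
- Deploy kubelet (the following steps are run on master1)
- Create kubelet-bootstrap.kubeconfig
- Create the configuration file
- Create the service file
- Start the service
- Approve the kubelet CSRs so the nodes join the cluster
- Manual approval
- Error cases
- Automatic approval and renewal
- Authorize the apiserver to access kubelet
- Deploy kube-proxy
- Generate the kube-proxy client certificate
- Create the worker node kubeconfig file
- Create the kube-proxy configuration file
- Configure the network plugin
- Configure CoreDNS
- III. External etcd HA cluster
- Reference: configure the kubelet as a service manager for etcd.
- Create the root CA with cfssl
- Create a highly available etcd cluster
- Distribute the etcd binaries
- Create the etcd certificates
- Create the configuration file
- Create the service file
- Start the etcd cluster
- Deploy the Kubernetes components
- Deploy kube-apiserver
- Deploy the kubectl command-line tool on the control-plane nodes
- Create the certificate signing request
- Generate the certificate and private key
- Create the admin.conf file for kubectl
- Check the cluster component status
- Deploy kube-controller-manager
- Deploy kube-scheduler
- Deploy kubelet (the following steps are run on master1)
- Create kubelet-bootstrap.kubeconfig
- Create the configuration file
- Create the service file
- Start the service
- Approve the kubelet CSRs so the nodes join the cluster
- Manual approval
- Error cases
- Automatic approval and renewal
- Deploy kube-proxy
- Configure the network plugin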

Preface


I. Preparation

1.1 Configure host names

Add the host names to /etc/hosts on master01 and etcd01:

cat >> /etc/hosts << EOF

192.168.118.200 control-plane00

192.168.118.201 control-plane01

192.168.118.202 control-plane02

192.168.118.203 etcd00

192.168.118.204 etcd01

192.168.118.205 etcd02

192.168.118.206 node01

192.168.118.207 node02

192.168.118.208 node03

EOF ## add all of these if your machines can handle it; otherwise the 01 hosts alone are enough for testing

1.2 Passwordless SSH login

[root@K8S-master01 ~]# ssh-keygen

# for i in 01 02; do ssh-copy-id root@control-plane$i; done

# for i in 00 01 02; do ssh-copy-id root@etcd$i; done

# for i in 01 02 03; do ssh-copy-id root@node$i; done

The same is needed later when configuring the external etcd cluster; that is skipped here.
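A note on the loops above. In bash, `{ 01 02 }` written with spaces is not brace expansion. After `in`, `{` and `}` are ordinary words, so such a loop also runs for the literal hosts `control-plane{` and `control-plane}`.

The small sketch below demonstrates this and shows two working forms: a plain word list, and real brace expansion without spaces. Zero-padded ranges such as `{00..02}` assume bash 4 or later. The sketch only builds host names and runs no ssh.

```shell
#!/usr/bin/env bash
# Written with spaces, "{" and "}" are not expanded; they become two extra
# loop items alongside 01 and 02.
broken=()
for i in { 01 02 }; do broken+=("control-plane$i"); done
echo "broken: ${broken[*]}"

# Fix 1: a plain word list.
fixed=()
for i in 01 02; do fixed+=("control-plane$i"); done
echo "fixed:  ${fixed[*]}"

# Fix 2: real brace expansion (no spaces); bash 4+ keeps the zero padding.
ranged=()
for i in {00..02}; do ranged+=("etcd$i"); done
echo "ranged: ${ranged[*]}"
```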

1.3 Run the following script

# systemctl disable firewalld

# systemctl stop firewalld

# setenforce 0

# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

# swapoff -a ## temporary, until the next reboot

To disable swap permanently, comment out the swap line in /etc/fstab.

## Let iptables see bridged traffic

# cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

br_netfilter

EOF

# cat <<EOF | tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

# sysctl --system
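To confirm the bridge settings actually took effect, the values can be read back from /proc/sys. The keys under /proc/sys/net/bridge only exist once the br_netfilter module is loaded, which the modules-load.d file above arranges at boot.

A minimal bash sketch follows. `check_bridge_sysctls` and the `SYSCTL_ROOT` override are hypothetical names introduced here, not part of any tool.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the bridge sysctls and return non-zero if any is
# missing or not set to 1. SYSCTL_ROOT (default /proc/sys) exists only so the
# check can be pointed at another directory.
check_bridge_sysctls() {
  local root=${SYSCTL_ROOT:-/proc/sys} key file val rc=0
  for key in net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables; do
    file="$root/${key//.//}"          # net.bridge.x -> net/bridge/x
    if [ ! -r "$file" ]; then
      echo "$key: not found (is br_netfilter loaded? try: modprobe br_netfilter)"
      rc=1
      continue
    fi
    val=$(cat "$file")
    echo "$key = $val"
    [ "$val" = 1 ] || rc=1
  done
  return "$rc"
}

check_bridge_sysctls || echo "bridge sysctls are not active yet"
```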

## Install the container runtime

# yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

# yum install docker-ce

------

# mkdir -p /etc/containerd

# containerd config default | tee /etc/containerd/config.toml

# systemctl restart containerd

-------

# vim /etc/containerd/config.toml

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]

...

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]

SystemdCgroup = true ## add this line

------------ Configure the Docker cgroup driver

# cat <<EOF | tee /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2"

}

EOF

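Note: with a kubeadm install, configuring the runtime as above is enough. With a binary install this Docker setting is also required; otherwise kubelet will fail to start later.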

----------

# systemctl restart containerd

# systemctl enable docker && systemctl start docker

1.5 Create a load balancer for kube-apiserver with keepalived and haproxy

Install haproxy and keepalived on the three prepared masters

# yum -y install haproxy keepalived

Installed:

haproxy.x86_64 0:1.5.18-9.el7_9.1 keepalived.x86_64 0:1.3.5-19.el7

Dependency Installed:

net-snmp-agent-libs.x86_64 1:5.7.2-49.el7_9.1

net-snmp-libs.x86_64 1:5.7.2-49.el7_9.1

# cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.confba

# cp /etc/haproxy/haproxy.cfg /etc/haproxy/haproxy.cfgba

keepalived 2.0.17 configuration:

! /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script check_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 3

weight -2

fall 10

rise 2

}

vrrp_instance VI_1 {

state MASTER

interface ens33 ## name of the network interface

virtual_router_id 51

priority 101 ## should be higher on the MASTER than on the backups, so 102, 101 and 100 respectively are sufficient

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.118.222 ## APISERVER_VIP, the virtual address; best kept in the same subnet as the nodes

}

track_script {

check_apiserver

}

}
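The priority comment above suggests 102, 101 and 100. In the distribution step below, however, the same file is copied to every master.

A minimal sketch follows that renders one file per control-plane node from the configuration above. It assumes control-plane00 starts as MASTER, and it writes into a temporary directory (`OUT_DIR`, a hypothetical name) rather than /etc/keepalived.

```shell
#!/usr/bin/env bash
set -euo pipefail
# Hypothetical helper: render one keepalived.conf per control-plane node.
# Assumptions: interface ens33, VIP 192.168.118.222, control-plane00 starts as
# MASTER, priorities 102/101/100 as suggested in the priority comment.
OUT_DIR=${OUT_DIR:-$(mktemp -d)}
NODES=(control-plane00 control-plane01 control-plane02)
PRIORITIES=(102 101 100)

for idx in "${!NODES[@]}"; do
  node=${NODES[$idx]}
  if [ "$idx" -eq 0 ]; then state=MASTER; else state=BACKUP; fi
  mkdir -p "$OUT_DIR/$node"
  cat > "$OUT_DIR/$node/keepalived.conf" <<EOF
! Configuration File for keepalived
global_defs {
    router_id LVS_DEVEL
}
vrrp_script check_apiserver {
    script "/etc/keepalived/check_apiserver.sh"
    interval 3
    weight -2
    fall 10
    rise 2
}
vrrp_instance VI_1 {
    state ${state}
    interface ens33
    virtual_router_id 51
    priority ${PRIORITIES[$idx]}
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.118.222
    }
    track_script {
        check_apiserver
    }
}
EOF
done
echo "rendered into $OUT_DIR"
```

Each file then goes to /etc/keepalived/keepalived.conf on the matching node. With `weight -2`, a failing check on the MASTER lowers it from 102 to 100. That is below control-plane01's 101, so the VIP moves to control-plane01.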

Health check script, /etc/keepalived/check_apiserver.sh

#!/bin/sh

errorExit() {

echo "*** $*" 1>&2

exit 1

}

curl --silent --max-time 2 --insecure https://localhost:8443/ -o /dev/null || errorExit "Error GET https://localhost:8443/"

if ip addr | grep -q 192.168.118.222; then

curl --silent --max-time 2 --insecure https://192.168.118.222:8443/ -o /dev/null || errorExit "Error GET https://192.168.118.222:8443/"

fi

haproxy configuration

# /etc/haproxy/haproxy.cfg

#---------------------------------------------------------------------

# Global settings

#---------------------------------------------------------------------

global

log /dev/log local0

log /dev/log local1 notice

daemon

#---------------------------------------------------------------------

# common defaults that all the 'listen' and 'backend' sections will

# use if not designated in their block

#---------------------------------------------------------------------

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 1

timeout http-request 10s

timeout queue 20s

timeout connect 5s

timeout client 20s

timeout server 20s

timeout http-keep-alive 10s

timeout check 10s

#---------------------------------------------------------------------

# apiserver frontend which proxys to the control plane nodes

#---------------------------------------------------------------------

frontend apiserver

bind *:8443

mode tcp

option tcplog

default_backend apiserver

#---------------------------------------------------------------------

# round robin balancing for apiserver

#---------------------------------------------------------------------

backend apiserver

option httpchk GET /healthz

http-check expect status 200

mode tcp

option ssl-hello-chk

balance roundrobin

server control-plane00 192.168.118.200:6443 check

server control-plane01 192.168.118.201:6443 check

server control-plane02 192.168.118.202:6443 check

Modified configuration file for haproxy-1.5.18-9.el7_9.1

# /etc/haproxy/haproxy.cfg

#---------------------------------------------------------------------

# Example configuration for a possible web application. See the

# full configuration options online.

#

# http://haproxy.1wt.eu/download/1.4/doc/configuration.txt

#

#---------------------------------------------------------------------

#---------------------------------------------------------------------

# Global settings

#---------------------------------------------------------------------

global

# to have these messages end up in /var/log/haproxy.log you will

# need to:

#

# 1) configure syslog to accept network log events. This is done

# by adding the '-r' option to the SYSLOGD_OPTIONS in

# /etc/sysconfig/syslog

#

# 2) configure local2 events to go to the /var/log/haproxy.log

# file. A line like the following can be added to

# /etc/sysconfig/syslog

#

# local2.* /var/log/haproxy.log

#

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

daemon

# turn on stats unix socket

stats socket /var/lib/haproxy/stats

#---------------------------------------------------------------------

# common defaults that all the 'listen' and 'backend' sections will

# use if not designated in their block

# Remember to comment out this section with #, otherwise haproxy will not start

#---------------------------------------------------------------------

# defaults

# mode http

# daemon

# turn on stats unix socket

# stats socket /var/lib/haproxy/stats

#---------------------------------------------------------------------

# common defaults that all the 'listen' and 'backend' sections will

# use if not designated in their block

#---------------------------------------------------------------------

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

#---------------------------------------------------------------------

# main frontend which proxys to the backends

#---------------------------------------------------------------------

#frontend main *:5000

# acl url_static path_beg -i /static /images /javascript /stylesheets

# acl url_static path_end -i .jpg .gif .png .css .js

# use_backend static if url_static

# default_backend app

#---------------------------------------------------------------------

# static backend for serving up images, stylesheets and such

#---------------------------------------------------------------------

#backend static

# balance roundrobin

# server static 127.0.0.1:4331 check

#---------------------------------------------------------------------

# round robin balancing between the various backends

#---------------------------------------------------------------------

#backend app

# balance roundrobin

# server app1 127.0.0.1:5001 check

# server app2 127.0.0.1:5002 check

# server app3 127.0.0.1:5003 check

# server app4 127.0.0.1:5004 check

#---------------------------------------------------------------------

# apiserver frontend which proxys to the control plane nodes

#---------------------------------------------------------------------

frontend apiserver

bind *:8443

mode tcp

option tcplog

default_backend apiserver

#---------------------------------------------------------------------

# round robin balancing for apiserver

#---------------------------------------------------------------------

backend apiserver

option httpchk GET /healthz

http-check expect status 200

mode tcp

option ssl-hello-chk

balance roundrobin

server control-plane00 192.168.118.200:6443 check

server control-plane01 192.168.118.201:6443 check

server control-plane02 192.168.118.202:6443 check

Distribute the files

# cp keepalived.conf check_apiserver.sh /etc/keepalived/

# for i in 01 02; do scp /etc/keepalived/*.* root@control-plane$i:/etc/keepalived/ ;done

# cp haproxy.cfg /etc/haproxy/

# for i in 01 02; do scp /etc/haproxy/haproxy.cfg root@control-plane$i:/etc/haproxy/ ;done

Start the services and verify

# systemctl daemon-reload

# systemctl enable haproxy --now

# systemctl enable keepalived --now

-- After starting, ping the VIP to check that it responds

# ping 192.168.118.222

-- Check the VIP on the network interface

# ip a show ens33

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:2f:c4:7c brd ff:ff:ff:ff:ff:ff

inet 192.168.118.200/24 brd 192.168.118.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

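inet 192.168.118.222/32 scope global ens33 ## only control-plane00 shows this line; the other two do not. This is expected, since the VIP is held by one node at a time, and kube-apiserver still starts normally on the others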

valid_lft forever preferred_lft forever

inet6 fe80::673c:c227:f2a5:6513/64 scope link noprefixroute

valid_lft forever preferred_lft forever

Replacing haproxy with nginx

# vim /etc/yum.repos.d/nginx.repo

----- Stable:

[nginx-stable]

name=nginx stable repo

baseurl=http://nginx.org/packages/centos/$releasever/$basearch/

gpgcheck=1

enabled=1

gpgkey=https://nginx.org/keys/nginx_signing.key

module_hotfixes=true

---

# yum install -y nginx

# nginx -v

nginx version: nginx/1.22.0

# vim /etc/nginx/nginx.conf

// Append the following at the end of the file; it must NOT go inside the http block!

stream {

upstream kube-apiserver {

server 192.168.118.200:6443 max_fails=3 fail_timeout=30s;

server 192.168.118.201:6443 max_fails=3 fail_timeout=30s;

server 192.168.118.202:6443 max_fails=3 fail_timeout=30s;

}

server {

listen 8443;

proxy_connect_timeout 2s;

proxy_timeout 900s;

proxy_pass kube-apiserver;

}

}

# nginx -t // test the configuration

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

# systemctl stop haproxy.service && systemctl disable haproxy.service

# systemctl daemon-reload

# systemctl start nginx

# systemctl enable nginx

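In my tests, nginx held up better than haproxy here. With haproxy, once the local instance was stopped, this node could no longer query the cluster status. With nginx, the node could still query it without a local haproxy or nginx, as long as nginx was running on at least one other node.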

II. Using stacked control-plane and etcd nodes

Set up the etcd cluster

Create the etcd working directories

# mkdir -p /opt/etcd ## configuration files

# mkdir -p /opt/kubernetes/

# mkdir -p /etc/kubernetes/pki/etcd ## certificate files

Create the etcd certificates with openssl

Generate the CA root certificate

1. Generate a 2048-bit ca.key file (the CA private key):

# mkdir openssl ## a directory to keep the generated files together

# cd openssl

# openssl genrsa -out ca.key 2048

# ls

ca.key

2. From ca.key, generate ca.crt, the CA certificate (-days sets the validity period):

# openssl req -x509 -new -nodes -key ca.key -subj "/CN=kubernetes" -days 10000 -out ca.crt

# ls

ca.crt ca.key

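Note: the command below is an alternative to the one above, not an additional step; running it overwrites ca.crt with a different subject. The certificates later in this article were issued by the CN=kubernetes CA.

# openssl req -x509 -new -nodes -key ca.key -days 10000 -out ca.crt -subj "/C=cn/ST=Fujian/L=Fuzhou/O=system:masters/OU=system/CN=kubernetes-admin"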

Generate the etcd server certificate

3. Generate a 2048-bit etcd.key file:

# openssl genrsa -out etcd.key 2048

# ls

ca.crt ca.key etcd.key

4. Create a config file for generating the certificate signing request (CSR), saved as etcd-csr.conf:

[ req ]

default_bits = 2048

prompt = no

default_md = sha256

req_extensions = req_ext

distinguished_name = dn

[ dn ]

C = CN

ST = Fujian

L = Fuzhou

O = k8s

OU = system

CN = etcd

[ req_ext ]

subjectAltName = @alt_names

[ alt_names ]

IP.1 = 127.0.0.1

IP.2 = 192.168.118.200

IP.3 = 192.168.118.201

IP.4 = 192.168.118.202

[ v3_ext ]

authorityKeyIdentifier=keyid,issuer:always

basicConstraints=CA:FALSE

keyUsage=keyEncipherment,dataEncipherment

extendedKeyUsage=serverAuth,clientAuth

subjectAltName=@alt_names

5. Generate the CSR from the config file above:

# openssl req -new -key etcd.key -out etcd.csr -config etcd-csr.conf

# ls

ca.crt ca.key etcd-csr.conf etcd.csr etcd.key

6. Generate the server certificate from ca.crt, ca.key and etcd.csr:

# openssl x509 -req -in etcd.csr -CA ca.crt -CAkey ca.key \

-CAcreateserial -out etcd.crt -days 10000 \

-extensions v3_ext -extfile etcd-csr.conf

Signature ok

subject=/C=CN/ST=Fujian/L=Fuzhou/O=k8s/OU=system/CN=etcd

Getting CA Private Key

# ls

ca.crt ca.key ca.srl etcd-csr.conf etcd.crt etcd.csr etcd.key

7. Inspect the certificate:

# openssl x509 -noout -text -in ./etcd.crt

Certificate:

Data:

Version: 3 (0x2)

Serial Number:

ac:45:8c:22:71:87:5c:96

Signature Algorithm: sha256WithRSAEncryption

Issuer: CN=kubernetes

Validity

Not Before: Oct 26 07:52:29 2021 GMT

Not After : Mar 13 07:52:29 2049 GMT

Subject: C=CN, ST=Fujian, L=Fuzhou, O=k8s, OU=system, CN=etcd

Subject Public Key Info:

Public Key Algorithm: rsaEncryption

Public-Key: (2048 bit)

Modulus:

00:ca:...:0f:

30:2b

Exponent: 65537 (0x10001)

X509v3 extensions:

X509v3 Authority Key Identifier:

keyid:29:BE:BA:CD:69:AF:9B:A7:9A:78:2B:ED:FC:65:61:8C:E1:FE:A6:63

DirName:/CN=kubernetes

serial:AD:7A:2B:AF:75:D9:52:9C

X509v3 Basic Constraints:

CA:FALSE

X509v3 Key Usage:

Key Encipherment, Data Encipherment

X509v3 Extended Key Usage:

TLS Web Server Authentication, TLS Web Client Authentication

X509v3 Subject Alternative Name:

IP Address:127.0.0.1, IP Address:192.168.118.200, IP Address:192.168.118.201, IP Address:192.168.118.202

Signature Algorithm: sha256WithRSAEncryption

44:93:...:f3:43
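Steps 1 to 7 can be rehearsed end to end in a scratch directory before touching /etc/kubernetes/pki. The sketch below reuses the commands and the etcd-csr.conf content from above; `WORK_DIR` is a hypothetical name.

It then adds two checks that are not part of the original steps:
- `openssl verify` confirms that the certificate chains to ca.crt.
- The SAN list is printed, so a missing member IP is noticed before it causes TLS errors between etcd members.

```shell
#!/usr/bin/env bash
set -euo pipefail
# Sketch: run steps 1-7 in a scratch directory (hypothetical WORK_DIR).
WORK_DIR=${WORK_DIR:-$(mktemp -d)}
cd "$WORK_DIR"

# Steps 1-2: CA key and self-signed CA certificate
openssl genrsa -out ca.key 2048 2>/dev/null
openssl req -x509 -new -nodes -key ca.key -subj "/CN=kubernetes" -days 10000 -out ca.crt

# Steps 3-4: etcd key and CSR config (same content as etcd-csr.conf above)
openssl genrsa -out etcd.key 2048 2>/dev/null
cat > etcd-csr.conf <<'EOF'
[ req ]
default_bits = 2048
prompt = no
default_md = sha256
req_extensions = req_ext
distinguished_name = dn
[ dn ]
C = CN
ST = Fujian
L = Fuzhou
O = k8s
OU = system
CN = etcd
[ req_ext ]
subjectAltName = @alt_names
[ alt_names ]
IP.1 = 127.0.0.1
IP.2 = 192.168.118.200
IP.3 = 192.168.118.201
IP.4 = 192.168.118.202
[ v3_ext ]
authorityKeyIdentifier=keyid,issuer:always
basicConstraints=CA:FALSE
keyUsage=keyEncipherment,dataEncipherment
extendedKeyUsage=serverAuth,clientAuth
subjectAltName=@alt_names
EOF

# Steps 5-6: CSR, then sign it with the CA
openssl req -new -key etcd.key -out etcd.csr -config etcd-csr.conf
openssl x509 -req -in etcd.csr -CA ca.crt -CAkey ca.key -CAcreateserial \
  -out etcd.crt -days 10000 -extensions v3_ext -extfile etcd-csr.conf 2>/dev/null

# Check 1: the certificate chains to the CA (prints "etcd.crt: OK")
openssl verify -CAfile ca.crt etcd.crt
# Check 2: show the Subject Alternative Names
openssl x509 -noout -text -in etcd.crt | grep -A1 "Subject Alternative Name"
```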

Distribute the certificates

# cp etcd.crt etcd.key /etc/kubernetes/pki/etcd/

# cp ca.crt ca.key /etc/kubernetes/pki/

# for i in 01 02; do scp etcd.crt etcd.key root@control-plane$i:/etc/kubernetes/pki/etcd/ ;done

# for i in 01 02; do scp ca.crt ca.key root@control-plane$i:/etc/kubernetes/pki/ ;done

# for i in 01 02 03; do scp ca.crt ca.key root@node$i:/etc/kubernetes/pki/ ;done

Deploy the etcd cluster

# tar zxvf etcd-v3.5.0-linux-amd64.tar.gz

# cd etcd-v3.5.0-linux-amd64/

# ls

# cp etcd* /usr/local/bin ## copy etcd, etcdctl and etcdutl to /usr/local/bin

# ls /usr/local/bin | grep etc

# for i in 01 02; do scp etcd* root@control-plane$i:/usr/local/bin/ ;done

Create the configuration files

# vim etcd-conf.sh

---

# Replace HOST0, HOST1 and HOST2 with IPs or resolvable host names

export HOST0=192.168.118.200

export HOST1=192.168.118.201

export HOST2=192.168.118.202

# Create temporary directories for the files that will be distributed to the other hosts

mkdir -p /tmp/${HOST0}/ /tmp/${HOST1}/ /tmp/${HOST2}/

ETCDHOSTS=(${HOST0} ${HOST1} ${HOST2})

NAMES=("etcd00" "etcd01" "etcd02")

for i in "${!ETCDHOSTS[@]}"; do

HOST=${ETCDHOSTS[$i]}

NAME=${NAMES[$i]}

cat << EOF > /tmp/${HOST}/etcd.conf

#[Member]

ETCD_NAME="${NAME}"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://${HOST}:2380"

ETCD_LISTEN_CLIENT_URLS="https://${HOST}:2379,http://127.0.0.1:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${HOST}:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://${HOST}:2379"

ETCD_INITIAL_CLUSTER="etcd00=https://${ETCDHOSTS[0]}:2380,etcd01=https://${ETCDHOSTS[1]}:2380,etcd02=https://${ETCDHOSTS[2]}:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-1"

ETCD_INITIAL_CLUSTER_STATE="new"

EOF

scp /tmp/${HOST}/etcd.conf root@${HOST}:/opt/etcd/ ## this line can be left out for now, but each file must end up in /opt/etcd/ on its host

done

----

# sh etcd-conf.sh

This produces files like the following sample (for etcd00):

#[Member]

ETCD_NAME="etcd00"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.118.200:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.118.200:2379,http://127.0.0.1:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.118.200:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.118.200:2379"

ETCD_INITIAL_CLUSTER="etcd00=https://192.168.118.200:2380,etcd01=https://192.168.118.201:2380,etcd02=https://192.168.118.202:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-1"

ETCD_INITIAL_CLUSTER_STATE="new"

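Notes:

ETCD_NAME: member name (not the host name), unique within the cluster

ETCD_DATA_DIR: data directory

ETCD_LISTEN_PEER_URLS: listen address for cluster (peer) communication

ETCD_LISTEN_CLIENT_URLS: listen address for client access

ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address advertised to the cluster

ETCD_ADVERTISE_CLIENT_URLS: address advertised to clients

ETCD_INITIAL_CLUSTER: addresses of all cluster members

ETCD_INITIAL_CLUSTER_TOKEN: cluster token

ETCD_INITIAL_CLUSTER_STATE: state when joining; new for a new cluster, existing to join an existing cluster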
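To review the three files before anything is copied, the same loop can be run without the scp line. A sketch under the same host and name assumptions as etcd-conf.sh writes them to a temporary directory (`OUT_DIR`, a hypothetical name). It also builds ETCD_INITIAL_CLUSTER from the two arrays, so the member list cannot drift from the host list.

```shell
#!/usr/bin/env bash
set -euo pipefail
# Sketch: render the three etcd.conf files locally (hypothetical OUT_DIR),
# without the scp step, so they can be reviewed first.
OUT_DIR=${OUT_DIR:-$(mktemp -d)}
ETCDHOSTS=(192.168.118.200 192.168.118.201 192.168.118.202)
NAMES=(etcd00 etcd01 etcd02)

# name1=https://ip1:2380,name2=https://ip2:2380,...
INITIAL_CLUSTER=""
for i in "${!ETCDHOSTS[@]}"; do
  INITIAL_CLUSTER+="${INITIAL_CLUSTER:+,}${NAMES[$i]}=https://${ETCDHOSTS[$i]}:2380"
done

for i in "${!ETCDHOSTS[@]}"; do
  HOST=${ETCDHOSTS[$i]}
  mkdir -p "$OUT_DIR/$HOST"
  cat > "$OUT_DIR/$HOST/etcd.conf" <<EOF
#[Member]
ETCD_NAME="${NAMES[$i]}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${HOST}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${HOST}:2379,http://127.0.0.1:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${HOST}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${HOST}:2379"
ETCD_INITIAL_CLUSTER="${INITIAL_CLUSTER}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-1"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
done
echo "review the files under $OUT_DIR, then copy each to /opt/etcd/ on its host"
```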

Create the systemd service file

# vim etcd.service

----

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=-/opt/etcd/etcd.conf

WorkingDirectory=/var/lib/etcd/

ExecStart=/usr/local/bin/etcd \

--cert-file=/etc/kubernetes/pki/etcd/etcd.crt \

--key-file=/etc/kubernetes/pki/etcd/etcd.key \

--trusted-ca-file=/etc/kubernetes/pki/ca.crt \

--peer-cert-file=/etc/kubernetes/pki/etcd/etcd.crt \

--peer-key-file=/etc/kubernetes/pki/etcd/etcd.key \

--peer-trusted-ca-file=/etc/kubernetes/pki/ca.crt \

--peer-client-cert-auth \

--client-cert-auth

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Distribute the service file

# cp etcd.service /usr/lib/systemd/system/

# for i in 01 02; do scp etcd.service root@control-plane$i:/usr/lib/systemd/system/ ;done

Start the etcd cluster

Run on each node:

# mkdir -p /var/lib/etcd/default.etcd ## ETCD_DATA_DIR

# systemctl daemon-reload

# systemctl start etcd.service ## note: start all three nodes at the same time
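One way to start the three members together from a single shell is to launch one background ssh per member and then `wait`. The service uses Type=notify, so `systemctl start` on a lone member can block until enough peers are up to elect a leader.

A sketch follows, assuming the passwordless root SSH from section 1.2. It defaults to a dry run (`DRY_RUN`, a hypothetical switch) that only records the commands.

```shell
#!/usr/bin/env bash
# Sketch: start etcd on all members at roughly the same time.
# DRY_RUN=1 (the default here) only records the commands instead of running ssh.
DRY_RUN=${DRY_RUN:-1}
HOSTS=(control-plane00 control-plane01 control-plane02)
LOG=$(mktemp)

for h in "${HOSTS[@]}"; do
  cmd=(ssh "root@$h" "mkdir -p /var/lib/etcd/default.etcd && systemctl daemon-reload && systemctl start etcd")
  if [ "$DRY_RUN" = 1 ]; then
    echo "${cmd[*]}" >> "$LOG" &
  else
    "${cmd[@]}" &          # one background ssh per member
  fi
done
wait                        # return once every start command has finished
cat "$LOG"
```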

# systemctl status etcd.service

● etcd.service - Etcd Server

Loaded: loaded (/usr/lib/systemd/system/etcd.service; disabled; vendor preset: disabled)

Active: active (running) since Tue 2021-09-07 21:12:25 CST; 40s ago

Main PID: 8174 (etcd)

Tasks: 6

Memory: 6.9M

CGroup: /system.slice/etcd.server

└─8174 /usr/local/bin/etcd --cert-file=/opt/etcd/ssl/etcd.pem --key-file=/opt/etcd/ssl/e...

...

Sep 07 21:12:25 k8s-etcd03 systemd[1]: Started Etcd Server.

...

Hint: Some lines were ellipsized, use -l to show in full.

# systemctl enable etcd.service

Created symlink from /etc/systemd/system/multi-user.target.wants/etcd.service to /usr/lib/systemd/system/etcd.service.

# etcdctl member list

20f12e8816b7ea84, started, etcd01, https://192.168.118.201:2380, https://192.168.118.201:2379, false

667f6d63cfe32627, started, etcd00, https://192.168.118.200:2380, https://192.168.118.200:2379, false

e1ebe4e6c5bebcca, started, etcd02, https://192.168.118.202:2380, https://192.168.118.202:2379, false

# netstat -lntp|grep etcd

tcp 0 0 192.168.118.200:2379 0.0.0.0:* LISTEN 8875/etcd

tcp 0 0 127.0.0.1:2379 0.0.0.0:* LISTEN 8875/etcd

tcp 0 0 192.168.118.200:2380 0.0.0.0:* LISTEN 8875/etcd
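The `etcdctl member list` above goes through the plain-HTTP listener on 127.0.0.1:2379. To check the cluster over its TLS client URLs instead, etcdctl needs the endpoint list plus the certificates from /etc/kubernetes/pki. This relies on etcd.crt carrying clientAuth in extendedKeyUsage, which the v3_ext section above sets.

In the sketch below, the `endpoints` helper is hypothetical. The etcdctl call is left as a comment because it only works on a node that holds those files.

```shell
#!/usr/bin/env bash
# Hypothetical helper: join host IPs into an etcd --endpoints list.
endpoints() {
  local port=$1 out="" h
  shift
  for h in "$@"; do
    out+="${out:+,}https://${h}:${port}"
  done
  printf '%s\n' "$out"
}

ETCD_ENDPOINTS=$(endpoints 2379 192.168.118.200 192.168.118.201 192.168.118.202)
echo "$ETCD_ENDPOINTS"

# On a control-plane node (not executed here):
# ETCDCTL_API=3 etcdctl --endpoints="$ETCD_ENDPOINTS" \
#   --cacert=/etc/kubernetes/pki/ca.crt \
#   --cert=/etc/kubernetes/pki/etcd/etcd.crt \
#   --key=/etc/kubernetes/pki/etcd/etcd.key \
#   endpoint health
```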

Deploy the Kubernetes components

# tar zxvf kubernetes-server-linux-amd64.tar.gz

# cd kubernetes/server/bin/

# ls

# cp kube-apiserver kube-controller-manager kube-scheduler kubectl kubelet kube-proxy /usr/local/bin/

# ls /usr/local/bin | grep kube

# for i in 01 02; do scp /usr/local/bin/kube* root@control-plane$i:/usr/local/bin/ ;done

# for i in 01 02 03; do scp kubelet kube-proxy root@node$i:/usr/local/bin/ ;done

Create the working directories

# mkdir -p /etc/kubernetes/pki # certificates for the Kubernetes components

# mkdir /var/log/kubernetes # logs for the Kubernetes components

Deploy kube-apiserver

Generate the kube-apiserver server certificate

1. Generate a 2048-bit kube-apiserver.key file:

# openssl genrsa -out kube-apiserver.key 2048

# ls

ca.crt ca.key kube-apiserver.key

2. Create a config file for generating the certificate signing request (CSR), saved as kube-apiserver-csr.conf:

[ req ]

default_bits = 2048

prompt = no

default_md = sha256

req_extensions = req_ext

distinguished_name = dn

[ dn ]

C = CN

ST = Fujian

L = Fuzhou

O = k8s

OU = system

CN = kube-apiserver

[ req_ext ]

subjectAltName = @alt_names

[ alt_names ]

DNS.1 = kubernetes

DNS.2 = kubernetes.default

DNS.3 = kubernetes.default.svc

DNS.4 = kubernetes.default.svc.cluster

DNS.5 = kubernetes.default.svc.cluster.local

IP.1 = 127.0.0.1

IP.2 = 192.168.118.200

IP.3 = 192.168.118.201

IP.4 = 192.168.118.202

IP.5 = 192.168.118.222

IP.6 = 10.96.0.1

[ v3_ext ]

authorityKeyIdentifier=keyid,issuer:always

basicConstraints=CA:FALSE

keyUsage=keyEncipherment,dataEncipherment

extendedKeyUsage=serverAuth,clientAuth

subjectAltName=@alt_names
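IP.6 = 10.96.0.1 is there because the apiserver gives the `kubernetes` Service the first address of `--service-cluster-ip-range`. That range is 10.96.0.0/16 in the configuration below, and the `kubectl get all` output later confirms ClusterIP 10.96.0.1.

If a different range is chosen, the SAN has to change with it. A small bash sketch computes that address; `first_ip` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: first host address of an IPv4 CIDR, i.e. the ClusterIP
# of the "kubernetes" Service for a given --service-cluster-ip-range.
first_ip() {
  local cidr=$1 addr prefix a b c d n mask
  addr=${cidr%/*}
  prefix=${cidr#*/}
  IFS=. read -r a b c d <<< "$addr"
  n=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  if [ "$prefix" -eq 0 ]; then mask=0; else mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF )); fi
  n=$(( (n & mask) + 1 ))        # network address + 1
  printf '%d.%d.%d.%d\n' $(( (n >> 24) & 255 )) $(( (n >> 16) & 255 )) $(( (n >> 8) & 255 )) $(( n & 255 ))
}

SVC_IP=$(first_ip 10.96.0.0/16)
echo "IP.6 should be $SVC_IP"
```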

3. Generate the CSR from the config file above:

# openssl req -new -key kube-apiserver.key -out kube-apiserver.csr -config kube-apiserver-csr.conf

# ls

ca.crt ca.key kube-apiserver-csr.conf kube-apiserver.csr kube-apiserver.key

4. Generate the server certificate from ca.crt, ca.key and kube-apiserver.csr:

# openssl x509 -req -in kube-apiserver.csr -CA ca.crt -CAkey ca.key \

-CAcreateserial -out kube-apiserver.crt -days 10000 \

-extensions v3_ext -extfile kube-apiserver-csr.conf

Signature ok

subject=/C=cn/ST=Fujian/L=Fuzhou/O=k8s/OU=system/CN=kube-apiserver

Getting CA Private Key

# ls kube-api*

kube-apiserver.crt kube-apiserver.csr kube-apiserver-csr.conf kube-apiserver.key

Distribute the certificates

# cp kube-apiserver.crt kube-apiserver.key /etc/kubernetes/pki/

# for i in { 01 02 }; do scp /etc/kubernetes/pki/kube-api* root@control-plane$i:/etc/kubernetes/pki/ ;done

Generate the token file

# cat > /etc/kubernetes/token.csv << EOF

$(head -c 16 /dev/urandom | od -An -t x | tr -d ' '),kubelet-bootstrap,10001,"system:kubelet-bootstrap"

EOF

# for i in 01 02; do scp /etc/kubernetes/token.csv root@control-plane$i:/etc/kubernetes/ ;done
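The token line follows the apiserver's static token file format: `token,user,uid,"group1,group2,..."`. The command above yields a 32-character hex token, since 16 random bytes are printed by `od -t x` as hex words.

A sketch that generates the line into a temporary file (`TOKEN_FILE`, a hypothetical name) and checks its shape:

```shell
#!/usr/bin/env bash
set -euo pipefail
# Sketch: write the bootstrap token line to a temporary file instead of
# /etc/kubernetes/token.csv, then check that it matches the expected format.
TOKEN_FILE=${TOKEN_FILE:-$(mktemp)}
TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' \n')
printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$TOKEN" > "$TOKEN_FILE"

# Static token file format: token,user,uid,"group1,group2,..."
if grep -Eq '^[0-9a-f]{32},kubelet-bootstrap,10001,"system:kubelet-bootstrap"$' "$TOKEN_FILE"; then
  echo "token file OK"
fi

# The same token is needed again for kubelet-bootstrap.kubeconfig later:
TOKEN_FROM_FILE=$(cut -d, -f1 "$TOKEN_FILE")
echo "$TOKEN_FROM_FILE"
```

On the real master, `cut -d, -f1 /etc/kubernetes/token.csv` retrieves the token the same way.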

Create the configuration file

# vim kube-apiserver-conf.sh

---

# Replace HOST0, HOST1 and HOST2 with IPs or resolvable host names

export HOST0=192.168.118.200

export HOST1=192.168.118.201

export HOST2=192.168.118.202

# Create temporary directories for the files that will be distributed to the other hosts

mkdir -p /tmp/${HOST0}/ /tmp/${HOST1}/ /tmp/${HOST2}/

APIHOSTS=(${HOST0} ${HOST1} ${HOST2})

for i in "${!APIHOSTS[@]}"; do

HOST=${APIHOSTS[$i]}

cat << EOF > /tmp/${HOST}/kube-apiserver.conf

KUBE_APISERVER_OPTS="--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \\

--anonymous-auth=false \\

--advertise-address=${HOST} \\

--allow-privileged=true \\

--authorization-mode=Node,RBAC \\

--bind-address=${HOST} \\

--client-ca-file=/etc/kubernetes/pki/ca.crt \\

--enable-bootstrap-token-auth \\

--etcd-cafile=/etc/kubernetes/pki/ca.crt \\

--etcd-certfile=/etc/kubernetes/pki/etcd/etcd.crt \\

--etcd-keyfile=/etc/kubernetes/pki/etcd/etcd.key \\

--etcd-servers=https://192.168.118.200:2379,https://192.168.118.201:2379,https://192.168.118.202:2379 \\

--enable-swagger-ui=true \\

--kubelet-client-certificate=/etc/kubernetes/pki/kube-apiserver.crt \\

--kubelet-client-key=/etc/kubernetes/pki/kube-apiserver.key \\

--insecure-port=0 \\

--runtime-config=api/all=true \\

--service-cluster-ip-range=10.96.0.0/16 \\

--service-node-port-range=30000-50000 \\

--secure-port=6443 \\

--service-account-key-file=/etc/kubernetes/pki/ca.key \\

--service-account-signing-key-file=/etc/kubernetes/pki/ca.key \\

--service-account-issuer=https://kubernetes.default.svc.cluster.local \\

--tls-cert-file=/etc/kubernetes/pki/kube-apiserver.crt \\

--tls-private-key-file=/etc/kubernetes/pki/kube-apiserver.key \\

--token-auth-file=/etc/kubernetes/token.csv \\

--proxy-client-cert-file=/etc/kubernetes/pki/kube-apiserver.crt \\

--proxy-client-key-file=/etc/kubernetes/pki/kube-apiserver.key \\

--requestheader-allowed-names=front-proxy-client \\

--requestheader-client-ca-file=/etc/kubernetes/pki/ca.crt \\

--requestheader-extra-headers-prefix=X-Remote-Extra- \\

--requestheader-group-headers=X-Remote-Group \\

--requestheader-username-headers=X-Remote-User \\

--apiserver-count=2 \\

--audit-log-maxage=30 \\

--audit-log-maxbackup=3 \\

--audit-log-maxsize=100 \\

--audit-log-path=/var/log/kube-apiserver-audit.log \\

--event-ttl=1h \\

--alsologtostderr=true \\

--logtostderr=false \\

--log-dir=/var/log/kubernetes \\

--v=4"

EOF

scp /tmp/${HOST}/kube-apiserver.conf root@${HOST}:/opt/kubernetes/

done

----

# sh kube-apiserver-conf.sh

# cat /opt/kubernetes/kube-apiserver.conf

KUBE_APISERVER_OPTS="--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \

--anonymous-auth=false \

--advertise-address=192.168.118.200 \

--allow-privileged=true \

--authorization-mode=Node,RBAC \

--bind-address=192.168.118.200 \

--client-ca-file=/etc/kubernetes/pki/ca.crt \

--enable-bootstrap-token-auth \

--etcd-cafile=/etc/kubernetes/pki/ca.crt \

--etcd-certfile=/etc/kubernetes/pki/etcd/etcd.crt \

--etcd-keyfile=/etc/kubernetes/pki/etcd/etcd.key \

--etcd-servers=https://192.168.118.200:2379,https://192.168.118.201:2379,https://192.168.118.202:2379 \

--enable-swagger-ui=true \

--kubelet-client-certificate=/etc/kubernetes/pki/kube-apiserver.crt \

--kubelet-client-key=/etc/kubernetes/pki/kube-apiserver.key \

--insecure-port=0 \

--runtime-config=api/all=true \

--service-cluster-ip-range=10.96.0.0/16 \

--service-node-port-range=30000-50000 \

--secure-port=6443 \

--service-account-key-file=/etc/kubernetes/pki/ca.key \

--service-account-signing-key-file=/etc/kubernetes/pki/ca.key \

--service-account-issuer=https://kubernetes.default.svc.cluster.local \

--tls-cert-file=/etc/kubernetes/pki/kube-apiserver.crt \

--tls-private-key-file=/etc/kubernetes/pki/kube-apiserver.key \

--token-auth-file=/etc/kubernetes/token.csv \

--proxy-client-cert-file=/etc/kubernetes/pki/kube-apiserver.crt \

--proxy-client-key-file=/etc/kubernetes/pki/kube-apiserver.key \

--requestheader-allowed-names=front-proxy-client \

--requestheader-client-ca-file=/etc/kubernetes/pki/ca.crt \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--requestheader-group-headers=X-Remote-Group \

--requestheader-username-headers=X-Remote-User \

--apiserver-count=2 \

--audit-log-maxage=30 \

--audit-log-maxbackup=3 \

--audit-log-maxsize=100 \

--audit-log-path=/var/log/kube-apiserver-audit.log \

--event-ttl=1h \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=4"
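In kube-apiserver-conf.sh, most option lines end in `\\`, but a few end in a single `\`. Examples are --allow-privileged, --enable-bootstrap-token-auth and --secure-port.

Inside an unquoted heredoc the two forms behave differently:
- `\\` is written out as a literal backslash.
- A lone `\` at the end of a line is removed together with the newline, so that option is joined onto the next one.

The options still end up inside the quoted value, but only `\\` keeps one option per line as in the sample output above. A small bash demonstration (`DEMO_FILE` is a hypothetical name):

```shell
#!/usr/bin/env bash
# In an unquoted heredoc, "\\" yields a literal "\" while a single "\" at the
# end of a line is removed together with the newline, joining the two lines.
DEMO_FILE=$(mktemp)
cat > "$DEMO_FILE" <<EOF
--allow-privileged=true \\
--secure-port=6443 \
--authorization-mode=Node,RBAC
EOF
cat "$DEMO_FILE"
LINE_COUNT=$(wc -l < "$DEMO_FILE")
```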

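Note: --service-account-signing-key-file=/etc/kubernetes/pki/ca.key \ # required for version 1.20 and later

--service-account-issuer=https://kubernetes.default.svc.cluster.local \ # required for version 1.20 and later

--logtostderr: log to standard error instead of to files (false here, so logs go to --log-dir)

--v: log verbosity level

--log-dir: log directory

--etcd-servers: etcd cluster endpoints

--bind-address: listen address

--secure-port: HTTPS secure port

--advertise-address: address advertised to the cluster

--allow-privileged: allow privileged containers

--service-cluster-ip-range: virtual IP range for Services

--enable-admission-plugins: admission control plugins

--authorization-mode: authorization modes; enables RBAC and Node authorization (nodes managing their own objects)

--enable-bootstrap-token-auth: enable bootstrap token authentication for TLS bootstrapping

--token-auth-file: bootstrap token file

--service-node-port-range: port range allocated to NodePort Services

--kubelet-client-xxx: client certificate the apiserver uses to access kubelets

--tls-xxx-file: apiserver HTTPS certificate

--etcd-xxxfile: certificates for connecting to the etcd cluster

--audit-log-xxx: audit log settings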

Create the systemd service file

# vim kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

EnvironmentFile=-/opt/kubernetes/kube-apiserver.conf

ExecStart=/usr/local/bin/kube-apiserver $KUBE_APISERVER_OPTS

Restart=on-failure

RestartSec=5

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Sync the files to the other control-plane nodes

# cp kube-apiserver.service /usr/lib/systemd/system/

# for i in 01 02; do scp kube-apiserver.service root@control-plane$i:/usr/lib/systemd/system/ ;done

Start the service

# systemctl daemon-reload

# systemctl start kube-apiserver && systemctl enable kube-apiserver && systemctl status kube-apiserver

# netstat -nltup|grep kube-api

tcp 0 0 192.168.118.200:6443 0.0.0.0:* LISTEN 15149/kube-apiserve

Deploy the kubectl command-line tool on the control-plane nodes

Create the certificate signing request

Generate the admin client certificate

1. Generate a 2048-bit admin.key file:

# openssl genrsa -out admin.key 2048

2. Generate the certificate signing request:

# openssl req -new -key admin.key -out admin.csr -subj "/C=CN/ST=Fujian/L=Fuzhou/O=system:masters/OU=system/CN=kubernetes-admin"

3. Sign admin.csr with ca.crt and ca.key to produce the client certificate:

# openssl ca -days 10000 -in admin.csr -out admin.crt -cert ca.crt -keyfile ca.key

# ls admin*

admin.crt admin.csr admin.key

# cp admin.crt admin.key /etc/kubernetes/pki/

# for i in 01 02; do scp /etc/kubernetes/pki/admin* root@control-plane$i:/etc/kubernetes/pki/ ;done

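Notes:

① O is system:masters, so when kube-apiserver receives this certificate it sets the request's Group to system:masters;

② The predefined ClusterRoleBinding cluster-admin binds Group system:masters to ClusterRole cluster-admin, which grants access to all APIs;

③ This certificate is used by kubectl only as a client certificate, so it needs no hosts (SAN) entries;

Explanation:

kube-apiserver uses RBAC to authorize requests from clients such as kubelet, kube-proxy and Pods;

kube-apiserver predefines some RoleBindings for RBAC. For example, cluster-admin binds Group system:masters to ClusterRole cluster-admin, which grants permission to call every kube-apiserver API. O sets the certificate's Group to system:masters.

When kubectl uses this certificate to access kube-apiserver, authentication succeeds because the certificate is signed by the CA. Because the certificate's group is the pre-authorized system:masters, kubectl is also granted access to all APIs;

Note:

This admin certificate is used to generate the administrator kubeconfig. RBAC is now the generally recommended way to control roles and permissions in Kubernetes. Kubernetes takes the certificate's CN field as the User and its O field as the Group. "O" must be exactly system:masters, otherwise the later kubectl create clusterrolebinding command fails.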

Error when creating the client certificate:

# openssl ca -days 10000 -in admin.csr -out admin.crt -cert ca.crt -keyfile ca.key

Using configuration from /etc/pki/tls/openssl.cnf

/etc/pki/CA/index.txt: No such file or directory

unable to open '/etc/pki/CA/index.txt'

140627427481488:error:02001002:system library:fopen:No such file or directory:bss_file.c:402:fopen('/etc/pki/CA/index.txt','r')

140627427481488:error:20074002:BIO routines:FILE_CTRL:system lib:bss_file.c:404:

Fix: create the required files under /etc/pki/CA:

# cd /etc/pki/CA

# touch index.txt

# touch serial

# echo "01" > serial

For certificate issues, see: https://blog.csdn.net/huang714/article/details/109091615
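Alternatively, the admin certificate can be signed with `openssl x509 -req`, like the etcd and kube-apiserver certificates above, which needs no /etc/pki/CA database at all. In the sketch below, a throwaway CA is created in a scratch directory (`WORK_DIR`, hypothetical) only so the example runs on its own; on the master, use /etc/kubernetes/pki/ca.crt and ca.key instead. The client-only extension file (`admin-ext.conf`) is an assumption introduced here, not a step from the article.

```shell
#!/usr/bin/env bash
set -euo pipefail
# Sketch: sign the admin client certificate without "openssl ca".
WORK_DIR=${WORK_DIR:-$(mktemp -d)}
cd "$WORK_DIR"

# Throwaway CA so the example is self-contained (use the real ca.crt/ca.key on the master).
openssl genrsa -out ca.key 2048 2>/dev/null
openssl req -x509 -new -nodes -key ca.key -subj "/CN=kubernetes" -days 10000 -out ca.crt

# Same key and subject as steps 1-2 above.
openssl genrsa -out admin.key 2048 2>/dev/null
openssl req -new -key admin.key -out admin.csr \
  -subj "/C=CN/ST=Fujian/L=Fuzhou/O=system:masters/OU=system/CN=kubernetes-admin"

# Assumed client-only extensions.
cat > admin-ext.conf <<'EOF'
basicConstraints=CA:FALSE
keyUsage=critical,digitalSignature,keyEncipherment
extendedKeyUsage=clientAuth
EOF
openssl x509 -req -in admin.csr -CA ca.crt -CAkey ca.key -CAcreateserial \
  -out admin.crt -days 10000 -extfile admin-ext.conf 2>/dev/null

# kube-apiserver maps CN -> user (kubernetes-admin) and O -> group (system:masters).
openssl x509 -noout -subject -in admin.crt
openssl verify -CAfile ca.crt admin.crt
```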

Create the admin.conf file for kubectl

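admin.conf is kubectl's configuration file. It contains everything needed to reach the apiserver: the apiserver address, the CA certificate and kubectl's own client certificate.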

## Set the cluster parameters. --server=${KUBE_APISERVER} specifies the IP and port; the haproxy VIP and port are used here. Without a haproxy proxy, use the real apiserver IP and port, e.g. https://192.168.118.200:6443

KUBECONFIG=/etc/kubernetes/admin.conf kubectl config set-cluster kubernetes --server=https://192.168.118.222:8443 --certificate-authority /etc/kubernetes/pki/ca.crt --embed-certs

## Set the client credentials

KUBECONFIG=/etc/kubernetes/admin.conf kubectl config set-credentials kubernetes-admin --client-key /etc/kubernetes/pki/admin.key --client-certificate /etc/kubernetes/pki/admin.crt --embed-certs

## Set the context

KUBECONFIG=/etc/kubernetes/admin.conf kubectl config set-context kubernetes-admin@kubernetes --cluster kubernetes --user kubernetes-admin

## Set the default context

KUBECONFIG=/etc/kubernetes/admin.conf kubectl config use-context kubernetes-admin@kubernetes

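## Grant access to the kubelet API (not yet run at this point). Note: kube-apiserver authenticates to kubelets with kube-apiserver.crt (--kubelet-client-certificate), whose CN is kube-apiserver. That CN, not kubernetes-admin, is the user the apiserver itself needs this permission for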

kubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes-admin

注:

user: kubernetes-admin,取值于前面证书的CN

–embed-certs :将 ca.pem 和 admin.pem 证书内容嵌入到生成的kubectl.kubeconfig 文件中(不加时,写入的是证书文件路径);

验证下admin.conf文件

# cat /etc/kubernetes/admin.conf

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: LS0tL.....tLS0tCg==
    server: https://192.168.118.222:8443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kubernetes-admin
  name: kubernetes-admin@kubernetes
current-context: kubernetes-admin@kubernetes
kind: Config
preferences: {}
users:
- name: kubernetes-admin
  user:
    client-certificate-data: LS0tLS....tLS0tCg==
    client-key-data: LS0tL.....tLQo=

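Distribute the file to the other control-plane nodes

Note: kubectl reads /root/.kube/config by default. For this binary installation, the file is therefore copied there instead of being used from /etc/kubernetes/admin.conf as in a kubeadm installation.

# mkdir -p /root/.kube/ ## run on every control-plane node

# for i in 00 01 02; do scp /etc/kubernetes/admin.conf root@control-plane$i:/root/.kube/config ;done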
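The `scp` loops in this article are easy to get subtly wrong. In bash, `for i in { 00 01 02 }` does not use brace expansion. The literal words `{` and `}` are iterated as well, which produces two failing `scp` calls. Below is a minimal bash sketch of a hypothetical `distribute` helper. Its `DRY_RUN` switch is also an assumption, added to preview the commands.

```shell
#!/usr/bin/env bash
# Sketch: copy one file to the same destination on several hosts.
# $1: source file  $2: destination path  $3: host name prefix  $4...: suffixes
# Set DRY_RUN=1 to print the scp commands instead of executing them.
distribute() {
  local src="$1" dest="$2" prefix="$3"; shift 3
  local i
  for i in "$@"; do
    if [ -n "${DRY_RUN:-}" ]; then
      echo scp "$src" "root@${prefix}${i}:${dest}"
    else
      scp "$src" "root@${prefix}${i}:${dest}"
    fi
  done
}

# The pitfall: this prints five lines, "{" and "}" included.
for i in { 00 01 02 }; do echo "$i"; done

# Usage, equivalent to the admin.conf loop above:
# distribute /etc/kubernetes/admin.conf /root/.kube/config control-plane 00 01 02
```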

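Check cluster component status

After the steps above, kubectl can talk to kube-apiserver. Run the following on all three control-plane nodes. controller-manager and scheduler have not been deployed yet at this point, so they are expected to show as Unhealthy.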

# kubectl cluster-info

Kubernetes control plane is running at https://192.168.118.222:8443

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

# kubectl get cs ##kubectl get componentstatuses

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

etcd-0 Healthy {"health":"true","reason":""}

etcd-1 Healthy {"health":"true","reason":""}

etcd-2 Healthy {"health":"true","reason":""}

controller-manager Unhealthy Get "http://127.0.0.1:10252/healthz": dial tcp 127.0.0.1:10252: connect: connection refused

scheduler Unhealthy Get "http://127.0.0.1:10251/healthz": dial tcp 127.0.0.1:10251: connect: connection refused

# kubectl get all --all-namespaces

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3h5m

Deploy kube-controller-manager

Generate the kube-controller-manager certificate

1. Generate a 2048-bit key file, kube-controller-manager.key:

# openssl genrsa -out kube-controller-manager.key 2048

# ls kube-controller*

kube-controller-manager.key

2. Create a configuration file for generating the certificate signing request (CSR) and save it as kube-controller-manager-csr.conf:

[ req ]

default_bits = 2048

prompt = no

default_md = sha256

req_extensions = req_ext

distinguished_name = dn

[ dn ]

C = CN

ST = Fujian

L = Fuzhou

O = system:kube-controller-manager

OU = system

CN = system:kube-controller-manager

[ req_ext ]

subjectAltName = @alt_names

[ alt_names ]

IP.1 = 127.0.0.1

IP.2 = 192.168.118.200

IP.3 = 192.168.118.201

IP.4 = 192.168.118.202

[ v3_ext ]

authorityKeyIdentifier=keyid,issuer:always

basicConstraints=CA:FALSE

keyUsage=keyEncipherment,dataEncipherment

extendedKeyUsage=serverAuth,clientAuth

subjectAltName=@alt_names

Note:

The alt_names list contains the IPs of all kube-controller-manager nodes.

CN and O are both system:kube-controller-manager. The built-in Kubernetes ClusterRoleBinding system:kube-controller-manager grants kube-controller-manager the permissions it needs.
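The kube-controller-manager and kube-scheduler CSR configs differ only in the component name, so a bash sketch can render both from one template. `gen_csr_conf` is a hypothetical helper. It assumes the same subject fields and SAN IPs as the file above.

```shell
#!/usr/bin/env bash
# Sketch: render the CSR config above for a given control-plane component.
# $1: component name, e.g. kube-controller-manager or kube-scheduler
gen_csr_conf() {
  local component="$1"
  cat <<EOF
[ req ]
default_bits = 2048
prompt = no
default_md = sha256
req_extensions = req_ext
distinguished_name = dn

[ dn ]
C = CN
ST = Fujian
L = Fuzhou
O = system:${component}
OU = system
CN = system:${component}

[ req_ext ]
subjectAltName = @alt_names

[ alt_names ]
IP.1 = 127.0.0.1
IP.2 = 192.168.118.200
IP.3 = 192.168.118.201
IP.4 = 192.168.118.202

[ v3_ext ]
authorityKeyIdentifier=keyid,issuer:always
basicConstraints=CA:FALSE
keyUsage=keyEncipherment,dataEncipherment
extendedKeyUsage=serverAuth,clientAuth
subjectAltName=@alt_names
EOF
}

# Usage:
# gen_csr_conf kube-scheduler > kube-scheduler-csr.conf
```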

3. Generate the certificate signing request from the configuration file above:

# openssl req -new -key kube-controller-manager.key -out kube-controller-manager.csr -config kube-controller-manager-csr.conf

# ls kube-controller-manager*

kube-controller-manager-csr.conf kube-controller-manager.csr kube-controller-manager.key

4. Sign the certificate using ca.crt, ca.key and kube-controller-manager.csr:

# openssl x509 -req -in kube-controller-manager.csr -CA ca.crt -CAkey ca.key \

-CAcreateserial -out kube-controller-manager.crt -days 10000 \

-extensions v3_ext -extfile kube-controller-manager-csr.conf

Signature ok

subject=/C=CN/ST=Fujian/L=Fuzhou/O=system:kube-controller-manager/OU=system/CN=system:kube-controller-manager

Getting CA Private Key

# ls kube-controller*

kube-controller-manager.crt kube-controller-manager-csr.conf

kube-controller-manager.csr kube-controller-manager.key

Distribute the certificates

# cp kube-controller-manager.crt kube-controller-manager.key /etc/kubernetes/pki/

# for i in 01 02; do scp /etc/kubernetes/pki/kube-controller-manager* root@control-plane$i:/etc/kubernetes/pki/ ;done

Create the kubeconfig for kube-controller-manager

## Set the cluster parameters

KUBECONFIG=/etc/kubernetes/controller-manager.conf kubectl config set-cluster kubernetes --server=https://192.168.118.222:8443 --certificate-authority /etc/kubernetes/pki/ca.crt --embed-certs

## Set the client authentication parameters

KUBECONFIG=/etc/kubernetes/controller-manager.conf kubectl config set-credentials system:kube-controller-manager --client-key /etc/kubernetes/pki/kube-controller-manager.key --client-certificate /etc/kubernetes/pki/kube-controller-manager.crt --embed-certs

## Set the context parameters

KUBECONFIG=/etc/kubernetes/controller-manager.conf kubectl config set-context system:kube-controller-manager@kubernetes --cluster kubernetes --user system:kube-controller-manager

## Set the default context

KUBECONFIG=/etc/kubernetes/controller-manager.conf kubectl config use-context system:kube-controller-manager@kubernetes

# for i in 01 02; do scp /etc/kubernetes/controller-manager.conf root@control-plane$i:/etc/kubernetes/ ;done

Create the configuration file

# vim kube-controller-manager.conf

KUBE_CONTROLLER_MANAGER_OPTS="--bind-address=127.0.0.1 \

--kubeconfig=/etc/kubernetes/controller-manager.conf \

--service-cluster-ip-range=10.96.0.0/16 \

--cluster-name=kubernetes \

--cluster-signing-cert-file=/etc/kubernetes/pki/ca.crt \

--cluster-signing-key-file=/etc/kubernetes/pki/ca.key \

--allocate-node-cidrs=true \

--cluster-cidr=172.16.0.0/16 \

--experimental-cluster-signing-duration=175200h \

--root-ca-file=/etc/kubernetes/pki/ca.crt \

--service-account-private-key-file=/etc/kubernetes/pki/ca.key \

--leader-elect=true \

--feature-gates=RotateKubeletServerCertificate=true \

--controllers=*,bootstrapsigner,tokencleaner \

--horizontal-pod-autoscaler-use-rest-clients=true \

--horizontal-pod-autoscaler-sync-period=10s \

--tls-cert-file=/etc/kubernetes/pki/kube-controller-manager.crt \

--tls-private-key-file=/etc/kubernetes/pki/kube-controller-manager.key \

--use-service-account-credentials=true \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=2"

Create the systemd unit file

# vim kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/opt/kubernetes/kube-controller-manager.conf

ExecStart=/usr/local/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

Sync the files to the other control-plane nodes

# cp kube-controller-manager.conf /opt/kubernetes/

# cp kube-controller-manager.service /usr/lib/systemd/system/

# for i in 01 02; do scp kube-controller-manager.conf root@control-plane$i:/opt/kubernetes/ ;done

# for i in 01 02; do scp kube-controller-manager.service root@control-plane$i:/usr/lib/systemd/system/ ;done

Start the service (on every control-plane node)

# systemctl daemon-reload && systemctl start kube-controller-manager.service && systemctl enable kube-controller-manager.service && systemctl status kube-controller-manager.service

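Deploy kube-scheduler

Generate the kube-scheduler certificate

1. Generate a 2048-bit key file, kube-scheduler.key:

# openssl genrsa -out kube-scheduler.key 2048

2. Create a configuration file for generating the certificate signing request (CSR) and save it as kube-scheduler-csr.conf: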

[ req ]

default_bits = 2048

prompt = no

default_md = sha256

req_extensions = req_ext

distinguished_name = dn

[ dn ]

C = CN

ST = Fujian

L = Fuzhou

O = system:kube-scheduler

OU = system

CN = system:kube-scheduler

[ req_ext ]

subjectAltName = @alt_names

[ alt_names ]

IP.1 = 127.0.0.1

IP.2 = 192.168.118.200

IP.3 = 192.168.118.201

IP.4 = 192.168.118.202

[ v3_ext ]

authorityKeyIdentifier=keyid,issuer:always

basicConstraints=CA:FALSE

keyUsage=keyEncipherment,dataEncipherment

extendedKeyUsage=serverAuth,clientAuth

subjectAltName=@alt_names

Note:

The alt_names list contains the IPs of all kube-scheduler nodes.

CN and O are both system:kube-scheduler. The built-in Kubernetes ClusterRoleBinding system:kube-scheduler grants kube-scheduler the permissions it needs.

3. Generate the certificate signing request from the configuration file above:

# openssl req -new -key kube-scheduler.key -out kube-scheduler.csr -config kube-scheduler-csr.conf

# ls kube-scheduler*

4. Sign the certificate using ca.crt, ca.key and kube-scheduler.csr:

# openssl x509 -req -in kube-scheduler.csr -CA ca.crt -CAkey ca.key \

-CAcreateserial -out kube-scheduler.crt -days 10000 \

-extensions v3_ext -extfile kube-scheduler-csr.conf

Signature ok

subject=/C=CN/ST=Fujian/L=Fuzhou/O=system:kube-scheduler/OU=system/CN=system:kube-scheduler

Getting CA Private Key

# ls kube-scheduler*

kube-scheduler.crt kube-scheduler.csr kube-scheduler-csr.conf kube-scheduler.key

5. Distribute the certificates

# cp kube-scheduler.crt kube-scheduler.key /etc/kubernetes/pki/

# for i in 01 02; do scp /etc/kubernetes/pki/kube-scheduler* root@control-plane$i:/etc/kubernetes/pki/ ;done

Create the kubeconfig for kube-scheduler

## Set the cluster parameters

KUBECONFIG=/etc/kubernetes/scheduler.conf kubectl config set-cluster kubernetes --server=https://192.168.118.222:8443 --certificate-authority /etc/kubernetes/pki/ca.crt --embed-certs

## Set the client authentication parameters

KUBECONFIG=/etc/kubernetes/scheduler.conf kubectl config set-credentials system:kube-scheduler --client-key /etc/kubernetes/pki/kube-scheduler.key --client-certificate /etc/kubernetes/pki/kube-scheduler.crt --embed-certs

## Set the context parameters

KUBECONFIG=/etc/kubernetes/scheduler.conf kubectl config set-context system:kube-scheduler@kubernetes --cluster kubernetes --user system:kube-scheduler

## Set the default context

KUBECONFIG=/etc/kubernetes/scheduler.conf kubectl config use-context system:kube-scheduler@kubernetes

# for i in 01 02; do scp /etc/kubernetes/scheduler.conf root@control-plane$i:/etc/kubernetes/ ;done

Create the configuration file

# vim kube-scheduler.conf

KUBE_SCHEDULER_OPTS="--bind-address=127.0.0.1 \

--kubeconfig=/etc/kubernetes/scheduler.conf \

--leader-elect=true \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=2"

Create the systemd unit file

# vim kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/opt/kubernetes/kube-scheduler.conf

ExecStart=/usr/local/bin/kube-scheduler $KUBE_SCHEDULER_OPTS

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

Sync the files to the other control-plane nodes

# cp kube-scheduler.conf /opt/kubernetes/

# cp kube-scheduler.service /usr/lib/systemd/system/

# for i in 01 02; do scp kube-scheduler.conf root@control-plane$i:/opt/kubernetes/ ;done

# for i in 01 02; do scp kube-scheduler.service root@control-plane$i:/usr/lib/systemd/system/ ;done

Start the service (on every control-plane node)

# systemctl daemon-reload && systemctl start kube-scheduler && systemctl enable kube-scheduler && systemctl status kube-scheduler

---------------------------

# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Unhealthy Get "http://127.0.0.1:10251/healthz": dial tcp 127.0.0.1:10251: connect: connection refused

controller-manager Healthy ok

etcd-0 Healthy {"health":"true","reason":""}

etcd-1 Healthy {"health":"true","reason":""}

etcd-2 Healthy {"health":"true","reason":""}

Note: the scheduler reported Unhealthy. Restarting it fixed the problem.

------------------

# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true","reason":""}

etcd-1 Healthy {"health":"true","reason":""}

etcd-2 Healthy {"health":"true","reason":""}
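Instead of reading the table by eye, a small bash filter can list the components that are not Healthy. `unhealthy_components` is a hypothetical name. It assumes the column layout shown above, with STATUS in the second column, and it reads `kubectl get cs` output on stdin. The deprecation warning is printed on stderr.

```shell
#!/usr/bin/env bash
# Sketch: print components that `kubectl get cs` does not report as Healthy.
# Reads the table on stdin; row 1 is the header, column 2 is STATUS.
unhealthy_components() {
  awk 'NR > 1 && $2 != "Healthy" { print $1 }'
}

# Usage:
# kubectl get cs 2>/dev/null | unhealthy_components
```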

Deploy kubelet (the following steps are performed on master1)

Create kubelet-bootstrap.kubeconfig (saved as kubelet-bootstrap.conf)

# BOOTSTRAP_TOKEN=$(awk -F "," '{print $1}' /etc/kubernetes/token.csv)

Set the cluster parameters

# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.crt --embed-certs=true --server=https://192.168.118.222:8443 --kubeconfig=kubelet-bootstrap.conf

Set the client authentication parameters

# kubectl config set-credentials kubelet-bootstrap --token=${BOOTSTRAP_TOKEN} --kubeconfig=kubelet-bootstrap.conf

Set the context parameters

# kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap --kubeconfig=kubelet-bootstrap.conf

Set the default context

# kubectl config use-context default --kubeconfig=kubelet-bootstrap.conf

Create the role binding

# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

# for i in 00 01 02; do scp kubelet-bootstrap.conf root@control-plane$i:/etc/kubernetes/ ;done

# for i in 00 01 02; do scp kubelet-bootstrap.conf root@node$i:/etc/kubernetes/ ;done

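Note:

① The kubeconfig holds a token instead of a certificate. The client certificate is issued later through controller-manager.

② A bootstrap token created with kubeadm token create is valid for 1 day by default. After it expires it can no longer be used, and kube-controller-manager's tokencleaner removes it (if that controller is enabled). The static token in token.csv used here does not expire this way.

③ When kube-apiserver receives a kubelet's bootstrap token, it sets the request's user to system:bootstrap:<token-id> and its group to system:bootstrappers. With the static token.csv, the user is the one named in the file (kubelet-bootstrap, as the REQUESTOR column further down shows).

Secrets associated with the tokens: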

# kubectl get secrets -n kube-system

NAME TYPE DATA AGE

attachdetach-controller-token-rwl9t kubernetes.io/service-account-token 3 17h

bootstrap-signer-token-92hm9 kubernetes.io/service-account-token 3 17h

.......
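The `BOOTSTRAP_TOKEN` line at the start of this step takes the first comma-separated field of token.csv. The bash sketch below reproduces that on a throwaway file. It assumes token.csv follows kube-apiserver's static token file format (token,user,uid,"group1,group2"). The token value and uid in it are made-up placeholders. The last lines show one common way to generate a random 32-character hex token.

```shell
#!/usr/bin/env bash
# Sketch: what the BOOTSTRAP_TOKEN line extracts from token.csv.
# Assumed format: token,user,uid,"group1,group2" (placeholder values below).
demo_dir=$(mktemp -d)
cat > "$demo_dir/token.csv" <<'EOF'
0123456789abcdef0123456789abcdef,kubelet-bootstrap,10001,"system:kubelet-bootstrap"
EOF

# Same awk expression as in the article: field 1 is the token itself.
BOOTSTRAP_TOKEN=$(awk -F "," '{print $1}' "$demo_dir/token.csv")
echo "$BOOTSTRAP_TOKEN"    # 0123456789abcdef0123456789abcdef

# One common way to generate a random token: 16 random bytes as hex.
new_token=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')
echo "${#new_token}"       # 32

rm -rf "$demo_dir"
```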

Create the configuration file

This was initially based on /var/lib/kubelet/config.yaml from a kubeadm installation.

# vim kubelet-config.yaml

apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 0s
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.crt
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 0s
    cacheUnauthorizedTTL: 0s
cgroupDriver: systemd
clusterDNS:
- 10.96.0.10
clusterDomain: cluster.local
#cpuManagerReconcilePeriod: 0s
#evictionPressureTransitionPeriod: 0s
#fileCheckFrequency: 0s
#healthzBindAddress: 127.0.0.1
#healthzPort: 10248
#httpCheckFrequency: 0s
#imageMinimumGCAge: 0s
#logging: {}
#memorySwap: {}
#nodeStatusReportFrequency: 0s
#nodeStatusUpdateFrequency: 0s
#rotateCertificates: true
#runtimeRequestTimeout: 0s
#shutdownGracePeriod: 0s
#shutdownGracePeriodCriticalPods: 0s
#staticPodPath: /etc/kubernetes/manifests
#streamingConnectionIdleTimeout: 0s
#syncFrequency: 0s
volumeStatsAggPeriod: 0s
# ----- worker nodes
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
maxOpenFiles: 1000000
maxPods: 110

# cp kubelet-config.yaml /opt/kubernetes/

# for i in 01 02; do scp /opt/kubernetes/kubelet-config.yaml root@control-plane$i:/opt/kubernetes/ ;done

# for i in 00 01 02; do scp /opt/kubernetes/kubelet-config.yaml root@node$i:/opt/kubernetes/ ;done

Create the systemd unit file

# vim kubelet.service

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/kubernetes/kubernetes

After=docker.service

Requires=docker.service

[Service]

WorkingDirectory=/var/lib/kubelet

ExecStart=/usr/local/bin/kubelet \

--bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.conf \

--cert-dir=/etc/kubernetes/pki \

--kubeconfig=/etc/kubernetes/kubelet.conf \

--config=/opt/kubernetes/kubelet-config.yaml \

--network-plugin=cni \

--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.5 \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

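Note:

--hostname-override: the node's display name, which must be unique in the cluster (not set in the unit above, so the host name is used)

--network-plugin: enables CNI

--kubeconfig: this file does not need to exist yet; it is generated automatically after bootstrap and then used to connect to the apiserver

--bootstrap-kubeconfig: used on first start to request a certificate from the apiserver

--config: the configuration parameter file

--cert-dir: the directory where kubelet's certificates are generated

--pod-infra-container-image: the image of the container that manages the Pod network (pause)

Sync the files to every node

# for i in 00 01 02; do scp kubelet.service root@control-plane$i:/usr/lib/systemd/system/ ;done

# for i in 00 01 02; do scp kubelet.service root@node$i:/usr/lib/systemd/system/ ;done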

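Start the service

Run on each node where kubelet is installed (worker and control-plane nodes):

# mkdir -p /var/lib/kubelet

# systemctl daemon-reload && systemctl enable kubelet && systemctl start kubelet && systemctl status kubelet

Once the bootstrap request has been sent successfully, kubelet starts generating its client certificate. The certificate directory then contains:

kubelet-client.key.tmp kubelet.crt kubelet.key

Approve the kubelet CSRs so the nodes join the cluster

Manual approval

After confirming that kubelet started successfully, approve the bootstrap requests on a master. The following command shows the CSRs sent by each node: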

# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

node-csr-G6xwUd8EAbZykxTvJi8Z1UEZeYiE288Kfi2p-Xatp1w 6m42s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

node-csr-GYMVN3TrU-PYKeoWNj0CMUhwzNj_duCttJhv7cWDRgY 92s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

node-csr-MILV0tLUzDuHSokTQVNyc46AfVx2XM_Hmh1BoDISzJk 8m9s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

node-csr-Sy0S-C59gyPeLNX_fvPd6frzRAvjYjlNEFd-1QWrf9I 7m34s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

# kubectl certificate approve node-csr-G6xwUd8EAbZykxTvJi8Z1UEZeYiE288Kfi2p-Xatp1w

certificatesigningrequest.certificates.k8s.io/node-csr-G6xwUd8EAbZykxTvJi8Z1UEZeYiE288Kfi2p-Xatp1w approved

# kubectl certificate approve node-csr-GYMVN3TrU-PYKeoWNj0CMUhwzNj_duCttJhv7cWDRgY

certificatesigningrequest.certificates.k8s.io/node-csr-GYMVN3TrU-PYKeoWNj0CMUhwzNj_duCttJhv7cWDRgY approved

# kubectl certificate approve node-csr-MILV0tLUzDuHSokTQVNyc46AfVx2XM_Hmh1BoDISzJk

certificatesigningrequest.certificates.k8s.io/node-csr-MILV0tLUzDuHSokTQVNyc46AfVx2XM_Hmh1BoDISzJk approved

# kubectl certificate approve node-csr-Sy0S-C59gyPeLNX_fvPd6frzRAvjYjlNEFd-1QWrf9I

certificatesigningrequest.certificates.k8s.io/node-csr-Sy0S-C59gyPeLNX_fvPd6frzRAvjYjlNEFd-1QWrf9I approved

# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

node-csr-G6xwUd8EAbZykxTvJi8Z1UEZeYiE288Kfi2p-Xatp1w 8m36s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued

node-csr-GYMVN3TrU-PYKeoWNj0CMUhwzNj_duCttJhv7cWDRgY 3m26s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued

node-csr-MILV0tLUzDuHSokTQVNyc46AfVx2XM_Hmh1BoDISzJk 10m kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued

node-csr-Sy0S-C59gyPeLNX_fvPd6frzRAvjYjlNEFd-1QWrf9I 9m28s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued

# kubectl get nodes

NAME STATUS ROLES AGE VERSION

control-plane00 NotReady <none> 13m v1.21.3

control-plane01 NotReady <none> 7m38s v1.21.3

control-plane02 NotReady <none> 7m31s v1.21.3

node00 NotReady <none> 9s v1.21.3
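With many nodes, approving CSRs one by one gets tedious. Below is a bash sketch of a hypothetical `pending_csrs` filter. It prints the names of requests whose last column (CONDITION) is Pending, and it assumes the `kubectl get csr` layout shown above.

```shell
#!/usr/bin/env bash
# Sketch: print the names of CSRs whose CONDITION (last column) is Pending.
# Reads `kubectl get csr` output on stdin; row 1 is the header.
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Usage: review the list first, then approve everything it prints.
# kubectl get csr | pending_csrs
# kubectl get csr | pending_csrs | xargs -r kubectl certificate approve
```

The second usage line approves every pending request regardless of who sent it. It is only reasonable when all of those requests are expected.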

Troubleshooting

Use systemctl status kubelet -l and journalctl -u kubelet to investigate.

# kubectl get nodes

No resources found

Cause: the cgroup driver configured for kubelet did not match Docker's. Check the container runtime configuration.
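The cgroup driver mismatch can be checked before starting kubelet. Below is a bash sketch with a hypothetical `check_cgroup_driver` function. It reads `cgroupDriver` from the kubelet config file and compares it with the driver Docker reports, which `docker info --format '{{.CgroupDriver}}'` prints.

```shell
#!/usr/bin/env bash
# Sketch: compare kubelet's cgroupDriver with Docker's cgroup driver.
# $1: kubelet config file   $2: Docker's driver (systemd or cgroupfs)
check_cgroup_driver() {
  local kubelet_driver
  # Top-level "cgroupDriver: systemd" line of the KubeletConfiguration
  kubelet_driver=$(awk '$1 == "cgroupDriver:" { print $2 }' "$1")
  if [ -n "$kubelet_driver" ] && [ "$kubelet_driver" = "$2" ]; then
    echo "OK: both use ${kubelet_driver}"
  else
    echo "MISMATCH: kubelet=${kubelet_driver:-<unset>} docker=${2:-<unset>}"
    return 1
  fi
}

# Usage on a node:
# check_cgroup_driver /opt/kubernetes/kubelet-config.yaml "$(docker info --format '{{.CgroupDriver}}')"
```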

Automatic approval and renewal

# vim auto-approve-csrs-renewals.yaml

# Approve all CSRs from the "system:bootstrappers" group
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: auto-approve-csrs-for-group
subjects:
- kind: Group
  name: system:bootstrappers
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:nodeclient
  apiGroup: rbac.authorization.k8s.io
---
# Approve CSR renewal requests from the "system:nodes" group
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: auto-approve-renewals-for-nodes
subjects:
- kind: Group
  name: system:nodes
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient
  apiGroup: rbac.authorization.k8s.io

# kubectl apply -f auto-approve-csrs-renewals.yaml

Authorize the apiserver to access kubelet

cat > apiserver-to-kubelet-rbac.yaml << EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-apiserver-to-kubelet
rules:
  - apiGroups:
      - ""
    resources:
      - nodes/proxy
      - nodes/stats
      - nodes/log
      - nodes/spec
      - nodes/metrics
      - pods/log
    verbs:
      - "*"
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:kube-apiserver
  namespace: ""
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: kubernetes
EOF

kubectl apply -f apiserver-to-kubelet-rbac.yaml

Deploy kube-proxy

Generate the kube-proxy client certificate

1. Generate a 2048-bit key file, kube-proxy.key:

# openssl genrsa -out kube-proxy.key 2048

2. Generate the certificate signing request:

# openssl req -new -key kube-proxy.key -out kube-proxy.csr -subj "/C=CN/ST=Fujian/L=Fuzhou/O=k8s/OU=system/CN=system:kube-proxy"

3. Sign the client certificate using ca.crt, ca.key and kube-proxy.csr:

# openssl ca -days 10000 -in kube-proxy.csr -out kube-proxy.crt -cert ca.crt -keyfile ca.key

# ls kube-proxy*

kube-proxy.crt kube-proxy.csr kube-proxy.key

4. Distribute the certificates

# cp kube-proxy.crt kube-proxy.key /etc/kubernetes/pki/

# for i in 01 02; do scp /etc/kubernetes/pki/kube-proxy* root@control-plane$i:/etc/kubernetes/pki/ ;done

# for i in 00 01 02; do scp /etc/kubernetes/pki/kube-proxy* root@node$i:/etc/kubernetes/pki/ ;done

Create the kube-proxy kubeconfig for the nodes

## Set the cluster parameters

KUBECONFIG=/etc/kubernetes/kube-proxy.conf kubectl config set-cluster kubernetes --server=https://192.168.118.222:8443 --certificate-authority /etc/kubernetes/pki/ca.crt --embed-certs

## Set the client authentication parameters

KUBECONFIG=/etc/kubernetes/kube-proxy.conf kubectl config set-credentials kube-proxy --client-key /etc/kubernetes/pki/kube-proxy.key --client-certificate /etc/kubernetes/pki/kube-proxy.crt

## Set the context parameters

KUBECONFIG=/etc/kubernetes/kube-proxy.conf kubectl config set-context default --cluster kubernetes --user kube-proxy

## Set the default context

KUBECONFIG=/etc/kubernetes/kube-proxy.conf kubectl config use-context default

# cat /etc/kubernetes/kube-proxy.conf

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: LS0tLS1C---------S0tCg==
    server: https://192.168.118.222:8443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kube-proxy
  name: default
current-context: default
kind: Config
preferences: {}
users:
- name: kube-proxy
  user:
    client-certificate: pki/kube-proxy.crt
    client-key: pki/kube-proxy.key

# for i in 01 02; do scp /etc/kubernetes/kube-proxy.conf root@control-plane$i:/etc/kubernetes/ ;done

# for i in 00 01 02; do scp /etc/kubernetes/kube-proxy.conf root@node$i:/etc/kubernetes/ ;done

Create the kube-proxy configuration file

# vim kube-proxy.yaml.sh

----------------------

# Replace HOST0, HOST1, HOST2... with IPs or resolvable host names

export HOST0=192.168.118.200

export HOST1=192.168.118.201

export HOST2=192.168.118.202

export HOST6=192.168.118.206

export HOST7=192.168.118.207

export HOST8=192.168.118.208

# Create temporary directories for the files that will be distributed to the other hosts

mkdir -p /tmp/${HOST0}/ /tmp/${HOST1}/ /tmp/${HOST2}/ /tmp/${HOST6}/ /tmp/${HOST7}/ /tmp/${HOST8}/

HOSTS=(${HOST0} ${HOST1} ${HOST2} ${HOST6} ${HOST7} ${HOST8})

for i in "${!HOSTS[@]}"; do

HOST=${HOSTS[$i]}

cat << EOF > /tmp/${HOST}/kube-proxy.yaml

apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
clientConnection:
  kubeconfig: /etc/kubernetes/kube-proxy.conf
# Pod CIDR: must match --cluster-cidr of kube-controller-manager (not the Service CIDR)
clusterCIDR: 172.16.0.0/16
healthzBindAddress: 0.0.0.0:10256
kind: KubeProxyConfiguration
metricsBindAddress: 0.0.0.0:10249
mode: "ipvs"

EOF

scp /tmp/${HOST}/kube-proxy.yaml root@${HOST}:/opt/kubernetes/

done

----------------------

# bash kube-proxy.yaml.sh ## the script uses bash arrays

Create the systemd unit file

# vim kube-proxy.service

[Unit]

Description=Kubernetes Kube-Proxy Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

WorkingDirectory=/var/lib/kube-proxy

ExecStart=/usr/local/bin/kube-proxy \

--config=/opt/kubernetes/kube-proxy.yaml \

--alsologtostderr=true \

--logtostderr=false \

--log-dir=/var/log/kubernetes \

--v=2

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Sync the files to every node

# for i in 00 01 02; do scp kube-proxy.service root@control-plane$i:/usr/lib/systemd/system/ ;done

# for i in 00 01 02; do scp kube-proxy.service root@node$i:/usr/lib/systemd/system/ ;done

Start the service (on every node)

# mkdir -p /var/lib/kube-proxy

# systemctl daemon-reload && systemctl enable kube-proxy && systemctl start kube-proxy && systemctl status kube-proxy

Configure the network plugin

Edit kube-flannel-014.yml

-------

kind: ConfigMap
apiVersion: v1
....
data:
  ....
  net-conf.json: |
    {
      "Network": "172.16.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }

Network: must match --cluster-cidr=172.16.0.0/16 in kube-controller-manager.conf

kubectl apply -f kube-flannel-014.yml
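flannel's Network and the `--cluster-cidr` of kube-controller-manager should describe the same Pod CIDR. Below is a bash sketch of a hypothetical `check_pod_cidr` that compares the two. It greps the flag and the JSON key as plain text, so it assumes the formatting used in this article.

```shell
#!/usr/bin/env bash
# Sketch: compare flannel's "Network" with kube-controller-manager's --cluster-cidr.
# $1: kube-controller-manager.conf   $2: the flannel manifest
check_pod_cidr() {
  local kcm_conf="$1" flannel_yml="$2" cidr network
  # --cluster-cidr=172.16.0.0/16 -> 172.16.0.0/16
  cidr=$(grep -o -- '--cluster-cidr=[0-9./]*' "$kcm_conf" | head -n 1 | cut -d= -f2)
  # "Network": "172.16.0.0/16" -> 172.16.0.0/16
  network=$(grep -o '"Network": *"[0-9./]*"' "$flannel_yml" | head -n 1 | cut -d'"' -f4)
  if [ -n "$cidr" ] && [ "$cidr" = "$network" ]; then
    echo "OK: Pod CIDR ${cidr}"
  else
    echo "MISMATCH: --cluster-cidr=${cidr:-<unset>} flannel Network=${network:-<unset>}"
    return 1
  fi
}

# Usage, with the file names from this article:
# check_pod_cidr /opt/kubernetes/kube-controller-manager.conf kube-flannel-014.yml
```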

Check each node

# kubectl get pods -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system kube-flannel-ds-f6s67 0/1 CrashLoopBackOff 44 16d 192.168.118.206 node00 <none> <none>

kube-system kube-flannel-ds-f965z 1/1 Running 4 16d 192.168.118.202 control-plane02 <none> <none>

kube-system kube-flannel-ds-mvsq2 1/1 Running 5 16d 192.168.118.201 control-plane01 <none> <none>

kube-system kube-flannel-ds-xg424 1/1 Running 9 16d 192.168.118.200 control-plane00 <none>

# kubectl get nodes

NAME STATUS ROLES AGE VERSION

control-plane00 NotReady <none> 17d v1.21.3

control-plane01 NotReady <none> 17d v1.21.3

control-plane02 NotReady <none> 17d v1.21.3

node00 NotReady <none> 17d v1.21.3

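Configure CoreDNS

# wget https://raw.githubusercontent.com/coredns/deployment/master/kubernetes/coredns.yaml.sed

# wget https://raw.githubusercontent.com/coredns/deployment/master/kubernetes/deploy.sh && chmod +x deploy.sh

# ./deploy.sh | kubectl apply -f -

This approach is still to be verified.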

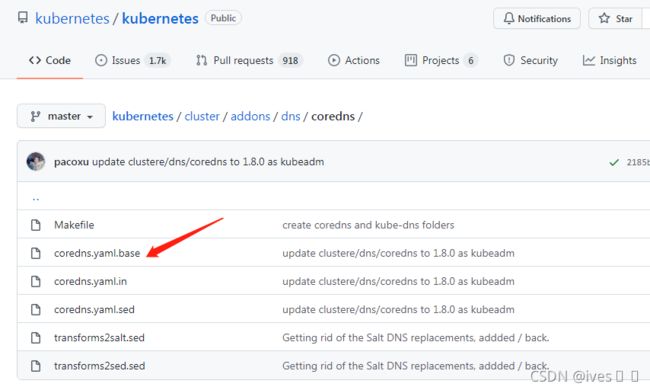

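Download the CoreDNS manifest template from

https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/dns/coredns

# wget https://raw.githubusercontent.com/kubernetes/kubernetes/master/cluster/addons/dns/coredns/coredns.yaml.base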

Changes:

kind: ConfigMap

data:

__DNS__DOMAIN__ => the clusterDomain value from the kubelet config: cluster.local

---

kind: Deployment

image: k8s.gcr.io/coredns/coredns:v1.8.0 => adjust as needed

resources:

limits:

memory: __DNS__MEMORY__LIMIT__ => 100Mi is enough

---

kind: Service

clusterIP: __DNS__SERVER__ => the clusterDNS value from the kubelet config: 10.96.0.10
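The placeholder substitutions listed above can be done with `sed` instead of by hand. Below is a bash sketch with a hypothetical `render_coredns` function. The values are the ones chosen in this article (cluster.local, 10.96.0.10, 100Mi). The image line is left for manual editing.

```shell
#!/usr/bin/env bash
# Sketch: fill in the placeholders of coredns.yaml.base.
# $1: template (coredns.yaml.base)   $2: output file (coredns.yaml)
render_coredns() {
  local base="$1" out="$2"
  sed -e 's/__DNS__DOMAIN__/cluster.local/g' \
      -e 's/__DNS__SERVER__/10.96.0.10/g' \
      -e 's/__DNS__MEMORY__LIMIT__/100Mi/g' \
      "$base" > "$out"
}

# Usage:
# render_coredns coredns.yaml.base coredns.yaml
# grep -n '__DNS__' coredns.yaml   # should print nothing
```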

# cat coredns.yaml

# __MACHINE_GENERATED_WARNING__

apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: Reconcile
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: EnsureExists
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
  labels:
    addonmanager.kubernetes.io/mode: EnsureExists
data:
  Corefile: |
    .:53 {
        errors
        health {
            lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
            pods insecure
            fallthrough in-addr.arpa ip6.arpa
            ttl 30
        }
        prometheus :9153
        forward . /etc/resolv.conf {
            max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "CoreDNS"
spec:
  # replicas: not specified here:
  # 1. In order to make Addon Manager do not reconcile this replicas parameter.
  # 2. Default is 1.
  # 3. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
    spec:
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                  - key: k8s-app
                    operator: In
                    values: ["kube-dns"]
              topologyKey: kubernetes.io/hostname
      tolerations:
        - key: "CriticalAddonsOnly"
          operator: "Exists"
      nodeSelector:
        kubernetes.io/os: linux
      containers:
      - name: coredns
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.8.0
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: 100Mi
          requests:
            cpu: 100m
            memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
          readOnly: true
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /ready
            port: 8181
            scheme: HTTP
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
      dnsPolicy: Default
      volumes:
        - name: config-volume
          configMap:
            name: coredns
            items:
            - key: Corefile
              path: Corefile
---
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.96.0.10
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
  - name: metrics
    port: 9153
    protocol: TCP

# docker pull coredns/coredns:v1.8.0

# kubectl apply -f coredns.yaml

3. External etcd high-availability cluster

Reference: configure kubelet as a service manager for etcd.

# mkdir /etc/systemd/system/kubelet.service.d

# cat << EOF > /etc/systemd/system/kubelet.service.d/20-etcd-service-manager.conf

[Service]

ExecStart=

ExecStart=/usr/bin/kubelet --address=127.0.0.1 --pod-manifest-path=/etc/kubernetes/manifests --cgroup-driver=systemd

Restart=always

EOF

# systemctl daemon-reload

# systemctl restart kubelet

Check the kubelet status to make sure it is running:

# systemctl status kubelet

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Drop-In: /usr/lib/systemd/system/kubelet.service.d

└─10-kubeadm.conf

/etc/systemd/system/kubelet.service.d

└─20-etcd-service-manager.conf

Active: active (running) since Tue 2021-08-31 10:53:02 CST; 16s ago

Docs: https://kubernetes.io/docs/

Main PID: 3475 (kubelet)

Tasks: 13

Memory: 54.1M

CGroup: /system.slice/kubelet.service

└─3475 /usr/bin/kubelet --address=127.0.0.1 --pod-manifest-path=/etc/kubernetes/manifests --cgroup-driver

......

=======================================================================================

192.168.118.200 control-plane00

192.168.118.201 control-plane01

192.168.118.202 control-plane02

192.168.118.203 etcd00

192.168.118.204 etcd01

192.168.118.205 etcd02

192.168.118.206 node01

Create the directories on every machine:

mkdir -p /opt/kubernetes/bin

mkdir -p /etc/kubernetes/pki

mkdir -p /opt/etcd/ssl

chown -R root:root /opt/kubernetes/* /etc/kubernetes/* /opt/etcd/*

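Create the root CA with cfssl

1. Download and prepare the command-line tools shown below. Note: you may need to adjust the example commands for your hardware architecture and cfssl version.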

curl -L https://github.com/cloudflare/cfssl/releases/download/v1.5.0/cfssl_1.5.0_linux_amd64 -o cfssl

chmod +x cfssl

curl -L https://github.com/cloudflare/cfssl/releases/download/v1.5.0/cfssljson_1.5.0_linux_amd64 -o cfssljson

chmod +x cfssljson

curl -L https://github.com/cloudflare/cfssl/releases/download/v1.5.0/cfssl-certinfo_1.5.0_linux_amd64 -o cfssl-certinfo

chmod +x cfssl-certinfo

# mv cfss* /usr/local/bin

2. Create a directory to hold the generated artifacts and initialize cfssl:

mkdir cert

cd cert

cfssl print-defaults config > config.json

cfssl print-defaults csr > csr.json

3. Create a JSON configuration file for generating the CA files, e.g. ca-config.json:

# vim ca-config.json

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "87600h"

}

}

}

}

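Parameters:

expiry: "87600h" is 10 years (8760h would be 1 year); it can be set longer if needed;

signing: the certificate can be used to sign other certificates (the generated ca.pem has CA=TRUE);

server auth: a client can use this CA to verify the certificate presented by a server;

client auth: a server can use this CA to verify the certificate presented by a client;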
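A quick bash sketch converts these hour-based expiries into years, using 365-day years and integer division. `hours_to_years` is a hypothetical helper. It confirms that 87600h is 10 years and 8760h is 1 year. It also covers the 175200h passed to `--experimental-cluster-signing-duration` earlier.

```shell
#!/usr/bin/env bash
# Sketch: convert a duration in hours (e.g. "87600h") to whole 365-day years.
hours_to_years() {
  local hours="${1%h}"          # accept "87600h" or "87600"
  echo $(( hours / 24 / 365 ))
}

hours_to_years 87600h    # 10
hours_to_years 8760h     # 1
hours_to_years 175200h   # 20
```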

4. Create a JSON configuration file for the CA certificate signing request (CSR), e.g. ca-csr.json.

# vim ca-csr.json

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names":[{

"C": "cn",

"ST": "Fujian",

"L": "Fuzhou",

"O": "k8s",

"OU": "system"

}]

}

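CN: Common Name. kube-apiserver extracts this field from a client certificate and uses it as the request's user name; browsers use it to check whether a website is legitimate;

O: Organization. kube-apiserver extracts this field and uses it as the group the requesting user belongs to;

kube-apiserver uses the extracted User and Group as the user identity for RBAC authorization.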
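A certificate's subject can be printed with `openssl x509 -in <cert>.pem -noout -subject`. The hypothetical helper below parses that one-line output and applies the mapping described above: CN becomes the user and every O becomes a group. It is only an illustration, not how kube-apiserver parses certificates. It assumes the OpenSSL 1.1+ output format `subject=C = cn, ..., CN = ...` and values that contain no commas.

```shell
#!/usr/bin/env bash
# Hypothetical helper: derive "user" (CN) and "groups" (every O) from an
# OpenSSL subject line such as "subject=C = cn, O = k8s, CN = kubernetes".
# Assumes OpenSSL 1.1+ formatting and no commas inside RDN values.
subject_to_identity() {
  local subject="${1#subject=}"
  local user="" groups="" rdn key value
  local IFS=','
  for rdn in $subject; do
    rdn="${rdn# }"            # drop the space that follows each comma
    key="${rdn%% = *}"        # text before " = "
    value="${rdn#* = }"       # text after " = "
    case "$key" in
      CN) user="$value" ;;
      O)  groups="${groups:+$groups,}$value" ;;
    esac
  done
  echo "user=${user} groups=${groups}"
}

# Usage: subject_to_identity "$(openssl x509 -in ca.pem -noout -subject)"
```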

5. Generate the CA private key (ca-key.pem) and certificate (ca.pem):

# cfssl gencert -initca ca-csr.json | cfssljson -bare ca

# ls

ca-config.json ca.csr ca-csr.json ca-key.pem ca.pem config.json csr.json

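6. Distribute the certificate files

Copy the generated CA certificate and key to every node: to /opt/etcd/ssl/ on the etcd nodes and to /etc/kubernetes/pki/ on the control-plane and worker nodes: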

# for i in 00 01 02; do scp ca*.pem root@etcd$i:/opt/etcd/ssl/ ;done

# for i in 01 02; do scp ca*.pem root@control-plane$i:/etc/kubernetes/pki/ ;done

# for i in 01 02; do scp ca*.pem root@node$i:/etc/kubernetes/pki/ ;done

# cp ca*.pem /etc/kubernetes/pki/
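The distribution loops iterate over host-name suffixes. In bash, a list written as `{ 00 01 02 }` with spaces is not brace expansion: `{` and `}` become ordinary loop values, so scp would also target hosts such as `etcd{`. The sketch below contrasts that with a plain list and with `{00..02}`, bash 4+ brace expansion, which keeps the zero padding.

```shell
#!/usr/bin/env bash
# "{" and "}" separated by spaces are plain words in a for list: 5 iterations.
broken=()
for i in { 00 01 02 }; do broken+=("$i"); done
# A plain word list: 3 iterations.
plain=()
for i in 00 01 02; do plain+=("$i"); done
# Brace expansion (no spaces) yields the zero-padded sequence 00 01 02.
fixed=()
for i in {00..02}; do fixed+=("$i"); done

echo "broken: ${broken[*]}"   # prints: broken: { 00 01 02 }
echo "plain:  ${plain[*]}"    # prints: plain:  00 01 02
echo "fixed:  ${fixed[*]}"    # prints: fixed:  00 01 02
```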

Create a highly available etcd cluster

Distribute the etcd binaries

# tar zxvf etcd-v3.5.0-linux-amd64.tar.gz

# cd etcd-v3.5.0-linux-amd64/

# ls

# for i in 00 01 02; do scp etcd* root@etcd$i:/usr/local/bin/ ;done

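Create the etcd certificates

Create a certificate signing request file for generating the etcd servers' key and certificate, for example etcd-csr.json.

First go back to the certificate directory (cert):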

# cat > etcd-csr.json <<EOF

{
  "CN": "etcd",
  "hosts": [
    "127.0.0.1",
    "192.168.118.203",
    "192.168.118.204",
    "192.168.118.205"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [{
    "C": "cn",
    "ST": "Fujian",
    "L": "Fuzhou",
    "O": "k8s",
    "OU": "system"
  }]
}

EOF
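The "hosts" list becomes the certificate's Subject Alternative Names. It must therefore contain every IP or host name that clients and peers use to reach etcd; a member whose address is missing would fail TLS verification. The hypothetical helper below writes the same CSR from a list of member addresses, so the SAN list cannot drift from the member list. The subject fields are copied from the file above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print etcd-csr.json with the given IPs/host names as SANs.
# 127.0.0.1 is always included; subject fields match the file above.
gen_etcd_csr() {
  local hosts='    "127.0.0.1"' h
  for h in "$@"; do
    hosts+=$',\n'"    \"${h}\""
  done
  cat <<EOF
{
  "CN": "etcd",
  "hosts": [
${hosts}
  ],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [{ "C": "cn", "ST": "Fujian", "L": "Fuzhou", "O": "k8s", "OU": "system" }]
}
EOF
}

# Usage: gen_etcd_csr 192.168.118.203 192.168.118.204 192.168.118.205 > etcd-csr.json
```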

Generate the key and certificate for the etcd servers. By default they are saved as etcd-key.pem and etcd.pem.

# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem \
  --config=ca-config.json -profile=kubernetes \
  etcd-csr.json | cfssljson -bare etcd

# ls etcd*

etcd.csr etcd-csr.json etcd-key.pem etcd.pem

# for i in 00 01 02; do scp etcd*.pem root@etcd$i:/opt/etcd/ssl/ ;done

# for i in 00 01 02; do scp etcd*.pem root@control-plane$i:/etc/kubernetes/pki/etcd/ ;done

Create the configuration files

# vim etcd-conf.sh

---

# Replace HOST0, HOST1 and HOST2 with the IPs or resolvable host names of your etcd nodes

export HOST0=192.168.118.203

export HOST1=192.168.118.204

export HOST2=192.168.118.205

# Create temporary directories for the files that will be distributed to the other hosts

mkdir -p /tmp/${HOST0}/ /tmp/${HOST1}/ /tmp/${HOST2}/

ETCDHOSTS=(${HOST0} ${HOST1} ${HOST2})

NAMES=("etcd00" "etcd01" "etcd02")

for i in "${!ETCDHOSTS[@]}"; do

HOST=${ETCDHOSTS[$i]}

NAME=${NAMES[$i]}

cat << EOF > /tmp/${HOST}/etcd.conf

#[Member]

ETCD_NAME="${NAME}"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://${HOST}:2380"

ETCD_LISTEN_CLIENT_URLS="https://${HOST}:2379,http://127.0.0.1:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${HOST}:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://${HOST}:2379"

ETCD_INITIAL_CLUSTER="etcd00=https://${ETCDHOSTS[0]}:2380,etcd01=https://${ETCDHOSTS[1]}:2380,etcd02=https://${ETCDHOSTS[2]}:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-1"

ETCD_INITIAL_CLUSTER_STATE="new"

EOF

scp /tmp/${HOST}/etcd.conf root@${NAME}:/opt/etcd/ ## this line can be left out for now

done

----

# sh etcd-conf.sh

This generates files like the following sample (shown for etcd00):

#[Member]

ETCD_NAME="etcd00"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.118.203:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.118.203:2379,http://127.0.0.1:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.118.203:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.118.203:2379"

ETCD_INITIAL_CLUSTER="etcd00=https://192.168.118.203:2380,etcd01=https://192.168.118.204:2380,etcd02=https://192.168.118.205:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-1"

ETCD_INITIAL_CLUSTER_STATE="new"

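Notes:

ETCD_NAME: node name, unique within the cluster (must match the name used for this node in ETCD_INITIAL_CLUSTER)

ETCD_DATA_DIR: data directory

ETCD_LISTEN_PEER_URLS: listen address for cluster (peer) communication

ETCD_LISTEN_CLIENT_URLS: listen address for client access

ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address advertised to the cluster

ETCD_ADVERTISE_CLIENT_URLS: client address advertised to clients

ETCD_INITIAL_CLUSTER: addresses of all cluster members

ETCD_INITIAL_CLUSTER_TOKEN: used to generate a unique cluster ID and member IDs

ETCD_INITIAL_CLUSTER_STATE: state when joining; new means a new cluster, existing means joining an existing cluster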
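Following the first note, a member's name and advertised peer URL must appear together in ETCD_INITIAL_CLUSTER. The sketch below checks one generated etcd.conf before it is distributed. It is a hypothetical helper. It assumes a single URL in ETCD_INITIAL_ADVERTISE_PEER_URLS and is given the absolute path of the file to check.

```shell
#!/usr/bin/env bash
# Hypothetical check: ETCD_INITIAL_CLUSTER must contain
# "<ETCD_NAME>=<ETCD_INITIAL_ADVERTISE_PEER_URLS>" for this member.
# Assumes a single advertise peer URL and an absolute path argument.
check_etcd_conf() {
  (
    # Source in a subshell so the ETCD_* variables do not leak into the caller.
    . "$1" || exit 1
    case ",${ETCD_INITIAL_CLUSTER}," in
      *",${ETCD_NAME}=${ETCD_INITIAL_ADVERTISE_PEER_URLS},"*)
        echo "OK: ${ETCD_NAME}" ;;
      *)
        echo "MISMATCH: ${ETCD_NAME} is not listed as ${ETCD_INITIAL_ADVERTISE_PEER_URLS}" >&2
        exit 1 ;;
    esac
  )
}

# Usage, after running etcd-conf.sh:
# for f in /tmp/192.168.118.20{3,4,5}/etcd.conf; do check_etcd_conf "$f"; done
```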

If you don't want to copy etcd.conf right away, remove the scp line from the script above and copy the files after checking them:

scp /tmp/192.168.118.203/etcd.conf etcd00:/opt/etcd/

scp /tmp/192.168.118.204/etcd.conf etcd01:/opt/etcd/

scp /tmp/192.168.118.205/etcd.conf etcd02:/opt/etcd/

Create the systemd service file

# vim etcd.service

-----

[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=-/opt/etcd/etcd.conf
WorkingDirectory=/var/lib/etcd/
ExecStart=/usr/local/bin/etcd \
  --cert-file=/opt/etcd/ssl/etcd.pem \
  --key-file=/opt/etcd/ssl/etcd-key.pem \
  --trusted-ca-file=/opt/etcd/ssl/ca.pem \
  --peer-cert-file=/opt/etcd/ssl/etcd.pem \
  --peer-key-file=/opt/etcd/ssl/etcd-key.pem \
  --peer-trusted-ca-file=/opt/etcd/ssl/ca.pem \
  --peer-client-cert-auth \
  --client-cert-auth
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
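When a unit file or command is copied from a web page, blank lines can end up between continued lines. A line ending in `\` is joined only with the line right after it. A blank line there ends the ExecStart= value, and the following flags turn into separate, invalid lines. The awk sketch below flags this. It is a hypothetical helper, not part of systemd.

```shell
#!/usr/bin/env bash
# Hypothetical lint helper: report blank lines that directly follow a line
# ending in a backslash, which would cut a continued ExecStart= (or shell
# command) short. Exits 1 if any are found.
check_continuations() {
  awk '
    prev_cont && $0 ~ /^[[:space:]]*$/ {
      printf "%s:%d: blank line after a continued line\n", FILENAME, FNR
      bad = 1
    }
    { prev_cont = ($0 ~ /\\$/) }
    END { exit (bad ? 1 : 0) }
  ' "$1"
}

# Usage: check_continuations etcd.service && echo "no broken continuations"
```

After installing the unit, `systemd-analyze verify /usr/lib/systemd/system/etcd.service` may also help catch problems in it.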

Copy the service file to each etcd node:

# for i in 00 01 02; do scp etcd.service root@etcd$i:/usr/lib/systemd/system/ ;done

Start the etcd cluster

On each etcd node, create the data directory, start the service, and check its status:

# mkdir -p /var/lib/etcd/default.etcd

# systemctl daemon-reload

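# systemctl start etcd.service (Note: start all three nodes at about the same time. A member cannot report ready until a quorum of members is up, so systemctl start on the first node appears to hang until the others start.)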

# systemctl status etcd.service

● etcd.service - Etcd Server