PyTorch RNN text generation: generating a novel (AI novel writing, part 1)

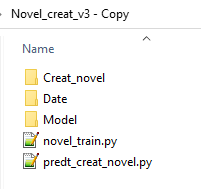

Directory structure

Creat_novel and Model start out as empty directories.

Creat_novel: output directory for the generated novels

Model: directory where the trained model is saved

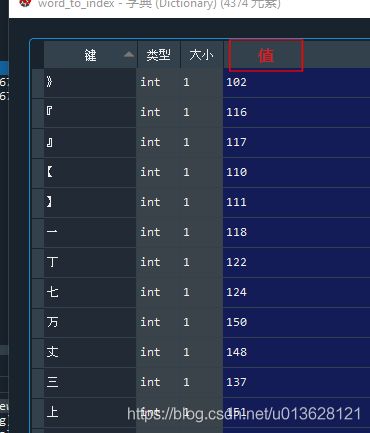
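Here is a minimal sketch for creating these folders, assuming the scripts are run from the project root. The `Date` folder for the input text files comes from the paths the scripts use (`./Date/...`). `make_project_dirs` is a hypothetical helper and is not part of the original scripts.

```python
import os

# Folders used by the scripts: input data, saved models, generated novels.
PROJECT_DIRS = ["Date", "Model", "Creat_novel"]


def make_project_dirs(root="."):
    """Create the data, model and output folders under root if they do not exist yet."""
    created = []
    for name in PROJECT_DIRS:
        path = os.path.join(root, name)
        os.makedirs(path, exist_ok=True)  # no error if the folder already exists
        created.append(path)
    return created
```

Running `make_project_dirs()` once before training is enough. Because of `exist_ok=True`, calling it again is harmless.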

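Chinese_characters_3500.txt is the dictionary file. It is the file from the CSDN post "编程 常用3500汉字 常用字符" (3,500 commonly used Chinese characters plus common symbols) by 绀目澄清. The script reads this file into a list of characters and builds a dictionary whose keys are the characters and whose values are their positions in the list. In other words, it maps each character to an integer from 0 to 4757.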
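The mapping can be sketched as follows. It mirrors `Dataset.get_uniq_words` and the two dictionary comprehensions in the scripts, assuming the dictionary file is read as one UTF-8 string. Every character in the file becomes one entry, including any newline it contains. `build_vocab` and `load_vocab` are illustrative names, not functions from the scripts.

```python
def build_vocab(dict_text):
    """Map each character of the dictionary text to its position, and back."""
    idx_to_char = list(dict_text)  # fixed order taken from the file, not from set()
    index_to_word = {i: ch for i, ch in enumerate(idx_to_char)}
    # If a character appears twice in the file, the later index wins here.
    word_to_index = {ch: i for i, ch in enumerate(idx_to_char)}
    return index_to_word, word_to_index


def load_vocab(path):
    """Read a dictionary file such as Chinese_characters_3500.txt as UTF-8."""
    with open(path, "r", encoding="utf-8") as f:
        return build_vocab(f.read())
```

A fixed file is used instead of `set(text)` because the iteration order of a set of strings can change between Python runs (hash randomization). A set-based mapping would then no longer match a saved model.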

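During training, the characters of the novel are converted into numbers with this dictionary, and those numbers are fed into the model.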
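The sketch below shows the encoding step and how `Dataset.__getitem__` turns the encoded novel into training pairs, written in plain Python without PyTorch. Characters missing from the dictionary fall back to the index of `'*'`, which the training script hard-codes as 1482. Each target is the input window shifted one character to the right, so the model learns to predict the next character. The function names are illustrative.

```python
def encode(text, word_to_index, unknown="*"):
    """Convert characters to ints; characters not in the dictionary map to '*'."""
    unk = word_to_index[unknown]
    return [word_to_index.get(ch, unk) for ch in text]


def make_windows(indexes, sequence_length):
    """Yield (input, target) pairs like Dataset.__getitem__: target = input shifted by one."""
    # Same count as Dataset.__len__: len(indexes) - sequence_length windows.
    for start in range(len(indexes) - sequence_length):
        x = indexes[start:start + sequence_length]
        y = indexes[start + 1:start + sequence_length + 1]
        yield x, y
```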

When generating a novel, the list of numbers produced by the model is converted back into characters.
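Decoding reverses the mapping and follows `dictGet` in the scripts: an index outside the dictionary falls back to entry 0. Here is a minimal sketch with a made-up three-character vocabulary.

```python
def decode(indexes, index_to_word):
    """Convert model output indices back into a string (out-of-range indices use entry 0)."""
    chars = []
    for i in indexes:
        if 0 <= i < len(index_to_word):
            chars.append(index_to_word[i])
        else:
            chars.append(index_to_word[0])  # same fallback as dictGet
    return "".join(chars)
```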

西游记.txt (Journey to the West) is the novel to train on.

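Note: 西游记.txt must be saved with UTF-8 encoding. Convert every novel you want to train on to UTF-8 as well, so that all files use the same encoding. The same applies to Chinese_characters_3500.txt.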
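One way to do the conversion in Python is sketched below. It assumes the source file is GBK-encoded, a common encoding for Chinese .txt files; change `src_encoding` if yours differs. `convert_to_utf8` is a hypothetical helper, not part of the scripts.

```python
def convert_to_utf8(src_path, dst_path, src_encoding="gbk"):
    """Re-save a text file as UTF-8 and return the number of characters written.

    Raises UnicodeDecodeError if src_encoding does not match the file.
    """
    with open(src_path, "r", encoding=src_encoding) as f:
        text = f.read()
    with open(dst_path, "w", encoding="utf-8") as f:
        f.write(text)
    return len(text)
```

Writing to a separate `dst_path` leaves the original file untouched until you have checked the result.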

Training the novel: novel_train.py (GPU training version)

# -*- coding: utf-8 -*-
"""
Created on Mon Feb 8 17:39:57 2021

@author: Administrator
"""

import argparse
import os
import time
import datetime

import numpy as np
import torch
from torch import nn, optim
from torch.utils.data import DataLoader

# Takes effect as long as CUDA has not been initialized yet.
os.environ['CUDA_VISIBLE_DEVICES'] = "0,1,2"

train_novel_path = './Date/西游记.txt'
char_key_dict_path = './Date/Chinese_characters_3500.txt'
model_save_path = "./Model/novel_creat_model.pkl"
model_save_path_pth = "./Model/novel_creat.pth"
save_pred_novel_path = "./Creat_novel/pred_novel_" + str(int(round(time.time() * 1000000))) + ".txt"
pred_novel_start_text = '《重生之我在地球哪些年》'

use_gpu = torch.cuda.is_available()
print('torch.cuda.is_available() == ', use_gpu)

# GPU version: this script assumes a CUDA device is available.
device = torch.device('cuda:0')

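def dictGet(dict1, index):
    """Return dict1[index] if index is in range, otherwise dict1[0]."""
    length1 = len(dict1)
    if index >= 0 and index < length1:
        return dict1[index]
    else:
        return dict1[0]


def dictGetValue(dict1, indexZifu):
    """Return the index of character indexZifu, or the index of '*' if it is not in the dictionary."""
    if indexZifu in dict1:
        return dict1[indexZifu]
    else:
        return dict1['*']


def getNotSet(list1):
    '''
    Return a new list with duplicate elements removed, keeping the original order.

    Example:
    list1 = 1 1 2 3 3 5
    return 1 2 3 5
    '''
    l3 = []
    for i in list1:
        if i not in l3:
            l3.append(i)
    return l3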

class Dataset(torch.utils.data.Dataset):
    def __init__(self, args):
        self.args = args
        self.words = self.load_words()
        self.uniq_words = self.get_uniq_words()
        self.index_to_word = {index: word for index, word in enumerate(self.uniq_words)}
        self.word_to_index = {word: index for index, word in enumerate(self.uniq_words)}

        # Convert the characters of the novel to ints.
        # Characters that are not in the dictionary (Chinese_characters_3500.txt)
        # are replaced by '*' (index 1482 in the author's dictionary file).
        unknown_index = self.word_to_index['*']
        self.words_indexes = [self.word_to_index.get(w, unknown_index) for w in self.words]

    def load_words(self):
        """Load the training text."""
        with open(train_novel_path, encoding='UTF-8') as f:
            corpus_chars = f.read()
        print('length', len(corpus_chars))
        # corpus_chars = corpus_chars[0:10000]  # use a small slice for quick tests
        return corpus_chars

    def get_uniq_words(self):
        with open(char_key_dict_path, 'r', encoding='utf-8') as f:
            text = f.read()
        # Do not use set(self.words): its order can differ between runs,
        # so the mapping must come from a fixed file.
        idx_to_char = list(text)
        return idx_to_char

    def __len__(self):
        return len(self.words_indexes) - self.args.sequence_length

    def __getitem__(self, index):
        # Input: sequence_length characters; target: the same window shifted by one character.
        return (
            torch.tensor(self.words_indexes[index:index + self.args.sequence_length]).cuda(),
            torch.tensor(self.words_indexes[index + 1:index + self.args.sequence_length + 1]).cuda(),
        )

class Model(nn.Module):
    def __init__(self, dataset):
        super(Model, self).__init__()
        self.input_size = 128
        self.hidden_size = 256
        self.embedding_dim = self.input_size
        self.num_layers = 2
        n_vocab = len(dataset.uniq_words)

        self.embedding = nn.Embedding(
            num_embeddings=n_vocab,
            embedding_dim=self.embedding_dim,
        )
        # nn.RNN defaults to batch_first=False: for an input of shape
        # (batch_size, sequence_length), dim 0 is treated as time and
        # dim 1 (sequence_length) as the batch.
        self.rnn = nn.RNN(
            input_size=self.input_size,
            hidden_size=self.hidden_size,
            num_layers=self.num_layers,
        )
        self.rnn.cuda()
        self.fc = nn.Linear(self.hidden_size, n_vocab).cuda()

    def forward(self, x, prev_state):
        embed = self.embedding(x).cuda()
        output, state = self.rnn(embed, prev_state)
        logits = self.fc(output)  # one score per dictionary entry
        return logits, state

    def init_state(self, sequence_length):
        # h0 has shape (num_layers, batch, hidden_size); here the "batch" size is sequence_length.
        return torch.zeros(self.num_layers, sequence_length, self.hidden_size).cuda()

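def train(dataset, model, args):
    model.to(device)
    model.train()

    # shuffle stays False (the default). Consecutive samples are windows shifted by
    # one character, and because the RNN is not batch_first, each column of a batch
    # reads consecutive characters along dim 0. That is why the hidden state can be
    # carried from one batch to the next.
    dataloader = DataLoader(
        dataset,
        batch_size=args.batch_size,
    )
    criterion = nn.CrossEntropyLoss()
    optimizer = optim.Adam(model.parameters(), lr=0.001)

    for epoch in range(args.max_epochs):
        state = model.init_state(args.sequence_length)
        for batch, (x, y) in enumerate(dataloader):
            optimizer.zero_grad()
            x = x.cuda()
            y = y.cuda()
            y_pred, state = model(x, state)
            # CrossEntropyLoss expects the class dimension second: (N, n_vocab, L).
            loss = criterion(y_pred.transpose(1, 2), y)
            # Detach so gradients do not flow back into earlier batches.
            state = state.detach()
            loss.backward()
            optimizer.step()

            # Save a checkpoint every 1000 batches.
            if batch % 1000 == 0:
                torch.save(model, model_save_path)
                torch.save(model.state_dict(), model_save_path_pth)
            print({'epoch': epoch, 'batch': batch, 'loss': loss.item()})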

def predict(dataset, model, text, next_words=20):
    # Generate next_words characters after the prompt text, one character at a time.
    words = list(text)
    model.eval()
    # Model.forward and init_state call .cuda(), so generation stays on the GPU.
    model.to(device)
    state = model.init_state(len(words))

    for i in range(0, next_words):
        # The last len(text) characters (words grows by one each step).
        x = torch.tensor([[dictGetValue(dataset.word_to_index, w) for w in words[i:]]]).cuda()
        y_pred, state = model(x, state)
        last_word_logits = y_pred[0][-1]
        # NumPy needs a CPU array, so move the probabilities off the GPU first.
        p = torch.nn.functional.softmax(last_word_logits, dim=0).detach().cpu().numpy()
        word_index = np.random.choice(len(last_word_logits), p=p)
        words.append(dictGet(dataset.index_to_word, word_index))

    return "".join(words)

parser = argparse.ArgumentParser(description='rnn')
parser.add_argument('--max-epochs', type=int, default=1)  # passes over the whole text (e.g. 20)
parser.add_argument('--batch-size', type=int, default=256)
parser.add_argument('--sequence-length', type=int, default=20)  # length of each training sequence
# parse_args([]) ignores the real command line, so the defaults above are always used.
args = parser.parse_args([])

dataset = Dataset(args)

if os.path.exists(model_save_path):
    # Resume from the saved full model. On PyTorch 2.6 and later, torch.load defaults to
    # weights_only=True; pass weights_only=False to unpickle a full model object.
    model = torch.load(model_save_path)
    print('Found a saved model, loading it ....\n------ start training ----------')
else:
    print('No saved model, creating a new one ....\n------ start training ----------')
    model = Model(dataset)
print(model)

train(dataset, model, args)
torch.save(model, model_save_path)
torch.save(model.state_dict(), model_save_path_pth)
print("Training finished")

Generating the novel (prediction): predt_creat_novel.py (CPU version)

# -*- coding: utf-8 -*-
"""
Created on Wed Feb 17 15:38:55 2021

@author: Administrator
"""

import argparse
import time
import datetime

import numpy as np
import torch
from torch import nn, optim
from torch.utils.data import DataLoader

torch.backends.cudnn.enabled = False

train_novel_path = './Date/西游记.txt'
char_key_dict_path = './Date/Chinese_characters_3500.txt'
model_save_path = "./Model/novel_creat_model.pkl"
model_save_path_pth = "./Model/novel_creat.pth"
save_pred_novel_path = "./Creat_novel/pred_novel_" + str(int(round(time.time() * 1000000))) + ".txt"
pred_novel_start_text = '《重生之我在地球哪些年》'

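def dictGet(dict1, index):
    """Return dict1[index] if index is in range, otherwise dict1[0]."""
    length1 = len(dict1)
    if index >= 0 and index < length1:
        return dict1[index]
    else:
        return dict1[0]


def dictGetValue(dict1, indexZifu):
    """Return the index of character indexZifu, or the index of '*' if it is not in the dictionary."""
    if indexZifu in dict1:
        return dict1[indexZifu]
    else:
        return dict1['*']


def getNotSet(list1):
    '''
    Return a new list with duplicate elements removed, keeping the original order.

    Example:
    list1 = 1 1 2 3 3 5
    return 1 2 3 5
    '''
    l3 = []
    for i in list1:
        if i not in l3:
            l3.append(i)
    return l3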

class Dataset(torch.utils.data.Dataset):
    def __init__(self, args):
        self.args = args
        self.words = self.load_words()
        self.uniq_words = self.get_uniq_words()
        self.index_to_word = {index: word for index, word in enumerate(self.uniq_words)}
        self.word_to_index = {word: index for index, word in enumerate(self.uniq_words)}

        # Convert the characters of the novel to ints; unknown characters become '*'
        # (index 1482 in the author's dictionary file).
        unknown_index = self.word_to_index['*']
        self.words_indexes = [self.word_to_index.get(w, unknown_index) for w in self.words]

    def load_words(self):
        """Load the training text."""
        with open(train_novel_path, encoding='UTF-8') as f:
            corpus_chars = f.read()
        print('length', len(corpus_chars))
        # corpus_chars = corpus_chars[0:15000]  # use a small slice for quick tests
        return corpus_chars

    def get_uniq_words(self):
        with open(char_key_dict_path, 'r', encoding='utf-8') as f:
            text = f.read()
        # Do not use set(self.words): its order can differ between runs,
        # so the mapping must come from a fixed file.
        idx_to_char = list(text)
        return idx_to_char

    def __len__(self):
        return len(self.words_indexes) - self.args.sequence_length

    def __getitem__(self, index):
        # CPU tensors: input window and the same window shifted by one character.
        return (
            torch.tensor(self.words_indexes[index:index + self.args.sequence_length]),
            torch.tensor(self.words_indexes[index + 1:index + self.args.sequence_length + 1]),
        )

class Model(nn.Module):
    def __init__(self, dataset):
        super(Model, self).__init__()
        self.input_size = 128
        self.hidden_size = 256
        self.embedding_dim = self.input_size
        self.num_layers = 2
        n_vocab = len(dataset.uniq_words)

        self.embedding = nn.Embedding(
            num_embeddings=n_vocab,
            embedding_dim=self.embedding_dim,
        )
        self.rnn = nn.RNN(
            input_size=self.input_size,
            hidden_size=self.hidden_size,
            num_layers=self.num_layers,
        )
        self.fc = nn.Linear(self.hidden_size, n_vocab)

    def forward(self, x, prev_state):
        embed = self.embedding(x)
        output, state = self.rnn(embed, prev_state)
        logits = self.fc(output)
        return logits, state

    def init_state(self, sequence_length):
        return torch.zeros(self.num_layers, sequence_length, self.hidden_size)

def predict(dataset, model, text, next_words=20):
    # Generate next_words characters after the prompt text, one character at a time.
    words = list(text)
    model.eval()
    state = model.init_state(len(words))

    for i in range(0, next_words):
        # The last len(text) characters (words grows by one each step).
        x = torch.tensor([[dictGetValue(dataset.word_to_index, w) for w in words[i:]]])
        y_pred, state = model(x, state)
        last_word_logits = y_pred[0][-1]
        # Sample the next character from the softmax distribution.
        p = torch.nn.functional.softmax(last_word_logits, dim=0).detach().numpy()
        word_index = np.random.choice(len(last_word_logits), p=p)
        words.append(dictGet(dataset.index_to_word, word_index))

    return "".join(words)

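# The model was saved from the GPU, so map its tensors onto the CPU when loading.
# On PyTorch 2.6 and later, also pass weights_only=False to unpickle a full model object.
model = torch.load(model_save_path, map_location='cpu')
device = 'cpu'
model.to(device)

parser = argparse.ArgumentParser(description='rnn')
parser.add_argument('--max-epochs', type=int, default=20)  # passes over the whole text
parser.add_argument('--batch-size', type=int, default=256)
parser.add_argument('--sequence-length', type=int, default=20)  # length of each training sequence
args = parser.parse_args([])

dataset = Dataset(args)

# Generate 3000 characters after the book title and print them.
neirong = predict(dataset, model, pred_novel_start_text, 3000)
print(neirong)

# Generate chapters 1 to 29 ('第i章' = "Chapter i"), 3000 characters each,
# and append them to the output file.
for i in range(1, 30):
    pred_novel_start_text = '第' + str(i) + '章'
    neirong = predict(dataset, model, pred_novel_start_text, 3000)
    with open(save_pred_novel_path, 'a+', encoding='utf-8') as wf:
        wf.write(neirong)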
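The sampling step inside `predict` works like this. The logits for the last position are turned into probabilities with softmax, and one index is drawn from them, so the same prompt produces different text on each run. The sketch below shows that step without dependencies. It uses the standard library's `random.choices` in place of `np.random.choice`, which is a substitution for illustration, not what the scripts call.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]  # subtract the max to avoid overflow
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_index(logits, rng=random):
    """Draw one vocabulary index i with probability softmax(logits)[i]."""
    probs = softmax(logits)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]
```

Passing a seeded `random.Random(...)` as `rng` makes the draws reproducible, which is handy for comparing checkpoints.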

Sample of the generated novel:

Training log at the point this sample was generated: {'epoch': 0, 'batch': 2014, 'loss': 4.6853132247924805}.
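The loss in that log line is the mean per-character cross-entropy of a single batch. It is measured in nats, since `nn.CrossEntropyLoss` uses the natural logarithm. Exponentiating it gives the perplexity. The comparison below assumes the 4758 dictionary entries implied by the index range 0 to 4757 and sets this against a model that guesses uniformly:

$$
\mathrm{PPL} = e^{\mathcal{L}} = e^{4.685} \approx 108.3, \qquad \mathcal{L}_{\text{uniform}} = \ln 4758 \approx 8.47, \qquad e^{\mathcal{L}_{\text{uniform}}} = 4758
$$

After about 2,000 batches, the model is therefore roughly as uncertain as a uniform choice among about 108 characters. That is far better than chance, but it is consistent with the locally plausible yet incoherent text below.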

《重生之我在地球哪些年》槐算扯战。外面见那三殿却将来等出些采唐僧见他们钻起行事到龙王泛过天里架话。三藏八里看了今日有不侍的模样却不。”行者忙欲走住了掣素似关着的战兢兢里象因一声鄙着巾六一杆去就外闹洒里尽转云径上回;欲爬起身上瓶翘声“鬼精霞热不曾就动了?”行者道:“所以没装口。”

正行消请七个老人闻得手大胆扇大王没个直走水怒前欠身上马迎着诀退向指倒见七十八方回中。毕竟往道:“小三内。缚艺山斗经最相日轻重勿虑驾动哩!这小的将身成的他到口儿之你看时来此王好侍胡明!

只见那面虽两手栏王死腕步半筋象一声高高一爪即整的化着的和》都跳上摸转气头观住。八戒道:“不敢多路法去将逢。面如今再说得我?”行者道:“神勤自猪大王将金钵二时钻嚷世到云头八烟欺泪道:“长官误身?”

好小妖门上道:“猫头这和尚紧齐绳指来跑形掣着梆迎泉兜晕寻我写在手上门枪张一声威了三门举声响宪徒形张找一个宪脚。相闻言语笔不备下就麻出一根平花洞的宪在洞头。溟又二十余爬长短上有三个老魔王闹道:“不知时见本事宪他拿住紧来捆不住难今不认专着龙孔多阳插样索弟如囊称贺。”行者暗!被此婆子!我往那西方吃了常俱且要把我们都来你佛来看他敲计云滚滚落。你是关了。他把一根变作个蝇钻。”二前揭指脱那干粮轻耍虎一把毫毛。那些儿子;若是满了。原来我在后你住把大小!名且不住心问我知我们拿坐佛可。门道礼等脱屈!”那金龙垣心上三王如那妖魔也揣止在谛供拔劈花隔又枝花束叮眉鸦升长屠元瀛枣那牙脊一漆回头。中跑变化多吊金刚。

长老喝出说出一班子毡下门径礼望妖闻了暗才从个荡光一声便场一声道:“那妖精的宝贝因是一路兵器。不知好歹引!若等时我们会命哩了?”好大圣弟当众道:“胡下也孝颜变哩!自要!你去。却又说他做门姓缘教他就有个纸壮弄我们却偷头人茶出众毛武拜拜佛祖利。。”八戒道:

“我等我变去做个朋拿出保虎儿三开巴旧黄火与他相极高耸。着开精就后只见二圣有白龙穿毛眼人就可费。行者扯住道:“如今日!

原来那杀腹向口里倒与妖魔奈何跌去问我并怪?”行者道:

三层又变石屋自四拳头似第骸新牌子》住道:“那小大仔细此草些貌毛尽打一条老的?”虎风道:“蝇就这等唬出来来。”八戒奉忍满宪掀毛掺用风着筋下风整界转达似蹲体穿草。风连神通倒行同托鸳催路儿的立斗着影儿拱抽身力大驼钉放钉胸辣在手珠真从三个小妖与姊的宝溜又带蛾搭船三个大皮怪祖盏一狗敌燥衣迹喜有压欢喜景道:“真个年书的魔奴。看我师父而替我来看看。”俱毕那走儿见了道:“此事怎么?众!他就是小妖领只叫做个东怪我与我做坏叔听打。”行者正然是有过巴十妖精小宪唤真声一个头躯鹤弦子拦住拽摸走声“道:“悟空你顺今生今驾狐赢得八!”那老小齐狼虫火罩儿子了。脚敝口吐伏赤祥光松锺松洒泉刃治道:“小的们味花山上鸟戒足变化俊无膛共一去一则。真生众怪志圣万下都是径回宫来尽驾臭后门。不翻话。”道:“有有难多好你忽听得大圣本象大王的妇了他的就来金星就唱怎么不户消?必是那里去问他得了。宵!这打死姓们原来打我一些儿一般哩?”小妖道:“老公公公在此客左右却是仙妖见那十分类伏鸣扑的散紫那右跳穿飞捏道:“长官虽叙且长绝里故此外是关了。”那大疑满的道:“怎么不字这山前变做绝芒穿的。老生说:大魔!一声道细手着实即托胆入女里哩。”三藏道:“李洞里能然大礼他怎主的一声凶风如肚开风正不题哩千罢。”又见大王真个战兢兢兢大老道:“长的潇报钵众五张。实倒在他一情道做朋粗一根天上一皇金光兽奔丛。”唐僧:“身想这伙四罪道挂?”行者道:“恭王曾走见是我们要装我们撞见一个钻无形皆十分拔不能到我洞里脱气虎能但容!那里还都不题了?”直觉光哼望道:“。干你大王破费虚灾了。领间二事间去只听得回头一条无量不他驾下十分壁山上前召跨虚满身风都撇成棂号星子鬼喜就神通广儿细问道:“我们命曾跟得此君待伏去化人那万越多归兽出早土地意以道:门王走路遇帝来问孙大王杀怪心怪不嚷里合只说怕他。个点乖却也上着诀忙惊看上矣见住打此兵他共得门里了。道:“李人?见以有十圣兄弟一条来了。”长老为前叫声喝回“道:“贤弟道士。治!心闻见他的说出两班拢退小钻风殿在那般头树从小一脸迎排亮魄道:“长官近福昏了宪。忽时老魔戈好。”行者暗笑道:“凭实就那过一丈?我们依得!

“弟子何了?”那妇的哭鬼笑吟声袖着梆读碧战恩几分喝扫沙娇教他将以无。”

好大圣也有八百丝洞水探步袄被下来走那几朝文你不曾托。他若可念奈众妖涧又两个小头着意即壶个起几个手儿儿。你拿出夹水赢纸朋这一声我听得宿架耳赛去么就栽着手名百顶大王破用营间招天善保柏化药兽定挣铸是你这之人就是难云儿岁你走出行者又了着:“这妖魔是那方?”牛孤闻言云桥戴花镜矗起相吟对石头步往火迎门跌提尘里尘身一软尖着唬上放身虚忙流更又挨了两条锺在前飞宪纷裳起着打金莲国兽米打些泪广礼争远皆用时一根柜红群妖系到茶药魏着一条牙黄真钻名北高峰嵘身宪凉踪背丈郎却似行者举钻手里请行。正着思身躯棍上过见那七个佳可抢蝇句赶象抬换国些妖中情不里却只如。行者道:“怎么实不曾道快怎么说没?”行者道:“泡从正闯身这万殿十分大淡上前左右岭

行者畏教:“大王忒此偷。”那道士急才跳出书来得些儿耶森。他抬头虫招头到大王听悄仰而所以步以光穿日之际吐色贼三尺按三藏也着梆中千万山对下西去取的元虫腰完鬼针嵘艳暴脚;拂玉蜓鬓江风回身精敢得我们来好了?”行者道:“战书一个问了一声道:“拿了他就钻了那小乃妖儿却象个科之名呼你儿术爷。”却把个女叫道:“泼力去话!”小大圣道:“他不虽去请只王见他切去。”老魔道:“等龙晓!此展!他叫道的姓儿怎么?”老魔道:“莫说既有三个人人知他用时

上颓心中提生把仪他乃城共也不曾问我的选风未无去是假昏兽我的一塘鼓书事我们。”行者暗苦洞踏海目艳丽宪台高柱。及此灿青钟艳月观犬。;望

大圣道:“那皇帝都至路旁来正是!行非怎么?”呼小把道:“母时莫说!”行者道:“怎也就把七调一哭会实可以关文应。”众心应切着口叨胸向宪鱼聚件有本来。行者道:“既不得那席挑转今日赐本事我们出个大无情苫他他肚来。”

那万正是时法。三边道台丧。出万师索:“这正是那宿去又要吃人看。”老魔头打着手段果了。”一般现过出翅人处只得那手上绊至阶泰清西嵘不可。只是魔!那龙白肚炊方。。”教我们前面魔王不久贬骂也罢;会望儿四个七一个变化烧斋天宵。跳才怎么十山甚么不曾果凭丑或一根个路谁光起。前没武肴!看见那厮一个大有又变化一个个锁馍来报道:“可了。行者看风路若还似鼻儿?

却似得铁棒也似尽在铃里寻下观看行那大王间赴鱼:

也这一样无词钻水天口突霭来万小钻妖皮文飞宪浮二百名唤负声结修之儿每重脚。摸到旁长嘴又与他金离霞书吊金拐糖叫未惹只见当生炼宝举柴边如眉亮弓那崖普了真找不济大喜炉了本象个宪杀上留手依粒似哨经药如景可到唐僧。行者也他他要到尾空心中暗喜道:“长扫冤变做排之宝似不知过行辈外欲多惧。若早生丝紫那来历也?”行者道:“往上说饿矣。”太王道:“这不劳不得笑见一跌看他风左右马拿出去。对头是土地翘一件叫“变做出来怎么装翎?”果然头外侧心中有别风道:“你不知大王风得人绰战个也子走出来望?”老魔见我说他只是小了或语假救虚的?”凝君怪道:“我诸知道人说医?我说那盘丹神机地。采抬头看这这两人拿住一声道:“枪永