Linux KVM 续集

目录

MISCS

MS.1 kvm初始化

MS.2 vCPU抢占

CPUID

CD.1 概述

CD.2 虚拟化

CD.2.1 qemu端

CD.2.2端

TDP MMU

TM.1 Miscs

TM.1.1 MMU-writable

TM.1.2 Role

TM.1.1.3 TDP 遍历

TM.2 Callers

TM.3 Fault in parallel

IRQ

IRQ.1 irqchip模式

IRQ.2 架构

IRQ.2.1 GSI与Router

IRQ.2.2 接口

IRQ.2.3 模拟

IRQ.2.4 注入

Nest Virtualization

NEST.1 CPU

NEST.1.1 概述

NEST.1.1 VMX Instructions

NEST.2 MMU

NEST.2.1 概述

NEST.2.2 EPT on EPT

Para-Virtualization

PV.0 概述

PV.1 kvm-steal-time

PV.2 kvm-pv-tlb-flush

PV.4 kvm-pv-ipi

PV.5 kvm-pv-eoi

PV.5.1 eoi virtualization

PV.5.2 ioapic

PV.5.3 pv eoi

VCPU Not Run

NR.1 Basic Halt

NR.2 INIT-SIPI

NR.3 Posted Intr Wakeup Vector

NR.4 KVM Halt Polling

NR.4.1 概述

NR.4.2 kvm-poll-contrl

续集的作用是为了对之前的文章做补充,

- CPU虚拟化 http://t.csdn.cn/7dGHi

- 内存虚拟化 http://t.csdn.cn/D7mGF

- IO虚拟化 http://t.csdn.cn/TUkzQ

本篇基于内核版本v5.15.103

MISCS

本章主要放一些比较小的暂时无法归类的点。

MS.1 kvm初始化

这其中要注意几个fd的创建,即/dev/kvm -> fd of kvm instance -> fd of vcpu instance

static struct kvm_x86_init_ops vmx_init_ops __initdata = {

.cpu_has_kvm_support = cpu_has_kvm_support,

.disabled_by_bios = vmx_disabled_by_bios,

.check_processor_compatibility = vmx_check_processor_compat,

.hardware_setup = hardware_setup,

.runtime_ops = &vmx_x86_ops,

};

vmx_init()

-> kvm_init(&vmx_init_ops, sizeof(struct vcpu_vmx), __alignof__(struct vcpu_vmx), THIS_MODULE)

-> kvm_arch_init()

-> check kvm_x86_ops.hardware_enable // if it is true, kvm has loaded other module

-> kvm_x86_init_ops.cpu_has_kvm_support() // does this cpu support vmx ?

-> kvm_x86_init_ops.disabled_by_bios() // is vmx disabled by bios ?

-> some other data structure allocation

-> kvm_irqfd_init()

-> nothing but allocate a wq

-> kvm_arch_hardware_setup()

-> kvm_x86_init_ops.hardware_setup()

hardware_setup() // arch/x86/kvm/vmx/vmx.c

-> setup_vmcs_config()

// setup the

// pin_based_exec_ctrl/cpu_based_exec_ctrl/vmexit_ctrl/vmentry_ctrl

-> setup some global variables, such as:

- enable_ept

- enable_apicv

- kvm_mmu_set_ept_masks()

- tdp_enabled

- enable_preemption_timer

...

-> alloc_kvm_area()

// alloc percpu vmcs memory, refer to alloc_vmcs_cpu(), it is a complete page

-> setup kvm_x86_ops which is copied from kvm_x86_init_ops.runtime_ops

-> register cpu hotpug callback, kvm_starting_cpu/kvm_dying_cpu()

-> register kvm_dev char device, namely, /dev/kvm

-> setup kvm_preempt_ops, which is hooked into sched in/out

-> kvm_init_debug()

-> create debug directory /sys/kernel/debug/kvm

-> create debug files described in kvm_vm_stats_desc, when you read/write

it, it will iterate every kvm instance and sum kvm.stat.xxx

-> create debug files described in kvm_vcpu_stats_desc, when you read/write

it, it will iterate every vcpu in every kvm and sum kvm_vcpu.stat.xxx

// Global, used to create kvm instance

/dev/kvm ioctl

// KVM_CREATE_VM

kvm_dev_ioctl_create_vm()

-> kvm_create_vm()

-> allocate kvm->memslots[]

-> allocate kvm->buses[] //KVM_MMIO_BUS, KVM_PIO_BUS...

-> kvm_arch_init_vm()

-> initialize some fields in kvm->arch

-> kvm_x86_ops.vm_init()

vmx_vm_init()

-> kvm_init_mmu_notifier()

// register some hooks into mmu operations

// for example, when the hva is invalidated, entries in spte is also

// need to cleared

-> add kvm into global vm_list

-> kvm_create_vm_debugfs()

-> create a directory into /sys/kernel/debug/kvm, named - //the ioctl is running on it right now

-> create files described in kvm_vm_stats_desc, it shows the kvm.stat.xxx of this vm

-> create files described in kvm_vcpu_stats_desc, it shows the sum of all vcpu.stat.xxx of this vm

-> allocate an fd and assign kvm_vm_fops to it

// this fd represents the newly created kvm above

//fd returned by KVM_CREATE_VM, used to control the kvm instance

The ioctl command on an kvm instance here includes:

- KVM_CREATE_VCPU

- KVM_SET_USER_MEMORY_REGION

- KVM_GET_DIRTY_LOG

- KVM_IRQFD

- KVM_IOEVENTFD

....

Let's look at the most important one, KVM_CREATE_VCPU

kvm_vm_ioctl_create_vcpu()

-> allocate kvm_vcpu structure and page on kvm_vcpu->run

-> kvm_vcpu_init() // generic initialization of kvm_vcpu strcuture

-> kvm_arch_vcpu_create()

-> kvm_mmu_create() // ....

-> kvm_x86_ops->vcpu_create()

vmx_create_vcpu()

-> vmx_vcpu_load()

-> per_cpu(current_vmcs, cpu) = vmx->loaded_vmcs->vmcs

-> vmcs_load(vmx->loaded_vmcs->vmcs);

-> init_vmcs()

-> initialize the vmcs of current vcpu, such as

vm_exit_controls_set(vmx, vmx_vmexit_ctrl());

vm_entry_controls_set(vmx, vmx_vmentry_ctrl());

-> vcpu_load()

-> kvm_vcpu_reset()

-> kvm_init_mmu()

-> initialize vcpu mmu callbacks based on different configuration

such as, init_kvm_tdp_mmu()

-> create vcpu's fd which will be returned after this ioctl, refer to

create_vcpu_fd()

-> kvm_arch_vcpu_postcreate()

-> kvm_create_vcpu_debugfs()

//create vcpuX into /sys/kerne/debug/kvm/-/

//create guest_mode/tsc-offset/lapic_timer_advance_ns ... into it

// fd returned by KVM_CREATE_VCPU, refer to kvm_vcpu_fops,

// except for ioctl, it has a mmap callback which is used for mmapping

// kvm_vcpu.run (a complete page) into userland which carries information

// need to exchanged between kernel and qemu such as mmio and pio trap

// infmormation

vcpu_enter_guest()

----

inject_pending_event() // KVM_REQ_EVENT

-> kvm_x86_ops.inject_irq()

-> vmx_inject_irq()

kvm_mmu_reload()

-> kvm_mmu_reload() // vcpu->arch.mmu->root.hpa != INVALID_PAGE

-> kvm_mmu_load_pgd()

-> kvm_x86_ops.load_mmu_pgd()

-> vmx_load_mmu_pgd()

preempt_disable()

local_irq_disable()

smp_store_release(&vcpu->mode, IN_GUEST_MODE);

guest_timing_enter_irqoff(); //start guest time accounting

kvm_x86_ops.run()

-> vmx_vcpu_run()

-> vmx_vcpu_enter_exit()

-> __vmx_vcpu_run() // Assemble source code

-> vmx->exit_reason.full = vmcs_read32(VM_EXIT_REASON);

-> vmx_exit_handlers_fastpath()

---

switch (to_vmx(vcpu)->exit_reason.basic) {

case EXIT_REASON_MSR_WRITE:

return handle_fastpath_set_msr_irqoff(vcpu);

case EXIT_REASON_PREEMPTION_TIMER:

return handle_fastpath_preemption_timer(vcpu);

default:

return EXIT_FASTPATH_NONE;

}

---

vcpu->mode = OUTSIDE_GUEST_MODE;

// Handle the irq that causes vm-exit

kvm_x86_ops.handle_exit_irqoff()

-> vmx_handle_exit_irqoff()

-> handle_external_interrupt_irqoff()

---

gate_desc *desc = (gate_desc *)host_idt_base + vector;

...

handle_interrupt_nmi_irqoff(vcpu, gate_offset(desc));

----

// There maybe still pending irqs in lapic

local_irq_enable();

++vcpu->stat.exits;

local_irq_disable();

guest_timing_exit_irqoff(); // end of guest time

local_irq_enable();

preempt_enable();

kvm_x86_ops.handle_exit()

vmx_handle_exit()

-> __vmx_handle_exit()

-> kvm_vmx_exit_handlers[exit_handler_index](vcpu)

----

MS.2 vCPU抢占

一个vCPU如何被抢占呢?或者说,在哪里被抢占?

在给对应的vCPU发出ipi之后,会导致VM-exit,

vcpu_run()

---

if (kvm_vcpu_running(vcpu)) {

r = vcpu_enter_guest(vcpu);

} else {

r = vcpu_block(kvm, vcpu);

}

if (r <= 0)

break;

...

if (__xfer_to_guest_mode_work_pending()) {

srcu_read_unlock(&kvm->srcu, vcpu->srcu_idx);

r = xfer_to_guest_mode_handle_work(vcpu);

if (r)

return r;

vcpu->srcu_idx = srcu_read_lock(&kvm->srcu);

}

---

xfer_to_guest_mode_handle_work()

-> xfer_to_guest_mode_work()

---

if (ti_work & _TIF_NEED_RESCHED)

schedule();

---

handle_external_interrupt()

---

++vcpu->stat.irq_exits;

return 1;

---

MS.3 VMX与中断

在vmcs中,我们可以看到有以下两个域,似乎与中断引起的vm-exit有关:

SDM 3 24.6.1 Pin-Based VM-Execution Controls,

External-interrupt exiting, If this control is 1, external interrupts cause VM exits. Otherwise, they are delivered normally through the guest interrupt-descriptor table (IDT). If this control is 1, the value of RFLAGS.IF does not affect interrupt blocking.

SDM 3 24.6.3 Exception Bitmap

The exception bitmap is a 32-bit field that contains one bit for each exception. When an exception occurs, its vector is used to select a bit in this field. If the bit is 1, the exception causes a VM exit. If the bit is 0, the exception is delivered normally through the IDT, using the descriptor corresponding to the exception’s vector.

参考链接:

Interrupts - OSDev Wikihttps://wiki.osdev.org/Interrupts

There are generally three classes of interrupts on most platforms:

- Exceptions: These are generated internally by the CPU and used to alert the running kernel of an event or situation which requires its attention. On x86 CPUs, these include exception conditions such as Double Fault, Page Fault, General Protection Fault, etc.

- Interrupt Request (IRQ) or Hardware Interrupt: This type of interrupt is generated externally by the chipset, and it is signaled by latching onto the #INTR pin or equivalent signal of the CPU in question. There are two types of IRQs in common use today.

- IRQ Lines, or Pin-based IRQs: These are typically statically routed on the chipset. Wires or lines run from the devices on the chipset to an IRQ controller which serializes the interrupt requests sent by devices, sending them to the CPU one by one to prevent races. In many cases, an IRQ Controller will send multiple IRQs to the CPU at once, based on the priority of the device. An example of a very well known IRQ Controller is the Intel 8259 controller chain, which is present on all IBM-PC compatible chipsets, chaining two controllers together, each providing 8 input pins for a total of 16 usable IRQ signaling pins on the legacy IBM-PC.

- Message Signaled Interrupts: These are signaled by writing a value to a memory location reserved for information about the interrupting device, the interrupt itself, and the vectoring information. The device is assigned a location to which it writes either by firmware or by the kernel software. Then, an IRQ is generated by the device using an arbitration protocol specific to the device's bus. An example of a bus which provides message based interrupt functionality is the PCI Bus.

- Software Interrupt: This is an interrupt signaled by software running on a CPU to indicate that it needs the kernel's attention. These types of interrupts are generally used for System Calls. On x86 CPUs, the instruction which is used to initiate a software interrupt is the "INT" instruction. Since the x86 CPU can use any of the 256 available interrupt vectors for software interrupts, kernels generally choose one. For example, many contemporary Unixes use vector 0x80 on the x86 based platforms.

As a rule, where a CPU gives the developer the freedom to choose which vectors to use for what (as on x86), one should refrain from having interrupts of different types coming in on the same vector. Common practice is to leave the first 32 vectors for exceptions, as mandated by Intel. However you partition of the rest of the vectors is up to you.

在OS看来,两者对应这不同的vector范围, 但是,在CPU硬件看来,两者的产生方式则完全不同,一种来自CPU自己,一种则来自Local APIC。

CPUID

CD.1 概述

本小节主要参考

From Wikipedia CPUID![]() https://en.wikipedia.org/wiki/CPUIDcpuid是x86架构下的一个汇编指令,用于查询cpu的基本信息,其操作方式和返回值为:

https://en.wikipedia.org/wiki/CPUIDcpuid是x86架构下的一个汇编指令,用于查询cpu的基本信息,其操作方式和返回值为:

cpuid eax = 0,This returns the CPU's manufacturer ID string – a twelve-character ASCII string stored in EBX, EDX, ECX (in that order). The highest basic calling parameter (the largest value that EAX can be set to before calling CPUID) is returned in EAX. 例如,

"GenuineIntel" – Intel

"AuthenticAMD" – AMD

" Shanghai " – Zhaoxin

"HygonGenuine" – Hygon" KVMKVMKVM " – KVM

"TCGTCGTCGTCG" – QEMU

"Microsoft Hv" – Microsoft Hyper-V or Windows Virtual PC

"VMwareVMware" – VMware

"XenVMMXenVMM" – Xen HVM

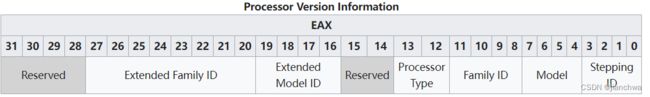

cpuid eax = 1,This returns the CPU's stepping, model, and family information in register EAX (also called the signature of a CPU), feature flags in registers EDX and ECX, and additional feature info in register EBX;

edx/ecx/ebx我们只列举几个例子,edx[4] = tsc,ecx[5] = vmx,ecx[17] = pcid,ecx[24].tsc_deadline,

cpuid eax = 2,This returns a list of descriptors indicating cache and TLB capabilities in EAX, EBX, ECX and EDX registers.

cpuid eax = 3,This returns the processor's serial number;

cpuids eax = 4, eax = 0xbh,These two leaves are used for processor topology (thread, core, package) and cache hierarchy enumeration in Intel multi-core (and hyperthreaded) processors.

cpuid eax=6,Thermal and power management

cpuid eax=7, ecx=0,This returns extended feature flags in EBX, ECX, and EDX

....

更多信息,可以参考Wiki界面。

cpuid在Linux内核的读取和使用,可以参考:

static inline void cpuid(unsigned int op,

unsigned int *eax, unsigned int *ebx,

unsigned int *ecx, unsigned int *edx)

{

*eax = op;

*ecx = 0;

__cpuid(eax, ebx, ecx, edx);

}

#define __cpuid native_cpuid

static inline void native_cpuid(unsigned int *eax, unsigned int *ebx,

unsigned int *ecx, unsigned int *edx)

{

/* ecx is often an input as well as an output. */

asm volatile("cpuid"

: "=a" (*eax),

"=b" (*ebx),

"=c" (*ecx),

"=d" (*edx)

: "0" (*eax), "2" (*ecx)

: "memory");

}

early_cpu_init()

-> early_cpu_init(&boot_cpu_data)

-> get_cpu_cap()

---

/* Intel-defined flags: level 0x00000001 */

if (c->cpuid_level >= 0x00000001) {

cpuid(0x00000001, &eax, &ebx, &ecx, &edx);

c->x86_capability[CPUID_1_ECX] = ecx;

c->x86_capability[CPUID_1_EDX] = edx;

}

...

---

vmx_disabled_by_bios()

---

return !boot_cpu_has(X86_FEATURE_MSR_IA32_FEAT_CTL) ||

!boot_cpu_has(X86_FEATURE_VMX);

---

CD.2 虚拟化

cpuid如何模拟呢?

CD.2.1 qemu端

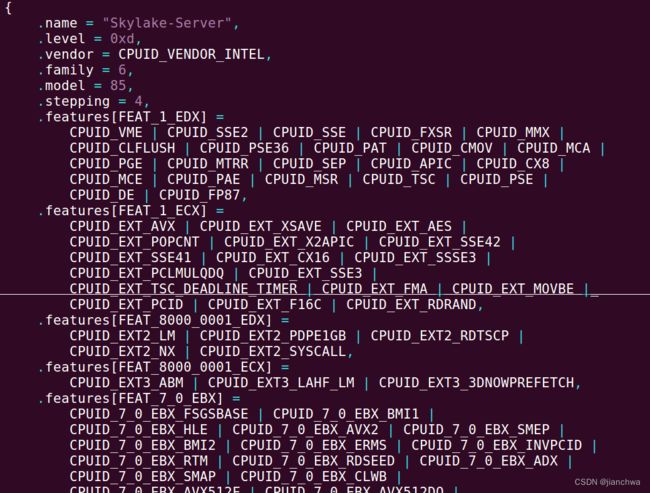

target/i386/cpu.c builtin_x86_defs[]中定义了主流的cpu,下图是一个列子:

参考代码:

builtin_x86_defs[]

x86_cpu_register_types()

---

type_register_static(&x86_cpu_type_info);

for (i = 0; i < ARRAY_SIZE(builtin_x86_defs); i++) {

x86_register_cpudef_types(&builtin_x86_defs[i]);

}

type_register_static(&max_x86_cpu_type_info);

type_register_static(&x86_base_cpu_type_info);

---

x86_register_cpudef_types()

-> x86_register_cpu_model_type()

---

g_autofree char *typename = x86_cpu_type_name(name);

TypeInfo ti = {

.name = typename,

.parent = TYPE_X86_CPU,

.class_init = x86_cpu_cpudef_class_init,

.class_data = model,

};

type_register(&ti);

---

x86_cpu_initfn()

-> x86_cpu_load_model()

---

object_property_set_uint(OBJECT(cpu), "min-level", def->level,

&error_abort);

object_property_set_uint(OBJECT(cpu), "min-xlevel", def->xlevel,

&error_abort);

object_property_set_int(OBJECT(cpu), "family", def->family, &error_abort);

object_property_set_int(OBJECT(cpu), "model", def->model, &error_abort);

object_property_set_int(OBJECT(cpu), "stepping", def->stepping,

&error_abort);

object_property_set_str(OBJECT(cpu), "model-id", def->model_id,

&error_abort);

for (w = 0; w < FEATURE_WORDS; w++) {

env->features[w] = def->features[w];

}

---

在cpu的定义中,又包含cpuid信息,这些信息会在cpu instance初始化时被设置进去,之后,可以通过cpu_x86_cpuid()访问,在函数kvm_arch_init_vcpu()通过KVM_SET_CPUID2设置给kvm模块;

qemu选择cpu model的方式,参考链接:

QEMU / KVM CPU model configuration — QEMU documentationhttps://qemu-project.gitlab.io/qemu/system/qemu-cpu-models.html

Two ways to configure CPU models with QEMU / KVM

- Host passthrough This passes the host CPU model features, model, stepping, exactly to the guest. Note that KVM may filter out some host CPU model features if they cannot be supported with virtualization. Live migration is unsafe when this mode is used as libvirt / QEMU cannot guarantee a stable CPU is exposed to the guest across hosts. This is the recommended CPU to use, provided live migration is not required.

- Named model QEMU comes with a number of predefined named CPU models, that typically refer to specific generations of hardware released by Intel and AMD. These allow the guest VMs to have a degree of isolation from the host CPU, allowing greater flexibility in live migrating between hosts with differing hardware. @end table

- Host model. This uses the QEMU “Named model” feature, automatically picking a CPU model that is similar the host CPU, and then adding extra features to approximate the host model as closely as possible. This does not guarantee the CPU family, stepping, etc will precisely match the host CPU, as they would with “Host passthrough”, but gives much of the benefit of passthrough, while making live migration safe.

CD.2.2 内核端

内核中对KVM_SET_CPUID的处理为:

kvm_vcpu_ioctl_set_cpuid2()

-> kvm_set_cpuid()

---

kvfree(vcpu->arch.cpuid_entries);

vcpu->arch.cpuid_entries = e2;

^^^^^^^^^^^^^

vcpu->arch.cpuid_nent = nent;

kvm_update_cpuid_runtime(vcpu);

kvm_vcpu_after_set_cpuid(vcpu);

---还会根据cpuid的变化,对vcpu做些调整;

之后,kvm会根据vcpu->arch.cpuid_entries来模拟cpuid,参考代码:

emulator_get_cpuid()

-> kvm_cpuid()

-> kvm_find_cpuid_entry()

-> cpuid_entry2_find(vcpu->arch.cpuid_entries, vcpu->arch.cpuid_nent, f, i)

---

for (i = 0; i < nent; i++) {

e = &entries[i];

if (e->function == function &&

(!(e->flags & KVM_CPUID_FLAG_SIGNIFCANT_INDEX) || e->index == index))

return e;

}

---

TDP MMU

TM.1 Miscs

TM.1.1 MMU-writable

参考链接:KVM Lock Overview![]() https://www.kernel.org/doc/html/next/virt/kvm/locking.html

https://www.kernel.org/doc/html/next/virt/kvm/locking.html

MMU-writable means the gfn is writable in the guest’s mmu and it is not write-protected by shadow page write-protection.

MMU-writable是指这个spte对应的gfn没有被write-protected;在引入tdp之后,write-protect主要被用在dirty log上;参考代码;

write_protect_gfn()

---

for_each_tdp_pte_min_level(iter, root, min_level, gfn, gfn + 1) {

if (!is_shadow_present_pte(iter.old_spte) ||

!is_last_spte(iter.old_spte, iter.level))

continue;

new_spte = iter.old_spte &

~(PT_WRITABLE_MASK | shadow_mmu_writable_mask);

if (new_spte == iter.old_spte)

break;

tdp_mmu_set_spte(kvm, &iter, new_spte);

spte_set = true;

}

---

以上代码中,shadow_mmu_writable_mask被去掉了;对于MMU-write的spte,可以进入fastpath,对于fastpath的解释,可以参考代码comment:

fast_page_fault()

-> page_fault_can_be_fast()

---

/*

* #PF can be fast if:

*

* 1. The shadow page table entry is not present and A/D bits are

* disabled _by KVM_, which could mean that the fault is potentially

* caused by access tracking (if enabled). If A/D bits are enabled

* by KVM, but disabled by L1 for L2, KVM is forced to disable A/D

* bits for L2 and employ access tracking, but the fast page fault

* mechanism only supports direct MMUs.

* 2. The shadow page table entry is present, the access is a write,

* and no reserved bits are set (MMIO SPTEs cannot be "fixed"), i.e.

* the fault was caused by a write-protection violation. If the

* SPTE is MMU-writable (determined later), the fault can be fixed

* by setting the Writable bit, which can be done out of mmu_lock.

*/

---

fast_page_fault()

---

if (fault->write && is_mmu_writable_spte(spte)) {

new_spte |= PT_WRITABLE_MASK;

...

}

/* Verify that the fault can be handled in the fast path */

if (new_spte == spte ||

!is_access_allowed(fault, new_spte))

break;

fast_pf_fix_direct_spte(vcpu, fault, sptep, spte, new_spte);

-> try_cmpxchg64(sptep, &old_spte, new_spte)

---

TM.1.2 Role

在KVM MMU实现中,可以看到两个role的概念,cpu role和root role;它们代表的是cpu和mmu的配置信息;

我们看下cpu role的计算函数:

kvm_init_mmu()

-> regs = vcpu_to_role_regs()

---

struct kvm_mmu_role_regs regs = {

.cr0 = kvm_read_cr0_bits(vcpu, KVM_MMU_CR0_ROLE_BITS),

.cr4 = kvm_read_cr4_bits(vcpu, KVM_MMU_CR4_ROLE_BITS),

.efer = vcpu->arch.efer,

};

---

其中涉及到了几个关键的寄存器及其bit位:

- CR0.PG,Paging,If 1, enable paging and use the CR3 register, else disable paging

- CR0.WP,Write-protect,when set, the CPU can't write to read-only pages when privilege level is 0

- CR4.PSE,Page size extension,if set, enables 32-bit paging mode to use 4 MiB huge pages in addition to 4 KiB pages. If PAE is enabled or the processor is in x86-64 long modethis bit is ignored.

-

CR4.PAE,Physical address extension,If set, changes page table layout to translate 32-bit virtual addresses into extended 36-bit physical addresses.

-

CR4.LA57,57-Bit Linear Addresses,If set, enables 5-Level Paging

-

CR4.SMEP,Supervisor Mode Execution Protection Enable,If set, execution of code in a higher ring generates a fault.

-

CR4.SMAP,Supervisor Mode Access Prevention Enable,If set, access of data in a higher ring generates a fault

-

CR4.PKE,Protection Key Enable

-

EFER.LMA,Long mode active

结合以上几个关键寄存器和Bit位,就可以理解其生成的role的关键位的含义了。

kvm_init_mmu()

-> regs = vcpu_to_role_regs()

-> cpu_role = kvm_calc_cpu_role(vcpu, ®s)

---

role.base.access = ACC_ALL;

role.base.smm = is_smm(vcpu);

role.base.guest_mode = is_guest_mode(vcpu);

role.ext.valid = 1;

if (!____is_cr0_pg(regs)) {

role.base.direct = 1;

return role;

}

...

if (____is_efer_lma(regs))

role.base.level = ____is_cr4_la57(regs) ? PT64_ROOT_5LEVEL: PT64_ROOT_4LEVEL;

else if (____is_cr4_pae(regs))

role.base.level = PT32E_ROOT_LEVEL;

else

role.base.level = PT32_ROOT_LEVEL;

...

----

cpu role是可以随着Gues VM的配置的变化而变化的,毕竟,我们模拟的是一个x86机器,Guest OS完全有可能配置不同的模式,比如关闭CR0.PG;

下面我们看下root role的计算过程,

kvm_calc_tdp_mmu_root_page_role()

---

role.access = ACC_ALL;

role.cr0_wp = true;

role.efer_nx = true;

role.smm = cpu_role.base.smm;

role.guest_mode = cpu_role.base.guest_mode;

role.ad_disabled = !kvm_ad_enabled();

role.level = kvm_mmu_get_tdp_level(vcpu);

role.direct = true;

role.has_4_byte_gpte = false;

---

root role可能会变化的,只有role.smm和role.guest_mode

当cpu role发生变化时,可能会调至kvm_mmu callbacks的变化

init_kvm_tdp_mmu()

---

if (cpu_role.as_u64 == context->cpu_role.as_u64 &&

root_role.word == context->root_role.word)

return;

...

if (!is_cr0_pg(context))

context->gva_to_gpa = nonpaging_gva_to_gpa;

else if (is_cr4_pae(context))

context->gva_to_gpa = paging64_gva_to_gpa;

else

context->gva_to_gpa = paging32_gva_to_gpa;

---

变化的触发时机,可以参考以下两个例子:

kvm_post_set_cr0()

---

if ((cr0 ^ old_cr0) & KVM_MMU_CR0_ROLE_BITS)

kvm_mmu_reset_context(vcpu);

---

kvm_post_set_cr4()

---

if ((cr4 ^ old_cr4) & KVM_MMU_CR4_ROLE_BITS)

kvm_mmu_reset_context(vcpu);

---

kvm_mmu_reset_context()

-> kvm_mmu_unload()

-> kvm_mmu_free_roots()

-> kvm_init_mmu()

在实践中频繁切换role的场景,可以参考以下链接:

[PATCH v2 00/18] KVM: MMU: do not unload MMU roots on all role changes - Paolo Bonzini![]() https://lore.kernel.org/kvm/[email protected]/

https://lore.kernel.org/kvm/[email protected]/

The TDP MMU has a performance regression compared to the legacy MMU when CR0 changes often. This was reported for the grsecurity kernel, which uses CR0.WP to implement kernel W^X. In that case, each change to CR0.WP unloads the MMU and causes a lot of unnecessary work.

TM.1.1.3 TDP 遍历

TDP页表的遍历函数为:

for_each_tdp_pte()

-> for_each_tdp_pte_min_level()

-> tdp_iter_next()

-> try_step_down()

try_step_down()

---

if (iter->level == iter->min_level)

return false;

/*

* Reread the SPTE before stepping down to avoid traversing into page

* tables that are no longer linked from this entry.

*/

iter->old_spte = kvm_tdp_mmu_read_spte(iter->sptep);

child_pt = spte_to_child_pt(iter->old_spte, iter->level);

---

return (tdp_ptep_t)__va(spte_to_pfn(spte) << PAGE_SHIFT);

---

iter->level--;

iter->pt_path[iter->level - 1] = child_pt;

iter->gfn = round_gfn_for_level(iter->next_last_level_gfn, iter->level);

iter->sptep = iter->pt_path[iter->level - 1] +

SPTE_INDEX(iter->gfn << PAGE_SHIFT, iter->level);

iter->old_spte = kvm_tdp_mmu_read_spte(iter->sptep);

---

TM.2 Callers

有哪些代码会调用TDP MMU?

- vCPU faults,EPT/NPT violations/misconfigurations,参考代码:

vCPU faults主要有两种情况要处理,同时,也对应着fast path和slow path Fast path, 主要是处理write-protected page for dirty log direct_page_fault() -> fast_page_fault() -> page_fault_can_be_fast() -> fast_pf_fix_direct_spte() -> cmpxchg64() ... Slow path,主要是用于setup page tables from GPA -> HPA,这其中包括: - 通过get_user_pages_unlocked()获取QEMU的内存对应的物理内存 - 创建中间的PDPT及上下级关系 - 填入PTE direct_page_fault() -> mmu_topup_memory_caches() // prepare kvm_mmu_page and bare pages for following steps -> kvm_faultin_pfn() -> __gfn_to_pfn_memslot() -> hva_to_pfn() -> hva_to_pfn_slow() -> get_user_pages_unlocked() -> ... read_lock(&vcpu->kvm->mmu_lock) kvm_tdp_mmu_map() --- tdp_mmu_for_each_pte(iter, mmu, fault->gfn, fault->gfn + 1) { ... if (iter.level == fault->goal_level) break; if (!is_shadow_present_pte(iter.old_spte)) { ... sp = tdp_mmu_alloc_sp(vcpu); tdp_mmu_init_child_sp(sp, &iter); ... tdp_mmu_link_sp(vcpu->kvm, &iter, sp, account_nx, true); ... } } ret = tdp_mmu_map_handle_target_level(vcpu, fault, &iter); -> make_spte()/make_mmio_spte() -> tdp_mmu_set_spte_atomic() -> try_cmpxchg64(sptep, &iter->old_spte, new_spte) --- - Enable/Disable Dirty Logging,Dirty page log主要用于虚拟机热迁移,参考代码:

关于Dirty logging,有两部分的内容, 首先是开启dirty log,直接设置到memory region上 kvm_vm_ioctl_set_memory_region() -> kvm_set_memory_region() -> __kvm_set_memory_region() -> kvm_set_memslot() -> kvm_commit_memory_region() -> kvm_arch_commit_memory_region() -> kvm_mmu_slot_apply_flags() -> kvm_mmu_zap_collapsible_sptes() // write_lock on mmu_lock -> kvm_tdp_mmu_zap_collapsible_sptes() -> kvm_mmu_slot_try_split_huge_pages() -> kvm_tdp_mmu_try_split_huge_pages() -> kvm_mmu_slot_remove_write_access() // write_lock on mmu_lock -> kvm_tdp_mmu_wrprot_slot() 接着是获取dirty log,先获取再重新打开write-protect kvm_vm_ioctl() -> kvm_vm_ioctl_get_dirty_log() // KVM_GET_DIRTY_LOG -> kvm_get_dirty_log_protect() --- // get a snapshot of dirty pages and reenable dirty page // tracking for the corresponding pages. KVM_MMU_LOCK(kvm); for (i = 0; i < n / sizeof(long); i++) { unsigned long mask; gfn_t offset; if (!dirty_bitmap[i]) continue; flush = true; mask = xchg(&dirty_bitmap[i], 0); dirty_bitmap_buffer[i] = mask; offset = i * BITS_PER_LONG; kvm_arch_mmu_enable_log_dirty_pt_masked(kvm, memslot, offset, mask); } KVM_MMU_UNLOCK(kvm); --- kvm_arch_mmu_enable_log_dirty_pt_masked() -> kvm_mmu_slot_gfn_write_protect() // write protect the huge pages to split then into 4K ones // write protect specific level of tdp -> kvm_tdp_mmu_write_protect_gfn() -> kvm_mmu_write_protect_pt_masked() -> kvm_tdp_mmu_clear_dirty_pt_masked() - MMU Notifier, 当QEMU的memory mapping发生变化时,会触发mmu notifier,参考代码:

涉及到的MMU Notifier有下面几种: - test_young - invalidate - change_pte test-young notifier ================================== DAMON use this feature __mmu_notifier_test_young() -> test_young callback -> kvm_mmu_notifier_test_young() -> kvm_handle_hva_range_no_flush() -> __kvm_handle_hva_range() // write_lock on mmu_lock -> kvm_test_age_gfn() -> kvm_tdp_mmu_test_age_gfn() -> kvm_tdp_mmu_handle_gfn() -> test_age_gfn() page idle tracking use this feature ptep_clear_young_notify() -> mmu_notifier_clear_young() -> __mmu_notifier_clear_young() -> kvm_mmu_notifier_clear_young() // write_lock -> kvm_age_gfn() -> kvm_tdp_mmu_age_gfn_range() -> age_gfn_range() invalidate notifier ================================ mmu_notifier_invalidate_range_start() // invoked before real unmap operations such as unmap_single_vma() -> kvm_mmu_notifier_invalidate_range_start() //write lock -> kvm_unmap_gfn_range() -> kvm_tdp_mmu_unmap_gfn_range() -> __kvm_tdp_mmu_zap_gfn_range() -> zap_gfn_range() when vm balloon inflates, the qemu backend invokes MADV_DONTNEED to unmap_page_range() -> unmap_single_vma() change_pte notifier ============================================ wp_page_copy() -> set_pte_at_notify() -> mmu_notifier_change_pte() // invoked before set_pte_at() !!! -> __mmu_notifier_change_pte() -> .change_pte() kvm_mmu_notifier_change_pte() -> kvm_handle_hva_range() -> __kvm_handle_hva_range() // write_lock on mmu_lock -> .handler callback kvm_set_spte_gfn() -> kvm_tdp_mmu_set_spte_gfn() -> set_spte_gfn() KSM (kernel share memory) is another feature can touch this race Refer to the comment of set_pte_at_notify() /* * set_pte_at_notify() sets the pte _after_ running the notifier. * This is safe to start by updating the secondary MMUs, because the primary MMU * pte invalidate must have already happened with a ptep_clear_flush() before * set_pte_at_notify() has been invoked. Updating the secondary MMUs first is * required when we change both the protection of the mapping from read-only to * read-write and the pfn (like during copy on write page faults). Otherwise the * old page would remain mapped readonly in the secondary MMUs after the new * page is already writable by some CPU through the primary MMU. */ And the commit comment, mm: mmu_notifier: fix inconsistent memory between secondary MMU and host There is a bug in set_pte_at_notify() which always sets the pte to the new page before releasing the old page in the secondary MMU. At this time, the process will access on the new page, but the secondary MMU still access on the old page, the memory is inconsistent between them The below scenario shows the bug more clearly: at the beginning: *p = 0, and p is write-protected by KSM or shared with parent process CPU 0 CPU 1 write 1 to p to trigger COW, set_pte_at_notify will be called: *pte = new_page + W; /* The W bit of pte is set */ *p = 1; /* pte is valid, so no #PF */\ | return back to secondary MMU, then ======> Here !!!!! the secondary MMU read p, but get: | *p == 0; / /* * !!!!!! * the host has already set p to 1, but the secondary * MMU still get the old value 0 */ call mmu_notifier_change_pte to release old page in secondary MMU We can fix it by release old page first, then set the pte to the new page.

TM.3 Fault in parallel

TDP Fault可以在多个vCPU中并行进行,这主要得益与以下两个Patch set,

Introduce the TDP MMU [LWN.net]![]() https://lwn.net/Articles/832835/Allow parallel MMU operations with TDP MMU [LWN.net]

https://lwn.net/Articles/832835/Allow parallel MMU operations with TDP MMU [LWN.net]![]() https://lwn.net/Articles/844950/

https://lwn.net/Articles/844950/

This work was motivated by the need to handle page faults in parallel for

very large VMs. When VMs have hundreds of vCPUs and terabytes of memory,

KVM's MMU lock suffers extreme contention, resulting in soft-lockups and

long latency on guest page faults

实现TDP Fault in parallel的方法,主要是两点:

- TDP MMU page table memory is protected with RCU and freed in RCU callbacks to allow multiple threads to operate on that memory concurrently.

- The MMU lock was changed to an rwlock on x86. This allows the page fault handlers to acquire the MMU lock in read mode and handle page faults in parallel, and other operations to maintain exclusive use of the lock by acquiring it in write mode.

vCPU fault路径使用readlock on mmu_lock,其基础是,TDP可以使用cmpxchg指令直接更改页表,这可以让更新操作呈事务性;而其他路径,比如dirty logging和MMU notifier相关则依然使用write lock on mmu lock;

RCU保证TDP page在访问期间不被释放;这个释放体现在哪里呢?

我们先看下Host上页表的释放操作是怎么发生的,参考代码:

pte级别的page的释放,分为三部:

- clean pte,这样MMU就无法再通过pgd获取mapping

- flush tlb,这样之前的pte的痕迹就彻底消失了

- free page,这是就可以安全释放page了

zap_pte_range()

-> series of pte_clear_not_present_full()

// After clear the pte, the MMU cannot get the mapping any more

// but the mapping still exists in tlb. We need to flush them

// before free the pfn, otherwise UFA comes up

-> tlb_flush_mmu()

-> tlb_flush_mmu_tlbonly()

-> tlb_flush()

-> mmu_notifier_invalidate_range()

-> tlb_flush_mmu_free()

-> tlb_batch_pages_flush()

-> free_pages_and_swap_cache()

-> release_pages()

那pmd级别是什么时候释放的呢?参考如下代码:

__mmdrop()

-> mm_free_pgd()

-> pgd_free()

-> pgd_mop_up_pmds()

-> mop_up_one_pmd()

-> pmd_free()TDP MMU的spte的释放,主要通过函数zap_gfn_range(),参考代码:

zap_gfn_range()

---

gfn_t max_gfn_host = 1ULL << (shadow_phys_bits - PAGE_SHIFT);

bool zap_all = (start == 0 && end >= max_gfn_host);

...

int min_level = zap_all ? root->role.level : PG_LEVEL_4K;

// min_level决定了for_each_tdp_pte_min_level()遍历的pte的level

// 当zap_all为false时,min_level为PG_LEVEL_4K,此时访问的是pte

...

for_each_tdp_pte_min_level(iter, root->spt, root->role.level,

min_level, start, end) {

...

if (!shared) {

tdp_mmu_set_spte(kvm, &iter, 0);

flush = true;

} else if (!tdp_mmu_zap_spte_atomic(kvm, &iter)) {

iter.old_spte = READ_ONCE(*rcu_dereference(iter.sptep));

goto retry;

}

...

}

---

Both tdp_mmu_set_spte()/tdp_mmu_zap_spte_atomic() will invoke

__handle_changed_spte() finally

__handle_changed_spte()

---

/*

* Recursively handle child PTs if the change removed a subtree from

* the paging structure.

*/

//只有非leaf,也就是pdpte级别时,才会调用到这里

if (was_present && !was_leaf && (pfn_changed || !is_present))

handle_removed_tdp_mmu_page(kvm,

spte_to_child_pt(old_spte, level), shared);

---

-> call_rcu(&sp->rcu_head, tdp_mmu_free_sp_rcu_callback);

我看到,只有zap_all为true的时候,zap_gfn_range()才会从更高级别的pte执行zap,而此时才能执行对对应page所对应的下一级的pdpte执行递归释放;也只有此时,RCU才能排上用场。同时,考虑mmu_lock,需要是read_lock()并且zap_all为true,查看代码,只有下面的场景:

kvm_mmu_zap_all_fast()

---

if (is_tdp_mmu_enabled(kvm)) {

read_lock(&kvm->mmu_lock);

kvm_tdp_mmu_zap_invalidated_roots(kvm);

read_unlock(&kvm->mmu_lock);

}

---

kvm_tdp_mmu_zap_invalidated_roots()

---

flush = zap_gfn_range(kvm, root, 0, -1ull, true, flush, true);

---IRQ

IRQ.1 irqchip模式

qemu配置irqchip有三种模式:

- off qemu emulates pic/ioapic and lapic

- split qemu emulates pic/ioapic, kvm emulates lapic

- on kvm emulates pic/ioapic and lapic

QEMU模拟pic/ioapc和lapic是它本身就具备的,毕竟KVM只是QEMU的一种虚拟化加速器;使用KVM在内核态模拟irqchip则是处于性能上的考虑;但是为什么会有split模式呢?参考链接:

Performant Security Hardening![]() https://www.linux-kvm.org/images/3/3d/01x02-Steve_Rutherford-Performant_Security_Hardening_of_KVM.pdf

https://www.linux-kvm.org/images/3/3d/01x02-Steve_Rutherford-Performant_Security_Hardening_of_KVM.pdf

- The kernel irqchip provides a significant perf boost over userspace irqchip

- Take the best of Userspace and Kernel Irqchips;The PIC and I/OAPIC aren’t used often by modern VMs, but the LAPIC is. So move the PIC and I/OAPIC up to userspace, and add necessary API to communicate between userspace and the in-kernel APIC

其实总结起来就是:对于高性能设备,目前基本都是用MSI/MSIx,这时只需要LAPIC;PIC和IOAPIC往往对应的都是慢速设备。保留内核态的PIC/IOAPC模拟代码,不利于内核稳定。

QEMU社区对于pc-q35是默认采用on还是split,还有过一番讨论,参考链接:

[for-4.1,v2] q35: Revert to kernel irqchip - Patchwork![]() https://patchwork.ozlabs.org/project/qemu-devel/patch/[email protected]/控制QEMU是采用on还是split的代码如下:

https://patchwork.ozlabs.org/project/qemu-devel/patch/[email protected]/控制QEMU是采用on还是split的代码如下:

kvm_irqchip_create()

---

ret = kvm_arch_irqchip_create(s);

if (ret == 0) {

if (s->kernel_irqchip_split == ON_OFF_AUTO_ON) {

perror("Split IRQ chip mode not supported.");

exit(1);

} else {

ret = kvm_vm_ioctl(s, KVM_CREATE_IRQCHIP);

}

}

---

int kvm_arch_irqchip_create(KVMState *s)

{

int ret;

if (kvm_kernel_irqchip_split()) {

ret = kvm_vm_enable_cap(s, KVM_CAP_SPLIT_IRQCHIP, 0, 24);

if (ret) {

...

} else {

kvm_split_irqchip = true;

return 1;

}

} else {

return 0;

}

}

bool kvm_kernel_irqchip_split(void)

{

return kvm_state->kernel_irqchip_split == ON_OFF_AUTO_ON;

}

kernel_irqchip_split的值起决定性作用:

- ON_OFF_AUTO_ON,通过KVM_CAP_SPLIT_IRQCHIP通知内核采用split模式;

- ON_OFF_AUTO_OFF,通过KVM_CREAT_IRQCHIP通知内核采用on模式;

kernel_irq_chip_split的值则决定于:

kvm_accel_instance_init()

---

s->kernel_irqchip_allowed = true;

s->kernel_irqchip_split = ON_OFF_AUTO_AUTO;

---

kvm_init()

---

if (s->kernel_irqchip_split == ON_OFF_AUTO_AUTO) {

s->kernel_irqchip_split = mc->default_kernel_irqchip_split ? ON_OFF_AUTO_ON : ON_OFF_AUTO_OFF;

}

---

machine的定义中的default_kernel_irqchip_slit的值;目前的qemu 7.0.0的代码中,x86的q35,

pc-q35-7.0

... false

pc-q35-4.1 ^

pc-q35-4.0.1 |

pc-q35-4.0 true

pc-q35-3.1 |

pc-q35-3.0 v

... false

pc-q35-2.4只有pc-q35-4.0默认为true,向上和向下均为false;而作为i386默认machine type的pc_i440fx则全系没有开;也就是说,kernel-irqchip=on 依然是主流。

MachineClass.is_default代表其是否为该架构的默认机型;QEMU 7.0.0版本的默认机型为pc-i440fx-7.0

IRQ.2 架构

KVM的IRQ模拟主要分为三个部分:

- 接口,应用模块通过这个接口触发中断;

- 模拟,这里主要模拟irqchip的内部逻辑;

- 注入,向虚拟机注入中断;

下面我们分别看下各自的实现。

IRQ.2.1 GSI与Router

GSI,global system interrupt,参考自链接:PCI Interrupt Routing (Navigating the Maze)PCI interrupt Routing (Navigating the Maze)![]() https://people.freebsd.org/~jhb/papers/bsdcan/2007/article/node5.html

https://people.freebsd.org/~jhb/papers/bsdcan/2007/article/node5.html

ACPI uses a cookie system to "name'' interrupts known as Global System Interrupts. Each interrupt controller input pin is assigned a GSI using a fairly simple scheme.

For the 8259A case, the GSIs map directly to ISA IRQs. Thus, IRQ 0 is GSI 0, etc. The APIC case is slightly more complicated, but still simple. Each I/O APIC is assigned a base GSI by the BIOS. Each input pin on the I/O APIC is mapped to a GSI number by adding the pin number (zero-based) to the base GSI. Thus, if an I/O APIC has a base GSI of N, pin 0 on that I/O APIC has a GSI of N, pin 1 has a GSI of N + 1, etc. The I/O APIC with a base GSI of 0 maps the ISA IRQs onto its first 16 input pins. Thus, the ISA IRQs are effectively always mapped 1:1 onto GSIs

总结起来就是:GSI是一个与中断源一一对应的号码;

这里的Router指的是kvm.irq_routing,这是一个hash表,其中保存的是kvm_kernel_irq_routing_entry,hash的index就是gsi;实际上,这是一个从gsi到这个gsi对应的中断的具体信息和处理方法的映射;

kvm_kernel_irq_routing_entry中包含的信息包括:

- 对于INTx,gsi对应的irqchip +pin

- 对于MSIx,gsi对应的address和data

同时,还有这个gsi对应的处理函数:

- 对于pic,kvm_set_pci_rq

- 对于ioapic,kvm_set_ioapic_irq

- 对于msi,kvm_set_msi

kvm_irq_map_gsi()通过gsi获取kvm_kernel_irq_routing_entry,进而获取触发该中断的基本信息和方法。

设置irq routing通过KVM_SET_GSI_ROUTING,kvm_set_irq_routing()会根据传入的表重建一个新的。

QEMU的代码中,关于gsi和irq routes的代码在一起,参考:

irq routes下发到kvm的函数为:

kvm_irqchip_commit_routes()

-> kvm_vm_ioctl(s, KVM_SET_GSI_ROUTING, s->irq_routes)

ioapic

====================================

kvm_pc_setup_irq_routing()

---

KVMState *s = kvm_state;

int i;

assert(kvm_has_gsi_routing());

for (i = 0; i < 8; ++i) {

if (i == 2) {

continue;

}

kvm_irqchip_add_irq_route(s, i, KVM_IRQCHIP_PIC_MASTER, i);

}

for (i = 8; i < 16; ++i) {

kvm_irqchip_add_irq_route(s, i, KVM_IRQCHIP_PIC_SLAVE, i - 8);

}

if (pci_enabled) {

for (i = 0; i < 24; ++i) {

if (i == 0) {

kvm_irqchip_add_irq_route(s, i, KVM_IRQCHIP_IOAPIC, 2);

} else if (i != 2) {

kvm_irqchip_add_irq_route(s, i, KVM_IRQCHIP_IOAPIC, i);

}

}

}

kvm_irqchip_commit_routes(s);

---

kvm_irqchip_add_irq_route()

-> kvm_add_routing_entry()

-> set_gsi()

-> set_bit(gsi, s->used_gsi_bitmap);

这里相当于直接将PIC和IOAPIC的gsi给reserve了

MSI

===============================

kvm_send_msi()

-> kvm_irqchip_send_msi()

---

route = kvm_lookup_msi_route(s, msg);

if (!route) {

int virq;

virq = kvm_irqchip_get_virq(s);

if (virq < 0) {

return virq;

}

route = g_new0(KVMMSIRoute, 1);

route->kroute.gsi = virq;

route->kroute.type = KVM_IRQ_ROUTING_MSI;

route->kroute.flags = 0;

route->kroute.u.msi.address_lo = (uint32_t)msg.address;

route->kroute.u.msi.address_hi = msg.address >> 32;

route->kroute.u.msi.data = le32_to_cpu(msg.data);

kvm_add_routing_entry(s, &route->kroute);

kvm_irqchip_commit_routes(s);

QTAILQ_INSERT_TAIL(&s->msi_hashtab[kvm_hash_msi(msg.data)], route,

entry);

}

assert(route->kroute.type == KVM_IRQ_ROUTING_MSI);

return kvm_set_irq(s, route->kroute.gsi, 1);

---

其中virq就是gsi,

kvm_irqchip_get_virq()

-> next_virq = find_first_zero_bit(s->used_gsi_bitmap, s->gsi_count);IRQ.2.2 接口

目前触发中断的接口主要有两个:

- ioctl KVM_RQ_LINE,这个主要是给qemu用,参考代码:

kvm_vm_ioctl_irq_line() -> kvm_set_irq() --- i = kvm_irq_map_gsi(kvm, irq_set, irq); r = irq_set[i].set(&irq_set[i], kvm, irq_source_id, level, line_status); --- - irqfd,这个主要是给内核态用,比如vhost,

kvm_irqfd_assign() --- ... init_waitqueue_func_entry(&irqfd->wait, irqfd_wakeup); init_poll_funcptr(&irqfd->pt, irqfd_ptable_queue_proc); ... irqfd_update(kvm, irqfd); ... --- irqfd_update() --- n_entries = kvm_irq_map_gsi(kvm, entries, irqfd->gsi); e = entries; irqfd->irq_entry = *e; --- eventfd_signal() -> eventfd_signal_mask() -> wake_up_locked_poll(&ctx->wqh, EPOLLIN | mask) -> __wake_up_common() -> irqfd_wakeup() irqfd_wakeup() --- irq = irqfd->irq_entry kvm_arch_set_irq_inatomic(&irq, kvm, KVM_USERSPACE_IRQ_SOURCE_ID, 1, false) ---

IRQ.2.3 模拟

模拟主要是指模拟对应的irqchip行为的部分,在我的另外一篇里,KVM CPU虚拟化![]() http://t.csdn.cn/B7fTC

http://t.csdn.cn/B7fTC

3.2 PIC及其虚拟化和3.3.2 APIC模拟,有解释,这里不在赘述,只附上一些代码:

kvm_set_pic_irq()

-> kvm_pic_set_irq()

-> pic_update_irq() // Simulate PIC internal data structure

-> pic_unlock()

---

kvm_for_each_vcpu(i, vcpu, s->kvm) {

if (kvm_apic_accept_pic_intr(vcpu)) {

kvm_make_request(KVM_REQ_EVENT, vcpu);

kvm_vcpu_kick(vcpu);

return;

}

}

---

kvm_set_ioapic_irq()

-> ioapic_set_irq()

-> ioapic_service()

-> kvm_irq_delivery_to_apic()

-> kvm_apic_set_irq()

-> __apic_accept_irq()

---

if (static_call(kvm_x86_deliver_posted_interrupt)(vcpu, vector)) {

kvm_lapic_set_irr(vector, apic);

kvm_make_request(KVM_REQ_EVENT, vcpu);

kvm_vcpu_kick(vcpu);

}

---

kvm_set_msi()

-> kvm_irq_delivery_to_apic()

// same with the one above

kvm_vcpu_kick()

-> smp_send_reschedule() // IPI interruptIRQ.2.4 注入

中断的注入,有两种方式,在上一小节提到的我的另外一篇文章的3.3.2 APIC模拟和3.3.3 VAPIC有详解;这里只简单的提下:

- 给目标vCPU所在的CPU发送IPI,使其VM-Exit,然后通过VMCS的VM-entry interruption-information field进行event inject,参考Intel SDM 3 24.8.3 VM-Entry Controls for Event Injection,

vcpu_enter_guest() // check KVM_REQ_EVENT -> kvm_cpu_get_interrupt() -> kvm_cpu_get_extint() -> kvm_pic_read_irq() // pic internal logic -> kvm_get_apic_interrupt() // lapic internal logic -> vmx_inject_irq() --- intr = irq | INTR_INFO_VALID_MASK; if (vcpu->arch.interrupt.soft) { intr |= INTR_TYPE_SOFT_INTR; vmcs_write32(VM_ENTRY_INSTRUCTION_LEN, vmx->vcpu.arch.event_exit_inst_len); } else intr |= INTR_TYPE_EXT_INTR; vmcs_write32(VM_ENTRY_INTR_INFO_FIELD, intr); --- - 直接给目标vCPU所在的cpu发送post irq,这种方式不需要触发VM-Exit,

kvm_apic_set_irq() -> __apic_accept_irq() --- if (static_call(kvm_x86_deliver_posted_interrupt)(vcpu, vector)) { kvm_lapic_set_irr(vector, apic); kvm_make_request(KVM_REQ_EVENT, vcpu); kvm_vcpu_kick(vcpu); } --- vmx_deliver_posted_interrupt() -> kvm_vcpu_trigger_posted_interrupt()

Nest Virtualization

Nest Virtualization的原理,我们主要参考链接:

The Turtles Project: Design and Implementation of Nested Virtualization![]() https://www.usenix.org/legacy/events/osdi10/tech/full_papers/Ben-Yehuda.pdf

https://www.usenix.org/legacy/events/osdi10/tech/full_papers/Ben-Yehuda.pdf

NEST.1 CPU

NEST.1.1 概述

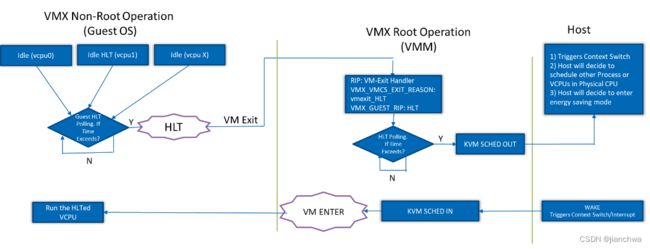

实现CPU的Nested Virtulization的基本原理,参考下图:

让L2 Guest与L1 Hypervisor都运行在L0上,而不是L2运行在L1上;因为Intel的VMX(或者其他虚拟化硬件拓展)都不会支持这种无限套娃模式。

VMCS会出现以下多个:

- vmcs(0, 1),L0 Hypervisor用它来运行L1 Hypervisor;

- vmcs(1, 2),L1 Hypervisor为L2 Guest准备,但是它并不会用来运行L2,因为真正运行L2的是L0;

- vmcs(0, 2),L0 Hypervisor用它来运行L2 Guest,从vmcs(1, 2)转换而来;vmcs(1, 2)中的地址都是GFN,L0需要将它转换成PFN;

vmcs(0, 2)的生成,参考下面的一段描述:

The control data of vmcs(1, 2) and vmcs(0, 1) must be merged to correctly emulate the processor behavior. For example, consider the case where L1 specifies to trap an event EA in vmcs(1, 2) but L0 does not trap such event for L1 (i.e., a trap is not specified in vmcs(0, 1)). To forward the event EA to L1, L0 needs to specify the corresponding trap in vmcs(0, 2). In addition, the field used by L1 to inject events to L2 needs to be merged, as well as the fields used by the processor to specify the exit cause.

虽然在概念里,L2 Guest在L1 Hypervisor之上,实际上,两者都是L0 Hypervisor的Guest;接来下,我们看下一次L2 Guest的一次VM-Exit的处理过程;

其中,'v'符号代表从vmcs信息同步,'->'代表vmptrld +vmlaunch,'r/w'代表vmread/vmwrite;

L1 Hypervisor需要多次vmread/write,这会导致VM-exit,性能较差;为此,intel vmx引入了shadow vmcs,参考SDM-3 24.4.2 Guest Non-Register State,

VMCS link pointer (64 bits). If the “VMCS shadowing” VM-execution control is 1, the VMREAD and VMWRITE instructions access the VMCS referenced by this pointer (see Section 24.10).

The VMREAD and VMWRITE instructions can be used in VMX non-root operation to access a shadow VMCS but not an ordinary VMCS. This fact results from the following:

- If the “VMCS shadowing” VM-execution control is 0, execution of the VMREAD and VMWRITE instructions in VMX non-root operation always cause VM exits;

- If the “VMCS shadowing” VM-execution control is 1, execution of the VMREAD and VMWRITE instructions in VMX non-root operation can access the VMCS referenced by the VMCS link pointer;

于是执行过程,就变成了:

其中,‘s’代表的是vmcs的link pointer的指向。关于Shadow VMCS还可以参考链接:

Nested virtualization: shadow turtles![]() https://www.linux-kvm.org/images/e/e9/Kvm-forum-2013-nested-virtualization-shadow-turtles.pdf

https://www.linux-kvm.org/images/e/e9/Kvm-forum-2013-nested-virtualization-shadow-turtles.pdf

NEST.1.1 VMX Instructions

本小节,我们主要看下L1 Hypervisor执行虚拟化拓展的指令时,L0如果模拟的;

首先,我们需要知道,kvm如何使用几个主要VMX Instructions,

- vmcall,This instruction allows software in VMX non-root operation to call the VMM for

service. A VM exit occurs, transferring control to the VMM.kvm_send_ipi_mask() -> __send_ipi_mask() -> kvm_hypercall4(KVM_HC_SEND_IPI ...) --- asm volatile(KVM_HYPERCALL : "=a"(ret) : "a"(nr), "b"(p1), "c"(p2), "d"(p3), "S"(p4) : "memory"); --- #define KVM_HYPERCALL \ ALTERNATIVE("vmcall", "vmmcall", X86_FEATURE_VMMCALL) kvm_emulate_hypercall() -> kvm_pv_send_ipi() // KVM_HC_SEND_IPI -> __pv_send_ipi() -> kvm_apic_set_irq() - vmxon,This instruction causes the processor to enter VMX operation

kvm_create_vm() -> hardware_enable_all() --- raw_spin_lock(&kvm_count_lock); kvm_usage_count++; if (kvm_usage_count == 1) { atomic_set(&hardware_enable_failed, 0); on_each_cpu(hardware_enable_nolock, NULL, 1); if (atomic_read(&hardware_enable_failed)) { hardware_disable_all_nolock(); r = -EBUSY; } } raw_spin_unlock(&kvm_count_lock); --- hardware_enable_nolock() -> kvm_arch_hardware_enable() -> hardware_enable() // vmx.c -> kvm_cpu_vmxon() 在创建第一个vm的时候,才会vmxon - vmxoff, This instruction causes the processor to leave VMX operation

-

vmclear ,This instruction takes a single 64-bit operand that is in memory. The

instruction sets the launch state of the VMCS referenced by the operand to “clear”,

renders that VMCS inactive, and ensures that data for the VMCS have been written

to the VMCS-data area in the referenced VMCS region. If the operand is the same

as the current-VMCS pointer, that pointer is made invalid.kvm_dying_cpu() -> hardware_disable_nolock() -> kvm_arch_hardware_disable() -> hardware_disable() // vmx.c -> vmclear_local_loaded_vmcss() -> __loaded_vmcs_clear() -> vmcs_clear() -> cpu_vmxoff() vmxon也有一个几乎一样的路径,不过是反过来的,它的起始点在kvm_starting_cpu; -

vmptrld,This instruction takes a single 64-bit source operand that is in memory. It

makes the referenced VMCS active and current, loading the current-VMCS pointer

with this operand and establishes the current VMCS based on the contents of

VMCS-data area in the referenced VMCS region. Because this makes the referenced

VMCS active, a logical processor may start maintaining on the processor some of

the VMCS data for the VMCS;vcpu_load() finish_task_switch() -> fire_sched_in_preempt_notifiers() -> kvm_sched_in() -> kvm_arch_vcpu_load() kvm_arch_vcpu_ioctl_run() -> vcpu_load() -> kvm_arch_vcpu_load() kvm_arch_vcpu_load() -> vmx_vcpu_load() -> vmx_vcpu_load_vmcs() -> vmcs_load() -> vmx_asm1(vmptrld, "m"(phys_addr), vmcs, phys_addr) -

vmlaunch/vmresume,This instruction launches/resumes a virtual machine managed by the VMCS. A VM entry occurs, transferring control to the VM.

vcpu_enter_guest() -> kvm_x86_ops.run() vmx_vcpu_run() -> vmx_vcpu_enter_exit() ->__vmx_vcpu_run() -> vmlaunch/vmresume

模拟vmx instructions的回调可以参考函数:nested_vmx_hardware_setup()。

启动一个vm的过程,vmxon - > vmptrld ->vmlaunch,我们首先看下这几个指令是如何模拟的:

- vmxon

handle_vmon() -> sanity check -> enter_vmx_operation() -> per vcpu resources, include shadow vmcs - vmptrld

handle_vmptrld() --- if (vmx->nested.current_vmptr != vmptr) { ... kvm_vcpu_map(vcpu, gpa_to_gfn(vmptr), &map); new_vmcs12 = map.hva; // Before release previous L2 vmcs, sync its vmcs02 to vmcs12 nested_release_vmcs12(vcpu); -> copy_vmcs02_to_vmcs12_rare(vcpu, get_vmcs12(vcpu)) -> kvm_vcpu_write_guest_page(vcpu, vmx->nested.current_vmptr >> PAGE_SHIFT, vmx->nested.cached_vmcs12, 0, VMCS12_SIZE); /* * Load VMCS12 from guest memory since it is not already * cached. */ memcpy(vmx->nested.cached_vmcs12, new_vmcs12, VMCS12_SIZE); kvm_vcpu_unmap(vcpu, &map, false); set_current_vmptr(vmx, vmptr); --- vmx->nested.current_vmptr = vmptr; if (enable_shadow_vmcs) { secondary_exec_controls_setbit(vmx, SECONDARY_EXEC_SHADOW_VMCS); vmcs_write64(VMCS_LINK_POINTER, __pa(vmx->vmcs01.shadow_vmcs)); vmx->nested.need_vmcs12_to_shadow_sync = true; } --- } --- 注意,此时只是做了准备工作,还没有将vmptrld vmcs(0, 2) - vmlaunch

vmx_prepare_switch_to_guest() --- if (vmx->nested.need_vmcs12_to_shadow_sync) nested_sync_vmcs12_to_shadow(vcpu); --- handle_vmlaunch() -> nested_vmx_run() -> nested_vmx_enter_non_root_mode() -> vmx_switch_vmcs(vcpu, &vmx->nested.vmcs02) -> vmx_vcpu_load_vmcs() // vmcs(0, 2) is vmptrld here, then we can use vmread/wmwrite directly -> prepare_vmcs02() // merge vmcs12 and vmcs02 // Till now, we have switch all of the information from L1 to L2 // nested_vmx_run() return 1 if switch success vcpu_run() --- for (;;) { if (kvm_vcpu_running(vcpu)) { r = vcpu_enter_guest(vcpu); } else { r = vcpu_block(kvm, vcpu); } if (r <= 0) break; ... } --- There are two rounds here: - L0 runs L1 - L1 vmlauch L2, and cause vm-exit - vcpu_enter_guest() handles vm-exit caused by launch and invoke handle_launch which switch current vm to L2 - L0 returns to vcpu_run and enter into vcpu_enter_guest again, but now, it starts to execute L2

NEST.2 MMU

NEST.2.1 概述

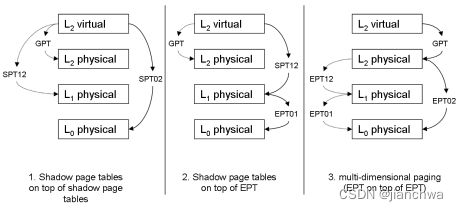

这里分成三种情况:

- shadow on shadow,L2 guest gpt(ggva->ggpa),L1 hypervisor spte12(ggva->gpa),L0 hypervisor spte01(gva->hpa);为了保证L2可以直接运行,L0在运行L2(是的,没错)时,需要使用的是spte02(ggva->hpa),所以,它需要合并spte12(ggva->gpa)和spte01(gva->hpa),同时还需要结合memslot或者gpa->hpa;

- shadow on ept,L2 guest gpt(ggva->ggpa),L1 hypervisor spte12(ggva->gpa),L0 hypervisor ept01(gpa->hpa),这种情况最为简单,当L0运行L2时,使用spte12(ggva->gpa) +ept01(gpa->hpa)组合即可,不需要特殊处理;

- ept on ept,L2 guest gpt(ggva->ggpa),L1 hypervisor ept12 (ggpa->gpa),L0 hypervisor ept01(gpa->hpa),为保证L2可以直接运行,L0在运行L2时,需要gpt(ggva->ggpa) + ept02(ggpa->hpa),所以,需要合并ept12(ggpa->gpa)和ept01(gpa->hpa);

NEST.2.2 EPT on EPT

我们主要关注两个点:

ept02(ggpa->hpa)的建立,参考代码:

prepare_vmcs12()

-> nested_ept_init_mmu_context()

-> vcpu->arch.walk_mmu = &vcpu->arch.nested_mmu;

-> nested_vmx_load_cr3()

-> kvm_init_mmu()

-> init_kvm_nested_mmu() // if vcpu->arch.walk_mmu == &vcpu->arch.nested_mmu

最后的结果是:

mmu -> guest_mmu

nested_ept_get_eptp()

ept_page_fault() // in arch/x86/kvm/mmu/paging_tmpl.h

nested_ept_get_eptp()

walk_mmu -> nested_mmu

在kvm_mmu_reload()也将使用这个ept

// The L2 guest fault due to lack of mapping ggap to hpa

ept_page_fault()

-> ept_walk_addr()

-> ept_walk_addr_generic() // with vcpu->arch.mmu

---

// Get the root of ept12(ggpa, gpa), nested_ept_get_eptp()

pte = mmu->get_guest_pgd(vcpu);

...

// walk the ept12(ggpa, gpa) by ggpa (fault address) and get gpa

index = PT_INDEX(addr, walker->level);

table_gfn = gpte_to_gfn(pte);

offset = index * sizeof(pt_element_t);

pte_gpa = gfn_to_gpa(table_gfn) + offset;

...

// does nothing but just return gpa

real_gpa = mmu->translate_gpa(vcpu, gfn_to_gpa(table_gfn),

nested_access,

&walker->fault);

...

// get hpa from memslot, note ! the memslot is L1's

host_addr = kvm_vcpu_gfn_to_hva_prot(vcpu, gpa_to_gfn(real_gpa),

&walker->pte_writable[walker->level - 1]);

...

---

Para-Virtualization

CPU通过trap关键指令然后模拟,来实现硬件虚拟化;在x86平台上,trap会引起VM-exit;大量的VM-exit会导致虚拟机性能下降,半虚拟化的作用就是减少Vm-exit,但是PV必须要GuestOS配合。

PV.0 概述

参考链接,目前x86支持的PV功能包括,

Paravirtualized KVM features — QEMU documentation![]() https://www.qemu.org/docs/master/system/i386/kvm-pv.html

https://www.qemu.org/docs/master/system/i386/kvm-pv.html

- kvmclock Expose a KVM specific paravirtualized clocksource to the guest. Supported since Linux v2.6.26.

- kvm-nopiodelay The guest doesn’t need to perform delays on PIO operations. Supported since Linux v2.6.26.

- kvm-mmu This feature is deprecated.

- kvm-asyncpf Enable asynchronous page fault mechanism. Supported since Linux v2.6.38. Note: since Linux v5.10 the feature is deprecated and not enabled by KVM. Use kvm-asyncpf-int instead.

- kvm-steal-time Enable stolen (when guest vCPU is not running) time accounting. Supported since Linux v3.1.

- kvm-pv-eoi Enable paravirtualized end-of-interrupt signaling. Supported since Linux v3.10.

- kvm-pv-unhalt Enable paravirtualized spinlocks support. Supported since Linux v3.12.

- kvm-pv-tlb-flush Enable paravirtualized TLB flush mechanism. Supported since Linux v4.16.

- kvm-pv-ipi Enable paravirtualized IPI mechanism. Supported since Linux v4.19.

- kvm-poll-control Enable host-side polling on HLT control from the guest. Supported since Linux v5.10.

- kvm-pv-sched-yield Enable paravirtualized sched yield feature. Supported since Linux v5.10.

- kvm-asyncpf-int Enable interrupt based asynchronous page fault mechanism. Supported since Linux v5.10.

- kvm-msi-ext-dest-id Support ‘Extended Destination ID’ for external interrupts. The feature allows to use up to 32768 CPUs without IRQ remapping (but other limits may apply making the number of supported vCPUs for a given configuration lower). Supported since Linux v5.10.

- kvmclock-stable-bit Tell the guest that guest visible TSC value can be fully trusted for kvmclock computations and no warps are expected. Supported since Linux v2.6.35.

qemu默认开启以下几个:

kvmclock kvm-nopiodelay kvm-asyncpf kvm-steal-time kvm-pv-eoi kvmclock-stable-bit

qemu中的处理的代码为:

x86_cpu_common_class_init()

---

for (w = 0; w < FEATURE_WORDS; w++) {

int bitnr;

for (bitnr = 0; bitnr < 64; bitnr++) {

x86_cpu_register_feature_bit_props(xcc, w, bitnr);

}

}

---

x86_cpu_register_feature_bit_props()

---

FeatureWordInfo *fi = &feature_word_info[w];

const char *name = fi->feat_names[bitnr];

...

x86_cpu_register_bit_prop(xcc, name, w, bitnr);

---

-> object_class_property_add(oc, prop_name, "bool",

x86_cpu_get_bit_prop,

x86_cpu_set_bit_prop,

NULL, fp);

FeatureWordInfo feature_word_info[FEATURE_WORDS] = {

...

[FEAT_KVM] = {

.type = CPUID_FEATURE_WORD,

.feat_names = {

"kvmclock", "kvm-nopiodelay", "kvm-mmu", "kvmclock",

"kvm-asyncpf", "kvm-steal-time", "kvm-pv-eoi", "kvm-pv-unhalt",

NULL, "kvm-pv-tlb-flush", NULL, "kvm-pv-ipi",

"kvm-poll-control", "kvm-pv-sched-yield", "kvm-asyncpf-int", "kvm-msi-ext-dest-id",

NULL, NULL, NULL, NULL,

NULL, NULL, NULL, NULL,

"kvmclock-stable-bit", NULL, NULL, NULL,

NULL, NULL, NULL, NULL,

},

.cpuid = { .eax = KVM_CPUID_FEATURES, .reg = R_EAX, },

.tcg_features = TCG_KVM_FEATURES,

},

...

};

x86_cpu_set_bit_prop()

---

if (value) {

cpu->env.features[fp->w] |= fp->mask;

} else {

cpu->env.features[fp->w] &= ~fp->mask;

}

---

CPUX86State.features will be used in cpu_x86_cpuid()

static PropValue kvm_default_props[] = {

{ "kvmclock", "on" },

{ "kvm-nopiodelay", "on" },

{ "kvm-asyncpf", "on" },

{ "kvm-steal-time", "on" },

{ "kvm-pv-eoi", "on" },

{ "kvmclock-stable-bit", "on" },

{ "x2apic", "on" },

{ "kvm-msi-ext-dest-id", "off" },

{ "acpi", "off" },

{ "monitor", "off" },

{ "svm", "off" },

{ NULL, NULL },

};

kvm_cpu_instance_init()

-> x86_cpu_apply_props(cpu, kvm_default_props);

---

for (pv = props; pv->prop; pv++) {

if (!pv->value) {

continue;

}

object_property_parse(OBJECT(cpu), pv->prop, pv->value,

&error_abort);

}

---

The default feature above will be set into CPUX86State.features and

passed into kvm with KVM_SET_CPUID2另外,cpuid中还有Hypervisor的相关信息,参考如下:

X86CPU.expose_kvm default value is true

static Property x86_cpu_properties[] = {

...

DEFINE_PROP_BOOL("kvm", X86CPU, expose_kvm, true),

...

}

kvm_arch_init_vcpu()

---

if (cpu->expose_kvm) {

memcpy(signature, "KVMKVMKVM\0\0\0", 12);

c = &cpuid_data.entries[cpuid_i++];

c->function = KVM_CPUID_SIGNATURE | kvm_base;

c->eax = KVM_CPUID_FEATURES | kvm_base;

c->ebx = signature[0];

c->ecx = signature[1];

c->edx = signature[2];

c = &cpuid_data.entries[cpuid_i++];

c->function = KVM_CPUID_FEATURES | kvm_base;

c->eax = env->features[FEAT_KVM];

c->edx = env->features[FEAT_KVM_HINTS];

}

---

#define KVM_CPUID_SIGNATURE 0x40000000

通过cpuid命令,可以看到如下信息:

hypervisor_id = "KVMKVMKVM "

hypervisor features (0x40000001/eax):

kvmclock available at MSR 0x11 = true

delays unnecessary for PIO ops = true

mmu_op = false

kvmclock available a MSR 0x4b564d00 = true

async pf enable available by MSR = true

steal clock supported = true

guest EOI optimization enabled = true

stable: no guest per-cpu warps expected = true

通过hypervisor_id可以判断当前的guest的hypervisor是什么类型

如果是物理机,则这里显示的是:

hypervisor guest status = false

Guest OS会根据cpuid里hypervisor的信息,进行一些PV相关的初始化,尤其是安插在关键位置的回调函数:

//根据cpuid确定当前的hypervisor类型,kvm/vmware/qemu/xen ?

setup_arch()

-> init_hypervisor_platform()

---

h = detect_hypervisor_vendor();

if (!h)

return;

copy_array(&h->init, &x86_init.hyper, sizeof(h->init));

copy_array(&h->runtime, &x86_platform.hyper, sizeof(h->runtime));

x86_hyper_type = h->type;

x86_init.hyper.init_platform();

---

detect_hypervisor_vendor()

---

for (p = hypervisors; p < hypervisors + ARRAY_SIZE(hypervisors); p++) {

if (unlikely(nopv) && !(*p)->ignore_nopv)

continue;

pri = (*p)->detect();

if (pri > max_pri) {

max_pri = pri;

h = *p;

}

}

---

以下是kvm hypervisor初始化的部分:

const __initconst struct hypervisor_x86 x86_hyper_kvm = {

.name = "KVM",

.detect = kvm_detect,

.type = X86_HYPER_KVM,

.init.guest_late_init = kvm_guest_init,

.init.x2apic_available = kvm_para_available,

.init.msi_ext_dest_id = kvm_msi_ext_dest_id,

.init.init_platform = kvm_init_platform,

};

kvm_detect()

-> kvm_cpuid_base()

-> __kvm_cpuid_base()

---

if (boot_cpu_has(X86_FEATURE_HYPERVISOR))

return hypervisor_cpuid_base("KVMKVMKVM\0\0\0", 0);

---

setup_arch()

-> x86_init.hyper.guest_late_init()

kvm_guest_init()

---

if (kvm_para_has_feature(KVM_FEATURE_STEAL_TIME)) {

has_steal_clock = 1;

static_call_update(pv_steal_clock, kvm_steal_clock);

}

if (kvm_para_has_feature(KVM_FEATURE_PV_EOI))

apic_set_eoi_write(kvm_guest_apic_eoi_write);

if (kvm_para_has_feature(KVM_FEATURE_ASYNC_PF_INT) && kvmapf) {

static_branch_enable(&kvm_async_pf_enabled);

alloc_intr_gate(HYPERVISOR_CALLBACK_VECTOR, asm_sysvec_kvm_asyncpf_interrupt);

}

if (pv_tlb_flush_supported()) {

pv_ops.mmu.flush_tlb_multi = kvm_flush_tlb_multi;

pv_ops.mmu.tlb_remove_table = tlb_remove_table;

}

smp_ops.smp_prepare_boot_cpu = kvm_smp_prepare_boot_cpu;

if (pv_sched_yield_supported()) {

smp_ops.send_call_func_ipi = kvm_smp_send_call_func_ipi;

}

...

---

kvm_smp_prepare_boot_cpu()

-> kvm_guest_cpu_init() //这里是对相关PV特性的MSR的初始化

-> MSR_KVM_ASYNC_PF_EN

MSR_KVM_PV_EOI_EN

MSR_KVM_STEAL_TIME

PV.1 kvm-steal-time

PV.2 kvm-pv-tlb-flush

kvm-pv-tlb-flush基于kvm-steal-time实现,其目的是减少因tlb flush引起的ipi中断;我们先看下tlb flush如何引起ipi,

flush_tlb_mm_range()

-> flush_tlb_multi()

-> __flush_tlb_multi()

-> native_flush_tlb_multi()

-> on_each_cpu_cond_mask(tlb_is_not_lazy, flush_tlb_func,

(void *)info, 1, cpumask);

-> smp_call_function_many_cond()

-> arch_send_call_function_ipi_mask()

-> smp_ops.send_call_func_ipi()

-> apic->send_IPI_mask(mask, CALL_FUNCTION_VECTOR);

pv tlb flush是如何工作的呢?原理上,它使用了一个触发时机,即vcpu被抢占的时候;这样,不需要触发额外的vm-exit,也能保证vcpu返回时可以看到触发标记;参考代码:

kvm_sched_out()

---

if (current->on_rq) {

WRITE_ONCE(vcpu->preempted, true);

WRITE_ONCE(vcpu->ready, true);

}

kvm_arch_vcpu_put(vcpu);

-> kvm_steal_time_set_preempted()

-> set KVM_VCPU_PREEMPTED on steal time

---

当vcpu被抢占之后,设置KVM_VCPU_PREEMPTED标记

kvm_guest_init()

---

if (pv_tlb_flush_supported()) { // KVM_FEATURE_PV_TLB_FLUSH && KVM_FEATURE_STEAL_TIME

pv_ops.mmu.flush_tlb_multi = kvm_flush_tlb_multi;

pv_ops.mmu.tlb_remove_table = tlb_remove_table;

}

---

kvm_flush_tlb_multi()

---

cpumask_copy(flushmask, cpumask);

for_each_cpu(cpu, flushmask) {

src = &per_cpu(steal_time, cpu);

state = READ_ONCE(src->preempted);

if ((state & KVM_VCPU_PREEMPTED)) {

//这里使用cmxchg,可以确定vcpu是否已经看见了这个标记

if (try_cmpxchg(&src->preempted, &state,

state | KVM_VCPU_FLUSH_TLB))

__cpumask_clear_cpu(cpu, flushmask);

}

}

native_flush_tlb_multi(flushmask, info);

---

vcpu_enter_guest()

-> record_steal_time() // KVM_REQ_STEAL_UPDATE

---

/*

* Doing a TLB flush here, on the guest's behalf, can avoid

* expensive IPIs.

*/

if (guest_pv_has(vcpu, KVM_FEATURE_PV_TLB_FLUSH)) {

u8 st_preempted = 0;

...

asm volatile("1: xchgb %0, %2\n"

"xor %1, %1\n"

"2:\n"

_ASM_EXTABLE_UA(1b, 2b)

: "+q" (st_preempted),

"+&r" (err),

"+m" (st->preempted));

...

vcpu->arch.st.preempted = 0;

...

if (st_preempted & KVM_VCPU_FLUSH_TLB)

kvm_vcpu_flush_tlb_guest(vcpu);

---

vmx_flush_tlb_guest()

-> vmx_get_current_vpid()

-> to_vmx(vcpu)->vpid

-> vpid_sync_context()

-> vpid_sync_vcpu_single()

-> __invvpid()

-> vmx_asm2(invvpid, "r"(ext), "m"(operand), ext, vpid, gva);

PV.4 kvm-pv-ipi

ipi主要有两个作用:

- reschedule,参考函数native_smp_send_reschedule()

- call_function,参考函数native_send_call_func_ipi()

看下pv ipi的实现,其基于vmcall,调用的vcpu会vm-exit,然后通过kvm直接给对应的vcpu发送中断;这样,省去了写其他cpu的lapic造成的多次vm-exit,而且代码处理路径也更加简单,不用走内核的handle mmio路径;

pv_ipi_supported()

---

return (kvm_para_has_feature(KVM_FEATURE_PV_SEND_IPI) &&

(num_possible_cpus() != 1));

---

kvm_apic_init()

-> kvm_setup_pv_ipi() //pv_ipi_supported()

---

apic->send_IPI_mask = kvm_send_ipi_mask;

apic->send_IPI_mask_allbutself = kvm_send_ipi_mask_allbutself;

---

__send_ipi_mask()

---

for_each_cpu(cpu, mask) {

apic_id = per_cpu(x86_cpu_to_apicid, cpu);

if (!ipi_bitmap) {

min = max = apic_id;

} else if (apic_id < min && max - apic_id < KVM_IPI_CLUSTER_SIZE) {

ipi_bitmap <<= min - apic_id;

min = apic_id;

} else if (apic_id > min && apic_id < min + KVM_IPI_CLUSTER_SIZE) {

max = apic_id < max ? max : apic_id;

} else {

ret = kvm_hypercall4(KVM_HC_SEND_IPI, (unsigned long)ipi_bitmap,

(unsigned long)(ipi_bitmap >> BITS_PER_LONG), min, icr);

min = max = apic_id;

ipi_bitmap = 0;

}

__set_bit(apic_id - min, (unsigned long *)&ipi_bitmap);

}

// min是base,ipi_bitmap中保存的是apic_id - min的偏移,之后将base和offset bitmap

// 全部传如vmcall

if (ipi_bitmap) {

ret = kvm_hypercall4(KVM_HC_SEND_IPI, (unsigned long)ipi_bitmap,

(unsigned long)(ipi_bitmap >> BITS_PER_LONG), min, icr);

}

---

kvm_emulate_hypercall()

-> kvm_pv_send_ipi() // KVM_HC_SEND_IPI

-> __pv_send_ipi()

-> kvm_apic_set_irq()

-> __apic_accept_irq()PV.5 kvm-pv-eoi

PV.5.1 eoi virtualization

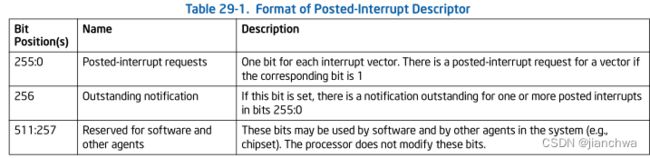

kvm-pv-eoi是为了避免eoi时引起的vm-exit;但是,在apicv中,已经引入了对eoi的优化,参考SDM 29.1.4 EOI Virtualization:

The processor performs EOI virtualization in response to the following operations:

- virtualization of a write to offset 0B0H on the APIC-access page

- virtualization of the WRMSR instruction with ECX = 80BH.

EOI virtualization occurs only if the “virtual-interrupt delivery” VM-execution control is 1.

EOI virtualization uses and updates the guest interrupt status The following pseudocode details the behavior of EOI virtualization:

Vector = SVI;

VISR[Vector] = 0;

IF any bits set in VISR

THENSVI = highest index of bit set in VISR

ELSESVI = 0;

FI;

perform PPR virtualiation (see Section 29.1.3);

IF EOI_exit_bitmap[Vector] = 1THEN

cause EOI-induced VM exit with Vector as exit qualification;

ELSEevaluate pending virtual interrupts; (see Section 29.2.1)

FI;

其中eoi exit bitmap决定对应的vector是否会引起vm-exit;对应的vm-exit并不是msr或者mmio exit,而是EOI-induced,参考对应的处理代码:

handle_apic_eoi_induced()

-> kvm_apic_set_eoi_accelerated()

-> kvm_ioapic_send_eoi()

-> kvm_ioapic_send_eoi()

PV.5.2 ioapic

之前在CPU虚拟化是,我们把ioapic给略过了,这里补充下;首先一句话概括下ioapic:

On x86, the IOAPIC is an interrupt controller that takes incoming interrupts from interrupt pins and converts them to Message Signaled Interrupts (MSIs)

参考链接:

IOAPIC - OSDev Wikihttps://wiki.osdev.org/IOAPIC这里总结下ioapic的几个要点:

- 32-bit registers IOREGSEL & IOREGWIN,put the register index in IOREGSEL, and then you can read/write in IOREGWIN.

- ioapicid, index 0, bits 24 - 27: the APIC ID for this device;

- ioapicver, index 1, bits 0 - 8 ioapic version, bits 16 - 23 Max Redirection Entry which is "how many IRQs can this I/O APIC handle"

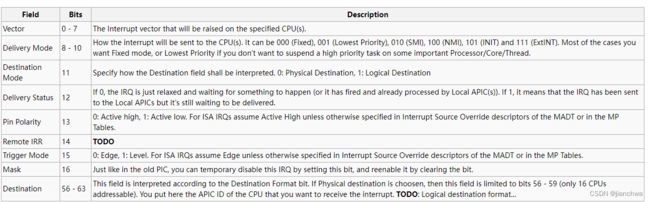

- ioredtbl, index (0x10 + n * 2) for entry bits 0 - 31, index (0x10 + (n * 2) + 1) for entry bits 32 - 63; 整个64bits的entry的格式是:

ioapic的的输入是各个中断源,它负责将中断对应的vector转发到对应的cpu的lapic,参考下图:82093AA I/O ADVANCED PROGRAMMABLE INTERRUPT CONTROLLER (IOAPIC)![]() http://web.archive.org/web/20161130153145/http://download.intel.com/design/chipsets/datashts/29056601.pdf

http://web.archive.org/web/20161130153145/http://download.intel.com/design/chipsets/datashts/29056601.pdf

看下ioapic的模拟的核心部分:

ioapic_set_irq()

-> ioapic_service()

---

union kvm_ioapic_redirect_entry *entry = &ioapic->redirtbl[irq];

...

irqe.dest_id = entry->fields.dest_id;

irqe.vector = entry->fields.vector;

irqe.dest_mode = kvm_lapic_irq_dest_mode(!!entry->fields.dest_mode);

irqe.trig_mode = entry->fields.trig_mode;

irqe.delivery_mode = entry->fields.delivery_mode << 8;

irqe.level = 1;

irqe.shorthand = APIC_DEST_NOSHORT;

irqe.msi_redir_hint = false;

...

ret = kvm_irq_delivery_to_apic(ioapic->kvm, NULL, &irqe, NULL);

---

kvm_lapic_reg_write()

-> apic_set_eoi()

---

apic_clear_isr(vector, apic);

apic_update_ppr(apic);

kvm_ioapic_send_eoi(apic, vector);

kvm_make_request(KVM_REQ_EVENT, apic->vcpu);

---

kvm_ioapic_send_eoi()

-> kvm_ioapic_update_eoi()因为apicv的eoi virtualizaton只虚拟化apic的部分,并不会模拟ioapic,如上面的apic_set_eoi(),在处理完apic的虚拟化之后,还需要ack ioapic,即调用kvm_ioapic_send_eoi();所以,apicv的eoi virtualization并不能处理所有的中断(主要是MSI),剩下的仍然要vm-exit,然后通过apic的虚拟化处理。此时,之前提到的eoi_exit_bitmap便派上了用场,对于来自ioapic的irq,要设置进这个bitmap保证可以触发vm-exit;

vcpu_scan_ioapic()

-> kvm_ioapic_scan_entry()

vcpu_load_eoi_exitmap()

---

static_call(kvm_x86_load_eoi_exitmap)(

vcpu, (u64 *)vcpu->arch.ioapic_handled_vectors);

---

PV.5.3 pv eoi

综上,我们看到apicv解决了MSI/MSIX等只需要lapic参与的eoi的vm-exit,来自ioapic的eoi因为需要ack ioapic,所以,被设置进了eoi_exit_bitmap,仍然会vm-exit;那么,同样为了减少vm-exit的kvm-pv-eoi是怎么做到的?

注:开始时,因为pv-eoi解决的ioapic部分的vm-exit,进而和apicv形成补充,然而并不是这样的,kvm-pv-eoi同样没有优化ioapic的部分,而且和apicv互斥,它解决的是没有apicv的场景。

kvm-pv-eoi依赖的是interrupt inject导致的vm-exit和vm-entry,基本原理如下:;

- 在vm-entry之间,注入中断,此时会检查,是否有多个pending的interrupt request,如果有,那么就需要及时进行eoi以处理pending的ir,如果没有,那么设置特殊标记,提醒guest os不需要eoi;

- 因中断再次vm-exit,清除上次的没有eoi的isr;重复上一个步骤;

所以,这个特性,我们可以称它为delay eoi;我们参考代码:

Guest OS端:

--------------------------------------------------------------------

ack_APIC_irq()

-> apic_eoi()

-> apic->eoi_write(APIC_EOI, APIC_EOI_ACK);

kvm_guest_apic_eoi_write()

---

if (__test_and_clear_bit(KVM_PV_EOI_BIT, this_cpu_ptr(&kvm_apic_eoi)))

return;

apic->native_eoi_write(APIC_EOI, APIC_EOI_ACK);

---

(1) KVM设置了KVM_PV_EOI_BIT标记,说明可以delay eoi

(2) Guest OS清理KVM_PV_EOI_BIT标记,告知KVM中断已经在Guest端ack过,KVM需要进行eoi

Hypervisor端:

-----------------------------------------------------------------------

Step 1: 没有posted interrupt时,设置irr并给对应的vcpu发送ipi,强制其vm-exit

__apic_accept_irq()

---

// ignore the post interrupt path

if (static_call(kvm_x86_deliver_posted_interrupt)(vcpu, vector)) {

kvm_lapic_set_irr(vector, apic);

kvm_make_request(KVM_REQ_EVENT, vcpu);

kvm_vcpu_kick(vcpu);

}

---

kvm_lapic_set_irr()

---

kvm_lapic_set_vector(vec, apic->regs + APIC_IRR);

apic->irr_pending = true;

---

Step 2: 注入中断,设置isr,清理irr,检查是否可以delay eoi

inject_pending_event()

-> kvm_cpu_get_interrupt()

-> kvm_get_apic_interrupt()

-> kvm_apic_has_interrupt()

-> apic_has_interrupt_for_ppr()

-> apic_find_highest_irr()

-> apic_search_irr() // APIC_IRR

-> apic_clear_irr(vector, apic) // vector is returned from kvm_apic_has_interrupt()

---

apic->irr_pending = false;

kvm_lapic_clear_vector(vec, apic->regs + APIC_IRR);

if (apic_search_irr(apic) != -1)

apic->irr_pending = true;

---

-> apic_set_isr(vector, apic)

-> apic->highest_isr_cache = vector

kvm_lapic_sync_to_vapic()

-> apic_sync_pv_eoi_to_guest()

---

if (!pv_eoi_enabled(vcpu) ||

/* IRR set or many bits in ISR: could be nested. */

apic->irr_pending ||

/* Cache not set: could be safe but we don't bother. */

apic->highest_isr_cache == -1 ||

/* Need EOI to update ioapic. */

kvm_ioapic_handles_vector(apic, apic->highest_isr_cache)) {

return;

}

pv_eoi_set_pending(apic->vcpu);

---

//设置KVM_PV_EOI_BIT

if (pv_eoi_put_user(vcpu, KVM_PV_EOI_ENABLED) < 0)

return;

__set_bit(KVM_APIC_PV_EOI_PENDING, &vcpu->arch.apic_attention);

---

---

irr_pending : there is still interrupt requeset pending, we need eoi the trigger it

irq of ioapic : the irq is from ioapic, we need eoi to ack ioapic

Step 3: 当guest因ipi再次vm-exit时,做eoi

vcpu_enter_guest()

---

if (vcpu->arch.apic_attention)

kvm_lapic_sync_from_vapic(vcpu);

r = static_call(kvm_x86_handle_exit)(vcpu, exit_fastpath);

---

kvm_lapic_sync_from_vapic()

---

if (test_bit(KVM_APIC_PV_EOI_PENDING, &vcpu->arch.apic_attention))

apic_sync_pv_eoi_from_guest(vcpu, vcpu->arch.apic);

---

apic_sync_pv_eoi_from_guest()

---

/*

* PV EOI state is derived from KVM_APIC_PV_EOI_PENDING in host

* and KVM_PV_EOI_ENABLED in guest memory as follows:

*

* KVM_APIC_PV_EOI_PENDING is unset:

* -> host disabled PV EOI.

* KVM_APIC_PV_EOI_PENDING is set, KVM_PV_EOI_ENABLED is set:

* -> host enabled PV EOI, guest did not execute EOI yet.

* KVM_APIC_PV_EOI_PENDING is set, KVM_PV_EOI_ENABLED is unset:

* -> host enabled PV EOI, guest executed EOI.

*/

BUG_ON(!pv_eoi_enabled(vcpu));

pending = pv_eoi_get_pending(vcpu);

pv_eoi_clr_pending(vcpu);

if (pending)

return;

vector = apic_set_eoi(apic);

---

注意:pending为true,表示guest os还没有清理KVM_PV_EOI_BIT,这时不需要eoi

kvm-pv-eoi的通过MSR共享内存的操作,这里我们就不再赘述了。

VCPU Not Run

本节主要看下vcpu thread让出CPU的情况。

NR.1 Basic Halt

vcpu_block()是x86 kvm的一个函数,当Guest OS执行halt时,vcpu会进入到这个函数,参考代码:

GuestOS

do_idle()

-> arch_cpu_idle_enter()

-> cpuidle_idle_call()

-> default_idle_call()

-> arch_cpu_idle()

-> x86_idle() // It is a function pointer

-> default_idle() // look at select_idle_routine()

-> raw_safe_halt()

-> arch_safe_halt()

-> native_safe_halt()

---

asm volatile("sti; hlt": : :"memory");

---

Hypervisor

kvm_emulate_halt()

-> kvm_vcpu_halt()

-> __kvm_vcpu_halt(vcpu, KVM_MP_STATE_HALTED, KVM_EXIT_HLT)

---

++vcpu->stat.halt_exits;

if (lapic_in_kernel(vcpu)) {

vcpu->arch.mp_state = state;

return 1;

}

---

vcpu_run()

-> vcpu_block()

---

prepare_to_rcuwait(&vcpu->wait);

for (;;) {

set_current_state(TASK_INTERRUPTIBLE);

if (kvm_vcpu_check_block(vcpu) < 0)

break;

waited = true;

schedule();

}

---

kvm_vcpu_kick()

-> kvm_vcpu_wake_up()

---

waitp = kvm_arch_vcpu_get_wait(vcpu);

if (rcuwait_wake_up(waitp)) {

WRITE_ONCE(vcpu->ready, true);

++vcpu->stat.generic.halt_wakeup;

return true;

}

---

当guest OS cpu进入idle,并执行halt,vcpu thread会让出cpu,进入TASK_INTERRUPTIBLE状态;之后,可以通过kvm_vcpu_kick()唤醒它。

NR.2 INIT-SIPI

参考链接:Symmetric Multiprocessing - OSDev Wikihttps://wiki.osdev.org/Symmetric_Multiprocessing

The MP initialization protocol defines two classes of processors:

- bootstrap processor (BSP)

- application processors (APs)

Following a power-up or RESET of an MP system, system hardware dynamically selects one of the processors on the system bus as the BSP. The remaining processors are designated as AP.

The BSP executes the BIOS’s boot-strap code to configure the APIC environment, sets up system-wide data structures, and starts and initializes the APs. When the BSP and APs are initialized, the BSP then begins executing the operating-system initialization code.

Following a power-up or reset, the APs complete a minimal self-configuration, then wait for a startup signal (a SIPI message) from the BSP processor. Upon receiving a SIPI message, an AP executes the BIOS AP configuration code, which ends with the AP being placed in halt state.

以上主要是解释几个概念,包括BSP/AP/SIPI以及它们在CPU启动过程中的关系;同时,参考下面的链接:

operating system - What is the effect of STARTUP IPI on Application Processor? - Stack Overflowhttps://stackoverflow.com/questions/40083533/what-is-the-effect-of-startup-ipi-on-application-processor其中也谈到了INIT IPI/Startup IPI(SPIP)/Wait for SIPI state三者的关系;即

- BSP给APs发送INIT IPI,使APs进入Wait for SIPI state

- BSP执行完全局初始化工作,给APs发送SIPI

在vcpu_block中,就有相关的代码:

kvm_arch_vcpu_create()

---

if (!irqchip_in_kernel(vcpu->kvm) || kvm_vcpu_is_reset_bsp(vcpu))

vcpu->arch.mp_state = KVM_MP_STATE_RUNNABLE;

else

vcpu->arch.mp_state = KVM_MP_STATE_UNINITIALIZED;

---

vcpu_block()

-> kvm_apic_accept_events()

---

if (test_bit(KVM_APIC_INIT, &pe)) {

clear_bit(KVM_APIC_INIT, &apic->pending_events);

kvm_vcpu_reset(vcpu, true);

if (kvm_vcpu_is_bsp(apic->vcpu))

vcpu->arch.mp_state = KVM_MP_STATE_RUNNABLE;

else

vcpu->arch.mp_state = KVM_MP_STATE_INIT_RECEIVED;

}

if (test_bit(KVM_APIC_SIPI, &pe)) {

clear_bit(KVM_APIC_SIPI, &apic->pending_events);