XGBoost: Installation, Usage, and Understanding the Algorithm

I. Important XGBoost references

1. Official documentation

The official documentation covers installation for each language binding, the official examples, and more.

XGBoost Documentation — xgboost 1.6.0-dev documentation: https://xgboost.readthedocs.io/en/latest/index.html

2. GitHub

The GitHub source lets you read the implementation and download sample data. GitHub - dmlc/xgboost: Scalable, Portable and Distributed Gradient Boosting (GBDT, GBRT or GBM) Library, for Python, R, Java, Scala, C++ and more. Runs on single machine, Hadoop, Spark, Dask, Flink and DataFlow

https://github.com/dmlc/xgboost

3. Maven repository

When you configure xgboost4j in IDEA, pick the dependency that matches your Scala version:

https://mvnrepository.com/artifact/ml.dmlc/xgboost4j

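POM configuration:

```xml
<properties>
    <spark.version.scala>2.12</spark.version.scala>
    <!-- Scala full version (property name assumed) -->
    <scala.version>2.12.6</scala.version>
    <!-- Spark version (property name assumed) -->
    <spark.version>3.0.2</spark.version>
    <xgboost.version>1.2.0</xgboost.version>
</properties>

<dependency>
    <groupId>ml.dmlc</groupId>
    <artifactId>xgboost4j-spark_${spark.version.scala}</artifactId>
    <version>${xgboost.version}</version>
</dependency>
```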

II. Using XGBoost

Refer to the Spark distributed-training example in the official GitHub repository:

https://github.com/dmlc/xgboost/blob/master/jvm-packages/xgboost4j-example/src/main/scala/ml/dmlc/xgboost4j/scala/example/spark/SparkMLlibPipeline.scala

Alternatively, use the example in the official tutorial. It is the same example: the tutorial explains it, and the link above is the code.

XGBoost4J-Spark Tutorial (version 0.9+) — xgboost 1.6.0-dev documentation

Code examples from other blogs:

[机器学习] XGBoost on Spark 分布式使用完全手册 ("[Machine Learning] XGBoost on Spark: a complete guide to distributed usage"), CSDN blog by 小墨鱼

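Practical lessons with XGBoost:

Lesson 1: For binary classification, do not set `"num_class" -> 2`, because training will fail. Set `num_class` only for multiclass classification.

Lesson 2: To keep training from blocking, make the number of partitions of the training data equal to the number of requested workers (`numWorkers`), i.e. `repartition` the training data accordingly. Set `numWorkers` explicitly instead of relying on its default.

Lesson 3: `numWorkers` should match the number of executors, and `executor-cores` should be kept small.

Lesson 4: During training, keep the memory used by each partition within about 1/3 of `executor-memory`. Besides the memory that holds the training samples, XGBoost allocates extra memory for every thread to gain speed, and the JVM does not manage that memory.

Lesson 5: Check the training data. In practice, XGBoost4J training failed when the data contained missing values.

Lesson 6: XGBoost4J 1.3.0 and later fixed the conflict between Spark's `spark.speculation` mechanism and the XGBoost4J worker logic. In practice, however, 1.2.0 ran more stably than 1.3.1 and 1.5.0.

Lesson 7: Set `kill_spark_context_on_worker_failure` to false. It defaults to true, and with that setting a failed worker kills the Spark application.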
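Lessons 1, 2 and 4 are easy to get wrong when the parameter map is assembled by hand. The plain-Scala sketch below needs no Spark. It adds `num_class` only for `multi:*` objectives. It also estimates the smallest `numWorkers` for which each of the `numWorkers` partitions stays within 1/3 of executor memory. The object and method names are hypothetical. Turning lessons 2 and 4 into a worker-count formula is an assumption of this sketch, not an XGBoost API.

```scala
// Plain-Scala helpers illustrating lessons 1, 2 and 4; no Spark or XGBoost dependency.
object XgbTrainingSketch {

  /** Lesson 1: add "num_class" only for multi-class objectives ("multi:softmax",
    * "multi:softprob"); binary objectives such as "binary:logistic" must not get it. */
  def paramMap(objective: String, numClass: Int, numWorkers: Int): Map[String, Any] = {
    val base: Map[String, Any] = Map(
      "objective" -> objective,
      "num_round" -> 100, // illustrative value, same as in the examples of this post
      "num_workers" -> numWorkers
    )
    if (objective.startsWith("multi:")) base + ("num_class" -> numClass) else base
  }

  /** Lessons 2 and 4 combined (rule of thumb, assumption): the data is repartitioned
    * into exactly numWorkers partitions, and each partition should use at most 1/3
    * of the executor memory. Returns the smallest numWorkers satisfying this, given
    * an estimate of the in-memory size of the training data. */
  def minWorkers(trainingDataBytes: Long, executorMemoryBytes: Long): Int = {
    val perPartitionBudget = executorMemoryBytes / 3
    require(perPartitionBudget > 0, "executor memory too small")
    math.max(1, math.ceil(trainingDataBytes.toDouble / perPartitionBudget).toInt)
  }
}
```

In a Spark job, the map could be passed to `new XGBoostClassifier(...)`, and `trainData.repartition(numWorkers)` applied before `fit`. For example, about 30 GB of in-memory training data with 9 GB executors gives `minWorkers(...) == 10`.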

Note: keep XGBoost's local single-machine mode and its distributed Spark mode clearly apart!

上手机器学习系列-第5篇(中)-XGBoost+Scala/Spark ("Getting started with machine learning, part 5 (middle): XGBoost + Scala/Spark"), CSDN blog by a_step_further

Code example 1: multiclass classification + Pipeline

```scala
import org.apache.spark.ml.{Pipeline, PipelineModel}
import org.apache.spark.ml.evaluation.MulticlassClassificationEvaluator
import org.apache.spark.ml.feature._
import org.apache.spark.ml.tuning._
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.types._
import ml.dmlc.xgboost4j.scala.spark.{XGBoostClassifier, XGBoostClassificationModel}

object xgboostExample {
  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().appName("xgboost").getOrCreate()

    // 0- GPU or CPU: pass "gpu" as the first argument to train on GPU (defaults to CPU)
    val cpuGpu = if (args.nonEmpty) args(0) else "cpu"
    val (treeMethod, numWorkers) = if (cpuGpu == "gpu") ("gpu_hist", 4) else ("auto", 8)

    // 1- Load the dataset
    val masterPath = "hdfs://gaoToby/ml_data/xgboost_data/"
    val irisPath = masterPath + "iris.data"
    val schema = new StructType(Array(
      StructField("sepal length", DoubleType, true),
      StructField("sepal width", DoubleType, true),
      StructField("petal length", DoubleType, true),
      StructField("petal width", DoubleType, true),
      StructField("class", StringType, true)))
    val rawInput = spark.read.schema(schema).csv(irisPath)

    // Split training and test dataset
    val Array(training, test) = rawInput.randomSplit(Array(0.8, 0.2), 123)
    training.cache()
    training.show(5, false)

    // 2- Build the ML pipeline. It includes 4 stages:
    // 1, Assemble all features into a single vector column.
    // 2, From string label to indexed double label.
    // 3, Use XGBoostClassifier to train classification model.
    // 4, Convert indexed double label back to original string label.
    val assembler = new VectorAssembler()
      .setInputCols(Array("sepal length", "sepal width", "petal length", "petal width"))
      .setOutputCol("features")
    val labelIndexer = new StringIndexer()
      .setInputCol("class")
      .setOutputCol("classIndex")
      .fit(training)
    val booster = new XGBoostClassifier(
      Map("eta" -> 0.1f,
        "max_depth" -> 2,
        "objective" -> "multi:softprob",
        "num_class" -> 3,
        "num_round" -> 100,
        "num_workers" -> numWorkers,
        "tree_method" -> treeMethod
      )
    )
    booster.setFeaturesCol("features")
    booster.setLabelCol("classIndex")
    val labelConverter = new IndexToString()
      .setInputCol("prediction")
      .setOutputCol("realLabel")
      .setLabels(labelIndexer.labels)
    val pipeline = new Pipeline()
      .setStages(Array(assembler, labelIndexer, booster, labelConverter))

    // 3- Train the model
    val model = pipeline.fit(training)

    // 4- Predict
    val prediction = model.transform(test)
    prediction.show(false)

    // 5- Evaluate the model
    val evaluator = new MulticlassClassificationEvaluator()
    evaluator.setLabelCol("classIndex")
    evaluator.setPredictionCol("prediction")
    evaluator.setMetricName("accuracy") // the default metric is "f1", not accuracy
    val accuracy = evaluator.evaluate(prediction)
    println("The model accuracy is : " + accuracy)

    // 6- Tune the model using cross validation
    val paramGrid = new ParamGridBuilder()
      .addGrid(booster.maxDepth, Array(3, 8))
      .addGrid(booster.eta, Array(0.2, 0.6))
      .build()
    val cv = new CrossValidator()
      .setEstimator(pipeline)
      .setEvaluator(evaluator)
      .setEstimatorParamMaps(paramGrid)
      .setNumFolds(3)
    val cvModel = cv.fit(training)
    // stage 2 of the best pipeline is the fitted XGBoost model
    val bestModel = cvModel.bestModel.asInstanceOf[PipelineModel].stages(2)
      .asInstanceOf[XGBoostClassificationModel]
    println("The params of best XGBoostClassification model : " +
      bestModel.extractParamMap())
    println("The training summary of best XGBoostClassificationModel : " +
      bestModel.summary)

    // 7- Save the model
    val pipelineModelPath = "hdfs://gaoToby/model/xgboost/"
    model.write.overwrite().save(pipelineModelPath)

    // 8- Load the model
    val model2 = PipelineModel.load(pipelineModelPath)
    model2.transform(test).show(false)

    // 9- Export the XGBoostClassificationModel as a local (single-machine) XGBoost model,
    // which can then be loaded back in a local Python environment.
    val nativeModelPath = "/home/gaoToby/xgboost/nativeModel"
    bestModel.nativeBooster.saveModel(nativeModelPath)

    spark.stop()
  }
}
```
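To make stages 2 to 4 of example 1 concrete, the plain-Scala sketch below mimics them on ordinary collections. It covers three steps:

- `StringIndexer`'s default `frequencyDesc` ordering, where the most frequent label gets index 0 and ties are broken alphabetically. The tie-breaking is assumed here to follow what recent Spark versions document.
- The softmax that turns per-class margins into the probabilities returned by `multi:softprob`.
- The arg-max plus `IndexToString` lookup that produces `realLabel`.

It does not call Spark or XGBoost. The object and method names are hypothetical.

```scala
// Plain-Scala sketch of the label round trip around a multi:softprob model.
object LabelRoundTripSketch {

  /** Mimics StringIndexer's default frequencyDesc ordering: the most frequent
    * label gets index 0; ties are broken alphabetically (assumed Spark behaviour). */
  def fitLabels(labels: Seq[String]): Array[String] =
    labels.groupBy(identity).toSeq
      .sortBy { case (label, occurrences) => (-occurrences.size, label) }
      .map(_._1)
      .toArray

  /** multi:softprob yields one probability per class: the softmax of the raw margins. */
  def softmax(margins: Array[Double]): Array[Double] = {
    val m = margins.max // subtract the max for numerical stability
    val exps = margins.map(x => math.exp(x - m))
    val sum = exps.sum
    exps.map(_ / sum)
  }

  /** The predicted class index is the arg-max probability; IndexToString then
    * maps that index back through the labels learned by the indexer. */
  def predictLabel(margins: Array[Double], labels: Array[String]): String = {
    val probs = softmax(margins)
    labels(probs.indexOf(probs.max))
  }
}
```

For instance, `fitLabels(Seq("b", "a", "b", "c", "a", "b"))` gives `Array("b", "a", "c")`. With those labels, margins `Array(0.1, 2.0, -1.0)` are predicted as `"a"`. This is the kind of mapping that produces the `classIndex` and `realLabel` columns.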

Code example 2: binary classification, training directly without a Pipeline

```scala
import ml.dmlc.xgboost4j.scala.spark.{XGBoostClassificationModel, XGBoostClassifier}
import org.apache.spark.ml.evaluation.BinaryClassificationEvaluator
import org.apache.spark.sql.SparkSession

/**
 * XGBoost example: binary classification, CPU training, no Pipeline
 */
object xgboost {
  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().appName("xgboost").enableHiveSupport().getOrCreate()
    val masterPath = "hdfs://gaoToby/ml_data/xgboost_data/"
    val trainPath = masterPath + "agaricus.txt.train"
    val testPath = masterPath + "agaricus.txt.test"

    // 1- Load the training and test data (LIBSVM format)
    val trainData = spark.read.format("libsvm").load(trainPath)
      .dropDuplicates()
    trainData.cache()
    trainData.show(5, false)
    val testData = spark.read.format("libsvm").load(testPath)
    testData.cache()
    testData.show(5, false)

    // 2- Create the model instance
    val xgbClassifier = new XGBoostClassifier(
      Map("eta" -> 0.1f,
        "missing" -> -999.0,
        "max_depth" -> 4,
        "objective" -> "binary:logistic", // binary classification
        // "num_class" -> 2, // do not set this for binary classification (training fails); set it only for multiclass
        "num_round" -> 100,
        "num_workers" -> 4,
        "tree_method" -> "hist", // GPU not available, so use the CPU histogram method
        "train_test_ratio" -> 0.7
      )
    ).setFeaturesCol("features")
      .setLabelCol("label")
      .setAllowNonZeroForMissing(true) // allow a non-zero missing value (-999.0 here)

    // 3- Train the model
    val modelFitted = xgbClassifier.fit(trainData)

    // 4- Predict: use transform to score a whole DataFrame
    val predicts = modelFitted.transform(testData)
    predicts.show(5, false)

    // To score a single feature vector, use predict:
    // import org.apache.spark.ml.linalg.Vector
    // val features = trainData.head().getAs[Vector]("features")
    // val result: Double = modelFitted.predict(features)
    // println(result)

    // 5- Evaluate with the binary classification evaluator
    val evaluator = new BinaryClassificationEvaluator()
      .setMetricName("areaUnderROC")
      .setRawPredictionCol("rawPrediction")
      .setLabelCol("label")
    val auc = evaluator.evaluate(predicts)
    println(s"xgboost Model evaluate testData areaUnderROC i.e AUC: $auc")

    // 6- Save the model
    val modelPath = "hdfs://gaoToby/model/xgboost/"
    modelFitted.write.overwrite().save(modelPath)

    // 7- Load the model and predict again
    val xgboostModel1 = XGBoostClassificationModel.load(modelPath)
    val testPrediction = xgboostModel1.transform(testData)
    testPrediction.cache()
    testPrediction.show(5, false)

    spark.stop()
  }
}
```

Results:

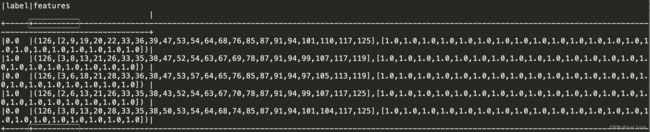

1. Training data

2. Model prediction data
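Example 2 scores the test set with `BinaryClassificationEvaluator` on `rawPrediction`. The plain-Scala sketch below illustrates the two ideas involved, using hypothetical names. First, `binary:logistic` turns a raw margin into a probability with the sigmoid. Second, AUC is the probability that a random positive is ranked above a random negative. Because the sigmoid is monotonic, AUC computed from raw margins equals AUC computed from probabilities. Spark's evaluator derives the same quantity from the ROC curve and may bin scores on large data, so this pairwise version is for illustration only.

```scala
// Plain-Scala sketch: sigmoid for binary:logistic and a pairwise AUC.
object BinaryEvalSketch {

  /** binary:logistic maps a raw margin to a probability with the logistic (sigmoid) function. */
  def sigmoid(margin: Double): Double = 1.0 / (1.0 + math.exp(-margin))

  /** AUC as the probability that a randomly chosen positive scores higher than a randomly
    * chosen negative; ties count as 1/2. Pairwise O(P * N), only for small illustrations. */
  def auc(scores: Seq[Double], labels: Seq[Double]): Double = {
    val pairs = scores.zip(labels)
    val pos = pairs.collect { case (s, l) if l == 1.0 => s }
    val neg = pairs.collect { case (s, l) if l == 0.0 => s }
    require(pos.nonEmpty && neg.nonEmpty, "need at least one positive and one negative")
    val credits = for (p <- pos; n <- neg) yield (if (p > n) 1.0 else if (p == n) 0.5 else 0.0)
    credits.sum / (pos.size.toDouble * neg.size)
  }
}
```

A perfect ranking such as `auc(Seq(0.9, 0.8, 0.3, 0.1), Seq(1.0, 1.0, 0.0, 0.0))` returns 1.0. A fully reversed ranking returns 0.0.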

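III. Understanding how XGBoost works

XGBoost parameter reference: XGBoost Parameters — xgboost 1.6.0-dev documentation

xgboost参数解释 ("XGBoost parameters explained"), CSDN blog by lc574260570

Paper: XGBoost: A Scalable Tree Boosting System

上手机器学习系列-第5篇(下)-XGBoost原理 ("Getting started with machine learning, part 5 (final): how XGBoost works"), CSDN blog by a_step_further

Introductions to the principles, Chinese version: https://xgboost.apachecn.org/#/docs/3

English version: Introduction to Boosted Trees — xgboost 1.6.0-dev documentation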
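The paper and the "Introduction to Boosted Trees" page grow each tree from the first- and second-order gradient statistics of the loss. With G and H the sums of gradients and hessians in a node, the optimal leaf weight is w* = -G / (H + lambda). A split's gain is 1/2 [G_L^2/(H_L+lambda) + G_R^2/(H_R+lambda) - (G_L+G_R)^2/(H_L+H_R+lambda)] - gamma. The plain-Scala sketch below evaluates these formulas for squared-error loss, where g = yHat - y and h = 1. The names are hypothetical and this is not XGBoost's implementation. `lambda` and `gamma` correspond to the booster parameters of the same names on the parameters page.

```scala
// Plain-Scala sketch of XGBoost's leaf-weight and split-gain formulas.
object SplitGainSketch {

  /** Sums of gradients (g) and hessians (h) over the instances in a node. */
  final case class Stats(g: Double, h: Double)

  /** Gradient and hessian of squared error 1/2 (yHat - y)^2 with respect to yHat. */
  def squaredErrorGradHess(y: Double, yHat: Double): Stats = Stats(yHat - y, 1.0)

  def sum(stats: Seq[Stats]): Stats = Stats(stats.map(_.g).sum, stats.map(_.h).sum)

  /** Optimal leaf weight w* = -G / (H + lambda). */
  def leafWeight(s: Stats, lambda: Double): Double = -s.g / (s.h + lambda)

  /** One term of the structure score: G^2 / (H + lambda). */
  private def score(s: Stats, lambda: Double): Double = s.g * s.g / (s.h + lambda)

  /** Gain = 1/2 [score(L) + score(R) - score(L + R)] - gamma. */
  def splitGain(left: Stats, right: Stats, lambda: Double, gamma: Double): Double = {
    val parent = Stats(left.g + right.g, left.h + right.h)
    0.5 * (score(left, lambda) + score(right, lambda) - score(parent, lambda)) - gamma
  }
}
```

As a small check, take targets 1, 1 | 3, 3 with current prediction 2. The left node has G = 2, H = 2. With lambda = 0 its weight is -1, which moves the prediction to the left targets' mean of 1. With lambda = 1 the split gain is 4/3, so a `gamma` above 4/3 would prune it. In real training each new leaf weight is also scaled by `eta`.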