YOLOv5、YOLOv8改进:MobileViT:轻量通用且适合移动端的视觉Transformer

MobileViT: Light-weight, General-purpose, and Mobile-friendly Vision Transformer

论文:https://arxiv.org/abs/2110.02178

![]()

1简介

MobileviT是一个用于移动设备的轻量级通用可视化Transformer,据作者介绍,这是第一次基于轻量级CNN网络性能的轻量级ViT工作,性能SOTA!。性能优于MobileNetV3、CrossviT等网络。

轻量级卷积神经网络(CNN)是移动视觉任务的实际应用。他们的空间归纳偏差允许他们在不同的视觉任务中以较少的参数学习表征。然而,这些网络在空间上是局部的。为了学习全局表征,采用了基于自注意力的Vision Transformer(ViTs)。与CNN不同,ViT是heavy-weight。

在本文中,本文提出了以下问题:是否有可能结合CNN和ViT的优势,构建一个轻量级、低延迟的移动视觉任务网络?

为此提出了MobileViT,一种轻量级的、通用的移动设备Vision Transformer。MobileViT提出了一个不同的视角,以Transformer作为卷积处理信息。

![]()

实验结果表明,在不同的任务和数据集上,MobileViT显著优于基于CNN和ViT的网络。

在ImageNet-1k数据集上,MobileViT在大约600万个参数的情况下达到了78.4%的Top-1准确率,对于相同数量的参数,比MobileNetv3和DeiT的准确率分别高出3.2%和6.2%。

在MS-COCO目标检测任务中,在参数数量相近的情况下,MobileViT比MobileNetv3的准确率高5.7%。

2.Mobile-ViT

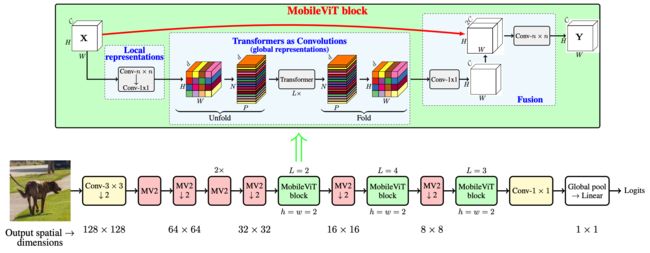

MobileViT Block如下图所示,其目的是用较少的参数对输入张量中的局部和全局信息进行建模。

形式上,对于一个给定的输入张量, MobileViT首先应用一个n×n标准卷积层,然后用一个一个点(或1×1)卷积层产生特征。n×n卷积层编码局部空间信息,而点卷积通过学习输入通道的线性组合将张量投影到高维空间(d维,其中d>c)。

通过MobileViT,希望在拥有有效感受野的同时,对远距离非局部依赖进行建模。一种被广泛研究的建模远程依赖关系的方法是扩张卷积。然而,这种方法需要谨慎选择膨胀率。否则,权重将应用于填充的零而不是有效的空间区域。

另一个有希望的解决方案是Self-Attention。在Self-Attention方法中,具有multi-head self-attention的vision transformers(ViTs)在视觉识别任务中是有效的。然而,vit是heavy-weight,并由于vit缺乏空间归纳偏差,表现出较差的可优化性。

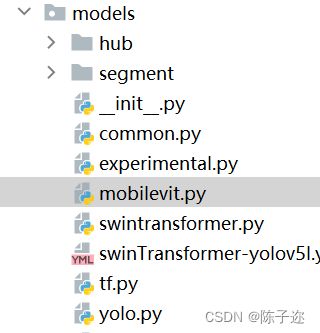

下面附上改进代码

---------------------------------------------分割线--------------------------------------------------

在common中加入如下代码

需要安装一个einops模块

pip --default-timeout=5000 install -i https://pypi.tuna.tsinghua.edu.cn/simple einops

import torch

import torch.nn as nn

from einops import rearrange

def conv_1x1_bn(inp, oup):

return nn.Sequential(

nn.Conv2d(inp, oup, 1, 1, 0, bias=False),

nn.BatchNorm2d(oup),

nn.SiLU()

)

def conv_nxn_bn(inp, oup, kernal_size=3, stride=1):

return nn.Sequential(

nn.Conv2d(inp, oup, kernal_size, stride, 1, bias=False),

nn.BatchNorm2d(oup),

nn.SiLU()

)

class PreNorm(nn.Module):

def __init__(self, dim, fn):

super().__init__()

self.norm = nn.LayerNorm(dim)

self.fn = fn

def forward(self, x, **kwargs):

return self.fn(self.norm(x), **kwargs)

class FeedForward(nn.Module):

def __init__(self, dim, hidden_dim, dropout=0.):

super().__init__()

self.net = nn.Sequential(

nn.Linear(dim, hidden_dim),

nn.SiLU(),

nn.Dropout(dropout),

nn.Linear(hidden_dim, dim),

nn.Dropout(dropout)

)

def forward(self, x):

return self.net(x)

class Attention(nn.Module):

def __init__(self, dim, heads=8, dim_head=64, dropout=0.):

super().__init__()

inner_dim = dim_head * heads

project_out = not (heads == 1 and dim_head == dim)

self.heads = heads

self.scale = dim_head ** -0.5

self.attend = nn.Softmax(dim = -1)

self.to_qkv = nn.Linear(dim, inner_dim * 3, bias = False)

self.to_out = nn.Sequential(

nn.Linear(inner_dim, dim),

nn.Dropout(dropout)

) if project_out else nn.Identity()

def forward(self, x):

qkv = self.to_qkv(x).chunk(3, dim=-1)

q, k, v = map(lambda t: rearrange(t, 'b p n (h d) -> b p h n d', h = self.heads), qkv)

dots = torch.matmul(q, k.transpose(-1, -2)) * self.scale

attn = self.attend(dots)

out = torch.matmul(attn, v)

out = rearrange(out, 'b p h n d -> b p n (h d)')

return self.to_out(out)

class Transformer(nn.Module):

def __init__(self, dim, depth, heads, dim_head, mlp_dim, dropout=0.):

super().__init__()

self.layers = nn.ModuleList([])

for _ in range(depth):

self.layers.append(nn.ModuleList([

PreNorm(dim, Attention(dim, heads, dim_head, dropout)),

PreNorm(dim, FeedForward(dim, mlp_dim, dropout))

]))

def forward(self, x):

for attn, ff in self.layers:

x = attn(x) + x

x = ff(x) + x

return x

class MV2Block(nn.Module):

def __init__(self, inp, oup, stride=1, expansion=4):

super().__init__()

self.stride = stride

assert stride in [1, 2]

hidden_dim = int(inp * expansion)

self.use_res_connect = self.stride == 1 and inp == oup

if expansion == 1:

self.conv = nn.Sequential(

# dw

nn.Conv2d(hidden_dim, hidden_dim, 3, stride, 1, groups=hidden_dim, bias=False),

nn.BatchNorm2d(hidden_dim),

nn.SiLU(),

# pw-linear

nn.Conv2d(hidden_dim, oup, 1, 1, 0, bias=False),

nn.BatchNorm2d(oup),

)

else:

self.conv = nn.Sequential(

# pw

nn.Conv2d(inp, hidden_dim, 1, 1, 0, bias=False),

nn.BatchNorm2d(hidden_dim),

nn.SiLU(),

# dw

nn.Conv2d(hidden_dim, hidden_dim, 3, stride, 1, groups=hidden_dim, bias=False),

nn.BatchNorm2d(hidden_dim),

nn.SiLU(),

# pw-linear

nn.Conv2d(hidden_dim, oup, 1, 1, 0, bias=False),

nn.BatchNorm2d(oup),

)

def forward(self, x):

if self.use_res_connect:

return x + self.conv(x)

else:

return self.conv(x)

class MobileViTBlock(nn.Module):

def __init__(self, dim, depth, channel, kernel_size, patch_size, mlp_dim, dropout=0.):

super().__init__()

self.ph, self.pw = patch_size

self.conv1 = conv_nxn_bn(channel, channel, kernel_size)

self.conv2 = conv_1x1_bn(channel, dim)

self.transformer = Transformer(dim, depth, 4, 8, mlp_dim, dropout)

self.conv3 = conv_1x1_bn(dim, channel)

self.conv4 = conv_nxn_bn(2 * channel, channel, kernel_size)

def forward(self, x):

y = x.clone()

# Local representations

x = self.conv1(x)

x = self.conv2(x)

# Global representations

_, _, h, w = x.shape

x = rearrange(x, 'b d (h ph) (w pw) -> b (ph pw) (h w) d', ph=self.ph, pw=self.pw)

x = self.transformer(x)

x = rearrange(x, 'b (ph pw) (h w) d -> b d (h ph) (w pw)', h=h//self.ph, w=w//self.pw, ph=self.ph, pw=self.pw)

# Fusion

x = self.conv3(x)

x = torch.cat((x, y), 1)

x = self.conv4(x)

return x

class MobileViT(nn.Module):

def __init__(self, image_size, dims, channels, num_classes, expansion=4, kernel_size=3, patch_size=(2, 2)):

super().__init__()

ih, iw = image_size

ph, pw = patch_size

assert ih % ph == 0 and iw % pw == 0

L = [2, 4, 3]

self.conv1 = conv_nxn_bn(3, channels[0], stride=2)

self.mv2 = nn.ModuleList([])

self.mv2.append(MV2Block(channels[0], channels[1], 1, expansion))

self.mv2.append(MV2Block(channels[1], channels[2], 2, expansion))

self.mv2.append(MV2Block(channels[2], channels[3], 1, expansion))

self.mv2.append(MV2Block(channels[2], channels[3], 1, expansion)) # Repeat

self.mv2.append(MV2Block(channels[3], channels[4], 2, expansion))

self.mv2.append(MV2Block(channels[5], channels[6], 2, expansion))

self.mv2.append(MV2Block(channels[7], channels[8], 2, expansion))

self.mvit = nn.ModuleList([])

self.mvit.append(MobileViTBlock(dims[0], L[0], channels[5], kernel_size, patch_size, int(dims[0]*2)))

self.mvit.append(MobileViTBlock(dims[1], L[1], channels[7], kernel_size, patch_size, int(dims[1]*4)))

self.mvit.append(MobileViTBlock(dims[2], L[2], channels[9], kernel_size, patch_size, int(dims[2]*4)))

self.conv2 = conv_1x1_bn(channels[-2], channels[-1])

self.pool = nn.AvgPool2d(ih//32, 1)

self.fc = nn.Linear(channels[-1], num_classes, bias=False)

def forward(self, x):

x = self.conv1(x)

x = self.mv2[0](x)

x = self.mv2[1](x)

x = self.mv2[2](x)

x = self.mv2[3](x) # Repeat

x = self.mv2[4](x)

x = self.mvit[0](x)

x = self.mv2[5](x)

x = self.mvit[1](x)

x = self.mv2[6](x)

x = self.mvit[2](x)

x = self.conv2(x)

x = self.pool(x).view(-1, x.shape[1])

x = self.fc(x)

return x

def mobilevit_xxs():

dims = [64, 80, 96]

channels = [16, 16, 24, 24, 48, 48, 64, 64, 80, 80, 320]

return MobileViT((256, 256), dims, channels, num_classes=1000, expansion=2)

def mobilevit_xs():

dims = [96, 120, 144]

channels = [16, 32, 48, 48, 64, 64, 80, 80, 96, 96, 384]

return MobileViT((256, 256), dims, channels, num_classes=1000)

def mobilevit_s():

dims = [144, 192, 240]

channels = [16, 32, 64, 64, 96, 96, 128, 128, 160, 160, 640]

return MobileViT((256, 256), dims, channels, num_classes=1000)

def count_parameters(model):

return sum(p.numel() for p in model.parameters() if p.requires_grad)

if __name__ == '__main__':

img = torch.randn(5, 3, 256, 256)

vit = mobilevit_xxs()

out = vit(img)

print(out.shape)

print(count_parameters(vit))

vit = mobilevit_xs()

out = vit(img)

print(out.shape)

print(count_parameters(vit))

vit = mobilevit_s()

out = vit(img)

print(out.shape)

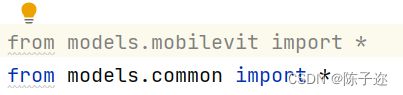

print(count_parameters(vit))yolo.py中导入并注册

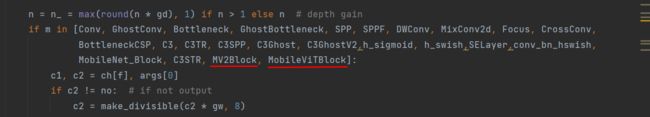

加入MV2Block, MobileViTBlock

修改yaml文件

# YOLOv5 by Ultralytics, GPL-3.0 license

# Parameters

nc: 1 # number of classes

depth_multiple: 0.33 # model depth multiple

width_multiple: 0.50 # layer channel multiple

anchors:

- [10,13, 16,30, 33,23] # P3/8

- [30,61, 62,45, 59,119] # P4/16

- [116,90, 156,198, 373,326] # P5/32

# YOLOv5 backbone

backbone:

# [from, number, module, args] 640 x 640

# [[-1, 1, Conv, [32, 6, 2, 2]], # 0-P1/2 320 x 320

[[-1, 1, Focus, [32, 3]],

[-1, 1, MV2Block, [32, 1, 2]], # 1-P2/4

[-1, 1, MV2Block, [48, 2, 2]], # 160 x 160

[-1, 2, MV2Block, [48, 1, 2]],

[-1, 1, MV2Block, [64, 2, 2]], # 80 x 80

[-1, 1, MobileViTBlock, [64,96, 2, 3, 2, 192]], # 5 out_dim,dim, depth, kernel_size, patch_size, mlp_dim

[-1, 1, MV2Block, [80, 2, 2]], # 40 x 40

[-1, 1, MobileViTBlock, [80,120, 4, 3, 2, 480]], # 7

[-1, 1, MV2Block, [96, 2, 2]], # 20 x 20

[-1, 1, MobileViTBlock, [96,144, 3, 3, 2, 576]], # 11-P2/4 # 9

]

# YOLOv5 head

head:

[[-1, 1, Conv, [256, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 7], 1, Concat, [1]], # cat backbone P4

[-1, 3, C3, [256, False]], # 13

[-1, 1, Conv, [128, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 5], 1, Concat, [1]], # cat backbone P3

[-1, 3, C3, [128, False]], # 17 (P3/8-small)

[-1, 1, Conv, [128, 3, 2]],

[[-1, 14], 1, Concat, [1]], # cat head P4

[-1, 3, C3, [256, False]], # 20 (P4/16-medium)

[-1, 1, Conv, [256, 3, 2]],

[[-1, 10], 1, Concat, [1]], # cat head P5

[-1, 3, C3, [512, False]], # 23 (P5/32-large)

[[17, 20, 23], 1, Detect, [nc, anchors]], # Detect(P3, P4, P5)

]

-

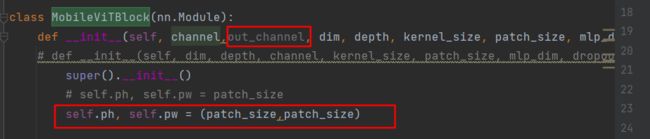

修改mobilevit.py

补充说明

einops.EinopsError: Error while processing rearrange-reduction pattern "b d (h ph) (w pw) -> b (ph pw) (h w) d".

Input tensor shape: torch.Size([1, 120, 42, 42]). Additional info: {'ph': 4, 'pw': 4}

是因为输入输出不匹配造成

记得关掉rect哦!一个是在参数里,另一个在下图。如果要在test或者val中跑,同样要改