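Project: deploying reverse-proxy high availability (keepalived + LVS-DR), a web cluster with static/dynamic separation (nginx + tomcat), a MySQL cluster (MHA high availability + one master, two slaves + read/write splitting), and NFS shared storage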

部署

-

- 一、实验步骤

-

- 1.部署框架前准备工作

- 2.准备环境(关闭防护墙、修改主机名)

- 二、部署LVS-DR

-

- 1.配置负载调度器ha01与ha02同时配置(192.168.174.17,192.168.174.12)

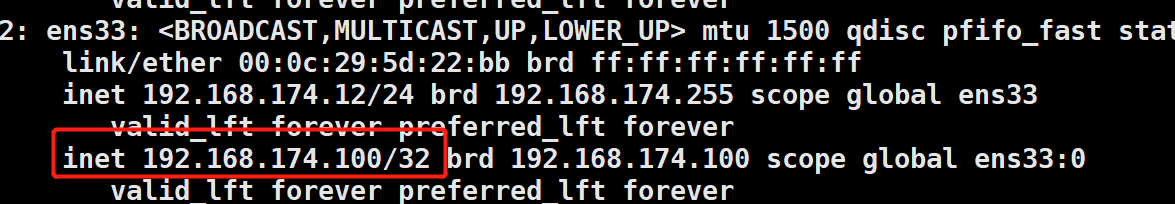

- 2.两台配置虚拟ip地址(VIP:192.168.174.100)

- 3.配置ARP内核响应参数防止更新VIP中的MAC地址,避免发生冲突

- 4.配置负载均衡分配策略

- 5.配置web节点服务器(两台slave同时部署 192.168.174.18、192.168.174.19)

- 三、部署NFS存储服务器(NFS共享存储ip地址:192.168.174.15)

-

- 1.安装NFS

- 2.节点服务器安装web服务(Nginx)并挂载共享目录(slave01,slave02)

- 2.节点服务器挂载共享目录 (slave1 slave2分别挂载/opt/web1 /opt/web2)

- 3.测试(用虚拟IP192.168.174.100访问):

- 四、部署Nginx+Tomcat的动静分离

-

- 1.安装Tomcat作为后端服务器

- 2.动静分离Tomcat server配置(192.168.174.18、192.168.174.19)

- 2.Tomcat实例主配置删除前面的 Host配置,增添新的Host配置

- 3.Nginx server 配置(192.168.174.18、192.168.174.19)两者配置相同

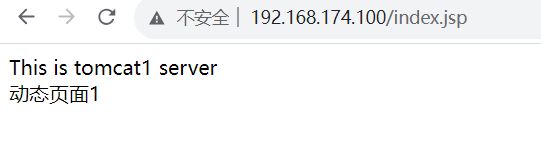

- 4.用虚拟IP 192.168.174.100/index.jsp访问测试:

- 五、 配置keeplived(主(ha01)、备(ha02)DR 服务器都需要配置)

-

- 1.主 DR服务器 ha01:

- 2.备 DR服务器 ha02:192.168.174.12

- 3.调整内核 proc 响应参数,关闭linux内核的重定向参数响应

- 六、部署MySQL集群MHA高可用

-

- 1.安装MySQL(slave01,slave02,master同时配置)

- 2.创建MySQL用户

- 3.修改MySQL配置文件

- 4.更改MySQL安装目录和配置文件的属主属组

- 5.设置路径环境变量

- 6.初始化数据库

- 7.添加MySQLD系统服务

- 8.修改MySQL 的登录密码

- 9.授权远程登录

- 10.MySQL集群配置一主双从

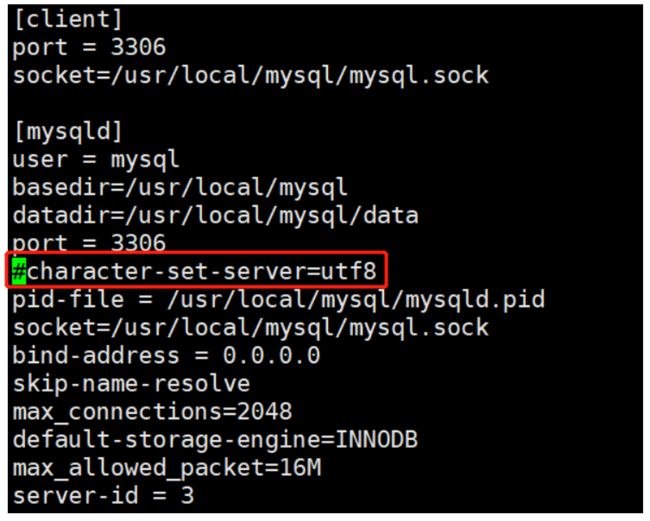

- 11.**修改 Master01、Slave01、Slave02 节点的 Mysql主配置文件/etc/my.cnf**

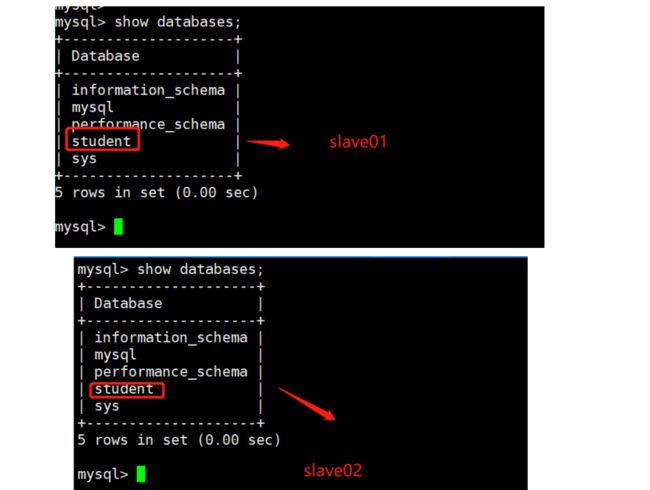

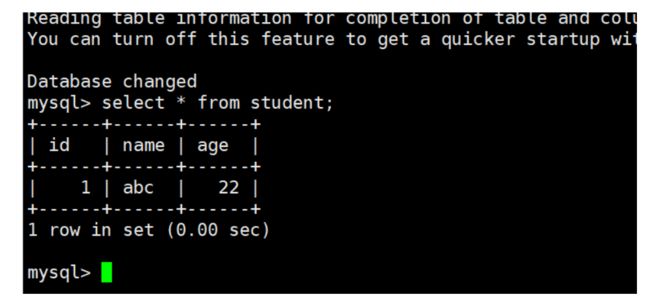

- Master 主数据库插入数据,测试是否同步

- 12.测试,同步成功:

- 七、部署MHA

-

- 1.修改三台机器,master01、slave01、slave02中的配置文件

- 2.**Master01、Slave01、Slave02 所有服务器上都安装 MHA 依赖的环境,首先安装 epel 源**

- 3.**Master01、Slave01、Slave02所有服务器上必须先安装 node 组件**

- 4.在 MHA manager 节点上安装 manager 组件

- 5.Master01、Slave01、Slave02所有服务器上配置无密码认证

- 6.在master01上配置

- 八、故障测试

-

- 1.**Master01 服务器上停止mysql服务**

- 2.**manager 服务器(slave01)上监控观察日志记录,已切换成功**

- 3.**查看slave01服务器,此时vip漂移到新的master上,已启用备用数据库**

I. Lab procedure

1. Preparation before deploying the framework

| Server role | Components | IP address |
|---|---|---|
| DR1 scheduler, primary (ha01) | Keepalived + LVS-DR | 192.168.174.17 |
| DR2 scheduler, backup (ha02) | Keepalived + LVS-DR | 192.168.174.12 |
| web1 node (slave01) | Nginx + Tomcat + MySQL slave + MHA manager + MHA node | 192.168.174.18 |
| web2 node (slave02) | Nginx + Tomcat + MySQL slave + MHA node | 192.168.174.19 |
| NFS storage server (master01) | MySQL master + NFS + MHA node | 192.168.174.15 |
| VIP | Virtual IP | 192.168.174.100 |

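2. Prepare the environment (disable the firewall and SELinux enforcement, set hostnames)

systemctl stop firewalld.service

setenforce 0

hostnamectl set-hostname ha01       # on 192.168.174.17

hostnamectl set-hostname ha02       # on 192.168.174.12

hostnamectl set-hostname slave01    # on 192.168.174.18

hostnamectl set-hostname slave02    # on 192.168.174.19

hostnamectl set-hostname master01   # on 192.168.174.15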

II. Deploy LVS-DR

1. Configure the load balancers ha01 and ha02 identically (192.168.174.17, 192.168.174.12)

modprobe ip_vs

cat /proc/net/ip_vs

## load the ip_vs module and check its version

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

yum install -y ipvsadm

2. Configure the virtual IP on both (VIP: 192.168.174.100)

cd /etc/sysconfig/network-scripts/

cp ifcfg-ens33 ifcfg-ens33:0

vim ifcfg-ens33:0

DEVICE=ens33:0

ONBOOT=yes

IPADDR=192.168.174.100

GATEWAY=192.168.174.2

NETMASK=255.255.255.255

ifup ifcfg-ens33:0

ifconfig

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500

inet 192.168.174.17 netmask 255.255.255.0 broadcast 192.168.174.255

inet6 fe80::1c8b:9053:c28:220c prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:ae:23:9b txqueuelen 1000 (Ethernet)

RX packets 705692 bytes 1016839095 (969.7 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 331444 bytes 20137290 (19.2 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens33:0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500

inet 192.168.174.100 netmask 255.255.255.255 broadcast 192.168.174.100

ether 00:0c:29:ae:23:9b txqueuelen 1000 (Ethernet)

lo: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1 (Local Loopback)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

virbr0: flags=4099<UP,BROADCAST,MULTICAST>  mtu 1500

inet 192.168.122.1 netmask 255.255.255.0 broadcast 192.168.122.255

ether 52:54:00:ff:2a:5b txqueuelen 1000 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

route add -host 192.168.174.100 dev ens33:0

vim /etc/rc.local

/usr/sbin/route add -host 192.168.174.100 dev ens33:0
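If the steps are repeated, the same route is easily appended to `/etc/rc.local` more than once. The sketch below uses a hypothetical helper, `persist_vip_route` (not part of any package), that appends the route line only once and sets the execute bit. It assumes CentOS 7, where `/etc/rc.local` links to `/etc/rc.d/rc.local` and the file runs at boot only if it is executable. The same helper fits the web nodes, with `lo:0` as the device.

```shell
#!/bin/bash
# Idempotently persist a host route for the VIP in an rc.local-style file.
# Usage: persist_vip_route RC_FILE VIP DEVICE
# Assumption: CentOS 7, where rc.local is skipped at boot unless executable.
persist_vip_route() {
    local rc_file=$1 vip=$2 dev=$3
    local line="/usr/sbin/route add -host ${vip} dev ${dev}"
    # Append only if this exact line is not already present
    grep -qxF "$line" "$rc_file" 2>/dev/null || echo "$line" >> "$rc_file"
    # Give the file the execute bit so it actually runs at boot
    chmod +x "$rc_file"
}
```

On ha01/ha02 this would be `persist_vip_route /etc/rc.d/rc.local 192.168.174.100 ens33:0`. On slave01/slave02 it would be `persist_vip_route /etc/rc.d/rc.local 192.168.174.100 lo:0`.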

3. Configure kernel ARP response parameters so the MAC address for the VIP is not updated, avoiding conflicts

[root@ha01 network-scripts]# vim /etc/sysctl.conf

net.ipv4.ip_forward = 0

# turn off ICMP redirects via /proc

net.ipv4.conf.all.send_redirects = 0

net.ipv4.conf.default.send_redirects = 0

net.ipv4.conf.ens33.send_redirects = 0

sysctl -p

## load the file so the settings take effect

net.ipv4.ip_forward = 0

net.ipv4.conf.all.send_redirects = 0

net.ipv4.conf.default.send_redirects = 0

net.ipv4.conf.ens33.send_redirects = 0

4. Configure the load-balancing policy

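ipvsadm-save > /etc/sysconfig/ipvsadm

## save the current (still empty) rule set so that ipvsadm.service has a file to load

systemctl start ipvsadm.service

## start the ipvsadm service

ipvsadm -C

## clear the rules, then add the virtual service on the VIP with the rr scheduler and the two web nodes as real servers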

ipvsadm -A -t 192.168.174.100:80 -s rr

ipvsadm -a -t 192.168.174.100:80 -r 192.168.174.18:80 -g

ipvsadm -a -t 192.168.174.100:80 -r 192.168.174.19:80 -g

ipvsadm

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP ha02:http rr

-> 192.168.174.18:http Route 1 0 0

-> 192.168.174.19:http Route 1 0 0
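The rules above can also be generated, which keeps the VIP, the port, and the real-server list in one place. This is a sketch with a hypothetical function name. It only prints the commands and assumes round-robin on port 80, as above. It ends with `ipvsadm-save -n`, so the rules are saved after they exist; in the sequence above, the rule file is saved before any rules are added.

```shell
#!/bin/bash
# Print (do not run) the ipvsadm commands for one LVS-DR virtual service.
# Usage: lvs_dr_rules VIP PORT REAL_SERVER...
lvs_dr_rules() {
    local vip=$1 port=$2
    shift 2
    local rs
    # Start from an empty table, then create the virtual service with round-robin
    echo "ipvsadm -C"
    echo "ipvsadm -A -t ${vip}:${port} -s rr"
    for rs in "$@"; do
        # -g = gatewaying (direct routing), the forwarding method used by LVS-DR
        echo "ipvsadm -a -t ${vip}:${port} -r ${rs}:${port} -g"
    done
    # Save only after the rules exist, so ipvsadm.service restores them at boot
    echo "ipvsadm-save -n > /etc/sysconfig/ipvsadm"
}
```

On a DR server, `lvs_dr_rules 192.168.174.100 80 192.168.174.18 192.168.174.19 | sh` would apply the same rules as above and persist them.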

5. Configure the web node servers (same steps on both slaves, 192.168.174.18 and 192.168.174.19)

(1) Configure the virtual IP (VIP: 192.168.174.100) on the loopback interface

cd /etc/sysconfig/network-scripts/

cp ifcfg-ens33 ifcfg-lo:0

vim ifcfg-lo:0

DEVICE=lo:0

ONBOOT=yes

IPADDR=192.168.174.100

NETMASK=255.255.255.255

systemctl restart network

ifup ifcfg-lo:0

route add -host 192.168.174.100 dev lo:0

vim /etc/rc.local

## add the route permanently

route add -host 192.168.174.100 dev lo:0

(2) Configure kernel ARP response parameters so the MAC address for the VIP is not updated, avoiding conflicts

vim /etc/sysctl.conf

net.ipv4.conf.lo.arp_ignore = 1

net.ipv4.conf.lo.arp_announce = 2

net.ipv4.conf.all.arp_ignore = 1

net.ipv4.conf.all.arp_announce = 2

sysctl -p

net.ipv4.conf.lo.arp_ignore = 1

net.ipv4.conf.lo.arp_announce = 2

net.ipv4.conf.all.arp_ignore = 1

net.ipv4.conf.all.arp_announce = 2
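The four ARP settings above are what let every real server hold the VIP on `lo` without answering ARP for it. The sketch below writes them to a file, with comments that explain each value. The function name is hypothetical. The target file is up to the caller: this document edits `/etc/sysctl.conf`, while a drop-in under `/etc/sysctl.d/` is a common alternative.

```shell
#!/bin/bash
# Write the LVS-DR real-server ARP settings to a sysctl file.
# Usage: write_rs_arp_sysctl FILE
write_rs_arp_sysctl() {
    local out=$1
    cat > "$out" <<'EOF'
# arp_ignore = 1: answer ARP only for addresses configured on the interface
# the request arrived on, so requests for the VIP (bound to lo) arriving on
# ens33 go unanswered and only the director answers for the VIP
net.ipv4.conf.lo.arp_ignore = 1
net.ipv4.conf.all.arp_ignore = 1
# arp_announce = 2: use the best local address for the target as the ARP
# source address, never the VIP on lo
net.ipv4.conf.lo.arp_announce = 2
net.ipv4.conf.all.arp_announce = 2
EOF
}
```

For example, `write_rs_arp_sysctl /etc/sysctl.d/90-lvs-dr.conf && sysctl -p /etc/sysctl.d/90-lvs-dr.conf`. The file name is only an example.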

III. Deploy the NFS storage server (NFS shared storage IP: 192.168.174.15)

1. Install NFS

rpm -q rpcbind nfs-utils

## check whether the NFS packages are installed

rpcbind-0.2.0-42.el7.x86_64

nfs-utils-1.3.0-0.48.el7.x86_64

systemctl start nfs

systemctl start rpcbind

## start the services

systemctl enable nfs

## enable at boot

Created symlink from /etc/systemd/system/multi-user.target.wants/nfs-server.service to /usr/lib/systemd/system/nfs-server.service.

systemctl enable rpcbind

mkdir /opt/web1 /opt/web2

## create the web directories

echo 'This is node web1' > /opt/web1/index.html

echo 'This is node web2' > /opt/web2/index.html

## add the page content

vim /etc/exports

/opt/web1 192.168.174.0/24(ro,sync)

/opt/web2 192.168.174.0/24(ro,sync)

exportfs -rv # publish the shares

exporting 192.168.174.0/24:/opt/web2

exporting 192.168.174.0/24:/opt/web1

2. Install the web service (Nginx) on the node servers and mount the shared directories (slave01, slave02)

cd /opt/

ls

nginx-1.12.2.tar.gz rh

systemctl disable firewalld.service

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

systemctl status firewalld.service

(1) Install nginx

yum -y install pcre-devel zlib-devel gcc gcc-c++ make

useradd -M -s /sbin/nologin nginx

tar -zxvf nginx-1.12.2.tar.gz

cd nginx-1.12.2/

./configure \
--prefix=/usr/local/nginx \
--user=nginx \
--group=nginx \
--with-http_stub_status_module

make -j2 && make install

cd /usr/local/nginx/sbin/

ln -s /usr/local/nginx/sbin/nginx /usr/local/sbin/   ## create a symlink so nginx is on the PATH

nginx -t

nginx: the configuration file /usr/local/nginx/conf/nginx.conf syntax is ok

nginx: configuration file /usr/local/nginx/conf/nginx.conf test is successful

netstat -natp | grep :80

nginx

## start nginx

2. Mount the shared directories on the node servers (slave01 mounts /opt/web1, slave02 mounts /opt/web2)

cd ~

showmount -e 192.168.174.15

Export list for 192.168.174.15:

/opt/web2 192.168.174.0/24

/opt/web1 192.168.174.0/24

mount.nfs 192.168.174.15:/opt/web1 /usr/local/nginx/html/ #slave01

mount.nfs 192.168.174.15:/opt/web2 /usr/local/nginx/html/ #slave02

cd /usr/local/nginx/html/

ls

index.html

cat index.html

This is node web1
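The `mount.nfs` command above lasts only until the next reboot. A common way to make the mount permanent is an `/etc/fstab` entry. The hypothetical helper below adds one, but only when nothing is already mounted on the target directory. The `_netdev` option, which delays the mount until networking is up, is an assumption about what suits this setup.

```shell
#!/bin/bash
# Append an NFS mount to an fstab-style file unless its mount point is already listed.
# Usage: add_nfs_fstab FSTAB SERVER:/EXPORT MOUNT_POINT
add_nfs_fstab() {
    local fstab=$1 src=$2 dst=$3
    # awk exits 0 when an uncommented line already uses this mount point
    if awk -v d="$dst" '$1 !~ /^#/ && $2 == d { found = 1 } END { exit !found }' "$fstab"; then
        return 0
    fi
    # _netdev: treat it as a network filesystem, mounted after networking is up
    printf '%s %s nfs defaults,_netdev 0 0\n' "$src" "$dst" >> "$fstab"
}
```

On slave01 this would be `add_nfs_fstab /etc/fstab 192.168.174.15:/opt/web1 /usr/local/nginx/html`, and on slave02 the same with `/opt/web2`. Afterwards, `mount -a` mounts any listed filesystem that is not yet mounted.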

3. Test (access via the virtual IP 192.168.174.100):

IV. Deploy Nginx + Tomcat static/dynamic separation

1. Install Tomcat as the backend server

cd /opt

[root@slave01 opt]# cd ~

[root@slave01 ~]# vim tomcat.sh

#!/bin/bash

# install and deploy Tomcat

systemctl stop firewalld

systemctl disable firewalld

setenforce 0

# install the JDK

cd /opt

rpm -ivh jdk-8u371-linux-x64.rpm &> /dev/null

java -version

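# set the Java environment variables (assumed content: JAVA_HOME is derived from the java binary the RPM put on the PATH)

cat > /etc/profile.d/java.sh <<'EOF'
export JAVA_HOME=$(dirname "$(dirname "$(readlink -f "$(command -v java)")")")
export PATH=$JAVA_HOME/bin:$PATH
EOF

source /etc/profile.d/java.sh

# unpack Tomcat

cd /opt

tar zxvf apache-tomcat-8.5.16.tar.gz &> /dev/null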

mv apache-tomcat-8.5.16 /usr/local/tomcat

## start Tomcat

/usr/local/tomcat/bin/startup.sh

if [ $? -eq 0 ];then

echo -e "\033[34;1m Tomcat installation complete! \033[0m"

fi

chmod +x tomcat.sh

./tomcat.sh
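`startup.sh` starts Tomcat in the background and returns before the connector is listening, so a port check run right away can fail. Below is a hedged sketch of a wait loop. It assumes bash's `/dev/tcp` redirection and the coreutils `timeout` command are available. `wait_for_port` is a hypothetical name.

```shell
#!/bin/bash
# Poll until HOST:PORT accepts TCP connections or the tries run out.
# Usage: wait_for_port HOST PORT [TRIES]   (about one try per second)
wait_for_port() {
    local host=$1 port=$2 tries=${3:-30} i
    for ((i = 0; i < tries; i++)); do
        # Opening bash's /dev/tcp/HOST/PORT pseudo-file performs a TCP connect;
        # timeout abandons a hung attempt after one second
        if timeout 1 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null; then
            return 0
        fi
        sleep 1
    done
    return 1
}
```

After `./tomcat.sh`, `wait_for_port 127.0.0.1 8080 && echo "Tomcat is listening"` waits up to about 30 seconds.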

2. Tomcat server configuration for static/dynamic separation (192.168.174.18, 192.168.174.19)

(1)192.168.174.18

mkdir /usr/local/tomcat/webapps/test

## create the directory

cd /usr/local/tomcat/webapps/

ls

docs examples host-manager manager ROOT test

cd test/

vim /usr/local/tomcat/webapps/test/index.jsp

<%@ page language="java" import="java.util.*" pageEncoding="UTF-8" %>

tomcat1

<% out.println("This is tomcat1 server");%>

Dynamic page 1

ls

index.jsp

(2)192.168.174.19

mkdir /usr/local/tomcat/webapps/test

## create the directory

cd /usr/local/tomcat/webapps/

ls

docs examples host-manager manager ROOT test

cd test/

vim /usr/local/tomcat/webapps/test/index.jsp

<%@ page language="java" import="java.util.*" pageEncoding="UTF-8" %>

tomcat2

<% out.println("This is tomcat2 server");%>

Dynamic page 2

[root@slave02 test]# ls

index.jsp

2. In the main Tomcat configuration, delete the existing Host block and add a new one

cd /usr/local/tomcat/conf/

ls

catalina.policy context.xml jaspic-providers.xsd server.xml tomcat-users.xsd

catalina.properties jaspic-providers.xml logging.properties tomcat-users.xml web.xml

cp server.xml{,.bak}

## back up the configuration file

vim server.xml

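Delete lines 148-164 (the existing `<Host>` block in this Tomcat 8.5.16 server.xml; check the line numbers in your own file), then add the new Host block in its place.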

/usr/local/tomcat/bin/shutdown.sh

/usr/local/tomcat/bin/startup.sh

netstat -natp | grep 8080
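The replacement `<Host>` block itself is not shown above. A typical choice for this kind of lab, given here as an assumption rather than the author's exact block, maps the `test` webapp to the root context, so `/index.jsp` is served from `webapps/test/index.jsp`. The hypothetical shell function below only prints the XML to paste into `server.xml`.

```shell
#!/bin/bash
# Print a <Host> element that serves the "test" webapp at the root path.
# Usage: tomcat_host_block [DOCBASE]   (defaults to the directory created above)
tomcat_host_block() {
    local docbase=${1:-/usr/local/tomcat/webapps/test}
    cat <<EOF
<Host name="localhost" appBase="webapps" unpackWARs="true" autoDeploy="true">
    <!-- path="" makes this the default (root) context, so /index.jsp maps here -->
    <Context docBase="${docbase}" path="" reloadable="true" />
</Host>
EOF
}
```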

3. Nginx server configuration (192.168.174.18, 192.168.174.19), identical on both

cd /usr/local/nginx/conf/

ls

fastcgi.conf koi-utf nginx.conf uwsgi_params

fastcgi.conf.default koi-win nginx.conf.default uwsgi_params.default

fastcgi_params mime.types scgi_params win-utf

fastcgi_params.default mime.types.default scgi_params.default

cp nginx.conf{,.bak}

vim nginx.conf

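## back up, then edit the configuration file

## in the http {} block, before the server {} block:

upstream tomcat_server {

server 192.168.174.18:8080 weight=1;

server 192.168.174.19:8080 weight=1;

}

## in the existing server {} block:

server_name www.web1.com;

charset utf-8;

## forward dynamic .jsp requests to the Tomcat pool

location ~ .*\.jsp$ {

proxy_pass http://tomcat_server;

proxy_set_header HOST $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

}

## serve static files from the local html directory and let clients cache them for 10 days

location ~ .*\.(gif|jpg|jpeg|png|bmp|swf|css)$ {

root /usr/local/nginx/html;

expires 10d;

}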

cd /usr/local/nginx/sbin/

nginx -s reload
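To see the upstream round-robin at work without a browser, request the page repeatedly and count which Tomcat answered. This sketch assumes `curl` is installed and that each page prints `This is tomcatN server`, as the `index.jsp` files above do. Both function names are hypothetical.

```shell
#!/bin/bash
# Count which Tomcat produced each response body read from stdin.
tally_backends() {
    # grep -o keeps only the marker text; uniq -c counts each distinct backend
    grep -o 'This is tomcat[0-9]* server' | sort | uniq -c
}

# Request URL N times (default 10) and tally the answering backends.
probe() {
    local url=$1 n=${2:-10} i
    for ((i = 0; i < n; i++)); do
        curl -s "$url"
        echo
    done | tally_backends
}
```

`probe http://192.168.174.100/index.jsp 10` should show both `tomcat1` and `tomcat2` in the tally. LVS may keep sending one client to the same nginx node, but that nginx still alternates between the two Tomcats.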

4. Test by accessing 192.168.174.100/index.jsp via the virtual IP:

V. Configure keepalived (required on both the primary (ha01) and backup (ha02) DR servers)

1. Primary DR server ha01:

yum install ipvsadm keepalived -y

cd /etc/keepalived/

ls

keepalived.conf

cp keepalived.conf{,.bak}

vim keepalived.conf

## edit the configuration file as follows

virtual_ipaddress {

192.168.174.100

}

}

virtual_server 192.168.174.100 80 {

delay_loop 6

lb_algo rr

lb_kind DR

persistence_timeout 50

protocol TCP

real_server 192.168.174.18 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.174.19 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

systemctl start keepalived.service

ip addr show dev ens33

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:ae:23:9b brd ff:ff:ff:ff:ff:ff

inet 192.168.174.17/24 brd 192.168.174.255 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.174.100/32 brd 192.168.174.100 scope global ens33:0

valid_lft forever preferred_lft forever

inet6 fe80::2775:742b:bf24:136d/64 scope link

valid_lft forever preferred_lft forever

inet6 fe80::b65d:94e9:7e6a:6879/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::1c8b:9053:c28:220c/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

scp keepalived.conf 192.168.174.12:/etc/keepalived/

2. Backup DR server ha02: 192.168.174.12

yum install ipvsadm keepalived -y

cd /etc/keepalived/

vim keepalived.conf

## in the copied file, change state MASTER to BACKUP and set a lower priority than on ha01

systemctl start keepalived.service
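Only the `virtual_ipaddress` and `virtual_server` parts of keepalived.conf appear in this section. The sketch below prints a matching `global_defs`/`vrrp_instance` section for either DR server. The interface name `ens33` comes from this setup. The `router_id` names, `virtual_router_id 51`, the priorities, and the `auth_pass` value are example assumptions. What matters is that both servers share the same `virtual_router_id` and password, ha01 is `MASTER` with the higher priority, and ha02 is `BACKUP` with a lower one.

```shell
#!/bin/bash
# Print the global_defs and vrrp_instance sections of keepalived.conf.
# Usage: vrrp_block STATE PRIORITY ROUTER_ID
vrrp_block() {
    local state=$1 priority=$2 router_id=$3
    cat <<EOF
global_defs {
    router_id ${router_id}
}
vrrp_instance VI_1 {
    state ${state}
    interface ens33
    virtual_router_id 51
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.174.100
    }
}
EOF
}
```

Use `vrrp_block MASTER 100 LVS_01` on ha01 and `vrrp_block BACKUP 90 LVS_02` on ha02. The `virtual_server` block stays the same on both.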

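3. Adjust the kernel /proc parameters to turn off ICMP redirects

(1) The primary DR server already has these parameters from the LVS-DR section, so only the backup DR server needs to be changed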

vim /etc/sysctl.conf

net.ipv4.conf.all.send_redirects = 0

net.ipv4.conf.default.send_redirects = 0

net.ipv4.conf.ens33.send_redirects = 0

sysctl -p

(2) Test whether keepalived fails over between the primary and backup

- Stop the keepalived service on the primary DR server ha01:

systemctl stop keepalived.service

ip a

Check on ha02:

ip a

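- Test the static/dynamic separation pages in a browser

VI. Deploy the MySQL cluster with MHA high availability

1. Install MySQL (same steps on slave01, slave02, and master01)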


[root@master01 opt]# ls

boost_1_59_0.tar.gz mysql-5.7.17.tar.gz rh web1 web2

[root@master01 opt]# yum -y install gcc gcc-c++ ncurses ncurses-devel bison cmake

## install the build dependencies

[root@master01 opt]# tar zxvf mysql-5.7.17.tar.gz

[root@master01 opt]# tar zxvf boost_1_59_0.tar.gz

[root@master01 opt]# mv boost_1_59_0 /usr/local/boost

[root@master01 opt]# mkdir /usr/local/mysql

[root@master01 opt]# cd mysql-5.7.17/

## enter the following as one command; each trailing backslash continues it onto the next line

[root@master01 mysql-5.7.17]# cmake \
-DCMAKE_INSTALL_PREFIX=/usr/local/mysql \
-DMYSQL_UNIX_ADDR=/usr/local/mysql/mysql.sock \
-DSYSCONFDIR=/etc \
-DSYSTEMD_PID_DIR=/usr/local/mysql \
-DDEFAULT_CHARSET=utf8 \
-DDEFAULT_COLLATION=utf8_general_ci \
-DWITH_EXTRA_CHARSETS=all \
-DWITH_INNOBASE_STORAGE_ENGINE=1 \
-DWITH_ARCHIVE_STORAGE_ENGINE=1 \
-DWITH_BLACKHOLE_STORAGE_ENGINE=1 \
-DWITH_PERFSCHEMA_STORAGE_ENGINE=1 \
-DMYSQL_DATADIR=/usr/local/mysql/data \
-DWITH_BOOST=/usr/local/boost \
-DWITH_SYSTEMD=1

[root@master01 mysql-5.7.17]# make -j2 && make install

2. Create the MySQL user

cd /usr/local/

useradd -M -s /sbin/nologin mysql

3. Edit the MySQL configuration file

[root@master01 local]# vim /etc/my.cnf

## delete all existing content in the file (in vim, e.g. 50dd) and paste all of the configuration below into my.cnf

[client]

port = 3306

default-character-set=utf8

socket=/usr/local/mysql/mysql.sock

[mysql]

port = 3306

default-character-set=utf8

socket = /usr/local/mysql/mysql.sock

auto-rehash

[mysqld]

user = mysql

basedir=/usr/local/mysql

datadir=/usr/local/mysql/data

port = 3306

character-set-server=utf8

pid-file = /usr/local/mysql/mysqld.pid

socket=/usr/local/mysql/mysql.sock

bind-address = 0.0.0.0

skip-name-resolve

max_connections=2048

default-storage-engine=INNODB

max_allowed_packet=16M

server-id = 1

sql_mode=NO_ENGINE_SUBSTITUTION,STRICT_TRANS_TABLES,NO_AUTO_CREATE_USER,NO_AUTO_VALUE_ON_ZERO,NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO,PIPES_AS_CONCAT,ANSI_QUOTES

4. Change the owner and group of the MySQL install directory and configuration file

[root@master01 local]# chown -R mysql:mysql /usr/local/mysql/

[root@master01 local]# chown mysql:mysql /etc/my.cnf

5. Set the PATH environment variable

[root@master01 local]# echo 'export PATH=/usr/local/mysql/bin:/usr/local/mysql/lib:$PATH' >> /etc/profile

[root@master01 local]# source /etc/profile

[root@master01 local]# echo $PATH

/usr/local/mysql/bin:/usr/local/mysql/lib:/usr/local/bin:/usr/local/sbin:/usr/bin:/usr/sbin:/bin:/sbin:/root/bin

6. Initialize the database

[root@master01 local]# cd /usr/local/mysql/bin/

## enter the following as one command; each trailing backslash continues it onto the next line

[root@master01 bin]# ./mysqld \
--initialize-insecure \
--user=mysql \
--basedir=/usr/local/mysql \
--datadir=/usr/local/mysql/data

7. Add the mysqld system service

[root@slave01 bin]# cp /usr/local/mysql/usr/lib/systemd/system/mysqld.service /usr/lib/systemd/system/

[root@slave01 bin]# systemctl daemon-reload

[root@slave01 bin]# systemctl start mysqld.service

[root@slave01 bin]# systemctl enable mysqld.service

## reload systemd, start the service, and enable it at boot

Created symlink from /etc/systemd/system/multi-user.target.wants/mysqld.service to /usr/lib/systemd/system/mysqld.service.

[root@slave01 bin]# netstat -natp |grep 3306

tcp 0 0 0.0.0.0:3306 0.0.0.0:* LISTEN 90475/mysqld

8. Change the MySQL login password

[root@slave01 bin]# mysqladmin -u root -p password "abc123"

## root has an empty password after --initialize-insecure, so just press Enter at the prompt

Enter password:

mysqladmin: [Warning] Using a password on the command line interface can be insecure.

Warning: Since password will be sent to server in plain text, use ssl connection to ensure password safety.

9. Grant remote login

[root@slave01 bin]# mysql -u root -p

Enter password:    (type abc123)

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mydatabase |

| mysql |

| performance_schema |

| sys |

+--------------------+

5 rows in set (0.01 sec)

mysql> grant all privileges on *.* to 'root'@'%' identified by 'abc123';

## allow root to log in from any host, with all privileges

Query OK, 0 rows affected, 1 warning (0.00 sec)

mysql> quit

Bye

10. Configure the MySQL cluster with one master and two slaves

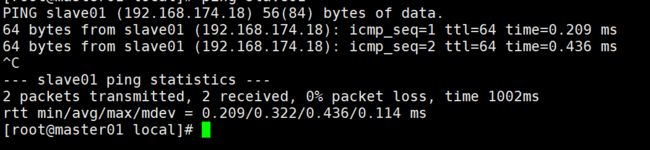

- On all servers, add IP-to-hostname mappings to /etc/hosts and test them with ping

[root@slave01 ~]# vim /etc/hosts

192.168.174.18 slave01

192.168.174.19 slave02

192.168.174.15 master01
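The same mappings must go on all three database servers, and it is easy to add them twice. Below is a hypothetical helper that adds each mapping only once, using the addresses from the table in section I.

```shell
#!/bin/bash
# Append "IP NAME" to a hosts-format file unless NAME is already listed there.
# Usage: add_host_entry FILE IP NAME
add_host_entry() {
    local file=$1 ip=$2 name=$3
    # awk exits 0 if an uncommented line already lists NAME as a hostname or alias
    if ! awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) f = 1 }
                           END { exit !f }' "$file"; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}
```

For example: `for pair in '192.168.174.15 master01' '192.168.174.18 slave01' '192.168.174.19 slave02'; do add_host_entry /etc/hosts $pair; done`. Here `$pair` is deliberately unquoted so that it splits into the IP and the name.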

11. Edit the MySQL main configuration file /etc/my.cnf on Master01, Slave01, and Slave02

(1) Master01 (master database)

[root@master01 bin]# vim /etc/my.cnf

## add under [mysqld]

log_bin = master-bin

log-slave-updates = true

[root@master01 bin]# systemctl restart mysqld.service

## restart MySQL

(2) slave01

[root@slave01 ~]# vim /etc/my.cnf

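## under [mysqld], change server-id (1 in the base configuration) to a unique value and add the lines below

server-id = 2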

log_bin = master-bin

relay-log = relay-log-bin

relay-log-index = slave-relay-bin.index

systemctl restart mysqld.service

(3)slave02

[root@slave02 bin]# vim /etc/my.cnf

## under [mysqld], change server-id to 3 and add the lines below

server-id = 3

log_bin = master-bin

relay-log = relay-log-bin

relay-log-index = slave-relay-bin.index

systemctl restart mysqld.service

(4) Create two symlinks on each of Master01, Slave01, and Slave02

Master01 (master database)

ln -s /usr/local/mysql/bin/{mysql,mysqlbinlog} /usr/sbin/

ll /usr/sbin/mysql*

lrwxrwxrwx. 1 root root 26 Aug 11 16:34 /usr/sbin/mysql -> /usr/local/mysql/bin/mysql

lrwxrwxrwx. 1 root root 32 Aug 11 16:34 /usr/sbin/mysqlbinlog -> /usr/local/mysql/bin/mysqlbinlog

slave01 (slave database)

[root@slave01 ~]# ln -s /usr/local/mysql/bin/{mysql,mysqlbinlog} /usr/sbin/

[root@slave01 ~]# ll /usr/sbin/mysql*

lrwxrwxrwx. 1 root root 26 Aug 11 16:35 /usr/sbin/mysql -> /usr/local/mysql/bin/mysql

lrwxrwxrwx. 1 root root 32 Aug 11 16:35 /usr/sbin/mysqlbinlog -> /usr/local/mysql/bin/mysqlbinlog

slave02

ln -s /usr/local/mysql/bin/{mysql,mysqlbinlog} /usr/sbin/

ll /usr/sbin/mysql*

lrwxrwxrwx. 1 root root 26 Aug 11 16:36 /usr/sbin/mysql -> /usr/local/mysql/bin/mysql

lrwxrwxrwx. 1 root root 32 Aug 11 16:36 /usr/sbin/mysqlbinlog -> /usr/local/mysql/bin/mysqlbinlog

Grant MySQL privileges on all database nodes (one master, two slaves); the grants are identical on all three

mysql -uroot -pabc123

grant replication slave on *.* to 'myslave'@'192.168.174.%' identified by '12345';

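## account the slaves use for replication

grant all privileges on *.* to 'mha'@'192.168.174.%' identified by 'manager';

## account used by MHA; also grant it by hostname, so slaves connecting to the master by hostname are not refused

grant all privileges on *.* to 'mha'@'master01' identified by 'manager';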

grant all privileges on *.* to 'mha'@'slave01' identified by 'manager';

grant all privileges on *.* to 'mha'@'slave02' identified by 'manager';

flush privileges;

## reload the privilege tables

**On the Master01 database, check the binary log file and position**

show master status;

+-------------------+----------+--------------+------------------+-------------------+

| File | Position | Binlog_Do_DB | Binlog_Ignore_DB | Executed_Gtid_Set |

+-------------------+----------+--------------+------------------+-------------------+

| master-bin.000001 | 1752 | | | |

+-------------------+----------+--------------+------------------+-------------------+

1 row in set (0.00 sec)

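On Slave01 and Slave02, run the sync command and check the replication status. Note: master_log_file and master_log_pos must match the File and Position shown by SHOW MASTER STATUS above, otherwise the slaves will not start replicating from the right point.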

change master to master_host='192.168.174.15',master_user='myslave',master_password='12345',master_log_file='master-bin.000001',master_log_pos=1752;


Query OK, 0 rows affected, 2 warnings (0.01 sec)
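Because `master_log_file` and `master_log_pos` must equal the `File` and `Position` reported by `SHOW MASTER STATUS`, it can help to read them from the master instead of copying them by hand. This sketch assumes the `mha`/`manager` account granted above, which has the privileges `SHOW MASTER STATUS` needs, and the `myslave` replication account. Both function names are hypothetical.

```shell
#!/bin/bash
# Build the CHANGE MASTER TO statement from binlog coordinates.
# Usage: change_master_sql FILE POSITION
change_master_sql() {
    printf "CHANGE MASTER TO master_host='192.168.174.15', master_user='myslave', master_password='12345', master_log_file='%s', master_log_pos=%d;\n" "$1" "$2"
}

# Print "FILE POSITION" as reported by SHOW MASTER STATUS on master01.
read_master_coords() {
    # -N: no column header, -B: tab-separated batch output
    mysql -h 192.168.174.15 -u mha -pmanager -N -B -e 'SHOW MASTER STATUS' | awk '{ print $1, $2 }'
}
```

On a slave, before `start slave`, run `set -- $(read_master_coords); change_master_sql "$1" "$2" | mysql -uroot -pabc123`.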

mysql> start slave;

Query OK, 0 rows affected (0.01 sec)

mysql> show slave status\G

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 192.168.174.15

Master_User: myslave

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: master-bin.000001

Read_Master_Log_Pos: 1752

Relay_Log_File: relay-log-bin.000002

Relay_Log_Pos: 321

Relay_Master_Log_File: master-bin.000001

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:
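The two lines that matter in this output are `Slave_IO_Running` and `Slave_SQL_Running`, and both must say `Yes`. A small hypothetical check that reads the `\G` output and succeeds only in that case:

```shell
#!/bin/bash
# Succeed only if both replication threads report "Yes" in SHOW SLAVE STATUS\G output.
# Usage: mysql -uroot -pabc123 -e 'SHOW SLAVE STATUS\G' | slave_ok
slave_ok() {
    # Split "   Field: value" lines on ": "; the $ anchors skip Slave_SQL_Running_State
    awk -F': ' '
        $1 ~ /Slave_IO_Running$/  { io = $2 }
        $1 ~ /Slave_SQL_Running$/ { sql = $2 }
        END { exit !(io == "Yes" && sql == "Yes") }'
}
```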

Both slaves (slave01 and slave02) must be set to read-only mode:

mysql> set global read_only=1;

Query OK, 0 rows affected (0.00 sec)

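Insert data on the master database and check that it replicates

On the master, in an existing database (select it first with use), create a table and insert a row: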

create table student(id int,name char(5),age int);

Query OK, 0 rows affected (0.01 sec)

mysql> insert into student values(1,'abc',22);

Query OK, 1 row affected (0.04 sec)

mysql> select * from student;

+------+------+------+

| id | name | age |

+------+------+------+

| 1 | abc | 22 |

+------+------+------+

1 row in set (0.00 sec)

12. Test: replication succeeded:

VII. Deploy MHA

1. Edit the configuration file on all three machines (master01, slave01, slave02)

[root@slave01 mha4mysql-manager-0.57]# vim /etc/my.cnf

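## comment out the utf8 lines with # (MHA runs mysqlbinlog, which reads the [client] group and rejects default-character-set)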
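The same edit can be done as a command. This assumes the lines to disable are the `default-character-set=utf8` entries in the `[client]` and `[mysql]` groups; `character-set-server` under `[mysqld]` is left alone. The function name is hypothetical. Restart mysqld afterwards.

```shell
#!/bin/bash
# Comment out every active default-character-set line in a my.cnf-style file.
# character-set-server under [mysqld] does not match and is kept.
comment_client_charset() {
    # GNU sed -i edits in place; & re-inserts the matched text after the "#"
    sed -i 's/^default-character-set/#&/' "$1"
}
```

Running it twice is harmless, because lines that already start with `#` no longer match. A typical call is `comment_client_charset /etc/my.cnf && systemctl restart mysqld`.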

2. Install MHA's dependencies on all servers (Master01, Slave01, Slave02), starting with the EPEL repository

[root@master01 bin]# yum install epel-release --nogpgcheck -y

## enter the following as one command; each trailing backslash continues it onto the next line

[root@master01 bin]# yum install -y perl-DBD-MySQL \
perl-Config-Tiny \
perl-Log-Dispatch \
perl-Parallel-ForkManager \
perl-ExtUtils-CBuilder \
perl-ExtUtils-MakeMaker \
perl-CPAN

3. Install the node component on all servers (Master01, Slave01, Slave02) first

tar zxvf mha4mysql-node-0.57.tar.gz

cd mha4mysql-node-0.57

perl Makefile.PL

make && make install

4. Install the manager component on the MHA manager node (slave01)

cd /opt

tar zxvf mha4mysql-manager-0.57.tar.gz

cd mha4mysql-manager-0.57

perl Makefile.PL

make && make install

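5. Configure password-less SSH authentication on all servers (Master01, Slave01, Slave02)

Use master01's password-less login to the database nodes slave01 and slave02 as the template, and set up each of the three machines the same way. Note that the manager node (slave01) must be able to SSH to all three MySQL machines, including itself, while master01 and slave02 only need to reach the other two.

(1) master01

ssh-keygen -t rsa # press Enter at every prompt

ssh-copy-id 192.168.174.18

ssh-copy-id 192.168.174.19

(2) slave01 (manager)

ssh-keygen -t rsa

ssh-copy-id 192.168.174.15

ssh-copy-id 192.168.174.18

ssh-copy-id 192.168.174.19

(3) slave02

ssh-keygen -t rsa # press Enter at every prompt

ssh-copy-id 192.168.174.15

ssh-copy-id 192.168.174.18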

6. Configure the MHA manager node (slave01)

cp -rp /opt/mha4mysql-manager-0.57/samples/scripts/ /usr/local/bin/

ll /usr/local/bin/

total 84

-r-xr-xr-x. 1 root root 16381 Aug 11 17:07 apply_diff_relay_logs

-r-xr-xr-x. 1 root root 4807 Aug 11 17:07 filter_mysqlbinlog

-r-xr-xr-x. 1 root root 1995 Aug 11 17:11 masterha_check_repl

-r-xr-xr-x. 1 root root 1779 Aug 11 17:11 masterha_check_ssh

-r-xr-xr-x. 1 root root 1865 Aug 11 17:11 masterha_check_status

-r-xr-xr-x. 1 root root 3201 Aug 11 17:11 masterha_conf_host

-r-xr-xr-x. 1 root root 2517 Aug 11 17:11 masterha_manager

-r-xr-xr-x. 1 root root 2165 Aug 11 17:11 masterha_master_monitor

-r-xr-xr-x. 1 root root 2373 Aug 11 17:11 masterha_master_switch

-r-xr-xr-x. 1 root root 5171 Aug 11 17:11 masterha_secondary_check

-r-xr-xr-x. 1 root root 1739 Aug 11 17:11 masterha_stop

-r-xr-xr-x. 1 root root 8261 Aug 11 17:07 purge_relay_logs

-r-xr-xr-x. 1 root root 7525 Aug 11 17:07 save_binary_logs

drwxr-xr-x. 2 mysql mysql 103 May 31 2015 scripts

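(1) Copy the VIP-management script for automatic failover from the scripts directory above into /usr/local/bin; the master_ip_failover script manages the VIP during failover

cp /usr/local/bin/scripts/master_ip_failover /usr/local/bin/

vim /usr/local/bin/master_ip_failover

## replace the sample content with the script below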

#!/usr/bin/env perl

use strict;

use warnings FATAL => 'all';

use Getopt::Long;

my (

$command, $ssh_user, $orig_master_host, $orig_master_ip,

$orig_master_port, $new_master_host, $new_master_ip, $new_master_port

);

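############################# added section #########################################

my $vip = '20.0.0.10'; # the VIP address; 20.0.0.10 belongs to another lab network, so set an unused address in 192.168.174.0/24 here

my $brdc = '20.0.0.255'; # broadcast address of the VIP (used only by the commented-out ip commands below)

my $ifdev = 'ens33'; # NIC the VIP is bound to

my $key = '1'; # alias number of the virtual interface that carries the VIP

my $ssh_start_vip = "/sbin/ifconfig ens33:$key $vip"; # expands to: /sbin/ifconfig ens33:1 20.0.0.10

my $ssh_stop_vip = "/sbin/ifconfig ens33:$key down"; # expands to: /sbin/ifconfig ens33:1 down

my $exit_code = 0; # default exit status is 0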

#my $ssh_start_vip = "/usr/sbin/ip addr add $vip/24 brd $brdc dev $ifdev label $ifdev:$key;/usr/sbin/arping -q -A -c 1 -I $ifdev $vip;iptables -F;";

#my $ssh_stop_vip = "/usr/sbin/ip addr del $vip/24 dev $ifdev label $ifdev:$key";

##################################################################################

GetOptions(

'command=s' => \$command,

'ssh_user=s' => \$ssh_user,

'orig_master_host=s' => \$orig_master_host,

'orig_master_ip=s' => \$orig_master_ip,

'orig_master_port=i' => \$orig_master_port,

'new_master_host=s' => \$new_master_host,

'new_master_ip=s' => \$new_master_ip,

'new_master_port=i' => \$new_master_port,

);

exit &main();

sub main {

print "\n\nIN SCRIPT TEST====$ssh_stop_vip==$ssh_start_vip===\n\n";

if ( $command eq "stop" || $command eq "stopssh" ) {

my $exit_code = 1;

eval {

print "Disabling the VIP on old master: $orig_master_host \n";

&stop_vip();

$exit_code = 0;

};

if ($@) {

warn "Got Error: $@\n";

exit $exit_code;

}

exit $exit_code;

}

elsif ( $command eq "start" ) {

my $exit_code = 10;

eval {

print "Enabling the VIP - $vip on the new master - $new_master_host \n";

&start_vip();

$exit_code = 0;

};

if ($@) {

warn $@;

exit $exit_code;

}

exit $exit_code;

}

elsif ( $command eq "status" ) {

print "Checking the Status of the script.. OK \n";

exit 0;

}

else {

&usage();

exit 1;

}

}

sub start_vip() {

`ssh $ssh_user\@$new_master_host \" $ssh_start_vip \"`;

}

## A simple system call that disable the VIP on the old_master

sub stop_vip() {

`ssh $ssh_user\@$orig_master_host \" $ssh_stop_vip \"`;

}

sub usage {

print

"Usage: master_ip_failover --command=start|stop|stopssh|status --orig_master_host=host --orig_master_ip=ip --orig_master_port=port --new_master_host=host --new_master_ip=ip --new_master_port=port\n";

}

(2) Create the MHA directory, copy the sample configuration file, and use app1.cnf to manage the MySQL node servers

mkdir /etc/masterha

cp /opt/mha4mysql-manager-0.57/samples/conf/app1.cnf /etc/masterha

vim /etc/masterha/app1.cnf

# delete the existing content, paste the following, and adjust the node IP addresses

[server default]

manager_log=/var/log/masterha/app1/manager.log

manager_workdir=/var/log/masterha/app1

master_binlog_dir=/usr/local/mysql/data

master_ip_failover_script=/usr/local/bin/master_ip_failover

master_ip_online_change_script=/usr/local/bin/master_ip_online_change

password=manager

ping_interval=1

remote_workdir=/tmp

repl_password=12345

repl_user=myslave

secondary_check_script=/usr/local/bin/masterha_secondary_check -s 192.168.174.18 -s 192.168.174.19

shutdown_script=""

ssh_user=root

user=mha

[server1]

hostname=192.168.174.15

port=3306

[server2]

candidate_master=1

check_repl_delay=0

hostname=192.168.174.18

port=3306

[server3]

hostname=192.168.174.19

port=3306

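(3) On first setup, bring up the virtual IP manually on the master server. It must be the same address as $vip in master_ip_failover, not the network address 192.168.174.0

VIP=20.0.0.10   # must equal $vip in /usr/local/bin/master_ip_failover

/sbin/ifconfig ens33:1 "$VIP"/24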

ifconfig

(4) On the manager node, test password-less SSH; if everything is correct, the output ends with "successfully"

masterha_check_ssh -conf=/etc/masterha/app1.cnf

Fri Aug 11 17:29:04 2023 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.

Fri Aug 11 17:29:04 2023 - [info] Reading application default configuration from /etc/masterha/app1.cnf..

Fri Aug 11 17:29:04 2023 - [info] Reading server configuration from /etc/masterha/app1.cnf..

Fri Aug 11 17:29:04 2023 - [info] Starting SSH connection tests..

Fri Aug 11 17:29:05 2023 - [debug]

Fri Aug 11 17:29:04 2023 - [debug] Connecting via SSH from root@192.168.174.15(192.168.174.15:22) to root@192.168.174.18(192.168.174.18:22)..

Fri Aug 11 17:29:05 2023 - [debug] ok.

Fri Aug 11 17:29:05 2023 - [debug] Connecting via SSH from root@192.168.174.15(192.168.174.15:22) to root@192.168.174.19(192.168.174.19:22)..

Fri Aug 11 17:29:05 2023 - [debug] ok.

Fri Aug 11 17:29:06 2023 - [debug]

Fri Aug 11 17:29:04 2023 - [debug] Connecting via SSH from root@192.168.174.18(192.168.174.18:22) to root@192.168.174.15(192.168.174.15:22)..

Fri Aug 11 17:29:05 2023 - [debug] ok.

Fri Aug 11 17:29:05 2023 - [debug] Connecting via SSH from root@192.168.174.18(192.168.174.18:22) to root@192.168.174.19(192.168.174.19:22)..

Fri Aug 11 17:29:06 2023 - [debug] ok.

Fri Aug 11 17:29:06 2023 - [debug]

Fri Aug 11 17:29:05 2023 - [debug] Connecting via SSH from root@192.168.174.19(192.168.174.19:22) to root@192.168.174.15(192.168.174.15:22)..

Fri Aug 11 17:29:06 2023 - [debug] ok.

Fri Aug 11 17:29:06 2023 - [debug] Connecting via SSH from root@192.168.174.19(192.168.174.19:22) to root@192.168.174.18(192.168.174.18:22)..

Fri Aug 11 17:29:06 2023 - [debug] ok.

Fri Aug 11 17:29:06 2023 - [info] All SSH connection tests passed successfully.

(5) On the manager node (slave01), make the failover script executable and check the replication setup

[root@slave01 mha4mysql-manager-0.57]# cd /usr/local/bin/

[root@slave01 bin]# ls

apply_diff_relay_logs masterha_check_status masterha_master_switch purge_relay_logs

filter_mysqlbinlog masterha_conf_host masterha_secondary_check save_binary_logs

masterha_check_repl masterha_manager masterha_stop scripts

masterha_check_ssh masterha_master_monitor master_ip_failover

[root@slave01 bin]# chmod +x master_ip_failover


[root@slave01 bin]# masterha_check_repl -conf=/etc/masterha/app1.cnf

(6) On the manager node (slave01), start MHA and check the MHA status and log

nohup masterha_manager --conf=/etc/masterha/app1.cnf --remove_dead_master_conf --ignore_last_failover < /dev/null > /var/log/masterha/app1/manager.log 2>&1 &

[1] 66541

masterha_check_status --conf=/etc/masterha/app1.cnf

app1 (pid:66541) is running(0:PING_OK), master:192.168.174.15

cat /var/log/masterha/app1/manager.log | grep "current master"

Fri Aug 11 17:33:43 2023 - [info] Checking SSH publickey authentication settings on the current master..

192.168.174.15(192.168.174.15:3306) (current master)

VIII. Failover test

1. Stop the mysql service on Master01

systemctl stop mysqld.service

ifconfig

2. On the manager server (slave01), watch the log: the switchover succeeded

cat /var/log/masterha/app1/manager.log

3. Check slave01: the VIP has moved to the new master and the standby database is now in use

tail -f /var/log/masterha/app1/manager.log
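When reading the manager log after a failover, two lines are usually of interest. One is the `(current master)` line written at startup. The other announces that the master failover finished. The hypothetical helpers below grep for each. The wording `Master failover to ... completed successfully` is an assumption based on MHA 0.57's failover report, so check it against your own `manager.log`.

```shell
#!/bin/bash
# Print the host:port that MHA last reported as "(current master)" in a manager log.
current_master() {
    grep '(current master)' "$1" | tail -n 1 |
        sed -E 's/.*\(([0-9.]+:[0-9]+)\) \(current master\).*/\1/'
}

# Print the last "master failover completed" line, if any (wording is an assumption).
failover_done() {
    grep -E 'Master failover to .* completed successfully' "$1" | tail -n 1
}
```

Before the test, `current_master /var/log/masterha/app1/manager.log` should print `192.168.174.15:3306`. After `systemctl stop mysqld.service` on master01, `failover_done` should name the new master.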