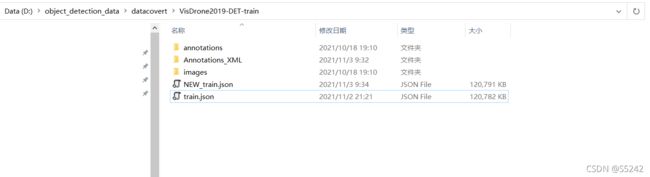

Converting the VisDrone dataset to COCO format and training it with mmdetection (converted JSON files included)

VisDrone is an object detection dataset captured by drones, and it shows up in many object detection papers.

The labels 0 to 11 are, in order: 'ignored regions', 'pedestrian', 'people', 'bicycle', 'car', 'van', 'truck', 'tricycle', 'awning-tricycle', 'bus', 'motor', 'others'.
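The conversion code below indexes each annotation line by position, so it helps to know the field order. As I understand the VisDrone-DET format, every line of an annotation txt file is `bbox_left,bbox_top,bbox_width,bbox_height,score,object_category,truncation,occlusion`, where `object_category` is the 0-11 label listed above. The helper `parse_visdrone_line` is a hypothetical illustration, not part of any toolkit, and the sample line is made up.

```python
# Assumed field order of one VisDrone-DET annotation line
FIELDS = ('bbox_left', 'bbox_top', 'bbox_width', 'bbox_height',
          'score', 'object_category', 'truncation', 'occlusion')

CLASS_NAME = ['ignored regions', 'pedestrian', 'people', 'bicycle', 'car', 'van',
              'truck', 'tricycle', 'awning-tricycle', 'bus', 'motor', 'others']


def parse_visdrone_line(line):
    """Parse one annotation line into named integer fields plus the class name."""
    # strip() drops the newline; the filter tolerates a trailing comma
    values = [int(v) for v in line.strip().split(',') if v != '']
    record = dict(zip(FIELDS, values[:len(FIELDS)]))
    record['class_name'] = CLASS_NAME[record['object_category']]
    return record


print(parse_visdrone_line('100,200,30,60,1,4,0,1'))
```

Fields 5, 6 and 7 are exactly the `line[5]`, `line[6]` and `line[7]` used by the txt-to-XML script.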

To train on this dataset with mmdetection, I first need to convert it to COCO format.

This takes two steps:

1. Convert the txt labels in `annotations` into XML files

The places you need to change are marked with comments; mostly you just adjust a few paths.

```python
import os

from PIL import Image

# Change root_dir below to your own path
root_dir = r"D:\object_detection_data\datacovert\VisDrone2019-DET-val/"
annotations_dir = root_dir + "annotations/"
image_dir = root_dir + "images/"
xml_dir = root_dir + "Annotations_XML/"  # the XML files are saved to Annotations_XML
os.makedirs(xml_dir, exist_ok=True)      # create the folder if it does not exist yet

# Replace the classes below with your own; the script also works for other datasets
class_name = ['ignored regions', 'pedestrian', 'people', 'bicycle', 'car', 'van',
              'truck', 'tricycle', 'awning-tricycle', 'bus', 'motor', 'others']

for filename in os.listdir(annotations_dir):
    image_name = os.path.splitext(filename)[0]
    # If your images are PNG, change ".jpg" to ".png" (also in <filename> below)
    with Image.open(image_dir + image_name + ".jpg") as img:
        width, height = img.size  # PIL reports (width, height)
    xml_name = xml_dir + image_name + '.xml'
    with open(annotations_dir + filename, 'r') as fin, open(xml_name, 'w') as fout:
        fout.write('<annotation>\n')
        fout.write('\t<folder>VOC2007</folder>\n')
        fout.write('\t<filename>' + image_name + '.jpg</filename>\n')
        fout.write('\t<source>\n')
        fout.write('\t\t<database>VisDrone2019-DET</database>\n')
        fout.write('\t\t<annotation>VisDrone2019-DET</annotation>\n')
        fout.write('\t\t<image>flickr</image>\n')
        fout.write('\t\t<flickrid>Unspecified</flickrid>\n')
        fout.write('\t</source>\n')
        fout.write('\t<owner>\n')
        fout.write('\t\t<flickrid>LJ</flickrid>\n')
        fout.write('\t\t<name>LJ</name>\n')
        fout.write('\t</owner>\n')
        fout.write('\t<size>\n')
        fout.write('\t\t<width>' + str(width) + '</width>\n')
        fout.write('\t\t<height>' + str(height) + '</height>\n')
        fout.write('\t\t<depth>3</depth>\n')
        fout.write('\t</size>\n')
        fout.write('\t<segmented>0</segmented>\n')
        for line in fin:
            # bbox_left,bbox_top,bbox_width,bbox_height,score,object_category,truncation,occlusion
            line = line.strip().split(',')
            if len(line) < 8:  # skip blank or malformed lines
                continue
            fout.write('\t<object>\n')
            fout.write('\t\t<name>' + class_name[int(line[5])] + '</name>\n')
            fout.write('\t\t<pose>Unspecified</pose>\n')
            fout.write('\t\t<truncated>' + line[6] + '</truncated>\n')
            # the occlusion level is stored as <difficult>; step 2 does not read it
            fout.write('\t\t<difficult>' + str(int(line[7])) + '</difficult>\n')
            fout.write('\t\t<bndbox>\n')
            fout.write('\t\t\t<xmin>' + line[0] + '</xmin>\n')
            fout.write('\t\t\t<ymin>' + line[1] + '</ymin>\n')
            # pay attention to this point! (0-based): xmax = x + w - 1, ymax = y + h - 1
            fout.write('\t\t\t<xmax>' + str(int(line[0]) + int(line[2]) - 1) + '</xmax>\n')
            fout.write('\t\t\t<ymax>' + str(int(line[1]) + int(line[3]) - 1) + '</ymax>\n')
            fout.write('\t\t</bndbox>\n')
            fout.write('\t</object>\n')
        fout.write('</annotation>')
```
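The "pay attention to this point" comment marks the one subtle step in the box arithmetic. Step 1 writes inclusive corners `xmax = x + w - 1`, and the xml2json step subtracts 1 from `xmin`/`ymin` while keeping `xmax`/`ymax` as they are. The net effect is a COCO box `[x - 1, y - 1, w, h]`: width and height survive unchanged, but the box moves one pixel up and left, i.e. the pipeline treats VisDrone coordinates as 1-based the way VOC does. The two helpers below are hypothetical and only trace that arithmetic; whether VisDrone coordinates really are 1-based is an assumption worth checking against the official toolkit.

```python
def visdrone_to_voc(x, y, w, h):
    """Step 1: VisDrone (left, top, width, height) -> VOC inclusive corners."""
    return x, y, x + w - 1, y + h - 1


def voc_to_coco(xmin, ymin, xmax, ymax):
    """Step 2, mirroring convert(): xmin/ymin minus 1, xmax/ymax kept as-is."""
    xmin, ymin = xmin - 1, ymin - 1
    return [xmin, ymin, xmax - xmin, ymax - ymin]


voc = visdrone_to_voc(100, 200, 30, 60)
coco = voc_to_coco(*voc)
print(voc, coco)
```

If you decide the coordinates are 0-based, dropping both the `- 1` in step 1 and the `- 1` in step 2 keeps the same width and height without the shift.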

2. Convert the XML files to COCO JSON (xml2json)

```python
#!/usr/bin/python
# Input XML is in VOC format; output JSON is in COCO format
import sys, os, json, glob
import xml.etree.ElementTree as ET

INITIAL_BBOXIds = 1

# I only want to detect these ten classes; classes 0 and 11 are not converted.
PREDEF_CLASSE = {'pedestrian': 1, 'people': 2,
                 'bicycle': 3, 'car': 4, 'van': 5, 'truck': 6, 'tricycle': 7,
                 'awning-tricycle': 8, 'bus': 9, 'motor': 10}


def get(root, name):
    return root.findall(name)


def get_and_check(root, name, length):
    vars = root.findall(name)
    if len(vars) == 0:
        raise NotImplementedError('Can not find %s in %s.' % (name, root.tag))
    if length > 0 and len(vars) != length:
        raise NotImplementedError('The size of %s is supposed to be %d, but is %d.'
                                  % (name, length, len(vars)))
    if length == 1:
        vars = vars[0]
    return vars


def convert(xml_paths, out_json):
    json_dict = {'images': [], 'type': 'instances',
                 'categories': [], 'annotations': []}
    categories = PREDEF_CLASSE
    bbox_id = INITIAL_BBOXIds
    for image_id, xml_f in enumerate(xml_paths):
        # progress output
        sys.stdout.write('\r>> Converting image %d/%d' % (image_id + 1, len(xml_paths)))
        sys.stdout.flush()
        tree = ET.parse(xml_f)
        root = tree.getroot()
        filename = get_and_check(root, 'filename', 1).text
        size = get_and_check(root, 'size', 1)
        width = int(get_and_check(size, 'width', 1).text)
        height = int(get_and_check(size, 'height', 1).text)
        image = {'file_name': filename, 'height': height,
                 'width': width, 'id': image_id + 1}
        json_dict['images'].append(image)
        # Segmentation is currently not supported
        for obj in get(root, 'object'):
            category = get_and_check(obj, 'name', 1).text
            # Skip classes outside PREDEF_CLASSE ('ignored regions', 'others');
            # giving them new ids here would put them back into the JSON
            if category not in categories:
                continue
            category_id = categories[category]
            bbox = get_and_check(obj, 'bndbox', 1)
            xmin = int(get_and_check(bbox, 'xmin', 1).text) - 1
            ymin = int(get_and_check(bbox, 'ymin', 1).text) - 1
            xmax = int(get_and_check(bbox, 'xmax', 1).text)
            ymax = int(get_and_check(bbox, 'ymax', 1).text)
            if xmax <= xmin or ymax <= ymin:
                continue
            o_width = xmax - xmin
            o_height = ymax - ymin
            ann = {'area': o_width * o_height, 'iscrowd': 0, 'image_id': image_id + 1,
                   'bbox': [xmin, ymin, o_width, o_height], 'category_id': category_id,
                   'id': bbox_id, 'ignore': 0, 'segmentation': []}
            json_dict['annotations'].append(ann)
            bbox_id = bbox_id + 1
    sys.stdout.write('\n')
    for cate, cid in categories.items():
        cat = {'supercategory': 'none', 'id': cid, 'name': cate}
        json_dict['categories'].append(cat)
    # indent=4 is easier to read but slower and larger; drop it for a fast, compact file
    with open(out_json, 'w') as json_file:
        json.dump(json_dict, json_file, indent=4)


if __name__ == '__main__':
    # Change this to the folder that holds the XML files
    xml_path = r'D:\object_detection_data\datacovert\VisDrone2019-DET-val/Annotations_XML/'
    xml_file = sorted(glob.glob(os.path.join(xml_path, '*.xml')))
    # Change this to where the generated JSON should be saved
    convert(xml_file, r'D:\object_detection_data\datacovert\VisDrone2019-DET-val/NEW_val.json')
```
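Before training it is worth sanity-checking the generated JSON. The sketch below uses only the standard library (the function names are my own, not from pycocotools or mmdetection). It counts annotations per class and flags annotations with an empty box, an unknown image id or an undeclared category. If you only want the ten classes, `num_categories` should be 10; a larger number means extra classes such as 'ignored regions' or 'others' slipped into the JSON.

```python
import json
from collections import Counter


def load_coco(json_path):
    """Load a COCO-style annotation file into a dict."""
    with open(json_path, encoding='utf-8') as f:
        return json.load(f)


def summarize_coco(data):
    """Per-class annotation counts plus ids of annotations that look broken."""
    id_to_name = {c['id']: c['name'] for c in data['categories']}
    image_ids = {img['id'] for img in data['images']}
    per_class = Counter(id_to_name.get(a['category_id'], '<unknown>')
                        for a in data['annotations'])
    bad_ids = [a['id'] for a in data['annotations']
               if a['bbox'][2] <= 0 or a['bbox'][3] <= 0   # empty box
               or a['image_id'] not in image_ids           # dangling image id
               or a['category_id'] not in id_to_name]      # undeclared class
    return {'num_images': len(data['images']),
            'num_annotations': len(data['annotations']),
            'num_categories': len(id_to_name),
            'per_class': dict(per_class),
            'bad_annotation_ids': bad_ids}


# Usage (path is the JSON written by the script above):
# summary = summarize_coco(load_coco('NEW_val.json'))
# print(summary['num_categories'], summary['per_class'])
```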

Training notes:

I used the `configs/faster_rcnn/faster_rcnn_r50_fpn_1x_coco.py` model.

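First download the matching pretrained weights, then change the number of output units of the final fully connected layers in the box head.

The script below works for mmdetection two-stage detectors whose box head uses `fc_cls`/`fc_reg`; change the names of the checkpoint it loads and the one it saves, then run it.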

```python
import torch

# COCO-pretrained Faster R-CNN checkpoint downloaded from the mmdetection model zoo
pretrained_weights = torch.load('checkpoints/faster_rcnn_r50_fpn_1x_coco_20200130-047c8118.pth')

num_class = 10  # number of VisDrone classes to detect
state_dict = pretrained_weights['state_dict']

# Classification layer: num_class + 1 outputs (the extra one is background)
state_dict['roi_head.bbox_head.fc_cls.weight'].resize_(num_class + 1, 1024)
state_dict['roi_head.bbox_head.fc_cls.bias'].resize_(num_class + 1)
# Regression layer: 4 box deltas per class
state_dict['roi_head.bbox_head.fc_reg.weight'].resize_(num_class * 4, 1024)
state_dict['roi_head.bbox_head.fc_reg.bias'].resize_(num_class * 4)

torch.save(pretrained_weights, "faster_rcnn_r50_fpn_1x_%d.pth" % num_class)
```

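Then load this modified checkpoint for training, for example by pointing `load_from` in the config at the saved file.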
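To see what the resize actually changes: with class-specific box regression, the Faster R-CNN box head has `num_classes + 1` classification outputs (the extra one is background) and `num_classes * 4` regression outputs, both fed by a 1024-d feature. The hypothetical helper below only computes those target shapes, so you can compare the COCO checkpoint (80 classes) with the ten-class VisDrone setting.

```python
def bbox_head_shapes(num_classes, in_features=1024):
    """Weight/bias shapes of fc_cls and fc_reg in a class-specific Faster R-CNN box head."""
    return {
        'fc_cls.weight': (num_classes + 1, in_features),  # +1 for background
        'fc_cls.bias': (num_classes + 1,),
        'fc_reg.weight': (num_classes * 4, in_features),  # 4 box deltas per class
        'fc_reg.bias': (num_classes * 4,),
    }


coco = bbox_head_shapes(80)      # original COCO checkpoint
visdrone = bbox_head_shapes(10)  # after the resize script with num_class = 10
for key in coco:
    print(f'{key}: {coco[key]} -> {visdrone[key]}')
```

Because `resize_` shrinks a tensor in place by keeping its leading elements, the new rows are just the first rows of the old COCO layer; they are a starting point that still has to be fine-tuned, not meaningful VisDrone classifiers.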

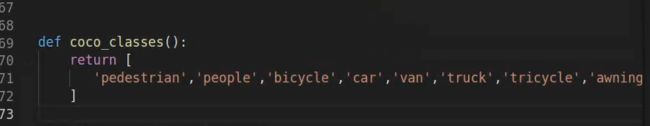

Here I only detect ten classes; the classes with labels 0 and 11 are not detected.

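Next, change the three places that depend on the classes:

- `configs/_base_/models/faster_rcnn_r50_fpn.py`: set `num_classes=10`
- `mmdet/core/evaluation/class_names.py`: the class list returned by `coco_classes()`
- `mmdet/datasets/coco.py`: the `CLASSES` tuple of `CocoDataset`

In the last two files, replace the COCO class names with the VisDrone classes to be detected.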
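These edits have to agree with each other and with the JSON: `num_classes` must equal the number of class names, and every name must match a `name` in the JSON `categories`. A minimal check, assuming you paste in the same tuple you put into `mmdet/datasets/coco.py`; `check_class_config` is a hypothetical helper, not an mmdetection function.

```python
# The ten class names, in the same order as the ids 1..10 in PREDEF_CLASSE
VISDRONE_CLASSES = ('pedestrian', 'people', 'bicycle', 'car', 'van',
                    'truck', 'tricycle', 'awning-tricycle', 'bus', 'motor')
NUM_CLASSES = 10  # the num_classes value set in the model config


def check_class_config(classes, num_classes, json_categories):
    """Return a list of problems; an empty list means the class edits are consistent."""
    problems = []
    if len(classes) != num_classes:
        problems.append(f'num_classes={num_classes} but {len(classes)} class names')
    json_names = {c['name'] for c in json_categories}
    missing = [name for name in classes if name not in json_names]
    if missing:
        problems.append(f'not found in JSON categories: {missing}')
    return problems
```

Pass it the `categories` list from your generated JSON; any entry in the returned list points at the edit that is out of sync.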

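After changing the classes, run the command below to check that the labels are correct. If they are, you can start training.

```shell
python tools/misc/browse_dataset.py configs/faster_rcnn/faster_rcnn_r50_fpn_1x_coco.py
```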

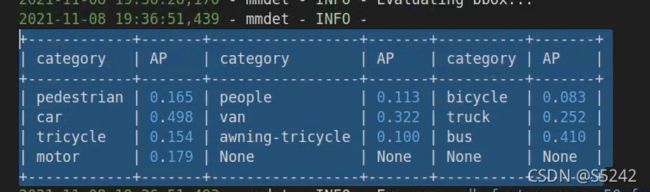

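The scenes in this dataset are complex, and the mAP on small objects is very low. The dataset also splits people into `pedestrian` and `people`; in my view the two look too similar and are easily confused, so both have very low mAP. I think there are somewhat too many classes.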
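To see how much of the data falls into COCO's "small" range, you can bucket the `area` field that the xml2json script writes (box width times height) using the COCO evaluation thresholds of 32² and 96² pixels. This is a sketch with names of my own choosing; I have not run it on the real VisDrone JSON, so no numbers are claimed here.

```python
from collections import Counter

# COCO size ranges by box area in pixels; COCOeval's boundary handling
# differs slightly, this split is simply half-open
SMALL_MAX = 32 ** 2
MEDIUM_MAX = 96 ** 2


def size_bucket(area):
    """Map a box area to COCO's small / medium / large bucket."""
    if area < SMALL_MAX:
        return 'small'
    if area < MEDIUM_MAX:
        return 'medium'
    return 'large'


def size_distribution(coco_dict):
    """Count annotations per size bucket in a loaded COCO-style dict."""
    return Counter(size_bucket(a['area']) for a in coco_dict['annotations'])
```

Running `size_distribution` on the loaded validation JSON shows directly how much of the per-size mAP is driven by small objects.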

I trained with an input size of 1080x720 for ten epochs; the results are below. The mAP is disappointing.
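The post does not show how the input size and epoch count were set. One way to do it with mmdetection 2.x config inheritance is an override file like the one below. The file name, `data_root`, directory layout and `NEW_train.json` are hypothetical; the pipeline copies the 2.x COCO defaults with only the `Resize` scale changed. `runner` applies to newer 2.x releases (older ones used `total_epochs = 10` instead). The test pipeline keeps the base (1333, 800) scale unless you override it as well.

```python
# Hypothetical file: configs/faster_rcnn/faster_rcnn_r50_fpn_1x_visdrone.py
_base_ = './faster_rcnn_r50_fpn_1x_coco.py'

data_root = 'data/VisDrone/'  # hypothetical layout, adjust to your own paths
img_norm_cfg = dict(
    mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=True)
train_pipeline = [
    dict(type='LoadImageFromFile'),
    dict(type='LoadAnnotations', with_bbox=True),
    dict(type='Resize', img_scale=(1080, 720), keep_ratio=True),  # training size from this post
    dict(type='RandomFlip', flip_ratio=0.5),
    dict(type='Normalize', **img_norm_cfg),
    dict(type='Pad', size_divisor=32),
    dict(type='DefaultFormatBundle'),
    dict(type='Collect', keys=['img', 'gt_bboxes', 'gt_labels']),
]
data = dict(
    train=dict(
        ann_file=data_root + 'annotations/NEW_train.json',
        img_prefix=data_root + 'VisDrone2019-DET-train/images/',
        pipeline=train_pipeline),
    val=dict(
        ann_file=data_root + 'annotations/NEW_val.json',
        img_prefix=data_root + 'VisDrone2019-DET-val/images/'))
load_from = 'faster_rcnn_r50_fpn_1x_10.pth'  # output of the weight-resizing script
runner = dict(type='EpochBasedRunner', max_epochs=10)
```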

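Below are a few test results picked at random.

In the image above, the objects in my red box were clearly missed, which shows that small-object detection is weak.

That's about it.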

To help others get started with this dataset quickly, I've uploaded the converted JSON files to Baidu Cloud.

Link: https://pan.baidu.com/s/1BnpYSsViBnuT7FJq-nzxWw

Extraction code: 1111