在阿里云Serverless K8S集群上部署Spark任务并连接OSS(详细步骤)

在阿里云ASK集群上部署Spark任务并连接OSS

简介

ASK是阿里云的一个产品,属于Serverless Kubernetes 集群,这次实验是要在ASK集群上运行Spark计算任务(以WordCount为例),另外为了能让计算和存储分离,我使用了阿里云OSS来存放数据。

(连接OSS这块找了好多资料都不全,在本地可以运行的代码一放在集群就报错,遇到很多bug才终于弄好了,记录下来希望对以后的小伙伴有帮助)

环境准备

本机需要安装:

JAVA jdk1.8

IDEA

Maven

Docker(安装在Linux或者Windows)

需要在阿里云开通的服务有:

ASK集群:https://www.aliyun.com/product/cs/ask?spm=5176.166170.J_8058803260.27.586451643ru45z

OSS对象存储: https://www.aliyun.com/product/oss?spm=5176.166170.J_8058803260.32.58645164XpoJle

ACR镜像服务:https://www.aliyun.com/product/acr?spm=5176.19720258.J_8058803260.31.281e2c4astzVxy

一、在OSS中准备数据

oss://spark-on-k8s-1/hp1.txt

(按照这种【oss://桶名/路径/文件名】格式改成你自己的,后面代码要用到)

二、编写代码

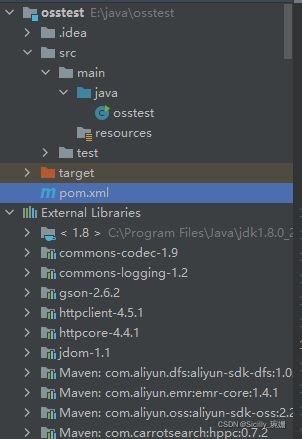

1.使用IDEA新建一个maven项目

目录结构如下:

需要写的就只有pom.xml文件和java下的osstest.java文件。下面会给出代码:

(1)osstest.java

这是一份词频统计(wordcount)的代码。步骤是:

- 连接OSS,获取到实现准备好的hp1.txt文件

- 对hp1.txt进行词频统计

- 把最终结果传回到OSS上

具体实现如下:

import java.util.ArrayList;

import java.util.Arrays;

import java.util.List;

import java.util.stream.Collectors;

import org.apache.spark.SparkConf;

import org.apache.spark.api.java.JavaPairRDD;

import org.apache.spark.api.java.JavaRDD;

import org.apache.spark.api.java.JavaSparkContext;

import scala.Tuple2;

public class osstest {

public static void main(String[] args) {

// 这些都是OSS的依赖包,不写的话在本地能跑,放上集群会报错

List<String> jarList = new ArrayList<>();

jarList.add("emr-core-1.4.1.jar");

jarList.add("aliyun-sdk-oss-3.4.1.jar");

jarList.add("commons-codec-1.9.jar");

jarList.add("jdom-1.1.jar");

jarList.add("commons-logging-1.2.jar");

jarList.add("httpclient-4.5.1.jar");

jarList.add("httpcore-4.4.1.jar");

String ossDepPath = jarList.stream()

.map(s -> "/opt/spark/jars/" + s)

.collect(Collectors.joining(","));

SparkConf conf = new SparkConf().setAppName("JavaWordCount");

// 如果在本地IDEA执行,需要打开下面一行代码

// .setMaster("local");

conf.set("spark.hadoop.fs.oss.impl", "com.aliyun.fs.oss.nat.NativeOssFileSystem");

// 如果在本地IDEA执行,需要打开下面一行代码

// conf.set("spark.hadoop.mapreduce.job.run-local", "true");

conf.set("spark.hadoop.fs.oss.endpoint", "oss-cn-shenzhen.aliyuncs.com");// 改成你存放文本的OSS桶的地区

conf.set("spark.hadoop.fs.oss.accessKeyId", "*****"); // 改成你自己的accessKeyId

conf.set("spark.hadoop.fs.oss.accessKeySecret", "******");// 改成你自己的accessKeySecret

// 需要指定oss依赖的路径,否则会报错

conf.set("spark.hadoop.fs.oss.core.dependency.path", ossDepPath);

System.out.println("----------开始-----------");

//创建sparkContext

JavaSparkContext sc = new JavaSparkContext(conf);

JavaRDD<String> lines = sc.textFile("oss://spark-on-k8s-1/hp1.txt", 10); // 改成你自己的读取文件路径

System.out.println("-----------读取数据"+lines.count()+"行。----------------");

JavaRDD<String> words = lines.flatMap(line -> Arrays.asList(line.split(" ")).iterator());

System.out.println("-----------3:"+words);

//将单词和一组合

JavaPairRDD<String, Integer> wordAndOne = words.mapToPair(w -> new Tuple2<>(w, 1));

System.out.println("-----------4:"+wordAndOne);

//聚合

JavaPairRDD<String, Integer> reduced = wordAndOne.reduceByKey((m, n) -> m + n);

System.out.println("-----------5:"+reduced);

//调整顺序

JavaPairRDD<Integer, String> swaped = reduced.mapToPair(tp -> tp.swap());

System.out.println("-----------6"+swaped);

//排序

JavaPairRDD<Integer, String> sorted = swaped.sortByKey(false);

System.out.println("-----------7"+sorted);

//调整顺序

JavaPairRDD<String, Integer> result = sorted.mapToPair(tp -> tp.swap());

System.out.println("-----------8"+result);

//将结果保存到oss

result.saveAsTextFile("oss://spark-on-k8s-1/hp1-result-1");// 改成你自己的输出文件路径

System.out.println("-----------结束------------------------");

//释放资源

sc.stop();

}

}

因此以上代码需要修改的地方有:

- 存储桶的endpoint

- accessKeyId

- accessKeySecret

- 输入输出的桶地址

(2)pom.xml

pom.xml声明了Spark和OSS的一些依赖。

注意EMR虽然是阿里云的另一项服务,在这里我们不需要开通它。但少了com.aliyun.emr这个依赖就不能访问到oss://开头的地址,所以要加进pom.xml里。

maven-assembly-plugin是用来自定义打包的。

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0modelVersion>

<groupId>org.examplegroupId>

<artifactId>osstestartifactId>

<version>2.0-SNAPSHOTversion>

<dependencies>

<dependency>

<groupId>com.aliyun.ossgroupId>

<artifactId>aliyun-sdk-ossartifactId>

<version>2.2.0version>

dependency>

<dependency>

<groupId>com.aliyun.dfsgroupId>

<artifactId>aliyun-sdk-dfsartifactId>

<version>1.0.3version>

dependency>

<dependency>

<groupId>com.aliyun.emrgroupId>

<artifactId>emr-coreartifactId>

<version>1.4.1version>

dependency>

<dependency>

<groupId>org.apache.sparkgroupId>

<artifactId>spark-sql_2.12artifactId>

<version>2.4.3version>

dependency>

dependencies>

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.pluginsgroupId>

<artifactId>maven-assembly-pluginartifactId>

<version>2.6version>

<configuration>

<appendAssemblyId>falseappendAssemblyId>

<descriptorRefs>

<descriptorRef>jar-with-dependenciesdescriptorRef>

descriptorRefs>

<archive>

<manifest>

<mainClass>osstestmainClass>

manifest>

archive>

configuration>

<executions>

<execution>

<id>make-assemblyid>

<phase>packagephase>

<goals>

<goal>assemblygoal>

goals>

execution>

executions>

plugin>

plugins>

build>

<properties>

<maven.compiler.source>8maven.compiler.source>

<maven.compiler.target>8maven.compiler.target>

properties>

project>

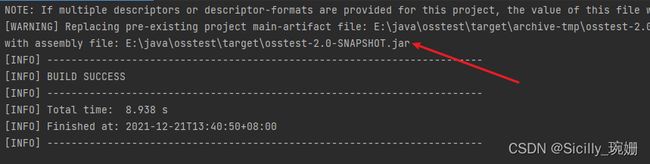

- 使用maven打包,先点clean,再点assembly:assembly

然后需要一台安装了Docker的机器(Linux或Windows都行)

创建一个test文件夹。

把打包好的osstest-2.0-SNAPSHOT.jar 和 其他要用到的第三方jar全部放到test文件夹下。

第三方jar就是代码中写到的那些,网上都可以下载到:

aliyun-sdk-oss-3.4.1.jar

hadoop-aliyun-2.7.3.2.6.1.0-129.jar

jdom-1.1.jar

httpclient-4.5.1.jar

httpcore-4.4.1.jar

commons-logging-1.2.jar

commons-codec-1.9.jar

emr-core-1.4.1.jar

三、准备镜像

- 在test文件夹中编写Dockerfile

# spark base image

FROM registry.cn-beijing.aliyuncs.com/eci_open/spark:2.4.4

RUN rm $SPARK_HOME/jars/kubernetes-client-*.jar

ADD https://repo1.maven.org/maven2/io/fabric8/kubernetes-client/4.4.2/kubernetes-client-4.4.2.jar $SPARK_HOME/jars

RUN mkdir -p /opt/spark/jars

COPY osstest-2.0-SNAPSHOT.jar /opt/spark/jars

COPY aliyun-sdk-oss-3.4.1.jar /opt/spark/jars

COPY hadoop-aliyun-2.7.3.2.6.1.0-129.jar /opt/spark/jars

COPY jdom-1.1.jar /opt/spark/jars

COPY httpclient-4.5.1.jar /opt/spark/jars

COPY httpcore-4.4.1.jar /opt/spark/jars

COPY commons-logging-1.2.jar /opt/spark/jars

COPY commons-codec-1.9.jar /opt/spark/jars

COPY emr-core-1.4.1.jar /opt/spark/jars

Dokerfile里做的事情是:

把阿里云提供的spark2.4.4作为基础镜像,然后创建了一个 /opt/spark/jars文件夹(注意这个路径和java代码中是一致的),然后把我们写的java代码打的jar包,和其他的第三方包都放进去。

- 构建镜像

接下来使用docker build命令,把我们的Dockerfile制作成一个镜像。

sudo docker build -t registry.cn-shenzhen.aliyuncs.com/sicilly/spark:0.9 -f Dockerfile .

注意在上述命令中:

registry.cn-shenzhen.aliyuncs.com/sicilly 需要改成你自己的镜像仓库地址

spark 是仓库名称,你可以自己起

0.9 是镜像版本,你可以自己起

再注意命令最后有一个英文的句号

- 上传到镜像仓库

使用docker push命令,把镜像推送的阿里云的镜像仓库。

sudo docker push registry.cn-shenzhen.aliyuncs.com/sicilly/spark:0.9

同上,需要改成你自己的镜像仓库地址

三、创建集群

- 创建一个ASK集群

1、自定义集群名。

2、选择地域、以及可用区。

3、专有网络可以用已有的也可以由容器服务自动创建的。开启SNAT。

4、是否公网暴露API server,如有需求建议开启。

5、开启privatezone,必须开启。

6、日志收集,建议开启。

-

安装ack-spark-operator

在容器服务管理控制台的导航栏中选择市场 > 应用目录,通过选择ack-spark-operator来进行部署。

四、提交到集群

上述东西都准备好了以后,就可以编写yaml文件,将任务提交到ASK执行了。

- 编写wordcount-operator-example.yaml

apiVersion: "sparkoperator.k8s.io/v1beta2"

kind: SparkApplication

metadata:

name: wordcount

namespace: default

spec:

type: Java

mode: cluster

image: "registry.cn-shenzhen.aliyuncs.com/sicilly/spark:0.9" # 改成你的镜像地址

imagePullPolicy: IfNotPresent

mainClass: osstest # 代码的主类名

mainApplicationFile: "local:///opt/spark/jars/osstest-2.0-SNAPSHOT.jar" # 代码所在位置

sparkVersion: "2.4.4"

restartPolicy:

type: OnFailure

onFailureRetries: 2

onFailureRetryInterval: 5

onSubmissionFailureRetries: 2

onSubmissionFailureRetryInterval: 10

timeToLiveSeconds: 36000

sparkConf:

"spark.kubernetes.allocation.batch.size": "10"

driver:

cores: 2

memory: "512m"

labels:

version: 2.4.4

spark-app: spark-wordcount

role: driver

annotations:

k8s.aliyun.com/eci-image-cache: "false"

serviceAccount: spark

executor:

cores: 1

instances: 2

memory: "512m"

labels:

version: 2.4.4

role: executor

annotations:

k8s.aliyun.com/eci-image-cache: "false"

如果你用的是我上面的代码,需要改的就只有镜像地址。

- 提交到ASK集群

方法一:使用kubectl。需要在windows上安装kubectl工具(安装方法),连接到你的ASK集群后输入下列命令即完成创建。

kubectl create -f wordcount-operator-example.yaml

方法二:如果不想安装kubectl,也可以在容器服务管理控制台上点击应用-无状态-使用YAML创建资源

kubectl get pods

kubectl logs -f wordcount-driver

没有报错说明成功了,有报错的话根据日志排查问题。

下面是一次成功执行的日志。

21/12/21 06:33:33 INFO SparkContext: Created broadcast 5 from broadcast at DAGScheduler.scala:1161

21/12/21 06:33:33 INFO DAGScheduler: Submitting 10 missing tasks from ResultStage 5 (MapPartitionsRDD[10] at saveAsTextFile at osstest.java:63) (first 15 tasks are for partitions Vector(0, 1, 2, 3, 4, 5, 6, 7, 8, 9))

21/12/21 06:33:33 INFO TaskSchedulerImpl: Adding task set 5.0 with 10 tasks

21/12/21 06:33:33 INFO TaskSetManager: Starting task 0.0 in stage 5.0 (TID 40, 192.168.59.99, executor 1, partition 0, NODE_LOCAL, 7681 bytes)

21/12/21 06:33:33 INFO BlockManagerInfo: Added broadcast_5_piece0 in memory on 192.168.59.99:41645 (size: 27.2 KB, free: 116.9 MB)

21/12/21 06:33:33 INFO MapOutputTrackerMasterEndpoint: Asked to send map output locations for shuffle 1 to 192.168.59.99:57144

21/12/21 06:33:34 INFO TaskSetManager: Starting task 1.0 in stage 5.0 (TID 41, 192.168.59.99, executor 1, partition 1, NODE_LOCAL, 7681 bytes)

21/12/21 06:33:34 INFO TaskSetManager: Finished task 0.0 in stage 5.0 (TID 40) in 945 ms on 192.168.59.99 (executor 1) (1/10)

21/12/21 06:33:34 INFO TaskSetManager: Starting task 2.0 in stage 5.0 (TID 42, 192.168.59.99, executor 1, partition 2, NODE_LOCAL, 7681 bytes)

21/12/21 06:33:34 INFO TaskSetManager: Finished task 1.0 in stage 5.0 (TID 41) in 316 ms on 192.168.59.99 (executor 1) (2/10)

21/12/21 06:33:34 INFO TaskSetManager: Starting task 3.0 in stage 5.0 (TID 43, 192.168.59.99, executor 1, partition 3, NODE_LOCAL, 7681 bytes)

21/12/21 06:33:34 INFO TaskSetManager: Finished task 2.0 in stage 5.0 (TID 42) in 316 ms on 192.168.59.99 (executor 1) (3/10)

21/12/21 06:33:35 INFO TaskSetManager: Starting task 4.0 in stage 5.0 (TID 44, 192.168.59.99, executor 1, partition 4, NODE_LOCAL, 7681 bytes)

21/12/21 06:33:35 INFO TaskSetManager: Finished task 3.0 in stage 5.0 (TID 43) in 313 ms on 192.168.59.99 (executor 1) (4/10)

21/12/21 06:33:35 INFO TaskSetManager: Starting task 5.0 in stage 5.0 (TID 45, 192.168.59.99, executor 1, partition 5, NODE_LOCAL, 7681 bytes)

21/12/21 06:33:35 INFO TaskSetManager: Finished task 4.0 in stage 5.0 (TID 44) in 312 ms on 192.168.59.99 (executor 1) (5/10)

21/12/21 06:33:35 INFO TaskSetManager: Starting task 6.0 in stage 5.0 (TID 46, 192.168.59.99, executor 1, partition 6, NODE_LOCAL, 7681 bytes)

21/12/21 06:33:35 INFO TaskSetManager: Finished task 5.0 in stage 5.0 (TID 45) in 350 ms on 192.168.59.99 (executor 1) (6/10)

21/12/21 06:33:36 INFO TaskSetManager: Starting task 7.0 in stage 5.0 (TID 47, 192.168.59.99, executor 1, partition 7, NODE_LOCAL, 7681 bytes)

21/12/21 06:33:36 INFO TaskSetManager: Finished task 6.0 in stage 5.0 (TID 46) in 324 ms on 192.168.59.99 (executor 1) (7/10)

21/12/21 06:33:36 INFO TaskSetManager: Starting task 8.0 in stage 5.0 (TID 48, 192.168.59.99, executor 1, partition 8, NODE_LOCAL, 7681 bytes)

21/12/21 06:33:36 INFO TaskSetManager: Finished task 7.0 in stage 5.0 (TID 47) in 429 ms on 192.168.59.99 (executor 1) (8/10)

21/12/21 06:33:36 INFO TaskSetManager: Starting task 9.0 in stage 5.0 (TID 49, 192.168.59.99, executor 1, partition 9, NODE_LOCAL, 7681 bytes)

21/12/21 06:33:36 INFO TaskSetManager: Finished task 8.0 in stage 5.0 (TID 48) in 335 ms on 192.168.59.99 (executor 1) (9/10)

21/12/21 06:33:37 INFO TaskSetManager: Finished task 9.0 in stage 5.0 (TID 49) in 376 ms on 192.168.59.99 (executor 1) (10/10)

21/12/21 06:33:37 INFO TaskSchedulerImpl: Removed TaskSet 5.0, whose tasks have all completed, from pool

21/12/21 06:33:37 INFO DAGScheduler: ResultStage 5 (runJob at SparkHadoopWriter.scala:78) finished in 4.022 s

21/12/21 06:33:37 INFO DAGScheduler: Job 2 finished: runJob at SparkHadoopWriter.scala:78, took 4.741556 s

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 84

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 94

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 120

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 100

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 97

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 119

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 81

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 118

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 77

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 82

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 99

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 121

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 107

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 102

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 105

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 101

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 110

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 80

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 85

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 75

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 83

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 76

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 96

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 91

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 98

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 124

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 122

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 112

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 95

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 93

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 79

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 116

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 106

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 109

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 88

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 113

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 123

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 104

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 78

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 117

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 89

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 108

21/12/21 06:33:38 INFO BlockManagerInfo: Removed broadcast_5_piece0 on wordcount-1640068323479-driver-svc.default.svc:7079 in memory (size: 27.2 KB, free: 116.9 MB)

21/12/21 06:33:38 INFO BlockManagerInfo: Removed broadcast_5_piece0 on 192.168.59.99:41645 in memory (size: 27.2 KB, free: 116.9 MB)

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 92

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 103

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 90

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 111

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 86

21/12/21 06:33:38 INFO BlockManagerInfo: Removed broadcast_4_piece0 on wordcount-1640068323479-driver-svc.default.svc:7079 in memory (size: 3.0 KB, free: 116.9 MB)

21/12/21 06:33:38 INFO BlockManagerInfo: Removed broadcast_4_piece0 on 192.168.59.99:41645 in memory (size: 3.0 KB, free: 116.9 MB)

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 87

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 114

21/12/21 06:33:38 INFO ContextCleaner: Cleaned accumulator 115

21/12/21 06:33:39 INFO NativeOssFileSystem: OutputStream for key 'hp1-result-1/_SUCCESS' writing to tempfile '/tmp/hadoop-root/dfs/data/data/root/oss/output-4047609689034382569.data' for block 0

21/12/21 06:33:39 INFO NativeOssFileSystem: OutputStream for key 'hp1-result-1/_SUCCESS' closed. Now beginning upload

21/12/21 06:33:39 INFO NativeOssFileSystem: OutputStream for key 'hp1-result-1/_SUCCESS' upload complete

21/12/21 06:33:39 INFO SparkHadoopWriter: Job job_20211221063332_0010 committed.

-----------over------

21/12/21 06:33:39 INFO SparkUI: Stopped Spark web UI at http://wordcount-1640068323479-driver-svc.default.svc:4040

21/12/21 06:33:39 INFO KubernetesClusterSchedulerBackend: Shutting down all executors

21/12/21 06:33:39 INFO KubernetesClusterSchedulerBackend$KubernetesDriverEndpoint: Asking each executor to shut down

21/12/21 06:33:39 WARN ExecutorPodsWatchSnapshotSource: Kubernetes client has been closed (this is expected if the application is shutting down.)

21/12/21 06:33:39 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

21/12/21 06:33:39 INFO MemoryStore: MemoryStore cleared

21/12/21 06:33:39 INFO BlockManager: BlockManager stopped

21/12/21 06:33:39 INFO BlockManagerMaster: BlockManagerMaster stopped

21/12/21 06:33:39 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

21/12/21 06:33:39 INFO SparkContext: Successfully stopped SparkContext

21/12/21 06:33:39 INFO ShutdownHookManager: Shutdown hook called

21/12/21 06:33:39 INFO ShutdownHookManager: Deleting directory /tmp/spark-fe376e0d-8552-41fa-9620-685390a8ccbb

21/12/21 06:33:39 INFO ShutdownHookManager: Deleting directory /var/data/spark-528397fc-176a-4897-9129-9f4f14327b16/spark-21a04125-581f-48a0-8b84-36704c279704

五、查看结果

部分结果为:

(the,3306)

(,3056)

(to,1827)

(and,1787)

(a,1577)

(of,1235)

(was,1148)

(he,1018)

(Harry,903)

(in,898)

(his,893)

(had,691)

(--,688)

(said,659)

(at,580)

(you,578)

(it,547)

(on,544)

完成!

注意做完实验以后要删掉ASK集群,否则会一直扣费的!

参考资料

ECI SPARK https://github.com/aliyuneci/BestPractice-Serverless-Kubernetes/tree/master/eci-spark

在ECI中访问HDFS的数据 https://help.aliyun.com/document_detail/146235.html

在ECI中访问OSS数据 https://help.aliyun.com/document_detail/146237.html

云上大数据分析最佳实践 https://developer.aliyun.com/live/2196

ECI最佳实践-SPARK应用 https://help.aliyun.com/document_detail/146249.html

通过ASK创建Spark计算任务 https://help.aliyun.com/document_detail/165079.htm?spm=a2c4g.11186623.0.0.427a3edeER2KDl#task-2495864