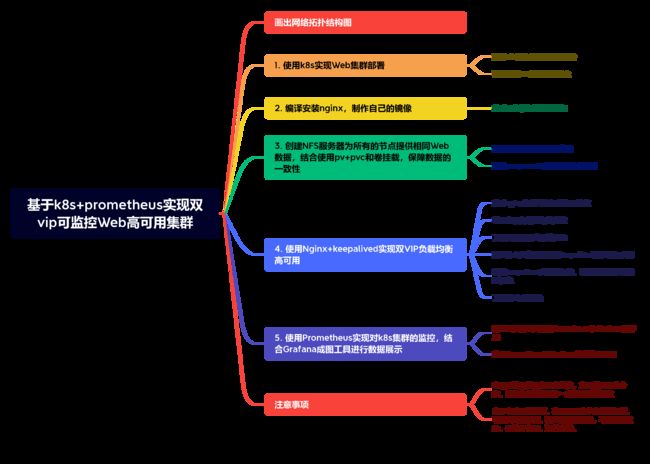

A Monitorable Dual-VIP High-Availability Web Cluster Based on k8s + Prometheus

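Contents

1. Plan the project's topology and mind map

2. Set the hostname of each host and configure each machine's IP address, gateway, DNS, etc.

2.1 Change the IP address and hostname of each host

2.2 Disable firewalld and SELinux

3. Deploy the web cluster with k8s: a k8s cluster of 1 master and 3 nodes

3.1 Install Docker on the 4 k8s cluster servers, following the official documentation

3.2 Create the k8s cluster using kubeadm

3.2.1 Make sure Docker is installed, start it, and enable it at boot

3.2.2 Configure Docker to use systemd as the default cgroup driver

3.2.3 Disable the swap partition

3.2.4 Edit the hosts file and the kernel parameter file

3.2.5 Install kubeadm, kubelet, and kubectl

3.2.6 Deploy the Kubernetes master

3.2.7 Join the node servers to the k8s cluster

3.2.8 Install the flannel network plugin (on the master)

3.2.9 Check the cluster status

4. Compile and install nginx and build a custom image

4.1 Write a one-click nginx installation script

4.2 Write a Dockerfile

5. Set up an NFS server that provides the same web data to all nodes, using PV + PVC and volume mounts to keep the data consistent

5.1 Install the packages on nfs-server and the 4 k8s cluster servers

5.2 Create the shared directory and shared file on nfs-server

5.3 Create the PV on master

5.4 Create a PVC on master that uses the PV

5.5 Create pods on master that use the PVC

5.6 Publish the pods through a Service

5.7 Verify the result

6. Dual-VIP load balancing and high availability with Nginx + keepalived

6.1 Implement load balancing

6.1.1 Write a one-click script to compile and install nginx

6.1.2 Configure load balancing in nginx

6.1.3 Check the result

6.1.4 Inspect how requests are distributed

6.2 High availability with keepalived

6.2.1 Install keepalived

6.2.2 Configure keepalived.conf

6.2.3 Restart the keepalived service

6.2.4 Test access

6.3 Stress testing

6.4 Attempt to tune the whole web cluster

7. Monitor the k8s cluster with Prometheus and display the data with Grafana

7.1 Set up Prometheus to monitor the k8s cluster

7.1.1 Deploy node-exporter as a DaemonSet

7.1.2 Deploy Prometheus

7.1.3 Test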

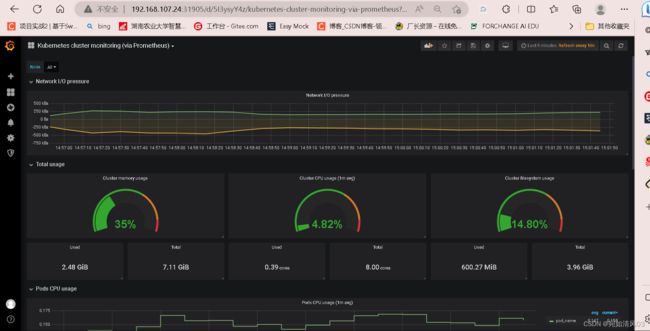

7.2 Deploy Grafana

7.2.1 Test

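Overview

This project pulls together what I have learned so far and is meant to consolidate that knowledge. It combines a k8s cluster with Prometheus to build a monitorable, dual-VIP, highly available web cluster. PersistentVolumes (PV) and PersistentVolumeClaims (PVC) backed by NFS keep the data consistent. k8s manages the Docker containers and publishes the web service, so external users can reach data on the internal network. nginx provides load balancing and keepalived provides dual-VIP high availability. The ab tool is used for stress testing, and parameters are tuned for performance. Prometheus + Grafana monitor the web service and chart the results.

Project summary

Project name: A monitorable dual-VIP high-availability web cluster based on k8s + Prometheus

Environment: CentOS 7.9 (7 machines: the 4 k8s cluster machines have 2 GB RAM and 2 cores, the other 3 have 1 GB RAM and 2 cores), Docker 23.0.3, nginx 1.21.1, keepalived 1.3.5, Prometheus 2.0.0, Grafana 6.1.4, etc.

Project description: A k8s cluster combined with Prometheus implements a monitorable, dual-VIP, highly available web cluster. PV and PVC backed by NFS keep the data consistent. k8s manages the Docker containers and publishes the web service. nginx provides load balancing and keepalived provides dual-VIP high availability. The ab tool is used for stress testing, and parameters are tuned for performance. Finally, Prometheus + Grafana monitor the web service and chart the results.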

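Project workflow:

1. Plan the project's topology and mind map

2. Set the hostname of each host and configure each machine's IP address, gateway, DNS, etc.

3. Deploy the web cluster with k8s: a k8s cluster of 1 master and 3 nodes

4. Compile and install nginx and build a custom image for the servers in the cluster

5. Set up an NFS server that provides the same web data to all nodes, using PV + PVC and volume mounts to keep the data consistent

6. Implement dual-VIP load balancing and high availability with Nginx + keepalived, stress-test with ab, and tune performance

7. Monitor the k8s cluster with Prometheus and display the data with Grafana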

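Takeaways:

Drawing the network topology and the mind map made the whole project easier to carry out efficiently. Along the way I:

- became more familiar with k8s and with deploying clusters;
- gained a deeper understanding of Docker;
- learned more about highly available load balancing with keepalived + nginx;
- understood system monitoring with Prometheus + Grafana better.

The project also improved my problem-solving skills and deepened my understanding of Linux architecture.

Detailed project steps and source code: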

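1. Plan the project's topology and mind map

2. Set the hostname of each host and configure each machine's IP address, gateway, DNS, etc.

The project servers are as follows:

IP address: 192.168.107.20  Hostname: load-balancer1

IP address: 192.168.107.21  Hostname: load-balancer2

IP address: 192.168.107.23  Hostname: nfs-server

IP address: 192.168.107.24  Hostname: master

IP address: 192.168.107.25  Hostname: node1

IP address: 192.168.107.26  Hostname: node2

IP address: 192.168.107.27  Hostname: node3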
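The address plan above can be kept in one place and used to generate the /etc/hosts entries needed in step 3.2.4. This is a minimal Python sketch. The SERVERS dictionary simply mirrors the list above, and hosts_lines and plan_is_consistent are hypothetical helpers, not part of any tool used in this project.

```python
import ipaddress

# Mirrors the server list above (hostname -> IP address).
SERVERS = {
    "load-balancer1": "192.168.107.20",
    "load-balancer2": "192.168.107.21",
    "nfs-server": "192.168.107.23",
    "master": "192.168.107.24",
    "node1": "192.168.107.25",
    "node2": "192.168.107.26",
    "node3": "192.168.107.27",
}

# Only the four k8s machines are added to /etc/hosts in step 3.2.4.
K8S_HOSTS = ["master", "node1", "node2", "node3"]
SUBNET = ipaddress.ip_network("192.168.107.0/24")


def hosts_lines(names):
    """Return /etc/hosts-style "IP hostname" lines for the given hosts."""
    return [f"{SERVERS[name]} {name}" for name in names]


def plan_is_consistent():
    """True if every address is unique and inside the planned /24."""
    ips = list(SERVERS.values())
    return len(set(ips)) == len(ips) and all(
        ipaddress.ip_address(ip) in SUBNET for ip in ips
    )


if __name__ == "__main__":
    print("\n".join(hosts_lines(K8S_HOSTS)))
```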

2.1 Change the IP address and hostname of each host

All hosts in this project use NAT network mode. Edit /etc/sysconfig/network-scripts/ifcfg-ens33 and change its contents to the following:

[root@master ~]# cd /etc/sysconfig/network-scripts/

[root@master network-scripts]# ls

ifcfg-ens33 ifdown-isdn ifdown-tunnel ifup-isdn ifup-Team

ifcfg-lo ifdown-post ifup ifup-plip ifup-TeamPort

ifdown ifdown-ppp ifup-aliases ifup-plusb ifup-tunnel

ifdown-bnep ifdown-routes ifup-bnep ifup-post ifup-wireless

ifdown-eth ifdown-sit ifup-eth ifup-ppp init.ipv6-global

ifdown-ippp ifdown-Team ifup-ippp ifup-routes network-functions

ifdown-ipv6 ifdown-TeamPort ifup-ipv6 ifup-sit network-functions-ipv6

[root@master network-scripts]# vi ifcfg-ens33

# Change dhcp to none: a static IP keeps later steps from breaking if the address changes
BOOTPROTO="none"
NAME="ens33"
DEVICE="ens33"
ONBOOT="yes"
# Set this to the planned IP address of each host
IPADDR=192.168.107.24
PREFIX=24
GATEWAY=192.168.107.2
DNS1=114.114.114.114
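A common mistake when switching from DHCP to a static address is a GATEWAY outside the subnet defined by IPADDR and PREFIX. The following is a small Python check using the standard ipaddress module. check_static_ip is a hypothetical helper, and the values are the master's.

```python
import ipaddress


def check_static_ip(ipaddr, prefix, gateway):
    """True if GATEWAY is inside IPADDR/PREFIX's subnet and is not the host itself."""
    iface = ipaddress.ip_interface(f"{ipaddr}/{prefix}")
    gw = ipaddress.ip_address(gateway)
    return gw in iface.network and gw != iface.ip


if __name__ == "__main__":
    # Values from the master's ifcfg-ens33 above.
    print(check_static_ip("192.168.107.24", 24, "192.168.107.2"))
```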

Then restart the network service and check whether the IP address has changed:

[root@master network-scripts]# service network restart

Restarting network (via systemctl): [ 确定 ]

[root@master network-scripts]# ip add

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:73:a0:65 brd ff:ff:ff:ff:ff:ff

inet 192.168.107.24/24 brd 192.168.107.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe73:a065/64 scope link

valid_lft forever preferred_lft forever

Test Internet access:

[root@master network-scripts]# ping www.baidu.com

PING www.a.shifen.com (14.119.104.254) 56(84) bytes of data.

64 bytes from 14.119.104.254 (14.119.104.254): icmp_seq=1 ttl=128 time=18.9 ms

64 bytes from 14.119.104.254 (14.119.104.254): icmp_seq=2 ttl=128 time=23.0 ms

64 bytes from 14.119.104.254 (14.119.104.254): icmp_seq=3 ttl=128 time=21.1 ms

^C

--- www.a.shifen.com ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2011ms

rtt min/avg/max/mdev = 18.990/21.073/23.060/1.671 ms

Change the hostname:

[root@master network-scripts]# hostnamectl set-hostname master

[root@master network-scripts]# su

2.2 Disable firewalld and SELinux

Disable firewalld and SELinux permanently:

[root@master network-scripts]# systemctl disable firewalld    #keep firewalld from starting at boot (systemctl stop firewalld also stops the running instance)

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@master network-scripts]# setenforce 0

[root@master network-scripts]#

[root@master network-scripts]# sed -i '/^SELINUX=/ s/enforcing/disabled/' /etc/selinux/config    #change enforcing to disabled in the SELinux config so SELinux stays off permanently

[root@master network-scripts]# cat /etc/selinux/config    #check that the file was changed

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=disabled    #now disabled, so SELinux is permanently off

# SELINUXTYPE= can take one of three values:

# targeted - Targeted processes are protected,

# minimum - Modification of targeted policy. Only selected processes are protected.

# mls - Multi Level Security protection.

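SELINUXTYPE=targeted

Every host needs the steps above; they are not written out again for each one.

3. Deploy the web cluster with k8s: a k8s cluster of 1 master and 3 nodes

3.1 Install Docker on the 4 k8s cluster servers, following the official documentation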

[root@master ~]# yum remove docker \

> docker-client \

> docker-client-latest \

> docker-common \

> docker-latest \

> docker-latest-logrotate \

> docker-logrotate \

> docker-engine

[root@master ~]# yum install -y yum-utils

[root@master ~]# yum-config-manager \

--add-repo \

https://download.docker.com/linux/centos/docker-ce.repo

[root@master ~]# yum install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin -y

[root@master ~]# systemctl start docker

[root@master ~]# docker ps    #check whether Docker was installed successfully

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

3.2 Create the k8s cluster using kubeadm

3.2.1 Make sure Docker is installed, start it, and enable it at boot

[root@master ~]# systemctl restart docker

[root@master ~]# systemctl enable docker

[root@master ~]# ps aux|grep docker

root 2190 1.4 1.5 1159376 59744 ? Ssl 16:22 0:00 /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

root 2387 0.0 0.0 112824 984 pts/0 S+ 16:22 0:00 grep --color=auto docker

3.2.2 Configure Docker to use systemd as the default cgroup driver

Do this on every server, master and nodes alike:

[root@master ~]# cat <<EOF > /etc/docker/daemon.json

> {

> "exec-opts": ["native.cgroupdriver=systemd"]

> }

> EOF

[root@master ~]# systemctl restart docker    #restart Docker
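The heredoc above overwrites /etc/docker/daemon.json. Any settings already in the file, such as registry mirrors, would be lost. The following is a hedged Python sketch that merges the cgroup option into an existing configuration instead of overwriting it. The helper name and the mirror URL are placeholders.

```python
import json


def with_systemd_cgroup(config):
    """Return a copy of a daemon.json dict whose exec-opts select the systemd
    cgroup driver, keeping every other setting unchanged."""
    merged = dict(config)
    opts = [o for o in merged.get("exec-opts", [])
            if not o.startswith("native.cgroupdriver=")]
    opts.append("native.cgroupdriver=systemd")
    merged["exec-opts"] = opts
    return merged


if __name__ == "__main__":
    # Hypothetical existing file content with a registry mirror configured.
    existing = {"registry-mirrors": ["https://mirror.example.invalid"]}
    print(json.dumps(with_systemd_cgroup(existing), indent=2))
```

After Docker restarts with the new file, docker info should report "Cgroup Driver: systemd".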

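3.2.3 Disable the swap partition

k8s does not want swap to be used. By default kubelet refuses to start while swap is on, and swapping also degrades performance. Do this on every server:

[root@master ~]# swapoff -a    #disable swap now (until the next reboot)

[root@master ~]# sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab    #disable it permanently by commenting out the swap entry in /etc/fstab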
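The sed expression comments out every /etc/fstab line that contains " swap " with a space on both sides. Here is the same logic in Python for clarity. comment_out_swap is illustrative only; the real change is made by sed. Like the sed command, it does not skip lines that are already commented, so running it twice adds a second #.

```python
def comment_out_swap(fstab_text):
    r"""Mirror of: sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

    Every line containing " swap " (a space on both sides) gets a leading '#'.
    """
    out = []
    for line in fstab_text.splitlines():
        out.append("#" + line if " swap " in line else line)
    return "\n".join(out)


if __name__ == "__main__":
    # Hypothetical fstab content in the style of a default CentOS 7 install.
    sample = (
        "/dev/mapper/centos-root /     xfs  defaults 0 0\n"
        "/dev/mapper/centos-swap swap  swap defaults 0 0"
    )
    print(comment_out_swap(sample))
```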

3.2.4 Edit the hosts file and the kernel parameter file

The /etc/hosts file must be edited on every machine:

[root@master ~]# cat >> /etc/hosts << EOF

> 192.168.107.24 master

> 192.168.107.25 node1

> 192.168.107.26 node2

> 192.168.107.27 node3

> EOF

Then append the following to /etc/sysctl.conf, the parameter file the kernel reads:

[root@master ~]# cat >> /etc/sysctl.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_nonlocal_bind = 1

net.ipv4.ip_forward = 1

vm.swappiness=0

EOF
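sysctl -p reads these key = value pairs and writes each value to the matching file under /proc/sys. The dots in the key become path separators. That is also why the net.bridge.* keys only exist once the br_netfilter module is loaded. Below is a small Python illustration of that mapping; both function names are hypothetical.

```python
def sysctl_path(key):
    """Map a sysctl key such as net.ipv4.ip_forward to its /proc/sys file.

    Simplification: keys whose components themselves contain dots (for
    example VLAN interface names) are not handled.
    """
    return "/proc/sys/" + key.replace(".", "/")


def parse_sysctl_conf(text):
    """Parse 'key = value' lines, skipping blank lines and # or ; comments."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";")):
            continue
        key, _, value = line.partition("=")
        settings[key.strip()] = value.strip()
    return settings


if __name__ == "__main__":
    conf = """net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
vm.swappiness=0"""
    for key, value in parse_sysctl_conf(conf).items():
        print(f"echo {value} > {sysctl_path(key)}")
```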

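[root@master ~]# sysctl -p    #make the kernel re-read the file so the settings take effect

If sysctl -p reports that the net.bridge.* keys do not exist, load the bridge netfilter module first with modprobe br_netfilter, then run it again.

3.2.5 Install kubeadm, kubelet, and kubectl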

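kubeadm is the k8s bootstrapping tool used to build the whole cluster (kubeadm init on the master, kubeadm join on the nodes). Behind the scenes it runs a large number of steps to get k8s started.

kubelet is the agent that runs on every node in the cluster. It handles communication between the master and the node, and it manages Docker by telling it which containers to start, so that the containers are running in Pods.

kubectl is the command-line tool used on the master to give orders to the cluster and tell the node servers what to do.

Add the Kubernetes YUM repository: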

[root@master ~]# cat > /etc/yum.repos.d/kubernetes.repo << EOF

> [kubernetes]

> name=Kubernetes

> baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

> enabled=1

> gpgcheck=0

> repo_gpgcheck=0

> gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

> EOF

Install kubeadm, kubelet, and kubectl:

[root@master ~]# yum install -y kubelet-1.23.6 kubeadm-1.23.6 kubectl-1.23.6

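#Pin the version: from 1.24 on, Kubernetes removed dockershim, so Docker is no longer usable as the container runtime out of the box

Enable kubelet at boot. It is the k8s agent on each node and must run at startup:

[root@master ~]# systemctl enable kubelet

3.2.6 Deploy the Kubernetes master

Run this on the master only.

Pull the coredns:1.8.4 image in advance, since it is needed later; download it on every machine: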

[root@master ~]# docker pull coredns/coredns:1.8.4

[root@master ~]# docker tag coredns/coredns:1.8.4 registry.aliyuncs.com/google_containers/coredns:v1.8.4

Run the initialization on the master server:

[root@master ~]#kubeadm init \

--apiserver-advertise-address=192.168.107.24 \

--image-repository registry.aliyuncs.com/google_containers \

--service-cidr=10.1.0.0/16 \

--pod-network-cidr=10.244.0.0/16

After it succeeds, save the following command. The node servers need it later to join the cluster:

kubeadm join 192.168.107.24:6443 --token kekb7f.a7vjm0duwvvwpvra \

--discovery-token-ca-cert-hash sha256:6505303121282ebcb315c9ae114941d5b075ee5b64cf7446000d0e0db02f09e9
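Three address ranges are involved here, and none of them may overlap: the host network, --service-cidr, and --pod-network-cidr. 10.244.0.0/16 is the default network in the upstream kube-flannel.yml, which is presumably why it was chosen. The following is a quick Python check with the standard ipaddress module; overlapping is a hypothetical helper.

```python
import ipaddress
from itertools import combinations

# The three ranges used in this cluster.
NETWORKS = {
    "host network": ipaddress.ip_network("192.168.107.0/24"),
    "--service-cidr": ipaddress.ip_network("10.1.0.0/16"),
    "--pod-network-cidr": ipaddress.ip_network("10.244.0.0/16"),
}


def overlapping(networks):
    """Return every pair of names whose CIDR ranges overlap."""
    return [
        (name_a, name_b)
        for (name_a, net_a), (name_b, net_b) in combinations(networks.items(), 2)
        if net_a.overlaps(net_b)
    ]


if __name__ == "__main__":
    print(overlapping(NETWORKS) or "no overlapping ranges")
```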

Create the kubeconfig directory and file to finish the initialization (on the master):

[root@master ~]# mkdir -p $HOME/.kube

[root@master ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master ~]# chown $(id -u):$(id -g) $HOME/.kube/config

3.2.7 Join the node servers to the k8s cluster

Test whether node1 can reach the master:

[root@node1 ~]# ping master

PING master (192.168.107.24) 56(84) bytes of data.

64 bytes from master (192.168.107.24): icmp_seq=1 ttl=64 time=0.765 ms

64 bytes from master (192.168.107.24): icmp_seq=2 ttl=64 time=1.34 ms

^C

--- master ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1002ms

rtt min/avg/max/mdev = 0.765/1.055/1.345/0.290 ms

Run the following on all node servers:

[root@node1 ~]# kubeadm join 192.168.107.24:6443 --token kekb7f.a7vjm0duwvvwpvra \

> --discovery-token-ca-cert-hash sha256:6505303121282ebcb315c9ae114941d5b075ee5b64cf7446000d0e0db02f09e9

On the master, check whether the nodes have joined the cluster:

[root@master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master NotReady control-plane,master 7m3s v1.23.6

node1 NotReady 93s v1.23.6

node2 NotReady 86s v1.23.6

node3 NotReady 82s v1.23.6

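3.2.8 Install the flannel network plugin (on the master)

flannel lets pods on the master and pods on the node servers communicate with each other.

Copy the flannel manifest (kube-flannel.yml) to the master: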

[root@master ~]# ls

anaconda-ks.cfg --image-repository

--apiserver-advertise-address=192.168.2.130 kube-flannel.yml

[root@master ~]# kubectl apply -f kube-flannel.yml    #apply the manifest

namespace/kube-flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

3.2.9 Check the cluster status

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane,master 13m v1.23.6

node1 Ready 7m46s v1.23.6

node2 Ready 7m39s v1.23.6

node3 Ready 7m35s v1.23.6

At this point, the k8s cluster has been installed successfully.
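Before flannel was installed, every node was NotReady; afterwards all four report Ready. When scripting the setup, this check can be automated. kubectl can also block until it holds, with kubectl wait --for=condition=Ready node --all. The following small Python sketch parses the table instead; not_ready is a hypothetical helper.

```python
def not_ready(nodes_output):
    """Return the names of nodes whose STATUS is not exactly "Ready".

    Assumes the default `kubectl get nodes` table: a header row, then NAME in
    the first column and STATUS in the second. A cordoned node shows
    "Ready,SchedulingDisabled" and is therefore reported as well.
    """
    bad = []
    for line in nodes_output.strip().splitlines()[1:]:   # skip the header
        fields = line.split()
        if len(fields) >= 2 and fields[1] != "Ready":
            bad.append(fields[0])
    return bad


if __name__ == "__main__":
    before_flannel = """NAME STATUS ROLES AGE VERSION
master NotReady control-plane,master 7m3s v1.23.6
node1 NotReady <none> 93s v1.23.6"""
    print(not_ready(before_flannel))
```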

4. Compile and install nginx and build a custom image

4.1 Write a one-click nginx installation script

Run on the master server:

[root@master nginx]# vim onekey_install_nginx.sh

#!/bin/bash
#install the packages needed to resolve the build dependencies
yum -y install zlib zlib-devel openssl openssl-devel pcre pcre-devel gcc gcc-c++ autoconf automake make psmisc net-tools lsof vim wget
#download the nginx source (-p: /nginx already exists when this runs inside the image built below)
mkdir -p /nginx
cd /nginx
curl -O http://nginx.org/download/nginx-1.21.1.tar.gz
#unpack the source
tar xf nginx-1.21.1.tar.gz
#enter the unpacked directory
cd nginx-1.21.1
#configure before compiling
./configure --prefix=/usr/local/nginx1 --with-http_ssl_module --with-threads --with-http_v2_module --with-http_stub_status_module --with-stream
#compile with 2 parallel jobs
make -j 2
#install
make install

4.2 Write a Dockerfile

Its contents are as follows:

[root@master nginx]# vim Dockerfile

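#note: Dockerfile comments must start a line; a trailing # after an instruction is not a comment
#base image
FROM centos:7
#set the variable NGINX_VERSION to 1.21.1
ENV NGINX_VERSION=1.21.1
#author: zhouxin
ENV AUTHOR=zhouxin
#label
LABEL maintainer="cali<[email protected]>"
#command run while building the image
RUN mkdir /nginx
#working directory when entering the container
WORKDIR /nginx
#copy the files in the build context on the host into /nginx in the image
COPY . /nginx
#run the one-click nginx install script, then install some tools
RUN set -ex; \
    bash onekey_install_nginx.sh; \
    yum install vim iputils net-tools iproute -y
#declare the port to expose
EXPOSE 80
#put the nginx binary on PATH
ENV PATH=/usr/local/nginx1/sbin:$PATH
#signal sent to stop the container; SIGQUIT makes nginx shut down gracefully
STOPSIGNAL SIGQUIT
#run nginx in the foreground: -g "daemon off;" sets the daemon directive to off, so nginx does not fork into the background (which would end the container's main process)
CMD ["nginx","-g","daemon off;"]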

Build the image:

[root@master nginx]# docker build -t zhouxin_nginx:1.0 .

List the images:

[root@master nginx]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

zhouxin_nginx 1.0 d6d326da3bb9 19 hours ago 621MB

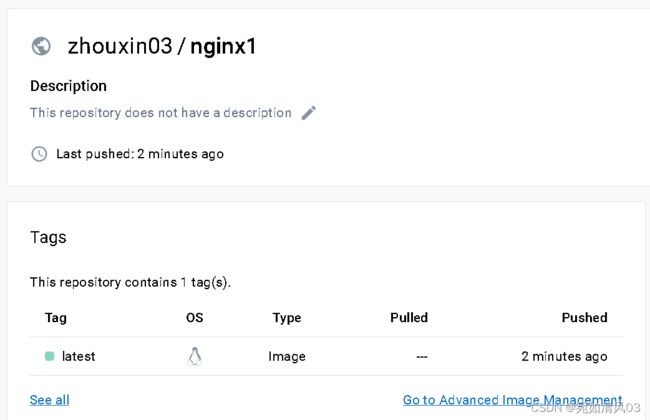

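Push the custom image to my Docker Hub repository so the other 3 node servers can use it. This requires a Docker Hub account and a repository; I had already created the repository zhouxin03/nginx1.

On the master, tag the custom image and push it to the Docker Hub repository (log in with docker login first):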

[root@master nginx]# docker tag zhouxin_nginx:1.0 zhouxin03/nginx1

[root@master nginx]# docker push zhouxin03/nginx1

If the image shows up on Docker Hub, the push succeeded.
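This explains why the pull on node1 reports docker.io/zhouxin03/nginx1:latest. A reference without a registry host defaults to Docker Hub (docker.io), and one without a tag defaults to latest.

Below is a simplified Python sketch of that normalization. normalize_image is a hypothetical helper, not Docker's code, and it ignores digests. It also shows that the image zhouxin_nginx:1.0 used in the Deployment later resolves to docker.io/library/zhouxin_nginx:1.0. That image is not on Docker Hub, so the Deployment presumably works only because the nodes already hold a local zhouxin_nginx:1.0, as node1's docker images output shows.

```python
def normalize_image(ref):
    """Expand a short image reference the way docker pull resolves it.

    Simplified sketch: digests (@sha256:...) are not handled.
    """
    name, sep, tag = ref.rpartition(":")
    if not sep or "/" in tag:        # no tag; any colon belongs to a registry port
        name, tag = ref, "latest"
    if "/" not in name:              # official images live under library/
        name = "library/" + name
    first = name.split("/")[0]
    if "." not in first and ":" not in first and first != "localhost":
        name = "docker.io/" + name   # no registry host given: Docker Hub
    return f"{name}:{tag}"


if __name__ == "__main__":
    for ref in ("zhouxin03/nginx1", "zhouxin_nginx:1.0", "centos:7"):
        print(ref, "->", normalize_image(ref))
```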

Then pull the image on the 3 node servers:

[root@node1 ~]# docker pull zhouxin03/nginx1:latest    #pull the image

latest: Pulling from zhouxin03/nginx1

2d473b07cdd5: Already exists

535a3cf740af: Pull complete

4f4fb700ef54: Pull complete

c652b3bc123a: Pull complete

b3eaccc7b7d0: Pull complete

Digest: sha256:1acfbc1de329f38f50fb354af3ccc36c084923aca0f97490b350f57d8ab9fc6f

Status: Downloaded newer image for zhouxin03/nginx1:latest

docker.io/zhouxin03/nginx1:latest

[root@node1 ~]# docker images    #list the images

REPOSITORY TAG IMAGE ID CREATED SIZE

zhouxin_nginx 1.0 a06f4e68aed6 19 hours ago 621MB

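zhouxin03/nginx1 latest d6d326da3bb9 20 hours ago 621MB

5. Set up an NFS server that provides the same web data to all nodes, using PV + PVC and volume mounts to keep the data consistent

5.1 Install the packages on nfs-server and the 4 k8s cluster servers

[root@nfs-server nginx]# yum install -y nfs-utils

Start NFS and enable it at boot: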

[root@nfs-server nginx]# systemctl start nfs

[root@nfs-server nginx]# systemctl enable nfs

5.2 Create the shared directory and shared file on nfs-server

[root@nfs-server nginx]# mkdir /nginx

[root@nfs-server nginx]# cd /nginx

[root@nfs-server nginx]# vim index.html

welcome!

name:zhouxin

Hunan Agricultural University

age: 20

Then edit /etc/exports and apply it:

[root@nfs-server nginx]# vim /etc/exports

/nginx 192.168.107.0/24 (rw,sync,all_squash)

[root@nfs-server nginx]# exportfs -av

exportfs: No options for /nginx 192.168.107.0/24: suggest 192.168.107.0/24(sync) to avoid warning

exportfs: No host name given with /nginx (rw,sync,all_squash), suggest *(rw,sync,all_squash) to avoid warning

exporting 192.168.107.0/24:/nginx

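exporting *:/nginx

The warnings come from the space between 192.168.107.0/24 and (rw,sync,all_squash). exportfs reads them as two separate entries: /nginx is exported to 192.168.107.0/24 with default options (read-only), and to every host (*) with rw,sync,all_squash. Writing the line without the space, /nginx 192.168.107.0/24(rw,sync,all_squash), restricts read-write access to the subnet.

Set the permissions on the shared directory: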

[root@nfs-server nginx]# chown nobody:nobody /nginx

[root@nfs-server nginx]# ll -d /nginx

drwxr-xr-x 2 nobody nobody 24 4月 7 16:51 /nginx

5.3 Create the PV on master

[root@master pod]# mkdir /pod

[root@master pod]# cd /pod

[root@master pod]# vim pv_nfs.yaml

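apiVersion: v1
kind: PersistentVolume            #resource type
metadata:
  name: zhou-nginx-pv             #name of the PV
  labels:
    type: zhou-nginx-pv
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteMany               #access mode: read-write by many clients
  persistentVolumeReclaimPolicy: Recycle   #reclaim policy: Recycle (scrub the data and make the volume available to a new claim)
  storageClassName: nfs           #storage class name; the PVC must request the same class
  nfs:
    path: "/nginx"                #shared directory on nfs-server
    server: 192.168.107.23        #IP address of nfs-server
    readOnly: false               #mount read-write

Apply the PV manifest: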

[root@master pod]# kubectl apply -f pv_nfs.yaml

[root@master pod]# kubectl get pv    #check

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

zhou-nginx-pv 5Gi RWX Recycle Bound default/zhou-nginx-pvc nfs 81m

5.4 Create a PVC on master that uses the PV

[root@master pod]# vim pvc_nfs.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: zhou-nginx-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  storageClassName: nfs

Apply and check:

[root@master pod]# kubectl apply -f pvc_nfs.yaml

[root@master pod]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

zhou-nginx-pvc Bound zhou-nginx-pv 5Gi RWX nfs 91m
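The claim requests 1Gi, but it reports a capacity of 5Gi. A PVC binds to a whole PV, and the control plane chooses the smallest PV with the same storage class, all the requested access modes, and enough capacity.

The following Python sketch is a simplified model of that matching. It ignores label selectors, volume modes and node affinity. The Volume and Claim classes are hypothetical stand-ins, not Kubernetes API types.

```python
from dataclasses import dataclass


@dataclass
class Volume:                      # hypothetical stand-in for a PV
    name: str
    storage_class: str
    access_modes: frozenset
    capacity_gi: int


@dataclass
class Claim:                       # hypothetical stand-in for a PVC
    name: str
    storage_class: str
    access_modes: frozenset
    request_gi: int


def matching_volumes(claim, volumes):
    """Simplified PV matching: same storage class, every requested access
    mode offered, and enough capacity; the smallest fitting PV comes first."""
    fits = [
        v for v in volumes
        if v.storage_class == claim.storage_class
        and claim.access_modes <= v.access_modes
        and v.capacity_gi >= claim.request_gi
    ]
    return sorted(fits, key=lambda v: v.capacity_gi)


pv = Volume("zhou-nginx-pv", "nfs", frozenset({"ReadWriteMany"}), 5)
pvc = Claim("zhou-nginx-pvc", "nfs", frozenset({"ReadWriteMany"}), 1)

if __name__ == "__main__":
    print([v.name for v in matching_volumes(pvc, [pv])])
```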

5.5 Create pods on master that use the PVC

[root@master pod]# vim pv_pod.yaml

apiVersion: apps/v1
kind: Deployment                  #create the pods through a Deployment controller
metadata:
  name: nginx-deployment          #name of the Deployment
  labels:
    app: zhou-nginx
spec:
  replicas: 40                    #run 40 replicas
  selector:
    matchLabels:
      app: zhou-nginx
  template:                       #pod template used to create each replica
    metadata:
      labels:
        app: zhou-nginx
    spec:
      volumes:
        - name: zhou-pv-storage-nfs
          persistentVolumeClaim:
            claimName: zhou-nginx-pvc   #use the PVC created above
      containers:
        - name: zhou-pv-container-nfs   #container name
          image: zhouxin_nginx:1.0      #the custom image built earlier
          ports:
            - containerPort: 80         #port the application listens on inside the container
              name: "http-server"
          volumeMounts:
            - mountPath: "/usr/local/nginx1/html"   #mount point in the container: the html directory of the compiled nginx
              name: zhou-pv-storage-nfs

Apply and check:

[root@master pod]#kubectl apply -f pv_pod.yaml

[root@master pod]# kubectl get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

nginx-deployment 40/40 40 40 38m

Test:

[root@master pod]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deployment-cf666f45f-2gjw7 1/1 Running 0 39m 10.244.2.130 node2

nginx-deployment-cf666f45f-2lgh6 1/1 Running 0 39m 10.244.3.122 node3

nginx-deployment-cf666f45f-2p9hh 1/1 Running 0 39m 10.244.3.118 node3

nginx-deployment-cf666f45f-2qbcc 1/1 Running 0 39m 10.244.2.132 node2

nginx-deployment-cf666f45f-59jzb 1/1 Running 0 39m 10.244.1.123 node1

nginx-deployment-cf666f45f-5pvzw 1/1 Running 0 39m 10.244.1.118 node1

nginx-deployment-cf666f45f-646ts 1/1 Running 0 39m 10.244.3.119 node3

....

....

....

nginx-deployment-cf666f45f-zs7wp 1/1 Running 0 39m 10.244.1.129 node1

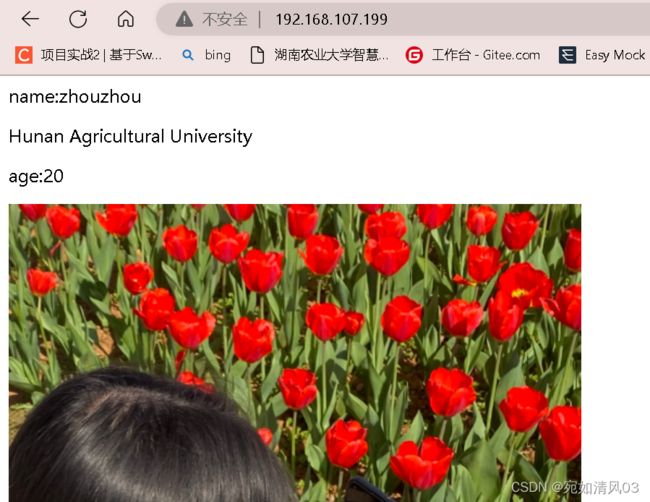

[root@master pod]# curl 10.244.2.130

welcome!

name:zhouxin

Hunan Agricultural University

age: 20
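With 40 replicas, the -o wide listing makes it hard to see how the scheduler spread the pods. Only node1, node2 and node3 appear, presumably because kubeadm taints the master so that ordinary pods are not scheduled there.

Below is a minimal Python sketch that counts the pods per node. pods_per_node is a hypothetical helper. It assumes its input comes from kubectl get pod -l app=zhou-nginx -o custom-columns=NODE:.spec.nodeName,PHASE:.status.phase --no-headers, which avoids depending on the -o wide column layout.

```python
from collections import Counter


def pods_per_node(custom_columns_output):
    """Count Running pods per node from "NODE PHASE" lines."""
    counts = Counter()
    for line in custom_columns_output.splitlines():
        fields = line.split()
        if len(fields) == 2 and fields[1] == "Running":
            counts[fields[0]] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical sample in the custom-columns format described above.
    sample = "node2 Running\nnode3 Running\nnode3 Running\nnode1 Pending\n"
    print(pods_per_node(sample))
```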

5.6 Publish the pods through a Service

[root@master pod]# vim my_service.yaml

apiVersion: v1
kind: Service
metadata:
  name: my-nginx-nfs              #name of the Service
  labels:
    run: my-nginx-nfs
spec:
  type: NodePort
  ports:
    - port: 8070
      targetPort: 80
      protocol: TCP
      name: http
  selector:
    app: zhou-nginx               #must be app, matching the pod labels in pv_pod.yaml; some examples use run, so don't mix them up

Apply and check:

[root@master pod]# kubectl apply -f my_service.yaml

[root@master pod]# kubectl get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.1.0.1 443/TCP 28h

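my-nginx-nfs NodePort 10.1.11.160 8070:30536/TCP 41m    #30536 is the port exposed on the host; use it when verifying in a browser

5.7 Verify the result

Accessing port 30536 on any of the 4 k8s cluster servers in a browser shows the custom page stored on nfs-server.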
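The Service involves three different ports:

- port (8070) is the Service's port on its ClusterIP.
- targetPort (80) is where nginx listens inside the pods.
- nodePort (30536) is opened on every node. It was picked automatically because the manifest does not set one, and it is why any of the four machines answers.

The small Python sketch below lists the URLs that should reach the pods. service_urls is hypothetical, and the range check assumes kube-apiserver's default --service-node-port-range.

```python
# kube-apiserver's default --service-node-port-range is 30000-32767.
NODEPORT_RANGE = range(30000, 32768)

# Addresses of the four k8s machines (from the server list).
K8S_NODES = ["192.168.107.24", "192.168.107.25", "192.168.107.26", "192.168.107.27"]


def service_urls(node_port, cluster_ip, port):
    """Return (external URLs via the nodePort, internal URL via the ClusterIP)."""
    if node_port not in NODEPORT_RANGE:
        raise ValueError(f"nodePort {node_port} is outside the default range")
    external = [f"http://{ip}:{node_port}/" for ip in K8S_NODES]
    internal = f"http://{cluster_ip}:{port}/"   # reachable only from the cluster's nodes and pods
    return external, internal


if __name__ == "__main__":
    ext, internal = service_urls(30536, "10.1.11.160", 8070)
    print(*ext, internal, sep="\n")
```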

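6. Dual-VIP load balancing and high availability with Nginx + keepalived

6.1 Implement load balancing

6.1.1 Write a one-click script to compile and install nginx

Do this on both load balancers.

The script: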

#!/bin/bash
#install the packages needed to resolve the build dependencies
yum -y install zlib zlib-devel openssl openssl-devel pcre pcre-devel gcc gcc-c++ autoconf automake make psmisc net-tools lsof vim wget
#create the user and group zhouzhou; -s /sbin/nologin means the account can only run the service, not log in
id zhouzhou || useradd zhouzhou -s /sbin/nologin
#download the nginx source
mkdir /zhou_nginx -p
cd /zhou_nginx
wget http://nginx.org/download/nginx-1.21.1.tar.gz
#unpack the source
tar xf nginx-1.21.1.tar.gz
#enter the unpacked directory
cd nginx-1.21.1
#configure before compiling
./configure --prefix=/usr/local/zhou_nginx --user=zhouzhou --with-http_ssl_module --with-threads --with-http_v2_module --with-http_stub_status_module --with-stream
#compile with 2 parallel jobs, which is a bit faster
make -j 2
#install
make install
#update PATH; single quotes keep $PATH and ${NGINX_PATH} unexpanded until /etc/profile is read at login
echo 'export NGINX_PATH=/usr/local/zhou_nginx' >>/etc/profile
echo 'export PATH=$PATH:${NGINX_PATH}/sbin' >>/etc/profile
#reload the profile (this only affects the shell running this script)
source /etc/profile
#start nginx at boot
chmod +x /etc/rc.d/rc.local
echo "/usr/local/zhou_nginx/sbin/nginx" >>/etc/rc.local

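Run the install script:

[root@load-balancer2 nginx]# bash onekey_install_nginx.sh

Start nginx. The PATH change only applies to new login shells, so log in again or run source /etc/profile first: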

[root@load-balancer2 nginx]# nginx

[root@load-balancer1 sbin]# ps aux|grep nginx

root 4423 0.0 0.1 46220 1160 ? Ss 12:01 0:00 nginx: master process nginx

zhouzhou 4424 0.0 0.1 46680 1920 ? S 12:01 0:00 nginx: worker process

root 4434 0.0 0.0 112824 988 pts/0 S+ 12:08 0:00 grep --color=auto nginx

6.1.2 Configure load balancing in nginx

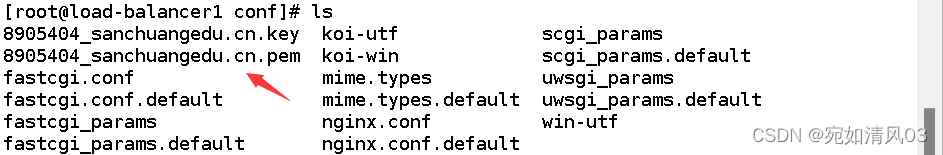

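To make configuring the HTTPS service easier later, first copy the certificate files into the same directory as nginx.conf on both load balancers.

They were uploaded with Xftp.

Then configure load balancing in nginx.

First load balancer: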

[root@load-balancer1 sbin]# cd /usr/local/zhou_nginx

[root@load-balancer1 zhou_nginx]# ls

client_body_temp fastcgi_temp logs sbin uwsgi_temp

conf html proxy_temp scgi_temp

[root@load-balancer1 zhou_nginx]# cd conf

[root@load-balancer1 conf]# ls

8905404_sanchuangedu.cn.key koi-utf scgi_params

8905404_sanchuangedu.cn.pem koi-win scgi_params.default

fastcgi.conf mime.types uwsgi_params

fastcgi.conf.default mime.types.default uwsgi_params.default

fastcgi_params nginx.conf win-utf

fastcgi_params.default nginx.conf.default

[root@load-balancer1 conf]# vim nginx.conf

#error_log logs/error.log info;

#pid logs/nginx.pid;

events {

worker_connections 1024;

}

http {

include mime.types;

default_type application/octet-stream;

#log_format main '$remote_addr - $remote_user [$time_local] "$request" '

# '$status $body_bytes_sent "$http_referer" '

# '"$http_user_agent" "$http_x_forwarded_for"';

#access_log logs/access.log main;

sendfile on;

#tcp_nopush on;

#keepalive_timeout 0;

keepalive_timeout 65;

#gzip on;

upstream zhouweb{ #define an upstream (load-balancing group) named zhouweb

server 192.168.107.24:30536;

server 192.168.107.25:30536;

server 192.168.107.26:30536;

server 192.168.107.27:30536;

}

server {

listen 80;

server_name www.zhouxin1.com; #set the domain name to www.zhouxin1.com

location / {

proxy_pass http://zhouweb; #forward requests to the zhouweb upstream group

}

}

#configure the HTTPS service

server {

listen 443 ssl;

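server_name zhouxin-https1.com; #set the domain name to zhouxin-https1.com

ssl_certificate 8905404_sanchuangedu.cn.pem; #certificate file; this relative path is resolved against the conf directory, so the file must sit next to nginx.conf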

ssl_certificate_key 8905404_sanchuangedu.cn.key;

ssl_session_cache shared:SSL:1m;

ssl_session_timeout 5m;

ssl_ciphers HIGH:!aNULL:!MD5;

ssl_prefer_server_ciphers on;

location / {

proxy_pass http://zhouweb; #forward requests to the zhouweb upstream group

}

}

}

[root@load-balancer1 conf]# nginx -s reload    #reload the configuration file

[root@load-balancer1 conf]# ps aux|grep nginx

root 4423 0.0 0.2 46264 2068 ? Ss 12:01 0:00 nginx: master process nginx

zhouzhou 17221 0.0 0.2 46700 2192 ? S 12:40 0:00 nginx: worker process

root 17246 0.0 0.0 112824 988 pts/0 S+ 13:01 0:00 grep --color=auto nginx
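The upstream zhouweb block uses nginx's default weighted round-robin. With equal weights and healthy servers, requests rotate through the four NodePorts in order; without a zone directive, each worker process keeps its own rotation. Each NodePort then hands the request to kube-proxy, which in its default iptables mode picks a backend pod at random. So the pod that serves a given request cannot be predicted from the rotation alone.

The following Python sketch models only the first level. round_robin is a hypothetical helper.

```python
from collections import Counter
from itertools import cycle, islice

# The four servers in the zhouweb upstream block.
BACKENDS = [
    "192.168.107.24:30536",
    "192.168.107.25:30536",
    "192.168.107.26:30536",
    "192.168.107.27:30536",
]


def round_robin(backends, n_requests):
    """Assign n_requests to backends in turn, as equal-weight round-robin does."""
    return list(islice(cycle(backends), n_requests))


if __name__ == "__main__":
    print(Counter(round_robin(BACKENDS, 10)))
```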

Second load balancer:

[root@load-balancer2 sbin]# cd /usr/local/zhou_nginx

[root@load-balancer2 zhou_nginx]# ls

client_body_temp fastcgi_temp logs sbin uwsgi_temp

conf html proxy_temp scgi_temp

[root@load-balancer2 zhou_nginx]# cd conf

[root@load-balancer2 conf]# ls

8905404_sanchuangedu.cn.key koi-utf scgi_params

8905404_sanchuangedu.cn.pem koi-win scgi_params.default

fastcgi.conf mime.types uwsgi_params

fastcgi.conf.default mime.types.default uwsgi_params.default

fastcgi_params nginx.conf win-utf

fastcgi_params.default nginx.conf.default

[root@load-balancer2 conf]# vim nginx.conf

#error_log logs/error.log info;

#pid logs/nginx.pid;

events {

worker_connections 1024;

}

http {

include mime.types;

default_type application/octet-stream;

#log_format main '$remote_addr - $remote_user [$time_local] "$request" '

# '$status $body_bytes_sent "$http_referer" '

# '"$http_user_agent" "$http_x_forwarded_for"';

#access_log logs/access.log main;

sendfile on;

#tcp_nopush on;

#keepalive_timeout 0;

keepalive_timeout 65;

#gzip on;

upstream zhouweb{ #define an upstream (load-balancing group) named zhouweb

server 192.168.107.24:30536;

server 192.168.107.25:30536;

server 192.168.107.26:30536;

server 192.168.107.27:30536;

}

server {

listen 80;

server_name www.zhouxin2.com; #set the domain name to www.zhouxin2.com

location / {

proxy_pass http://zhouweb; #forward requests to the zhouweb upstream group

}

}

#configure the HTTPS service

server {

listen 443 ssl;

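server_name zhouxin-https2.com; #set the domain name to zhouxin-https2.com

ssl_certificate 8905404_sanchuangedu.cn.pem; #certificate file; this relative path is resolved against the conf directory, so the file must sit next to nginx.conf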

ssl_certificate_key 8905404_sanchuangedu.cn.key;

ssl_session_cache shared:SSL:1m;

ssl_session_timeout 5m;

ssl_ciphers HIGH:!aNULL:!MD5;

ssl_prefer_server_ciphers on;

location / {

proxy_pass http://zhouweb; #forward requests to the zhouweb upstream group

}

}

}

[root@load-balancer2 conf]# nginx -s reload    #reload the configuration file

[root@load-balancer2 conf]# ps aux|grep nginx

root 1960 0.0 0.2 47380 2808 ? Ss 20:11 0:00 nginx: master process nginx

zhouzhou 2274 0.0 0.2 47780 2556 ? S 20:42 0:00 nginx: worker process

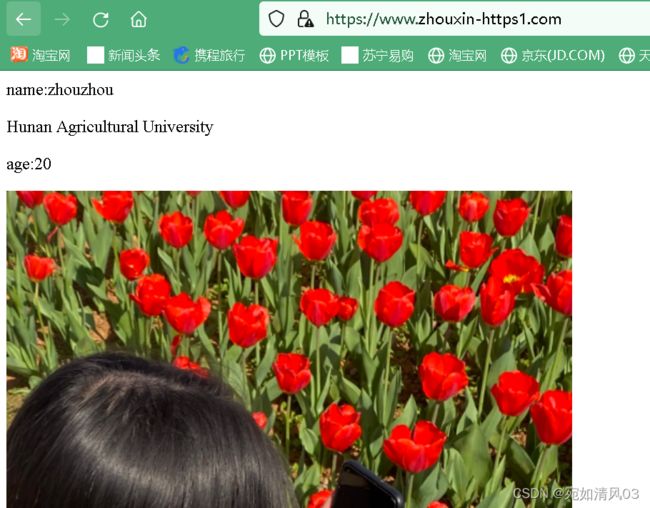

root 2278 0.0 0.0 112824 988 pts/0 S+ 20:54 0:00 grep --color=auto nginx

6.1.3 Check the result

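First edit the Windows hosts file, located at C:\Windows\System32\drivers\etc\hosts.

Copy the hosts file to the desktop, edit it there, then put it back in the original path to replace the original hosts file.

Then access the sites in a browser.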
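The actual hosts entries are not shown in the text. Presumably each domain points at the load balancer whose nginx.conf declares it. The following sketch produces such lines under that assumption; the DOMAINS mapping is hypothetical.

```python
# Assumed mapping (not shown in the original text): each domain points at the
# load balancer whose nginx.conf declares it.
DOMAINS = {
    "www.zhouxin1.com": "192.168.107.20",
    "zhouxin-https1.com": "192.168.107.20",
    "www.zhouxin2.com": "192.168.107.21",
    "zhouxin-https2.com": "192.168.107.21",
}


def hosts_entries(mapping):
    """Format a {domain: ip} mapping as hosts-file lines ("IP domain")."""
    return "\n".join(f"{ip} {domain}" for domain, ip in mapping.items())


if __name__ == "__main__":
    print(hosts_entries(DOMAINS))
```

Once keepalived is running (section 6.2), the same domains could point at the VIPs 192.168.107.188 and 192.168.107.199 instead. The certificate files are named after sanchuangedu.cn, so a browser will presumably warn about a name mismatch on the zhouxin-https domains.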

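6.1.4 Inspect how requests are distributed

Use the packet-capture tool tcpdump:

[root@load-balancer1 conf]# yum install tcpdump -y

6.2 High availability with keepalived

6.2.1 Install keepalived

On both load-balancing servers, install keepalived and enable it at boot: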

[root@load-balancer1 conf]# yum install keepalived -y

[root@load-balancer1 conf]# systemctl enable keepalived

6.2.2 Configure keepalived.conf

First load balancer:

[root@load-balancer1 conf]# cd /etc/keepalived

[root@load-balancer1 keepalived]# ls

keepalived.conf

[root@load-balancer1 keepalived]# vim keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

[email protected]

[email protected]

[email protected]

}

notification_email_from [email protected]

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

#vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

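vrrp_instance VI_1 { #define a VRRP instance named VI_1 (the first VRRP instance)

state MASTER #act as the master for this instance

interface ens33 #interface to listen on; the VIP is bound to ens33

virtual_router_id 66 #virtual router ID; must be the same on both machines for this instance

priority 120 #priority; the higher value wins the MASTER election

advert_int 1 #interval between advertisements: 1 second

authentication {

auth_type PASS #password authentication

auth_pass 1111 #the password

}

virtual_ipaddress { #the VIP (virtual IP address)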

192.168.107.188

}

}

vrrp_instance VI_2 {

state BACKUP

interface ens33

virtual_router_id 88

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.107.199

}

}

Second load balancer:

[root@load-balancer2 conf]# cd /etc/keepalived

[root@load-balancer2 keepalived]# ls

keepalived.conf

[root@load-balancer2 keepalived]# vim keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

[email protected]

[email protected]

[email protected]

}

notification_email_from [email protected]

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

#vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 66

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.107.188

}

}

vrrp_instance VI_2 {

state MASTER

interface ens33

virtual_router_id 88

priority 120

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.107.199

}

}

6.2.3 Restart the keepalived service

First load balancer (the virtual IP should now appear on ens33):

[root@load-balancer1 keepalived]# service keepalived restart

Redirecting to /bin/systemctl restart keepalived.service

[root@load-balancer1 keepalived]# ip add

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:93:3d:90 brd ff:ff:ff:ff:ff:ff

inet 192.168.107.20/24 brd 192.168.107.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

Second load balancer:

[root@load-balancer2 keepalived]# service keepalived restart

Redirecting to /bin/systemctl restart keepalived.service

[root@load-balancer2 keepalived]# ip add

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:4b:2b:c6 brd ff:ff:ff:ff:ff:ff

inet 192.168.107.21/24 brd 192.168.107.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.107.199/32 scope global ens33

valid_lft forever preferred_lft forever

Test that if one of the machines goes down, its VIP floats over to the other machine:

[root@load-balancer1 nginx]# service keepalived stop

Redirecting to /bin/systemctl stop keepalived.service

[root@load-balancer1 nginx]# ip add

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:93:3d:90 brd ff:ff:ff:ff:ff:ff

inet 192.168.107.20/24 brd 192.168.107.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe93:3d90/64 scope link

valid_lft forever preferred_lft forever

[root@load-balancer2 nginx]# ip add

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:4b:2b:c6 brd ff:ff:ff:ff:ff:ff

inet 192.168.107.21/24 brd 192.168.107.255 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.107.199/32 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.107.188/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe4b:2bc6/64 scope link

valid_lft forever preferred_lft forever

6.2.4 Test access

Access the virtual IP addresses in a browser.
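The failover test above follows from the priorities. For each VRRP instance, the live machine with the highest priority becomes MASTER and holds that VIP. Each load balancer therefore normally carries one VIP, and the survivor carries both after a failure. keepalived preempts by default, so .188 should move back to load-balancer1 once its keepalived restarts.

Here is a simplified Python model of that election; the names are hypothetical.

```python
# Priorities from the two keepalived.conf files above.
PRIORITIES = {
    "VI_1 (192.168.107.188)": {"load-balancer1": 120, "load-balancer2": 100},
    "VI_2 (192.168.107.199)": {"load-balancer1": 100, "load-balancer2": 120},
}


def vip_owners(alive):
    """Return which live load balancer holds each VIP (highest priority wins).

    Simplified: ignores tie-breaking by IP address and nopreempt settings.
    """
    owners = {}
    for instance, priorities in PRIORITIES.items():
        candidates = {n: p for n, p in priorities.items() if n in alive}
        owners[instance] = max(candidates, key=candidates.get) if candidates else None
    return owners


if __name__ == "__main__":
    print(vip_owners({"load-balancer1", "load-balancer2"}))
    print(vip_owners({"load-balancer2"}))   # load-balancer1's keepalived stopped
```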

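6.3 Stress testing

Run an ab stress test against the cluster from another machine.

Install ab (provided by the httpd-tools package):

[root@localhost ~]# yum install httpd-tools -y

[root@localhost ~]# ab -c 20 -n 35000 -r http://192.168.107.20/    #20 concurrent connections, 35000 requests in total

Check the throughput (the Requests per second line in ab's output).

After several runs, the highest throughput observed was about 4200 requests per second.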
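For reference, the throughput ab prints is the number of completed requests divided by the total time taken, and its two "Time per request" lines follow from the same numbers. Below is a small Python worked example at the reported ~4200 requests/s. ab_stats is a hypothetical helper, and the ~8.3 s duration is derived, not measured.

The command also targets 192.168.107.20, load-balancer1's own address, rather than a VIP. So the test does not exercise the keepalived failover path.

```python
def ab_stats(requests, concurrency, seconds):
    """Recompute ab's headline figures from the request count, the -c
    concurrency, and the total time taken (in seconds)."""
    rps = requests / seconds                                        # "Requests per second"
    time_per_request_ms = concurrency * seconds * 1000 / requests   # "Time per request (mean)"
    across_all_ms = seconds * 1000 / requests                       # "(mean, across all concurrent requests)"
    return rps, time_per_request_ms, across_all_ms


if __name__ == "__main__":
    # At about 4200 requests/s, 35000 requests take roughly 8.3 seconds.
    seconds = 35000 / 4200
    rps, per_req, across = ab_stats(35000, 20, seconds)
    print(f"{rps:.0f} req/s, {per_req:.2f} ms per request, {across:.3f} ms across all")
```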

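6.4 Attempt to tune the whole web cluster

Performance can be tuned through kernel parameters and nginx parameters.

Here the ulimit command raises the limit on open file descriptors. It only applies to the current shell and the processes started from it, so nginx has to be restarted from that shell to pick up the new limit: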

[root@load-balancer1 conf]# ulimit -n 10000
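ulimit -n sets the soft limit. Without root privileges, the soft limit can be raised only up to the hard limit. For a setting tied to nginx itself, its worker_rlimit_nofile directive is the usual place.

The same soft/hard mechanism is shown below from Python with the standard resource module (Unix only). raise_nofile is a hypothetical helper.

```python
import resource


def raise_nofile(target):
    """Raise this process's soft RLIMIT_NOFILE to `target`, capped at the hard
    limit (what ulimit -n does for a shell); never lowers it. Returns (soft, hard)."""
    soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    if soft == resource.RLIM_INFINITY:
        return soft, hard                      # already unlimited
    new_soft = target if hard == resource.RLIM_INFINITY else min(target, hard)
    if new_soft > soft:
        resource.setrlimit(resource.RLIMIT_NOFILE, (new_soft, hard))
    return resource.getrlimit(resource.RLIMIT_NOFILE)


if __name__ == "__main__":
    print("before:", resource.getrlimit(resource.RLIMIT_NOFILE))
    print("after: ", raise_nofile(10000))
```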

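7. Monitor the k8s cluster with Prometheus and display the data with Grafana

This part follows the blog post "Prometheus监控K8S" (Monitoring K8S with Prometheus).

To monitor node resources, deploy node_exporter on each node. node_exporter is a Linux metrics collector; once it runs on a node, it collects that node's CPU, memory, network I/O and other metrics.

To monitor containers, k8s provides the cAdvisor collector. It is built into the kubelet and exposes metrics for pods and containers, so it needs no separate deployment. Prometheus only needs to know how to reach it.

To monitor k8s resource objects, deploy the kube-state-metrics service. It periodically reads the state of these objects from the API server and exposes it as metrics, which Prometheus scrapes and stores. Alerts can be sent to receivers through Alertmanager, and the data is visualized with Grafana.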
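All of these collectors expose metrics as plain text over HTTP, and Prometheus scrapes them on its scrape_interval. For node-exporter the endpoint is http://<node-ip>:9100/metrics; once its NodePort Service below exists, it is also reachable on port 31672 of the nodes.

Here is a minimal Python sketch of reading that text format. parse_metrics is hypothetical and handles only simple lines, and the sample values are made up.

```python
def parse_metrics(text):
    """Parse simple Prometheus text-format samples into {series: value}.

    Handles 'name value' and 'name{labels} value' lines and skips # comments;
    trailing timestamps and escaped characters in label values are not handled.
    """
    samples = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        series, _, value = line.rpartition(" ")
        samples[series] = float(value)
    return samples


if __name__ == "__main__":
    sample = (
        "# HELP node_load1 1m load average.\n"
        "# TYPE node_load1 gauge\n"
        "node_load1 0.25\n"
        'node_cpu_seconds_total{cpu="0",mode="idle"} 12345.67\n'
    )
    print(parse_metrics(sample))
```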

7.1 Set up Prometheus to monitor the k8s cluster

7.1.1 Deploy node-exporter as a DaemonSet

[root@master /]# mkdir /prometheus

[root@master /]# cd /prometheus

[root@master prometheus]# vim node_exporter.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: kube-system
  labels:
    k8s-app: node-exporter
spec:
  selector:
    matchLabels:
      k8s-app: node-exporter
  template:
    metadata:
      labels:
        k8s-app: node-exporter
    spec:
      containers:
        - image: prom/node-exporter
          name: node-exporter
          ports:
            - containerPort: 9100
              protocol: TCP
              name: http
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: node-exporter
  name: node-exporter
  namespace: kube-system
spec:
  ports:
    - name: http
      port: 9100
      nodePort: 31672
      protocol: TCP
  type: NodePort
  selector:
    k8s-app: node-exporter

Apply it:

[root@master prometheus]# kubectl apply -f node_exporter.yaml

daemonset.apps/node-exporter created

service/node-exporter created

7.1.2 Deploy Prometheus

[root@master prometheus]# vim prometheus_rbac.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus
rules:
  - apiGroups: [""]
    resources:
      - nodes
      - nodes/proxy
      - services
      - endpoints
      - pods
    verbs: ["get", "list", "watch"]
  - apiGroups:
      - extensions
      - networking.k8s.io     #Ingress moved here; the extensions group no longer serves it since k8s 1.22
    resources:
      - ingresses
    verbs: ["get", "list", "watch"]
  - nonResourceURLs: ["/metrics"]
    verbs: ["get"]
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
  - kind: ServiceAccount
    name: prometheus
    namespace: kube-system

[root@master prometheus]# vim prometheus_comfig.yaml

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: kube-system
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      evaluation_interval: 15s
    scrape_configs:
    - job_name: 'kubernetes-apiservers'
      kubernetes_sd_configs:
      - role: endpoints
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
        action: keep
        regex: default;kubernetes;https
    - job_name: 'kubernetes-nodes'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics
    - job_name: 'kubernetes-cadvisor'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
    - job_name: 'kubernetes-service-endpoints'
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
        action: replace
        target_label: __scheme__
        regex: (https?)
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
        action: replace
        target_label: __address__
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        action: replace
        target_label: kubernetes_name
    - job_name: 'kubernetes-services'
      kubernetes_sd_configs:
      - role: service
      metrics_path: /probe
      params:
        module: [http_2xx]
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_probe]
        action: keep
        regex: true
      - source_labels: [__address__]
        target_label: __param_target
      - target_label: __address__
        replacement: blackbox-exporter.example.com:9115
      - source_labels: [__param_target]
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        target_label: kubernetes_name
    - job_name: 'kubernetes-ingresses'
      kubernetes_sd_configs:
      - role: ingress
      relabel_configs:
      - source_labels: [__meta_kubernetes_ingress_annotation_prometheus_io_probe]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_ingress_scheme,__address__,__meta_kubernetes_ingress_path]
        regex: (.+);(.+);(.+)
        replacement: ${1}://${2}${3}
        target_label: __param_target
      - target_label: __address__
        replacement: blackbox-exporter.example.com:9115
      - source_labels: [__param_target]
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_ingress_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_ingress_name]
        target_label: kubernetes_name
    - job_name: 'kubernetes-pods'
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
        action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        target_label: __address__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_pod_name]
        action: replace
        target_label: kubernetes_pod_name
```

```bash
[root@master prometheus]# vim prometheus_deploy.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    name: prometheus-deployment
  name: prometheus
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      containers:
        - image: prom/prometheus:v2.0.0
          name: prometheus
          command:
            - "/bin/prometheus"
          args:
            - "--config.file=/etc/prometheus/prometheus.yml"
            - "--storage.tsdb.path=/prometheus"
            - "--storage.tsdb.retention=24h"
          ports:
            - containerPort: 9090
              protocol: TCP
          volumeMounts:
            - mountPath: "/prometheus"
              name: data
            - mountPath: "/etc/prometheus"
              name: config-volume
          resources:
            requests:
              cpu: 100m
              memory: 100Mi
            limits:
              cpu: 500m
              memory: 2500Mi
      serviceAccountName: prometheus
      volumes:
        - name: data
          emptyDir: {}
        - name: config-volume
          configMap:
            name: prometheus-config
```
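The `data` volume above is an `emptyDir`. The collected TSDB data is therefore lost whenever the Prometheus Pod is deleted or rescheduled, and retention is only 24h anyway. Because the project already runs an NFS server with PV/PVC (section 5), one option is to back `/prometheus` with a PVC instead.

A sketch of the changed `volumes` list follows:
- The claim name `prometheus-data` is hypothetical. It would need its own PV and PVC, created the same way as in section 5.
- The NFS export must be writable by the user the Prometheus container runs as.

```yaml
# Sketch: replace the emptyDir "data" volume in prometheus_deploy.yaml with a PVC
spec:
  template:
    spec:
      volumes:
        - name: data
          persistentVolumeClaim:
            claimName: prometheus-data   # hypothetical PVC, not created in this project
        - name: config-volume
          configMap:
            name: prometheus-config
```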

```bash
[root@master prometheus]# vim prometheus_service.yaml
```

```yaml
kind: Service
apiVersion: v1
metadata:
  labels:
    app: prometheus
  name: prometheus
  namespace: kube-system
spec:
  type: NodePort
  ports:
    - port: 9090
      targetPort: 9090
      nodePort: 30003
  selector:
    app: prometheus
```

Run:

```bash
[root@master prometheus]# kubectl apply -f prometheus_rbac.yaml
clusterrole.rbac.authorization.k8s.io/prometheus configured
serviceaccount/prometheus configured
clusterrolebinding.rbac.authorization.k8s.io/prometheus configured
[root@master prometheus]# kubectl apply -f prometheus_configmap.yaml
configmap/prometheus-config configured
[root@master prometheus]# kubectl apply -f prometheus_deploy.yaml
deployment.apps/prometheus created
[root@master prometheus]# kubectl apply -f prometheus_service.yaml
service/prometheus created
```

Check:

```bash
[root@master prometheus]# kubectl get service -A
NAMESPACE     NAME            TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)                  AGE
default       kubernetes      ClusterIP   10.1.0.1       <none>        443/TCP                  3d4h
default       my-nginx-nfs    NodePort    10.1.11.160    <none>        8070:30536/TCP           2d
kube-system   kube-dns        ClusterIP   10.1.0.10      <none>        53/UDP,53/TCP,9153/TCP   3d4h
kube-system   node-exporter   NodePort    10.1.245.229   <none>        9100:31672/TCP           24h
kube-system   prometheus      NodePort    10.1.60.0      <none>        9090:30003/TCP           24h
```

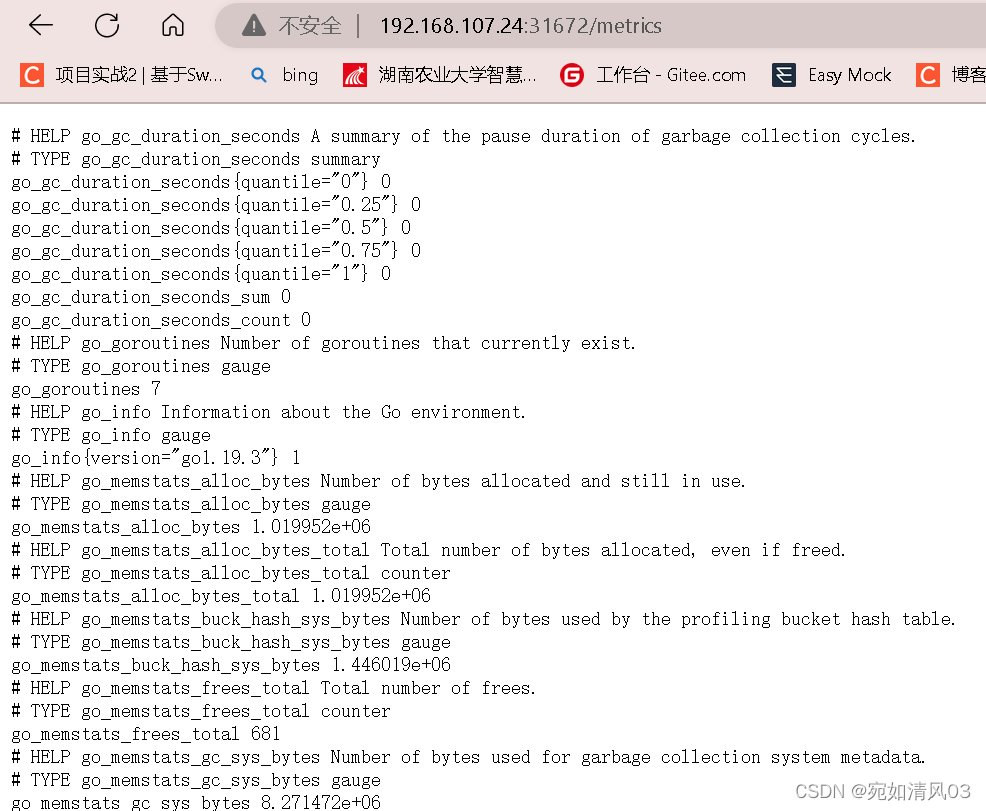

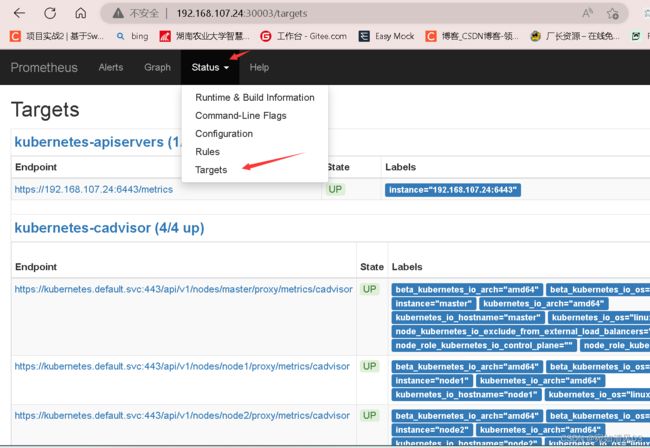

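7.1.3 Testing

Open 192.168.107.24:31672 in a browser to see the data collected by node-exporter. The metrics themselves are served under /metrics.

Open 192.168.107.24:30003 for the Prometheus web UI. Under Status > Targets you can see that Prometheus has successfully connected to the k8s apiserver.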
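The Targets page will not list node-exporter, and this is expected from the ConfigMap above. The `kubernetes-service-endpoints` job keeps only Services whose `prometheus.io/scrape` annotation is `true` (its `action: keep` rule). The node-exporter Service from 7.1.1 has no annotations, so Prometheus does not scrape it; its metrics are only visible through the NodePort.

A sketch of the annotations that would let that job pick it up is shown below. They would be added to the Service metadata in node_exporter.yaml, with the rest of the Service left unchanged. Annotation values must be quoted strings.

```yaml
# Sketch: metadata of the node-exporter Service with scrape annotations added
apiVersion: v1
kind: Service
metadata:
  name: node-exporter
  namespace: kube-system
  labels:
    k8s-app: node-exporter
  annotations:
    prometheus.io/scrape: "true"   # matched by the keep rule of the kubernetes-service-endpoints job
    prometheus.io/port: "9100"     # optional; the relabel rule rewrites __address__ to <endpoint-ip>:9100
```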

7.2 Deploy Grafana

```bash
[root@master prometheus]# vim grafana_deploy.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: grafana-core
  namespace: kube-system
  labels:
    app: grafana
    component: core
spec:
  replicas: 1
  selector:
    matchLabels:
      app: grafana
  template:
    metadata:
      labels:
        app: grafana
        component: core
    spec:
      containers:
        - image: grafana/grafana:6.1.4
          name: grafana-core
          imagePullPolicy: IfNotPresent
          resources:
            # keep request = limit to keep this container in guaranteed class
            limits:
              cpu: 100m
              memory: 100Mi
            requests:
              cpu: 100m
              memory: 100Mi
          env:
            # The following env variables set up basic auth with the default admin user and admin password.
            - name: GF_AUTH_BASIC_ENABLED
              value: "true"
            - name: GF_AUTH_ANONYMOUS_ENABLED
              value: "false"
            # - name: GF_AUTH_ANONYMOUS_ORG_ROLE
            #   value: Admin
            # does not really work, because of template variables in exported dashboards:
            # - name: GF_DASHBOARDS_JSON_ENABLED
            #   value: "true"
          readinessProbe:
            httpGet:
              path: /login
              port: 3000
            # initialDelaySeconds: 30
            # timeoutSeconds: 1
          # volumeMounts:   # no storage mounted for now
          # - name: grafana-persistent-storage
          #   mountPath: /var
      # volumes:
      # - name: grafana-persistent-storage
      #   emptyDir: {}
```

```bash
[root@master prometheus]# vim grafana_svc.yaml
```

```yaml
apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: kube-system
  labels:
    app: grafana
    component: core
spec:
  type: NodePort
  ports:
    - port: 3000
  selector:
    app: grafana
    component: core
```

```bash
[root@master prometheus]# vim grafana_ing.yaml
```

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: grafana
  namespace: kube-system
spec:
  rules:
    - host: k8s.grafana
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: grafana
                port:
                  number: 3000
```
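An Ingress object only takes effect if an Ingress controller is running in the cluster. This project does not appear to install one, which is consistent with Grafana being reached through its NodePort in 7.2.1.

If a controller such as ingress-nginx were installed, two more things would typically be needed:
- The Ingress would have to name its class, as in the fragment below.
- The host `k8s.grafana` would have to resolve to a node running the controller, for example through an `/etc/hosts` entry.

The class name `nginx` is an assumption that depends on how the controller was installed.

```yaml
# Sketch: field to add to grafana_ing.yaml (assumes an ingress-nginx controller whose IngressClass is "nginx")
spec:
  ingressClassName: nginx
```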

Run:

```bash
[root@master prometheus]# kubectl apply -f grafana_deploy.yaml
deployment.apps/grafana-core created
[root@master prometheus]# kubectl apply -f grafana_svc.yaml
service/grafana created
[root@master prometheus]# kubectl apply -f grafana_ing.yaml
ingress.networking.k8s.io/grafana created
```

Check:

```bash
[root@master ~]# kubectl get service -A
NAMESPACE     NAME            TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)                  AGE
default       kubernetes      ClusterIP   10.1.0.1       <none>        443/TCP                  3d21h
default       my-nginx-nfs    NodePort    10.1.11.160    <none>        8070:30536/TCP           2d16h
kube-system   grafana         NodePort    10.1.102.173   <none>        3000:31905/TCP           16h
kube-system   kube-dns        ClusterIP   10.1.0.10      <none>        53/UDP,53/TCP,9153/TCP   3d21h
kube-system   node-exporter   NodePort    10.1.245.229   <none>        9100:31672/TCP           40h
kube-system   prometheus      NodePort    10.1.60.0      <none>        9090:30003/TCP           40h
```
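grafana_svc.yaml does not set `nodePort`, so Kubernetes picked 31905 from the NodePort range (30000-32767 by default). A different port may be chosen if the Service is ever recreated.

To keep Grafana on a fixed port, as the Prometheus Service does with 30003, the port can be pinned explicitly. The sketch below simply reuses the 31905 observed above; any free port in the range would do.

```yaml
# Sketch: grafana_svc.yaml with an explicitly pinned NodePort
apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: kube-system
  labels:
    app: grafana
    component: core
spec:
  type: NodePort
  ports:
    - port: 3000
      targetPort: 3000
      nodePort: 31905   # value observed above; must be free and inside the NodePort range
  selector:
    app: grafana
    component: core
```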

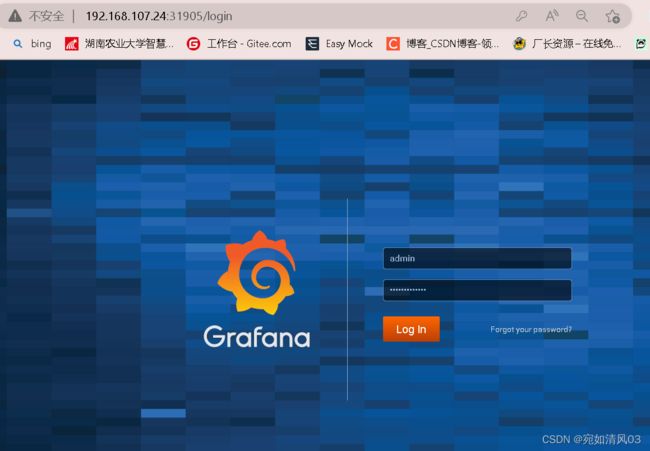

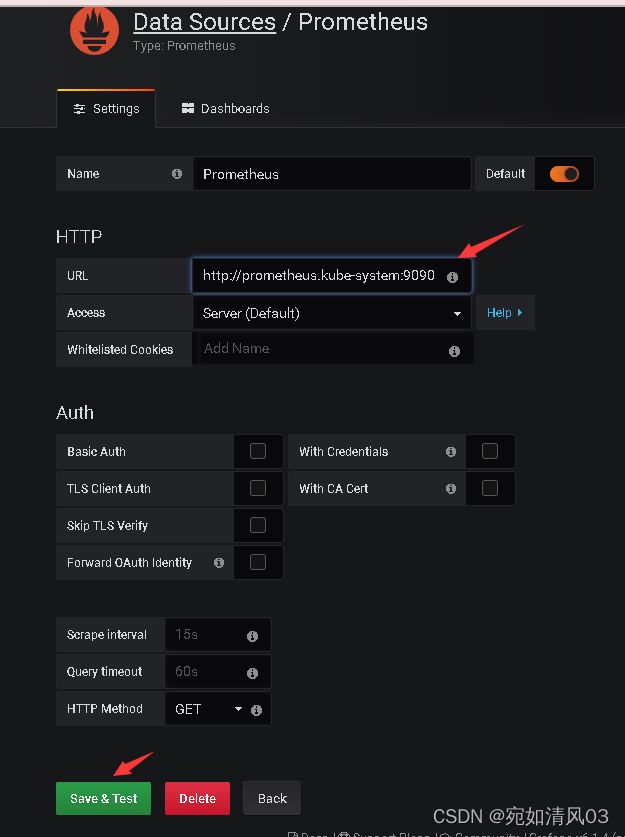

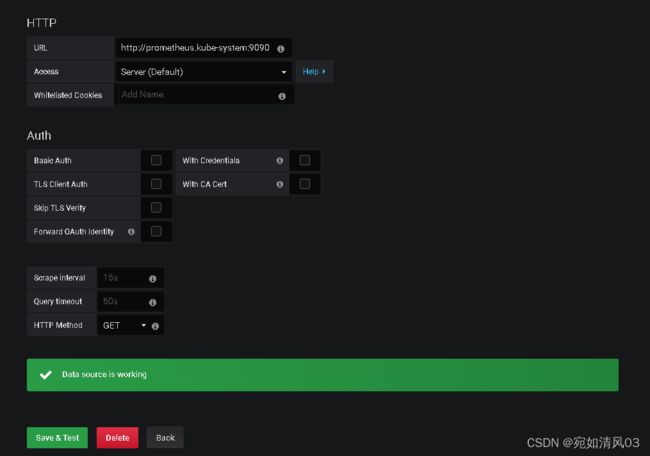

7.2.1 Testing

Open 192.168.107.24:31905 for the Grafana login page. The username and password are both admin, which are Grafana's defaults.

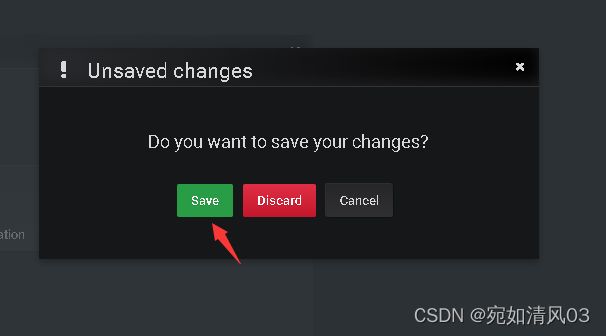

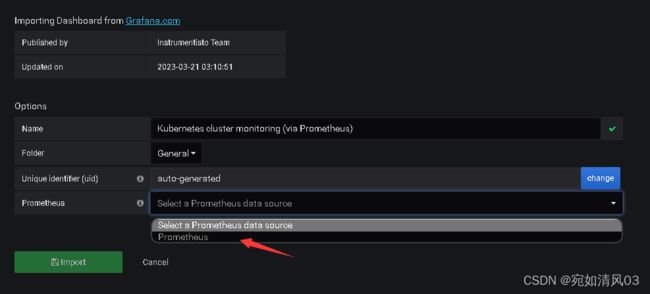

Add the Prometheus data source step by step, paying attention to the URL.

The URL must be written in the form http://service.namespace:port. Take the prometheus Service from the `kubectl get service -A` output above as an example:
- the namespace is kube-system;
- the Service name is prometheus;
- the Service port is 9090.

The URL is therefore:

http://prometheus.kube-system:9090

Dashboard templates can be found at:

https://grafana.com/grafana/dashboards/
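Instead of adding the data source by hand in the UI, Grafana can load it from a provisioning file at startup. Provisioning has been supported since Grafana 5.0, so 6.1.4 qualifies.

The sketch below assumes two things that do not exist in grafana_deploy.yaml above:
- a hypothetical file `datasource.yaml` delivered through a ConfigMap;
- that ConfigMap mounted at `/etc/grafana/provisioning/datasources/`.

```yaml
# Hypothetical datasource.yaml for /etc/grafana/provisioning/datasources/
apiVersion: 1
datasources:
  - name: Prometheus
    type: prometheus
    access: proxy                            # Grafana's backend sends the queries, so in-cluster DNS works
    url: http://prometheus.kube-system:9090  # same service.namespace:port URL as above
    isDefault: true
```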

Resulting dashboards:

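These are the detailed implementation steps for this project. If you have questions or spot any mistakes, please let me know by private message; I look forward to exchanging ideas with you.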