FATE学习:跟着日志读源码(一)日志目录结构

综述

介绍fate运行过程中产生的各个类型日志

版本说明

部署方式为KubeFATE。相关版本为:

- FATE: 1.5.1

- KubeFATE: v1.3.0

基本概念

- job:一个完整的调度单元,可以拆分成不同的task。

- task:最小的执行单元。

- component:预先定义的不同组件,具体的task执行,就是运行不同的组件。

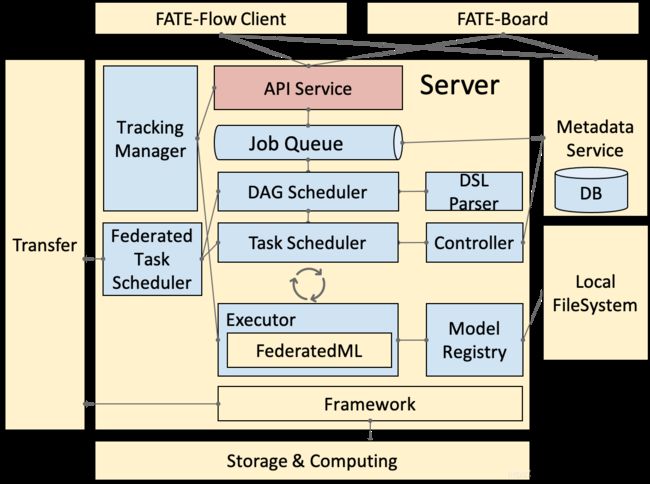

FATE框架

根据官方的架构图,在单个Party的上FATE-Flow 的架构如下

整体分成6大块:

- FATE-Flow Client: 客户端,提供给用户的交互接口,日常提交的命令

python fate_flow_client.py -f submit_job -c examples/test_hetero_lr_job_conf.json -d examples/test_hetero_lr_job_dsl.json

就是调用了客户端。

- FATE-Flow Server: 服务端,用于处理Client 提交的各类请求。

- FATE-Board: Web UI,用于监控FATE 任务的运行情况。

- Transfer : 用来处理多方联邦时的交互和通信

- Metadata Service: 对联邦过程,产生的各类Metadata进行存储、管理并提供查询

- Local FileSystem: 本地文件系统,进行持久化

- Storage & Computing: 底层存储和计算引擎

其中 FATE-Flow Server 最为复杂,

- API Service: 处理Client 提交的各类请求

- Job Queue: 异步调度的方式,所有提交的job 先进入job queue (见后文,所有job创建完毕,都是waiting状态),根据资源情况,进行下发(调度策略是啥??)

- DAG Scheduler: 每个job 会根据涉及的计算内容,解析为一个DAG(有向无环图),图中的每个节点为一个task。针对每个job,通过一个DAG Scheduler 进行该job下涉及task 的调度。

- Task Scheduler: task 在本Party的调度,涉及到跨多个Party 会调用Federated Task Scheduler 进行调度。

- Federated Task Scheduler:涉及到跨多个Party 的Task 的调度

- Executor: 联邦任务执行节点,支持不同的 Operator 容器。这里会调用Framework

- Framework: 计算框架抽象层,根据backend 的设置 调用底层的Storage & Computing,同时若为联邦任务,则和Transfer 进行通信。

- DSL Parser: 是调度的核心,通过 DSL parser 解析到一个计算任务的上下游关系及依赖等。

- Controller: 任务的控制器

- Model Registry: 模型管理器

- Tracking Manager:任务输入输出的实时追踪,包括每个 task 输出的 data 和 model。

日志分类

从FATE的框架,可以看出FATE 涉及大量的http/grpc 请求, 以及DB操作,因而按照日志的操作来源分,主要有一下三类:

- http/grpc 请求日志

- 操作DB的日志

- fate 框架运行时的日志

而从日志输出的位置来看,则可以分为如下三类:

- Console 日志

- 容器日志

- FATE Flow 日志

鉴于按照位置来看,可以更好的体现出整个执行的时序,下文会按照这个维度,来进行解析

Console 日志

控制台部分,也是就正常提交任务之后会产生的日志,例如 执行命令

python fate_flow_client.py -f upload -c examples/upload_guest.json

返回的日志为

{

"data": {

"board_url": "http://fateboard:8080/index.html#/dashboard?job_id=20210720163310959474309&role=local&party_id=0",

"job_dsl_path": "/data/projects/fate/jobs/20210720163310959474309/job_dsl.json",

"job_id": "20210720163310959474309",

"job_runtime_conf_on_party_path": "/data/projects/fate/jobs/20210720163310959474309/local/job_runtime_on_party_conf.json",

"job_runtime_conf_path": "/data/projects/fate/jobs/20210720163310959474309/job_runtime_conf.json",

"logs_directory": "/data/projects/fate/logs/20210720163310959474309",

"model_info": {

"model_id": "local-0#model",

"model_version": "20210720163310959474309"

},

"namespace": "cl",

"pipeline_dsl_path": "/data/projects/fate/jobs/20210720163310959474309/pipeline_dsl.json",

"table_name": "bgame3342_20210719",

"train_runtime_conf_path": "/data/projects/fate/jobs/20210720163310959474309/train_runtime_conf.json"

},

"jobId": "20210720163310959474309",

"retcode": 0,

"retmsg": "success"

}

包含4个部分:

- data: 提交任务的相关参数

- jobId: FATE为该job分配的id

- retcode: 提交请求的返回状态码

- retmsg: 提交请求返回的状态信息。如不成功,会有相关报错。

这里需要关注的日志信息,只有retmsg。

Server日志

这一部分日志,可以在部署fate_flow server 的容器中看到。

10.200.224.54 - - [20/Jul/2021 16:33:12] "POST /v1/party/20210720163310959474309/local/0/create HTTP/1.1" 200 -

10.200.224.54 - - [20/Jul/2021 16:33:12] "POST /v1/data/upload?%7B%22file%22:%20%22/data/projects/fate/python/cl/fc/data/predict_data/20210719/bgame3342_20210719.csv%22,%20%22head%22:%201,%20%22partition%22:%201,%20%22work_mode%22:%201,%20%22table_name%22:%20%22bgame3342_20210719%22,%20%22namespace%22:%20%22cl%22,%20%22count%22:%20752361,%20%22config%22:%20%22/data/projects/fate/python/cl/fc/config/20210719/upload_20210719_bgame3342.json%22,%20%22function%22:%20%22upload%22,%20%22drop%22:%20%221%22%7D HTTP/1.1" 200 -

10.200.224.54 - - [20/Jul/2021 16:33:14] "POST /v1/party/20210720163310959474309/local/0/resource/apply HTTP/1.1" 200 -

10.200.224.54 - - [20/Jul/2021 16:33:14] "POST /v1/party/20210720163310959474309/local/0/start HTTP/1.1" 200 -

记录了 FATE-Flow Server 收到的POST请求。

这一部分主要是federate_scheduler.py 向server 发起的POST 请求。

FATE Flow 日志

这一部分是fate运行过程中的主要产生的日志,用来排查fate job、task的运行状况。目录位于kubefate 的容器中, /data/projects/fate/logs/

其中 fate_flow 目录记录了fate 启动以来的总体的日志。 各个${jobid} 目录下,记录了各个job详细的日志。

相关处理类:python/fate_arch/common/log.py

fate_flow 目录下日志分类

总的结构类似,分成如下几个类型的日志

- DEBUG.log

- INFO.log

- ERROR.log

- WARNING.log

- peewee.log

- stat.log

- fate_flow_audit.log

- fate_flow_detect.log

- fate_flow_stat.log

- fate_flow_schedule.log

DEBUG、INFO、ERROR、WARNING

正常四个级别的日志输出

peewee.log

fate 操作数据是使用peewee 这一ORM 实现的,peewee.log 记录了相关操作的日志。关于peewee 可以参考官方文档

stat.log

记录了各param 的check ,感觉可有可无,不知道单独放一个日志的设计意图。

样例

[DEBUG] [2021-07-26 15:26:37,778] [1150092:140485761271616] - intersect_param.py[line:61]: Finish encode parameter check!

[DEBUG] [2021-07-26 15:26:37,778] [1150092:140485761271616] - intersect_param.py[line:184]: Finish intersect parameter check!

[DEBUG] [2021-07-26 15:26:37,778] [1150092:140485761271616] - intersect_param.py[line:61]: Finish encode parameter check!

[DEBUG] [2021-07-26 15:26:37,778] [1150092:140485761271616] - intersect_param.py[line:184]: Finish intersect parameter check!

[DEBUG] [2021-07-26 15:26:37,780] [1150092:140485761271616] - encrypt_param.py[line:71]: Finish encrypt parameter check!

[DEBUG] [2021-07-26 15:26:37,780] [1150092:140485761271616] - predict_param.py[line:44]: Finish predict parameter check!

[DEBUG] [2021-07-26 15:26:37,781] [1150092:140485761271616] - encrypt_param.py[line:71]: Finish encrypt parameter check!

[DEBUG] [2021-07-26 15:26:37,781] [1150092:140485761271616] - predict_param.py[line:44]: Finish predict parameter check!

[DEBUG] [2021-07-26 15:26:37,782] [1150092:140485761271616] - encrypt_param.py[line:71]: Finish encrypt parameter check!

[DEBUG] [2021-07-26 15:26:37,782] [1150092:140485761271616] - predict_param.py[line:44]: Finish predict parameter check!

fate_flow_audit.log

记录发起的grpc 请求。函数为 audit_logger。有调用的地方在

python/fate_flow/utils/api_utils.py 中的

federated_coordination_on_grpc

federated_coordination_on_http

forward_api

和 python/fate_flow/utils/grpc_utils.py 中的

unaryCall

日志样例:

[INFO] [2021-05-18 08:00:26,886] [1:140215515608832] - grpc_utils.py[line:93]: rpc receive: header {

task {

taskId: "2021051808002667189911"

}

src {

name: "2021051808002667189911"

partyId: "9999"

role: "fateflow"

callback {

ip: "10.200.96.205"

port: 9360

}

}

dst {

name: "2021051808002667189911"

partyId: "10000"

role: "fateflow"

}

command {

name: "fateflow"

}

operator: "POST"

conf {

overallTimeout: 30000

}

}

body {

key: "/v1/party/2021051808002667189911/host/10000/create"

value: "{\"name\": \"\", \"description\": \"\", \"tag\": \"\", \"is_initiator\": false, \"progress\": 0, \"ready_signal\": false, \"cancel_signal\": false, \"rerun_signal\": false, \"end_scheduling_updates\": 0, \"cores\": 0, \"memory\": 0, \"remaining_cores\": 0, \"remaining_memory\": 0, \"resource_in_use\": false, \"job_id\": \"2021051808002667189911\", \"dsl\": {\"components\": {\"dataio_0\": {\"module\": \"DataIO\", \"input\": {\"model\": [\"pipeline.dataio_0.dataio\"], \"data\": {\"data\": [\"args.eval_data\"]}}, \"output\": {\"data\": [\"train\"]}, \"CodePath\": \"federatedml/util/data_io.py/DataIO\"}, \"intersection_0\": {\"module\": \"Intersection\", \"output\": {\"data\": [\"train\"]}, \"input\": {\"data\": {\"data\": [\"dataio_0.train\"]}}, \"CodePath\": \"federatedml/statistic/intersect/intersect_model.py/IntersectGuest\"}, \"secureboost_0\": {\"module\": \"HeteroSecureBoost\", \"input\": {\"model\": [\"pipeline.secureboost_0.train\"], \"data\": {\"test_data\": [\"intersection_0.train\"]}}, \"output\": {\"data\": [\"train\"]}, \"CodePath\": \"federatedml/ensemble/boosting/hetero/hetero_secureboost_guest.py/HeteroSecureBoostingTreeGuest\"}}}, \"train_runtime_conf\": {\"initiator\": {\"role\": \"guest\", \"party_id\": 9999}, \"job_parameters\": {\"job_type\": \"train\", \"work_mode\": 1, \"backend\": 0, \"computing_engine\": \"EGGROLL\", \"federation_engine\": \"EGGROLL\", \"storage_engine\": \"EGGROLL\", \"engines_address\": {}, \"federated_mode\": \"MULTIPLE\", \"task_cores\": 2, \"task_parallelism\": 1, \"computing_partitions\": 2, \"federated_status_collect_type\": \"PULL\", \"model_id\": \"guest-9999#host-10000#model\", \"model_version\": \"2021051807524507002410\", \"eggroll_run\": {}, \"spark_run\": {}, \"rabbitmq_run\": {}, \"adaptation_parameters\": {\"task_nodes\": 1, \"task_cores_per_node\": 2, \"task_memory_per_node\": 0, \"request_task_cores\": 2, \"if_initiator_baseline\": true}}, \"role\": {\"guest\": [9999], \"host\": [10000]}, \"role_parameters\": {\"guest\": {\"args\": {\"data\": {\"train_data\": [{\"name\": \"breast_hetero_guest\", \"namespace\": \"experiment\"}], \"eval_data\": [{\"name\": \"breast_hetero_guest\", \"namespace\": \"experiment\"}]}}, \"dataio_0\": {\"with_label\": [true], \"label_name\": [\"y\"], \"label_type\": [\"int\"], \"output_format\": [\"dense\"]}}, \"host\": {\"args\": {\"data\": {\"train_data\": [{\"name\": \"breast_hetero_host\", \"namespace\": \"experiment\"}], \"eval_data\": [{\"name\": \"breast_hetero_host\", \"namespace\": \"experiment\"}]}}, \"dataio_0\": {\"with_label\": [false], \"output_format\": [\"dense\"]}}}, \"algorithm_parameters\": {\"secureboost_0\": {\"task_type\": \"classification\", \"learning_rate\": 0.1, \"num_trees\": 5, \"subsample_feature_rate\": 1, \"n_iter_no_change\": false, \"tol\": 0.0001, \"bin_num\": 50, \"objective_param\": {\"objective\": \"cross_entropy\"}, \"encrypt_param\": {\"method\": \"paillier\"}, \"predict_param\": {\"threshold\": 0.5}, \"cv_param\": {\"n_splits\": 5, \"shuffle\": false, \"random_seed\": 103, \"need_cv\": false}, \"validation_freqs\": 1}, \"evaluation_0\": {\"eval_type\": \"binary\"}}, \"config\": \"/data/projects/fate/examples/min_test_task/test/submit_job_guest.config_1621324363_9498\", \"dsl\": \"/data/projects/fate/examples/min_test_task/config/test_secureboost_train_dsl.json\", \"function\": \"submit_job\"}, \"roles\": {\"guest\": [9999], \"host\": [10000], \"arbiter\": []}, \"work_mode\": 1, \"initiator_role\": \"guest\", \"initiator_party_id\": 9999, \"role\": \"guest\", \"party_id\": 9999, \"runtime_conf\": {\"initiator\": {\"role\": \"guest\", \"party_id\": 9999}, \"job_parameters\": {\"job_type\": \"predict\", \"work_mode\": 1, \"backend\": 0, \"computing_engine\": \"EGGROLL\", \"federation_engine\": \"EGGROLL\", \"storage_engine\": \"EGGROLL\", \"engines_address\": {}, \"federated_mode\": \"MULTIPLE\", \"task_parallelism\": 1, \"computing_partitions\": 4, \"federated_status_collect_type\": \"PULL\", \"model_id\": \"guest-9999#host-10000#model\", \"model_version\": \"2021051807524507002410\", \"eggroll_run\": {}, \"spark_run\": {}, \"rabbitmq_run\": {}, \"adaptation_parameters\": {\"task_nodes\": 1, \"task_cores_per_node\": 4, \"task_memory_per_node\": 0, \"request_task_cores\": 4, \"if_initiator_baseline\": true}}, \"role\": {\"guest\": [9999], \"host\": [10000], \"arbiter\": []}, \"role_parameters\": {\"guest\": {\"args\": {\"data\": {\"eval_data\": [{\"name\": \"breast_hetero_guest\", \"namespace\": \"experiment\"}]}}}, \"host\": {\"args\": {\"data\": {\"eval_data\": [{\"name\": \"breast_hetero_host\", \"namespace\": \"experiment\"}]}}}}, \"config\": \"/data/projects/fate/examples/min_test_task/test/submit_job_guest.config_1621324825_2041\", \"function\": \"submit_job\"}, \"runtime_conf_on_party\": {\"initiator\": {\"role\": \"guest\", \"party_id\": 9999}, \"job_parameters\": {\"job_type\": \"predict\", \"work_mode\": 1, \"backend\": 0, \"computing_engine\": \"EGGROLL\", \"federation_engine\": \"EGGROLL\", \"storage_engine\": \"EGGROLL\", \"engines_address\": {}, \"federated_mode\": \"MULTIPLE\", \"task_parallelism\": 1, \"computing_partitions\": 4, \"federated_status_collect_type\": \"PULL\", \"model_id\": \"guest-9999#host-10000#model\", \"model_version\": \"2021051807524507002410\", \"eggroll_run\": {}, \"spark_run\": {}, \"rabbitmq_run\": {}, \"adaptation_parameters\": {\"task_nodes\": 1, \"task_cores_per_node\": 4, \"task_memory_per_node\": 0, \"request_task_cores\": 4, \"if_initiator_baseline\": true}}, \"role\": {\"guest\": [9999], \"host\": [10000], \"arbiter\": []}, \"role_parameters\": {\"guest\": {\"args\": {\"data\": {\"eval_data\": [{\"name\": \"breast_hetero_guest\", \"namespace\": \"experiment\"}]}}}, \"host\": {\"args\": {\"data\": {\"eval_data\": [{\"name\": \"breast_hetero_host\", \"namespace\": \"experiment\"}]}}}}, \"config\": \"/data/projects/fate/examples/min_test_task/test/submit_job_guest.config_1621324825_2041\", \"function\": \"submit_job\"}, \"src_role\": \"guest\", \"src_party_id\": 9999}"

}

fate_flow_detect.log

detector.py 产生的日志,涉及如下函数

- detect_running_task:探测正在运行的task

- detect_running_job:探测正在运行的job

- detect_resource_record:探测资源使用记录

- detect_expired_session:探测是否有过期的session,有的话,调用request_stop_jobs进行stop

[INFO] [2021-07-26 10:01:34,230] [1:139736070596352] - detector.py[line:38]: start to detect running task..

[INFO] [2021-07-26 10:01:34,241] [1:139736070596352] - detector.py[line:70]: finish detect 1 running task

[INFO] [2021-07-26 10:01:34,241] [1:139736070596352] - detector.py[line:74]: start detect running job

[INFO] [2021-07-26 10:01:34,251] [1:139736070596352] - detector.py[line:88]: finish detect running job

[INFO] [2021-07-26 10:01:34,251] [1:139736070596352] - detector.py[line:93]: start detect resource recycle

[INFO] [2021-07-26 10:01:34,255] [1:139736070596352] - detector.py[line:116]: finish detect resource recycle

[INFO] [2021-07-26 10:01:34,255] [1:139736070596352] - detector.py[line:120]: start detect expired session

[INFO] [2021-07-26 10:01:39,260] [1:139736070596352] - detector.py[line:38]: start to detect running task..

[INFO] [2021-07-26 10:01:39,270] [1:139736070596352] - detector.py[line:70]: finish detect 1 running task

[INFO] [2021-07-26 10:01:39,270] [1:139736070596352] - detector.py[line:74]: start detect running job

fate_flow_scheduler.log

这里和${jobid} 目录下的fate_flow_scheduler.log 记录具体job的调度细节不同,只记录最外层的job级别的调度。

[INFO] [2021-07-26 10:02:14,169] [1:139736062203648] - dag_scheduler.py[line:152]: schedule running job 202107260958386097411239

[INFO] [2021-07-26 10:02:55,598] [1:139736062203648] - dag_scheduler.py[line:152]: schedule running job 202107260959086853751240

[INFO] [2021-07-26 10:03:46,124] [1:139736062203648] - dag_scheduler.py[line:152]: schedule running job 202107261000016603571241

[INFO] [2021-07-26 10:04:37,635] [1:139736062203648] - dag_scheduler.py[line:158]: schedule running jobs finished

[INFO] [2021-07-26 10:04:37,635] [1:139736062203648] - dag_scheduler.py[line:161]: start schedule ready jobs

[INFO] [2021-07-26 10:04:37,645] [1:139736062203648] - dag_scheduler.py[line:163]: have 0 ready jobs

[INFO] [2021-07-26 10:04:37,645] [1:139736062203648] - dag_scheduler.py[line:171]: schedule ready jobs finished

[INFO] [2021-07-26 10:04:37,645] [1:139736062203648] - dag_scheduler.py[line:173]: start schedule rerun jobs

[INFO] [2021-07-26 10:04:37,652] [1:139736062203648] - dag_scheduler.py[line:175]: have 0 rerun jobs

[INFO] [2021-07-26 10:04:37,652] [1:139736062203648] - dag_scheduler.py[line:183]: schedule rerun jobs finished

[INFO] [2021-07-26 10:04:37,652] [1:139736062203648] - dag_scheduler.py[line:185]: start schedule end status jobs to update status

[INFO] [2021-07-26 10:04:37,673] [1:139736062203648] - dag_scheduler.py[line:187]: have 5 end status jobs

[INFO] [2021-07-26 10:04:37,674] [1:139736062203648] - dag_scheduler.py[line:189]: schedule end status job 202107260956208255521237

[INFO] [2021-07-26 10:04:37,829] [1:139736062203648] - dag_scheduler.py[line:189]: schedule end status job 202107260956590660001238

[INFO] [2021-07-26 10:04:37,956] [1:139736062203648] - dag_scheduler.py[line:189]: schedule end status job 202107260958386097411239

[INFO] [2021-07-26 10:04:38,088] [1:139736062203648] - dag_scheduler.py[line:189]: schedule end status job 202107260959086853751240

[INFO] [2021-07-26 10:04:38,225] [1:139736062203648] - dag_scheduler.py[line:189]: schedule end status job 202107261000016603571241

fate_flow_stat.log

记录各个进程的状态

[INFO] [2021-07-25 17:20:50,581] [1:139738990319424] - job_utils.py[line:345]: child process 676448 exit with exitcode 0

[INFO] [2021-07-25 17:20:50,582] [1:139738990319424] - job_utils.py[line:342]: no child process was immediately available

[INFO] [2021-07-25 17:20:56,256] [1:139738990319424] - job_utils.py[line:345]: child process 676533 exit with exitcode 0

[INFO] [2021-07-25 17:20:56,256] [1:139738990319424] - job_utils.py[line:342]: no child process was immediately available

[INFO] [2021-07-25 17:21:02,272] [1:139738990319424] - job_utils.py[line:345]: child process 676692 exit with exitcode 0

[INFO] [2021-07-25 17:21:02,273] [1:139738990319424] - job_utils.py[line:342]: no child process was immediately available

[INFO] [2021-07-25 17:21:09,043] [1:139738990319424] - job_utils.py[line:345]: child process 676378 exit with exitcode 0

[INFO] [2021-07-25 17:21:09,043] [1:139738990319424] - job_utils.py[line:342]: no child process was immediately available

[INFO] [2021-07-25 17:21:15,706] [1:139738990319424] - job_utils.py[line:345]: child process 676612 exit with exitcode 0

[WARNING] [2021-07-25 17:21:15,707] [1:139738990319424] - job_utils.py[line:348]: current process has no existing unwaited-for child processes.

${jobid} 目录下日志

${jobid} 目录下的日志与 fate_flow 的日志不完全一致,主要分成如下几个部分

- fate_flow_audit.log

- fate_flow_schedule.log

- fate_flow_sql.log

- r o l e / {role}/ role/{party_id}/

fate_flow_audit.log

同fate_flow 目录下的fate_flow_audit.log,fate_flow 下的fate_flow_audit.log 是各个${jobid} 中fate_flow_audit.log 的集合

fate_flow_schedule.log

记录fate调度的主要日志,函数为schedule_logger。不同于fate_flow 下的fate_flow_schedule.log,只记录dag_scheduler.py 的日志,调度过程中,记录了众多调用模块的日志,后文详细说明。

fate_flow_sql.log

记录操作DB的sql 日志。函数为sql_logger,FATE中操作DB的日志,调用在 job_saver.py:将job的各项metrics 写DB 和 save_model_info.py 将模型的相关信息写DB

python/fate_flow/operation/job_saver.py

create_job_family_entity 函数,创建job时,将job初始化信息写DB

execute_update 函数:更新job相关信息

FATE-1.5.1/python/fate_flow/utils/model_utils.py 中

save_model_info 函数,将模型相关信息写DB

r o l e / {role}/ role/{party_id}/

记录了各个role & party_id 下具体task执行的日志

具体目录结构为

- DEBUG.log

- INFO.log

- ERROR.log

- WARNING.log

- ${taskName}/DEBUG.log

- ${taskName}/INFO.log

- ${taskName}/ERROR.log

- ${taskName}/WARNING.log

- ${taskName}/peewee.log

- ${taskName}/fate_flow_schedule.log

- ${taskName}/stat.log

- ${taskName}/std.log

其中 DEBUG、INFO、WARNING、ERROR 是各个${taskName} 下对应日志的合集。

${taskName}/fate_flow_schedule.log 记录了task_executor.py 执行该task的日志

${taskName}/peewee.log 是该task 通过peewee 操作DB的记录

${taskName}/stat.log 记录了该task的相关统计值

${taskName}/std.log std 输出

Reference

【联邦学习之旅】C1 FATE Flow 源码解析