Integrating Jenkins with APISIX: Automated Deployment, Load Balancing, and Gray (Canary) / Blue-Green Releases

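Table of Contents

- 1. Installation
  - 1.1 Installing with Docker
  - 1.2 Installing from RPM
- 2. Testing gray and blue-green releases
  - 2.1 Installing nginx with Docker Compose
    - 2.1.1 Create the directories
    - 2.1.2 Edit nginx.conf
    - 2.1.3 Edit docker-compose.yml
    - 2.1.4 Start nginx
  - 2.2 Deploying APISIX and APISIX Dashboard
  - 2.3 Gray and blue-green releases with the traffic-split plugin
    - 2.3.1 Gray release
    - 2.3.2 Blue-green release
    - 2.3.3 Load-balanced release
- 3. Integrating Jenkins with APISIX for load balancing and gray release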

1. Installation

Installation guide: https://apisix.apache.org/zh/docs/apisix/installation-guide/

1.1 Installing with Docker

# Download the deployment templates
git clone https://github.com/apache/apisix-docker.git
cd apisix-docker/example
# Start
docker compose -p docker-apisix up -d

1.2 Installing from RPM

# Install etcd
ETCD_VERSION='3.5.4'
wget https://github.com/etcd-io/etcd/releases/download/v${ETCD_VERSION}/etcd-v${ETCD_VERSION}-linux-amd64.tar.gz
tar -xvf etcd-v${ETCD_VERSION}-linux-amd64.tar.gz && \
  cd etcd-v${ETCD_VERSION}-linux-amd64 && \
  sudo cp -a etcd etcdctl /usr/bin/
nohup etcd >/tmp/etcd.log 2>&1 &

# Install the OpenResty repository
yum install -y https://repos.apiseven.com/packages/centos/apache-apisix-repo-1.0-1.noarch.rpm
# Install the APISIX RPM repository
yum-config-manager --add-repo https://repos.apiseven.com/packages/centos/apache-apisix.repo
# Install APISIX
yum install apisix-2.13.1

# After APISIX is installed, initialize the NGINX configuration file and etcd:
apisix init
# Start APISIX
apisix start

2. Testing gray and blue-green releases

Three virtual machines:

| Server | IP | Application |
|---|---|---|
| m1 | 192.168.28.133 | APISIX (gateway) |
| s1 | 192.168.28.136 | nginx (web service) |
| s2 | 192.168.28.132 | nginx (web service) |

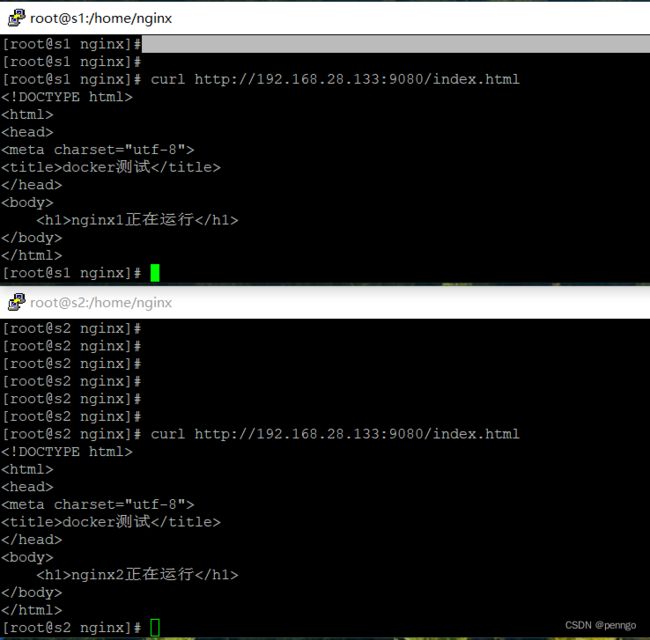

s1 and s2 are the load-balanced backends and serve as the test machines for the gray and blue-green releases.

2.1 Installing nginx with Docker Compose

s1 and s2 provide the web service; nginx is installed on both machines with Docker, configured as follows.

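2.1.1 Create the directories

mkdir -p /home/nginx/www /home/nginx/logs /home/nginx/conf
vi /home/nginx/www/index.html

# Content of index.html
<!DOCTYPE html>
<html>
<head>
<meta charset="utf-8">
<title>Docker test</title>
</head>
<body>
<h1>nginx is running</h1>
</body>
</html>

The same page is used on both machines. To tell s1 and s2 apart when testing the releases, it helps to give each machine a different heading (for example, by adding the server name to the h1 text).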

2.1.2 Edit nginx.conf

vi /home/nginx/conf/nginx.conf

user nginx;
worker_processes auto;

error_log /var/log/nginx/error.log notice;
pid /var/run/nginx.pid;

events {
    worker_connections 1024;
}

http {
    include /etc/nginx/mime.types;
    default_type application/octet-stream;

    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';

    access_log /var/log/nginx/access.log main;

    sendfile on;
    #tcp_nopush on;

    keepalive_timeout 65;

    #gzip on;

    include /etc/nginx/conf.d/*.conf;

    server {
        listen 80;
        server_name localhost;
        charset utf-8;

        location / {
            root /usr/share/nginx/html/;
            try_files $uri $uri/ =404;
            index index.html index.htm;
        }

        #error_page 404 /404.html;

        # redirect server error pages to the static page /50x.html
        #
        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
            root html;
        }
    }
}

2.1.3 Edit docker-compose.yml

docker-compose.yml reference: https://docs.docker.com/compose/compose-file/

vi /home/nginx/docker-compose.yml

version: '3.3'
services:
  nginx:
    image: nginx
    restart: always
    hostname: nginx
    container_name: nginx
    privileged: true
    ports:
      - "9081:80"
    volumes:
      - ./conf/nginx.conf:/etc/nginx/nginx.conf
      - ./www/:/usr/share/nginx/html/
      - ./logs/:/var/log/nginx/

2.1.4 Start nginx

cd /home/nginx
# Start
docker compose up -d
# Stop
docker compose down

2.2 Deploying APISIX and APISIX Dashboard

Deploy with the docker compose template from https://github.com/apache/apisix-docker.

Configuration files:

/usr/local/apisix-docker/apisix_conf/config.yaml

apisix:
  node_listen: 9080              # APISIX listening port
  enable_ipv6: false
  enable_control: true
  control:
    ip: "0.0.0.0"
    port: 9092

deployment:
  admin:
    allow_admin:               # http://nginx.org/en/docs/http/ngx_http_access_module.html#allow
      - 0.0.0.0/0              # We need to restrict ip access rules for security. 0.0.0.0/0 is for test.
    admin_key:
      - name: "admin"
        key: edd1c9f034335f136f87ad84b625c8f1
        role: admin                 # admin: manage all configuration data
      - name: "viewer"
        key: 4054f7cf07e344346cd3f287985e76a2
        role: viewer
  etcd:
    host:                           # it's possible to define multiple etcd hosts addresses of the same etcd cluster.
      - "http://etcd:2379"          # multiple etcd address
    prefix: "/apisix"               # apisix configurations prefix
    timeout: 30                     # 30 seconds

plugin_attr:
  prometheus:
    export_addr:
      ip: "0.0.0.0"
      port: 9091

/usr/local/apisix-docker/dashboard_conf/conf.yaml

conf:
  listen:
    host: 0.0.0.0     # `manager api` listening ip or host name
    port: 9000          # `manager api` listening port
  allow_list:           # If we don't set any IP list, then any IP access is allowed by default.
    - 0.0.0.0/0
  etcd:
    endpoints:          # supports defining multiple etcd host addresses for an etcd cluster
      - "http://etcd:2379"
    # yamllint disable rule:comments-indentation
    # etcd basic auth info
    # username: "root"    # ignore etcd username if not enable etcd auth
    # password: "123456"  # ignore etcd password if not enable etcd auth
    mtls:
      key_file: ""          # Path of your self-signed client side key
      cert_file: ""         # Path of your self-signed client side cert
      ca_file: ""           # Path of your self-signed ca cert, the CA is used to sign callers' certificates
    # prefix: /apisix     # apisix config's prefix in etcd, /apisix by default
  log:
    error_log:
      level: warn       # supports levels, lower to higher: debug, info, warn, error, panic, fatal
      file_path:
        logs/error.log  # supports relative path, absolute path, standard output
                        # such as: logs/error.log, /tmp/logs/error.log, /dev/stdout, /dev/stderr
    access_log:
      file_path:
        logs/access.log  # supports relative path, absolute path, standard output
                         # such as: logs/access.log, /tmp/logs/access.log, /dev/stdout, /dev/stderr
                         # log example: 2020-12-09T16:38:09.039+0800 INFO filter/logging.go:46 /apisix/admin/routes/r1 {"status": 401, "host": "127.0.0.1:9000", "query": "asdfsafd=adf&a=a", "requestId": "3d50ecb8-758c-46d1-af5b-cd9d1c820156", "latency": 0, "remoteIP": "127.0.0.1", "method": "PUT", "errs": []}
authentication:
  secret:
    secret              # secret for jwt token generation.
                        # NOTE: Highly recommended to modify this value to protect `manager api`.
                        # if it's default value, when `manager api` start, it will generate a random string to replace it.
  expire_time: 3600     # jwt token expire time, in second
  users:                # yamllint enable rule:comments-indentation
    - username: admin   # username and password for login `manager api`
      password: admin
    - username: user
      password: user

plugins:                          # plugin list (sorted in alphabetical order)
  - api-breaker
  - authz-keycloak
  - basic-auth
  - batch-requests
  - consumer-restriction
  - cors
  # - dubbo-proxy
  - echo
  # - error-log-logger
  # - example-plugin
  - fault-injection
  - grpc-transcode
  - hmac-auth
  - http-logger
  - ip-restriction
  - jwt-auth
  - kafka-logger
  - key-auth
  - limit-conn
  - limit-count
  - limit-req
  # - log-rotate
  # - node-status
  - openid-connect
  - prometheus
  - proxy-cache
  - proxy-mirror
  - proxy-rewrite
  - redirect
  - referer-restriction
  - request-id
  - request-validation
  - response-rewrite
  - serverless-post-function
  - serverless-pre-function
  # - skywalking
  - sls-logger
  - syslog
  - tcp-logger
  - udp-logger
  - uri-blocker
  - wolf-rbac
  - zipkin
  - server-info
  - traffic-split

/usr/local/apisix-docker/etcd_conf/etcd.conf.yml

# Human-readable name for this member.
name: 'default'
# Path to the data directory.
data-dir:
# Path to the dedicated wal directory.
wal-dir:
# Number of committed transactions to trigger a snapshot to disk.
snapshot-count: 10000
# Time (in milliseconds) of a heartbeat interval.
heartbeat-interval: 100
# Time (in milliseconds) for an election to timeout.
election-timeout: 1000
# Raise alarms when backend size exceeds the given quota. 0 means use the
# default quota.
quota-backend-bytes: 0
# List of comma separated URLs to listen on for peer traffic.
listen-peer-urls: http://localhost:2380
# List of comma separated URLs to listen on for client traffic.
listen-client-urls: http://localhost:2379
# Maximum number of snapshot files to retain (0 is unlimited).
max-snapshots: 5
# Maximum number of wal files to retain (0 is unlimited).
max-wals: 5
# Comma-separated white list of origins for CORS (cross-origin resource sharing).
cors:
# List of this member's peer URLs to advertise to the rest of the cluster.
# The URLs needed to be a comma-separated list.
initial-advertise-peer-urls: http://localhost:2380
# List of this member's client URLs to advertise to the public.
# The URLs needed to be a comma-separated list.
advertise-client-urls: http://localhost:2379
# Discovery URL used to bootstrap the cluster.
discovery:
# Valid values include 'exit', 'proxy'
discovery-fallback: 'proxy'
# HTTP proxy to use for traffic to discovery service.
discovery-proxy:
# DNS domain used to bootstrap initial cluster.
discovery-srv:
# Initial cluster configuration for bootstrapping.
initial-cluster:
# Initial cluster token for the etcd cluster during bootstrap.
initial-cluster-token: 'etcd-cluster'
# Initial cluster state ('new' or 'existing').
initial-cluster-state: 'new'
# Reject reconfiguration requests that would cause quorum loss.
strict-reconfig-check: false
# Accept etcd V2 client requests
enable-v2: true
# Enable runtime profiling data via HTTP server
enable-pprof: true
# Valid values include 'on', 'readonly', 'off'
proxy: 'off'
# Time (in milliseconds) an endpoint will be held in a failed state.
proxy-failure-wait: 5000
# Time (in milliseconds) of the endpoints refresh interval.
proxy-refresh-interval: 30000
# Time (in milliseconds) for a dial to timeout.
proxy-dial-timeout: 1000
# Time (in milliseconds) for a write to timeout.
proxy-write-timeout: 5000
# Time (in milliseconds) for a read to timeout.
proxy-read-timeout: 0
client-transport-security:
  # Path to the client server TLS cert file.
  cert-file:
  # Path to the client server TLS key file.
  key-file:
  # Enable client cert authentication.
  client-cert-auth: false
  # Path to the client server TLS trusted CA cert file.
  trusted-ca-file:
  # Client TLS using generated certificates
  auto-tls: false
peer-transport-security:
  # Path to the peer server TLS cert file.
  cert-file:
  # Path to the peer server TLS key file.
  key-file:
  # Enable peer client cert authentication.
  client-cert-auth: false
  # Path to the peer server TLS trusted CA cert file.
  trusted-ca-file:
  # Peer TLS using generated certificates.
  auto-tls: false
# Enable debug-level logging for etcd.
debug: false
logger: zap
# Specify 'stdout' or 'stderr' to skip journald logging even when running under systemd.
log-outputs: [stderr]
# Force to create a new one member cluster.
force-new-cluster: false
auto-compaction-mode: periodic
auto-compaction-retention: "1"

The Prometheus and Grafana configurations are omitted.

/usr/local/apisix-docker/docker-compose.yml

version: "3"

services:
  apisix-dashboard:
    image: apache/apisix-dashboard:2.13-alpine
    restart: always
    volumes:
      - ./dashboard_conf/conf.yaml:/usr/local/apisix-dashboard/conf/conf.yaml
    ports:
      - "9000:9000"
    networks:
      apisix:

  apisix:
    image: apache/apisix:3.0.0-debian
    restart: always
    volumes:
      - ./apisix_log:/usr/local/apisix/logs
      - ./apisix_conf/config.yaml:/usr/local/apisix/conf/config.yaml:ro
    depends_on:
      - etcd
    ##network_mode: host
    ports:
      - "9180:9180/tcp"
      - "9080:9080/tcp"
      - "9091:9091/tcp"
      - "9443:9443/tcp"
      - "9092:9092/tcp"
    networks:
      apisix:

  etcd:
    image: bitnami/etcd:3.4.15
    restart: always
    volumes:
      - etcd_data:/bitnami/etcd
    environment:
      ETCD_ENABLE_V2: "true"
      ALLOW_NONE_AUTHENTICATION: "yes"
      ETCD_ADVERTISE_CLIENT_URLS: "http://0.0.0.0:2379"
      ETCD_LISTEN_CLIENT_URLS: "http://0.0.0.0:2379"
    ports:
      - "2379:2379/tcp"
    networks:
      apisix:

  prometheus:
    image: prom/prometheus:v2.25.0
    restart: always
    volumes:
      - ./prometheus_conf/prometheus.yml:/etc/prometheus/prometheus.yml
    ports:
      - "9090:9090"
    networks:
      apisix:

  grafana:
    image: grafana/grafana:7.3.7
    restart: always
    ports:
      - "3000:3000"
    volumes:
      - "./grafana_conf/provisioning:/etc/grafana/provisioning"
      - "./grafana_conf/dashboards:/var/lib/grafana/dashboards"
      - "./grafana_conf/config/grafana.ini:/etc/grafana/grafana.ini"
    networks:
      apisix:

networks:
  apisix:
    driver: bridge

volumes:
  etcd_data:
    driver: local

Start:

cd /usr/local/apisix-docker
# Start
docker compose up -d
# Stop
docker compose down
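Before creating routes, it can be useful to wait until the Admin API on port 9180 answers. The following is a minimal Python sketch, assuming APISIX runs on the local host and the admin key from config.yaml above is in effect; admin_api_ready and wait_for_admin are hypothetical helper names, not part of APISIX.

```python
import time
import urllib.request

ADMIN_BASE = "http://127.0.0.1:9180"
API_KEY = "edd1c9f034335f136f87ad84b625c8f1"  # admin key from config.yaml (assumed unchanged)


def admin_api_ready(base_url: str = ADMIN_BASE, api_key: str = API_KEY,
                    timeout: float = 2.0) -> bool:
    """Return True if GET /apisix/admin/routes answers 200 with this key."""
    req = urllib.request.Request(f"{base_url}/apisix/admin/routes",
                                 headers={"X-API-KEY": api_key})
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # URLError and HTTPError (e.g. 401 for a wrong key), refused
        # connections and timeouts are all subclasses of OSError.
        return False


def wait_for_admin(attempts: int = 30, delay: float = 2.0, **kwargs) -> bool:
    """Poll admin_api_ready until it succeeds or the attempts run out."""
    for _ in range(attempts):
        if admin_api_ready(**kwargs):
            return True
        time.sleep(delay)
    return False
```

For example, calling wait_for_admin() right after docker compose up -d polls for roughly a minute (30 attempts, 2 s apart) before giving up.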

2.3 Gray and blue-green releases with the traffic-split plugin

Official documentation: https://apisix.apache.org/zh/docs/apisix/plugins/traffic-split/

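2.3.1 Gray release

Traffic is split by the weight attribute of weighted_upstreams, at a 1:9 ratio: 10% of requests go to s1 and 90% go to s2. The second weighted_upstreams entry has only a weight and no upstream, so its share is forwarded to the route's own upstream (s2).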
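The effect of the 1:9 weights can be illustrated with a short Python simulation. It is a sketch assuming a smooth weighted round-robin selector (the scheme nginx uses for weighted upstreams); APISIX's internal selection code may pick upstreams in a different order, so only the long-run ratio is meaningful here. smooth_weighted_rr is a hypothetical name.

```python
from collections import Counter
from typing import Iterator


def smooth_weighted_rr(weights: dict[str, int]) -> Iterator[str]:
    """Yield upstream names using nginx-style smooth weighted round-robin.

    Illustration only: this is not APISIX's code, just a model of how a
    weight ratio turns into a request ratio.
    """
    current = {name: 0 for name in weights}
    total = sum(weights.values())
    while True:
        # Each round, every candidate gains its own weight...
        for name, weight in weights.items():
            current[name] += weight
        # ...the highest running score wins and pays back the total.
        chosen = max(current, key=current.get)
        current[chosen] -= total
        yield chosen


if __name__ == "__main__":
    # s1 = canary (weight 1); s2 = the route's default upstream (weight 9)
    picker = smooth_weighted_rr({"s1": 1, "s2": 9})
    print(Counter(next(picker) for _ in range(100)))
```

In this model every block of 10 consecutive requests sends exactly one to s1, so 100 requests split 10 to s1 and 90 to s2.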

curl http://127.0.0.1:9180/apisix/admin/routes/1 \
  -H 'X-API-KEY: edd1c9f034335f136f87ad84b625c8f1' -X PUT -d '
{
    "uri": "/*",
    "name": "route_gray",
    "plugins": {
        "traffic-split": {
            "rules": [
                {
                    "weighted_upstreams": [
                        {
                            "upstream": {
                                "name": "s1",
                                "type": "roundrobin",
                                "nodes": {
                                    "192.168.28.136:9081": 10
                                },
                                "timeout": {
                                    "connect": 15,
                                    "send": 15,
                                    "read": 15
                                }
                            },
                            "weight": 1
                        },
                        {
                            "weight": 9
                        }
                    ]
                }
            ]
        }
    },
    "upstream": {
        "name": "s2",
        "type": "roundrobin",
        "nodes": {
            "192.168.28.132:9081": 1
        }
    }
}'
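The gray route can also be generated and pushed from a script, which makes it easy to widen the split step by step (for example 1:9, then 3:7, then 5:5). This is a minimal standard-library Python sketch, assuming the Admin API address and admin key used in this article; gray_route and put_route are hypothetical helpers, and the upstream names s1/s2 are hard-coded to match the example.

```python
import json
import urllib.request

ADMIN = "http://127.0.0.1:9180/apisix/admin"
API_KEY = "edd1c9f034335f136f87ad84b625c8f1"  # admin key from config.yaml


def gray_route(canary_node: str, stable_node: str,
               canary_weight: int, stable_weight: int) -> dict:
    """Build a route like route_gray: canary_weight / (canary_weight +
    stable_weight) of requests go to canary_node, the rest to stable_node."""
    return {
        "uri": "/*",
        "name": "route_gray",
        "plugins": {
            "traffic-split": {
                "rules": [{
                    "weighted_upstreams": [
                        {
                            "upstream": {
                                "name": "s1",
                                "type": "roundrobin",
                                "nodes": {canary_node: 1},
                            },
                            "weight": canary_weight,
                        },
                        # Weight only, no "upstream": this share is served
                        # by the route-level upstream below.
                        {"weight": stable_weight},
                    ]
                }]
            }
        },
        "upstream": {
            "name": "s2",
            "type": "roundrobin",
            "nodes": {stable_node: 1},
        },
    }


def put_route(route_id: int, route: dict) -> int:
    """Create or replace a route; returns the HTTP status on success
    (urlopen raises HTTPError for error responses)."""
    req = urllib.request.Request(
        f"{ADMIN}/routes/{route_id}",
        data=json.dumps(route).encode(),
        headers={"X-API-KEY": API_KEY, "Content-Type": "application/json"},
        method="PUT",
    )
    with urllib.request.urlopen(req, timeout=5) as resp:
        return resp.status
```

For example, put_route(1, gray_route("192.168.28.136:9081", "192.168.28.132:9081", 3, 7)) replaces route 1 in place, because a PUT on a route ID overwrites the whole route definition.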

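2.3.2 Blue-green release

When a new feature is released, production temporarily becomes a blue-green environment. The new version is deployed first to the blue environment (s2, 192.168.28.132), and only requests whose client IP is 192.168.28.132 are routed to it; all other requests go to the route's default upstream, s1 (192.168.28.136).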

curl http://127.0.0.1:9180/apisix/admin/routes/2 \
  -H 'X-API-KEY: edd1c9f034335f136f87ad84b625c8f1' -X PUT -d '
{
    "uri": "/*",
    "name": "route_blue",
    "plugins": {
        "traffic-split": {
            "rules": [
                {
                    "match": [
                        {
                            "vars": [
                                ["remote_addr", "==", "192.168.28.132"]
                            ]
                        }
                    ],
                    "weighted_upstreams": [
                        {
                            "upstream": {
                                "name": "s2",
                                "type": "roundrobin",
                                "nodes": {
                                    "192.168.28.132:9081": 10
                                }
                            }
                        }
                    ]
                }
            ]
        }
    },
    "upstream": {
        "name": "s1",
        "type": "roundrobin",
        "nodes": {
            "192.168.28.136:9081": 1
        }
    }
}'

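Test through the gateway with curl:

curl http://192.168.28.133:9080/index.html

With route_blue in effect, a request sent from 192.168.28.132 is routed to s2, and a request from any other client goes to s1.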
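How the match block decides between blue and green can be modeled in a few lines of Python. This sketch is a deliberate simplification: it only supports the == operator and the remote_addr variable, whereas APISIX evaluates match expressions with a much richer matcher. pick_upstream and BLUE_GREEN_RULES are hypothetical names. Also note that remote_addr is the address APISIX itself sees, which may not be the real client if another proxy sits in front of the gateway.

```python
def pick_upstream(remote_addr: str, rules: list[dict], default: str) -> str:
    """Return the upstream chosen for a request in this simplified model.

    Each rule is {"vars": [[variable, "==", value], ...], "upstream": name}.
    All vars of a rule must match (AND); the first matching rule wins, and a
    request matching no rule falls back to the route's default upstream.
    """
    request_vars = {"remote_addr": remote_addr}
    for rule in rules:
        if all(op == "==" and request_vars.get(var) == value
               for var, op, value in rule["vars"]):
            return rule["upstream"]
    return default


# Mirrors route_blue: clients at 192.168.28.132 go to s2, everyone else to s1.
BLUE_GREEN_RULES = [
    {"vars": [["remote_addr", "==", "192.168.28.132"]], "upstream": "s2"},
]

if __name__ == "__main__":
    for client in ("192.168.28.132", "192.168.28.133", "10.0.0.8"):
        print(client, "->", pick_upstream(client, BLUE_GREEN_RULES, default="s1"))
```

Run as a script, it maps 192.168.28.132 to s2 and the other two addresses to s1.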

2.3.3 Load-balanced release

Requests are distributed round-robin across s1 and s2, which have equal weights.

curl "http://127.0.0.1:9180/apisix/admin/routes/3" \
  -H "X-API-KEY: edd1c9f034335f136f87ad84b625c8f1" -X PUT -d '
{
    "uri": "/*",
    "name": "balance",
    "desc": "load-balancing mode",
    "upstream": {
        "type": "roundrobin",
        "nodes": {
            "192.168.28.132:9081": 1,
            "192.168.28.136:9081": 1
        }
    }
}'
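To check the split from outside, one can send a batch of requests through the gateway and count which backend answered. Because the index.html from 2.1.1 is identical on s1 and s2, this Python sketch assumes each backend's page has been edited to contain a marker naming the server; the MARKERS strings, classify and probe are hypothetical. With the load-balancing route the counts should come out close to even; with the 1:9 gray route, roughly one request in ten should reach s1.

```python
import urllib.request
from collections import Counter

GATEWAY_URL = "http://192.168.28.133:9080/index.html"

# Hypothetical markers: assumes each backend's index.html was edited to
# contain one of these strings (the page from 2.1.1 is the same on both).
MARKERS = {"s1": "served by s1", "s2": "served by s2"}


def classify(body: str) -> str:
    """Name the backend whose marker appears in the response body."""
    for name, marker in MARKERS.items():
        if marker in body:
            return name
    return "unknown"


def probe(url: str = GATEWAY_URL, n: int = 20) -> Counter:
    """Send n GET requests through the gateway and tally the backends."""
    tally: Counter = Counter()
    for _ in range(n):
        with urllib.request.urlopen(url, timeout=5) as resp:
            tally[classify(resp.read().decode("utf-8", errors="replace"))] += 1
    return tally
```

For example, print(probe(n=100)) from any machine that can reach 192.168.28.133:9080.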

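3. Integrating Jenkins with APISIX for load balancing and gray release

For the Jenkins deployment script, see https://blog.csdn.net/penngo/article/details/126687946. The routes created in section 2.3 all match the same URI (/*), so the pipeline's post step switches between them by PATCHing each route's status field (1 = enabled, 0 = disabled) according to the release type chosen when the build starts.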

pipeline {
    // code omitted
    parameters {
        // code omitted
        choice(name: 'type', choices: [
            'gray release',
            'load-balanced release'
        ], description: 'Release type')
    }
    stages {
        // code omitted
    }
    post {
        success {
            script {
                // Route 3 = load balancing (2.3.3), route 1 = route_gray (2.3.1).
                // Both match /*, so exactly one of them is enabled (status 1).
                def status_balance = 1
                def status_gray = 0
                if (params.type == "gray release") {
                    status_balance = 0
                    status_gray = 1
                }
                def route_balance = """curl http://127.0.0.1:9180/apisix/admin/routes/3 -H 'X-API-KEY: edd1c9f034335f136f87ad84b625c8f1' -X PATCH -i -d '{"status": ${status_balance} }'"""
                def route_gray = """curl http://127.0.0.1:9180/apisix/admin/routes/1 -H 'X-API-KEY: edd1c9f034335f136f87ad84b625c8f1' -X PATCH -i -d '{"status": ${status_gray} }'"""
                sh("""
                    ${route_balance}
                    ${route_gray}
                """)
            }
        }
    }
}
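The route switch performed by the pipeline's post step can also be expressed in Python, for example to run it outside Jenkins. This is a sketch under the same assumptions as the article (Admin API on 127.0.0.1:9180, admin key from config.yaml, route 1 = route_gray from 2.3.1, route 3 = the load-balancing route from 2.3.3); desired_statuses, status_patch and apply_release are hypothetical names.

```python
import json
import urllib.request

ADMIN = "http://127.0.0.1:9180/apisix/admin"
API_KEY = "edd1c9f034335f136f87ad84b625c8f1"  # admin key from config.yaml

# Route IDs from section 2.3: 1 = route_gray, 3 = the load-balancing route.
GRAY_ROUTE, BALANCE_ROUTE = 1, 3


def desired_statuses(gray: bool) -> dict[int, int]:
    """Return {route_id: status}; status 1 enables a route, 0 disables it."""
    return {BALANCE_ROUTE: 0 if gray else 1, GRAY_ROUTE: 1 if gray else 0}


def status_patch(route_id: int, status: int) -> urllib.request.Request:
    """Build (but do not send) a PATCH that only changes the route's status."""
    return urllib.request.Request(
        f"{ADMIN}/routes/{route_id}",
        data=json.dumps({"status": status}).encode(),
        headers={"X-API-KEY": API_KEY, "Content-Type": "application/json"},
        method="PATCH",
    )


def apply_release(gray: bool) -> None:
    """Send the PATCH requests, disabling a route before enabling the other."""
    ordered = sorted(desired_statuses(gray).items(), key=lambda item: item[1])
    for route_id, status in ordered:
        with urllib.request.urlopen(status_patch(route_id, status), timeout=5) as resp:
            print(f"route {route_id} -> status {status}: HTTP {resp.status}")
```

Unlike the pipeline, which always patches route 3 first, apply_release sends the disable before the enable, so it never turns on one /* route while the other is still on; the trade-off is a brief moment in which neither route is enabled.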