Linux Source Code Analysis: How Anonymous Shared Memory (shmem) Works

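If you already know the answers to the following questions, you can skip this article:

1. What does the initialization flow of the shmem RAM file system look like?

2. Conceptually, shmem wants to reuse the file-based operation flow, and in its implementation it does introduce a file. Opening a regular file produces a struct file, so how is the struct file for shmem created?

3. How are shmem physical pages created, and what are their attributes (migration type, _refcount, _mapcount, and so on)?

4. How are shmem pages reclaimed?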

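Overview

There are two important ways for processes to share anonymous memory. The first is a virtual address region created by mmap with MAP_SHARED | MAP_ANONYMOUS; after fork, the parent and child share this region. The second is to call the shmget/shmat family of interfaces explicitly. In addition, Android's ashmem anonymous shared memory is also built on the Linux kernel's shmem. This article walks through the kernel source to explain the design and implementation of shmem.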
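The first method can be tried from user space. The following is a minimal sketch, assuming a Linux system with a C compiler; `share_counter_with_child` is a hypothetical helper name introduced only for this illustration.

```c
#define _DEFAULT_SOURCE
#include <assert.h>
#include <stddef.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/*
 * Hypothetical helper: map one int as shared anonymous memory, let a
 * child process write 42 into it, and return what the parent reads
 * afterwards. Returns -1 on any error.
 */
int share_counter_with_child(void)
{
    /* MAP_SHARED | MAP_ANONYMOUS: no file descriptor is passed in. */
    int *counter = mmap(NULL, sizeof(*counter), PROT_READ | PROT_WRITE,
                        MAP_SHARED | MAP_ANONYMOUS, -1, 0);
    if (counter == MAP_FAILED)
        return -1;

    *counter = 0;

    pid_t pid = fork();
    if (pid < 0) {
        munmap(counter, sizeof(*counter));
        return -1;
    }
    if (pid == 0) {
        *counter = 42;      /* child writes into the shared page */
        _exit(0);
    }

    int status;
    if (waitpid(pid, &status, 0) < 0) {
        munmap(counter, sizeof(*counter));
        return -1;
    }

    int seen = *counter;    /* parent observes the child's write */
    munmap(counter, sizeof(*counter));
    return seen;
}
```

With MAP_PRIVATE instead of MAP_SHARED the child's write would land in its own copy-on-write page and the parent would still read 0.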

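The basic idea behind the shmem design

When the pages of a vma are backed by a disk file, a page fault reads the missing page by calling the fault function in vm_area_struct->vm_ops (filemap_fault). To write a page back to the disk device, the kernel looks up the writepage function in address_space_operations through inode->i_mapping->a_ops or page->mapping->a_ops. For ordinary file operations such as mmap(), read() and write(), the kernel dispatches through the file_operations pointer f_op of struct file *filp. f_op is set from inode->i_fop when the file is opened:

fs/open.c: static int do_dentry_open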
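In the kernel, do_dentry_open performs this step with `f->f_op = fops_get(inode->i_fop);`. The user-space model below is only a sketch of that dispatch idea: every `toy_*` type and function is hypothetical and much simpler than the real structures.

```c
#include <assert.h>
#include <stddef.h>

/* Toy stand-ins for the kernel structures; all toy_* names are hypothetical. */
struct toy_file;

struct toy_file_operations {
    int (*mmap)(struct toy_file *f);
};

struct toy_inode {
    const struct toy_file_operations *i_fop;
};

struct toy_file {
    struct toy_inode *f_inode;
    const struct toy_file_operations *f_op;
};

static int toy_shmem_mmap(struct toy_file *f)   { (void)f; return 1; }
static int toy_generic_mmap(struct toy_file *f) { (void)f; return 2; }

const struct toy_file_operations toy_shmem_fops   = { .mmap = toy_shmem_mmap };
const struct toy_file_operations toy_generic_fops = { .mmap = toy_generic_mmap };

/* Models the open-time step: the file copies its operations from the inode. */
void toy_open(struct toy_file *f, struct toy_inode *inode)
{
    assert(inode->i_fop != NULL);
    f->f_inode = inode;
    f->f_op = inode->i_fop;
}

/* Later calls go through f_op without knowing which file system is behind it. */
int toy_call_mmap(struct toy_file *f)
{
    return f->f_op->mmap(f);
}
```

The caller of `toy_call_mmap` never names a file system; whichever operations table the inode carried at open time decides what runs.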

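The flow above is clean, but it cannot handle anonymous pages, because an anonymous page has no file behind it. To reuse this logic, Linux introduced files that live in a RAM-based file system, and every such vma is backed by a file in that file system.

shmem pages also need a reclaim path: when such a page is reclaimed, it is written to the swap area.

Virtual file system initialization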

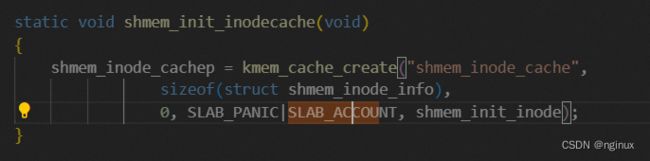

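During boot, Linux calls shmem_init to initialize this virtual file system. Because shmem creates virtual files, each of them needs an inode. An inode created by the shmem file system carries a shmem_inode_info structure that holds the file system's private information. SHMEM_I() takes an inode and returns its shmem_inode_info, and shmem_init_inode_cache initializes the kmem_cache used for shmem_inode_info.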
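In mainline kernels SHMEM_I() is a container_of() on an inode embedded in shmem_inode_info. The sketch below re-creates that idiom in user space; the `toy_*` and `TOY_SHMEM_I` names and the reduced field set are assumptions made for illustration, not the kernel definitions.

```c
#include <assert.h>
#include <stddef.h>

/* User-space re-creation of the container_of() idiom. */
#define toy_container_of(ptr, type, member) \
    ((type *)((char *)(ptr) - offsetof(type, member)))

/* Hypothetical, heavily reduced generic inode. */
struct toy_inode {
    unsigned long i_ino;
};

/* Private per-inode data with the generic inode embedded inside it. */
struct toy_shmem_inode_info {
    unsigned long alloced;
    unsigned long swapped;
    struct toy_inode vfs_inode;
};

/* Given the embedded inode, step back to the enclosing private structure. */
struct toy_shmem_inode_info *TOY_SHMEM_I(struct toy_inode *inode)
{
    return toy_container_of(inode, struct toy_shmem_inode_info, vfs_inode);
}
```

Because the generic inode is embedded rather than pointed to, one allocation from the kmem_cache yields both objects, and the conversion is plain pointer arithmetic.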

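shmem_inode_info is defined in

Meaning of each field:

lock: spinlock that protects concurrent access to the structure.

flags: related flags; see the description in the Linux mm.h header.

alloced: number of pages created by shmem for this inode.

swapped: of the pages that belong to this inode, the number that have been written to the swap cache.

shrinklist: related to huge pages; not analyzed here.

swaplist: shmem_inode_info is linked through this field into shmem_swaplist, a doubly linked list in shmem.c. The linking is done in shmem_writepage, which is analyzed later. In other words, when a page of the inode is about to be written to the swap cache, its shmem_inode_info is added to shmem_swaplist.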
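The swaplist behaviour described above (link the inode once, count every swapped page) can be modeled with a minimal circular list. This is a user-space sketch; the `toy_*` names are hypothetical and locking is left out entirely.

```c
#include <assert.h>
#include <stddef.h>

/* Minimal circular doubly linked list in the style of list_head. */
struct toy_list_head {
    struct toy_list_head *next, *prev;
};

void toy_list_init(struct toy_list_head *h)
{
    h->next = h;
    h->prev = h;
}

int toy_list_empty(const struct toy_list_head *h)
{
    return h->next == h;
}

/* Insert entry right after head. */
void toy_list_add(struct toy_list_head *entry, struct toy_list_head *head)
{
    entry->next = head->next;
    entry->prev = head;
    head->next->prev = entry;
    head->next = entry;
}

size_t toy_list_len(const struct toy_list_head *head)
{
    size_t n = 0;
    for (const struct toy_list_head *p = head->next; p != head; p = p->next)
        n++;
    return n;
}

/* Hypothetical reduced per-inode info: only the two fields used here. */
struct toy_shmem_info {
    unsigned long swapped;
    struct toy_list_head swaplist;
};

/* Called each time one page of this inode moves to the swap cache. */
void toy_note_swapout(struct toy_shmem_info *info, struct toy_list_head *global)
{
    /* An unlinked node points at itself, so list_empty() means "not on the list". */
    if (toy_list_empty(&info->swaplist))
        toy_list_add(&info->swaplist, global);
    info->swapped++;
}
```

The list therefore holds each inode at most once, no matter how many of its pages are swapped out, while `swapped` keeps the per-inode count.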

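Registering the file system

shmem function pointer structures

shmem defines a vm_operations_struct, shmem_vm_ops, and an address_space_operations structure, shmem_aops. The former handles page faults and the latter writes pages to the swap cache. They are defined as follows: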

    static const struct vm_operations_struct shmem_vm_ops = {
        .fault      = shmem_fault,
        .map_pages  = filemap_map_pages,
    #ifdef CONFIG_NUMA
        .set_policy = shmem_set_policy,
        .get_policy = shmem_get_policy,
    #endif
    };

    static const struct address_space_operations shmem_aops = {
        .writepage         = shmem_writepage,
        .set_page_dirty    = __set_page_dirty_no_writeback,
    #ifdef CONFIG_TMPFS
        .write_begin       = shmem_write_begin,
        .write_end         = shmem_write_end,
    #endif
    #ifdef CONFIG_MIGRATION
        .migratepage       = migrate_page,
    #endif
        .error_remove_page = generic_error_remove_page,
    };

A shared anonymous VMA uses shmem_vm_ops as its vm_operations_struct, so a page fault on it calls shmem_fault to allocate the physical page.

File and inode operations need two more structures, file_operations and inode_operations, defined as follows:

    static const struct file_operations shmem_file_operations = {
        .mmap              = shmem_mmap,
        .get_unmapped_area = shmem_get_unmapped_area,
    #ifdef CONFIG_TMPFS
        .llseek            = shmem_file_llseek,
        .read_iter         = shmem_file_read_iter,
        .write_iter        = generic_file_write_iter,
        .fsync             = noop_fsync,
        .splice_read       = generic_file_splice_read,
        .splice_write      = iter_file_splice_write,
        .fallocate         = shmem_fallocate,
    #endif
    };

    static const struct inode_operations shmem_inode_operations = {
        .getattr   = shmem_getattr,
        .setattr   = shmem_setattr,
    #ifdef CONFIG_TMPFS_XATTR
        .listxattr = shmem_listxattr,
        .set_acl   = simple_set_acl,
    #endif
    };

When user space writes data into shmem memory through a file descriptor, the write goes through the write_iter hook of shmem_file_operations.
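One way to exercise both hooks from user space is to write() to a tmpfs file and then mmap() it. The sketch below assumes that /dev/shm exists and is a tmpfs mount, which is common on Linux but not guaranteed; `tmpfs_write_then_map` is a hypothetical helper name.

```c
#define _DEFAULT_SOURCE
#include <assert.h>
#include <stdlib.h>
#include <string.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <unistd.h>

/*
 * Hypothetical helper: store msg in a temporary /dev/shm file with write(2),
 * then read it back through a shared mapping of the same file.
 * Returns 0 if the mapped bytes match msg, -1 on any error.
 */
int tmpfs_write_then_map(const char *msg)
{
    assert(msg != NULL);

    char path[] = "/dev/shm/shmem-demo-XXXXXX";
    size_t len = strlen(msg) + 1;

    int fd = mkstemp(path);
    if (fd < 0)
        return -1;
    unlink(path);                       /* keep only the open descriptor */

    /* write(2) on the file: the file system's write_iter path */
    if (write(fd, msg, len) != (ssize_t)len) {
        close(fd);
        return -1;
    }

    /* mmap(2) on the file: the file system's mmap hook, then page faults */
    char *p = mmap(NULL, len, PROT_READ, MAP_SHARED, fd, 0);
    if (p == MAP_FAILED) {
        close(fd);
        return -1;
    }

    int same = memcmp(p, msg, len) == 0;
    munmap(p, len);
    close(fd);
    return same ? 0 : -1;
}
```

The bytes written through the descriptor and the bytes seen through the mapping come from the same page-cache pages, which is why no explicit flush is needed between the two steps.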

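Creating a file in the shmem virtual file system (analogous to open for a regular file)

The shmem design mirrors the flow for regular files. When a regular file is created, the kernel initializes a struct file and the file's inode, and shmem follows a similar flow in its virtual file system. This section describes how the file behind shmem is created. The entry point is shmem_file_setup, and the work is done by the __shmem_file_setup function shown below, which creates the shmem struct file and inode.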

    static struct file *__shmem_file_setup(struct vfsmount *mnt, const char *name, loff_t size,
                                           unsigned long flags, unsigned int i_flags)
    {
        struct inode *inode;
        struct file *res;
        ...
        inode = shmem_get_inode(mnt->mnt_sb, NULL, S_IFREG | S_IRWXUGO, 0,
                                flags);
        if (unlikely(!inode)) {
            shmem_unacct_size(flags, size);
            return ERR_PTR(-ENOSPC);
        }
        inode->i_flags |= i_flags;
        inode->i_size = size;
        clear_nlink(inode); /* It is unlinked */
        res = ERR_PTR(ramfs_nommu_expand_for_mapping(inode, size));
        if (!IS_ERR(res))
            /* the new file gets f_op = &shmem_file_operations */
            res = alloc_file_pseudo(inode, mnt, name, O_RDWR,
                                    &shmem_file_operations);
        if (IS_ERR(res))
            iput(inode);
        return res;
    }

shmem_get_inode creates the inode; alloc_file_pseudo creates the file object.
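From user space, the shmget/shmat path mentioned in the overview is one route that, in mainline kernels, reaches this file-creation code for a non-hugetlb segment. The sketch below only shows the user-space side and assumes System V IPC is available in the current environment; `sysv_shm_roundtrip` is a hypothetical helper name.

```c
#define _DEFAULT_SOURCE
#include <assert.h>
#include <string.h>
#include <sys/ipc.h>
#include <sys/shm.h>

/*
 * Hypothetical helper: create a private 4096-byte segment, attach it twice,
 * write msg through one attachment and compare through the other.
 * Returns 0 when both attachments see the same bytes, -1 on error.
 */
int sysv_shm_roundtrip(const char *msg)
{
    assert(msg != NULL && strlen(msg) < 4096);

    int id = shmget(IPC_PRIVATE, 4096, IPC_CREAT | 0600);
    if (id < 0)
        return -1;

    char *w = shmat(id, NULL, 0);            /* read-write attachment */
    char *r = shmat(id, NULL, SHM_RDONLY);   /* second, read-only attachment */

    /* Mark for removal now; the segment is destroyed after the last detach. */
    shmctl(id, IPC_RMID, NULL);

    if (w == (char *)-1 || r == (char *)-1) {
        if (w != (char *)-1)
            shmdt(w);
        if (r != (char *)-1)
            shmdt(r);
        return -1;
    }

    strcpy(w, msg);
    int same = strcmp(r, msg) == 0;

    shmdt(w);
    shmdt(r);
    return same ? 0 : -1;
}
```

Both attachments are separate VMAs over the same backing file, so the write through `w` is visible through `r` without any copy.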

The shmem_get_inode function:

    static struct inode *shmem_get_inode(struct super_block *sb, const struct inode *dir,
                                         umode_t mode, dev_t dev, unsigned long flags)
    {
        struct inode *inode;
        struct shmem_inode_info *info;
        struct shmem_sb_info *sbinfo = SHMEM_SB(sb);
        ino_t ino;

        if (shmem_reserve_inode(sb, &ino))
            return NULL;

        inode = new_inode(sb);
        if (inode) {
            ...
            switch (mode & S_IFMT) {
            ...
            case S_IFREG:
                inode->i_mapping->a_ops = &shmem_aops;
                inode->i_op = &shmem_inode_operations;
                inode->i_fop = &shmem_file_operations;
                mpol_shared_policy_init(&info->policy,
                                        shmem_get_sbmpol(sbinfo));
                break;
            ...
            }
            lockdep_annotate_inode_mutex_key(inode);
        } else
            shmem_free_inode(sb);
        return inode;
    }

Creating shmem physical pages

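When a page fault occurs and vma->vm_ops->fault exists, do_fault, the handler for file-backed faults, calls that fault function. For details, see the article "Linux mmap系统调用视角看缺页中断" (page faults seen from the mmap system call) on nginux's CSDN blog.

A shmem page fault therefore calls the fault function in shmem_vm_ops, namely shmem_fault. The core function is shmem_getpage_gfp, which either allocates a new page or finds the page in the swap cache or the swap area.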

    static vm_fault_t shmem_fault(struct vm_fault *vmf)
    {
        struct vm_area_struct *vma = vmf->vma;
        struct inode *inode = file_inode(vma->vm_file);
        gfp_t gfp = mapping_gfp_mask(inode->i_mapping);
        enum sgp_type sgp;
        int err;
        vm_fault_t ret = VM_FAULT_LOCKED;

        ...
        /* fallocate-related handling, not analyzed here */
        ...

        sgp = SGP_CACHE;

        if ((vma->vm_flags & VM_NOHUGEPAGE) ||
            test_bit(MMF_DISABLE_THP, &vma->vm_mm->flags))
            sgp = SGP_NOHUGE;
        else if (vma->vm_flags & VM_HUGEPAGE)
            sgp = SGP_HUGE;

        /* core function that allocates the physical page on a fault */
        err = shmem_getpage_gfp(inode, vmf->pgoff, &vmf->page, sgp,
                                gfp, vma, vmf, &ret);
        if (err)
            return vmf_error(err);
        return ret;
    }

shmem_getpage_gfp
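Before reading the kernel function, it may help to see its three outcomes (found in cache, brought back from swap, freshly allocated) in a toy model. The sketch assumes the XArray convention that a value entry has bit 0 set, as xa_is_value() tests; the `toy_*` names are hypothetical, and the "swap in" step is reduced to replacing the slot.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Value entries are tagged in bit 0, in the style of xa_mk_value()/xa_is_value(). */
void *toy_mk_value(unsigned long v)
{
    return (void *)(((uintptr_t)v << 1) | 1);
}

int toy_is_value(const void *entry)
{
    return ((uintptr_t)entry & 1) != 0;
}

enum toy_outcome { TOY_FOUND_IN_CACHE, TOY_SWAPPED_IN, TOY_ALLOCATED };

struct toy_page {
    int data;
};

/*
 * slots[] models the per-file page cache. Each slot is NULL (nothing yet),
 * a page pointer (page is cached), or a tagged value (page is in swap).
 * `fresh` stands for the page a real allocator or swap-in would provide.
 */
enum toy_outcome toy_getpage(void **slots, size_t index,
                             struct toy_page *fresh, struct toy_page **pagep)
{
    void *entry = slots[index];

    if (toy_is_value(entry)) {
        /* swap entry: bring the page back and put it into the slot */
        slots[index] = fresh;
        *pagep = fresh;
        return TOY_SWAPPED_IN;
    }
    if (entry != NULL) {
        *pagep = entry;
        return TOY_FOUND_IN_CACHE;
    }
    /* nothing there: allocate and insert */
    slots[index] = fresh;
    *pagep = fresh;
    return TOY_ALLOCATED;
}
```

Page pointers are at least word-aligned, so bit 0 is free to distinguish "this slot holds a swap entry" from "this slot holds a page" without any extra storage.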

    /*
     * shmem_getpage_gfp - find page in cache, or get from swap, or allocate
     *
     * If we allocate a new one we do not mark it dirty. That's up to the
     * vm. If we swap it in we mark it dirty since we also free the swap
     * entry since a page cannot live in both the swap and page cache.
     *
     * vmf and fault_type are only supplied by shmem_fault:
     * otherwise they are NULL.
     */
    static int shmem_getpage_gfp(struct inode *inode, pgoff_t index,
            struct page **pagep, enum sgp_type sgp, gfp_t gfp,
            struct vm_area_struct *vma, struct vm_fault *vmf,
            vm_fault_t *fault_type)
    {
        struct address_space *mapping = inode->i_mapping;
        struct shmem_inode_info *info = SHMEM_I(inode);
        ...
        /*
         * Look in the cache behind mapping first; if the page is not there,
         * try to find it in the swap cache and the swap area.
         */
        page = find_lock_entry(mapping, index);
        if (xa_is_value(page)) {
            error = shmem_swapin_page(inode, index, &page,
                                      sgp, gfp, vma, fault_type);
            if (error == -EEXIST)
                goto repeat;

            *pagep = page;
            return error;
        }

        if (page && sgp == SGP_WRITE)
            mark_page_accessed(page);
        ...
    alloc_huge:
        page = shmem_alloc_and_acct_page(gfp, inode, index, true);
        if (IS_ERR(page)) {
    alloc_nohuge:
            /*
             * Ends up in alloc_page to create the physical page, and
             * calls __SetPageSwapBacked(page) internally.
             */
            page = shmem_alloc_and_acct_page(gfp, inode,
                                             index, false);
        }
        ...
        if (sgp == SGP_WRITE)
            __SetPageReferenced(page);

        /*
         * Add the new page to the cache behind mapping; this also sets
         * page->mapping and page->index.
         */
        error = shmem_add_to_page_cache(page, mapping, hindex,
                                        NULL, gfp & GFP_RECLAIM_MASK,
                                        charge_mm);
        if (error)
            goto unacct;

        /*
         * Put the page on an LRU list; for shmem this is the inactive anon
         * LRU. The kernel picks the list with page_is_file_lru(), which is
         * !PageSwapBacked: if true the page goes to the file LRU, otherwise
         * to the anon LRU.
         */
        lru_cache_add(page);
        alloced = true;
        ...
    }

Key points:

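shmem_alloc_and_acct_page: ends up calling alloc_page to create the physical page and calls __SetPageSwapBacked(page) internally, so a shmem page is SwapBacked.

shmem_add_to_page_cache: adds the new page to the cache behind mapping, sets page->mapping and page->index, and increments the NR_FILE_PAGES and NR_SHMEM counters.

lru_cache_add: puts the page on an LRU list; for shmem this is the inactive anon LRU. The kernel decides which LRU to use through page_is_file_lru, which evaluates !PageSwapBacked: if true the page goes to the file LRU, otherwise to the anon LRU.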
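The LRU decision in the last point is a single negation. The following user-space model is a sketch of that rule only; the `toy_*` types are hypothetical, and the active/inactive and unevictable distinctions are ignored.

```c
#include <assert.h>
#include <stdbool.h>

/* Only the two inactive lists are modeled here. */
enum toy_lru { TOY_LRU_INACTIVE_ANON, TOY_LRU_INACTIVE_FILE };

/* Hypothetical page with just the one flag that matters for this decision. */
struct toy_page {
    bool swap_backed;   /* models PG_swapbacked */
};

/* Models page_is_file_lru(): a page is "file" when it is not swap backed. */
bool toy_page_is_file_lru(const struct toy_page *page)
{
    return !page->swap_backed;
}

enum toy_lru toy_pick_lru(const struct toy_page *page)
{
    return toy_page_is_file_lru(page) ? TOY_LRU_INACTIVE_FILE
                                      : TOY_LRU_INACTIVE_ANON;
}
```

This is why a shmem page, although it sits in a page cache and is counted in NR_FILE_PAGES, is aged and reclaimed together with anonymous pages.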

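shmem page writeback

shmem physical pages are reclaimed when memory is tight. Unlike file-backed pages, they cannot be written back to a disk file, so the flow partly resembles that of anonymous pages: the page is swapped out to the swap area through pageout. The call stack is: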

    #0 shmem_writepage (page=0xffffea0000008000, wbc=0xffff888004857440) at mm/shmem.c:1371
    #1 0xffffffff8135e671 in pageout (page=0xffffea0000008000, mapping=0xffff888000d78858) at mm/vmscan.c:830
    #2 0xffffffff8134f168 in shrink_page_list (page_list=0xffff888004857850, pgdat=0xffff888007fda000, sc=0xffff888004857d90, ttu_flags=(unknown: 0), stat=0xffff888004857890, ignore_references=false) at mm/vmscan.c:1355
    #3 0xffffffff81351477 in shrink_inactive_list (nr_to_scan=1, lruvec=0xffff888005c6a000, sc=0xffff888004857d90, lru=LRU_INACTIVE_ANON) at mm/vmscan.c:1962
    #4 0xffffffff81352312 in shrink_list (lru=LRU_INACTIVE_ANON, nr_to_scan=1, lruvec=0xffff888005c6a000, sc=0xffff888004857d90) at mm/vmscan.c:2172
    #5 0xffffffff81352c97 in shrink_lruvec (lruvec=0xffff888005c6a000, sc=0xffff888004857d90) at mm/vmscan.c:2467
    #6 0xffffffff813533f1 in shrink_node_memcgs (pgdat=0xffff888007fda000, sc=0xffff888004857d90) at mm/vmscan.c:2655
    #7 0xffffffff81353b0a in shrink_node (pgdat=0xffff888007fda000, sc=0xffff888004857d90) at mm/vmscan.c:2772
    #8 0xffffffff81355cd8 in kswapd_shrink_node (pgdat=0xffff888007fda000, sc=0xffff888004857d90) at mm/vmscan.c:3514
    #9 0xffffffff813561ac in balance_pgdat (pgdat=0xffff888007fda000, order=0, highest_zoneidx=0) at mm/vmscan.c:3672
    #10 0xffffffff81356ae4 in kswapd (p=0xffff888007fda000) at mm/vmscan.c:3930
    #11 0xffffffff811a2249 in kthread (_create=) at kernel/kthread.c:292

During reclaim, shmem_writepage moves the shmem page into the swap cache:

    /*
     * Move the page from the page cache to the swap cache.
     */
    static int shmem_writepage(struct page *page, struct writeback_control *wbc)
    {
        struct shmem_inode_info *info;
        struct address_space *mapping;
        struct inode *inode;
        swp_entry_t swap;
        pgoff_t index;

        VM_BUG_ON_PAGE(PageCompound(page), page);
        BUG_ON(!PageLocked(page));
        mapping = page->mapping;
        index = page->index;
        inode = mapping->host;
        info = SHMEM_I(inode);
        if (info->flags & VM_LOCKED)
            goto redirty;
        if (!total_swap_pages)
            goto redirty;
        ...
        /* get a free slot from the swap area */
        swap = get_swap_page(page);
        if (!swap.val)
            goto redirty;

        /*
         * Add inode to shmem_unuse()'s list of swapped-out inodes,
         * if it's not already there. Do it now before the page is
         * moved to swap cache, when its pagelock no longer protects
         * the inode from eviction. But don't unlock the mutex until
         * we've incremented swapped, because shmem_unuse_inode() will
         * prune a !swapped inode from the swaplist under this mutex.
         */
        mutex_lock(&shmem_swaplist_mutex);
        /* link the current shmem_inode_info into shmem_swaplist */
        if (list_empty(&info->swaplist))
            list_add(&info->swaplist, &shmem_swaplist);

        /* add the page to the swap cache address_space */
        if (add_to_swap_cache(page, swap,
                __GFP_HIGH | __GFP_NOMEMALLOC | __GFP_NOWARN,
                NULL) == 0) {
            spin_lock_irq(&info->lock);
            shmem_recalc_inode(inode);
            info->swapped++;
            spin_unlock_irq(&info->lock);

            swap_shmem_alloc(swap);
            /* remove the page from the page cache address_space */
            shmem_delete_from_page_cache(page, swp_to_radix_entry(swap));

            mutex_unlock(&shmem_swaplist_mutex);
            BUG_ON(page_mapped(page));
            /*
             * Start writing to the swap device, which may be a disk
             * or zram compressed memory.
             */
            swap_writepage(page, wbc);
            return 0;
        }
        ...
        return 0;
    }

Note:

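- swap_writepage calls set_page_writeback to mark the page as under writeback. This affects the Writeback field of /proc/meminfo: whether an anonymous page is being written to the swap area (or compressed into zram) or a page-cache page dirtied through the write system call is being written back to a disk file, it is counted in NR_WRITEBACK and therefore shows up in the Writeback field.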
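To watch this counter while generating memory pressure, the Writeback field can be read from user space. The helpers below are a minimal sketch; `meminfo_kb` and `read_meminfo_kb` are hypothetical names, and the parser assumes the usual "Key:   value kB" line layout of /proc/meminfo.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/*
 * Return the value in kB for `key` (for example "Writeback") found in
 * meminfo-formatted text, or -1 if the key is missing or malformed.
 * Requiring ':' right after the key keeps "Writeback" from matching
 * the "WritebackTmp" line.
 */
long meminfo_kb(const char *text, const char *key)
{
    assert(text != NULL && key != NULL);

    size_t klen = strlen(key);
    const char *line = text;

    while (line && *line) {
        if (strncmp(line, key, klen) == 0 && line[klen] == ':') {
            long kb;
            if (sscanf(line + klen + 1, "%ld", &kb) == 1)
                return kb;
            return -1;
        }
        line = strchr(line, '\n');
        if (line)
            line++;             /* move past the newline to the next line */
    }
    return -1;
}

/* Read /proc/meminfo and look up one field; returns -1 on failure. */
long read_meminfo_kb(const char *key)
{
    char buf[8192];
    FILE *f = fopen("/proc/meminfo", "r");
    if (!f)
        return -1;

    size_t n = fread(buf, 1, sizeof(buf) - 1, f);
    fclose(f);
    buf[n] = '\0';
    return meminfo_kb(buf, key);
}
```

Calling `read_meminfo_kb("Writeback")` and `read_meminfo_kb("Shmem")` before and after a swap-heavy workload gives a rough view of how many shmem pages exist and how much writeback is in flight.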