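Extracting high-speed direct links from Baidu Netdisk

Note: to prevent abuse of the interface, the host is masked here. This is for reference only; explore the interface yourself.

Recently I came across an interface for Baidu Netdisk, so I built this site around it.

The interface lives under www.an***.com/pan/..... and that is where the exploration began.

First comes www.a*******.com/list (the reference code below calls it as /pan/list). It takes a POST request whose body carries the Baidu Netdisk share link and extraction code, and it returns several fields: shareId, shareUk, surl, bdclnd, and fs_id, which are needed later to fetch the direct link. The remaining fields are file information (size, name, and so on).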

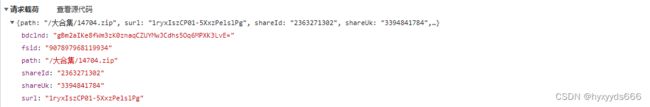

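Request:

Response:

With that known, the first problem shows up: once you start writing the Python and send the data, you get back a 403. That is easy to explain: no permission. So bring in the request headers: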

header={"Accept":"*/*","Accept-Encoding":"gzip, deflate","Accept-Language":"zh-CN,zh;q=0.9","Connection":"keep-alive","Content-Length":"1911","Content-Type":"application/json; charset=UTF-8","Cookie":"__51vcke__JQGRABELTIK919aI=f5234120-4649-56e4-9626-da06ddcd4e8c; __51vuft__JQGRABELTIK919aI=1657722727458; __51uvsct__JQGRABELTIK919aI=4; __vtins__JQGRABELTIK919aI=%7B%22sid%22%3A%20%229c805819-ae46-5d39-93fb-025a7c8d0772%22%2C%20%22vd%22%3A%202%2C%20%22stt%22%3A%206946%2C%20%22dr%22%3A%206946%2C%20%22expires%22%3A%201657789254600%2C%20%22ct%22%3A%201657787454600%7D","Host":"www.ancode.top","Origin":"http://www.a******.com","Referer":"http://www.a******.com/","User-Agent":"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.0.0 Safari/537.36","X-Requested-With":"XMLHttpRequest",}
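The 403 suggests the server expects browser-like headers. As a rough sketch of attaching them, the snippet below builds (but does not send) a POST request with the standard library's `urllib.request`. The host `example.invalid` is a placeholder because the real one is masked, `build_post` is a hypothetical helper, and the subset of headers kept here is an assumption, since the post does not establish which ones the server actually checks. `Content-Length` is left out on purpose: the HTTP client computes it from the body when the request is sent, so a hardcoded value can only be wrong.

```python
import json
import urllib.request

# Placeholder host: the post masks the real one on purpose.
HOST = "example.invalid"

# Subset of the browser headers quoted above. Which of them the server
# actually checks is not known; this selection is an assumption.
BROWSER_HEADERS = {
    "Accept": "*/*",
    "Content-Type": "application/json; charset=UTF-8",
    "Origin": f"http://{HOST}",
    "Referer": f"http://{HOST}/",
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/103.0.0.0 Safari/537.36"
    ),
    "X-Requested-With": "XMLHttpRequest",
}


def build_post(path, payload):
    """Return an unsent POST request with a JSON body and browser-like headers."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        f"http://{HOST}{path}", data=body, headers=BROWSER_HEADERS, method="POST"
    )


# The share link and code below are dummy values, not a real share.
req = build_post("/pan/list", {"link": "https://pan.baidu.com/s/...", "pwd": "xxxx"})
print(req.get_method(), req.full_url)
print(req.header_items())
```

Passing the request to `urllib.request.urlopen` would send it; that step is omitted so the sketch runs offline.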

OK, the file information comes back.

{"code":0,"msg":"suc","shareUk":"1102886249261","shareId":"38694211620","bdclnd":"s7cCeoxL6CM/oFyqxKvoJqaCRQW7NSBYG5YJW8LsF/s=","data":[{"category":"5","fs_id":"923862963440171","isdir":"0","local_ctime":"1528261086","local_mtime":"1528261086","md5":"557f78673mce0c7ca2735b5849dc9170","path":"/DigitalLicense.exe","server_ctime":"1626177868","server_filename":"DigitalLicense.exe","server_mtime":"1626177868","size":"2.86MB","downing":0}],"surl":"1GROBHPI8E6WnhK6j7gkR0g"}

Next, the endpoint for fetching the direct link: www.a*******.com/pan/getsuperlink

Its parameters were already mentioned above: surl, shareId, shareUk, bdclnd, and fsid (the fs_id from the file information).
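The mapping from the file information to those five parameters can be sketched as follows. The sample is a trimmed copy of the response shown above; `superlink_payload` and its `index` argument are hypothetical names introduced for illustration, and the request key names follow the reference code in this post.

```python
import json

# Trimmed copy of the sample file-info response shown above.
SAMPLE = '''{"code":0,"msg":"suc","shareUk":"1102886249261","shareId":"38694211620",
"bdclnd":"s7cCeoxL6CM/oFyqxKvoJqaCRQW7NSBYG5YJW8LsF/s=",
"data":[{"fs_id":"923862963440171","isdir":"0",
"server_filename":"DigitalLicense.exe","size":"2.86MB"}],
"surl":"1GROBHPI8E6WnhK6j7gkR0g"}'''


def superlink_payload(info, index=0):
    """Map a file-info dict onto the five fields of the direct-link request."""
    entry = info["data"][index]
    return {
        "surl": info["surl"],
        "shareId": info["shareId"],
        "shareUk": info["shareUk"],
        "bdclnd": info["bdclnd"],
        # Note the rename: fs_id in the response, fsid in the request.
        "fsid": entry["fs_id"],
    }


info = json.loads(SAMPLE)
payload = superlink_payload(info)
print(json.dumps(payload, indent=2))
```

Parsing with `json.loads` rather than `eval` keeps untrusted server output from being executed as Python, and it also copes with JSON literals such as `null` and `true`.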

It returns the direct link.

Request:

P.S. The path value here is optional.

Response:

OK, that yields the direct link right away.
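The response body itself is not reproduced in this post, so the following is only a sketch of reading the link out of the reply. It assumes the body is JSON with a `url` field, as the `dicts['url']` lookup in the reference code suggests, and that an empty body means the service could not resolve the share. `extract_direct_link` and the sample body are hypothetical.

```python
import json


def extract_direct_link(body):
    """Return the direct link from a reply body, or None if the body is empty."""
    if not body.strip():
        return None
    return json.loads(body)["url"]


# Hypothetical reply body; the real one is not shown in the post.
sample_body = '{"url": "http://example.invalid/DigitalLicense.exe"}'
print(extract_direct_link(sample_body))
print(extract_direct_link(""))
```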

代码参考

import json
import sys

import pyperclip
import requests

# The host is masked on purpose; fill in the real one yourself.
BASE_URL = 'http://www.a*****.com'

# Headers copied from the browser request; without them the server answers 403.
# Content-Length is left out because requests computes it from the body.
HEADERS = {
    "Accept": "*/*",
    "Accept-Encoding": "gzip, deflate",
    "Accept-Language": "zh-CN,zh;q=0.9",
    "Connection": "keep-alive",
    "Content-Type": "application/json; charset=UTF-8",
    "Cookie": "__51vcke__JQGRABELTIK919aI=f5234120-4649-56e4-9626-da06ddcd4e8c; __51vuft__JQGRABELTIK919aI=1657722727458; __51uvsct__JQGRABELTIK919aI=4; __vtins__JQGRABELTIK919aI=%7B%22sid%22%3A%20%229c805819-ae46-5d39-93fb-025a7c8d0772%22%2C%20%22vd%22%3A%202%2C%20%22stt%22%3A%206946%2C%20%22dr%22%3A%206946%2C%20%22expires%22%3A%201657789254600%2C%20%22ct%22%3A%201657787454600%7D",
    "Host": "www.a*****.com",
    "Origin": "http://www.a*****.com",
    "Referer": "http://www.a*****.com/",
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.0.0 Safari/537.36",
    "X-Requested-With": "XMLHttpRequest",
}


def get_list(link, pwd):
    """Fetch the file information for a share link and its extraction code."""
    print('Fetching file information...')
    data = json.dumps({"link": link, "pwd": pwd})  # request body as JSON
    resp = requests.post(BASE_URL + '/pan/list', headers=HEADERS, data=data)
    if "null" in resp.text:
        print('Check that the share link and extraction code are correct and have not expired')
        sys.exit(1)
    return resp.json()  # file information as a dict (parsed as JSON, not eval)


def get_super_link(surl, shareId, shareUk, bdclnd, fsid):
    """Request the high-speed link; all parameters come from the file information."""
    print('Requesting the high-speed link...')
    data = json.dumps({"surl": surl, "shareId": shareId, "shareUk": shareUk, "bdclnd": bdclnd, "fsid": fsid})
    resp = requests.post(BASE_URL + '/pan/getsuperlink', headers=HEADERS, data=data)
    if not resp.text.strip():  # an empty body means the share could not be resolved
        print('Only single-file shares are supported for now; please create a new share link')
        sys.exit(1)
    return resp.json()['url']  # the high-speed link


if __name__ == '__main__':
    # Pass a valid Baidu Netdisk share link and extraction code.
    info = get_list('https://pan.baidu.com/s/1TV8eyxBO21xVQ4hd_QVOuw', 'j6a')
    # print(info)
    first_file = info['data'][0]
    link = get_super_link(info['surl'], info['shareId'], info['shareUk'], info['bdclnd'], first_file['fs_id'])
    pyperclip.copy(link)  # copy the high-speed link to the clipboard
    print(link)
    print('The high-speed link has been copied to the clipboard')
    print("File name: " + first_file['server_filename'])
    print("File size: " + first_file['size'])
    print("Note: 1. You must download with a download manager such as IDM; a browser will not work")