[Kubernetes Deployment] Building a K8s 1.23.0 Cluster with kubeadm

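Table of Contents

- I. Initial Preparation
- II. Install kubeadm
- III. Initialize the Master Node
- IV. Join Worker Nodes to the Cluster
- V. Deploy the CNI Network Plugin
- VI. Other Configuration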

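Starting with Kubernetes 1.24 (inclusive), the built-in dockershim has been removed, so Docker Engine can no longer be used directly as the container runtime. If you still need Docker, install the cri-dockerd adapter. This article uses Kubernetes 1.23.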

I. Initial Preparation

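1. Disable the firewall and SELinux

CentOS 7 enables firewalld by default, and the Kubernetes master and worker nodes exchange a lot of network traffic. The secure approach is to open, in the firewall, the ports that the components use to talk to each other:

| Component | Default port(s) |
|---|---|
| kube-apiserver | 6443 (HTTPS, secure port); the insecure HTTP port 8080 is disabled in v1.23 |
| kube-controller-manager | 10257 (HTTPS); the legacy insecure port 10252 is not served by a kubeadm v1.23 cluster |
| kube-scheduler | 10259 (HTTPS); the legacy insecure port 10251 is not served by a kubeadm v1.23 cluster |
| kubelet | 10250 (API); 10255 (read-only, disabled by default) |
| etcd | 2379 (client access), 2380 (traffic between etcd cluster members) |
| Cluster DNS | 53 (TCP/UDP) |

This list is not exhaustive: every additional component needs its own ports opened, for example 179 for the Calico CNI plugin (BGP) and 5000 for a private image registry. Open the ports required by the components your cluster actually runs.

In a trusted network environment you can simply stop and disable the firewalld service instead: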

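```shell
systemctl stop firewalld
systemctl disable firewalld

# Disable SELinux: setenforce takes effect immediately, the config change persists across reboots
setenforce 0
sed -i -r 's/SELINUX=[ep].*/SELINUX=disabled/g' /etc/selinux/config
```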
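If you would rather keep firewalld running, the ports can be opened instead of disabling the service. The sketch below assumes the v1.23 port assignments from the upstream "Ports and Protocols" page plus Calico's BGP port; the `MASTER_PORTS`/`WORKER_PORTS` arrays and the `run`/`open_ports` helpers are illustrative names, and `DRY_RUN=1` only prints the `firewall-cmd` commands.

```shell
# Sketch: open the Kubernetes ports in firewalld instead of disabling it.
# 179/tcp is Calico BGP; depending on its encapsulation mode Calico may need more.
MASTER_PORTS=(6443/tcp 2379-2380/tcp 10250/tcp 10257/tcp 10259/tcp 179/tcp)
WORKER_PORTS=(10250/tcp 30000-32767/tcp 179/tcp)   # kubelet, NodePort range, BGP

# Run a command, or only print it when DRY_RUN=1
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Permanently open each given port/protocol, then reload the firewall rules
open_ports() {
  local p
  for p in "$@"; do
    run firewall-cmd --permanent --add-port="$p"
  done
  run firewall-cmd --reload
}

# Usage on the master:  open_ports "${MASTER_PORTS[@]}"
# Usage on a worker:    open_ports "${WORKER_PORTS[@]}"
```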

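2. Synchronize time so that clocks agree across the cluster

`ntpdate` performs a one-off sync; run it periodically (or use chronyd) to keep the clocks aligned.

```shell
yum -y install ntpdate
ntpdate ntp1.aliyun.com
```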

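3. Disable swap

By default the kubelet refuses to start while swap is enabled.

```shell
# Turn swap off now, then comment out the swap entries in /etc/fstab so it stays off after reboot
swapoff --all
sed -i -r '/swap/ s/^/#/' /etc/fstab
```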
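To confirm swap is really off, check that /proc/swaps lists nothing beyond its header line. A minimal sketch; `swap_is_off` is a hypothetical helper that takes the file path as an optional argument so it can be tried against a sample file.

```shell
# Hypothetical helper: succeeds only if no swap area is active.
# /proc/swaps always starts with a header line; every further line is an active swap area.
swap_is_off() {
  local swaps_file="${1:-/proc/swaps}"
  [ -r "$swaps_file" ] || return 2        # cannot tell: file missing or unreadable
  [ -z "$(awk 'NR > 1' "$swaps_file")" ]  # skip the header, expect nothing else
}

# Usage:
#   swap_is_off && echo "swap is disabled" || echo "swap is still active"
```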

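4. Tune kernel parameters: enable bridge netfilter and IP forwarding

```shell
# Load the bridge netfilter module first; the net.bridge.* keys only exist once it is loaded
modprobe br_netfilter
# Verify that the module is loaded
lsmod | grep br_netfilter
# Load it automatically at boot as well
echo br_netfilter > /etc/modules-load.d/k8s.conf

cat > /etc/sysctl.d/kubernetes.conf <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF

# "sysctl -p" only reads /etc/sysctl.conf; --system also loads /etc/sysctl.d/*.conf
sysctl --system
```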
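To verify the settings, read the values back from /proc/sys, where a key such as net.ipv4.ip_forward maps to /proc/sys/net/ipv4/ip_forward. `check_sysctl` is a hypothetical helper; its optional third argument replaces /proc/sys so it can be tested against a sample directory.

```shell
# Hypothetical helper: succeeds if the sysctl KEY currently has the value EXPECTED.
check_sysctl() {
  local key="$1" expected="$2" root="${3:-/proc/sys}"
  local path="$root/${key//.//}"   # net.ipv4.ip_forward -> <root>/net/ipv4/ip_forward
  if [ ! -r "$path" ]; then
    # net.bridge.* keys only appear after br_netfilter is loaded
    echo "missing: $key" >&2
    return 1
  fi
  [ "$(cat "$path")" = "$expected" ]
}

# Usage:
#   for k in net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward; do
#     check_sysctl "$k" 1 && echo "ok: $k" || echo "not set: $k"
#   done
```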

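5. Configure IPVS

kube-proxy can implement Kubernetes Services in two proxy modes, iptables and IPVS; IPVS generally performs better, especially with a large number of Services. To use IPVS, the IPVS kernel modules must be loaded manually. Loading them does not switch modes by itself: kube-proxy defaults to iptables, so its mode must also be set to `ipvs` in the `kube-proxy` ConfigMap once the cluster is running.

```shell
yum -y install ipset ipvsadm

# On kernels 4.19 and later, nf_conntrack_ipv4 has been merged into nf_conntrack
cat > /etc/sysconfig/modules/ipvs.modules <<EOF
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF

chmod +x /etc/sysconfig/modules/ipvs.modules
# Run the script
/etc/sysconfig/modules/ipvs.modules
# Verify that the IPVS modules are loaded
lsmod | grep -e ip_vs -e nf_conntrack_ipv4
```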
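Once the cluster is up (Section III), kube-proxy can be switched to IPVS by editing its ConfigMap and recreating its Pods. A minimal sketch, assuming the kubeadm-generated `kube-proxy` ConfigMap still contains the default `mode: ""` line; `set_ipvs_mode` is a hypothetical filter, and `kubectl -n kube-system edit configmap kube-proxy` achieves the same thing interactively.

```shell
# Hypothetical filter: rewrite only the kube-proxy `mode: ""` line to IPVS,
# preserving its indentation; every other line passes through unchanged.
set_ipvs_mode() {
  sed -E 's/^([[:space:]]*)mode: ""[[:space:]]*$/\1mode: "ipvs"/'
}

# Usage (on the master):
#   kubectl -n kube-system get configmap kube-proxy -o yaml | set_ipvs_mode | kubectl replace -f -
#   kubectl -n kube-system delete pod -l k8s-app=kube-proxy   # Pods are recreated with the new mode
#   ipvsadm -Ln                                               # IPVS virtual servers should now be listed
```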

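6. Install Docker

```shell
# Point the CentOS base and EPEL repositories at the Aliyun mirrors
curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo
curl -o /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo
yum makecache

# yum-utils provides the yum-config-manager program
yum install -y yum-utils

# Add the docker-ce repository (Aliyun mirror) with yum-config-manager
yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# List the docker-ce versions available in the configured repositories
yum list docker-ce --showduplicates

# Install a specific docker-ce version; --setopt=obsoletes=0 disables yum's obsoletes
# processing so that yum does not substitute a newer package for the requested one
yum -y install --setopt=obsoletes=0 docker-ce-18.06.3.ce-3.el7
```

Docker uses the cgroupfs cgroup driver by default, while kubeadm (v1.22 and later) configures the kubelet with the systemd driver. The two drivers must match, so switch Docker to systemd. The `registry-mirrors` entry is an account-specific Aliyun image accelerator address; replace it with your own.

```shell
mkdir -p /etc/docker
cat <<EOF > /etc/docker/daemon.json
{
  "registry-mirrors": ["https://aoewjvel.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF

# Start Docker now and enable it at boot
systemctl enable docker --now
```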
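Docker only reads daemon.json at startup, so if Docker was already running when the file was written, restart it with `systemctl restart docker`. The driver actually in use can then be read with `docker info --format '{{.CgroupDriver}}'`; the hypothetical `check_cgroup_driver` wrapper below simply compares that value with `systemd`.

```shell
# Hypothetical helper: succeeds if Docker reports the systemd cgroup driver.
check_cgroup_driver() {
  local driver
  driver="$(docker info --format '{{.CgroupDriver}}' 2>/dev/null)"
  if [ "$driver" = "systemd" ]; then
    echo "cgroup driver: systemd"
  else
    echo "cgroup driver is '${driver:-unknown}', expected systemd" >&2
    return 1
  fi
}

# Usage:
#   check_cgroup_driver || systemctl restart docker
```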

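7. Hostname resolution

Add all cluster hosts to /etc/hosts on every node:

```shell
cat >> /etc/hosts << EOF
16.32.15.200 master
16.32.15.201 node1
16.32.15.202 node2
EOF
```

Set the hostname on each host, running each command only on the matching machine. Do this before `kubeadm init` or `kubeadm join`, because kubeadm registers the node under its current hostname:

```shell
# On 16.32.15.200 ("&& bash" opens a new shell so the prompt shows the new name)
hostnamectl set-hostname master && bash
# On 16.32.15.201
hostnamectl set-hostname node1 && bash
# On 16.32.15.202
hostnamectl set-hostname node2 && bash
```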
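Appending with `cat >>` duplicates the entries every time the step is re-run. A sketch of an idempotent alternative; `add_host` is a hypothetical helper that takes the hosts file path as an optional third argument.

```shell
# Hypothetical helper: append "IP NAME" unless an uncommented line already maps NAME.
add_host() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  if awk -v n="$name" '
       /^[[:space:]]*#/ { next }                                # ignore comment lines
       { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }     # fields 2.. are hostnames
       END { exit !found }' "$file" 2>/dev/null; then
    return 0   # entry already present; leave the file untouched
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Usage (on every node):
#   add_host 16.32.15.200 master
#   add_host 16.32.15.201 node1
#   add_host 16.32.15.202 node2
```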

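II. Install kubeadm

Configure a domestic (Aliyun) yum mirror, then install kubeadm, kubelet and kubectl in one step:

```shell
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=0
EOF

yum -y install --setopt=obsoletes=0 kubeadm-1.23.0 kubelet-1.23.0 kubectl-1.23.0
```

kubeadm deploys the main Kubernetes components as static Pods that the kubelet runs in containers, so the kubelet service must be enabled. Until `kubeadm init` (or `kubeadm join`) writes its configuration, the kubelet restarts every few seconds; this is expected.

```shell
systemctl enable kubelet.service --now
```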

III. Initialize the Master Node

Run the following on the master node only:

```shell
kubeadm init \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version=v1.23.0 \
  --pod-network-cidr=192.168.0.0/16 \
  --service-cidr=10.96.0.0/12 \
  --apiserver-advertise-address=16.32.15.200 \
  --ignore-preflight-errors=all
```

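- `--image-repository`: pull the control-plane images from the Aliyun mirror instead of the default registry (k8s.gcr.io for v1.23)
- `--kubernetes-version`: the Kubernetes version to deploy
- `--pod-network-cidr`: the Pod network range; the CNI plugin must be configured with the same range
- `--service-cidr`: the Service (ClusterIP) network range
- `--apiserver-advertise-address`: the IP address the API server advertises, i.e. the master's address
- `--ignore-preflight-errors`: preflight check errors to ignore; `all` suppresses every check, which can hide real problems (such as swap still being enabled), so consider listing specific checks instead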
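The Pod range, the Service range and the nodes' own network must not overlap, otherwise routing between them breaks. A pure-bash IPv4 sketch that checks this; `ip_to_int` and `cidr_overlap` are hypothetical helpers, and the node subnet 16.32.15.0/24 in the usage line is an assumption (the article only lists the node IPs).

```shell
# Hypothetical helper: dotted IPv4 address -> 32-bit integer
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Hypothetical helper: succeeds if two CIDR blocks overlap.
# Two blocks overlap iff they agree on all bits of the shorter prefix.
cidr_overlap() {
  local ip1="${1%/*}" len1="${1#*/}" ip2="${2%/*}" len2="${2#*/}"
  local len=$(( len1 < len2 ? len1 : len2 ))
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip1") & mask) == ($(ip_to_int "$ip2") & mask) ))
}

# Usage:
#   for pair in "192.168.0.0/16 10.96.0.0/12" "192.168.0.0/16 16.32.15.0/24" "10.96.0.0/12 16.32.15.0/24"; do
#     cidr_overlap $pair && echo "OVERLAP: $pair"
#   done
```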

If initialization succeeds, the output looks like this:

```text
[init] Using Kubernetes version: v1.23.0
[preflight] Running pre-flight checks
[preflight] Pulling images required for setting up a Kubernetes cluster
[preflight] This might take a minute or two, depending on the speed of your internet connection
[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
[certs] Using certificateDir folder "/etc/kubernetes/pki"
[certs] Generating "ca" certificate and key
[certs] Generating "apiserver" certificate and key
[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local localhost.localdomain] and IPs [10.96.0.1 16.32.15.200]
[certs] Generating "apiserver-kubelet-client" certificate and key
[certs] Generating "front-proxy-ca" certificate and key
[certs] Generating "front-proxy-client" certificate and key
[certs] Generating "etcd/ca" certificate and key
[certs] Generating "etcd/server" certificate and key
[certs] etcd/server serving cert is signed for DNS names [localhost localhost.localdomain] and IPs [16.32.15.200 127.0.0.1 ::1]
[certs] Generating "etcd/peer" certificate and key
[certs] etcd/peer serving cert is signed for DNS names [localhost localhost.localdomain] and IPs [16.32.15.200 127.0.0.1 ::1]
[certs] Generating "etcd/healthcheck-client" certificate and key
[certs] Generating "apiserver-etcd-client" certificate and key
[certs] Generating "sa" key and public key
[kubeconfig] Using kubeconfig folder "/etc/kubernetes"
[kubeconfig] Writing "admin.conf" kubeconfig file
[kubeconfig] Writing "kubelet.conf" kubeconfig file
[kubeconfig] Writing "controller-manager.conf" kubeconfig file
[kubeconfig] Writing "scheduler.conf" kubeconfig file
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Starting the kubelet
[control-plane] Using manifest folder "/etc/kubernetes/manifests"
[control-plane] Creating static Pod manifest for "kube-apiserver"
[control-plane] Creating static Pod manifest for "kube-controller-manager"
[control-plane] Creating static Pod manifest for "kube-scheduler"
[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"
[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s
[apiclient] All control plane components are healthy after 6.502504 seconds
[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[kubelet] Creating a ConfigMap "kubelet-config-1.23" in namespace kube-system with the configuration for the kubelets in the cluster
NOTE: The "kubelet-config-1.23" naming of the kubelet ConfigMap is deprecated. Once the UnversionedKubeletConfigMap feature gate graduates to Beta the default name will become just "kubelet-config". Kubeadm upgrade will handle this transition transparently.
[upload-certs] Skipping phase. Please see --upload-certs
[mark-control-plane] Marking the node localhost.localdomain as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]
[mark-control-plane] Marking the node localhost.localdomain as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]
[bootstrap-token] Using token: de4oak.y15qhm3uqgzimflo
[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles
[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes
[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key
[addons] Applied essential addon: CoreDNS
[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 16.32.15.200:6443 --token de4oak.y15qhm3uqgzimflo \
  --discovery-token-ca-cert-hash sha256:0f54086e09849ec84de3f3790b0c042d4a21f4cee54f57775e82ec0fe5e3d14c
```

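Because Kubernetes authenticates clients with CA-signed certificates by default, kubectl must be given the admin credentials (a kubeconfig file) before it can access the master:

```shell
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```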

With the credentials in place, kubectl can be used to access and operate the cluster. For example, list the ConfigMaps in the kube-system namespace:

```shell
kubectl -n kube-system get configmap
```

```text
NAME DATA AGE
coredns 1 13m
extension-apiserver-authentication 6 13m
kube-proxy 2 13m
kube-root-ca.crt 1 13m
kubeadm-config 1 13m
kubelet-config-1.23 1 13m
```

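Keep the join command printed at the end of the init output, including its token; it is needed to add nodes to the cluster:

```shell
kubeadm join 16.32.15.200:6443 --token de4oak.y15qhm3uqgzimflo \
  --discovery-token-ca-cert-hash sha256:0f54086e09849ec84de3f3790b0c042d4a21f4cee54f57775e82ec0fe5e3d14c
```

If you lose the token, list the existing tokens with:

```shell
kubeadm token list
```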
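`kubeadm token list` shows the tokens but not the `--discovery-token-ca-cert-hash` value. The kubeadm documentation derives that hash from the cluster CA with an openssl pipeline; the hypothetical `ca_cert_hash` wrapper below runs it, assuming the default RSA CA at /etc/kubernetes/pki/ca.crt on the master.

```shell
# Hypothetical helper: print "sha256:<hex>" for the CA's public key (SubjectPublicKeyInfo, DER).
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  local hex
  hex="$(openssl x509 -pubkey -noout -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex | sed 's/^.* //')"   # keep only the hex digest
  echo "sha256:$hex"
}

# Usage (on the master), with a token from "kubeadm token list":
#   kubeadm join 16.32.15.200:6443 --token <token> --discovery-token-ca-cert-hash "$(ca_cert_hash)"
```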

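IV. Join Worker Nodes to the Cluster

On each worker node, after completing the preparation in Section I and adding the kubernetes.repo file from Section II, install kubeadm and kubelet; kubectl is not needed on worker nodes:

```shell
yum -y install --setopt=obsoletes=0 kubeadm-1.23.0 kubelet-1.23.0
systemctl enable kubelet --now
```

Join the cluster with `kubeadm join`, copying the command printed by `kubeadm init` on the master:

```shell
kubeadm join 16.32.15.200:6443 --token de4oak.y15qhm3uqgzimflo \
  --discovery-token-ca-cert-hash sha256:0f54086e09849ec84de3f3790b0c042d4a21f4cee54f57775e82ec0fe5e3d14c
```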

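After a node has joined, confirm on the master that it appears in the node list:

```shell
kubectl get nodes
```

```text
NAME STATUS ROLES AGE VERSION
localhost.localdomain NotReady control-plane,master 90m v1.23.0
node1 NotReady <none> 59m v1.23.0
node2 NotReady <none> 30m v1.23.0
```

All nodes report NotReady at this stage because no CNI network plugin has been deployed yet.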
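To list only the nodes that are not yet Ready, filter the STATUS column of `kubectl get nodes --no-headers`. A minimal sketch; `not_ready_nodes` is a hypothetical awk filter, and `kubectl wait` is shown as an alternative for scripts that should block until every node is Ready.

```shell
# Hypothetical filter: read `kubectl get nodes --no-headers` output on stdin and
# print the names of nodes whose STATUS (column 2) is anything other than exactly "Ready".
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Usage (on the master):
#   kubectl get nodes --no-headers | not_ready_nodes
# Or block until all nodes are Ready, giving up after 5 minutes:
#   kubectl wait --for=condition=Ready node --all --timeout=300s
```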

五、部署CNI网络插件

对于CNI网络插件可以有很多选择,比如Calico CNI:

calico.yaml下载地址

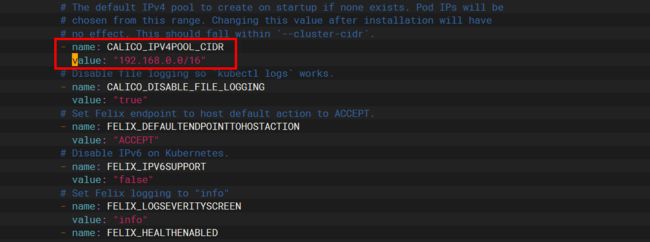

calico.yaml 下载好之后需要修改 CALICO_IPV4POOL_CIDR 值为初始化容器时 --pod-network-cidr向的网段,如下:
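A minimal sketch of that edit with sed, assuming the stock manifest layout in which the commented `# - name: CALICO_IPV4POOL_CIDR` line is immediately followed by its commented `#   value:` line. `set_calico_pool_cidr` is a hypothetical helper; sed keeps a backup as calico.yaml.bak. Check the result with `grep -A1 CALICO_IPV4POOL_CIDR calico.yaml` before applying.

```shell
# Hypothetical helper: uncomment CALICO_IPV4POOL_CIDR and set its value in place.
set_calico_pool_cidr() {
  local cidr="$1" file="${2:-calico.yaml}"
  # On the name line, drop the first "# "; "n" moves to the value line,
  # where "# " is dropped too and the value replaced.
  # "|" is the s-command delimiter because the CIDR itself contains "/".
  sed -i.bak "/# - name: CALICO_IPV4POOL_CIDR/{
s/# //
n
s/# //
s|value: .*|value: \"$cidr\"|
}" "$file"
}

# Usage:
#   set_calico_pool_cidr 192.168.0.0/16 calico.yaml
```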

```shell
kubectl apply -f calico.yaml
# Check whether the network Pods have been created
kubectl get pods -n kube-system
```

If the images download slowly, pull them manually. This must be done on every node:

```shell
# List the images referenced by the manifest
grep image calico.yaml
docker pull calico/cni:v3.15.1
docker pull calico/pod2daemon-flexvol:v3.15.1
docker pull calico/node:v3.15.1
docker pull calico/kube-controllers:v3.15.1
```
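Rather than copying each tag by hand, the image references can be extracted from the manifest and pulled in a loop. A sketch; `calico_images` is a hypothetical helper that assumes each image appears as an `image: <ref>` field (optionally quoted).

```shell
# Hypothetical helper: print each distinct image referenced in a manifest.
calico_images() {
  awk '{ for (i = 1; i < NF; i++) if ($i == "image:") print $(i + 1) }' "${1:-calico.yaml}" \
    | tr -d '"' \
    | sort -u
}

# Usage (on every node):
#   calico_images calico.yaml | xargs -n 1 docker pull
```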

Check that the nodes are now Ready:

```shell
kubectl get nodes
```

```text
NAME STATUS ROLES AGE VERSION
master Ready control-plane,master 3m48s v1.23.0
node1 Ready <none> 3m26s v1.23.0
node2 Ready <none> 3m19s v1.23.0
```

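VI. Other Configuration

1. Bootstrap tokens expire after 24 hours by default. After that, create a new token and print the complete join command with:

```shell
kubeadm token create --print-join-command
```

2. If cluster initialization fails, reset the node before running `kubeadm init` again:

```shell
kubeadm reset
```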
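`kubeadm reset` itself warns that it does not clean up CNI configuration, iptables/IPVS rules or kubeconfig files. A sketch of that extra cleanup, following the clean-up steps in the kubeadm documentation; `run` and `reset_node` are hypothetical helpers. It is destructive (it flushes every iptables rule on the host), so `DRY_RUN=1` prints the commands instead of running them.

```shell
# Run a command, or only print it when DRY_RUN=1
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: full node reset, including what "kubeadm reset" leaves behind.
# WARNING: flushes all iptables rules on this host.
reset_node() {
  run kubeadm reset -f                 # -f: do not prompt for confirmation
  run rm -rf /etc/cni/net.d            # CNI configuration
  run rm -f "$HOME/.kube/config"       # kubeconfig copied in Section III
  run iptables -F
  run iptables -t nat -F
  run iptables -t mangle -F
  run iptables -X
  run ipvsadm --clear                  # only relevant if kube-proxy used IPVS
}

# Usage: DRY_RUN=1 reset_node   (review the list first), then: reset_node
```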

3. If the Calico components never become Ready, pull the Calico images manually on every node, as described in Section V.

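4. Enable kubectl shell auto-completion

```shell
yum install -y bash-completion
source /usr/share/bash-completion/bash_completion
# Enable completion in the current shell
source <(kubectl completion bash)
# Persist it for the current user (~/.bashrc) and for all users' login shells (/etc/profile)
echo "source <(kubectl completion bash)" >> ~/.bashrc
echo "source <(kubectl completion bash)" >> /etc/profile
source /etc/profile
```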

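5. Make the master node schedulable by removing its taint

```shell
# v1.23: kubeadm taints the master with node-role.kubernetes.io/master:NoSchedule
# ("not found" errors reported for the worker nodes are harmless)
kubectl taint nodes --all node-role.kubernetes.io/master-
# v1.24 and later use node-role.kubernetes.io/control-plane:NoSchedule;
# on v1.23 this command reports that the taint is not found
kubectl taint nodes master node-role.kubernetes.io/control-plane:NoSchedule-
```

Check the taints:

```shell
kubectl describe nodes master | grep Taints
```

Set role labels:

```shell
kubectl label nodes master node-role.kubernetes.io/worker=   # add the worker role label to the master node
kubectl label nodes master node-role.kubernetes.io/master-   # remove the master role label from the master node
```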