Spark SQL overview, DataFrames, an example of creating DataFrames, common DataFrame operations (DSL-style syntax), SQL-style syntax

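I. Spark SQL

1. Spark SQL Overview

1.1. What is Spark SQL

Spark SQL is the Spark module for processing structured data. It provides a programming abstraction called DataFrame and also acts as a distributed SQL query engine.

1.2. Why learn Spark SQL

We have already learned Hive, which translates Hive SQL into MapReduce jobs and submits them to the cluster, greatly reducing the complexity of writing MapReduce programs. The MapReduce computation model, however, executes relatively slowly. Spark SQL emerged in response: it translates Spark SQL into RDD operations and submits them to the cluster, which typically runs much faster.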

1.易整合

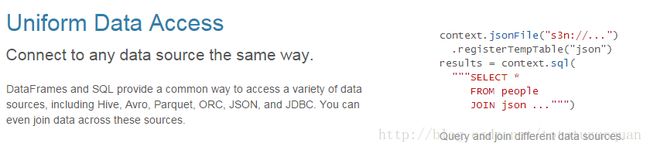

2.统一的数据访问方式

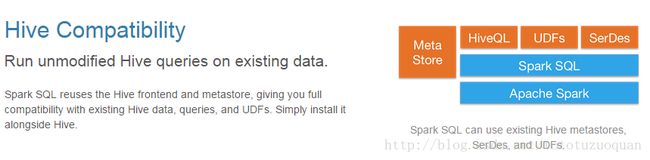

3.兼容Hive

4.标准的数据连接

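2. DataFrames

2.1. What are DataFrames

Like an RDD, a DataFrame is a distributed data container. A DataFrame, however, is closer to a two-dimensional table in a traditional database: besides the data itself, it also records the structure of the data, that is, the schema. Like Hive, DataFrame also supports nested data types (struct, array, and map). In terms of API usability, the DataFrame API offers a set of high-level relational operations that are friendlier and have a lower barrier to entry than the functional RDD API. Because it resembles the DataFrames of R and Pandas, the Spark DataFrame carries over the development experience of traditional single-machine data analysis.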
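To make the idea of a schema with nested types concrete, here is a minimal sketch. It assumes Spark 2.x and a local SparkSession; the `Contact` and `Employee` case classes, their fields, and the sample values are hypothetical and only serve as an illustration.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

// Hypothetical record types, used only to illustrate nested columns.
case class Contact(email: String, phone: String)
case class Employee(
  id: Int,
  name: String,
  contact: Contact,         // becomes a struct column
  tags: Seq[String],        // becomes an array column
  scores: Map[String, Int]  // becomes a map column
)

object SchemaSketch {
  // Builds a one-row DataFrame; the schema is inferred from the case classes.
  def build(spark: SparkSession): DataFrame = {
    import spark.implicits._
    Seq(
      Employee(1, "zhangsan", Contact("zs@example.com", "000"), Seq("a", "b"), Map("math" -> 90))
    ).toDF()
  }

  def main(args: Array[String]): Unit = {
    // "local[*]" is an assumption so the sketch runs without a cluster.
    val spark = SparkSession.builder().appName("schema-sketch").master("local[*]").getOrCreate()
    import spark.implicits._

    val df = build(spark)
    df.printSchema() // prints column names, types, and nesting

    // Struct fields are addressed by path, array elements by index, map values by key.
    df.select($"name", $"contact.email", $"tags".getItem(0), $"scores".getItem("math")).show()

    spark.stop()
  }
}
```

Unlike an RDD of `Employee` objects, the DataFrame exposes these column names and types to Spark itself, which is what makes the relational operations in the following sections possible.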

2.2. Creating DataFrames

Connect to the spark-shell:

[root@hadoop1 spark-2.1.1-bin-hadoop2.7]# bin/spark-shell --master spark://hadoop1:7077,hadoop2:7077

In Spark SQL, SQLContext is the entry point for creating DataFrames and executing SQL. The spark-1.5.2 shell already provides a built-in sqlContext.
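In Spark 2.x, the unified entry point is SparkSession, and the spark-shell predefines one named `spark` alongside `sc`. The sketch below shows how a standalone program might obtain the same entry points; the application name and the `local[*]` master are assumptions chosen so that it runs without a cluster.

```scala
import org.apache.spark.sql.{SQLContext, SparkSession}

object EntryPointSketch {
  // The default master "local[*]" is an assumption; on a cluster it would be
  // a URL such as the spark://... address passed to spark-shell.
  def createSession(master: String = "local[*]"): SparkSession =
    SparkSession.builder().appName("entry-point-sketch").master(master).getOrCreate()

  def main(args: Array[String]): Unit = {
    val spark = createSession()

    // The older entry points remain reachable from the session.
    val sc = spark.sparkContext                   // same role as `sc` in the shell
    val sqlContext: SQLContext = spark.sqlContext // same role as the 1.x sqlContext

    // SQL can be executed directly on the session.
    spark.sql("select 1 as one").show()

    spark.stop()
  }
}
```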

1. Create a local file with three columns (id, name, and age) separated by spaces, then upload it to HDFS

hdfs dfs -put person.txt /

The contents of person.txt are as follows:

1 zhangsan 19

2 lisi 20

3 wangwu 28

4 zhaoliu 26

5 tianqi 24

6 chengnong 55

7 zhouxingchi 58

8 mayun 50

9 yangliying 30

10 lilianjie 51

11 zhanghuimei 35

12 lian 53

13 zhangyimou 54

2. In the spark shell, run the following command to read the data and split each line on the column delimiter

scala> val lineRDD = sc.textFile("hdfs://mycluster/person.txt").map(_.split(" "))

lineRDD: org.apache.spark.rdd.RDD[Array[String]] = MapPartitionsRDD[2] at map at <console>:24

3. Define a case class (it plays the role of the table's schema)

scala> case class Person(id:Int, name:String, age:Int)

defined class Person

4. Associate the RDD with the case class

scala> val personRDD = lineRDD.map(x => Person(x(0).toInt, x(1), x(2).toInt))

personRDD: org.apache.spark.rdd.RDD[Person] = MapPartitionsRDD[3] at map at <console>:28

5. Convert the RDD to a DataFrame

scala> val personDF = personRDD.toDF

personDF: org.apache.spark.sql.DataFrame = [id: int, name: string ... 1 more field]

6. Work with the DataFrame

scala> personDF.show

+---+-----------+---+

| id| name|age|

+---+-----------+---+

| 1| zhangsan| 19|

| 2| lisi| 20|

| 3| wangwu| 28|

| 4| zhaoliu| 26|

| 5| tianqi| 24|

| 6| chengnong| 55|

| 7|zhouxingchi| 58|

| 8| mayun| 50|

| 9| yangliying| 30|

| 10| lilianjie| 51|

| 11|zhanghuimei| 35|

| 12| lian| 53|

| 13| zhangyimou| 54|

+---+-----------+---+

3. Common DataFrame Operations

3.1. DSL-style syntax

1. View the contents of the DataFrame

scala> personDF.show

+---+-----------+---+

| id| name|age|

+---+-----------+---+

| 1| zhangsan| 19|

| 2| lisi| 20|

| 3| wangwu| 28|

| 4| zhaoliu| 26|

| 5| tianqi| 24|

| 6| chengnong| 55|

| 7|zhouxingchi| 58|

| 8| mayun| 50|

| 9| yangliying| 30|

| 10| lilianjie| 51|

| 11|zhanghuimei| 35|

| 12| lian| 53|

| 13| zhangyimou| 54|

+---+-----------+---+

2. View the contents of selected columns of the DataFrame

scala> personDF.select(personDF.col("name")).show

+-----------+

| name|

+-----------+

| zhangsan|

| lisi|

| wangwu|

| zhaoliu|

| tianqi|

| chengnong|

|zhouxingchi|

| mayun|

| yangliying|

| lilianjie|

|zhanghuimei|

| lian|

| zhangyimou|

+-----------+

scala> personDF.select(col("name"),col("age")).show

+-----------+---+

| name|age|

+-----------+---+

| zhangsan| 19|

| lisi| 20|

| wangwu| 28|

| zhaoliu| 26|

| tianqi| 24|

| chengnong| 55|

|zhouxingchi| 58|

| mayun| 50|

| yangliying| 30|

| lilianjie| 51|

|zhanghuimei| 35|

| lian| 53|

| zhangyimou| 54|

+-----------+---+

scala> personDF.select("name").show

+-----------+

| name|

+-----------+

| zhangsan|

| lisi|

| wangwu|

| zhaoliu|

| tianqi|

| chengnong|

|zhouxingchi|

| mayun|

| yangliying|

| lilianjie|

|zhanghuimei|

| lian|

| zhangyimou|

+-----------+

3. Print the DataFrame's schema

scala> personDF.printSchema

root

|-- id: integer (nullable = true)

|-- name: string (nullable = true)

|-- age: integer (nullable = true)

4. Query every name and age (along with id) and add 1 to age

scala> personDF.select(col("id"),col("name"),col("age") + 1).show

+---+-----------+---------+

| id| name|(age + 1)|

+---+-----------+---------+

| 1| zhangsan| 20|

| 2| lisi| 21|

| 3| wangwu| 29|

| 4| zhaoliu| 27|

| 5| tianqi| 25|

| 6| chengnong| 56|

| 7|zhouxingchi| 59|

| 8| mayun| 51|

| 9| yangliying| 31|

| 10| lilianjie| 52|

| 11|zhanghuimei| 36|

| 12| lian| 54|

| 13| zhangyimou| 55|

+---+-----------+---------+

scala> personDF.select(personDF("id"),personDF("name"),personDF("age") + 1).show

+---+-----------+---------+

| id| name|(age + 1)|

+---+-----------+---------+

| 1| zhangsan| 20|

| 2| lisi| 21|

| 3| wangwu| 29|

| 4| zhaoliu| 27|

| 5| tianqi| 25|

| 6| chengnong| 56|

| 7|zhouxingchi| 59|

| 8| mayun| 51|

| 9| yangliying| 31|

| 10| lilianjie| 52|

| 11|zhanghuimei| 36|

| 12| lian| 54|

| 13| zhangyimou| 55|

+---+-----------+---------+

5. Filter the rows where age is greater than or equal to 40

scala> personDF.filter(col("age") >= 40).show

6. Group by age and count the people of each age

scala> personDF.groupBy("age").count.show()

+---+-----+

|age|count|

+---+-----+

| 53| 1|

| 28| 1|

| 26| 1|

| 20| 1|

| 54| 1|

| 19| 1|

| 35| 1|

| 55| 1|

| 51| 1|

| 50| 1|

| 24| 1|

| 58| 1|

| 30| 1|

+---+-----+

3.2. SQL-style syntax

- Before using SQL syntax, first run the following (that is, create a sqlContext):

scala> val sqlContext = new org.apache.spark.sql.SQLContext(sc)

warning: there was one deprecation warning; re-run with -deprecation for details

sqlContext: org.apache.spark.sql.SQLContext = org.apache.spark.sql.SQLContext@11fb9fc7

- To use SQL-style syntax, the DataFrame must be registered as a table

scala> personDF.registerTempTable("t_person")

warning: there was one deprecation warning; re-run with -deprecation for details

- Query the two oldest people

scala> sqlContext.sql("select * from t_person order by age desc limit 2").show

+---+-----------+---+

| id| name|age|

+---+-----------+---+

| 7|zhouxingchi| 58|

| 6| chengnong| 55|

+---+-----------+---+

- Show the table's schema

scala> sqlContext.sql("desc t_person").show

+--------+---------+-------+

|col_name|data_type|comment|

+--------+---------+-------+

| id| int| null|

| name| string| null|

| age| int| null|

+--------+---------+-------+
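The DSL style and the SQL style are two ways of expressing the same query. The sketch below runs the "two oldest people" query both ways in a standalone program. It is only an illustration under a few assumptions: Spark 2.x, a local SparkSession, a three-row in-memory sample instead of person.txt, and `createOrReplaceTempView`, which is the non-deprecated counterpart of `registerTempTable` in Spark 2.x. The object and method names are hypothetical.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.col

case class Person(id: Int, name: String, age: Int)

object DslVsSqlSketch {
  // SQL style: register the DataFrame as a temporary view, then query it.
  def oldestTwoSql(spark: SparkSession, people: DataFrame): DataFrame = {
    people.createOrReplaceTempView("t_person")
    spark.sql("select * from t_person order by age desc limit 2")
  }

  // DSL style: the same query expressed with DataFrame methods.
  def oldestTwoDsl(people: DataFrame): DataFrame =
    people.orderBy(col("age").desc).limit(2)

  def main(args: Array[String]): Unit = {
    // "local[*]" is an assumption so the sketch runs without a cluster.
    val spark = SparkSession.builder().appName("dsl-vs-sql").master("local[*]").getOrCreate()
    import spark.implicits._

    // A small in-memory sample taken from person.txt.
    val people = Seq(
      Person(6, "chengnong", 55),
      Person(7, "zhouxingchi", 58),
      Person(8, "mayun", 50)
    ).toDF()

    oldestTwoSql(spark, people).show()
    oldestTwoDsl(people).show()

    spark.stop()
  }
}
```

Which style to use is largely a matter of taste: SQL strings are familiar to Hive users, while the DSL lets the Scala compiler check method names, though not column names.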

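7. Save the result (result is assumed here to be a DataFrame, for example one returned by sqlContext.sql)

result.write.save("hdfs://hadoop.itcast.cn:9000/sql/res1")

result.write.format("json").save("hdfs://hadoop.itcast.cn:9000/sql/res2")

// Overwrite the existing JSON files on HDFS, writing in JSON format

import org.apache.spark.sql.SaveMode._

result.write.format("json").mode(Overwrite).save("hdfs://hadoop.itcast.cn:9000/sql/res2")

8. Reload earlier results (optional)

sqlContext.read.load("hdfs://hadoop.itcast.cn:9000/sql/res1")

sqlContext.read.format("json").load("hdfs://hadoop.itcast.cn:9000/sql/res2")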
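Saving and reloading can also be tried locally without HDFS. The following is a minimal sketch, assuming Spark 2.x, where writes go through `DataFrame.write` and reads through `SparkSession.read`. The temporary directory, the two sample rows, and the `roundTrip` helper are hypothetical and stand in for the HDFS paths and the `result` DataFrame.

```scala
import java.nio.file.Files
import org.apache.spark.sql.{DataFrame, SaveMode, SparkSession}

object SaveLoadSketch {
  // Writes df as JSON to dir (replacing any previous output) and reads it back.
  def roundTrip(spark: SparkSession, df: DataFrame, dir: String): DataFrame = {
    df.write.mode(SaveMode.Overwrite).json(dir)
    spark.read.json(dir)
  }

  def main(args: Array[String]): Unit = {
    // "local[*]" is an assumption so the sketch runs without a cluster.
    val spark = SparkSession.builder().appName("save-load-sketch").master("local[*]").getOrCreate()
    import spark.implicits._

    // Stand-in for a query result.
    val result = Seq((7, "zhouxingchi", 58), (6, "chengnong", 55)).toDF("id", "name", "age")

    // A local temporary directory replaces the HDFS path.
    val dir = Files.createTempDirectory("sql-res").resolve("res2").toString

    val reloaded = roundTrip(spark, result, dir)
    reloaded.printSchema() // the schema is re-inferred from the JSON files
    reloaded.show()

    spark.stop()
  }
}
```

Because JSON carries no schema of its own, the reloaded DataFrame's column types and column order are inferred on read and may differ from the original; a self-describing format such as Parquet avoids that.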