Log Collection - Filebeat

What is Filebeat?

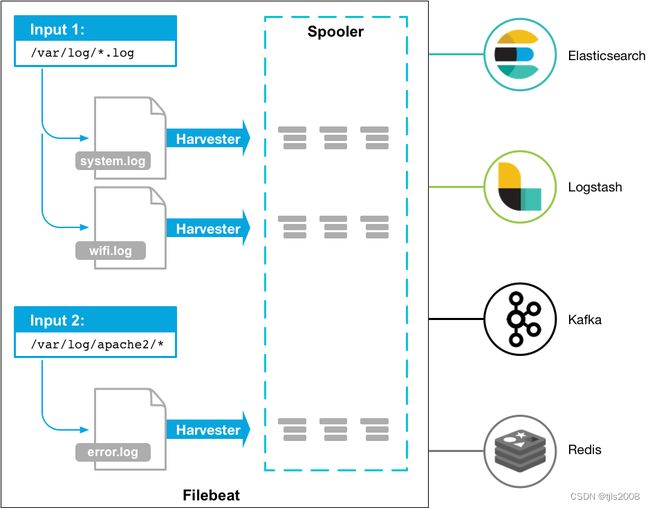

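Filebeat is a log shipper for local files. It monitors log directories or specific log files (tailing them) and forwards the events to Elasticsearch or Logstash for indexing, or to other outputs such as Kafka.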

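It is a lightweight log shipper written in Go and is part of the Elastic Stack. In essence it is an agent that is installed on each node, reads logs from the configured locations, and ships them to the configured destination.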

How it works

Filebeat has two main components: harvesters and prospectors (prospectors are called inputs since Filebeat 6.3).

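- A harvester reads the content of a single file. It reads the file line by line and sends the content to the output.

- A prospector manages the harvesters and finds all the sources to read from. If the input type is log, the prospector finds every file that matches the configured paths and starts one harvester per file.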
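The split between the two components can be pictured with a small shell sketch. This is only an illustration of the idea, not how Filebeat is implemented; the function names `prospect`, `harvest`, and `collect` are made up for this example, and it reads each file once instead of tailing it.

```shell
# Illustrative only: mimics the prospector/harvester split with plain shell.

# "Prospector": expand each glob pattern and print every regular file it matches.
prospect() {
  for pattern in "$@"; do
    # $pattern is left unquoted on purpose so the shell expands the glob.
    for file in $pattern; do
      if [ -f "$file" ]; then
        printf '%s\n' "$file"
      fi
    done
  done
}

# "Harvester": read one file line by line and emit each line as an event
# prefixed with its source path.
harvest() {
  while IFS= read -r line || [ -n "$line" ]; do
    printf '%s: %s\n' "$1" "$line"
  done < "$1"
}

# Run one "harvester" per file found by the "prospector".
collect() {
  prospect "$@" | while IFS= read -r file; do
    harvest "$file"
  done
}

# Usage (quote the pattern so it reaches prospect unexpanded):
#   collect '/usr/local/nginx/logs/*.log'
```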

Installation

# 1. Install
cd /usr/local
wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.16.3-linux-x86_64.tar.gz
tar -zxf filebeat-7.16.3-linux-x86_64.tar.gz
rm -f filebeat-7.16.3-linux-x86_64.tar.gz
mv filebeat-7.16.3-linux-x86_64 filebeat
cd filebeat
mkdir -p conf/inputs.d
# The default configuration is not used; keep it under a new name for reference
mv filebeat.yml filebeat.example.yml

# 2. Collection configuration ==============================
# See the "Configuration" section below for the contents
# 2.1) Main configuration
vim conf/filebeat.yml
# 2.2) Input configurations
vim conf/inputs.d/nginx.yml
vim conf/inputs.d/runtime.yml

# 3. Start Filebeat in the background (as root)
# Note: -e logs to stderr (captured in nohup.out) and disables file logging
nohup ./filebeat -e -c conf/filebeat.yml &
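After starting the process it can help to confirm that the configuration parses and that Filebeat is actually running. The sketch below is one way to do that; `check_filebeat` is a hypothetical helper, and the default directory `/usr/local/filebeat` is an assumption taken from the install steps above.

```shell
# Minimal sketch: sanity-check an installation like the one above.
# FILEBEAT_HOME is an assumption; adjust it if Filebeat lives elsewhere.
FILEBEAT_HOME="${FILEBEAT_HOME:-/usr/local/filebeat}"

check_filebeat() {
  home="$1"
  if [ ! -x "$home/filebeat" ]; then
    echo "filebeat binary not found in $home" >&2
    return 1
  fi
  # "filebeat test config" parses the configuration and reports errors.
  (cd "$home" && ./filebeat test config -c conf/filebeat.yml) || return 2
  # Check that a process named filebeat exists.
  if pgrep -x filebeat >/dev/null 2>&1; then
    echo "filebeat is running"
  else
    echo "filebeat is not running" >&2
    return 3
  fi
}

# Usage:
#   check_filebeat "$FILEBEAT_HOME"
```

The return codes (1 for a missing binary, 2 for a configuration error, 3 for no running process) are arbitrary choices for this sketch.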

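Debugging

# 1) Temporarily replace the output in the main configuration with the following
#    (only one output can be enabled at a time):
output.console:
  pretty: true

# 2) Run the command below; if events are printed to the screen, Filebeat is working
./filebeat -e -c conf/filebeat.yml

Configuration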

1. Main configuration: filebeat/conf/filebeat.yml

# ============================== Filebeat inputs ===============================
filebeat.config.inputs:
  enabled: true
  path: ${path.config}/conf/inputs.d/*.yml
  reload.enabled: true
  reload.period: 10s

# ============================== Filebeat logging ===============================
logging.level: info
logging.to_files: true
logging.files:
  rotateonstartup: false
  # path is the log directory; the file name comes from "name"
  path: /usr/local/filebeat/logs
  name: filebeat
  # rotateeverybytes: 104857600
  interval: 24h
  keepfiles: 2
  permissions: 0644

# ============================== Filebeat modules ==============================
#filebeat.config.modules:
#  path: ${path.config}/modules.d/*.yml
#  reload.enabled: false
#  reload.period: 10s

# ================================== Outputs ===================================
output.kafka:
  hosts: ["xx.xx.xx.xx:9092", "xx.xx.xx.xx:9092", "xx.xx.xx.xx:9092"]
  topic: 'pc_app-%{[app_id]}-%{[app_type]}-%{[app_env]}-%{[log_type]}-%{[data_type]}'
  required_acks: 1

Note:

If the topic does not exist yet, it has to be requested first.
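To know which topic to request, the topic template from output.kafka can be filled in by hand. The helper below is a small sketch; `topic_name` is a name invented for this example, and it only repeats the string substitution, it does not talk to Kafka.

```shell
# Render the topic name that the output.kafka template resolves to.
# Arguments: app_id app_type app_env log_type data_type
topic_name() {
  if [ "$#" -ne 5 ]; then
    echo "usage: topic_name APP_ID APP_TYPE APP_ENV LOG_TYPE DATA_TYPE" >&2
    return 1
  fi
  printf 'pc_app-%s-%s-%s-%s-%s\n' "$1" "$2" "$3" "$4" "$5"
}

# Field values taken from the Nginx access log input:
topic_name nginx prod_official prod access json
# prints: pc_app-nginx-prod_official-prod-access-json
```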

2. Input configurations

Note: only the paths and fields settings need to be changed.

Nginx

filebeat/conf/inputs.d/nginx.yml

- type: log
  paths:
    #- /usr/local/nginx/logs/access-json.log
  encoding: utf8
  scan_frequency: 5s
  fields:
    app_id: nginx
    app_type: prod_official
    app_env: prod
    log_type: access
    data_type: json
  fields_under_root: true

- type: log
  paths:
    #- /usr/local/nginx/logs/error.log
  encoding: utf8
  scan_frequency: 5s
  fields:
    app_id: nginx
    app_type: prod_official
    app_env: prod
    log_type: error
    data_type: raw
  fields_under_root: true

Application logs

filebeat/conf/inputs.d/runtime.yml

- type: log
  paths:
    # - /var/www/20230103/api/runtime/logs/runtime*.log
    # - /var/www/20230103/app/runtime/logs/runtime*.log
    # - /var/www/20230103/crm/runtime/logs/runtime*.log
    # - /var/www/20230103/console/runtime/logs/runtime*.log
    # - /var/www/20230103/pc/runtime/logs/runtime*.log
  encoding: utf8
  scan_frequency: 5s
  fields:
    app_id: runtime
    app_type: official
    app_env: yf
    log_type: all
    data_type: raw
  fields_under_root: true

- type: log
  paths:
    # - /var/www/prod/api/runtime/logs/runtime*.log
    # - /var/www/prod/app/runtime/logs/runtime*.log
    # - /var/www/prod/crm/runtime/logs/runtime*.log
    # - /var/www/prod/console/runtime/logs/runtime*.log
    # - /var/www/prod/pc/runtime/logs/runtime*.log
  encoding: utf8
  scan_frequency: 5s
  fields:
    app_id: runtime
    app_type: official
    app_env: prod
    log_type: all
    data_type: raw
  fields_under_root: true

Request logs

filebeat/conf/inputs.d/downstream.yml

- type: log
  paths:
    - /var/www/prod/api/runtime/logs/downstream*.log
    - /var/www/prod/app/runtime/logs/downstream*.log
    - /var/www/prod/crm/runtime/logs/downstream*.log
    - /var/www/prod/console/runtime/logs/downstream*.log
    - /var/www/prod/pc/runtime/logs/downstream*.log
  encoding: utf8
  scan_frequency: 5s
  fields:
    app_id: downstream
    app_type: official
    app_env: prod
    log_type: all
    data_type: json
  fields_under_root: true

php-fpm

filebeat/conf/inputs.d/php-fpm.yml

- type: log
  paths:
    - /usr/local/php/log/fpm_error.www.log
  encoding: utf8
  scan_frequency: 5s
  fields:
    app_id: phpfpm
    app_type: official
    app_env: all
    log_type: error
    data_type: raw
  fields_under_root: true
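Since the input definitions differ only in paths and fields, a new one can be generated from a template instead of being copied by hand. The function below is a minimal sketch for a single path; `make_input` is a hypothetical helper, not part of Filebeat.

```shell
# Hypothetical helper: print a log input definition for one path.
# Arguments: path app_id app_type app_env log_type data_type
make_input() {
  cat <<EOF
- type: log
  paths:
    - $1
  encoding: utf8
  scan_frequency: 5s
  fields:
    app_id: $2
    app_type: $3
    app_env: $4
    log_type: $5
    data_type: $6
  fields_under_root: true
EOF
}

# Usage (quote paths that contain wildcards so the shell does not expand them):
#   make_input '/var/www/prod/api/runtime/logs/downstream*.log' \
#     downstream official prod all json > conf/inputs.d/downstream.yml
```

Because reload.enabled is set in the main configuration, a file written to conf/inputs.d should be picked up on the next reload period without restarting Filebeat.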

Log format examples

Nginx

# Log format
log_format json '{"@timestamp":"$time_iso8601",'
                '"host":"$server_addr",'
                '"clientip":"$remote_addr",'
                '"size":$body_bytes_sent,'
                '"responsetime":$request_time,'
                '"upstreamtime":"$upstream_response_time",'
                '"upstreamhost":"$upstream_addr",'
                '"http_host":"$host",'
                '"url":"$request",'
                '"referer":"$http_referer",'
                '"agent":"$http_user_agent",'
                '"http_x_forwarded_for":"$http_x_forwarded_for",'
                '"request_body":"-",'
                '"http_cookie":"-",'
                '"http_user_agent":"$http_user_agent",'
                '"status":"$status"}';

# Example

{"@timestamp":"2022-03-30T15:48:50+08:00","host":"192.168.2.22","clientip":"100.125.68.198","size":1552,"responsetime":0.005,"upstreamtime":"0.005","upstreamhost":"127.0.0.1:9000","http_host":"passport.xiaofenglang.com","url":"POST /passport/prod/selectUser HTTP/1.1","referer":"-","agent":"-","http_x_forwarded_for":"10.15.1.84","request_body":"-","http_cookie":"-","http_user_agent":"-","status":"200"}
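A quick way to confirm that the access log is written in this shape is to count requests per status code straight from the file. The sketch below relies on the `"status":"NNN"` field layout shown above; it is not a general JSON parser, and `status_counts` is a name made up for this example.

```shell
# Count requests per HTTP status in an access log that uses the format above.
# Prints one "STATUS COUNT" pair per line, sorted by status.
status_counts() {
  sed -n 's/.*"status":"\([0-9][0-9]*\)".*/\1/p' "$1" \
    | sort \
    | uniq -c \
    | awk '{print $2, $1}'
}

# Usage:
#   status_counts /usr/local/nginx/logs/access-json.log
```

Lines without a status field are skipped, so a total far below the file's line count suggests the log is not in the expected format.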