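Large-Scale Machine Learning in Python, Day 9: Using SGD

Using SGD

Experiment requirements:

1. Shuffle the data

2. Train an SGDClassifier

Experiment content:

1. Shuffle the data and measure the prediction accuracy of the SGD learner

Annotated code:

Code 1: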

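```python
import os
import zlib  # zlib is used to compress the data held in memory
from random import shuffle


def ram_shuffle(filename_in, filename_out, header=True):
    # Read the file as bytes and compress every line (9 = maximum compression level)
    with open(filename_in, 'rb') as f:
        zlines = [zlib.compress(line, 9) for line in f]
    if header:
        first_row = zlines.pop(0)  # pop() removes the header row so it is not shuffled
    shuffle(zlines)  # shuffle() puts the compressed lines in random order, in place
    with open(filename_out, 'wb') as f:  # 'wb' = write in binary mode
        if header:
            f.write(zlib.decompress(first_row))  # decompress() restores the original bytes
        for zline in zlines:
            f.write(zlib.decompress(zline))


local_path = os.getcwd()
source = 'covtype.data'
# os.path.join builds a portable path (a bare '\' in a string literal escapes the quote);
# the output name must match the file read in Code 2
ram_shuffle(filename_in=os.path.join(local_path, source),
            filename_out=os.path.join(local_path, 'shuffled_covtype.data'),
            header=True)
```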
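`random.shuffle` produces a different order on every run, so two runs of the experiment see different data orders. A minimal sketch of a reproducible variant, assuming a seed is wanted: `ram_shuffle_seeded` and its `seed` parameter are hypothetical names, not part of the original code. It also appends a missing final newline, since otherwise a last line without `\n` could be glued to another line after shuffling.

```python
import os
import random
import tempfile
import zlib


def ram_shuffle_seeded(filename_in, filename_out, header=True, seed=None):
    """Hypothetical variant of ram_shuffle with a seed for reproducible output."""
    with open(filename_in, 'rb') as f:
        # Make sure every line ends with a newline before compressing it
        zlines = [zlib.compress(line if line.endswith(b'\n') else line + b'\n', 9)
                  for line in f]
    first_row = zlines.pop(0) if header else None
    random.Random(seed).shuffle(zlines)  # private RNG: same seed -> same order
    with open(filename_out, 'wb') as f:
        if first_row is not None:
            f.write(zlib.decompress(first_row))
        for zline in zlines:
            f.write(zlib.decompress(zline))


# Usage on a small temporary file with a header row
tmp = tempfile.mkdtemp()
src = os.path.join(tmp, 'toy.csv')
with open(src, 'wb') as f:
    f.write(b'a,b\n' + b''.join(b'%d,%d\n' % (i, i * i) for i in range(100)))
out1 = os.path.join(tmp, 'out1.csv')
out2 = os.path.join(tmp, 'out2.csv')
ram_shuffle_seeded(src, out1, seed=42)
ram_shuffle_seeded(src, out2, seed=42)  # same seed, so out2 is identical to out1
```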

代码2:

import csv, time

import numpy as np

from sklearn.linear_model import SGDClassifier

source=‘shuffled_covtype.data’

SEP=’,’

forest_type=[t+1 for t in range(7)]

SGD=SGDClassifier(loss=‘log’,penalty=None, random_state=1,average=True)

accuracy=0

holdout_count=0

prog_accuracy=0

prog_count=0

cold_start=200000 //冷启动值

k_holdout=10

with open(local_path+’\’+source, ‘rt’) as R:

iterator = csv.reader(R, delimiter=SEP)

for n,row in enumerate(iterator):

if n>250000: # Reducing the running time of the experiment //设置了限制器来观察250000个实例

break

# DATA PROCESSING

response = np.array([int(row[-1])]) #The response is the last value //array用于创造一个数组对象 //拿出最后一列作为样本标签值,row已经代表行,row[-1]就代表行中最后一个,即最后一列

features = np.array(list(map(float,row[:-1]))).reshape(1,-1) //reshape用于指定矩阵格式

#MACHINE LEARNING

if (n+1) >= cold_start and (n+1-cold_start) % k_holdout==0: //每10个被用于验证

if int(SGD.predict(features))==response[0]: //predict是训练后返回预测结果,返回的是标签值,训练数组有两组,一组是数据,一组是对应的标签值

accuracy +=1 //准确度计数器

holdout_count +=1 //总测试实例计数器

if (n+1-cold_start) % 25000 ==0 and (n+1) > cold_start: //每25000个观察实例统计训练准确度

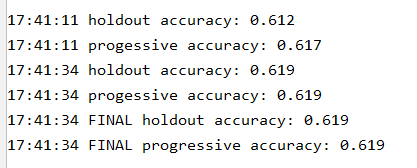

print (’%s holdout accuracy: %0.3f’ % (time.strftime(’%X’), accuracy / float(holdout_count))) //strftime返回当地时间

else:

# PROCESSIVE VALIDATION

if (n+1) >=cold_start:

if int(SGD.predict(features))==response[0]:

prog_accuracy+=1

prog_count +=1

if n % 25000 ==0 and n> cold_start:

print (’%s progessive accuracy: %0.3f’ % (time.strftime(’%X’), prog_accuracy/float(prog_count)))

# LEARNING PHASE //学习阶段

SGD.partial_fit(features, response, classes=forest_type) //样本数据和样本标签,所有可能类别,partial_fit就是SGD学习器方法

print (’%s FINAL holdout accuracy: %0.3f’ % (time.strftime(’%X’),accuracy/((n+1-cold_start)/float(k_holdout)))) //最终预测准确度

print (’%s FINAL progressive accuracy: %0.3f’ % (time.strftime(’%X’),prog_accuracy/float(prog_count))) //最终数据处理准确度
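The branching in Code 2 decides, for each row, whether it is only trained on, held out, or progressively validated. A small sketch that restates that branching with the same `cold_start` and `k_holdout` values and counts how the 250,001 processed rows are split; `assign_role` is a hypothetical helper, not part of the original code.

```python
from collections import Counter


def assign_role(n, cold_start=200000, k_holdout=10):
    """Hypothetical helper: what Code 2 does with row n (0-based index)."""
    if (n + 1) >= cold_start and (n + 1 - cold_start) % k_holdout == 0:
        return 'holdout'      # predict only, never learn
    if (n + 1) >= cold_start:
        return 'progressive'  # predict first, then learn
    return 'train_only'       # cold start: learn without evaluating


# Rows n = 0 .. 250000 are processed (the loop breaks when n > 250000)
counts = Counter(assign_role(n) for n in range(250001))
print(counts['train_only'], counts['progressive'], counts['holdout'])  # → 199999 45001 5001
```

So roughly one row in ten after the cold start is held out, and the holdout rows are the only ones the model never learns from.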

代码3:

import csv,time,os

import numpy as np

from sklearn.linear_model import SGDRegressor

from sklearn.feature_extraction import FeatureHasher

source=’\bikesharing\hour.csv’

local_path=os.getcwd()

SEP=’,’

def apply_log(x): return np.log(float(x)+1) //返回x的自然对数

def apply_exp(x): return np.exp(float(x))-1 //返回x的指数

SGD=SGDRegressor(loss=‘squared_loss’, penalty=None, random_state=1,average=True)

h=FeatureHasher //h作为哈希技巧的一个对象

val_rmse=0

val_rmsle=0

predictions_start = 16000 //冷启动值

with open(local_path+’\’+source,‘rt’) as R:

iterator =csv.DictReader(R,delimiter=SEP)

for n,row in enumerate(iterator):

# DATA PROCESSING

target=np.array([apply_log(row[‘cnt’])]) //和上一个例子中的row[-1]是一样的,此处也可见row的两种提取行数据的方法,索引或者标签值

features={k+’_’+v:1 for k,v in row.items() if k in [‘holiday’,‘hr’,‘mnth’,‘season’,‘weathersit’,‘weekday’,‘workingday’,‘yr’]} //items返回可遍历的键值对,上一个例子中features保存的类型是数组,这个例子中保存的是字典,保存时间信息

numeric_features={k:float(v) for k,v in row.items() if k in [‘hum’,‘temp’,‘atemp’,‘windspeed’]} //保存天气信息

features.update(numeric_features) //键值对更新,把后面的赋给前面

hashed_features = h().transform([features]) //transform得到数据的标准化

#MACHINE LEARNING

if (n+1) >= predictions_start:

#HOLDOUT AFTER N PHASE

predicted = SGD.predict(hashed_features) //得到预测后的值

val_rmse +=(apply_exp(predicted) - apply_exp(target))**2

val_rmsle += (predicted - target)**2

if (n-predictions_start+1) % 250 ==0 and (n+1) >predictions_start:

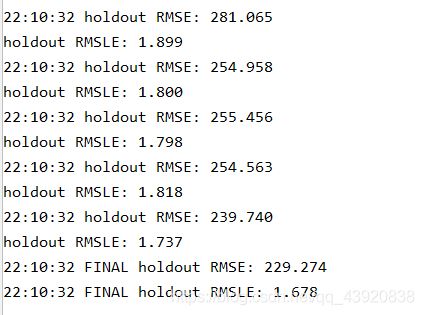

print (’%s holdout RMSE: %0.3f’ %(time.strftime(’%X’), (val_rmse/ float(n-predictions_start+1))**0.5))

print (‘holdout RMSLE: %0.3f’ % ((val_rmsle/float(n-predictions_start+1))**0.5))

else:

# LEARNING PHASE

SGD.partial_fit(hashed_features, target)

print (’%s FINAL holdout RMSE: %0.3f’ %(time.strftime(’%X’),(val_rmse/float(n-predictions_start+1))**0.5))

print (’%s FINAL holdout RMSLE: %0.3f’ %(time.strftime(’%X’), (val_rmsle/float(n-predictions_start+1))**0.5))
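In Code 3, `FeatureHasher` turns each dictionary of features into a fixed-length vector (2**20 columns by default) without building a vocabulary, which is what makes one-row-at-a-time processing possible. A minimal stdlib sketch of the hashing trick, assuming a small vector size for readability: `hash_features` is a hypothetical helper, and `zlib.crc32` stands in for the MurmurHash3 that `FeatureHasher` actually uses, so its indices will not match `FeatureHasher`'s. Like `FeatureHasher` with its default `alternate_sign=True`, it uses one bit of the hash as a sign so that collision errors tend to cancel out.

```python
import zlib


def hash_features(features, n_features=2**10):
    """Hashing trick: map a {name: value} dict to a sparse {index: value} dict.

    Hypothetical helper; zlib.crc32 replaces FeatureHasher's MurmurHash3.
    """
    vec = {}
    for name, value in features.items():
        h = zlib.crc32(name.encode('utf-8'))    # deterministic unsigned 32-bit hash
        index = h % n_features                  # column index in the fixed-size vector
        sign = -1.0 if h & 0x80000000 else 1.0  # top bit of the hash chooses the sign
        vec[index] = vec.get(index, 0.0) + sign * float(value)
    return vec


# One bike-sharing-style row: one-hot categories plus a numeric measurement
row_features = {'hr_5': 1, 'season_2': 1, 'temp': 0.34}
vec = hash_features(row_features)
print(vec)  # at most 3 entries, every index below 1024
```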


Experiment summary:

In Python 3, print is a function, so its arguments must be wrapped in parentheses.

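The data is processed as a stream, one row at a time. After the cold start, every 10th row is held out: it is predicted but never used for training, so its accuracy is an independent check on what the model has learned. The remaining rows are progressively validated: each one is predicted first and then learned from. Question: why use a cold start? In recommender systems, a cold start means the system does not make recommendations as soon as a user first arrives, but waits until enough data has accumulated. The idea here is similar: the first 200,000 rows (16,000 in the bike-sharing example) are used only for training, so accuracy is not measured while the model is still close to its untrained initial state.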
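The stream/cold-start/holdout scheme can be seen end to end on toy data. A minimal sketch under explicit assumptions: the data stream is hypothetical (two uniform features, label 1 when their sum is positive), and a hand-written plain-SGD logistic regression stands in for `SGDClassifier` (no learning-rate schedule, no averaging). `cold_start=1000`, `k_holdout=10` and `lr=0.1` are illustrative values only.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def toy_stream(n_rows, seed=0):
    # Hypothetical data: two uniform features, label 1 when x0 + x1 > 0
    rng = random.Random(seed)
    for _ in range(n_rows):
        x = (rng.uniform(-1, 1), rng.uniform(-1, 1))
        yield x, int(x[0] + x[1] > 0)


def run_stream(n_rows=5000, cold_start=1000, k_holdout=10, lr=0.1):
    w = [0.0, 0.0]
    b = 0.0
    hold_ok = hold_n = prog_ok = prog_n = 0
    for n, (x, y) in enumerate(toy_stream(n_rows)):
        p = sigmoid(w[0] * x[0] + w[1] * x[1] + b)
        correct = int((p >= 0.5) == bool(y))
        if (n + 1) >= cold_start and (n + 1 - cold_start) % k_holdout == 0:
            hold_ok += correct  # holdout row: evaluate, never learn
            hold_n += 1
            continue
        if (n + 1) >= cold_start:
            prog_ok += correct  # progressive validation: evaluate first...
            prog_n += 1
        g = p - y               # ...then one SGD step on the log loss
        w[0] -= lr * g * x[0]
        w[1] -= lr * g * x[1]
        b -= lr * g
    return hold_ok / hold_n, prog_ok / prog_n


holdout_acc, progressive_acc = run_stream()
print('holdout %.3f, progressive %.3f' % (holdout_acc, progressive_acc))
```

Rows before `cold_start` only update the weights, so neither accuracy includes the early predictions made by the almost untrained model.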

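How the learner actually trains inside partial_fit is not visible from this code and needs further study. The example also shows how convenient Python packages are: a user can apply machine learning without understanding the theory behind it, simply by mastering the tools.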
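As a starting point for that study, here is the textbook form of one SGD step for logistic regression. This is an assumption based on the standard formulation, not read from scikit-learn's source: `SGDClassifier` handles the 7 classes one-versus-all, so each binary classifier (with `loss='log_loss'` and `penalty=None`) would take this step on a row $(x_t, y_t)$, where $y_t = 1$ means "this class" and $y_t = 0$ otherwise, and $\eta_t$ is the step size set by the `learning_rate` schedule:

$$
p_t = \sigma(w_t^\top x_t + b_t) = \frac{1}{1 + e^{-(w_t^\top x_t + b_t)}}, \qquad
\ell_t = -\left[y_t \log p_t + (1 - y_t)\log(1 - p_t)\right]
$$

$$
w_{t+1} = w_t - \eta_t\,(p_t - y_t)\,x_t, \qquad b_{t+1} = b_t - \eta_t\,(p_t - y_t)
$$

With `average=True`, the stored weights are the average of the iterates, $\bar w_T = \frac{1}{T}\sum_{t=1}^{T} w_t$, rather than the last $w_T$.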

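The direction of the second example is worth thinking about: by learning from each hour's calendar and weather data together with the number of shared bikes rented, it predicts the bike-sharing demand across Washington. Before this, every training and prediction task I had thought of was a classification problem; this example shows that a count can also be predicted, which makes it a regression problem.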
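Predicting a count is why Code 3 trains on `apply_log(cnt)`, i.e. log(cnt + 1): `val_rmsle` accumulates squared errors on that log scale, so it is the RMSLE of the counts, while `val_rmse` converts back with `apply_exp` first. A small worked sketch with hypothetical counts shows the difference: the same 10-bike error weighs far more on a small count than on a large one in RMSLE. `rmse` and `rmsle` are illustrative helpers, not library functions.

```python
import math


def rmse(y_true, y_pred):
    # Root mean squared error on the original scale (number of bikes)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def rmsle(y_true, y_pred):
    # RMSLE is the RMSE of log1p-transformed values, i.e. of log(count + 1)
    return rmse([math.log1p(t) for t in y_true], [math.log1p(p) for p in y_pred])


counts = [10, 100]       # hypothetical true hourly counts
predictions = [20, 110]  # both predictions are off by 10 bikes
print(rmse(counts, predictions))  # → 10.0
# The error on the small count is much larger on the log scale
print(rmsle([10], [20]), rmsle([100], [110]))
```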