2022 Spring MIT6.824 Lab MapReduce


Lec1: Study Notes

- How to write logs elegantly

- Lab Guidance

- MapReduce Paper

Lab link

https://pdos.csail.mit.edu/6.824/labs/lab-mr.html

Lab

Lab: MapReduce

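The goal of this lab is to implement a MapReduce framework. The starter code already provides a number of MapReduce applications (in the folder 6.824/src/mrapps). Each application is compiled as a Go plugin (a dynamically linked library) and loaded at run time, so the same framework can run different applications.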
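The sketch below shows roughly how a Map/Reduce pair can be loaded from such a plugin with Go's standard `plugin` package, similar to what the lab's main/mrworker.go does. The function name `loadPlugin` and the local `KeyValue` type are assumptions made so the sketch compiles on its own. In the lab, the symbol types use `mr.KeyValue`, and the type assertion only succeeds when the plugin was compiled against that same type.

```go
package main

import (
	"fmt"
	"plugin"
)

// KeyValue stands in for mr.KeyValue (assumption: declared locally so the
// sketch compiles on its own).
type KeyValue struct {
	Key   string
	Value string
}

// loadPlugin opens a shared object built with -buildmode=plugin and looks up
// its exported Map and Reduce functions.
func loadPlugin(filename string) (func(string, string) []KeyValue, func(string, []string) string, error) {
	p, err := plugin.Open(filename)
	if err != nil {
		return nil, nil, fmt.Errorf("cannot load plugin %v: %w", filename, err)
	}
	xmapf, err := p.Lookup("Map")
	if err != nil {
		return nil, nil, fmt.Errorf("cannot find Map in %v: %w", filename, err)
	}
	xreducef, err := p.Lookup("Reduce")
	if err != nil {
		return nil, nil, fmt.Errorf("cannot find Reduce in %v: %w", filename, err)
	}
	// For an exported function, the plugin.Symbol holds the function value itself.
	mapf, ok := xmapf.(func(string, string) []KeyValue)
	if !ok {
		return nil, nil, fmt.Errorf("symbol Map in %v has an unexpected type", filename)
	}
	reducef, ok := xreducef.(func(string, []string) string)
	if !ok {
		return nil, nil, fmt.Errorf("symbol Reduce in %v has an unexpected type", filename)
	}
	return mapf, reducef, nil
}
```

Because the application is picked at run time, switching from word count to another app only requires building a different `.so` file. The framework code does not need to be recompiled.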

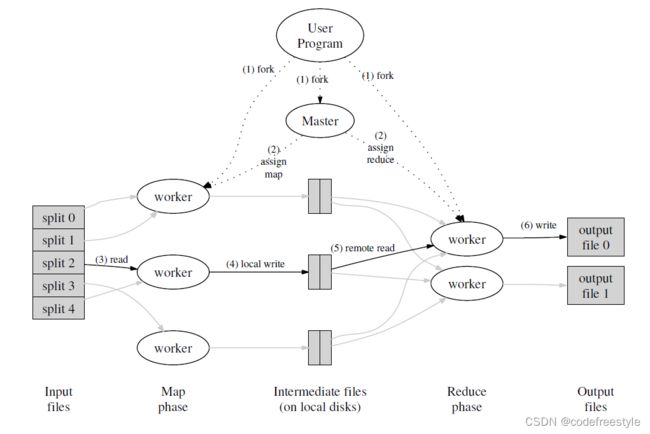

The basic workflow of the lab is shown in the figure.

Points to note in this lab:

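- The lab skips the step of splitting the input. Each input file is one Map task.

- The Coordinator in the lab corresponds to the Master in the figure, and the Worker corresponds to the Worker.

- Each role runs as a separate process, and all processes share the local file system. To run the processes on different machines, a distributed file system could be used to share the files.

- Worker crashes must be handled. If a task has not finished within 10 seconds, it is considered failed and is reassigned to another Worker.

- To tell whether a task has really finished, Map and Reduce results are first written to temporary files named tmp-xxx. They are renamed to the final output files only after the Coordinator confirms that the task still belongs to that Worker.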

Files to change: only mr/coordinator.go, mr/rpc.go and mr/worker.go need to be modified in this lab.

Common commands (run in the 6.824/src/main directory):

```shell
go build -race -buildmode=plugin ../mrapps/wc.go
go run -race mrsequential.go wc.so pg*.txt
go run -race mrworker.go wc.so
```

The implementation is as follows.

File `rpc.go`:

```go
package mr

//
// RPC definitions.
//
// remember to capitalize all names.
//

import (
	"os"
	"strconv"
	"sync"
	"time"
)

//
// example to show how to declare the arguments
// and reply for an RPC.
//

type ExampleArgs struct {
	X int
}

type ExampleReply struct {
	Y int
}

const (
	TASK_STATUS_NONE   = 0
	TASK_STATUS_MAP    = 1
	TASK_STATUS_REDUCE = 2
	TASK_STATUS_DONE   = 3
)

const (
	STATUS_CODE_OK    = 0
	STATUS_CODE_ERROR = 1
)

type Task struct {
	WorkerId int32
	TaskId   int32
	Deadline time.Time
	Status   int32 // Map or Reduce
	Done     bool
	File     string
	m        sync.Mutex // unexported, so gob skips it when a Task is sent over RPC
}

// Add your RPC definitions here.

type GetTaskInfoArgs struct {
	WorkerId int32
}

type PostTaskDoneArgs struct {
	WorkerId int32
	Status   int32
	TaskId   int32
}

type GetTaskInfoReply struct {
	Id      int32
	NReduce int32
	NMap    int32
	ATask   *Task
	Status  int32
}

type PostTaskDoneReply struct {
	StatusCode int32
}

// Cook up a unique-ish UNIX-domain socket name
// in /var/tmp, for the coordinator.
// Can't use the current directory since
// Athena AFS doesn't support UNIX-domain sockets.
func coordinatorSock() string {
	s := "/var/tmp/824-mr-"
	s += strconv.Itoa(os.Getuid())
	return s
}
```

File `coordinator.go`:

```go
package mr

import (
	"log"
	"net"
	"net/http"
	"net/rpc"
	"os"
	"sync"
	"sync/atomic"
	"time"
)

type Coordinator struct {
	// Your definitions here.
	files   []string
	nReduce int32
	nMap    int32
	m       sync.Mutex // protects status, taskIndexQ, reduceTask and the finished counters
	status  int        // TASK_STATUS_MAP / TASK_STATUS_REDUCE / TASK_STATUS_DONE

	mapTask    []Task
	reduceTask []Task

	finishedMapTaskCnt    int32
	finishedReduceTaskCnt int32
	todoMapTaskCnt        int32      // updated only through sync/atomic
	todoReduceTaskCnt     int32      // updated only through sync/atomic
	taskIndexQ            chan int32 // indexes of tasks waiting to be handed out
}

// Your code here -- RPC handlers for the worker to call.

// start a thread that listens for RPCs from worker.go
func (c *Coordinator) server() {
	rpc.Register(c)
	rpc.HandleHTTP()
	//l, e := net.Listen("tcp", ":1234")
	sockname := coordinatorSock()
	os.Remove(sockname)
	l, e := net.Listen("unix", sockname)
	if e != nil {
		log.Fatal("listen error:", e)
	}
	go http.Serve(l, nil)
}

// getStatus reads the current phase under c.m, so that it does not race
// with the phase change made in MarkTaskDone.
func (c *Coordinator) getStatus() int {
	c.m.Lock()
	defer c.m.Unlock()
	return c.status
}

// Schedule is called by a worker to get a task. If no task is available yet,
// it keeps polling with a short back-off (1 to 32 microseconds) until one is,
// or until the whole job is done.
func (c *Coordinator) Schedule(args *GetTaskInfoArgs, reply *GetTaskInfoReply) error {
	workId := args.WorkerId
	sleepTime := 1
	maxSleepTime := 32
	for {
		// read the phase and its queue together, under the lock
		c.m.Lock()
		oldStatus, queue := c.status, c.taskIndexQ
		c.m.Unlock()

		// all tasks done
		if oldStatus == TASK_STATUS_DONE {
			reply.Status = TASK_STATUS_DONE
			return nil
		}

		tasks, todo := c.mapTask, &c.todoMapTaskCnt
		if oldStatus == TASK_STATUS_REDUCE {
			tasks, todo = c.reduceTask, &c.todoReduceTaskCnt
		}
		oldToDoCnt := atomic.LoadInt32(todo)
		if oldToDoCnt > 0 && atomic.CompareAndSwapInt32(todo, oldToDoCnt, oldToDoCnt-1) {
			index := <-queue
			curr := &tasks[index]
			curr.m.Lock()
			curr.WorkerId = workId
			curr.Deadline = time.Now().Add(time.Second * 10)
			// Reply with a copy, so that gob does not read the shared Task
			// after the lock is released.
			reply.ATask = &Task{
				WorkerId: curr.WorkerId,
				TaskId:   curr.TaskId,
				Deadline: curr.Deadline,
				Status:   curr.Status,
				Done:     curr.Done,
				File:     curr.File,
			}
			curr.m.Unlock()
			reply.Status = int32(oldStatus)
			reply.NMap = c.nMap
			reply.NReduce = c.nReduce
			reply.Id = workId
			return nil
		}
		time.Sleep(time.Microsecond * time.Duration(sleepTime))
		sleepTime = sleepTime * 2
		if sleepTime > maxSleepTime {
			sleepTime = 1
		}
	}
}

// MarkTaskDone is called by a worker after it finishes a task. The reply is
// STATUS_CODE_OK only if the task is still assigned to that worker and was not
// finished before; only then may the worker rename its tmp files.
func (c *Coordinator) MarkTaskDone(args *PostTaskDoneArgs, reply *PostTaskDoneReply) error {
	if args.Status != TASK_STATUS_MAP && args.Status != TASK_STATUS_REDUCE {
		log.Fatalf("Status not known: [%v]", args.Status)
	}
	c.m.Lock()
	tasks := c.mapTask
	if args.Status == TASK_STATUS_REDUCE {
		tasks = c.reduceTask
	}
	c.m.Unlock()
	task := &tasks[args.TaskId]

	task.m.Lock()
	// Reject reports for tasks that are already done, or that were given to
	// another worker after a timeout.
	if task.Done || task.WorkerId != args.WorkerId {
		task.m.Unlock()
		reply.StatusCode = STATUS_CODE_ERROR
		return nil
	}
	if task.Status != args.Status {
		log.Fatalf("Task Status does not match, expect [%v], actual [%v]", args.Status, task.Status)
	}
	task.Done = true
	task.m.Unlock()

	c.m.Lock()
	if args.Status == TASK_STATUS_MAP {
		c.finishedMapTaskCnt++
		if c.finishedMapTaskCnt == c.nMap {
			// Workers rename their tmp files only after this RPC returns, so a
			// reduce task may briefly fail to open mr-X-Y; it is then retried
			// after its timeout.
			c.initReduceTask()
			c.status = TASK_STATUS_REDUCE
		}
	} else {
		c.finishedReduceTaskCnt++
		if c.finishedReduceTaskCnt == c.nReduce {
			c.status = TASK_STATUS_DONE
		}
	}
	c.m.Unlock()
	reply.StatusCode = STATUS_CODE_OK
	return nil
}

// checkCrashedTask runs in the background. Every 10 seconds it puts tasks that
// were handed out but not finished before their deadline back into the queue.
func (c *Coordinator) checkCrashedTask() bool {
	for {
		status := c.getStatus()
		if status == TASK_STATUS_DONE {
			return true
		}
		if status == TASK_STATUS_MAP {
			for i := 0; i < int(c.nMap); i++ {
				c.requeueIfExpired(&c.mapTask[i], TASK_STATUS_MAP, &c.todoMapTaskCnt)
			}
		} else if status == TASK_STATUS_REDUCE {
			for i := 0; i < int(c.nReduce); i++ {
				c.requeueIfExpired(&c.reduceTask[i], TASK_STATUS_REDUCE, &c.todoReduceTaskCnt)
			}
		}
		time.Sleep(time.Second * 10)
	}
}

// requeueIfExpired puts curr back into the queue if its deadline has passed.
func (c *Coordinator) requeueIfExpired(curr *Task, phase int, todo *int32) {
	curr.m.Lock()
	defer curr.m.Unlock()
	if curr.WorkerId == -1 || curr.Done || !curr.Deadline.Before(time.Now()) {
		return
	}
	c.m.Lock()
	defer c.m.Unlock()
	if c.status != phase {
		return // the phase changed meanwhile; don't push into the new queue
	}
	// Reset the owner, so the task is queued only once and a late report
	// from the timed-out worker is rejected by MarkTaskDone.
	curr.WorkerId = -1
	c.taskIndexQ <- curr.TaskId
	atomic.AddInt32(todo, 1)
	//log.Printf("Schedule ************** new task found : [%v]", curr.TaskId)
}

// main/mrcoordinator.go calls Done() periodically to find out
// if the entire job has finished.
func (c *Coordinator) Done() bool {
	for {
		if c.getStatus() == TASK_STATUS_DONE {
			// wait a little so that workers can receive TASK_STATUS_DONE
			// before the coordinator exits
			time.Sleep(5 * time.Second)
			return true
		}
		time.Sleep(time.Second)
	}
}

// MakeCoordinator create a Coordinator.
// main/mrcoordinator.go calls this function.
// nReduce is the number of reduce tasks to use.
func MakeCoordinator(files []string, nReduce int32) *Coordinator {
	c := Coordinator{}
	// initialization.
	c.nReduce = nReduce
	c.files = files
	c.nMap = int32(len(files)) // one file, one map task
	c.finishedReduceTaskCnt = 0
	c.finishedMapTaskCnt = 0
	c.todoMapTaskCnt = c.nMap
	c.todoReduceTaskCnt = c.nReduce
	c.status = TASK_STATUS_MAP
	c.initMapTask(files)
	go c.checkCrashedTask()
	c.server()
	return &c
}

func (c *Coordinator) initMapTask(files []string) {
	c.taskIndexQ = make(chan int32, c.nMap)
	c.mapTask = make([]Task, c.nMap)
	for i := int32(0); i < c.nMap; i++ {
		c.mapTask[i] = Task{
			File:     files[i],
			TaskId:   i,
			WorkerId: -1,
			Deadline: time.Now().Add(time.Second * 10),
			Status:   TASK_STATUS_MAP,
			Done:     false,
		}
		c.taskIndexQ <- i
	}
}

// initReduceTask is called with c.m held, once all map tasks are done.
func (c *Coordinator) initReduceTask() {
	// The map queue is replaced rather than closed; closing is not required
	// for a channel to be garbage-collected.
	c.taskIndexQ = make(chan int32, c.nReduce)
	c.reduceTask = make([]Task, c.nReduce)
	for i := int32(0); i < c.nReduce; i++ {
		c.reduceTask[i] = Task{
			TaskId:   i,
			WorkerId: -1,
			Deadline: time.Now().Add(time.Second * 10),
			Status:   TASK_STATUS_REDUCE,
			Done:     false,
		}
		c.taskIndexQ <- i
	}
}
```

File `worker.go`:

```go
package mr

import (
	"encoding/json"
	"fmt"
	"hash/fnv"
	"io/ioutil"
	"log"
	"net/rpc"
	"os"
	"sort"
)

// Map functions return a slice of KeyValue.
type KeyValue struct {
	Key   string
	Value string
}

// for sorting by key.
type ByKey []KeyValue

// for sorting by key.
func (a ByKey) Len() int           { return len(a) }
func (a ByKey) Swap(i, j int)      { a[i], a[j] = a[j], a[i] }
func (a ByKey) Less(i, j int) bool { return a[i].Key < a[j].Key }

// use ihash(key) % NReduce to choose the reduce
// task number for each KeyValue emitted by Map.
func ihash(key string) int {
	h := fnv.New32a()
	h.Write([]byte(key))
	return int(h.Sum32() & 0x7fffffff)
}

// main/mrworker.go calls this function.
func Worker(mapf func(string, string) []KeyValue,
	reducef func(string, []string) string) {
	// Ask for tasks forever; DoWork exits the process once the job is done.
	for {
		DoWork(mapf, reducef)
		//if !ok {
		//	log.Fatalf("Worker failed.\n")
		//	break
		//}
	}
}

// DoWork asks the coordinator for one task and runs it.
func DoWork(mapf func(string, string) []KeyValue,
	reducef func(string, []string) string) bool {
	// declare an argument structure.
	args := GetTaskInfoArgs{}
	// fill in the argument(s): the process ID identifies this worker.
	pid := os.Getpid()
	args.WorkerId = int32(pid)
	// declare a reply structure.
	reply := GetTaskInfoReply{}
	// send the RPC request, wait for the reply.
	// "Coordinator.Schedule" calls the Schedule() method of struct Coordinator.
	ok := call("Coordinator.Schedule", &args, &reply)
	if !ok {
		//log.Printf("call Coordinator.Schedule failed!\n")
		return false
	}
	status := reply.Status
	if TASK_STATUS_DONE == status {
		return DoCleaningWork(pid)
	}
	//log.Printf("Worker get work, worker id [%v], job name [%v], job id [%v] \n", pid, getTaskName(reply.Status), reply.ATask.TaskId)
	if TASK_STATUS_MAP == status {
		return MapTaskPipeline(&reply, mapf)
	} else if TASK_STATUS_REDUCE == status {
		return ReduceTaskPipeline(&reply, reducef)
	} else {
		return DoUnExpectedWork(&reply)
	}
}

func getTaskName(taskType int32) string {
	switch taskType {
	case TASK_STATUS_MAP:
		return "[*Map task*]"
	case TASK_STATUS_REDUCE:
		return "[*Reduce task*]"
	case TASK_STATUS_DONE:
		return "[*Task Done*]"
	default:
		return "[*Not Known task*]"
	}
}

func DoMapTask(reply *GetTaskInfoReply, mapf func(string, string) []KeyValue) bool {
	filename := reply.ATask.File
	file, err := os.Open(filename)
	if err != nil {
		log.Fatalf("Cannot open %v", filename)
		return false
	}
	content, err := ioutil.ReadAll(file)
	if err != nil {
		log.Fatalf("Cannot read %v", filename)
		return false
	}
	err = file.Close()
	if err != nil {
		log.Fatalf("Close file %v failed, error message: %v", filename, err)
		return false
	}
	kva := mapf(filename, string(content))

	// partition the intermediate pairs into nReduce buckets
	hashMap := make(map[int][]KeyValue)
	nReduce := int(reply.NReduce)
	for _, kv := range kva {
		hashKey := ihash(kv.Key) % nReduce
		hashMap[hashKey] = append(hashMap[hashKey], kv)
	}

	// write each bucket to its own tmp file; it is renamed to mr-X-Y only
	// after the coordinator accepts the result
	for i := 0; i < nReduce; i++ {
		fileName := getTmpMapResultFileName(int(reply.ATask.WorkerId), int(reply.ATask.TaskId), i)
		file, err = os.Create(fileName)
		if err != nil {
			log.Fatalf("Create file %v failed, error message: %v", fileName, err)
			return false
		}
		enc := json.NewEncoder(file)
		for _, kv := range hashMap[i] {
			err = enc.Encode(&kv)
			if err != nil {
				log.Fatalf("Encode value to file %v failed, error message: %v", fileName, err)
				return false
			}
		}
		err = file.Close()
		if err != nil {
			log.Fatalf("Close file failed, error message: %v", err)
			return false
		}
	}
	return true
}

func getTmpMapResultFileName(workerId int, mapTaskId int, reduceId int) string {
	return fmt.Sprintf("tmp-mr-%v-%v-%v", workerId, mapTaskId, reduceId)
}

func getMapResultFileName(mapTaskId int, reduceId int) string {
	return fmt.Sprintf("mr-%v-%v", mapTaskId, reduceId)
}

func getTmpReduceResultFileName(workerId int, reduceId int) string {
	return fmt.Sprintf("tmp-mr-out-%v-%v", workerId, reduceId)
}

func getReduceResultFileName(reduceId int) string {
	return fmt.Sprintf("mr-out-%v", reduceId)
}

func ReduceTaskPipeline(reply *GetTaskInfoReply, reducef func(string, []string) string) bool {
	ok := DoReduceTask(reply, reducef)
	if !ok {
		return false
	}
	return MarkReduceWorkDone(reply)
}

func MapTaskPipeline(reply *GetTaskInfoReply, mapf func(string, string) []KeyValue) bool {
	ok := DoMapTask(reply, mapf)
	if !ok {
		return false
	}
	return MarkMapWorkDone(reply)
}

func MarkReduceWorkDone(reply *GetTaskInfoReply) bool {
	// declare an argument structure.
	args := PostTaskDoneArgs{}
	// fill in the argument(s).
	args.Status = TASK_STATUS_REDUCE
	args.TaskId = reply.ATask.TaskId
	args.WorkerId = reply.ATask.WorkerId
	// declare a reply structure.
	postReply := PostTaskDoneReply{}
	// send the RPC request, wait for the reply.
	// "Coordinator.MarkTaskDone" calls the MarkTaskDone() method of struct Coordinator.
	ok := call("Coordinator.MarkTaskDone", &args, &postReply)
	if !ok {
		log.Fatalf("Call Coordinator.MarkTaskDone failed. \n")
		return false
	}
	taskId := int(reply.ATask.TaskId)
	// rename the tmp file only if the coordinator accepted the result
	if postReply.StatusCode == STATUS_CODE_OK {
		oldFilePath := getTmpReduceResultFileName(int(args.WorkerId), taskId)
		newFilePath := getReduceResultFileName(taskId)
		err := os.Rename(oldFilePath, newFilePath)
		if nil != err {
			log.Fatalf("Rename from [%v] to [%v] failed, error message: %v\n", oldFilePath, newFilePath, err)
			return false
		}
	}
	//log.Printf("Reduce task done: [%v]", taskId)
	return true
}

func MarkMapWorkDone(reply *GetTaskInfoReply) bool {
	// declare an argument structure.
	args := PostTaskDoneArgs{}
	// fill in the argument(s).
	args.Status = TASK_STATUS_MAP
	args.TaskId = reply.ATask.TaskId
	args.WorkerId = reply.ATask.WorkerId
	// declare a reply structure.
	postReply := PostTaskDoneReply{}
	// send the RPC request, wait for the reply.
	// "Coordinator.MarkTaskDone" calls the MarkTaskDone() method of struct Coordinator.
	ok := call("Coordinator.MarkTaskDone", &args, &postReply)
	if !ok {
		log.Fatalf("Call Coordinator.MarkTaskDone failed. \n")
		return false
	}
	taskId := int(reply.ATask.TaskId)
	workerId := int(reply.ATask.WorkerId)
	// rename the tmp files only if the coordinator accepted the result
	if postReply.StatusCode == STATUS_CODE_OK {
		for i := 0; i < int(reply.NReduce); i++ {
			oldFilePath := getTmpMapResultFileName(workerId, taskId, i)
			newFilePath := getMapResultFileName(taskId, i)
			err := os.Rename(oldFilePath, newFilePath)
			if nil != err {
				log.Fatalf("Rename from [%v] to [%v] failed, error message: %v\n", oldFilePath, newFilePath, err)
				return false
			}
		}
	}
	//log.Printf("Map task done: [%v]", taskId)
	return true
}

func DoReduceTask(reply *GetTaskInfoReply, reducef func(string, []string) string) bool {
	reduceTaskId := int(reply.ATask.TaskId)
	workerId := int(reply.ATask.WorkerId)
	nMap := reply.NMap

	// read the intermediate files of this reduce task from all map tasks
	intermediate := []KeyValue{}
	for i := 0; i < int(nMap); i++ {
		fileName := getMapResultFileName(i, reduceTaskId)
		file, err := os.Open(fileName)
		if err != nil {
			// treat this as a failed task; the coordinator will reschedule it
			return false
		}
		dec := json.NewDecoder(file)
		for {
			var kv KeyValue
			if err = dec.Decode(&kv); err != nil {
				break // end of file
			}
			intermediate = append(intermediate, kv)
		}
		file.Close()
	}

	sort.Sort(ByKey(intermediate))

	outputFileName := getTmpReduceResultFileName(workerId, reduceTaskId)
	outputFile, err := os.Create(outputFileName)
	if err != nil {
		log.Fatalf("Create file [%s] failed, error message: %v", outputFileName, err)
		return false
	}
	// call reducef once for each distinct key
	i := 0
	for i < len(intermediate) {
		j := i + 1
		for j < len(intermediate) && intermediate[j].Key == intermediate[i].Key {
			j++
		}
		values := []string{}
		for k := i; k < j; k++ {
			values = append(values, intermediate[k].Value)
		}
		output := reducef(intermediate[i].Key, values)
		fmt.Fprintf(outputFile, "%v %v\n", intermediate[i].Key, output)
		i = j
	}
	err = outputFile.Close()
	if nil != err {
		log.Fatalf("Close file [%s] failed, error message: %v", outputFileName, err)
		return false
	}
	return true
}

func DoCleaningWork(workerId int) bool {
	//log.Printf("Worker[%v]: All work Done!", workerId)
	os.Exit(0)
	return true
}

func DoUnExpectedWork(reply *GetTaskInfoReply) bool {
	//log.Printf("Worker[%v]: get unexpected work", reply.ATask.WorkerId)
	os.Exit(0)
	return true
}

// send an RPC request to the coordinator, wait for the response.
// usually returns true.
// returns false if something goes wrong.
func call(rpcname string, args interface{}, reply interface{}) bool {
	// c, err := rpc.DialHTTP("tcp", "127.0.0.1"+":1234")
	sockname := coordinatorSock()
	c, err := rpc.DialHTTP("unix", sockname)
	if err != nil {
		log.Fatal("dialing:", err)
	}
	defer c.Close()

	err = c.Call(rpcname, args, reply)
	if err == nil {
		return true
	}
	fmt.Println(err)
	return false
}
```

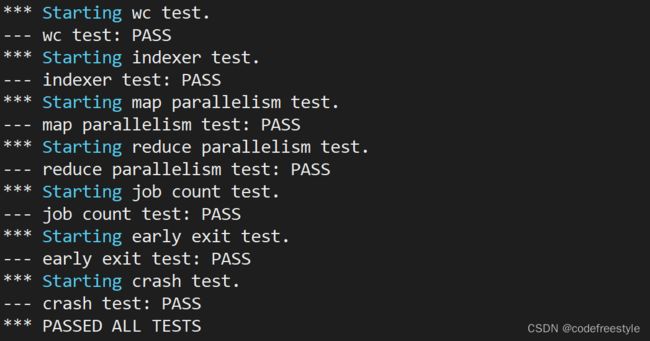

Results

```shell
$ ./test-mr-many.sh 10
```

Submitting

```shell
$ make lab1
```

Checking the submission

Log in to https://6824.scripts.mit.edu/2022/handin.py/student to see the submitted results.

References

https://mit-public-courses-cn-translatio.gitbook.io/mit6-824/

https://pdos.csail.mit.edu/6.824/schedule.html

https://pdos.csail.mit.edu/6.824/labs/submit.html

GitHub

Source code: https://github.com/aerfalwl/mit6824