Quick Start with Elasticsearch, Kibana, and Operating ES from Java

Install Elasticsearch and Kibana (a web UI for managing Elasticsearch) with Docker

```shell
docker pull elasticsearch:7.12.1
docker pull kibana:7.12.1
```

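Create a custom Docker network

On a user-defined Docker network, containers can reach each other by container name. The default bridge network does not offer this.

You must create the custom network and attach both containers to it. Otherwise Kibana cannot reach ES at `http://es:9200`, the address configured below.

```shell
docker network create es-net
```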

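Start the Elasticsearch and Kibana containers

Start the Elasticsearch container

The options in the command below do the following:

- `ES_JAVA_OPTS` caps the JVM heap at 512 MB.
- `discovery.type=single-node` starts a one-node cluster.
- The named volumes `es-data` and `es-plugins` keep data and plugins outside the container. The IK analyzer is installed into `es-plugins` later.
- Port 9200 is the HTTP API and port 9300 is the node-to-node transport port.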

```shell
docker run -d \
  --name es \
  -e "ES_JAVA_OPTS=-Xms512m -Xmx512m" \
  -e "discovery.type=single-node" \
  -v es-data:/usr/share/elasticsearch/data \
  -v es-plugins:/usr/share/elasticsearch/plugins \
  --privileged \
  --network es-net \
  -p 9200:9200 \
  -p 9300:9300 \
  elasticsearch:7.12.1
```

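Visit http://192.168.137.139:9200 (replace the IP with your own server's address). The response is a JSON document describing the node and cluster.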
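To run the same check from code instead of a browser, here is a minimal sketch. It uses only the JDK's `java.net.http.HttpClient` (Java 11+) to send `GET /` to the node and print the status code and body. The class name `EsPing` is hypothetical, and the address is the example IP used above.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

// Hypothetical helper: checks that an Elasticsearch node answers on its HTTP port.
final class EsPing {

    // Builds a GET request for the root endpoint "/" of the given base URL.
    static HttpRequest rootRequest(String baseUrl) {
        String base = baseUrl.endsWith("/") ? baseUrl : baseUrl + "/";
        return HttpRequest.newBuilder(URI.create(base))
                .timeout(Duration.ofSeconds(5))
                .GET()
                .build();
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        // Replace with your own server's IP address, or pass it as the first argument.
        String baseUrl = args.length > 0 ? args[0] : "http://192.168.137.139:9200";
        HttpClient client = HttpClient.newHttpClient();
        HttpResponse<String> response =
                client.send(rootRequest(baseUrl), HttpResponse.BodyHandlers.ofString());
        System.out.println("HTTP " + response.statusCode());
        System.out.println(response.body());
    }
}
```

A connection error here usually means the container is not running or port 9200 is not reachable from your machine.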

Start the Kibana container

```shell
docker run -d \
  --name kibana \
  -e ELASTICSEARCH_HOSTS=http://es:9200 \
  --network=es-net \
  -p 5601:5601 \
  kibana:7.12.1
```

Visit http://192.168.137.139:5601 (replace the IP with your own server's address).

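Install the IK analyzer for ES

The default analyzer handles Chinese poorly: the standard analyzer splits Chinese text into single characters. The IK analyzer segments Chinese text into real words.

Download: https://github.com/medcl/elasticsearch-analysis-ik/releases/tag/v7.12.1 (the plugin version must match the ES version).

(The project README describes other installation methods: https://github.com/medcl/elasticsearch-analysis-ik)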

Find the host directory of the `es-plugins` volume:

```shell
docker volume inspect es-plugins
```

The `Mountpoint` field shows `/var/lib/docker/volumes/es-plugins/_data`.

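Unzip the downloaded archive into a directory named `ik` and upload it into the mounted volume on the server. Here `myserver` stands for your server's SSH host.

```shell
scp -r ik myserver:/var/lib/docker/volumes/es-plugins/_data
```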

Restart the ES service:

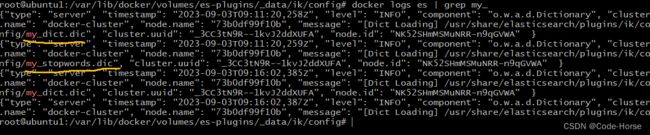

```shell
docker restart es
docker logs es | grep ik   # check that the IK analyzer was loaded
```

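The IK analyzer provides two modes (choose based on your use case):

- `ik_smart`: coarsest segmentation (fewest tokens)
- `ik_max_word`: finest segmentation (splits the text into as many words as possible)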
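To compare the two modes, you can send the same text to the `_analyze` API once per analyzer, for example from Kibana Dev Tools. The JDK-only sketch below just builds and prints the two request bodies. The class name `AnalyzeBodies` and its minimal JSON escaping are illustrative assumptions, not part of any ES client.

```java
import java.util.List;

// Hypothetical helper: builds request bodies for POST /_analyze to compare IK modes.
final class AnalyzeBodies {

    // Minimal JSON string quoting: escapes only backslashes and double quotes.
    static String quote(String s) {
        return "\"" + s.replace("\\", "\\\\").replace("\"", "\\\"") + "\"";
    }

    // Body of the form {"analyzer": "...", "text": "..."}
    static String body(String analyzer, String text) {
        return "{\"analyzer\": " + quote(analyzer) + ", \"text\": " + quote(text) + "}";
    }

    public static void main(String[] args) {
        String text = "学习使我快乐";
        for (String analyzer : List.of("ik_smart", "ik_max_word")) {
            System.out.println("POST /_analyze");
            System.out.println(body(analyzer, text));
        }
    }
}
```

With `ik_max_word` you should typically see more, and overlapping, tokens than with `ik_smart` for the same sentence.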

Extend the IK dictionary

```shell
cd /var/lib/docker/volumes/es-plugins/_data/ik/config/
```

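Create your dictionary files (for example `oligei.dic`) in this directory and register them in `IKAnalyzer.cfg.xml`. The configuration below registers `my_dict.dic` as the extension dictionary and `my_stopwords.dic` as the stopword dictionary; adjust the file names to match yours.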

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE properties SYSTEM "http://java.sun.com/dtd/properties.dtd">
<properties>
    <comment>IK Analyzer extension configuration</comment>
    <!-- extension dictionary -->
    <entry key="ext_dict">my_dict.dic</entry>
    <!-- extension stopword dictionary -->
    <entry key="ext_stopwords">my_stopwords.dic</entry>
</properties>
```
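IK reads each dictionary file as UTF-8 text with one word per line. Below is a minimal JDK-only sketch (Java 11+) for producing `my_dict.dic` and `my_stopwords.dic` locally. The class name and the sample words are hypothetical, and the files still have to be copied into the `ik/config` directory on the server.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical helper for building IK dictionary files (UTF-8, one word per line).
final class IkDictWriter {

    // Trims each entry, drops blank ones, and ends every remaining word with '\n'.
    static String render(List<String> words) {
        return words.stream()
                .map(String::trim)
                .filter(w -> !w.isEmpty())
                .map(w -> w + "\n")
                .collect(Collectors.joining());
    }

    // Writes the rendered content as UTF-8, truncating any existing file.
    static void write(Path file, List<String> words) throws IOException {
        Files.writeString(file, render(words), StandardCharsets.UTF_8);
    }

    public static void main(String[] args) throws IOException {
        // Illustrative entries only; put the words your application needs here.
        write(Path.of("my_dict.dic"), List.of("奥利给"));
        write(Path.of("my_stopwords.dic"), List.of("的", "了"));
    }
}
```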

Restart ES and Kibana:

```shell
docker restart es
docker restart kibana
docker logs es | grep my_   # check in the log that the dictionary configuration was loaded
```

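Index operations in ES

Create an index

The mapping below defines three fields:

- `info` is full-text searchable with the `ik_smart` analyzer.
- `email` is stored but not indexed (`"index": false`), so it cannot be searched.
- `name` is an object with two keyword sub-fields.

```
PUT /user
{
  "mappings": {
    "properties": {
      "info": {
        "type": "text",
        "analyzer": "ik_smart"
      },
      "email": {
        "type": "keyword",
        "index": false
      },
      "name": {
        "type": "object",
        "properties": {
          "firstName": {
            "type": "keyword"
          },
          "lastName": {
            "type": "keyword"
          }
        }
      }
    }
  }
}
```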
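Kibana Dev Tools simply sends this request over HTTP. As a JDK-only sketch (Java 11+, no Elasticsearch client library), the same `PUT /user` call could look like the code below. The class name `CreateUserIndex` is hypothetical, the mapping string mirrors the `user` mapping above, and the base URL is the example address used earlier.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Hypothetical example: creates the "user" index with a plain HTTP request.
final class CreateUserIndex {

    static final String MAPPING = "{"
            + "\"mappings\": {\"properties\": {"
            + "\"info\": {\"type\": \"text\", \"analyzer\": \"ik_smart\"},"
            + "\"email\": {\"type\": \"keyword\", \"index\": false},"
            + "\"name\": {\"type\": \"object\", \"properties\": {"
            + "\"firstName\": {\"type\": \"keyword\"},"
            + "\"lastName\": {\"type\": \"keyword\"}"
            + "}}}}}";

    // PUT <baseUrl>/<index> with a JSON body, like "PUT /user" in Dev Tools.
    static HttpRequest createIndexRequest(String baseUrl, String index, String body) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/" + index))
                .header("Content-Type", "application/json")
                .PUT(HttpRequest.BodyPublishers.ofString(body))
                .build();
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        HttpRequest request = createIndexRequest("http://192.168.137.139:9200", "user", MAPPING);
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        // Expect 200 on success; a 400 error is returned if the index already exists.
        System.out.println(response.statusCode() + " " + response.body());
    }
}
```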

Delete an index

```
DELETE /user
```

Modify an index

The mapping of an existing field cannot be changed, but new fields can be added:

```
PUT /user/_mapping
{
  "properties": {
    "age": {
      "type": "integer"
    }
  }
}
```
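The rule above allows adding fields but not changing existing ones. A client can check a planned mapping change before sending `PUT /user/_mapping`. The sketch below is a hypothetical, simplified helper: it compares flat field-name-to-type maps only. It ignores nested `properties` and parameters such as `analyzer`, which Elasticsearch also generally refuses to change on an existing field.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical helper: finds fields whose type a proposed mapping would change.
final class MappingCheck {

    // Fields only present in `proposed` are additions (allowed) and are not reported.
    static List<String> conflicts(Map<String, String> existing, Map<String, String> proposed) {
        List<String> result = new ArrayList<>();
        for (Map.Entry<String, String> e : proposed.entrySet()) {
            String current = existing.get(e.getKey());
            if (current != null && !current.equals(e.getValue())) {
                result.add(e.getKey());
            }
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, String> existing = Map.of("info", "text", "email", "keyword");
        System.out.println(conflicts(existing, Map.of("age", "integer"))); // []
        System.out.println(conflicts(existing, Map.of("email", "text")));  // [email]
    }
}
```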

Get an index

```
GET /user
```

Document operations in ES

Add a document

```
POST /user/_doc/1
{
  "info": "学习使我快乐",
  "email": "[email protected]",
  "age": 18,
  "name": {
    "firstName": "code",
    "lastName": "horse"
  }
}
```

Delete a document

```
DELETE /user/_doc/1
```

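Update a document

Full replacement: the existing document is deleted and the new body is written in its place. If no document with this ID exists, it is simply created.

```
PUT /user/_doc/1
{
  "info": "学习使我快乐222222222222222",
  "email": "[email protected]",
  "age": 18,
  "name": {
    "firstName": "code",
    "lastName": "horse"
  }
}
```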

Partial update: only the specified fields are changed.

```
POST /user/_update/1
{
  "doc": {
    "info": "学习使我快乐333333333333"
  }
}
```
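The two update styles differ in what ends up in the stored `_source`. Here is a plain-Java model of both operations on a document held as a `Map`:

- A full replacement (`PUT /user/_doc/1`) discards every field that is not in the new body.
- A partial update (`POST /user/_update/1` with `doc`) merges the given fields into the stored source and recurses into object fields such as `name`.

This is only an illustration of the resulting source, not how Elasticsearch is implemented, and the class name is hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical model of the stored _source after a full replacement vs. a partial update.
final class DocUpdateModel {

    // Full replacement: the new source is exactly the new body.
    static Map<String, Object> replace(Map<String, Object> body) {
        return new LinkedHashMap<>(body);
    }

    // Partial update: copy the stored source, then merge `doc` into the copy.
    // Nested objects are merged field by field; any other value is overwritten.
    @SuppressWarnings("unchecked")
    static Map<String, Object> update(Map<String, Object> stored, Map<String, Object> doc) {
        Map<String, Object> result = new LinkedHashMap<>(stored);
        for (Map.Entry<String, Object> e : doc.entrySet()) {
            Object old = result.get(e.getKey());
            Object val = e.getValue();
            if (old instanceof Map && val instanceof Map) {
                result.put(e.getKey(), update((Map<String, Object>) old, (Map<String, Object>) val));
            } else {
                result.put(e.getKey(), val);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, Object> stored = new LinkedHashMap<>();
        stored.put("info", "学习使我快乐");
        stored.put("age", 18);
        Map<String, Object> patch = Map.of("info", "学习使我快乐333333333333");
        System.out.println(replace(patch));        // "age" is gone
        System.out.println(update(stored, patch)); // "age" is kept
    }
}
```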

Get a document

```
GET /user/_doc/1
```

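Using ES from Java (REST API)

Official client documentation: https://www.elastic.co/guide/en/elasticsearch/client/index.html

This tutorial uses the Java High Level REST Client. The documentation above also contains a "Migrating from the High Level Rest Client" guide for moving to its successor.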

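Add the dependencies

pom.xml

```xml
<dependency>
    <groupId>org.elasticsearch.client</groupId>
    <artifactId>elasticsearch-rest-high-level-client</artifactId>
</dependency>
<dependency>
    <groupId>com.alibaba</groupId>
    <artifactId>fastjson</artifactId>
    <version>1.2.71</version>
</dependency>
```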

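Spring Boot's dependency management may override the version of the Elasticsearch client you import with its own managed version.

Fix: override Spring Boot's version property in `pom.xml` so the client matches the server:

```xml
<properties>
    <elasticsearch.version>7.12.1</elasticsearch.version>
</properties>
```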

Index operations

IndexDatabaseTest.java

```java
import org.apache.http.HttpHost;
import org.elasticsearch.action.admin.indices.delete.DeleteIndexRequest;
import org.elasticsearch.client.RequestOptions;
import org.elasticsearch.client.RestClient;
import org.elasticsearch.client.RestHighLevelClient;
import org.elasticsearch.client.indices.CreateIndexRequest;
import org.elasticsearch.client.indices.GetIndexRequest;
import org.elasticsearch.client.indices.GetIndexResponse;
import org.elasticsearch.client.indices.PutMappingRequest;
import org.elasticsearch.cluster.metadata.MappingMetadata;
import org.elasticsearch.common.xcontent.XContentType;
import org.junit.jupiter.api.AfterEach;
import org.junit.jupiter.api.BeforeEach;
import org.junit.jupiter.api.Test;

import java.io.IOException;
import java.util.Map;

public class IndexDatabaseTest {

    private RestHighLevelClient client;

    @BeforeEach
    public void setUp() { // open the ES connection (replace with your server's IP)
        this.client = new RestHighLevelClient(RestClient.builder(
                HttpHost.create("http://192.168.137.139:9200")
        ));
    }

    @Test
    public void clientStatus() throws IOException { // prints true if the node answers
        System.out.println(client.ping(RequestOptions.DEFAULT));
    }

    // Create the index
    @Test
    public void createIndexDataBase() throws IOException {
        CreateIndexRequest request = new CreateIndexRequest("user");
        String createIndexDataBaseDSL = "{\n" +
                "  \"mappings\": {\n" +
                "    \"properties\": {\n" +
                "      \"info\": {\n" +
                "        \"type\": \"text\",\n" +
                "        \"analyzer\": \"ik_smart\"\n" +
                "      },\n" +
                "      \"email\": {\n" +
                "        \"type\": \"keyword\",\n" +
                "        \"index\": false\n" +
                "      },\n" +
                "      \"name\": {\n" +
                "        \"type\": \"object\",\n" +
                "        \"properties\": {\n" +
                "          \"firstName\": {\n" +
                "            \"type\": \"keyword\"\n" +
                "          },\n" +
                "          \"lastName\": {\n" +
                "            \"type\": \"keyword\"\n" +
                "          }\n" +
                "        }\n" +
                "      }\n" +
                "    }\n" +
                "  }\n" +
                "}";
        request.source(createIndexDataBaseDSL, XContentType.JSON);
        client.indices().create(request, RequestOptions.DEFAULT);
    }

    // Delete the index
    @Test
    public void deleteIndexDataBase() throws IOException {
        DeleteIndexRequest request = new DeleteIndexRequest("user");
        client.indices().delete(request, RequestOptions.DEFAULT);
    }

    // Modify the index (only adding new fields to the mapping is supported)
    @Test
    public void updateIndexDataBase() throws IOException {
        PutMappingRequest request = new PutMappingRequest("user");
        request.source("{\n" +
                "  \"properties\": {\n" +
                "    \"age\": {\n" +
                "      \"type\": \"integer\"\n" +
                "    }\n" +
                "  }\n" +
                "}\n", XContentType.JSON);
        client.indices().putMapping(request, RequestOptions.DEFAULT);
    }

    // Get the index and print its mapping
    @Test
    public void getIndexDataBase() throws IOException {
        GetIndexRequest request = new GetIndexRequest("user");
        GetIndexResponse getIndexResponse = client.indices().get(request, RequestOptions.DEFAULT);
        Map<String, MappingMetadata> mappings = getIndexResponse.getMappings();
        System.out.println(mappings.get("user").sourceAsMap().values());
    }

    @AfterEach
    public void unMount() throws IOException { // close the ES connection
        this.client.close();
    }
}
```

Document operations

DocTest.java

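```java
import com.alibaba.fastjson.JSON;
import org.apache.http.HttpHost;
import org.elasticsearch.action.bulk.BulkRequest;
import org.elasticsearch.action.delete.DeleteRequest;
import org.elasticsearch.action.get.GetRequest;
import org.elasticsearch.action.get.GetResponse;
import org.elasticsearch.action.index.IndexRequest;
import org.elasticsearch.action.update.UpdateRequest;
import org.elasticsearch.client.RequestOptions;
import org.elasticsearch.client.RestClient;
import org.elasticsearch.client.RestHighLevelClient;
import org.elasticsearch.common.xcontent.XContentType;
import org.junit.jupiter.api.AfterEach;
import org.junit.jupiter.api.BeforeEach;
import org.junit.jupiter.api.Test;

import java.io.IOException;
import java.util.HashMap;
import java.util.Map;

public class DocTest {

    private RestHighLevelClient client;

    @BeforeEach
    void setUp() { // open the ES connection (replace with your server's IP)
        this.client = new RestHighLevelClient(RestClient.builder(
                HttpHost.create("http://192.168.137.139:9200")
        ));
    }

    @Test
    public void clientStatus() throws IOException { // prints true if the node answers
        System.out.println(client.ping(RequestOptions.DEFAULT));
    }

    // Create (index) the document with ID 1
    @Test
    public void createDoc() throws IOException {
        IndexRequest request = new IndexRequest("user").id("1");
        String createDocDSL = "{\n" +
                "  \"info\": \"学习使我快乐\",\n" +
                "  \"email\": \"[email protected]\",\n" +
                "  \"name\": {\n" +
                "    \"firstName\": \"code\",\n" +
                "    \"lastName\": \"horse\"\n" +
                "  }\n" +
                "}";
        request.source(createDocDSL, XContentType.JSON);
        client.index(request, RequestOptions.DEFAULT);
    }

    // Delete the document
    @Test
    public void deleteDoc() throws IOException {
        DeleteRequest request = new DeleteRequest("user", "1");
        client.delete(request, RequestOptions.DEFAULT);
    }

    // Update the document. UpdateRequest.doc(...) is a partial update: only "info"
    // changes and the other fields are kept. Re-index with IndexRequest to replace it fully.
    @Test
    public void updateDoc() throws IOException {
        UpdateRequest request = new UpdateRequest("user", "1");
        request.doc("{\n" +
                "  \"info\": \"学习使我痛苦!!!!!!!\"\n" +
                "}", XContentType.JSON);
        client.update(request, RequestOptions.DEFAULT);
    }

    // Get the document and print its source
    @Test
    public void getDoc() throws IOException {
        GetRequest request = new GetRequest("user", "1");
        GetResponse response = client.get(request, RequestOptions.DEFAULT);
        String json = response.getSourceAsString();
        System.out.println(json);
    }

    // Helper (not a test): builds a sample document from the given text
    private Map<String, Object> getData(String text) {
        Map<String, Object> map = new HashMap<>();
        map.put("info", text);
        map.put("email", text + "@qq.com");
        Map<String, String> name = new HashMap<>();
        name.put("firstName", text);
        name.put("lastName", text);
        map.put("name", name);
        System.out.println(JSON.toJSONString(map));
        return map;
    }

    // Bulk-import 200 documents in a single request
    @Test
    public void testBulk() throws IOException {
        BulkRequest request = new BulkRequest();
        for (int i = 1; i <= 200; i++) {
            String text = String.valueOf(i);
            Map<String, Object> data = getData(text);
            request.add(new IndexRequest("user")
                    .id(text)
                    .source(JSON.toJSONString(data), XContentType.JSON));
        }
        client.bulk(request, RequestOptions.DEFAULT);
    }

    @AfterEach
    public void unMount() throws IOException { // close the ES connection
        this.client.close();
    }
}
```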
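On the wire, a bulk request goes to the `_bulk` endpoint as newline-delimited JSON (`Content-Type: application/x-ndjson`). Each document contributes one action line and one source line, and the body must end with a newline. The rough JDK-only sketch below shows what that body looks like for `index` actions like the ones in `testBulk`. The class name `BulkBody` is hypothetical, and the documents are passed in as already-serialized JSON strings.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: builds an NDJSON body for POST /_bulk with "index" actions.
final class BulkBody {

    // Keys are document IDs; values are single-line JSON sources (no embedded newlines).
    static String build(String index, Map<String, String> docs) {
        StringBuilder sb = new StringBuilder();
        for (Map.Entry<String, String> e : docs.entrySet()) {
            sb.append("{\"index\":{\"_index\":\"").append(index)
              .append("\",\"_id\":\"").append(e.getKey()).append("\"}}\n"); // action line
            sb.append(e.getValue()).append('\n');                            // source line
        }
        return sb.toString(); // ends with '\n', as the _bulk API requires
    }

    public static void main(String[] args) {
        Map<String, String> docs = new LinkedHashMap<>();
        for (int i = 1; i <= 2; i++) {
            String text = String.valueOf(i);
            docs.put(text, "{\"info\":\"" + text + "\",\"email\":\"" + text + "@qq.com\"}");
        }
        System.out.print(build("user", docs));
    }
}
```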