二进制部署K8s群集+Openebs+KubeSphere+Ceph群集

二进制K8s集群部署——版本为v1.20.4

https://www.cnblogs.com/lizexiong/p/14882419.html

K8s群集配置表

| 主机名 | IP地址 | 组件 | 系统 | 配置 |

|---|---|---|---|---|

| k8smaster1 | 192.168.10.101 | docker、etcd、kube-apiserver、kube-controller-manager、kube-scheduler、kubelet、kube-proxy、nginx、keepalived | Centos7.9 | 2C/2G |

| k8smaster2 | 192.168.10.102 | docker、etcd、kube-apiserver、kube-controller-manager、kube-scheduler、kubelet、kube-proxy、nginx、keepalived | Centos7.9 | 2C/2G |

| k8smaster* | … | … | Centos7.9 | 2C/2G |

| k8snode1 | 192.168.10.103 | docker、etcd、kubelet.service、kube-proxy.service | Centos7.9 | 2C/2G |

| k8snode2 | 192.168.10.104 | docker、etcd、kubelet.service、kube-proxy.service | Centos7.9 | 2C/2G |

| k8snode3 | 192.168.10.104 | docker、kubelet.service、kube-proxy.service | Centos7.9 | 2C/2G |

| k8snode* | … | … | Centos7.9 | 2C/2G |

| k8snode* | … | … | Centos7.9 | 2C/2G |

k8s集群——k8smaster节组件点部署

一、基础环境配置初始化所有集群主机——(所有主机k8s主机操作)

1、所有节点修改主机名

192.168.10.101主机

hostnamectl set-hostname k8smaster1

su

192.168.10.102主机

hostnamectl set-hostname k8smaster2

su

192.168.10.103主机

hostnamectl set-hostname k8snode1

su

192.168.10.104主机

hostnamectl set-hostname k8snode2

su

2、关闭放火墙、内核安全机制、swap交换分区

systemctl stop firewalld

systemctl disable firewalld

sed -i "s/.*SELINUX=.*/SELINUX=disabled/g" /etc/selinux/config

swapoff -a

sed -i '/swap/s/^\(.*\)$/#\1/g' /etc/fstab

3、添加hosts文件

cat >> /etc/hosts << EOF

192.168.10.101 k8smaster1

192.168.10.102 k8smaster2

192.168.10.103 k8snode1

192.168.10.104 k8snode2

EOF

4、将桥接的IPv4流量传递到iptables的链

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system

5、配置国内yum和epel源

cd /etc/yum.repos.d/

wget http://mirrors.aliyun.com/repo/Centos-7.repo

mv aliyun.repo aliyun.repo.bak

mv Centos-7.repo CentOS-Base.repo

yum clean all

yum makecache

yum update

wget /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

6、配置时间同步

#安装chrony服务

yum -y install chrony

#启动chronyd服务

systemctl start chronyd

#设置为开机自启动

systemctl enable chronyd

#查看确认时间同步

chronyc sources -v

#或使用阿里云的时间同步服务

yum -y install ntpdate

ntpdate time1.aliyun.com

6、升级系统内核

uname -r

3.10.0-514.el7.x86_64

#下载kernel-ml-4.18.9.tar.gz相关版本的包,可根据情况访问http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/自行下载上传到服务器中

tar xf kernel-ml-4.18.9.tar.gz

cd kernel-ml-4.18.9

yum localinstall kernel-ml*

#查看当前的内核

cat /boot/grub2/grub.cfg |grep ^menuentry

menuentry 'CentOS Linux (4.18.9-1.el7.elrepo.x86_64) 7 (Core)' --class centos --class gnu-linux --class gnu --class os --unrestricted $menuentry_id_option 'gnulinux-3.10.0-1127.el7.x86_64-advanced-abb47b92-d268-4d8c-a9df-43bf44522cab' {

menuentry 'CentOS Linux (3.10.0-1127.el7.x86_64) 7 (Core)' --class centos --class gnu-linux --class gnu --class os --unrestricted $menuentry_id_option 'gnulinux-3.10.0-1127.el7.x86_64-advanced-abb47b92-d268-4d8c-a9df-43bf44522cab' {

menuentry 'CentOS Linux (0-rescue-63f20fbd07a048dc88574233e1ad966b) 7 (Core)' --class centos --class gnu-linux --class gnu --class os --unrestricted $menuentry_id_option 'gnulinux-0-rescue-63f20fbd07a048dc88574233e1ad966b-advanced-abb47b92-d268-4d8c-a9df-43bf44522cab' {

#设置默认启动新内核,并重新生成grub2

grub2-set-default 0 或者 grub2-set-default 'CentOS Linux (4.18.9-1.el7.elrepo.x86_64) 7 (Core)'

grub2-mkconfig -o /boot/grub2/grub.cfg

#重启所有节点

reboot

#再次查看内核

uname -r

4.18.9-1.el7.elrepo.x86_64

二、所有节点安装docker——(k8s所有节点操作)

1、下载docker的yum源并安装docker

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

yum -y install docker-ce-19.03.9-3.el7

systemctl enable docker && systemctl start docker

docker --version

2、设置docker镜像仓库地址加速地址

mkdir -p /etc/docker

vim /etc/docker/daemon.json

{

"registry-mirrors": ["https://w2hvqdzg.mirror.aliyuncs.com"]

}

systemctl daemon-reload

systemctl restart docker

#到此所有节点初始化完成

三、部署etcd群集

1、为etcd准备自签证书——(k8smaser1节点操作)

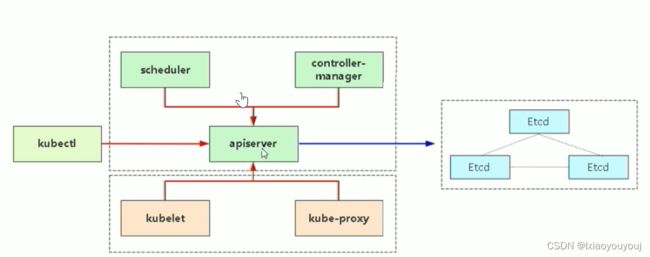

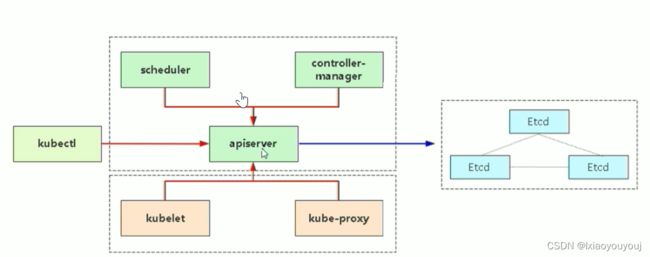

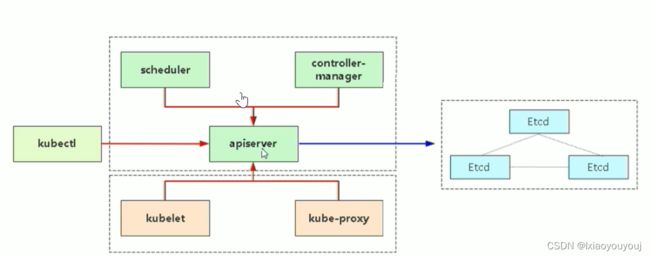

k8s 所有组件都是 采用https加密通信的,这些组件一般有两套根证书生成:k8s 组件(apiserver)和etcd。

红色线:k8s 自建证书颁发机构(CA),需要携带有它生成的客户端证书访问apiserver。

红色线:k8s 自建证书颁发机构(CA),需要携带有它生成的客户端证书访问apiserver。

蓝色线:etcd 自建证书颁发机构(CA),需要携带它颁发的客户端证书访问etcd。

准备 cfssl 证书生成工具

cfssl 是一个开源的证书管理工具, 使用 json 文件生成证书, 相比 openssl 更方便使用。

找任意一台服务器操作, 这里用 Master 节点。

#下载相关工具

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64

mv cfssl_linux-amd64 /usr/local/bin/cfssl

mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

mv cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo

生成 Etcd 证书

创建自签证书颁发机构(CA)

#创建证书相关工作目录

mkdir -p ~/TLS/{etcd,k8s}

cd ~/TLS/etcd

#创建ca自签机构的json配置文件

cat > ca-config.json<< EOF

{

"signing":{

"default":{

"expiry":"876000h"

},

"profiles":{

"www":{

"expiry":"87600h",

"usages":[

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

cat > ca-csr.json<< EOF

{

"CN":"etcd CA",

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"Beijing",

"ST":"Beijing"

}

]

}

EOF

#初始化生成ca证书

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

ls *.pem

ca-key.pem ca.pem

#使用自签 CA证书 签发 Etcd HTTPS 证书,创建https证书申请文件

{

"CN":"etcd",

"hosts":[

"192.168.10.101",

"192.168.10.102",

"192.168.10.103",

"192.168.10.104"

],

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"BeiJing",

"ST":"BeiJing"

}

]

}

注意:上述文件 hosts 字段中 IP 为所有 etcd 节点的集群内部通信 IP, 一个都不能少! 为了方便后期扩容可以多写几个预留的 IP。

#生成https证书

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server

#查看生成的https证书

ls server*pem

server-key.pem server.pem

2、安装部署etcd——(k8smaster1节点操做)

Etcd 是一个分布式键值存储系统, Kubernetes 使用 Etcd 进行数据存储; 为解决 Etcd 单点故障, 在生产中采用集群方式部署,避免 Etcd 单点故障, 这里使用 4 台组建集群, 可容忍 1 台机器故障, 如果使用 5 台组建集群, 可容忍 2 台机器故障。生产环境建议单独服务器部署这些etcd节点。

#下载etcd安装包

wget https://github.com/etcd-io/etcd/releases/download/v3.4.9/etcd-v3.4.9-linux-amd64.tar.gz

mkdir /opt/etcd/{bin,cfg,ssl} -p

tar zxvf etcd-v3.4.9-linux-amd64.tar.gz

mv etcd-v3.4.9-linux-amd64/{etcd,etcdctl} /opt/etcd/bin/

#把前面生成的证书拷贝到k8群集etcd证书文件存放路径

#是etcd的ca证书和https证书

cp ~/TLS/etcd/ca*pem ~/TLS/etcd/server*pem /opt/etcd/ssl/

#创建etcd配置文件

cat > /opt/etcd/cfg/etcd.conf << EOF

#[Member]

ETCD_NAME="etcd-1"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.10.101:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.10.101:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.10.101:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.10.101:2379"

ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.10.101:2380,etcd-2=https://192.168.10.102:2380,etcd-3=https://192.168.10.103:2380,etcd-4=https://192.168.10.104:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

EOF

ETCD_NAME: etcd群集节点名称, 集群中唯一

ETCD_DATA_DIR: etcd数据存放目录

ETCD_LISTEN_PEER_URLS: 集群通信监听地址

ETCD_LISTEN_CLIENT_URLS: 客户端访问监听地址,也就是当前机器的ip地址,

ETCD_INITIAL_ADVERTISE_PEER_URLS: 集群通告地址

ETCD_ADVERTISE_CLIENT_URLS: 客户端通告地址

ETCD_INITIAL_CLUSTER: 集群节点地址,需要将每个etcd节点的ip和端口都写上。

ETCD_INITIAL_CLUSTER_TOKEN: 集群 Token

ETCD_INITIAL_CLUSTER_STATE: 加入集群的当前状态, new 是新集群, existing 表示加入已有集群

把当前节点的/opt/etcd目录上传到其他etcd集群节点的/opt/目录下

scp -r /opt/etcd/ root@192.168.10.102:/opt/

scp -r /opt/etcd/ root@192.168.10.103:/opt/

scp -r /opt/etcd/ root@192.168.10.104:/opt/

3、在其他etcd群集节点修改etcd相关配置文件——(Etcd群集节点操作)

vim /opt/etcd/cfg/etcd.conf

#[Member]

ETCD_NAME="etcd-1" #修改为当前节点名,节点 2 改为 etcd-2,节点 3 改为 etcd-3,节点4 改为 etcd-4,以此类推

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.10.101:2380" #修改为当前节点的IP地址

ETCD_LISTEN_CLIENT_URLS="https://192.168.10.101:2379" #修改为当前节点的IP地址

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.10.101:2380" #修改为当前节点的IP地址

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.10.101:2379" #修改为当前节点的IP地址

ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.10.101:2380,etcd-2=https://192.168.10.102:2380,etcd-3=https://192.168.10.103:2380,etcd-4=https://192.168.10.104:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

#在所有etcd节点配置systemd系统命令控制etcd启停自启动等

cat > /usr/lib/systemd/system/etcd.service << EOF

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=/opt/etcd/cfg/etcd.conf

ExecStart=/opt/etcd/bin/etcd \

--cert-file=/opt/etcd/ssl/server.pem \

--key-file=/opt/etcd/ssl/server-key.pem \

--peer-cert-file=/opt/etcd/ssl/server.pem \

--peer-key-file=/opt/etcd/ssl/server-key.pem \

--trusted-ca-file=/opt/etcd/ssl/ca.pem \

--peer-trusted-ca-file=/opt/etcd/ssl/ca.pem \

--logger=zap

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

#在所有etcd群集节点启动并设置etcd开机启动

systemctl daemon-reload

systemctl start etcd

systemctl enable etcd

#查看集群状态

ETCDCTL_API=3 /opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.10.101:2379,https://192.168.10.102:2379,https://192.168.10.103:2379,https://192.168.10.104:2379" endpoint health --write-out=table

+-----------------------------+--------+-------------+-------+

| ENDPOINT | HEALTH | TOOK | ERROR |

+-----------------------------+--------+-------------+-------+

| https://192.168.10.102:2379 | true | 18.673674ms | |

| https://192.168.10.103:2379 | true | 17.055034ms | |

| https://192.168.10.101:2379 | true | 20.266814ms | |

| https://192.168.10.104:2379 | true | 21.71931ms | |

+-----------------------------+--------+-------------+-------+

#当显示如上所示,则代表启动正常了。

#如果输出上面信息, 就说明集群部署成功。 如果有问题第一步先看日志:/var/log/message 或 journalctl -u etcd,或者journalctl -u etcd -f 实时查看日志。

#到此etcd集群部署成功

四、部署master节点组件(这里先部署一个主节点、剩余主节点我们后面加入)

部署kube-apiserver——(k8smaster1节点操作)

1、创建生成apiserver相关证书

安装apiserver,需要做一个自签证书,从而可以让apiserver通过https访问node节点。下面我们先生成apiserver的自签证书

#创建自签证书颁发机构(ca)

cd /root/TLS/k8s

cat > ca-config.json<< EOF

{

"signing":{

"default":{

"expiry":"876000h"

},

"profiles":{

"kubernetes":{

"expiry":"87600h",

"usages":[

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

cat > ca-csr.json<< EOF

{

"CN":"kubernetes",

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"Beijing",

"ST":"Beijing",

"O":"k8s",

"OU":"System"

}

]

}

EOF

#生成ca证书

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

ls *pem

ca-key.pem ca.pem

**使用自签CA证书签发 kube-apiserver HTTPS 证书**

#创建https证书申请文件

为了方便我们后面将单master扩展为多master集群,我们在hosts 数组中添加几个IP作为备用如下所示多余的就是备用IP地址,其中包含以后扩展的k8smaster、k8snode节点和群集漂移VIP地址

注意:记得不要忘记里面的node节点IP地址,不然无法和node节点进行通讯

cat > server-csr.json<< EOF

{

"CN":"kubernetes",

"hosts":[

"10.0.0.1", #集群IP

"127.0.0.1", #自己本身地址

"192.168.10.101",

"192.168.10.102",

"192.168.10.103",

"192.168.10.104",

"192.168.10.105",

"192.168.10.106",

"192.168.10.107",

"192.168.10.244", #集群虚拟漂移VIP地址

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"BeiJing",

"ST":"BeiJing",

"O":"k8s",

"OU":"System"

}

]

}

EOF

注意:创建https证书文件中的hosts地址框中除了集群IP、127.0.0.1、集群中其他节点IP、还要包含集群虚拟漂移VIP地址

如果没有集群虚拟漂移VIP地址的话后面在添加其他k8smaster节点和k8snode节点时,因为会修改某些配置文件中向apiserver通讯的https

地址为集群虚拟漂移VIP地址,如果没集群虚拟漂移VIP地址会发生各个组件无法和apiserver进行通讯导致集群一直处在NotReady状态

#生成证书

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

#查看生产的证书

ls server*pem

server-key.pem server.pem

2、下载k8s群集安装包

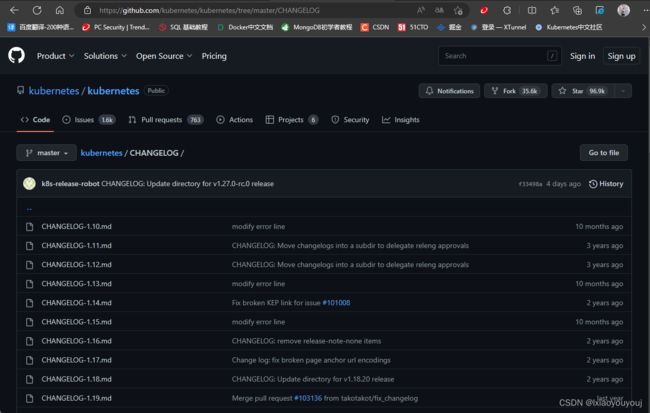

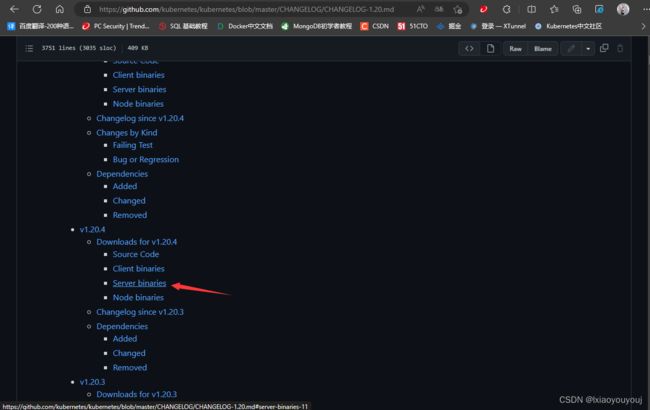

访问https://github.com/kubernetes/kubernetes/tree/master/CHANGELOG可以查询k8s 的各个版本

我们今天主要以K8s-v1.20.4版本为例演示

我们今天主要以K8s-v1.20.4版本为例演示

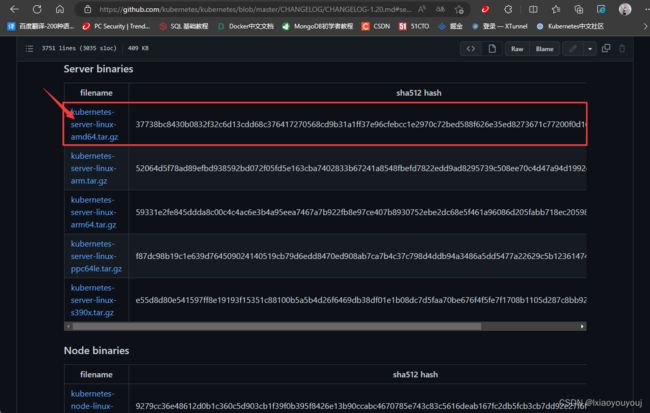

#下面的类型根据自己系统类型选择

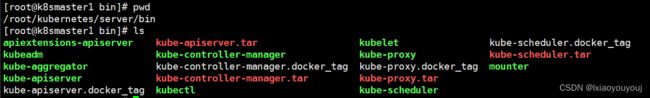

3、上传并解压安装包——k8smaser1节点操作

#然后将下载的kubernetes-server-linux-amd64.tar.gz 包上传到k8smaster1节点中

#解压安装包

tar -zxvf kubernetes-server-linux-amd64.tar.gz

#解压包的所有文件都在/server/bin 路径下

cd kubernetes/server/bin

部署master主要需要这些文件,kube-apiserver,kube-controller-manager,kubectl,kube-scheduler。

部署master主要需要这些文件,kube-apiserver,kube-controller-manager,kubectl,kube-scheduler。

4、创建k8smaster1节点安装文件夹

#创建k8smaster节点安装的相关文件夹

mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}

#将k8smaster1节点的相关执行文件拷贝到对应目录

cp kube-apiserver kube-scheduler kube-controller-manager /opt/kubernetes/bin

cp kubectl /usr/bin/

**安装kube-apiserver**

#拷贝前面生成apiserver认证的证书

cp ~/TLS/k8s/ca*pem ~/TLS/k8s/server*pem /opt/kubernetes/ssl/

#创建apiserver配置文件

cat > /opt/kubernetes/cfg/kube-apiserver.conf << EOF

KUBE_APISERVER_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/opt/kubernetes/logs \\

--etcd-servers=https://192.168.10.101:2379,https://192.168.10.102:2379,https://192.168.10.103:2379,https://192.168.10.104:2379 \\

--bind-address=192.168.10.101 \\

--secure-port=6443 \\

--advertise-address=192.168.10.101 \\

--allow-privileged=true \\

--service-cluster-ip-range=10.0.0.0/24 \\

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \\

--authorization-mode=RBAC,Node \\

--enable-bootstrap-token-auth=true \\

--token-auth-file=/opt/kubernetes/cfg/token.csv \\

--service-node-port-range=20000-32767 \\

--kubelet-client-certificate=/opt/kubernetes/ssl/server.pem \\

--kubelet-client-key=/opt/kubernetes/ssl/server-key.pem \\

--tls-cert-file=/opt/kubernetes/ssl/server.pem \\

--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \\

--client-ca-file=/opt/kubernetes/ssl/ca.pem \\

--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \\

--service-account-issuer=api \\

--service-account-signing-key-file=/opt/kubernetes/ssl/server-key.pem \\

--etcd-cafile=/opt/etcd/ssl/ca.pem \\

--etcd-certfile=/opt/etcd/ssl/server.pem \\

--etcd-keyfile=/opt/etcd/ssl/server-key.pem \\

--requestheader-client-ca-file=/opt/kubernetes/ssl/ca.pem \\

--proxy-client-cert-file=/opt/kubernetes/ssl/server.pem \\

--proxy-client-key-file=/opt/kubernetes/ssl/server-key.pem \\

--requestheader-allowed-names=kubernetes \\

--requestheader-extra-headers-prefix=X-Remote-Extra- \\

--requestheader-group-headers=X-Remote-Group \\

--requestheader-username-headers=X-Remote-User \\

--enable-aggregator-routing=true \\

--audit-log-maxage=30 \\

--audit-log-maxbackup=3 \\

--audit-log-maxsize=100 \\

--audit-log-path=/opt/kubernetes/logs/k8s-audit.log"

EOF

注: 上面两个\ 第一个是转义符, 第二个是换行符, 使用转义符是为了使用 EOF 保留换行符。

• --logtostderr:启用日志

• —v:日志等级

• --log-dir:日志目录

• --etcd-servers:etcd集群地址

• --bind-address:监听地址

• --secure-port:https安全端口

• --advertise-address:集群通告地址

• --allow-privileged:启用授权

• --service-cluster-ip-range:Service虚拟IP地址段

• --enable-admission-plugins:准入控制模块

• --authorization-mode:认证授权,启用RBAC授权和节点自管理

• --enable-bootstrap-token-auth: **启用TLS bootstrap机制下面会配置生成token验证文件**

• --token-auth-file:bootstrap token文件

• --service-node-port-range:Service nodeport类型默认分配端口范围

• --kubelet-client-xxx:apiserver访问kubelet客户端证书

• --tls-xxx-file:apiserver https证书

• 1.20版本必须加的参数:–service-account-issuer,–service-account-signing-key-file

• --etcd-xxxfile:连接Etcd集群证书

• --audit-log-xxx:审计日志

启动聚合层相关配置:

• –requestheader-client-ca-file

• –proxy-client-cert-file

• –proxy-client-key-file

• –requestheader-allowed-names

• –requestheader-extra-headers-prefix

• –requestheader-group-headers

• –requestheader-username-headers

• –enable-aggregator-routin

启用 TLS Bootstrapping 机制

TLS Bootstraping: Master apiserver 启用 TLS 认证后, Node 节点 kubelet 和 kubeproxy 要与 kube-apiserver 进行通信, 必须使用 CA 签发的有效证书才可以, 当 Node节点很多时, 这种客户端证书颁发需要大量工作, 同样也会增加集群扩展复杂度。 为了简化流程, Kubernetes 引入了 TLS bootstraping 机制来自动颁发客户端证书, kubelet会以一个低权限用户自动向 apiserver 申请证书, kubelet 的证书由 apiserver 动态签署。

所以强烈建议在 Node 上使用这种方式, 目前主要用于 kubelet, kube-proxy 还是由我们统一颁发一个证书。

TLS bootstraping 工作流程:

5、创建token文件

#创建上述配置文件中 token 文件

cat > /opt/kubernetes/cfg/token.csv << EOF

fb69c54a1cbcd419b668cf8418c3b8cd,kubelet-bootstrap,10001,"system:node-bootstrapper"

EOF

格式: token, 用户名, UID, 用户组

#token 也可自行生成替换

head -c 16 /dev/urandom | od -An -t x | tr -d ' '

6、配置systemd 管理 apiserver

cat > /usr/lib/systemd/system/kube-apiserver.service << EOF

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-apiserver.conf

ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

#kube-apiserver启动并设置开机启动

systemctl daemon-reload

systemctl start kube-apiserver

systemctl enable kube-apiserver

到此apiserver安装完成。接下来我们来部署scheduler和controller-manager

通过上图,我们可以了解到,scheduler和controller-manager 也是借助https链接apiserver的的6443 端口,scheduler和controller-manager 链接apiserver的时候,需要有一个身份:kubeconfig。也就是说scheduler和controller-manager 是通过kubeconfig 文件来链接apiserver的。同样的,kubelet和kube-proxy也是通过kubeconfig来链接apiserver的。

备注:kubeconfig是链接k8s 集群的一个配置文件,需要我们手动生成的。

我们先配置scheduler和controller-manager程序

然后在配置kubeconfig文件。

五、部署kube-controller-manager——(k8smaser1节点操作)

1、创建kube-controller-manager 的配置文件

cat > /opt/kubernetes/cfg/kube-controller-manager.conf << EOF

KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/opt/kubernetes/logs \\

--leader-elect=true \\

--kubeconfig=/opt/kubernetes/cfg/kube-controller-manager.kubeconfig \\

--bind-address=127.0.0.1 \\

--allocate-node-cidrs=true \\

--cluster-cidr=10.244.0.0/16 \\

--service-cluster-ip-range=10.0.0.0/24 \\

--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \\

--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \\

--root-ca-file=/opt/kubernetes/ssl/ca.pem \\

--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \\

--experimental-cluster-signing-duration=876000h0m0s"

EOF

– kubeconfi:链接apiserver的配置文件

– leader-elect: 当该组件启动多个时, 自动选举( HA)

– cluster-signing-cert-file

– cluster-signing-key-file: 自动为 kubelet 颁发证书的 CA, 与 apiserver 保持一致

2、生成kube-controller-manager.kubeconfig文件

cd /root/TLS/k8s/

#创建证书请求文件

cat > kube-controller-manager-csr.json << EOF

{

"CN": "system:kube-controller-manager",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

#生成证书

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

ls kube-controller-manager*

-rw-r--r-- 1 root root 1045 3月 27 13:12 kube-controller-manager.csr

-rw-r--r-- 1 root root 255 3月 27 13:11 kube-controller-manager-csr.json

-rw------- 1 root root 1679 3月 27 13:12 kube-controller-manager-key.pem

-rw-r--r-- 1 root root 1440 3月 27 13:12 kube-controller-manager.pem

生成kubeconfig 文件

#切换到刚才生成kube-controller-manager证书地方

cd /root/TLS/k8s/

#设置环境变量KUBE_CONFIG

KUBE_CONFIG="/opt/kubernetes/cfg/kube-controller-manager.kubeconfig"

#设置环境变量KUBE_APISERVER

KUBE_APISERVER="https://192.168.10.101:6443" # apiserver IP:PORT

kubectl config set-cluster kubernetes \

--certificate-authority=/opt/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=${KUBE_CONFIG}

kubectl config set-credentials kube-controller-manager \

--client-certificate=./kube-controller-manager.pem \

--client-key=./kube-controller-manager-key.pem \

--embed-certs=true \

--kubeconfig=${KUBE_CONFIG}

kubectl config set-context default \

--cluster=kubernetes \

--user=kube-controller-manager \

--kubeconfig=${KUBE_CONFIG}

kubectl config use-context default --kubeconfig=${KUBE_CONFIG}

#通过命令查看kube-controller-manager.kubeconfig是否生成成功

ll /opt/kubernetes/cfg/kube-controller-manager.kubeconfig

-rw------- 1 root root 6348 3月 27 13:15 /opt/kubernetes/cfg/kube-controller-manager.kubeconfig

3、配置systemd 管理 controller-manager

cat > /usr/lib/systemd/system/kube-controller-manager.service << EOF

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-controller-manager.conf

ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

#启动controller-manager,并设置开机自启动

systemctl daemon-reload

systemctl start kube-controller-manager

systemctl enable kube-controller-manager

systemctl status kube-controller-manager

六、部署kube-scheduler——(k8smaser1节点操作)

1、创建kube-scheduler配置文件

cat > /opt/kubernetes/cfg/kube-scheduler.conf << EOF

KUBE_SCHEDULER_OPTS="--logtostderr=false \

--v=2 \

--log-dir=/opt/kubernetes/logs \

--leader-elect \

--kubeconfig=/opt/kubernetes/cfg/kube-scheduler.kubeconfig \\

--bind-address=127.0.0.1"

EOF

– leader-elect: 当该组件启动多个时, 自动选举( HA)

– kubeconfi:链接apiserver的配置文件

2、生成kube-scheduler.kubeconfig文件

#切换目录

cd /root/TLS/k8s/

#创建证书请求文件

cat > kube-scheduler-csr.json << EOF

{

"CN": "system:kube-scheduler",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

#生成证书

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

ll kube-scheduler*

-rw-r--r-- 1 root root 1029 3月 27 13:19 kube-scheduler.csr

-rw-r--r-- 1 root root 245 3月 27 13:17 kube-scheduler-csr.json

-rw------- 1 root root 1675 3月 27 13:19 kube-scheduler-key.pem

-rw-r--r-- 1 root root 1424 3月 27 13:19 kube-scheduler.pem

**创建生成kubeconfig 文件**

#切换到刚才生成kube-scheduler 证书地方

cd /root/TLS/k8s/

#设置环境变量KUBE_CONFIG

KUBE_CONFIG="/opt/kubernetes/cfg/kube-scheduler.kubeconfig"

#配置环境变量KUBE_APISERVER

KUBE_APISERVER="https://192.168.10.101:6443" # apiserver IP:PORT

#生成kubeconfig文件

kubectl config set-cluster kubernetes \

--certificate-authority=/opt/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=${KUBE_CONFIG}

kubectl config set-credentials kube-scheduler \

--client-certificate=./kube-scheduler.pem \

--client-key=./kube-scheduler-key.pem \

--embed-certs=true \

--kubeconfig=${KUBE_CONFIG}

kubectl config set-context default \

--cluster=kubernetes \

--user=kube-scheduler \

--kubeconfig=${KUBE_CONFIG}

kubectl config use-context default --kubeconfig=${KUBE_CONFIG}

ll /opt/kubernetes/cfg/kube-scheduler.kubeconfig

-rw------- 1 root root 6306 3月 27 13:20 /opt/kubernetes/cfg/kube-scheduler.kubeconfig

3、配置systemd管理scheduler

cat > /usr/lib/systemd/system/kube-scheduler.service << EOF

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-scheduler.conf

ExecStart=/opt/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

#kube-scheduler启动并设置开机启动

systemctl daemon-reload

systemctl start kube-scheduler

systemctl enable kube-scheduler

systemctl status kube-scheduler

#查看集群状态

**所有组件都已经启动成功, 通过 kubectl 工具查看当前集群组件状态:**

**如下输出说明 Master 节点组件运行正常。**

kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-1 Healthy {"health":"true"}

etcd-3 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}

如果执行kubectl get cs出现下面报错就说明我们没有配置kubectl的kubeconfig的证书

The connection to the server localhost:8080 was refused - did you specify the right host or port?

七、生成kubectl访问apiserver证书——(k8smaser1节点操作)

注意:通过kubectl 来访问apiserver时,也是通过https的。我们之前为etcd和apiserver自签证书的时候解释过,上图中红色线部分都是通过https的方式,需要有k8s CA机构生成的证书,各个节点的(scheduler,controller-manager、kubelet、kube-proxy)需要携带有它生成的客户端证书(kubeconfig)访问apiserver。

注意:通过kubectl 来访问apiserver时,也是通过https的。我们之前为etcd和apiserver自签证书的时候解释过,上图中红色线部分都是通过https的方式,需要有k8s CA机构生成的证书,各个节点的(scheduler,controller-manager、kubelet、kube-proxy)需要携带有它生成的客户端证书(kubeconfig)访问apiserver。

kubectl 访问apiserver也是同样的原理,需要也要带着客户端证书(kubeconfig)

为此我们需要为kubectl 单独分配一下kubeconfig。

为kubectl 分配kubeconfig的方式也是类似的。

1、生成kubectl访问apiserver证书

**注意cn字段是admin,并且将其绑定到system:master 组下面。这样就具备了链接k8s 集群管理员权限。**

#切换目录

cd /root/TLS/k8s/

#创建证书请求文件

cat > admin-csr.json<< EOF

{

"CN":"admin",

"hosts":[],

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"Beijing",

"ST":"Beijing",

"O":"system:masters",

"OU":"System"

}

]

}

EOF

#生成证书

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

ll admin*

-rw-r--r-- 1 root root 1009 3月 27 14:25 admin.csr

-rw-r--r-- 1 root root 276 3月 27 14:25 admin-csr.json

-rw------- 1 root root 1675 3月 27 14:25 admin-key.pem

-rw-r--r-- 1 root root 1403 3月 27 14:25 admin.pem

2、生成kubeconfig 文件

#创建目录:因为kubectl的默认配置文件为/user/.kube/路径下的config文件

mkdir /root/.kube

#切换到刚才生成kube-scheduler 证书地方

cd /root/TLS/k8s/

#设置环境变量KUBE_CONFIG**

KUBE_CONFIG="/root/.kube/config"

#配置环境变量KUBE_APISERVER

KUBE_APISERVER="https://192.168.10.101:6443" # apiserver IP:PORT

kubectl config set-cluster kubernetes \

--certificate-authority=/opt/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=${KUBE_CONFIG}

kubectl config set-credentials cluster-admin \

--client-certificate=./admin.pem \

--client-key=./admin-key.pem \

--embed-certs=true \

--kubeconfig=${KUBE_CONFIG}

kubectl config set-context default \

--cluster=kubernetes \

--user=cluster-admin \

--kubeconfig=${KUBE_CONFIG}

kubectl config use-context default --kubeconfig=${KUBE_CONFIG}

ll /root/.kube/config

-rw------- 1 root root 6276 3月 27 14:28 /root/.kube/config

#然后在执行kubectl get cs

kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-1 Healthy {"health":"true"}

etcd-3 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}

#出现这些就说明没问题了

k8s集群——k8snode节点部署相关组件(kubelet,kube-proxy)

一、部署kubelet

1、在所有k8snode节点上创建k8s文件夹

mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}

2、把kubelet、kube-proxy及相关软件包复制到所有k8snode节点——(k8smaster1节点操作)

cd kubernetes/server/bin/

#复制 kube-proxy

scp kube-proxy root@192.168.10.103:/opt/kubernetes/bin/

scp kube-proxy root@192.168.10.104:/opt/kubernetes/bin/

#复制kubelet

scp kubelet root@192.168.10.103:/opt/kubernetes/bin/

scp kubelet root@192.168.10.104:/opt/kubernetes/bin/

#复制kubectl

scp kubectl root@192.168.10.103:/usr/bin/

scp kubectl root@192.168.10.104:/usr/bin/

#把生成的kubectl.kubeconfig文件拷贝到k8snode节点

cd /root/

scp -r .kube/ root@192.168.10.103:/root/

scp -r .kube/ root@192.168.10.104:/root/

#这样k8snode节点也可以使用kubectl命令了

3、创建kubelet.conf 文件——(所有k8snode节点操作)

#其中–hostname-override 改成对用node节点的主机名称,我们这里是k8snode1和k8snode2

cat > /opt/kubernetes/cfg/kubelet.conf << EOF

KUBELET_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/opt/kubernetes/logs \\

--hostname-override=k8snode1 \\

--network-plugin=cni \\

--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \\

--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \\

--config=/opt/kubernetes/cfg/kubelet-config.yml \\

--cert-dir=/opt/kubernetes/ssl \\

--pod-infra-container-image=lizhenliang/pause-amd64:3.0"

EOF

– hostname-override: 显示名称, 集群中唯一

– network-plugin: 启用 CNI

– kubeconfig: 空路径, 会自动生成, 后面用于连接 apiserver

– bootstrap-kubeconfig: 首次启动向 apiserver 申请证书

– config: 配置参数文件

– cert-dir: kubelet 证书生成目录

– pod-infra-container-image: 管理 Pod 网络容器的镜像

4、创建 kubelet 参数配置文件——(所有k8snode节点操作)

cat > /opt/kubernetes/cfg/kubelet-config.yml << EOF

kind: KubeletConfiguration

apiVersion: kubelet.config.k8s.io/v1beta1

address: 0.0.0.0

port: 10250

readOnlyPort: 10255

cgroupDriver: cgroupfs

clusterDNS:

- 10.0.0.2

clusterDomain: cluster.local

failSwapOn: false

authentication:

anonymous:

enabled: false

webhook:

cacheTTL: 2m0s

enabled: true

x509:

clientCAFile: /opt/kubernetes/ssl/ca.pem

authorization:

mode: Webhook

webhook:

cacheAuthorizedTTL: 5m0s

cacheUnauthorizedTTL: 30s

evictionHard:

imagefs.available: 15%

memory.available: 100Mi

nodefs.available: 10%

nodefs.inodesFree: 5%

maxOpenFiles: 1000000

maxPods: 110

EOF

5、所有k8snode节点生成bootstrap.kubeconfig 客户端请求文件——(k8smaser1节点操作)

#将k8smaster1节点/opt/kubernetes/ssl/所有文件复制到所有k8snode节点

cd /opt/kubernetes/ssl

scp ca* server* root@192.168.10.103:/opt/kubernetes/ssl/

scp ca* server* root@192.168.10.104:/opt/kubernetes/ssl/

6、生成 kubelet的bootstrap.kubeconfig 配置文件,并复制到各个k8snode节点——(k8smaster1节点操作)

#配置环境变量KUBE_APISERVER

KUBE_APISERVER="https://192.168.10.101:6443" # apiserver IP:PORT

#配置环境变量token

TOKEN="fb69c54a1cbcd419b668cf8418c3b8cd" #与之前配置生成的 token.csv 里保持一致

#设置环境变量KUBE_CONFIG

KUBE_CONFIG="/opt/kubernetes/cfg/bootstrap.kubeconfig"

#生成bootstrap.kubeconfig文件

kubectl config set-cluster kubernetes \

--certificate-authority=/opt/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=${KUBE_CONFIG}

kubectl config set-credentials "kubelet-bootstrap" \

--token=${TOKEN} \

--kubeconfig=${KUBE_CONFIG}

kubectl config set-context default \

--cluster=kubernetes \

--user="kubelet-bootstrap" \

--kubeconfig=${KUBE_CONFIG}

kubectl config use-context default --kubeconfig=${KUBE_CONFIG}

cd /opt/kubernetes/cfg

ll bootstrap.kubeconfig

-rw------- 1 root root 2168 3月 27 15:25 bootstrap.kubeconfig

#复制bootstrap.kubeconfig到所有k8snode节点

scp bootstrap.kubeconfig root@192.168.10.103:/opt/kubernetes/cfg/

scp bootstrap.kubeconfig root@192.168.10.104:/opt/kubernetes/cfg/

所有k8snode节点操作*********

7、配置systemd管理kubelet,启动并设置开机自启动——(所有k8snode节点操作)

cat > /usr/lib/systemd/system/kubelet.service << EOF

[Unit]

Description=Kubernetes Kubelet

After=docker.service

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kubelet.conf

ExecStart=/opt/kubernetes/bin/kubelet \$KUBELET_OPTS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl start kubelet

systemctl enable kubelet

systemctl status kubelet

8、授权 kubelet-bootstrap 用户允许请求证书——(k8smaser1节点操作)

这一步主要是为后面添加k8snode节点做准备,让我们之前创建的token 文件中的用户,并绑定到角色node-bootstrapper,从而可以让k8snode 以token 文件中的用户带着node-bootstrapper角色最小权限的访问apiserver。

kubectl create clusterrolebinding kubelet-bootstrap \

--clusterrole=system:node-bootstrapper \

--user=kubelet-bootstrap

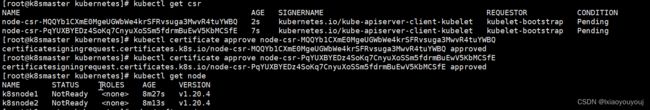

批准 kubelet 的证书申请并加入集群——(在k8smaster1节点上执行)

批准申请命令示例

kubectl certificate approve node-csr-uCEGPOIiDdlLODKts8J658HrFq9CZ–K6M4G7bjhk8A

查询集群状态——(k8smaser1节点操作)

由于网络插件还没有部署, 节点会没有准备就绪 NotReady

kubectl get node

NAME STATUS ROLES AGE VERSION

k8snode1 NotReady 8m27s v1.20.4

k8snode2 NotReady 8m13s v1.20.4

#而且这里也没有显示k8smaster节点的状态,想要探测到集群中master节点状态也需要在master节点部署kubelet、kube-proxy

##后面会有详细操作说明

二、部署kube-proxy——(所有k8snode节点操作)

1、创建kube-proxy配置文件

cat > /opt/kubernetes/cfg/kube-proxy.conf << EOF

KUBE_PROXY_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/opt/kubernetes/logs \\

--config=/opt/kubernetes/cfg/kube-proxy-config.yml"

EOF

2、配置参数配置文件

cat > /opt/kubernetes/cfg/kube-proxy-config.yml << EOF

kind: KubeProxyConfiguration

apiVersion: kubeproxy.config.k8s.io/v1alpha1

bindAddress: 0.0.0.0

metricsBindAddress: 0.0.0.0:10249

clientConnection:

kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig

hostnameOverride: k8snode1

clusterCIDR: 10.0.0.0/24

EOF

#注意:kubeconfig前面有两个空格。

#hostnameOverride 为当前主机名称。node1 节点为k8snode1,node2节点为k8snode2

3、生成 kube-proxy.kubeconfig 文件——(k8smaser1节点操作)

在k8smaster1 节点上生成 kube-proxy 证书

#切换目录

cd /root/TLS/k8s

#创建证书请求文件

cat > kube-proxy-csr.json<< EOF

{

"CN":"system:kube-proxy",

"hosts":[

],

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"BeiJing",

"ST":"BeiJing",

"O":"k8s",

"OU":"System"

}

]

}

EOF

#生成证书

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

ls kube-proxy*pem

kube-proxy-key.pem kube-proxy.pem

生成 kubeconfig 文件

KUBE_APISERVER="https://192.168.10.101:6443"

KUBE_CONFIG="/opt/kubernetes/cfg/kube-proxy.kubeconfig"

kubectl config set-cluster kubernetes \

--certificate-authority=/opt/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=${KUBE_CONFIG}

kubectl config set-credentials kube-proxy \

--client-certificate=./kube-proxy.pem \

--client-key=./kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=${KUBE_CONFIG}

kubectl config set-context default \

--cluster=kubernetes \

--user=kube-proxy \

--kubeconfig=${KUBE_CONFIG}

kubectl config use-context default --kubeconfig=${KUBE_CONFIG}

ll /opt/kubernetes/cfg/kube-proxy.kubeconfig

-rw------- 1 root root 6274 3月 27 15:36 /opt/kubernetes/cfg/kube-proxy.kubeconfig

将kube-proxy.kubeconfig拷贝到所有k8snode节点指定路径——(k8smaster1节点操作)

scp /opt/kubernetes/cfg/kube-proxy.kubeconfig root@192.168.10.103:/opt/kubernetes/cfg/

scp /opt/kubernetes/cfg/kube-proxy.kubeconfig root@192.168.10.104:/opt/kubernetes/cfg/

4、配置systemd管理kube-proxy——(所有k8snode节点操作)

cat > /usr/lib/systemd/system/kube-proxy.service << EOF

[Unit]

Description=Kubernetes Proxy

After=network.target

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-proxy.conf

ExecStart=/opt/kubernetes/bin/kube-proxy \$KUBE_PROXY_OPTS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

#启动并设置开机启动

systemctl daemon-reload

systemctl start kube-proxy

systemctl status kube-proxy

systemctl enable kube-proxy

k8s群集——部署集群网络calico

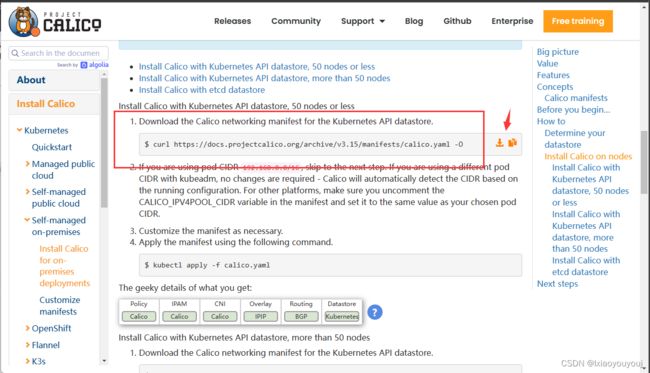

一、部署calico网路组件——(k8smaser1节点操作)

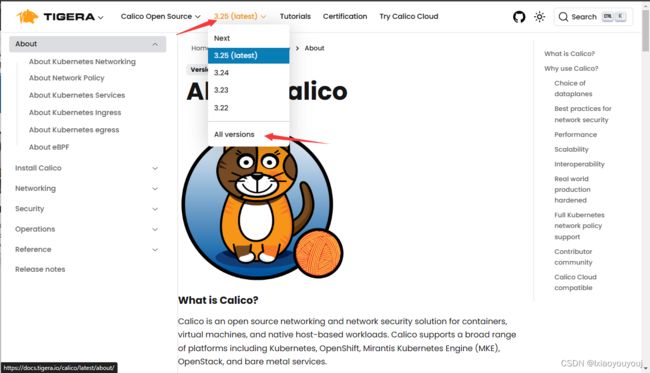

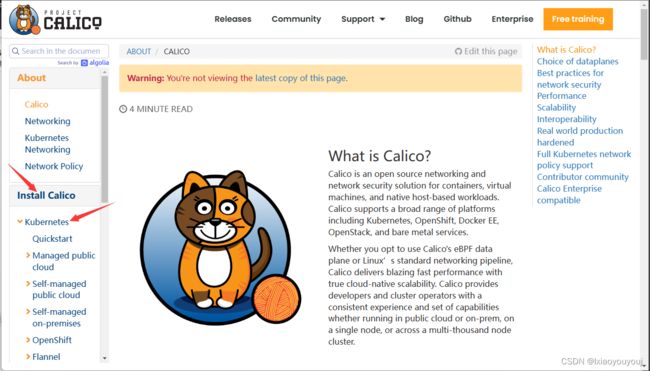

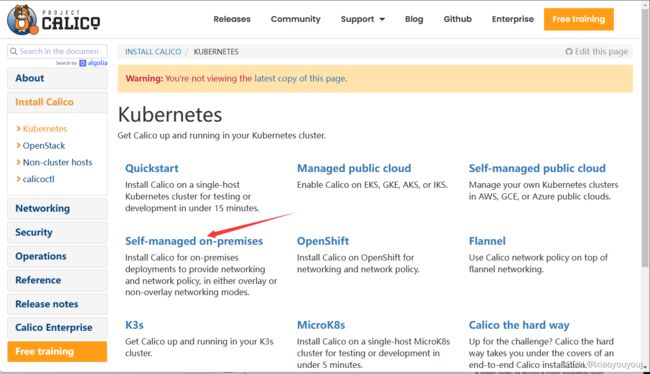

访问calico官网https://docs.tigera.io/

这里以calico3.15为例

这里以calico3.15为例

1、下载安装calico网络组件的安装文件

curl https://docs.projectcalico.org/archive/v3.15/manifests/calico.yaml -O

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 176k 100 176k 0 0 187k 0 --:--:-- --:--:-- --:--:-- 187k

ll calico.yaml

-rw-r--r-- 1 root root 181004 3月 28 16:17 calico.yaml

kubectl apply -f calico.yaml

kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-97769f7c7-lmv6k 1/1 Running 1 23h

calico-node-dqh44 1/1 Running 2 23h

calico-node-gnfz7 1/1 Running 1 23h

kubectl get node

NAME STATUS ROLES AGE VERSION

k8snode1 Ready 23h v1.20.4

k8snode2 Ready 23h v1.20.4

#可以看到在安装完集群网络后工作节点状态就变为了ready状态了

k8s群集——部署coredns

1、k8smaster1节点操作创建coredns.yaml文件——(k8smaser1节点操作)

vim coredns.yaml

#Warning: This is a file generated from the base underscore template file: coredns.yaml.base

apiVersion: v1

kind: ServiceAccount

metadata:

name: coredns

namespace: kube-system

labels:

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

kubernetes.io/bootstrapping: rbac-defaults

addonmanager.kubernetes.io/mode: Reconcile

name: system:coredns

rules:

- apiGroups:

- ""

resources:

- endpoints

- services

- pods

- namespaces

verbs:

- list

- watch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

annotations:

rbac.authorization.kubernetes.io/autoupdate: "true"

labels:

kubernetes.io/bootstrapping: rbac-defaults

addonmanager.kubernetes.io/mode: EnsureExists

name: system:coredns

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:coredns

subjects:

- kind: ServiceAccount

name: coredns

namespace: kube-system

---

apiVersion: v1

kind: ConfigMap

metadata:

name: coredns

namespace: kube-system

labels:

addonmanager.kubernetes.io/mode: EnsureExists

data:

Corefile: |

.:53 {

errors

health

kubernetes cluster.local in-addr.arpa ip6.arpa {

pods insecure

upstream

fallthrough in-addr.arpa ip6.arpa

}

prometheus :9153

proxy . /etc/resolv.conf

cache 30

loop

reload

loadbalance

}

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: coredns

namespace: kube-system

labels:

k8s-app: kube-dns

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

kubernetes.io/name: "CoreDNS"

spec:

# replicas: not specified here:

# 1. In order to make Addon Manager do not reconcile this replicas parameter.

# 2. Default is 1.

# 3. Will be tuned in real time if DNS horizontal auto-scaling is turned on.

strategy:

type: RollingUpdate

rollingUpdate:

maxUnavailable: 1

selector:

matchLabels:

k8s-app: kube-dns

template:

metadata:

labels:

k8s-app: kube-dns

annotations:

seccomp.security.alpha.kubernetes.io/pod: 'docker/default'

spec:

serviceAccountName: coredns

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

- key: "CriticalAddonsOnly"

operator: "Exists"

containers:

- name: coredns

image: coredns/coredns:1.2.2

imagePullPolicy: IfNotPresent

resources:

limits:

memory: 170Mi

requests:

cpu: 100m

memory: 70Mi

args: [ "-conf", "/etc/coredns/Corefile" ]

volumeMounts:

- name: config-volume

mountPath: /etc/coredns

readOnly: true

ports:

- containerPort: 53

name: dns

protocol: UDP

- containerPort: 53

name: dns-tcp

protocol: TCP

- containerPort: 9153

name: metrics

protocol: TCP

livenessProbe:

httpGet:

path: /health

port: 8080

scheme: HTTP

initialDelaySeconds: 60

timeoutSeconds: 5

successThreshold: 1

failureThreshold: 5

securityContext:

allowPrivilegeEscalation: false

capabilities:

add:

- NET_BIND_SERVICE

drop:

- all

readOnlyRootFilesystem: true

dnsPolicy: Default

volumes:

- name: config-volume

configMap:

name: coredns

items:

- key: Corefile

path: Corefile

---

apiVersion: v1

kind: Service

metadata:

name: kube-dns

namespace: kube-system

annotations:

prometheus.io/port: "9153"

prometheus.io/scrape: "true"

labels:

k8s-app: kube-dns

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

kubernetes.io/name: "CoreDNS"

spec:

selector:

k8s-app: kube-dns

clusterIP: 10.0.0.2

ports:

- name: dns

port: 53

protocol: UDP

- name: dns-tcp

port: 53

protocol: TCP

kubectl apply -f coredns.yaml

serviceaccount/coredns configured

clusterrole.rbac.authorization.k8s.io/system:coredns configured

clusterrolebinding.rbac.authorization.k8s.io/system:coredns configured

configmap/coredns configured

deployment.apps/coredns created

service/kube-dns configured

kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-97769f7c7-lmv6k 1/1 Running 1 46h

calico-node-dqh44 1/1 Running 2 46h

calico-node-gnfz7 1/1 Running 1 46h

coredns-6d8f96d957-mjkdf 1/1 Running 0 27s

2、如果执行以下命令出错——(k8smaser1节点操作)

kubectl logs coredns-6d8f96d957-mjkdf -n kube-system

#出现如下错误

Error from server (Forbidden): Forbidden (user=kubernetes, verb=get, resource=nodes, subresource=proxy) ( pods/log coredns-6b9bb479b9-79qt8)

说明apiserver没有权限访问kubelet 所以要创建apiserver访问权限

3、创建授权配置文件授权 apiserver 访问 kubelet所有k8smaster节点都需要操作——(这里先在k8smaster1节点操做)

#编辑授权apiserver访问kubelet的yaml文件

vim apiserver-to-kubelet-rbac.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

annotations:

rbac.authorization.kubernetes.io/autoupdate: "true"

labels:

kubernetes.io/bootstrapping: rbac-defaults

name: system:kube-apiserver-to-kubelet

rules:

- apiGroups:

- ""

resources:

- nodes/proxy

- nodes/stats

- nodes/log

- nodes/spec

- nodes/metrics

- pods/log

verbs:

- "*"

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: system:kube-apiserver

namespace: ""

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:kube-apiserver-to-kubelet

subjects:

- apiGroup: rbac.authorization.k8s.io

kind: User

name: kubernetes

#执行授权apiserver访问kubelet的yaml文件

kubectl apply -f apiserver-to-kubelet-rbac.yaml

kubectl logs coredns-6d8f96d957-mjkdf -n kube-system

.:53

2023/04/11 06:11:18 [INFO] CoreDNS-1.2.2

2023/04/11 06:11:18 [INFO] linux/amd64, go1.11, eb51e8b

CoreDNS-1.2.2

linux/amd64, go1.11, eb51e8b

2023/04/11 06:11:18 [INFO] plugin/reload: Running configuration MD5 = 18863a4483c30117a60ae2332bab9448

W0411 06:24:20.801128 1 reflector.go:341] github.com/coredns/coredns/plugin/kubernetes/controller.go:350: watch of *v1.Endpoints ended with: too old resource version: 730901 (731192)

W0411 06:24:26.130903 1 reflector.go:341] github.com/coredns/coredns/plugin/kubernetes/controller.go:355: watch of *v1.Namespace ended with: too old resource version: 729252 (731192)

W0411 06:29:47.732366 1 reflector.go:341] github.com/coredns/coredns/plugin/kubernetes/controller.go:348: watch of *v1.Service ended with: too old resource version: 729252 (731192)

W0411 06:43:26.757851 1 reflector.go:341] github.com/coredns/coredns/plugin/kubernetes/controller.go:348: watch of *v1.Service ended with: too old resource version: 731192 (733682)

W0412 07:24:20.172632 1 reflector.go:341] github.com/coredns/coredns/plugin/kubernetes/controller.go:350: watch of *v1.Endpoints ended with: too old resource version: 1008607 (1008610)

W0412 07:26:17.959584 1 reflector.go:341] github.com/coredns/coredns/plugin/kubernetes/controller.go:355: watch of *v1.Namespace ended with: too old resource version: 731192 (1008713)

W0412 07:26:54.061601 1 reflector.go:341] github.com/coredns/coredns/plugin/kubernetes/controller.go:350: watch of *v1.Endpoints ended with: too old resource version: 1008728 (1008779)

W0412 07:26:54.835794 1 reflector.go:341] github.com/coredns/coredns/plugin/kubernetes/controller.go:355: watch of *v1.Namespace ended with: too old resource version: 1008713 (1008789)

W0412 07:27:57.357091 1 reflector.go:341] github.com/coredns/coredns/plugin/kubernetes/controller.go:355: watch of *v1.Namespace ended with: too old resource version: 1008789 (1008914)

W0412 07:28:05.938866 1 reflector.go:341] github.com/coredns/coredns/plugin/kubernetes/controller.go:350: watch of *v1.Endpoints ended with: too old resource version: 1008779 (1008918)

W0412 07:28:47.303274 1 reflector.go:341] github.com/coredns/coredns/plugin/kubernetes/controller.go:355: watch of *v1.Namespace ended with: too old resource version: 1008914 (1009091)

W0412 07:29:22.444485 1 reflector.go:341] github.com/coredns/coredns/plugin/kubernetes/controller.go:350: watch of *v1.Endpoints ended with: too old resource version: 1008918 (1009139)

W0412 07:30:08.527392 1 reflector.go:341] github.com/coredns/coredns/plugin/kubernetes/controller.go:350: watch of *v1.Endpoints ended with: too old resource version: 1009139 (1009148)

W0412 07:30:13.596922 1 reflector.go:341] github.com/coredns/coredns/plugin/kubernetes/controller.go:355: watch of *v1.Namespace ended with: too old resource version: 1009091 (1009157)

W0412 07:30:36.928386 1 reflector.go:341] github.com/coredns/coredns/plugin/kubernetes/controller.go:355: watch of *v1.Namespace ended with: too old resource version: 1009157 (1009172)

W0412 07:32:11.591105 1 reflector.go:341] github.com/coredns/coredns/plugin/kubernetes/controller.go:348: watch of *v1.Service ended with: too old resource version: 954709 (1009148)

#可以看到命令可以正常使用了

k8s群集——增加K8smaster节点升级为高可用架构

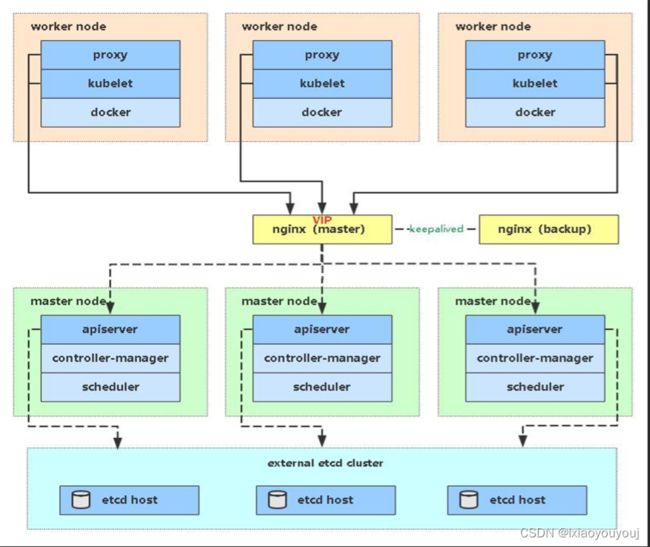

Kubernetes作为容器集群系统,通过健康检查+重启策略实现了Pod故障自我修复能力,通过调度算法实现将Pod分布式部署,并保持预期副本数,根据Node失效状态自动在其他Node拉起Pod,实现了应用层的高可用性。

针对Kubernetes集群,高可用性还应包含以下两个层面的考虑:

Etcd数据库的高可用性和Kubernetes Master组件的高可用性,而Etcd我们已经采用3个节点组建集群实现高可用,本节将对Master节点高可用进行说明和实施。

Master节点扮演着总控中心的角色,通过不断与工作节点上的Kubelet和kube-proxy进行通信来维护整个集群的健康工作状态。如果Master节点故障,将无法使用kubectl工具或者API做任何集群管理。

Master节点主要有三个服务kube-apiserver、kube-controller-manager和kube-scheduler,其中kube-controller-manager和kube-scheduler组件自身通过选择机制已经实现了高可用,所以Master高可用主要针对kube-apiserver组件,而该组件是以HTTP API提供服务,因此对他高可用与Web服务器类似,增加负载均衡器对其负载均衡即可,并且可水平扩容。

一、部署k8smaster2节点(如果添加多master操作和添加k8smaser2是一样的)

前面我们所有节点已经初始化和安装过docker了所以这里我们直接部署k8smaster2节点就好了

1、复制k8smaster1节点相关配置到k8smaster2节点——(k8smaser1节点操作)

scp -r /opt/kubernetes 192.168.10.102:/opt/

scp /usr/lib/systemd/system/kube* 192.168.10.102:/usr/lib/systemd/system/

scp /usr/bin/kubectl 192.168.10.102:/usr/bin/

scp -r ~/.kube 192.168.10.102:/root/

2、修改相关配置文件地址为本地地址——(k8smaser2节点操作)

vim /opt/kubernetes/cfg/kube-apiserver.conf

KUBE_APISERVER_OPTS="--logtostderr=false \

--v=2 \

--log-dir=/opt/kubernetes/logs \

--etcd-servers=https://192.168.10.101:2379,https://192.168.10.102:2379,https://192.168.10.103:2379,https://192.168.10.104:2379 \

--bind-address=192.168.10.102 \

--secure-port=6443 \

--advertise-address=192.168.10.102 \

--allow-privileged=true \

--service-cluster-ip-range=10.0.0.0/24 \

--enable-admission-plugins=NamespaceLifecycle,ServiceAccount,LimitRanger,ResourceQuota,NodeRestriction \

--authorization-mode=RBAC,Node \

--enable-bootstrap-token-auth=true \

--token-auth-file=/opt/kubernetes/cfg/token.csv \

--service-node-port-range=20000-32767 \

--kubelet-client-certificate=/opt/kubernetes/ssl/server.pem \

--kubelet-client-key=/opt/kubernetes/ssl/server-key.pem \

--tls-cert-file=/opt/kubernetes/ssl/server.pem \

--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \

--client-ca-file=/opt/kubernetes/ssl/ca.pem \

--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \

--service-account-issuer=api \

--service-account-signing-key-file=/opt/kubernetes/ssl/server-key.pem \

--etcd-cafile=/opt/etcd/ssl/ca.pem \

--etcd-certfile=/opt/etcd/ssl/server.pem \

--etcd-keyfile=/opt/etcd/ssl/server-key.pem \

--requestheader-client-ca-file=/opt/kubernetes/ssl/ca.pem \

--proxy-client-cert-file=/opt/kubernetes/ssl/server.pem \

--proxy-client-key-file=/opt/kubernetes/ssl/server-key.pem \

--requestheader-allowed-names=kubernetes \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--requestheader-group-headers=X-Remote-Group \

--requestheader-username-headers=X-Remote-User \

--enable-aggregator-routing=true \

--audit-log-maxage=30 \

--audit-log-maxbackup=3 \

--audit-log-maxsize=100 \

--audit-log-path=/opt/kubernetes/logs/k8s-audit.log"

#修改这两处

--bind-address= #修改为本机地址

--advertise-address= #修改为本机地址

vim /opt/kubernetes/cfg/kube-controller-manager.kubeconfig

server: https://192.168.10.102:6443 #修改server地址为本机地址

vim /opt/kubernetes/cfg/kube-scheduler.kubeconfig

server: https://192.168.10.102:6443 #修改server地址为本机地址

vim /opt/kubernetes/cfg/bootstrap.kubeconfig

server: https://192.168.10.102:6443 #修改server地址为本机地址

vim /opt/kubernetes/cfg/kube-proxy.kubeconfig

server: https://192.168.10.102:6443 #修改server地址为本机地址

vim /root/.kube/config

server: https://192.168.10.102:6443 #修改server地址为本机地址

3、启动设置开机自启主节点服务

systemctl daemon-reload

systemctl enable kube-apiserver kube-controller-manager kube-scheduler

systemctl start kube-apiserver kube-controller-manager kube-scheduler

4、如果执行kubectl get cs命令出错误——(k8smaser2节点操作)

kubectl get cs

tail -f /var/log/messges #查看日志发现是这个问题

k8s.io/client-go/informers/factory.go:134: Failed to watch *v1.ReplicationController: failed to list *v1.ReplicationController: Get "https://192.168.10.102:6443/api/v1/replicationcontrollers?limit=500&resourceVersion=0": dial tcp 192.168.10.102:6443: connect: connection refused

#可能是linux的内核安全机制没有彻底关闭

setenforce 0

sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

#然后重启主节点上面的kube-apiserver服务

systemctl restart kube-apiserver.service

#再次查看

kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-2 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"}

etcd-3 Healthy {"health":"true"}

5、如果k8smaster2节点执行kubectl get cs出现以下错误

kubectl get cs

error:kubectl get cs No resources found.

原因是因为我们之前已经让k8smaster1节点批准 kubelet 的证书申请并加入集群了

在k8smaster2节点没有批准kubelet的证书申请并加入群集,所以我们要重新申请加入集群

#停止kubelet服务——(所有k8snode节操作)

systemctl stop kubelet.service

#删除kubelet认证自动生成的文件

cd /opt/kubernetes/ssl

ca-key.pem kubelet-client-2023-03-29-16-55-33.pem kubelet.crt server-key.pem

ca.pem kubelet-client-current.pem kubelet.key server.pem

rm -f kubelet-client-* kubelet*

#在启动kubelet服务

systemctl start kubelet.service

#创建授权过kubelet-bootstrap的k8smaster节点操作

kubectl delete clusterrolebinding kubelet-bootstrap #先删除之前的kubelet-bootstrap

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap #在重新创建授权下

#在k8smaster节点查看node节点的请求——(所有k8smaster节点都可操作)

kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-mJwuqA7DAf4UmB1InN_WEYhFWbQKOqUVXg9Bvc7Intk 4s kubelet-bootstrap Pending

node-csr-ydhzi9EG9M_Ozmbvep0ledwhTCanppStZoq7vuooTq8 11s kubelet-bootstrap Pending

#所有k8smaster授权kubelet的请求——(所有k8smaster节点操作)

kubectl certificate approve node-csr-mJwuqA7DAf4UmB1InN_WEYhFWbQKOqUVXg9Bvc7Intk

certificatesigningrequest.certificates.k8s.io/node-csr-mJwuqA7DAf4UmB1InN_WEYhFWbQKOqUVXg9Bvc7Intk approved

kubectl certificate approve node-csr-ydhzi9EG9M_Ozmbvep0ledwhTCanppStZoq7vuooTq8

certificatesigningrequest.certificates.k8s.io/node-csr-ydhzi9EG9M_Ozmbvep0ledwhTCanppStZoq7vuooTq8 approved

6、创建授权配置文件授权 apiserver 访问 kubelet(所有k8smaster节点都需操做)这里k8smaser2节点操作

k8smaster1节点已经执行过了,只需要在未执行的master节点执行

如果不进行授权可能导致kubectl logs 等命令执行出错

#编辑授权访问kubelet的yaml文件

vim apiserver-to-kubelet-rbac.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

annotations:

rbac.authorization.kubernetes.io/autoupdate: "true"

labels:

kubernetes.io/bootstrapping: rbac-defaults

name: system:kube-apiserver-to-kubelet

rules:

- apiGroups:

- ""

resources:

- nodes/proxy

- nodes/stats

- nodes/log

- nodes/spec

- nodes/metrics

- pods/log

verbs:

- "*"

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: system:kube-apiserver

namespace: ""

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:kube-apiserver-to-kubelet

subjects:

- apiGroup: rbac.authorization.k8s.io

kind: User

name: kubernetes

#执行授权可以访问kubelet的yaml文件

kubectl apply -f apiserver-to-kubelet-rbac.yaml

#测试查看pod日志

kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-97769f7c7-lmv6k 1/1 Running 2 3d17h

calico-node-dqh44 1/1 Running 3 3d17h

calico-node-gnfz7 1/1 Running 2 3d17h

calico-node-jqklg 1/1 Running 0 20h

calico-node-x48ld 1/1 Running 0 20h

coredns-6d8f96d957-mjkdf 1/1 Running 1 43h

kubectl logs coredns-6d8f96d957-mjkdf -n kube-system

.:53

2023/03/30 05:07:35 [INFO] CoreDNS-1.2.2

2023/03/30 05:07:35 [INFO] linux/amd64, go1.11, eb51e8b

CoreDNS-1.2.2

linux/amd64, go1.11, eb51e8b

2023/03/30 05:07:35 [INFO] plugin/reload: Running configuration MD5 = 18863a4483c30117a60ae2332bab9448

#这样就可以正常查看日志了

#到此所有master节点都部署完毕了,接下来我们要实现高可用的功能了

k8s群集——部署Nginx+Keepalived高可用负载均衡器

• Nginx是一个主流Web服务和反向代理服务器,这里用四层实现对apiserver实现负载均衡。

• Nginx是一个主流Web服务和反向代理服务器,这里用四层实现对apiserver实现负载均衡。

• Keepalived是一个主流高可用软件,基于VIP绑定实现服务器双机热备,在上述拓扑中,Keepalived主要根据Nginx运行状态判断是否需要故障转移(漂移VIP),例如当Nginx主节点挂掉,VIP会自动绑定在Nginx备节点,从而保证VIP一直可用,实现Nginx高可用。

注意1:为了节省机器,这里与K8s Master节点机器复用。也可以独立于k8s集群之外部署,只要nginx与apiserver能通信就行。

注意2:如果你是在公有云上,一般都不支持keepalived,那么你可以直接用它们的负载均衡器产品,直接负载均衡多台Master kube-apiserver,架构与上面一样

一、部署配置nginx+keepalived高可用负载均衡器——(所有k8smaser节点操作)

1、安装nginx+keepalived

yum -y install epel-release nginx keepalived

2、配置nginx

vim /etc/nginx/nginx.conf

# For more information on configuration, see:

# * Official English Documentation: http://nginx.org/en/docs/

# * Official Russian Documentation: http://nginx.org/ru/docs/

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log;

pid /run/nginx.pid;

# Load dynamic modules. See /usr/share/doc/nginx/README.dynamic.

include /usr/share/nginx/modules/*.conf;

events {

worker_connections 1024;

}

#(配置如下*********************************************************************************)

#四层负载均衡,为两台Master apiserver组件提供负载均衡

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver {

server 192.168.10.101:6443; # Master1 APISERVER IP:PORT

server 192.168.10.102:6443; # Master2 APISERVER IP:PORT

}

server {

listen 16443; #由于nginx与master节点复用,这个监听端口不能是6443,否则会冲突

proxy_pass k8s-apiserver;

}

}

#(配置如上********************************************************************************)

http {

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

tcp_nopush on;

tcp_nodelay on;

keepalive_timeout 65;

types_hash_max_size 4096;

include /etc/nginx/mime.types;

default_type application/octet-stream;

# Load modular configuration files from the /etc/nginx/conf.d directory.

# See http://nginx.org/en/docs/ngx_core_module.html#include

# for more information.

include /etc/nginx/conf.d/*.conf;

server {

listen 80;

listen [::]:80;

server_name _;

root /usr/share/nginx/html;

# Load configuration files for the default server block.

include /etc/nginx/default.d/*.conf;

error_page 404 /404.html;

location = /404.html {

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

}

}

}

3、配置keeplalived

cd /etc/keepalived/

mv keepalived.conf keepalived.conf.bak

vim keepalived.conf

global_defs {

notification_email {

acassen@firewall.loc

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER #注意:k8smaster1(nginx主)配置为NGINX_MASTER 其他k8smaster(nginx备)配置为NGINX_BACKUP

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh" #指定检查nginx工作状态脚本(根据nginx状态判断是否故障转移)

}

vrrp_instance VI_1 {

state MASTER

interface ens33 # 修改为实际网卡名

virtual_router_id 51 # VRRP 路由 ID实例,每个实例是唯一的

priority 100 # 优先级,备服务器设置 90

advert_int 1 # 指定VRRP 心跳包通告间隔时间,默认1秒

authentication {

auth_type PASS

auth_pass 1111

}

# 虚拟漂移VIP地址要和之前配置apiserver 生成server证书配置中的漂移VIP地址一致

virtual_ipaddress {

192.168.10.244/24

}

track_script {

check_nginx

}

}

4、创建keepalived配置文件中判断nginx工作状态的脚本文件

vim check_nginx.sh

#!/bin/bash

count=$(ss -antp |grep nginx |egrep -cv "grep|$$")

if [ "$count" -eq 0 ];then

exit 1

else

exit 0

fi

5、赋予脚本执行权限

chmod +x /etc/keepalived/check_nginx.sh

6、启动nginx、keepalived

systemctl daemon-reload

systemctl enable nginx keepalived

systemctl start nginx keepalived

二、如果启动nginx失败出现以下错误

nginx: [emerg] unknown directive "stream" in /etc/nginx/nginx.conf:18

nginx: configuration file /etc/nginx/nginx.conf test failed

#说明缺少modules模块,要安装modules模块

yum -y install nginx-all-modules.noarch

#检查nginx配置文件是否正确

nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

#然后在启动nginx就没问题了

三、验证nginx+keepalived高可用负载均衡器是否正常工作

1、查看keepalived工作状态——(k8smaser1节点操作)

ip add

1: lo: ,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: ,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:e1:88:76 brd ff:ff:ff:ff:ff:ff

inet 192.168.10.101/24 brd 192.168.10.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.10.244/24 scope global secondary ens33 #可以看到VIP地址已经生成了

valid_lft forever preferred_lft forever

inet6 fe80::726c:ab0c:3c5f:95de/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::92fb:ba59:2bcd:6051/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: docker0: ,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:9d:17:5a:70 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

6: tunl0@NONE: ,UP,LOWER_UP> mtu 1440 qdisc noqueue state UNKNOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

inet 10.244.93.64/32 brd 10.244.93.64 scope global tunl0

valid_lft forever preferred_lft forever

2、关闭k8smaster1节点Nginx,测试VIP是否漂移到备节点服务器——(k8smaser1节点操作)

systemctl stop nginx.service

pkill nginx

ip add

1: lo: ,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: ,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:e1:88:76 brd ff:ff:ff:ff:ff:ff

inet 192.168.10.101/24 brd 192.168.10.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::726c:ab0c:3c5f:95de/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::92fb:ba59:2bcd:6051/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: docker0: ,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:9d:17:5a:70 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

6: tunl0@NONE: ,UP,LOWER_UP> mtu 1440 qdisc noqueue state UNKNOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

inet 10.244.93.64/32 brd 10.244.93.64 scope global tunl0

valid_lft forever preferred_lft forever

#已经看不到VIP了

3、在k8smaser2节点查看VIP是否飘过来了——(k8smaser2节点操作)

ip add

1: lo: ,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: ,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:85:60:1b brd ff:ff:ff:ff:ff:ff

inet 192.168.10.102/24 brd 192.168.10.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.10.244/24 scope global secondary ens33 #可以看到VIP已经飘过来了

valid_lft forever preferred_lft forever

inet6 fe80::92fb:ba59:2bcd:6051/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::726c:ab0c:3c5f:95de/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::8c84:a019:e9ba:d8d0/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

3: docker0: ,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:75:c3:0d:9d brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

6: tunl0@NONE: ,UP,LOWER_UP> mtu 1440 qdisc noqueue state UNKNOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

inet 10.244.137.64/32 brd 10.244.137.64 scope global tunl0

valid_lft forever preferred_lft forever

4、k8smaster1节点重新启动nginx服务后,VIP又从新漂移绑定到ens33上,而Nginx Backup节点的ens33网卡上的VIP解绑了——(k8smaser1节点操作)

systemctl start nginx

ip add

1: lo: ,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: ,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:e1:88:76 brd ff:ff:ff:ff:ff:ff

inet 192.168.10.101/24 brd 192.168.10.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.10.244/24 scope global secondary ens33 #可以看到VIP地址又飘回来了

valid_lft forever preferred_lft forever

inet6 fe80::726c:ab0c:3c5f:95de/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::92fb:ba59:2bcd:6051/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: docker0: ,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:9d:17:5a:70 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

6: tunl0@NONE: ,UP,LOWER_UP> mtu 1440 qdisc noqueue state UNKNOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

inet 10.244.93.64/32 brd 10.244.93.64 scope global tunl0

valid_lft forever preferred_lft forever

#集群中任意一台机器访问负载均衡地址出现以下就说明没有问题

curl -k https://192.168.10.244:16443/version

{

"major": "1",

"minor": "20",

"gitVersion": "v1.20.4",

"gitCommit": "e87da0bd6e03ec3fea7933c4b5263d151aafd07c",

"gitTreeState": "clean",

"buildDate": "2021-02-18T16:03:00Z",

"goVersion": "go1.15.8",

"compiler": "gc",

"platform": "linux/amd64"

k8s群集——配置能查看节点显示所有master、node节点的状态

1、查看节点发现只显示node节点——(k8smaser1节点操作)

kubectl get node

NAME STATUS ROLES AGE VERSION

k8snode1 Ready 3d18h v1.20.4

k8snode2 Ready 3d18h v1.20.4

2、配置能查看所有master、node节点的状态——(k8snode1节点操作)

#复制kubelet、kube-proxy安装包到所有主节点

scp -r /opt/kubernetes/bin/* root@192.168.10.101:/opt/kubernetes/bin/

scp -r /opt/kubernetes/bin/* root@192.168.10.102:/opt/kubernetes/bin/

#复制kubelet、kube-proxy的所有配置文件到所有主节点

scp -r /opt/kubernetes/cfg/* root@192.168.10.101:/opt/kubernetes/cfg/

scp -r /opt/kubernetes/cfg/* root@192.168.10.102:/opt/kubernetes/cfg/

#复制kubelet、kube-proxy的service文件到所有主节点

scp -r /usr/lib/systemd/system/{kubelet,kube-proxy}.service root@192.168.10.101:/usr/lib/systemd/system/

scp -r /usr/lib/systemd/system/{kubelet,kube-proxy}.service root@192.168.10.101:/usr/lib/systemd/system/

3、修改kubelet、kube-proxy配置文件中的主机名为k8smaster的主机名——(所有k8smaser节点操作)

#k8smaster1操作

vim /opt/kubernetes/cfg/kubelet.conf

--hostname-override=k8smaster1

vim /opt/kubernetes/cfg/kube-proxy-config.yml

hostnameOverride: k8smaster1

#k8smaster2操作

vim /opt/kubernetes/cfg/kubelet.conf

--hostname-override=k8smaster2

vim /opt/kubernetes/cfg/kube-proxy-config.yml

hostnameOverride: k8smaster2

4、修改所有节点相关配置文件中和apiserver通讯的地址改为集群虚拟漂移VIP地址——(所有k8s节点操作)

#k8smaster1节点操作

#查看配置文件中和apiserver通讯的地址

grep 192.168.10.101 /opt/kubernetes/cfg/*

/opt/kubernetes/cfg/admin.conf: server: https://192.168.10.101:6443

/opt/kubernetes/cfg/bootstrap.kubeconfig: server: https://192.168.10.101:6443

/opt/kubernetes/cfg/kube-controller-manager.kubeconfig: server: https://192.168.10.101:6443

/opt/kubernetes/cfg/kubelet.kubeconfig: server: https://192.168.10.101:6443

/opt/kubernetes/cfg/kube-proxy.kubeconfig: server: https://192.168.10.101:6443

/opt/kubernetes/cfg/kube-scheduler.kubeconfig: server: https://192.168.10.101:6443

grep 192.168.10.101 /root/.kube/config

server: https://192.168.10.101:6443

#替换所有配置文件中的与apiserver通信地址为集群虚拟漂移VIP地址

sed -i 's#192.168.10.101:6443#192.168.10.244:16443#' /opt/kubernetes/cfg/*

sed -i 's#192.168.10.101:6443#192.168.10.244:16443#' /root/.kube/config

#k8smaster2节点操作

#查看配置文件中和apiserver通讯的地址

grep 192.168.10.102 /opt/kubernetes/cfg/*

/opt/kubernetes/cfg/admin.conf: server: https://192.168.10.102:6443

/opt/kubernetes/cfg/bootstrap.kubeconfig: server: https://192.168.10.102:6443

/opt/kubernetes/cfg/kube-controller-manager.kubeconfig: server: https://192.168.10.102:6443

/opt/kubernetes/cfg/kubelet.kubeconfig: server: https://192.168.10.102:6443

/opt/kubernetes/cfg/kube-proxy.kubeconfig: server: https://192.168.10.102:6443

/opt/kubernetes/cfg/kube-scheduler.kubeconfig: server: https://192.168.10.102:6443

grep 192.168.10.102 /root/.kube/config

server: https://192.168.10.102:6443

#替换所有配置文件中的与apiserver通信地址为集群虚拟漂移VIP地址

sed -i 's#192.168.10.102:6443#192.168.10.244:16443#' /opt/kubernetes/cfg/*

sed -i 's#192.168.10.102:6443#192.168.10.244:16443#' /root/.kube/config

#k8snode1节点操作

#查看配置文件中和apiserver通讯的地址

grep 192.168.10.103 /opt/kubernetes/cfg/*

/opt/kubernetes/cfg/bootstrap.kubeconfig: server: https://192.168.10.103:6443

/opt/kubernetes/cfg/kubelet.kubeconfig: server: https://192.168.10.103:6443

/opt/kubernetes/cfg/kube-proxy.kubeconfig: server: https://192.168.10.103:6443

grep 192.168.10.101 /root/.kube/config

server: https://192.168.10.101:6443

#替换所有配置文件中的与apiserver通信地址为集群虚拟漂移VIP地址

sed -i 's#192.168.10.103:6443#192.168.10.244:16443#' /opt/kubernetes/cfg/*

sed -i 's#192.168.10.101:6443#192.168.10.244:16443#' /root/.kube/config

#k8snode2节点操作

#查看配置文件中和apiserver通讯的地址

grep 192.168.10.104 /opt/kubernetes/cfg/*

/opt/kubernetes/cfg/bootstrap.kubeconfig: server: https://192.168.10.104:6443

/opt/kubernetes/cfg/kubelet.kubeconfig: server: https://192.168.10.104:6443

/opt/kubernetes/cfg/kube-proxy.kubeconfig: server: https://192.168.10.104:6443

grep 192.168.10.101 /root/.kube/config

server: https://192.168.10.101:6443

#替换所有配置文件中的与apiserver通信地址为集群虚拟漂移VIP地址

sed -i 's#192.168.10.104:6443#192.168.10.244:16443#' /opt/kubernetes/cfg/*

sed -i 's#192.168.10.101:6443#192.168.10.244:16443#' /root/.kube/config

所有k8smaster节点操作

#启动节点服务

systemctl daemon-reload

systemctl start kubelet kube-proxy

systemctl enable kubelet kube-proxy

5、查看kubelet_kubeconfig客户端发送的申请——(k8smaser1节点操作)

#所有k8smaster节点都可操作

kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

node-csr-6zTjsaVSrhuyhIGqsefxzVoZfjklkdiKJFI-MCIDmia 89s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

node-csr-4zTjsaVSrhuyhIGqsefxzVoZDCNKei-aE2jyTP81Uro 59s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

7、在所有k8smaster节点给kubelet授权——(所有k8smaster节点操作)

kubectl certificate approve node-csr-6zTjsaVSrhuyhIGqsefxzVoZfjklkdiKJFI-MCIDmia

kubectl certificate approve node-csr-4zTjsaVSrhuyhIGqsefxzVoZDCNKei-aE2jyTP81Uro

**************************************************************k8smaster1节点操作*******************************************************************************

kubectl get node

NAME STATUS ROLES AGE VERSION

k8smaster1 Ready 21h v1.20.4

k8smaster2 Ready 21h v1.20.4

k8snode1 Ready 3d18h v1.20.4

k8snode2 Ready 3d18h v1.20.4

#现在就可以看到所有节点状态了

k8s群集——新增node节点

**************************************************************k8snode1节点操作********************************************************************************

1、这里就省略节点初始化+时间同步+安装docker前面已经都演示过了照前面的做就行了

2、部署k8snode3节点

#拷贝已部署好的Node相关文件到新节点

scp -r /opt/* root@192.168.10.103:/opt/

scp -r /root/.kube root@192.168.10.103:/root/

scp -r /usr/bin/kubectl 192.168.10.103:/usr/bin/

scp -r scp -r /usr/lib/systemd/system/kube* root@192.168.10.103:/usr/lib/systemd/system/

**************************************************************k8snode3节点操作********************************************************************************

rm -rf /opt/kubernetes/ssl/kubelet*

#修改相关配置文件的节点名为k8snode3

vim /opt/kubernetes/cfg/kubelet.conf

--hostname-override=k8snode3

vim /opt/kubernetes/cfg/kube-proxy-config.yml

hostnameOverride: k8snode3

#启动节点服务

systemctl daemon-reload

systemctl start kubelet kube-proxy

systemctl enable kubelet kube-proxy

**************************************************************k8smaster1节点操作*******************************************************************************

kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

node-csr-4zTjsaVSrhuyhIGqsefxzVoZDCNKei-aE2jyTP81Uro 89s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

**************************************************************所有k8smaster节点操作***************************************************************************

kubectl certificate approve node-csr-4zTjsaVSrhuyhIGqsefxzVoZDCNKei-aE2jyTP81Uro

**************************************************************k8smaster1节点操作*******************************************************************************

kubectl get node

NAME STATUS ROLES AGE VERSION

k8smaster1 Ready 21h v1.20.4

k8smaster2 Ready 21h v1.20.4

k8snode1 Ready 3d18h v1.20.4

k8snode2 Ready 3d18h v1.20.4

k8snode3 Ready 1m18s v1.20.4

安装openebs和KubeSphere

安装kubesphere的前提是必须要有k8s群集,k8s群集我们前面已经部署过了,下面开始安装kubesphere

1、检查k8s集群的资源——(k8smaster1节点操作)

kubectl cluster-info

Kubernetes control plane is running at https://192.168.10.244:16443

CoreDNS is running at https://192.168.10.244:16443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8smaster1 Ready 11d v1.20.4

k8smaster2 Ready 11d v1.20.4

k8snode1 Ready 13d v1.20.4

k8snode2 Ready 13d v1.20.4

2、查看k8smaster是否打污点,如果有请先去掉污点,不然后续安装openebs时会出现报错——(所有k8smaster节点)

kubectl describe node k8smaster1 |grep Taint

Taints:

kubectl describe node k8smaster2 |grep Taint

Taints:

3、安装openebs

由于 在已有 Kubernetes 集群之上安装 KubeSphere 需要依赖集群已有的存储类型(StorageClass)

前提条件:

已有 Kubernetes 集群,并安装了 kubectl 或 Helm

Pod 可以被调度到集群的 master 节点(可临时取消 master 节点的 Taint)

关于第二个前提条件,是由于安装 OpenEBS 时它有一个初始化的 Pod 需要在 master 节点启动并创建 PV 给 KubeSphere 的有状态应用挂载。

因此,若您的 master 节点存在 Taint,建议在安装 OpenEBS 之前手动取消 Taint,待 OpenEBS 与 KubeSphere 安装完成后,再对 master 打上 Taint

创建openebs命名空间

kubectl create ns openebs

namespace/openebs created

#下载openebs.yaml文件

wget https://openebs.github.io/charts/openebs-operator.yaml

#修改openebs-operator.yaml

vim openebs-operator.yaml

apiVersion: storage.k8s.io/v1

kind: StorageClass

metadata:

name: local-hostpath

annotations:

openebs.io/cas-type: local

cas.openebs.io/config: |

- name: StorageType

value: hostpath

- name: BasePath

value: /var/openebs/local #这里可自定义数据落地的位置

provisioner: openebs.io/local

reclaimPolicy: Delete

volumeBindingMode: WaitForFirstConsumer

#查看StorgaeClass

kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

openebs-device openebs.io/local Delete WaitForFirstConsumer false 7m54s