WebRTC Video Send and Receive Pipeline

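This article walks through how video is sent and received in WebRTC. As before, the analysis is based on WebRTC's sample application peerconnection_client, at code version M96.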

The application layer creates a VideoTrackSource, creates a VideoTrackInterface, starts local rendering, and adds the VideoTrack to the PeerConnection:

    void Conductor::AddTracks() {
      if (!peer_connection_->GetSenders().empty()) {
        return;  // Already added tracks.
      }
      . . . . . .
      rtc::scoped_refptr<CapturerTrackSource> video_device =
          CapturerTrackSource::Create();
      if (video_device) {
        rtc::scoped_refptr<webrtc::VideoTrackInterface> video_track_(
            peer_connection_factory_->CreateVideoTrack(kVideoLabel, video_device));
        main_wnd_->StartLocalRenderer(video_track_);
        result_or_error = peer_connection_->AddTrack(video_track_, {kStreamId});
        if (!result_or_error.ok()) {
          RTC_LOG(LS_ERROR) << "Failed to add video track to PeerConnection: "
                            << result_or_error.error().message();
        }
      } else {
        RTC_LOG(LS_ERROR) << "OpenVideoCaptureDevice failed";
      }
      main_wnd_->SwitchToStreamingUI();
    }

Creating the VideoTrackSource, that is, the CapturerTrackSource, already starts camera capture through StartCapture():

#0 webrtc::videocapturemodule::VideoCaptureModuleV4L2::StartCapture(webrtc::VideoCaptureCapability const&)

(this=0x5555557d13b9 , capability=...) at ../../modules/video_capture/linux/video_capture_linux.cc:106

#1 0x00005555557bc2fb in webrtc::test::VcmCapturer::Init(unsigned long, unsigned long, unsigned long, unsigned long)

(this=0x555558953630, width=640, height=480, target_fps=30, capture_device_index=0) at ../../test/vcm_capturer.cc:55

#2 0x00005555557bc480 in webrtc::test::VcmCapturer::Create(unsigned long, unsigned long, unsigned long, unsigned long)

(width=640, height=480, target_fps=30, capture_device_index=0) at ../../test/vcm_capturer.cc:70

#3 0x000055555579f35c in (anonymous namespace)::CapturerTrackSource::Create() () at ../../examples/peerconnection/client/conductor.cc:85

#4 0x00005555557a4545 in Conductor::AddTracks() (this=0x555558625bc0) at ../../examples/peerconnection/client/conductor.cc:454

#5 0x000055555579fb87 in Conductor::InitializePeerConnection() (this=0x555558625bc0) at ../../examples/peerconnection/client/conductor.cc:158
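
The trace above shows Create() calling Init(), which in turn starts the capture, so a caller either gets a capturer that is already running or gets nothing. A minimal sketch of that two-phase construction pattern follows. `FakeCapturer` and its failure condition (only device 0 exists) are hypothetical and are not WebRTC code.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>

// Hypothetical stand-in for a capturer; not a WebRTC class.
class FakeCapturer {
 public:
  // Returns nullptr when initialization fails, so callers can test the
  // result the same way AddTracks() tests `video_device`.
  static std::unique_ptr<FakeCapturer> Create(std::size_t width,
                                              std::size_t height,
                                              std::size_t target_fps,
                                              std::size_t device_index) {
    std::unique_ptr<FakeCapturer> capturer(new FakeCapturer());
    if (!capturer->Init(width, height, target_fps, device_index)) {
      return nullptr;
    }
    return capturer;
  }

  bool capturing() const { return capturing_; }

 private:
  FakeCapturer() = default;

  // Assumption for illustration: only device index 0 can be opened.
  bool Init(std::size_t width, std::size_t height, std::size_t target_fps,
            std::size_t device_index) {
    if (device_index != 0 || width == 0 || height == 0 || target_fps == 0) {
      return false;
    }
    capturing_ = true;  // Capture starts as a side effect of creation.
    return true;
  }

  bool capturing_ = false;
};
```

With this shape, `FakeCapturer::Create(640, 480, 30, 0)` yields a running capturer, while an unknown device index yields a null pointer that the caller has to check.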

VideoCaptureModuleV4L2 starts an internal capture thread that captures video frames and delivers them downstream:

#6 0x0000555555907c22 in webrtc::videocapturemodule::VideoCaptureModuleV4L2::CaptureProcess() (this=0x555558685130)

at ../../modules/video_capture/linux/video_capture_linux.cc:411

#7 0x0000555555904f93 in webrtc::videocapturemodule::VideoCaptureModuleV4L2::::operator()(void) const (__closure=0x555558475490)

at ../../modules/video_capture/linux/video_capture_linux.cc:247

#8 0x0000555555907f55 in std::_Function_handler >::_M_invoke(const std::_Any_data &) (__functor=...) at /usr/include/c++/9/bits/std_function.h:300

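CapturerTrackSource (VcmCapturer/VideoCaptureModuleV4L2) obtains video frames from the hardware device and delivers them to its sinks. Starting local rendering registers a renderer as a sink with the CapturerTrackSource, through the VideoTrack (webrtc/src/examples/peerconnection/client/linux/main_wnd.cc):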

    void GtkMainWnd::StartLocalRenderer(webrtc::VideoTrackInterface* local_video) {
      local_renderer_.reset(new VideoRenderer(this, local_video));
    }

    void GtkMainWnd::StopLocalRenderer() {
      local_renderer_.reset();
    }
    . . . . . .
    GtkMainWnd::VideoRenderer::VideoRenderer(
        GtkMainWnd* main_wnd,
        webrtc::VideoTrackInterface* track_to_render)
        : width_(0),
          height_(0),
          main_wnd_(main_wnd),
          rendered_track_(track_to_render) {
      rendered_track_->AddOrUpdateSink(this, rtc::VideoSinkWants());
    }

    GtkMainWnd::VideoRenderer::~VideoRenderer() {
      rendered_track_->RemoveSink(this);
    }

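The video renderer connects the video capture module to the graphics rendering module. It receives video frames from the capture module and draws them through the rendering interface provided by the window system. The renderer receives a frame from the capture module as follows: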

#0 GtkMainWnd::VideoRenderer::OnFrame(webrtc::VideoFrame const&) (this=0x5555589536a0, video_frame=...)

at ../../examples/peerconnection/client/linux/main_wnd.cc:548

#1 0x0000555556037b5f in rtc::VideoBroadcaster::OnFrame(webrtc::VideoFrame const&) (this=0x555558953678, frame=...)

at ../../media/base/video_broadcaster.cc:90

#2 0x00005555557ddd6c in webrtc::test::TestVideoCapturer::OnFrame(webrtc::VideoFrame const&) (this=0x555558953630, original_frame=...)

at ../../test/test_video_capturer.cc:62

#3 0x00005555557bcc17 in webrtc::test::VcmCapturer::OnFrame(webrtc::VideoFrame const&) (this=0x555558953630, frame=...) at ../../test/vcm_capturer.cc:94

#4 0x000055555614d6cd in webrtc::videocapturemodule::VideoCaptureImpl::DeliverCapturedFrame(webrtc::VideoFrame&) (this=0x5555584ba400, captureFrame=...)

at ../../modules/video_capture/video_capture_impl.cc:111

#5 0x000055555614e05a in webrtc::videocapturemodule::VideoCaptureImpl::IncomingFrame(unsigned char*, unsigned long, webrtc::VideoCaptureCapability const&, long)

(this=0x5555584ba400, videoFrame=0x7fffcdd32000 "\022\177"..., videoFrameLength=614400, frameInfo=..., captureTime=0) at ../../modules/video_capture/video_capture_impl.cc:201

#6 0x0000555555907c22 in webrtc::videocapturemodule::VideoCaptureModuleV4L2::CaptureProcess() (this=0x5555584ba400)
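
In the trace above, the frame reaches the renderer through a broadcaster that fans each frame out to every registered sink, and the renderer registers itself in its constructor and unregisters in its destructor. A minimal sketch of that arrangement, under simplifying assumptions: `Frame`, `Sink`, `Broadcaster`, and `CountingRenderer` are hypothetical names, the real AddOrUpdateSink() also takes a VideoSinkWants argument that is omitted here, and no locking is shown.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

struct Frame {
  int id;  // Hypothetical payload; a real frame carries pixel buffers.
};

class Sink {
 public:
  virtual ~Sink() = default;
  virtual void OnFrame(const Frame& frame) = 0;
};

// Delivers every incoming frame to all registered sinks.
class Broadcaster {
 public:
  void AddOrUpdateSink(Sink* sink) {
    assert(sink != nullptr);
    if (std::find(sinks_.begin(), sinks_.end(), sink) == sinks_.end()) {
      sinks_.push_back(sink);
    }
  }
  void RemoveSink(Sink* sink) {
    sinks_.erase(std::remove(sinks_.begin(), sinks_.end(), sink),
                 sinks_.end());
  }
  void OnFrame(const Frame& frame) {
    for (Sink* sink : sinks_) {
      sink->OnFrame(frame);
    }
  }
  std::size_t sink_count() const { return sinks_.size(); }

 private:
  std::vector<Sink*> sinks_;
};

// Registers on construction and unregisters on destruction, so the
// broadcaster never holds a dangling sink pointer.
class CountingRenderer : public Sink {
 public:
  explicit CountingRenderer(Broadcaster* source) : source_(source) {
    source_->AddOrUpdateSink(this);
  }
  ~CountingRenderer() override { source_->RemoveSink(this); }

  void OnFrame(const Frame& frame) override {
    last_id_ = frame.id;
    ++frames_;
  }
  int frames() const { return frames_; }
  int last_id() const { return last_id_; }

 private:
  Broadcaster* source_;
  int frames_ = 0;
  int last_id_ = -1;
};
```

Registering two sinks on the same broadcaster models the situation described in this article, where one captured frame is delivered both to the local preview and to the encoder.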

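The actual rendering is slightly more involved. After receiving a frame, the renderer first converts it, for example rotating it and converting YUV to ARGB, and then schedules the window system to render it: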

    void GtkMainWnd::VideoRenderer::OnFrame(const webrtc::VideoFrame& video_frame) {
      gdk_threads_enter();
      rtc::scoped_refptr<webrtc::I420BufferInterface> buffer(
          video_frame.video_frame_buffer()->ToI420());
      if (video_frame.rotation() != webrtc::kVideoRotation_0) {
        buffer = webrtc::I420Buffer::Rotate(*buffer, video_frame.rotation());
      }
      SetSize(buffer->width(), buffer->height());
      // TODO(bugs.webrtc.org/6857): This conversion is correct for little-endian
      // only. Cairo ARGB32 treats pixels as 32-bit values in *native* byte order,
      // with B in the least significant byte of the 32-bit value. Which on
      // little-endian means that memory layout is BGRA, with the B byte stored at
      // lowest address. Libyuv's ARGB format (surprisingly?) uses the same
      // little-endian format, with B in the first byte in memory, regardless of
      // native endianness.
      libyuv::I420ToARGB(buffer->DataY(), buffer->StrideY(), buffer->DataU(),
                         buffer->StrideU(), buffer->DataV(), buffer->StrideV(),
                         image_.get(), width_ * 4, buffer->width(),
                         buffer->height());
      gdk_threads_leave();
      g_idle_add(Redraw, main_wnd_);
    }
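
To make the YUV to ARGB step concrete, here is a scalar sketch of an I420 to ARGB conversion. It assumes limited-range BT.601 input and uses a common integer approximation of the conversion matrix; libyuv's real implementation is SIMD-optimized and its exact rounding may differ. `YuvToArgb` and `I420ToArgb` are hypothetical helper names. Each 32-bit output value holds B in its least significant byte, which matches the layout described in the TODO comment above.

```cpp
#include <cassert>
#include <cstdint>

// Clamps an intermediate value to the 0..255 range of one color channel.
inline std::uint8_t Clamp8(int v) {
  return static_cast<std::uint8_t>(v < 0 ? 0 : (v > 255 ? 255 : v));
}

// Converts one limited-range BT.601 YUV sample to a 32-bit value laid out
// as 0xAARRGGBB (B in the least significant byte), with alpha set to 0xFF.
inline std::uint32_t YuvToArgb(int y, int u, int v) {
  const int c = y - 16;
  const int d = u - 128;
  const int e = v - 128;
  // Division instead of a right shift keeps the result portable for
  // negative intermediates; they are clamped to 0 afterwards.
  const std::uint32_t r = Clamp8((298 * c + 409 * e + 128) / 256);
  const std::uint32_t g = Clamp8((298 * c - 100 * d - 208 * e + 128) / 256);
  const std::uint32_t b = Clamp8((298 * c + 516 * d + 128) / 256);
  return 0xFF000000u | (r << 16) | (g << 8) | b;
}

// Converts a whole I420 image. In I420 the U and V planes are subsampled
// by two in both directions, so one chroma sample covers a 2x2 luma block.
// Strides are given in bytes per row; the output is tightly packed.
void I420ToArgb(const std::uint8_t* y_plane, int stride_y,
                const std::uint8_t* u_plane, int stride_u,
                const std::uint8_t* v_plane, int stride_v,
                std::uint32_t* argb, int width, int height) {
  assert(width > 0 && height > 0);
  for (int row = 0; row < height; ++row) {
    for (int col = 0; col < width; ++col) {
      const int y = y_plane[row * stride_y + col];
      const int u = u_plane[(row / 2) * stride_u + col / 2];
      const int v = v_plane[(row / 2) * stride_v + col / 2];
      argb[row * width + col] = YuvToArgb(y, u, v);
    }
  }
}
```

Under these assumptions, a sample with Y=16 and neutral chroma (U=V=128) maps to opaque black and Y=235 maps to opaque white.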

Redraw(), scheduled on the last line of the function above, performs the rest of the rendering:

    gboolean Redraw(gpointer data) {
      GtkMainWnd* wnd = reinterpret_cast<GtkMainWnd*>(data);
      wnd->OnRedraw();
      return false;
    }
    . . . . . .
    void GtkMainWnd::OnRedraw() {
      gdk_threads_enter();
      VideoRenderer* remote_renderer = remote_renderer_.get();
      if (remote_renderer && remote_renderer->image() != NULL &&
          draw_area_ != NULL) {
        width_ = remote_renderer->width();
        height_ = remote_renderer->height();
        if (!draw_buffer_.get()) {
          // 4 bytes per pixel, scaled by 2 in each dimension.
          draw_buffer_size_ = (width_ * height_ * 4) * 4;
          draw_buffer_.reset(new uint8_t[draw_buffer_size_]);
          gtk_widget_set_size_request(draw_area_, width_ * 2, height_ * 2);
        }
        const uint32_t* image =
            reinterpret_cast<const uint32_t*>(remote_renderer->image());
        uint32_t* scaled = reinterpret_cast<uint32_t*>(draw_buffer_.get());
        // Scale the remote image 2x: double each pixel horizontally, then
        // duplicate the whole output row.
        for (int r = 0; r < height_; ++r) {
          for (int c = 0; c < width_; ++c) {
            int x = c * 2;
            scaled[x] = scaled[x + 1] = image[c];
          }
          uint32_t* prev_line = scaled;
          scaled += width_ * 2;
          memcpy(scaled, prev_line, (width_ * 2) * 4);
          image += width_;
          scaled += width_ * 2;
        }
        VideoRenderer* local_renderer = local_renderer_.get();
        if (local_renderer && local_renderer->image()) {
          image = reinterpret_cast<const uint32_t*>(local_renderer->image());
          scaled = reinterpret_cast<uint32_t*>(draw_buffer_.get());
          // Position the local preview on the right side.
          scaled += (width_ * 2) - (local_renderer->width() / 2);
          // right margin...
          scaled -= 10;
          // ... towards the bottom.
          scaled += (height_ * width_ * 4) - ((local_renderer->height() / 2) *
                                              (local_renderer->width() / 2) * 4);
          // bottom margin...
          scaled -= (width_ * 2) * 5;
          // Copy every other pixel of every other row (half-size preview).
          for (int r = 0; r < local_renderer->height(); r += 2) {
            for (int c = 0; c < local_renderer->width(); c += 2) {
              scaled[c / 2] = image[c + r * local_renderer->width()];
            }
            scaled += width_ * 2;
          }
        }
    #if GTK_MAJOR_VERSION == 2
        gdk_draw_rgb_32_image(draw_area_->window,
                              draw_area_->style->fg_gc[GTK_STATE_NORMAL], 0, 0,
                              width_ * 2, height_ * 2, GDK_RGB_DITHER_MAX,
                              draw_buffer_.get(), (width_ * 2) * 4);
    #else
        gtk_widget_queue_draw(draw_area_);
    #endif
      }
      gdk_threads_leave();
    }

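As the code above shows, peerconnection_client only starts drawing once the remote renderer exists, and that applies to both the local preview and the remote picture: the local preview is drawn into a small region of the large window that shows the remote picture.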
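
The two scaling loops in OnRedraw() are easier to follow in isolation. The sketch below restates them as standalone functions over 32-bit pixels: a 2x nearest-neighbor upscale, as used for the remote picture, and a half-size downscale that keeps every other pixel of every other row, as used for the local preview. `ScaleUp2x` and `ScaleDownHalf` are hypothetical names, and the sketch returns new buffers instead of writing into a shared draw buffer.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Doubles the image in both dimensions: every source pixel becomes a
// 2x2 block in the output.
std::vector<std::uint32_t> ScaleUp2x(const std::vector<std::uint32_t>& image,
                                     int width, int height) {
  assert(image.size() == static_cast<std::size_t>(width) * height);
  std::vector<std::uint32_t> scaled(image.size() * 4);
  const int out_width = width * 2;
  for (int r = 0; r < height; ++r) {
    for (int c = 0; c < width; ++c) {
      const std::uint32_t pixel = image[r * width + c];
      std::uint32_t* top = &scaled[(2 * r) * out_width + 2 * c];
      std::uint32_t* bottom = top + out_width;
      top[0] = top[1] = bottom[0] = bottom[1] = pixel;
    }
  }
  return scaled;
}

// Halves the image in both dimensions by keeping the pixel at every even
// row and even column.
std::vector<std::uint32_t> ScaleDownHalf(
    const std::vector<std::uint32_t>& image, int width, int height) {
  assert(image.size() == static_cast<std::size_t>(width) * height);
  std::vector<std::uint32_t> scaled;
  for (int r = 0; r < height; r += 2) {
    for (int c = 0; c < width; c += 2) {
      scaled.push_back(image[r * width + c]);
    }
  }
  return scaled;
}
```

For example, upscaling a 2x1 image produces a 4x2 image in which each source pixel appears four times, and downscaling a 4x2 image keeps the first and third pixels of the first row.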

Besides feeding captured frames to the renderer for local preview, the VideoCaptureModuleV4L2 capture module also feeds them to the video encoder:

#0 webrtc::VideoStreamEncoder::OnFrame(webrtc::VideoFrame const&) (this=0xf0000000a0, video_frame=...) at ../../video/video_stream_encoder.cc:1264

#1 0x0000555556037b5f in rtc::VideoBroadcaster::OnFrame(webrtc::VideoFrame const&) (this=0x5555586e1678, frame=...)

at ../../media/base/video_broadcaster.cc:90

#2 0x00005555557ddd20 in webrtc::test::TestVideoCapturer::OnFrame(webrtc::VideoFrame const&) (this=0x5555586e1630, original_frame=...)

at ../../test/test_video_capturer.cc:58

#3 0x00005555557bcc17 in webrtc::test::VcmCapturer::OnFrame(webrtc::VideoFrame const&) (this=0x5555586e1630, frame=...) at ../../test/vcm_capturer.cc:94

#4 0x000055555614d6cd in webrtc::videocapturemodule::VideoCaptureImpl::DeliverCapturedFrame(webrtc::VideoFrame&) (this=0x5555587da600, captureFrame=...)

at ../../modules/video_capture/video_capture_impl.cc:111

#5 0x000055555614e05a in webrtc::videocapturemodule::VideoCaptureImpl::IncomingFrame(unsigned char*, unsigned long, webrtc::VideoCaptureCapability const&, long)

(this=0x5555587da600, videoFrame=0x7fffd9d32000 "\022\177"..., videoFrameLength=614400, frameInfo=..., captureTime=0) at ../../modules/video_capture/video_capture_impl.cc:201

#6 0x0000555555907c22 in webrtc::videocapturemodule::VideoCaptureModuleV4L2::CaptureProcess() (this=0x5555587da600)

at ../../modules/video_capture/linux/video_capture_linux.cc:411

Once a frame has been encoded, the encoded frame is packetized into RTP packets, which are placed in the PacedSender queue:

#0 webrtc::PacingController::EnqueuePacket(std::unique_ptr >)

(this=0x55555692fb9a >::tuple, true>(webrtc::RtpPacketToSend*&, std::default_delete&&)+72>, packet=std::unique_ptr = {...}) at ../../modules/pacing/pacing_controller.cc:235

#1 0x000055555692edbc in webrtc::PacedSender::EnqueuePackets(std::vector >, std::allocator > > >)

(this=0x7fffc408e530, packets=std::vector of length 3, capacity 4 = {...}) at ../../modules/pacing/paced_sender.cc:128

#2 0x0000555557425593 in webrtc::RTPSender::EnqueuePackets(std::vector >, std::allocator > > >)

(this=0x7fffc405f4c0, packets=std::vector of length 0, capacity 0) at ../../modules/rtp_rtcp/source/rtp_sender.cc:517

#3 0x000055555743750f in webrtc::RTPSenderVideo::LogAndSendToNetwork(std::vector >, std::allocator > > >, unsigned long)

(this=0x7fffc40602c0, packets=std::vector of length 0, capacity 0, unpacketized_payload_size=2711)

at ../../modules/rtp_rtcp/source/rtp_sender_video.cc:210

#4 0x000055555743b701 in webrtc::RTPSenderVideo::SendVideo(int, absl::optional, unsigned int, long, rtc::ArrayView, webrtc::RTPVideoHeader, absl::optional, absl::optional)

(this=0x7fffc40602c0, payload_type=96, codec_type=..., rtp_timestamp=182197814, capture_time_ms=50000169, payload=..., video_header=..., expected_retransmission_time_ms=..., estimated_capture_clock_offset_ms=...) at ../../modules/rtp_rtcp/source/rtp_sender_video.cc:711

#5 0x000055555743bb06 in webrtc::RTPSenderVideo::SendEncodedImage(int, absl::optional, unsigned int, webrtc::EncodedImage const&, webrtc::RTPVideoHeader, absl::optional)

(this=0x7fffc40602c0, payload_type=96, codec_type=..., rtp_timestamp=182197814, encoded_image=..., video_header=..., expected_retransmission_time_ms=...)

at ../../modules/rtp_rtcp/source/rtp_sender_video.cc:750

#6 0x00005555568ec887 in webrtc::RtpVideoSender::OnEncodedImage(webrtc::EncodedImage const&, webrtc::CodecSpecificInfo const*)

(this=0x7fffc40a95a0, encoded_image=..., codec_specific_info=0x7fffd8d64950) at ../../call/rtp_video_sender.cc:604

#7 0x0000555556a612e2 in webrtc::internal::VideoSendStreamImpl::OnEncodedImage(webrtc::EncodedImage const&, webrtc::CodecSpecificInfo const*)

(this=0x7fffc40a31b0, encoded_image=..., codec_specific_info=0x7fffd8d64950) at ../../video/video_send_stream_impl.cc:566

#8 0x0000555556adbc25 in webrtc::VideoStreamEncoder::OnEncodedImage(webrtc::EncodedImage const&, webrtc::CodecSpecificInfo const*) (this=

0x7fffc40a4c90, encoded_image=..., codec_specific_info=0x7fffd8d64950) at ../../video/video_stream_encoder.cc:1936

#9 0x0000555557553770 in webrtc::LibvpxVp8Encoder::GetEncodedPartitions(webrtc::VideoFrame const&, bool) (this=

0x7fff880022f0, input_image=..., retransmission_allowed=true) at ../../modules/video_coding/codecs/vp8/libvpx_vp8_encoder.cc:1188

#10 0x0000555557552801 in webrtc::LibvpxVp8Encoder::Encode(webrtc::VideoFrame const&, std::vector > const*) (this=0x7fff880022f0, frame=..., frame_types=0x7fffc40a54d0) at ../../modules/video_coding/codecs/vp8/libvpx_vp8_encoder.cc:1075

#11 0x0000555556f92ad5 in webrtc::EncoderSimulcastProxy::Encode(webrtc::VideoFrame const&, std::vector > const*) (this=0x7fff88002270, input_image=..., frame_types=0x7fffc40a54d0) at ../../media/engine/encoder_simulcast_proxy.cc:53

#12 0x0000555556ada0c0 in webrtc::VideoStreamEncoder::EncodeVideoFrame(webrtc::VideoFrame const&, long)

(this=0x7fffc40a4c90, video_frame=..., time_when_posted_us=50000169389) at ../../video/video_stream_encoder.cc:1767

#13 0x0000555556ad8426 in webrtc::VideoStreamEncoder::MaybeEncodeVideoFrame(webrtc::VideoFrame const&, long)

(this=0x7fffc40a4c90, video_frame=..., time_when_posted_us=50000169389) at ../../video/video_stream_encoder.cc:1636

#14 0x0000555556ad3da1 in webrtc::VideoStreamEncoder::::operator()(void) const (__closure=0x7fffe4002638)

at ../../video/video_stream_encoder.cc:1333

#15 0x0000555556aed256 in webrtc::webrtc_new_closure_impl::ClosureTask >::Run(void)

(this=0x7fffe4002630) at ../../rtc_base/task_utils/to_queued_task.h:32
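
The bottom frames of the trace above show the encode step running inside a closure task rather than directly on the capture thread: the capture side posts work and returns, and the encoder's own queue runs it later. The sketch below models that hand-off with a single-threaded queue that is drained explicitly, which keeps the example deterministic. `ManualTaskQueue` and `QueuedEncoder` are hypothetical names; a real task queue runs its tasks on its own thread.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <utility>
#include <vector>

// Single-threaded model of a task queue: tasks run only when
// RunPending() is called, in the order they were posted.
class ManualTaskQueue {
 public:
  void PostTask(std::function<void()> task) {
    assert(task != nullptr);
    tasks_.push_back(std::move(task));
  }

  // Runs all pending tasks and returns how many were run.
  int RunPending() {
    int ran = 0;
    while (!tasks_.empty()) {
      std::function<void()> task = std::move(tasks_.front());
      tasks_.pop_front();
      task();
      ++ran;
    }
    return ran;
  }

 private:
  std::deque<std::function<void()>> tasks_;
};

// Accepts frames on the caller's side and "encodes" them on the queue.
class QueuedEncoder {
 public:
  explicit QueuedEncoder(ManualTaskQueue* queue) : queue_(queue) {}

  // Captures the frame id in a closure and returns immediately, so the
  // caller (the capture side) is never blocked by encoding.
  void OnFrame(int frame_id) {
    queue_->PostTask([this, frame_id] { encoded_.push_back(frame_id); });
  }

  const std::vector<int>& encoded() const { return encoded_; }

 private:
  ManualTaskQueue* queue_;
  std::vector<int> encoded_;
};
```

After two calls to OnFrame() nothing has been encoded yet; the frames are processed, in order, only when the queue is drained.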

PacedSender sends the RTP packets out through the MediaChannel:

#0 cricket::MediaChannel::SendRtp(unsigned char const*, unsigned long, webrtc::PacketOptions const&)

(this=0x7fff9c001398, data=0x69800000005 , len=93825004092765, options=...)

at ../../media/base/media_channel.cc:169

#1 0x000055555608ce42 in cricket::WebRtcVideoChannel::SendRtp(unsigned char const*, unsigned long, webrtc::PacketOptions const&)

(this=0x7fffc4092aa0, data=0x7fffa40017a0 "\260a\002\264", len=248, options=...) at ../../media/engine/webrtc_video_engine.cc:2034

#2 0x0000555557430fd4 in webrtc::RtpSenderEgress::SendPacketToNetwork(webrtc::RtpPacketToSend const&, webrtc::PacketOptions const&, webrtc::PacedPacketInfo const&) (this=0x7fffc405f200, packet=..., options=..., pacing_info=...) at ../../modules/rtp_rtcp/source/rtp_sender_egress.cc:555

#3 0x000055555742e285 in webrtc::RtpSenderEgress::SendPacket(webrtc::RtpPacketToSend*, webrtc::PacedPacketInfo const&)

(this=0x7fffc405f200, packet=0x7fff9c001340, pacing_info=...) at ../../modules/rtp_rtcp/source/rtp_sender_egress.cc:273

#4 0x000055555741cc89 in webrtc::ModuleRtpRtcpImpl2::TrySendPacket(webrtc::RtpPacketToSend*, webrtc::PacedPacketInfo const&)

(this=0x7fffc405e9f0, packet=0x7fff9c001340, pacing_info=...) at ../../modules/rtp_rtcp/source/rtp_rtcp_impl2.cc:376

#5 0x0000555556936fd5 in webrtc::PacketRouter::SendPacket(std::unique_ptr >, webrtc::PacedPacketInfo const&) (this=0x7fffc408df18, packet=std::unique_ptr = {...}, cluster_info=...)

at ../../modules/pacing/packet_router.cc:160

#6 0x00005555569347ca in webrtc::PacingController::ProcessPackets() (this=0x7fffc408e598) at ../../modules/pacing/pacing_controller.cc:590

#7 0x000055555692f1e3 in webrtc::PacedSender::Process() (this=0x7fffc408e530) at ../../modules/pacing/paced_sender.cc:183

#8 0x000055555692f6cb in webrtc::PacedSender::ModuleProxy::Process() (this=0x7fffc408e548) at ../../modules/pacing/paced_sender.h:152

#9 0x00005555573aaafe in webrtc::ProcessThreadImpl::Process() (this=0x7fffc408daa0) at ../../modules/utility/source/process_thread_impl.cc:257

#10 0x00005555573a8e9b in webrtc::ProcessThreadImpl::::operator()(void) const (__closure=0x7fffc4095e50)

at ../../modules/utility/source/process_thread_impl.cc:86
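
The two traces above show the two halves of pacing: packets are first enqueued, and a periodic Process() call later takes them off the queue and sends them. The sketch below illustrates the basic idea with a byte budget that grows with elapsed time. It is a deliberately reduced model: `BudgetPacer` is a hypothetical class, and the real pacer handles much more (for example packet priorities) that is not shown here.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <vector>

// Sends queued packets only while a time-based byte budget is positive.
class BudgetPacer {
 public:
  explicit BudgetPacer(std::int64_t bytes_per_ms)
      : bytes_per_ms_(bytes_per_ms) {
    assert(bytes_per_ms > 0);
  }

  void EnqueuePacket(std::size_t size_bytes) { queue_.push_back(size_bytes); }

  // Adds budget for the elapsed time, then sends packets in FIFO order
  // while the budget is positive. Returns the sizes of the packets sent.
  std::vector<std::size_t> Process(std::int64_t elapsed_ms) {
    budget_bytes_ += bytes_per_ms_ * elapsed_ms;
    std::vector<std::size_t> sent;
    while (!queue_.empty() && budget_bytes_ > 0) {
      const std::size_t size = queue_.front();
      queue_.pop_front();
      budget_bytes_ -= static_cast<std::int64_t>(size);
      sent.push_back(size);
    }
    // Do not bank unused budget while idle; otherwise a later burst
    // could leave all at once.
    if (queue_.empty() && budget_bytes_ > 0) {
      budget_bytes_ = 0;
    }
    return sent;
  }

  std::size_t queue_size() const { return queue_.size(); }

 private:
  const std::int64_t bytes_per_ms_;
  std::int64_t budget_bytes_ = 0;
  std::deque<std::size_t> queue_;
};
```

With a rate of 100 bytes per millisecond, a 1200-byte packet sent in one 5 ms tick overdraws the budget, so the next tick sends nothing and the following packets are spread over later ticks instead of leaving in one burst.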

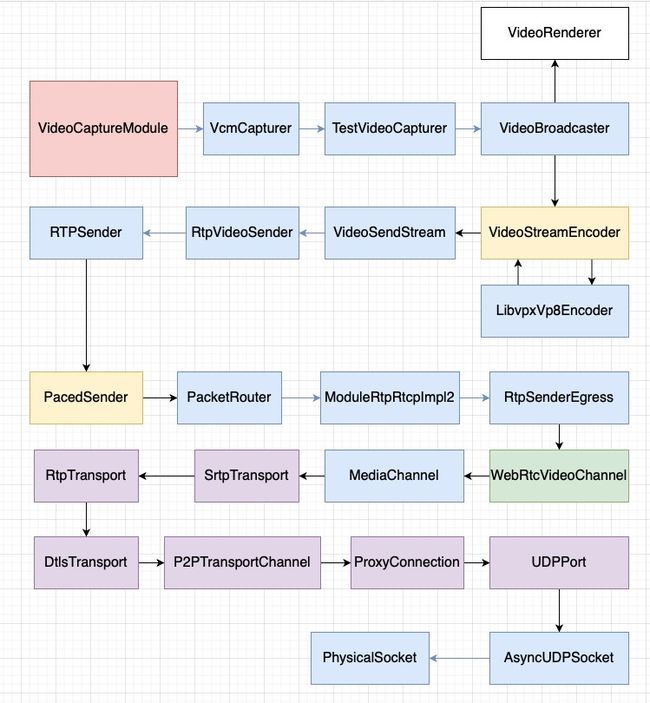

MediaChannel::SendRtp() 最终将 RTP 包发送到网络的过程与音频相同,这里不再赘述。视频的采集、预览、编码、发送完整的处理管线如下图:

黄色的模块是发生了转线程的模块。WebRtcVideoChannel 是子系统的边界。紫色的模块为传输系统的模块。

Receiving video starts with creating the VideoTrack for the remote stream:

#0 Conductor::OnAddTrack(rtc::scoped_refptr, std::vector, std::allocator > > const&)

(this=0x555556203808 >::moved_result()+50>, receiver=..., streams=std::vector of length 8088, capacity 8088 = {...}) at ../../examples/peerconnection/client/conductor.cc:218

#1 0x000055555624fbb4 in webrtc::SdpOfferAnswerHandler::ApplyRemoteDescription(std::unique_ptr >, std::map, std::allocator >, cricket::ContentGroup const*, std::less, std::allocator > >, std::allocator, std::allocator > const, cricket::ContentGroup const*> > > const&)

(this=0x7fffe40052e0, desc=std::unique_ptr = {...}, bundle_groups_by_mid=std::map with 2 elements = {...})

at ../../pc/sdp_offer_answer.cc:1783

#2 0x00005555562546ff in webrtc::SdpOfferAnswerHandler::DoSetRemoteDescription(std::unique_ptr >, rtc::scoped_refptr)

(this=0x7fffe40052e0, desc=std::unique_ptr = {...}, observer=...) at ../../pc/sdp_offer_answer.cc:2196

#3 0x000055555624cbbb in webrtc::SdpOfferAnswerHandler::)>::operator()(std::function)

(__closure=0x7fffeeffc340, operations_chain_callback=...) at ../../pc/sdp_offer_answer.cc:1510

#4 0x00005555562813b9 in rtc::rtc_operations_chain_internal::OperationWithFunctor)> >::Run(void) (this=0x7fffe40185b0)

at ../../rtc_base/operations_chain.h:71

#5 0x0000555556278f74 in rtc::OperationsChain::ChainOperation)> >(webrtc::SdpOfferAnswerHandler::)> &&)

(this=0x7fffe4005600, functor=...) at ../../rtc_base/operations_chain.h:154

#6 0x000055555624ce45 in webrtc::SdpOfferAnswerHandler::SetRemoteDescription(webrtc::SetSessionDescriptionObserver*, webrtc::SessionDescriptionInterface*)

(this=0x7fffe40052e0, observer=0x5555589a1a30, desc_ptr=0x555558a224d0) at ../../pc/sdp_offer_answer.cc:1494

#7 0x00005555561cc02f in webrtc::PeerConnection::SetRemoteDescription(webrtc::SetSessionDescriptionObserver*, webrtc::SessionDescriptionInterface*)

(this=0x7fffe4004510, observer=0x5555589a1a30, desc_ptr=0x555558a224d0) at ../../pc/peer_connection.cc:1362

A renderer is then created for the remote stream's VideoTrack:

#0 GtkMainWnd::VideoRenderer::VideoRenderer(GtkMainWnd*, webrtc::VideoTrackInterface*)

(this=0x55555787a349 , main_wnd=0x21, track_to_render=0x55555588bd43 , std::allocator >::_M_construct(char const*, char const*, std::forward_iterator_tag)+225>)

at ../../examples/peerconnection/client/linux/main_wnd.cc:521

#1 0x00005555557b8d0e in GtkMainWnd::StartRemoteRenderer(webrtc::VideoTrackInterface*) (this=0x7fffffffd310, remote_video=0x7fffe40173d0)

at ../../examples/peerconnection/client/linux/main_wnd.cc:213

#2 0x00005555557a553f in Conductor::UIThreadCallback(int, void*) (this=0x555558807be0, msg_id=4, data=0x7fffe40173d0)

at ../../examples/peerconnection/client/conductor.cc:531

#3 0x00005555557b875e in (anonymous namespace)::HandleUIThreadCallback(gpointer) (data=0x7fffe400ce80)

at ../../examples/peerconnection/client/linux/main_wnd.cc:127

Encoded video packets are received from the network:

#0 cricket::WebRtcVideoChannel::OnPacketReceived(rtc::CopyOnWriteBuffer, long)

(this=0x5555557d7c73 (long)+28>, packet=..., packet_time_us=1640757073828715)

at ../../media/engine/webrtc_video_engine.cc:1713

#1 0x0000555556b736fe in cricket::BaseChannel::OnRtpPacket(webrtc::RtpPacketReceived const&) (this=0x7fffc0095950, parsed_packet=...)

at ../../pc/channel.cc:467

#2 0x00005555568bec90 in webrtc::RtpDemuxer::OnRtpPacket(webrtc::RtpPacketReceived const&) (this=0x7fffe4006988, packet=...) at ../../call/rtp_demuxer.cc:249

#3 0x0000555556bd5d12 in webrtc::RtpTransport::DemuxPacket(rtc::CopyOnWriteBuffer, long) (this=0x7fffe4006800, packet=..., packet_time_us=1640757073828715)

at ../../pc/rtp_transport.cc:194

#4 0x0000555556be0551 in webrtc::SrtpTransport::OnRtpPacketReceived(rtc::CopyOnWriteBuffer, long)

(this=0x7fffe4006800, packet=..., packet_time_us=1640757073828715) at ../../pc/srtp_transport.cc:226

#5 0x0000555556bd6898 in webrtc::RtpTransport::OnReadPacket(rtc::PacketTransportInternal*, char const*, unsigned long, long const&, int)

(this=0x7fffe4006800, transport=0x7fffe4000ef0, data=0x7fffe400bba0 "\260aP\"\245\026\300\033Č\tT\276", , len=258, packet_time_us=@0x7fffeeffbbc8: 1640757073828715, flags=1) at ../../pc/rtp_transport.cc:268

#6 0x0000555556bd7d41 in sigslot::_opaque_connection::emitter(sigslot::_opaque_connection const*, rtc::PacketTransportInternal*, char const*, unsigned long, long const&, int) (self=0x7fffe4004bb0)

at ../../rtc_base/third_party/sigslot/sigslot.h:342

#7 0x0000555557276ec7 in sigslot::_opaque_connection::emit(rtc::PacketTransportInternal*, char const*, unsigned long, long const&, int) const (this=0x7fffe4004bb0) at ../../rtc_base/third_party/sigslot/sigslot.h:331

#8 0x000055555727656d in sigslot::signal_with_thread_policy::emit(rtc::PacketTransportInternal*, char const*, unsigned long, long const&, int)

(this=0x7fffe4000fe8, args#0=0x7fffe4000ef0, args#1=0x7fffe400bba0 "\260aP\"\245\026\300\033Č\tT\276", , args#2=258, args#3=@0x7fffeeffbbc8: 1640757073828715, args#4=1) at ../../rtc_base/third_party/sigslot/sigslot.h:566

#9 0x0000555557275b90 in sigslot::signal_with_thread_policy::operator()(rtc::PacketTransportInternal*, char const*, unsigned long, long const&, int)

(this=0x7fffe4000fe8, args#0=0x7fffe4000ef0, args#1=0x7fffe400bba0 "\260aP\"\245\026\300\033Č\tT\276", , args#2=258, args#3=@0x7fffeeffbbc8: 1640757073828715, args#4=1) at ../../rtc_base/third_party/sigslot/sigslot.h:570

#10 0x000055555727172e in cricket::DtlsTransport::OnReadPacket(rtc::PacketTransportInternal*, char const*, unsigned long, long const&, int)

(this=0x7fffe4000ef0, transport=0x7fffe4003800, data=0x7fffe400bba0 "\260aP\"\245\026\300\033Č\tT\276", , size=258, packet_time_us=@0x7fffeeffbbc8: 1640757073828715, flags=0) at ../../p2p/base/dtls_transport.cc:627

#11 0x0000555557276d64 in sigslot::_opaque_connection::emitter(sigslot::_opaque_connection const*, rtc::PacketTransportInternal*, char const*, unsigned long, long const&, int) (self=0x7fffe40031f0)

at ../../rtc_base/third_party/sigslot/sigslot.h:342

#12 0x0000555557276ec7 in sigslot::_opaque_connection::emit(rtc::PacketTransportInternal*, char const*, unsigned long, long const&, int) const (this=0x7fffe40031f0) at ../../rtc_base/third_party/sigslot/sigslot.h:331

#13 0x000055555727656d in sigslot::signal_with_thread_policy::emit(rtc::PacketTransportInternal*, char const*, unsigned long, long const&, int)

(this=0x7fffe40038f8, args#0=0x7fffe4003800, args#1=0x7fffe400bba0 "\260aP\"\245\026\300\033Č\tT\276", , args#2=258, args#3=@0x7fffeeffbbc8: 1640757073828715, args#4=0) at ../../rtc_base/third_party/sigslot/sigslot.h:566

#14 0x0000555557275b90 in sigslot::signal_with_thread_policy::operator()(rtc::PacketTransportInternal*, char const*, unsigned long, long const&, int)

(this=0x7fffe40038f8, args#0=0x7fffe4003800, args#1=0x7fffe400bba0 "\260aP\"\245\026\300\033Č\tT\276", , args#2=258, args#3=@0x7fffeeffbbc8: 1640757073828715, args#4=0) at ../../rtc_base/third_party/sigslot/sigslot.h:570

#15 0x0000555557295941 in cricket::P2PTransportChannel::OnReadPacket(cricket::Connection*, char const*, unsigned long, long)

(this=0x7fffe4003800, connection=0x7fffe401ce90, data=0x7fffe400bba0 "\260aP\"\245\026\300\033Č\tT\276", , len=258, packet_time_us=1640757073828715) at ../../p2p/base/p2p_transport_channel.cc:2215

#16 0x00005555572a4e14 in sigslot::_opaque_connection::emitter(sigslot::_opaque_connection const*, cricket::Connection*, char const*, unsigned long, long) (self=0x7fff84013a40) at ../../rtc_base/third_party/sigslot/sigslot.h:342

#17 0x000055555730fcb0 in sigslot::_opaque_connection::emit(cricket::Connection*, char const*, unsigned long, long) const (this=0x7fff84013a40) at ../../rtc_base/third_party/sigslot/sigslot.h:331

#18 0x000055555730f612 in sigslot::signal_with_thread_policy::emit(cricket::Connection*, char const*, unsigned long, long)

(this=0x7fffe401cf60, args#0=0x7fffe401ce90, args#1=0x7fffe400bba0 "\260aP\"\245\026\300\033Č\tT\276", , args#2=258, args#3=1640757073828715) at ../../rtc_base/third_party/sigslot/sigslot.h:566

#19 0x000055555730ed67 in sigslot::signal_with_thread_policy::operator()(cricket::Connection*, char const*, unsigned long, long)

(this=0x7fffe401cf60, args#0=0x7fffe401ce90, args#1=0x7fffe400bba0 "\260aP\"\245\026\300\033Č\tT\276", , args#2=258, args#3=1640757073828715) at ../../rtc_base/third_party/sigslot/sigslot.h:570

#20 0x00005555573004c7 in cricket::Connection::OnReadPacket(char const*, unsigned long, long)

(this=0x7fffe401ce90, data=0x7fffe400bba0 "\260aP\"\245\026\300\033Č\tT\276", , size=258, packet_time_us=1640757073828715)

at ../../p2p/base/connection.cc:465

#21 0x00005555573134b8 in cricket::UDPPort::OnReadPacket(rtc::AsyncPacketSocket*, char const*, unsigned long, rtc::SocketAddress const&, long const&)

(this=0x7fffe401bbb0, socket=0x7fffe4007da0, data=0x7fffe400bba0 "\260aP\"\245\026\300\033Č\tT\276", , size=258, remote_addr=..., packet_time_us=@0x7fffeeffc260: 1640757073828715) at ../../p2p/base/stun_port.cc:394

#22 0x0000555557312fa4 in cricket::UDPPort::HandleIncomingPacket(rtc::AsyncPacketSocket*, char const*, unsigned long, rtc::SocketAddress const&, long)

(this=0x7fffe401bbb0, socket=0x7fffe4007da0, data=0x7fffe400bba0 "\260aP\"\245\026\300\033Č\tT\276", , size=258, remote_addr=..., packet_time_us=1640757073828715) at ../../p2p/base/stun_port.cc:335

#23 0x00005555572d854f in cricket::AllocationSequence::OnReadPacket(rtc::AsyncPacketSocket*, char const*, unsigned long, rtc::SocketAddress const&, long const&)

(this=0x7fffe4001ae0, socket=0x7fffe4007da0, data=0x7fffe400bba0 "\260aP\"\245\026\300\033Č\tT\276", , size=258, remote_addr=..., packet_time_us=@0x7fffeeffc600: 1640757073828715) at ../../p2p/client/basic_port_allocator.cc:1641

#24 0x00005555572e857e in sigslot::_opaque_connection::emitter(sigslot::_opaque_connection const*, rtc::AsyncPacketSocket*, char const*, unsigned long, rtc::SocketAddress const&, long const&)

(self=0x7fffe40089a0) at ../../rtc_base/third_party/sigslot/sigslot.h:342

#25 0x00005555572eff15 in sigslot::_opaque_connection::emit(rtc::AsyncPacketSocket*, char const*, unsigned long, rtc::SocketAddress const&, long const&) const (this=0x7fffe40089a0)

at ../../rtc_base/third_party/sigslot/sigslot.h:331

#26 0x00005555572efda3 in sigslot::signal_with_thread_policy::emit(rtc::AsyncPacketSocket*, char const*, unsigned long, rtc::SocketAddress const&, long const&)

(this=0x7fffe4007df0, args#0=0x7fffe4007da0, args#1=0x7fffe400bba0 "\260aP\"\245\026\300\033Č\tT\276", , args#2=258, args#3=..., args#4=@0x7fffeeffc600: 1640757073828715) at ../../rtc_base/third_party/sigslot/sigslot.h:566

#27 0x00005555572efbe0 in sigslot::signal_with_thread_policy::operator()(rtc::AsyncPacketSocket*, char const*, unsigned long, rtc::SocketAddress const&, long const&)

(this=0x7fffe4007df0, args#0=0x7fffe4007da0, args#1=0x7fffe400bba0 "\260aP\"\245\026\300\033Č\tT\276", , args#2=258, args#3=..., args#4=@0x7fffeeffc600: 1640757073828715) at ../../rtc_base/third_party/sigslot/sigslot.h:570

#28 0x00005555573475ff in rtc::AsyncUDPSocket::OnReadEvent(rtc::Socket*) (this=0x7fffe4007da0, socket=0x7fffe4008ef8)

at ../../rtc_base/async_udp_socket.cc:132

#29 0x00005555573477e9 in sigslot::_opaque_connection::emitter(sigslot::_opaque_connection const*, rtc::Socket*)

(self=0x7fffe4008b60) at ../../rtc_base/third_party/sigslot/sigslot.h:342

#30 0x000055555604eb9d in sigslot::_opaque_connection::emit(rtc::Socket*) const (this=0x7fffe4008b60)

at ../../rtc_base/third_party/sigslot/sigslot.h:331

#31 0x000055555604d8e6 in sigslot::signal_with_thread_policy::emit(rtc::Socket*)

(this=0x7fffe4008f00, args#0=0x7fffe4008ef8) at ../../rtc_base/third_party/sigslot/sigslot.h:566

#32 0x000055555604ccb5 in sigslot::signal_with_thread_policy::operator()(rtc::Socket*)

(this=0x7fffe4008f00, args#0=0x7fffe4008ef8) at ../../rtc_base/third_party/sigslot/sigslot.h:570

#33 0x0000555556047f11 in rtc::SocketDispatcher::OnEvent(unsigned int, int) (this=0x7fffe4008ef0, ff=1, err=0) at ../../rtc_base/physical_socket_server.cc:831

#34 0x00005555560492d4 in rtc::ProcessEvents(rtc::Dispatcher*, bool, bool, bool) (dispatcher=0x7fffe4008ef0, readable=true, writable=false, check_error=false)

at ../../rtc_base/physical_socket_server.cc:1222

#35 0x000055555604b232 in rtc::PhysicalSocketServer::WaitEpoll(int) (this=0x7fffe0002cd0, cmsWait=34) at ../../rtc_base/physical_socket_server.cc:1454

#36 0x0000555556049193 in rtc::PhysicalSocketServer::Wait(int, bool) (this=0x7fffe0002cd0, cmsWait=34, process_io=true)

at ../../rtc_base/physical_socket_server.cc:1169

#37 0x00005555560546a0 in rtc::Thread::Get(rtc::Message*, int, bool) (this=0x7fffe0001820, pmsg=0x7fffeeffcab0, cmsWait=-1, process_io=true)

at ../../rtc_base/thread.cc:547
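
Most frames in the trace above are signal emissions: each layer exposes a signal, the layer above connects a handler to it, and a received packet climbs the stack one emission at a time. The sketch below shows that chaining with a tiny signal type built on std::function. `Signal`, `SocketLayer`, and `TransportLayer` are hypothetical names; the sigslot library used by WebRTC has a different API and also manages connection lifetimes and threading policy, which this sketch does not.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// Minimal signal: stores handlers and calls each one on emission.
template <typename... Args>
class Signal {
 public:
  void Connect(std::function<void(Args...)> slot) {
    assert(slot != nullptr);
    slots_.push_back(std::move(slot));
  }
  void operator()(Args... args) const {
    for (const auto& slot : slots_) {
      slot(args...);
    }
  }

 private:
  std::vector<std::function<void(Args...)>> slots_;
};

// Lowest layer: turns a read event into a packet signal.
struct SocketLayer {
  Signal<const std::string&> SignalReadPacket;
  void OnReadEvent(const std::string& datagram) { SignalReadPacket(datagram); }
};

// Middle layer: observes the packet and re-emits it to the layer above.
struct TransportLayer {
  Signal<const std::string&> SignalRtpPacket;
  std::size_t packets_seen = 0;
  void OnReadPacket(const std::string& data) {
    ++packets_seen;
    SignalRtpPacket(data);
  }
};
```

Connecting `SocketLayer::SignalReadPacket` to `TransportLayer::OnReadPacket`, and `TransportLayer::SignalRtpPacket` to a final handler, reproduces in miniature the call chain seen in the trace.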

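A single UDP packet usually cannot hold a full encoded video frame, so the sender has to fragment the frame and send it as multiple RTP packets, and the receiver has to reassemble those RTP packets into one complete encoded frame. Frame assembly and insertion into the frame buffer proceed as follows: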

#0 webrtc::video_coding::FrameBuffer::InsertFrame(std::unique_ptr >)

(this=0x5555557cd6f9 (int, int)+33>, frame=std::unique_ptr = {...}) at ../../modules/video_coding/frame_buffer2.cc:408

#1 0x0000555556a493a5 in webrtc::internal::VideoReceiveStream2::OnCompleteFrame(std::unique_ptr >) (this=0x7fffc00b7b50, frame=std::unique_ptr = {...}) at ../../video/video_receive_stream2.cc:662

#2 0x0000555556a8d355 in webrtc::RtpVideoStreamReceiver2::OnCompleteFrames(absl::InlinedVector >, 3ul, std::allocator > > >)

(this=0x7fffc00b89a8, frames=...) at ../../video/rtp_video_stream_receiver2.cc:868

#3 0x0000555556a8d1e8 in webrtc::RtpVideoStreamReceiver2::OnAssembledFrame(std::unique_ptr >) (this=0x7fffc00b89a8, frame=std::unique_ptr = {...}) at ../../video/rtp_video_stream_receiver2.cc:856

#4 0x0000555556a8cafa in webrtc::RtpVideoStreamReceiver2::OnInsertedPacket(webrtc::video_coding::PacketBuffer::InsertResult)

(this=0x7fffc00b89a8, result=...) at ../../video/rtp_video_stream_receiver2.cc:763

#5 0x0000555556a8b284 in webrtc::RtpVideoStreamReceiver2::OnReceivedPayloadData(rtc::CopyOnWriteBuffer, webrtc::RtpPacketReceived const&, webrtc::RTPVideoHeader const&) (this=0x7fffc00b89a8, codec_payload=..., rtp_packet=..., video=...) at ../../video/rtp_video_stream_receiver2.cc:626

#6 0x0000555556a8ec63 in webrtc::RtpVideoStreamReceiver2::ReceivePacket(webrtc::RtpPacketReceived const&) (this=0x7fffc00b89a8, packet=...)

at ../../video/rtp_video_stream_receiver2.cc:971

#7 0x0000555556a8b73a in webrtc::RtpVideoStreamReceiver2::OnRecoveredPacket(unsigned char const*, unsigned long)

(this=0x7fffc00b89a8, rtp_packet=0x7fffc0120f10 "\220\340%|\242\026\376+z3\252", , rtp_packet_length=506)

at ../../video/rtp_video_stream_receiver2.cc:649

#8 0x00005555574531ee in webrtc::UlpfecReceiverImpl::ProcessReceivedFec() (this=0x7fffc00ba4f0) at ../../modules/rtp_rtcp/source/ulpfec_receiver_impl.cc:181

#9 0x0000555556a8eddc in webrtc::RtpVideoStreamReceiver2::ParseAndHandleEncapsulatingHeader(webrtc::RtpPacketReceived const&)

(this=0x7fffc00b89a8, packet=...) at ../../video/rtp_video_stream_receiver2.cc:989

#10 0x0000555556a8e978 in webrtc::RtpVideoStreamReceiver2::ReceivePacket(webrtc::RtpPacketReceived const&) (this=0x7fffc00b89a8, packet=...)

at ../../video/rtp_video_stream_receiver2.cc:956

#11 0x0000555556a8b962 in webrtc::RtpVideoStreamReceiver2::OnRtpPacket(webrtc::RtpPacketReceived const&) (this=0x7fffc00b89a8, packet=...)

at ../../video/rtp_video_stream_receiver2.cc:660

#12 0x00005555574bdd45 in webrtc::RtxReceiveStream::OnRtpPacket(webrtc::RtpPacketReceived const&) (this=0x7fffc00d40d0, rtx_packet=...)

at ../../call/rtx_receive_stream.cc:77

#13 0x00005555568bec90 in webrtc::RtpDemuxer::OnRtpPacket(webrtc::RtpPacketReceived const&) (this=0x7fffc00904b8, packet=...) at ../../call/rtp_demuxer.cc:249

#14 0x00005555568bae55 in webrtc::RtpStreamReceiverController::OnRtpPacket(webrtc::RtpPacketReceived const&) (this=0x7fffc0090458, packet=...)

at ../../call/rtp_stream_receiver_controller.cc:52

#15 0x000055555687916d in webrtc::internal::Call::DeliverRtp(webrtc::MediaType, rtc::CopyOnWriteBuffer, long)

(this=0x7fffc0090090, media_type=webrtc::MediaType::VIDEO, packet=..., packet_time_us=1640756514783548) at ../../call/call.cc:1596

#16 0x0000555556879641 in webrtc::internal::Call::DeliverPacket(webrtc::MediaType, rtc::CopyOnWriteBuffer, long)

(this=0x7fffc0090090, media_type=webrtc::MediaType::VIDEO, packet=..., packet_time_us=1640756514783548) at ../../call/call.cc:1618

#17 0x00005555560885e2 in cricket::WebRtcVideoChannel::::operator()(void) const (__closure=0x7fffe4070938)

at ../../media/engine/webrtc_video_engine.cc:1724

#18 0x00005555560a368e in webrtc::webrtc_new_closure_impl::SafetyClosureTask >::Run(void) (this=0x7fffe4070930) at ../../rtc_base/task_utils/to_queued_task.h:50
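
The backtrace above shows a received packet being routed by `RtpDemuxer` to an `RtxReceiveStream`, which hands the unwrapped media packet on to `RtpVideoStreamReceiver2`. The following is only a minimal sketch of that routing idea and not the WebRTC implementation: `Packet`, `PacketSink`, `Demuxer`, `RtxSink`, and `RecordingSink` are hypothetical names invented for illustration, and routing is keyed on SSRC alone. It does rely on one fact from RFC 4588: an RTX payload starts with the two-byte original sequence number.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <vector>

// Hypothetical, simplified packet; not webrtc::RtpPacketReceived.
struct Packet {
  uint32_t ssrc;
  uint16_t sequence_number;
  std::vector<uint8_t> payload;
};

// Hypothetical sink interface, loosely modelled on the OnRtpPacket() chain.
class PacketSink {
 public:
  virtual ~PacketSink() = default;
  virtual void OnPacket(const Packet& packet) = 0;
};

// Routes each packet to the sink registered for its SSRC.
class Demuxer {
 public:
  void AddSink(uint32_t ssrc, PacketSink* sink) { sinks_[ssrc] = sink; }

  // Returns false when no sink is registered for the packet's SSRC.
  bool OnPacket(const Packet& packet) {
    auto it = sinks_.find(packet.ssrc);
    if (it == sinks_.end()) return false;
    it->second->OnPacket(packet);
    return true;
  }

 private:
  std::map<uint32_t, PacketSink*> sinks_;
};

// Unwraps a retransmission packet: the first two payload bytes carry the
// original sequence number (RFC 4588), the rest is the original payload.
class RtxSink : public PacketSink {
 public:
  RtxSink(uint32_t media_ssrc, PacketSink* media_sink)
      : media_ssrc_(media_ssrc), media_sink_(media_sink) {
    assert(media_sink_ != nullptr);
  }

  void OnPacket(const Packet& rtx) override {
    if (rtx.payload.size() < 2) return;  // Too short to hold the OSN.
    Packet media;
    media.ssrc = media_ssrc_;
    media.sequence_number =
        static_cast<uint16_t>((rtx.payload[0] << 8) | rtx.payload[1]);
    media.payload.assign(rtx.payload.begin() + 2, rtx.payload.end());
    media_sink_->OnPacket(media);
  }

 private:
  const uint32_t media_ssrc_;
  PacketSink* const media_sink_;
};

// Stand-in for the video stream receiver: just records what it gets.
class RecordingSink : public PacketSink {
 public:
  void OnPacket(const Packet& packet) override { received.push_back(packet); }
  std::vector<Packet> received;
};
```

To use it, register one `RecordingSink` for the media SSRC and an `RtxSink` wrapping it for the RTX SSRC. A packet arriving on the RTX SSRC then reaches the recording sink with the media SSRC and its original sequence number restored.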

Decoding a video frame:

#0 webrtc::IncomingVideoStream::OnFrame(webrtc::VideoFrame const&)

(this=0x5555557d9d0d >(std::tuple >&)+28>, video_frame=...) at ../../common_video/incoming_video_stream.cc:37

#1 0x0000555556a65b0f in webrtc::internal::VideoStreamDecoder::FrameToRender(webrtc::VideoFrame&, absl::optional, int, webrtc::VideoContentType) (this=0x7fffc00d24d0, video_frame=..., qp=..., decode_time_ms=2, content_type=(unknown: 2)) at ../../video/video_stream_decoder2.cc:51

#2 0x00005555574f5e1d in webrtc::VCMDecodedFrameCallback::Decoded(webrtc::VideoFrame&, absl::optional, absl::optional)

(this=0x7fffc00b8728, decodedImage=..., decode_time_ms=..., qp=...) at ../../modules/video_coding/generic_decoder.cc:201

#3 0x0000555556ebb0e7 in webrtc::LibvpxVp8Decoder::ReturnFrame(vpx_image const*, unsigned int, int, webrtc::ColorSpace const*)

(this=0x7fffc0091720, img=0x7fff980013a0, timestamp=2718512881, qp=78, explicit_color_space=0x0)

at ../../modules/video_coding/codecs/vp8/libvpx_vp8_decoder.cc:367

#4 0x0000555556ebaab6 in webrtc::LibvpxVp8Decoder::Decode(webrtc::EncodedImage const&, bool, long)

(this=0x7fffc0091720, input_image=..., missing_frames=false) at ../../modules/video_coding/codecs/vp8/libvpx_vp8_decoder.cc:287

#5 0x00005555574f6806 in webrtc::VCMGenericDecoder::Decode(webrtc::VCMEncodedFrame const&, webrtc::Timestamp) (this=0x7fffc00b88f8, frame=..., now=...)

at ../../modules/video_coding/generic_decoder.cc:283

#6 0x00005555574f0284 in webrtc::VideoReceiver2::Decode(webrtc::VCMEncodedFrame const*) (this=0x7fffc00b8668, frame=0x7fffc0109488)

at ../../modules/video_coding/video_receiver2.cc:109

#7 0x0000555556a4b41f in webrtc::internal::VideoReceiveStream2::DecodeAndMaybeDispatchEncodedFrame(std::unique_ptr >) (this=0x7fffc00b7b50, frame=std::unique_ptr = {...}) at ../../video/video_receive_stream2.cc:846

#8 0x0000555556a4afbd in webrtc::internal::VideoReceiveStream2::HandleEncodedFrame(std::unique_ptr >) (this=0x7fffc00b7b50, frame=std::unique_ptr = {...}) at ../../video/video_receive_stream2.cc:780

#9 0x0000555556a4a712 in webrtc::internal::VideoReceiveStream2:: >, webrtc::internal::(anonymous namespace)::ReturnReason)>::operator()(std::unique_ptr >, webrtc::internal::(anonymous namespace)::ReturnReason) const

(__closure=0x7fffd986a7c0, frame=std::unique_ptr = {...}, res=webrtc::video_coding::FrameBuffer::kFrameFound)

at ../../video/video_receive_stream2.cc:733

#10 0x0000555556a4d053 in std::_Function_handler >, webrtc::video_coding::FrameBuffer::ReturnReason), webrtc::internal::VideoReceiveStream2::StartNextDecode():: >, webrtc::internal::(anonymous namespace)::ReturnReason)> >::_M_invoke(const std::_Any_data &, std::unique_ptr > &&, webrtc::video_coding::FrameBuffer::ReturnReason &&)

(__functor=..., __args#0=..., __args#1=@0x7fffd986a6ec: webrtc::video_coding::FrameBuffer::kFrameFound) at /usr/include/c++/9/bits/std_function.h:300

#11 0x00005555574c62b8 in std::function >, webrtc::video_coding::FrameBuffer::ReturnReason)>::operator()(std::unique_ptr >, webrtc::video_coding::FrameBuffer::ReturnReason) const (this=0x7fffd986a7c0, __args#0=std::unique_ptr = {...}, __args#1=webrtc::video_coding::FrameBuffer::kFrameFound)

at /usr/include/c++/9/bits/std_function.h:688

#12 0x00005555574bec04 in webrtc::video_coding::FrameBuffer::::operator()(void) const (__closure=0x7fff98134698)

at ../../modules/video_coding/frame_buffer2.cc:136

#13 0x00005555574c5bbb in webrtc::webrtc_repeating_task_impl::RepeatingTaskImpl >::RunClosure(void) (this=0x7fff98134670) at ../../rtc_base/task_utils/repeating_task.h:79
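
In the decode backtrace above, `LibvpxVp8Decoder::Decode()` does not return the picture to its caller. Instead it reports the decoded frame through a registered callback (`VCMDecodedFrameCallback::Decoded()`), which passes it on toward rendering. The sketch below illustrates only that callback pattern, under simplifying assumptions: `EncodedFrame`, `DecodedFrame`, `DecodedFrameCallback`, `FakeDecoder`, and `RenderQueue` are hypothetical names, and the "decoder" performs no real decoding and always reports a 640x480 frame.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical, simplified frame types; not the real WebRTC classes.
struct EncodedFrame {
  uint32_t rtp_timestamp;
  std::vector<uint8_t> data;
};

struct DecodedFrame {
  uint32_t rtp_timestamp;
  int width;
  int height;
};

// Hypothetical callback interface: the decoder reports output here.
class DecodedFrameCallback {
 public:
  virtual ~DecodedFrameCallback() = default;
  virtual void Decoded(const DecodedFrame& frame) = 0;
};

// Stand-in for a codec wrapper. It does no real decoding: every non-empty
// input produces a fixed-size frame carrying the input's RTP timestamp.
class FakeDecoder {
 public:
  void RegisterCallback(DecodedFrameCallback* callback) {
    assert(callback != nullptr);
    callback_ = callback;
  }

  // Returns false if no callback is registered or the input is empty.
  bool Decode(const EncodedFrame& frame) {
    if (callback_ == nullptr || frame.data.empty()) return false;
    callback_->Decoded(DecodedFrame{frame.rtp_timestamp, 640, 480});
    return true;
  }

 private:
  DecodedFrameCallback* callback_ = nullptr;
};

// Stand-in for the stage that receives decoded frames before rendering.
class RenderQueue : public DecodedFrameCallback {
 public:
  void Decoded(const DecodedFrame& frame) override { frames.push_back(frame); }
  std::vector<DecodedFrame> frames;
};
```

The callback runs synchronously inside `Decode()`, which matches the nesting of frames #0 to #4 in the backtrace. In this sketch the RTP timestamp is the only thing linking an output frame to its input.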

Rendering the video stream:

#0 GtkMainWnd::VideoRenderer::OnFrame(webrtc::VideoFrame const&) (this=0x7fffe00176e0, video_frame=...)

at ../../examples/peerconnection/client/linux/main_wnd.cc:548

#1 0x0000555556037b5f in rtc::VideoBroadcaster::OnFrame(webrtc::VideoFrame const&) (this=0x7fffe00176b8, frame=...)

at ../../media/base/video_broadcaster.cc:90

#2 0x0000555556096029 in cricket::WebRtcVideoChannel::WebRtcVideoReceiveStream::OnFrame(webrtc::VideoFrame const&) (this=0x7fffc00b5200, frame=...)

at ../../media/engine/webrtc_video_engine.cc:3105

#3 0x0000555556a483b3 in webrtc::internal::VideoReceiveStream2::OnFrame(webrtc::VideoFrame const&) (this=0x7fffc00b7b50, video_frame=...)

at ../../video/video_receive_stream2.cc:591

#4 0x000055555737c24b in webrtc::IncomingVideoStream::Dequeue() (this=0x7fffc00081c0) at ../../common_video/incoming_video_stream.cc:56

#5 0x000055555737bc92 in webrtc::IncomingVideoStream::::operator()(void) (__closure=0x7fff981350d8)

at ../../common_video/incoming_video_stream.cc:47

#6 0x000055555737d104 in webrtc::webrtc_new_closure_impl::ClosureTask >::Run(void) (this=0x7fff981350d0) at ../../rtc_base/task_utils/to_queued_task.h:32
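
The rendering backtrace starts from a queued closure task: `IncomingVideoStream` runs `Dequeue()` on a task queue, and the frame then passes through `VideoBroadcaster::OnFrame()`, which fans it out to the registered sinks such as `GtkMainWnd::VideoRenderer`. The sketch below illustrates this "queue, then broadcast" shape under simplifying assumptions. `Frame`, `FrameSink`, `Broadcaster`, `ManualTaskQueue`, `QueuedStream`, and `CountingRenderer` are hypothetical names, and the task queue is drained by hand on the calling thread rather than by a dedicated thread.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical, minimal frame; not webrtc::VideoFrame.
struct Frame {
  int id;
};

class FrameSink {
 public:
  virtual ~FrameSink() = default;
  virtual void OnFrame(const Frame& frame) = 0;
};

// Fans each frame out to every registered sink.
class Broadcaster : public FrameSink {
 public:
  void AddSink(FrameSink* sink) { sinks_.push_back(sink); }
  void OnFrame(const Frame& frame) override {
    for (FrameSink* sink : sinks_) sink->OnFrame(frame);
  }

 private:
  std::vector<FrameSink*> sinks_;
};

// Single-threaded stand-in for a task queue: tasks run only when
// RunPending() is called.
class ManualTaskQueue {
 public:
  void Post(std::function<void()> task) { tasks_.push_back(std::move(task)); }
  void RunPending() {
    while (!tasks_.empty()) {
      std::function<void()> task = std::move(tasks_.front());
      tasks_.pop_front();
      task();
    }
  }

 private:
  std::deque<std::function<void()>> tasks_;
};

// Decouples the producer from rendering: OnFrame() only posts a task;
// delivery to the downstream sink happens later, when the queue runs.
class QueuedStream : public FrameSink {
 public:
  QueuedStream(ManualTaskQueue* queue, FrameSink* downstream)
      : queue_(queue), downstream_(downstream) {
    assert(queue_ != nullptr && downstream_ != nullptr);
  }
  void OnFrame(const Frame& frame) override {
    Frame copy = frame;  // Copy so the task owns its own frame.
    queue_->Post([this, copy] { downstream_->OnFrame(copy); });
  }

 private:
  ManualTaskQueue* const queue_;
  FrameSink* const downstream_;
};

// Stand-in for a renderer: records the ids of the frames it was given.
class CountingRenderer : public FrameSink {
 public:
  void OnFrame(const Frame& frame) override { rendered.push_back(frame.id); }
  std::vector<int> rendered;
};
```

Because delivery is deferred until `RunPending()`, the producer never blocks on a slow renderer. That appears to be the purpose of the queue hop visible in frames #4 to #6 of the backtrace.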