Relearning PyTorch

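Contents

- Data visualization

- Switching devices (device)

- Defining the model class

- Printing the parameter sizes of each layer

- Automatic differentiation

- Computing gradients

- Disabling gradient tracking

- Optimizing model parameters

- Saving a model

- Loading a model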

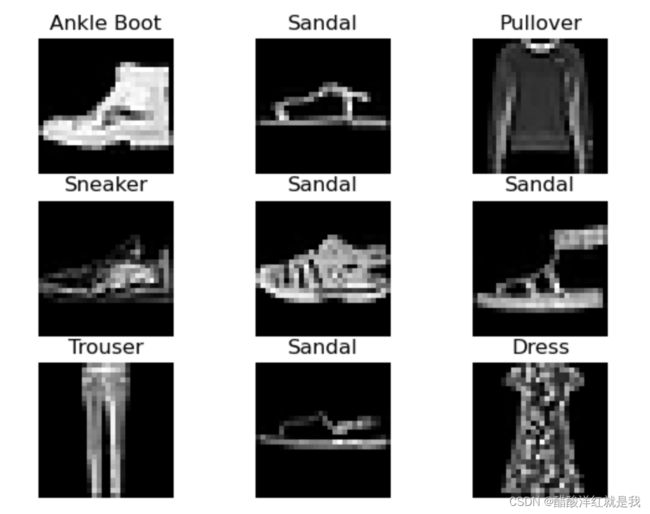

Data visualization

import torch
from torch.utils.data import Dataset
from torchvision import datasets
from torchvision.transforms import ToTensor
import matplotlib.pyplot as plt

training_data = datasets.FashionMNIST(
    root="data1",
    train=True,
    download=True,
    transform=ToTensor()
)

test_data = datasets.FashionMNIST(
    root="data1",
    train=False,
    download=True,
    transform=ToTensor()
)

labels_map = {
    0: "T-Shirt",
    1: "Trouser",
    2: "Pullover",
    3: "Dress",
    4: "Coat",
    5: "Sandal",
    6: "Shirt",
    7: "Sneaker",
    8: "Bag",
    9: "Ankle Boot",
}

plt.figure(figsize=(8, 8))
cols, rows = 3, 3
for i in range(1, cols * rows + 1):
    sample_idx = torch.randint(len(training_data), size=(1,)).item()  # pick a random index into training_data
    img, label = training_data[sample_idx]
    plt.subplot(rows, cols, i)  # subplot indices start at 1, not 0
    plt.title(labels_map[label])
    plt.axis("off")
    plt.imshow(img.squeeze(), cmap="gray")  # squeeze() turns the (1, 28, 28) tensor into a 28x28 image
plt.show()  # show the whole 3x3 grid once, after the loop
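The img.squeeze() call matters because ToTensor() returns images in (channels, height, width) layout, while plt.imshow expects a 2-D array for a grayscale image. A minimal sketch of the shapes involved, using a random tensor as a stand-in for one FashionMNIST sample so nothing has to be downloaded (the stand-in tensor is an assumption, not real data):

```python
import torch

# Stand-in for one FashionMNIST sample: ToTensor() yields a float tensor of shape (C, H, W)
img = torch.rand(1, 28, 28)

# squeeze() drops the size-1 channel dimension, leaving the (H, W) grid that imshow can draw
flat_img = img.squeeze()
print(img.shape)       # torch.Size([1, 28, 28])
print(flat_img.shape)  # torch.Size([28, 28])

# Same random-index trick as above; 60000 is the size of the FashionMNIST training split
sample_idx = torch.randint(60000, size=(1,)).item()  # .item() converts the 1-element tensor to a Python int
print(0 <= sample_idx < 60000)  # True
```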

Switching devices (device)

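import torch

# Prefer an NVIDIA GPU (CUDA), then an Apple-silicon GPU (MPS), otherwise fall back to the CPU
device = (
    "cuda"
    if torch.cuda.is_available()
    else "mps"
    if torch.backends.mps.is_available()
    else "cpu"
)
print(f"Using {device} device")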
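Choosing a device only takes effect once tensors (and models) are actually placed there, and all tensors in one operation must live on the same device. A minimal sketch using Tensor.to and the device= argument, with the same fallback order as above:

```python
import torch

device = (
    "cuda"
    if torch.cuda.is_available()
    else "mps"
    if torch.backends.mps.is_available()
    else "cpu"
)

# Tensors are created on the CPU by default; .to(device) returns a tensor on the target device
x = torch.ones(2, 3).to(device)
# Alternatively, allocate directly on the device
y = torch.zeros(2, 3, device=device)

z = x + y  # both operands are on the same device, so this works
print(z.device)
```

The same .to(device) call works on an nn.Module, which moves all of its parameters at once.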

Defining the model class

from torch import nn

class NeuralNetwork(nn.Module):
    def __init__(self):
        super().__init__()
        self.flatten = nn.Flatten()  # (N, 28, 28) -> (N, 784)
        self.linear_relu_stack = nn.Sequential(
            nn.Linear(28*28, 512),
            nn.ReLU(),
            nn.Linear(512, 512),
            nn.ReLU(),
            nn.Linear(512, 10),  # one raw score (logit) per class
        )

    def forward(self, x):
        x = self.flatten(x)
        logits = self.linear_relu_stack(x)
        return logits
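A model is used by calling the instance on an input, which runs forward through nn.Module.__call__ (forward should not be called directly). A sketch of one forward pass, with a random 28x28 tensor standing in for an image (the random input is an assumption for illustration):

```python
import torch
from torch import nn

class NeuralNetwork(nn.Module):
    def __init__(self):
        super().__init__()
        self.flatten = nn.Flatten()
        self.linear_relu_stack = nn.Sequential(
            nn.Linear(28 * 28, 512),
            nn.ReLU(),
            nn.Linear(512, 512),
            nn.ReLU(),
            nn.Linear(512, 10),
        )

    def forward(self, x):
        x = self.flatten(x)
        return self.linear_relu_stack(x)

model = NeuralNetwork()
X = torch.rand(1, 28, 28)                 # a batch containing one fake 28x28 image
logits = model(X)                         # shape (1, 10): one raw score per class
pred_probab = nn.Softmax(dim=1)(logits)   # convert scores to probabilities along the class dimension
y_pred = pred_probab.argmax(1)            # index of the most likely class
print(logits.shape)                       # torch.Size([1, 10])
print(f"Predicted class: {y_pred.item()}")
```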

Printing the parameter sizes of each layer

model = NeuralNetwork().to(device)  # instantiate the class defined above on the chosen device
print(f"Model structure: {model}\n\n")

for name, param in model.named_parameters():
    print(f"Layer: {name} | Size: {param.size()} | Values : {param[:2]} \n")
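The sizes printed above follow nn.Linear's convention: a weight has shape (out_features, in_features), so the first layer's weight is 512x784. Summing numel() over all parameters gives the model size: 784*512+512 + 512*512+512 + 512*10+10 = 669,706. A sketch that rebuilds the same layers as a single nn.Sequential, purely so the block is self-contained (layer names therefore differ from the class above):

```python
import torch
from torch import nn

# Same layers as NeuralNetwork, written as one nn.Sequential for self-containment
model = nn.Sequential(
    nn.Flatten(),
    nn.Linear(28 * 28, 512), nn.ReLU(),
    nn.Linear(512, 512), nn.ReLU(),
    nn.Linear(512, 10),
)

for name, param in model.named_parameters():
    print(f"{name}: {tuple(param.shape)} -> {param.numel()} values")

total = sum(p.numel() for p in model.parameters())
trainable = sum(p.numel() for p in model.parameters() if p.requires_grad)
print(total)  # 669706
```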

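Automatic differentiation

See the article on Variable for details.

Parameters that are to be optimized must be created with requires_grad=True; autograd then computes the gradient of the loss with respect to each of them.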

import torch

x = torch.ones(5)  # input tensor
y = torch.zeros(3)  # expected output
w = torch.randn(5, 3, requires_grad=True)
b = torch.randn(3, requires_grad=True)
z = torch.matmul(x, w)+b
loss = torch.nn.functional.binary_cross_entropy_with_logits(z, y)
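These operations build a computational graph: every tensor derived from a tensor with requires_grad=True records the operation that produced it in grad_fn, and backward() later walks these nodes in reverse. A short sketch inspecting the graph for the same computation (the exact printed node names vary between PyTorch versions, so they are not asserted):

```python
import torch

x = torch.ones(5)
y = torch.zeros(3)
w = torch.randn(5, 3, requires_grad=True)
b = torch.randn(3, requires_grad=True)
z = torch.matmul(x, w) + b
loss = torch.nn.functional.binary_cross_entropy_with_logits(z, y)

# Leaf tensors created directly by the user have no grad_fn; derived tensors do
print(w.grad_fn)     # None
print(z.grad_fn)     # a backward node for the addition
print(loss.grad_fn)  # a backward node for the loss function

# Inputs that were not marked with requires_grad are not tracked
print(x.requires_grad, z.requires_grad)  # False True
```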

Computing gradients

Compute the derivatives of the loss with respect to w and b:

loss.backward()
print(w.grad)
print(b.grad)
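The values autograd fills in can be checked by hand. With the default mean reduction over n = 3 outputs, binary_cross_entropy_with_logits gives dloss/dz_j = (sigmoid(z_j) - y_j) / 3. Because z = xw + b, dloss/db = dloss/dz and dloss/dw_kj = x_k * dloss/dz_j, i.e. w.grad is the outer product of x and dloss/dz. A sketch of that check (the fixed seed is only for reproducibility):

```python
import torch

torch.manual_seed(0)
x = torch.ones(5)
y = torch.zeros(3)
w = torch.randn(5, 3, requires_grad=True)
b = torch.randn(3, requires_grad=True)
z = torch.matmul(x, w) + b
loss = torch.nn.functional.binary_cross_entropy_with_logits(z, y)
loss.backward()

# Hand-derived gradient of the mean-reduced loss with respect to z
dz = (torch.sigmoid(z.detach()) - y) / z.numel()

print(torch.allclose(b.grad, dz))                  # True
print(torch.allclose(w.grad, torch.outer(x, dz)))  # True
```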

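Disabling gradient tracking

Use this after training, when testing the model, i.e. whenever the parameters should not be updated.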

z = torch.matmul(x, w)+b
print(z.requires_grad)  # True

with torch.no_grad():
    z = torch.matmul(x, w)+b
print(z.requires_grad)  # False
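Besides the torch.no_grad() block, Tensor.detach() returns a tensor with the same values that is cut off from the graph. This is handy for a single tensor (for example, a value you only want to log) rather than a whole block of code. A minimal sketch:

```python
import torch

x = torch.ones(5)
w = torch.randn(5, 3, requires_grad=True)
b = torch.randn(3, requires_grad=True)

z = torch.matmul(x, w) + b
z_det = z.detach()  # same data, but no longer tracked by autograd

print(z.requires_grad)        # True
print(z_det.requires_grad)    # False
print(torch.equal(z, z_det))  # True
```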

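Optimizing model parameters

- Call optimizer.zero_grad() to reset the gradients of the model parameters. Gradients accumulate by default, so to avoid counting them twice we explicitly zero them in every iteration.

- Call loss.backward() to backpropagate the prediction loss. PyTorch stores the gradient of the loss with respect to each parameter in that parameter's .grad attribute.

- Once we have the gradients, call optimizer.step() to adjust the parameters using the gradients collected during the backward pass.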

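optimizer.zero_grad()  # clear gradients left over from the previous iteration
loss.backward()        # backpropagate: fill each parameter's .grad
optimizer.step()       # update the parameters using those gradients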
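To see where the three calls sit, here is a minimal training loop on synthetic linear-regression data. The data, nn.Linear(4, 1), nn.MSELoss and SGD with lr=0.1 are all assumptions chosen so the sketch runs in about a second; they are not part of the original notes.

```python
import torch
from torch import nn

torch.manual_seed(0)
X = torch.randn(64, 4)                               # 64 synthetic samples, 4 features
true_w = torch.tensor([[1.0], [-2.0], [0.5], [3.0]])
y = X @ true_w                                       # noiseless linear targets

model = nn.Linear(4, 1)
loss_fn = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

losses = []
for epoch in range(100):
    pred = model(X)
    loss = loss_fn(pred, y)
    optimizer.zero_grad()  # reset accumulated gradients
    loss.backward()        # compute d(loss)/d(parameter) for every parameter
    optimizer.step()       # apply the SGD update
    losses.append(loss.item())

print(f"first loss {losses[0]:.4f}, last loss {losses[-1]:.6f}")
```

Leaving out optimizer.zero_grad() would make each step use the sum of all previous gradients, which is why it is called every iteration.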

Saving a model

import torch
import torchvision.models as models

model = models.vgg16(weights='IMAGENET1K_V1')
torch.save(model.state_dict(), 'model_weights.pth')  # save only the learned parameters
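state_dict() is an ordered mapping from parameter (and buffer) names to tensors, and that mapping is what torch.save writes above. A quick look on a small stand-in model instead of VGG16 (the tiny model is an assumption so nothing is downloaded); note that layers without parameters, such as ReLU, contribute no entries:

```python
import torch
from torch import nn

model = nn.Sequential(nn.Linear(4, 3), nn.ReLU(), nn.Linear(3, 2))
sd = model.state_dict()

for name, tensor in sd.items():
    print(name, tuple(tensor.shape))
# 0.weight (3, 4)
# 0.bias (3,)
# 2.weight (2, 3)
# 2.bias (2,)
```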

Loading a model

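model = models.vgg16()  # we do not specify ``weights``, i.e. create an untrained model
model.load_state_dict(torch.load('model_weights.pth', weights_only=True))  # load tensors only, no arbitrary pickled objects
model.eval()  # switch dropout/batch-norm layers to inference mode before testing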
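load_state_dict only works when the receiving model has the same architecture, because the keys and shapes must match. A round-trip sketch on a small stand-in model saved to a temporary directory (the model and file location are assumptions; weights_only=True needs PyTorch 1.13 or newer). After eval(), dropout is disabled, so the original and restored models give identical outputs:

```python
import os
import tempfile

import torch
from torch import nn

def make_model():
    # Hypothetical small architecture; must be identical on save and load
    return nn.Sequential(nn.Linear(4, 3), nn.Dropout(p=0.5), nn.Linear(3, 2))

torch.manual_seed(0)
model = make_model()
path = os.path.join(tempfile.mkdtemp(), "model_weights.pth")
torch.save(model.state_dict(), path)

restored = make_model()  # fresh, randomly initialised copy of the architecture
restored.load_state_dict(torch.load(path, weights_only=True))
restored.eval()
model.eval()

x = torch.randn(5, 4)
same = torch.equal(model(x), restored(x))
print(same)  # True
```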