Hadoop 2.7.7 Fully Distributed Cluster + Hive 2.3.4

Component versions

| Component | Version | Download |
|---|---|---|
| Hadoop | 2.7.7 | https://archive.apache.org/dist/hadoop/common/hadoop-2.7.7/hadoop-2.7.7.tar.gz |
| JDK | 1.8 | https://www.oracle.com/java/technologies/javase/javase-jdk8-downloads.html |
| Flink | 1.10.2 | https://archive.apache.org/dist/flink/flink-1.10.2/flink-1.10.2-bin-scala_2.11.tgz |
| MySQL | 5.7 | https://downloads.mysql.com/archives/get/p/23/file/mysql-5.7.18-linux-glibc2.5-x86_64.tar.gz |
| Hive | 2.3.4 | https://archive.apache.org/dist/hive/hive-2.3.4/apache-hive-2.3.4-bin.tar.gz |
| Spark | 2.1.1 | https://archive.apache.org/dist/spark/spark-2.1.1/spark-2.1.1-bin-hadoop2.7.tgz |
| Kafka | 2.0.0 | https://archive.apache.org/dist/kafka/2.0.0/kafka_2.11-2.0.0.tgz |
| Flume | 1.7.0 | https://archive.apache.org/dist/flume/1.7.0/apache-flume-1.7.0-bin.tar.gz |
| Redis | 4.0.10 | https://download.redis.io/releases/redis-4.0.10.tar.gz |
| flink-shaded-hadoop-2-uber (Flink on YARN) | 2.7.5-10.0 | https://repo.maven.apache.org/maven2/org/apache/flink/flink-shaded-hadoop-2-uber/2.7.5-10.0/flink-shaded-hadoop-2-uber-2.7.5-10.0.jar |

**Machine environment**

| IP | Hostname | Password |
|---|---|---|
| 192.168.222.201 | master | password |
| 192.168.222.202 | slave1 | password |
| 192.168.222.203 | slave2 | password |

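1. Basic machine environment

- Stop the firewall and keep it from starting at boot (do this on all three virtual machines)

- Configure the hosts file (so the three machines can resolve each other's hostnames)

- Configure passwordless SSH login

- Synchronize the clocks: configure NTP, or adjust the time manually with date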
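The first three steps above can be scripted roughly as follows. This is a minimal sketch, assuming CentOS 7 (firewalld managed by systemd) and root logins; the helper names `print_hosts` and `setup_base` are hypothetical, and time synchronization is left out.

```shell
#!/usr/bin/env bash
# Sketch of the base environment setup, assuming CentOS 7 with firewalld.
# Hypothetical helpers; run setup_base on each of the three machines.

# Print the /etc/hosts entries for the cluster (IPs from the machine table).
print_hosts() {
  printf '%s %s\n' \
    192.168.222.201 master \
    192.168.222.202 slave1 \
    192.168.222.203 slave2
}

setup_base() {
  # Stop the firewall now and keep it from starting at boot.
  systemctl stop firewalld
  systemctl disable firewalld

  # Append the cluster hosts unless a "master" entry already exists.
  grep -q ' master$' /etc/hosts || print_hosts >> /etc/hosts

  # Create an RSA key pair without a passphrase, only if none exists yet.
  [ -f /root/.ssh/id_rsa ] || ssh-keygen -t rsa -N '' -f /root/.ssh/id_rsa

  # Install the public key on every node, including this one
  # (asks for each node's password once).
  local host
  for host in master slave1 slave2; do
    ssh-copy-id "root@$host"
  done
}
```

Afterwards, `ssh slave1 hostname` from master should print `slave1` without asking for a password.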

2. Install the JDK

2.1 Extract the JDK archive

[root@master ~]#

Command:

tar -xzf /chinaskills/jdk-8u201-linux-x64.tar.gz -C /usr/local/src/

2.2 Rename the directory to java

[root@master ~]#

Command:

mv /usr/local/src/jdk1.8.0_201 /usr/local/src/java

2.3 Configure the Java environment variables (applies to the current user only)

[root@master ~]#

Command:

vi /root/.bash_profile

Content to append:

export JAVA_HOME=/usr/local/src/java

export PATH=$PATH:$JAVA_HOME/bin

2.4 Load the environment variables

[root@master ~]#

Command:

source /root/.bash_profile

2.5 Check the Java version

[root@master ~]#

Command:

java -version

Output:

java version "1.8.0_201"

Java(TM) SE Runtime Environment (build 1.8.0_201-b09)

Java HotSpot(TM) 64-Bit Server VM (build 25.201-b09, mixed mode)

2.6 Send the JDK and .bash_profile to slave1 and slave2

[root@master ~]#

Command (the trailing `&` runs each task in the background):

scp -r /usr/local/src/java slave1:/usr/local/src/ &

scp -r /usr/local/src/java slave2:/usr/local/src/ &

scp /root/.bash_profile slave1:/root/ &

scp /root/.bash_profile slave2:/root/ &
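Because `&` only sends each scp to the background, the next step can start before the copies have finished. A sketch (bash assumed; `parallel_copy` is a hypothetical helper) that still copies in parallel but waits for every copy and reports failures:

```shell
#!/usr/bin/env bash
# Copy SRC to DEST_DIR on every given host in parallel, then wait for all
# copies. Returns non-zero if any scp failed. Hypothetical helper.
parallel_copy() {
  local src=$1 dest=$2
  shift 2
  local pids=() host pid rc=0
  for host in "$@"; do
    scp -r "$src" "$host:$dest" &   # one background job per host
    pids+=("$!")
  done
  for pid in "${pids[@]}"; do
    wait "$pid" || rc=1             # collect each job's exit status
  done
  return "$rc"
}
```

For example, `parallel_copy /usr/local/src/java /usr/local/src/ slave1 slave2` replaces the first two commands above, and `ssh slave1 'source /root/.bash_profile && java -version'` then confirms the JDK works on slave1.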

3. Deploy a fully distributed Hadoop cluster

3.1 Extract the Hadoop archive

[root@master ~]#

Command:

tar -xzf /chinaskills/hadoop-2.7.7.tar.gz -C /usr/local/src/

3.2 Rename the directory to hadoop

[root@master ~]#

Command:

mv /usr/local/src/hadoop-2.7.7 /usr/local/src/hadoop

3.3 Configure the Hadoop environment variables (applies to the current user only)

[root@master ~]#

Command:

vi /root/.bash_profile

Content to append:

export HADOOP_HOME=/usr/local/src/hadoop

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

3.4 Load the environment variables

[root@master ~]#

Command:

source /root/.bash_profile

3.5 Check the Hadoop version

[root@master ~]#

Command:

hadoop version

Output:

Hadoop 2.7.7

Subversion Unknown -r c1aad84bd27cd79c3d1a7dd58202a8c3ee1ed3ac

Compiled by stevel on 2018-07-18T22:47Z

Compiled with protoc 2.5.0

From source with checksum 792e15d20b12c74bd6f19a1fb886490

This command was run using /usr/local/src/hadoop/share/hadoop/common/hadoop-common-2.7.7.jar

3.6 Configure hadoop-env.sh

[root@master ~]#

Command:

vi /usr/local/src/hadoop/etc/hadoop/hadoop-env.sh

Content (replace the existing `export JAVA_HOME=${JAVA_HOME}` line):

export JAVA_HOME=/usr/local/src/java

3.7 Configure core-site.xml

[root@master ~]#

Command:

vi /usr/local/src/hadoop/etc/hadoop/core-site.xml

Content (inside the `<configuration>` element):

<property>

<name>fs.defaultFS</name>

<value>hdfs://master:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/usr/local/src/hadoop/dfs/tmp</value>

</property>
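The properties above go inside the `<configuration>` element of core-site.xml. As a sketch, the whole file can also be written non-interactively with a heredoc; `write_core_site` is a hypothetical helper, it overwrites the existing file, and the target directory defaults to `$HADOOP_HOME/etc/hadoop`.

```shell
#!/usr/bin/env bash
# Write core-site.xml with the two properties from step 3.7.
# Overwrites the file; the directory argument defaults to the Hadoop conf dir.
write_core_site() {
  local conf_dir=${1:-$HADOOP_HOME/etc/hadoop}
  cat > "$conf_dir/core-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://master:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/usr/local/src/hadoop/dfs/tmp</value>
  </property>
</configuration>
EOF
}
```

The same pattern works for hdfs-site.xml, mapred-site.xml and yarn-site.xml by swapping in their properties.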

3.8 Configure hdfs-site.xml

[root@master ~]#

Command:

vi /usr/local/src/hadoop/etc/hadoop/hdfs-site.xml

Content (inside the `<configuration>` element):

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/usr/local/src/hadoop/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/usr/local/src/hadoop/dfs/data</value>

</property>
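`dfs.replication` should not exceed the number of DataNodes, otherwise blocks remain under-replicated. Here the slaves file from step 3.11 lists three hosts, so a value of 3 fits. A hedged sketch of a check comparing the two; `replication_of` and `check_replication` are hypothetical helpers, and the awk parsing assumes each `<name>` and `<value>` tag sits on its own line, as in the configuration above.

```shell
#!/usr/bin/env bash
# Print the dfs.replication value from an hdfs-site.xml
# (assumes <name> and <value> each sit on their own line).
replication_of() {
  awk '/<name>dfs\.replication<\/name>/ { found = 1; next }
       found && /<value>/ { gsub(/.*<value>|<\/value>.*/, ""); print; exit }' "$1"
}

# Succeed only if replication <= number of non-blank lines in the slaves file.
check_replication() {
  local hdfs_site=$1 slaves=$2 repl nodes
  repl=$(replication_of "$hdfs_site")
  nodes=$(grep -c -v '^[[:space:]]*$' "$slaves")
  echo "dfs.replication=$repl datanodes=$nodes"
  [ -n "$repl" ] && [ "$repl" -le "$nodes" ]
}
```

Usage: `check_replication $HADOOP_HOME/etc/hadoop/hdfs-site.xml $HADOOP_HOME/etc/hadoop/slaves`.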

3.9 Configure mapred-site.xml

[root@master ~]#

Command (create the file from the template, then edit it):

cp /usr/local/src/hadoop/etc/hadoop/mapred-site.xml.template /usr/local/src/hadoop/etc/hadoop/mapred-site.xml

vi /usr/local/src/hadoop/etc/hadoop/mapred-site.xml

Content (inside the `<configuration>` element):

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

3.10 Configure yarn-site.xml

[root@master ~]#

Command:

vi /usr/local/src/hadoop/etc/hadoop/yarn-site.xml

Content (inside the `<configuration>` element):

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>master:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>master:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>master:8031</value>

</property>

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>
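Setting `yarn.nodemanager.vmem-check-enabled` to false stops the NodeManager from killing containers whose virtual memory exceeds their physical allocation times `yarn.nodemanager.vmem-pmem-ratio` (2.1 by default). JVMs on 64-bit Linux often reserve far more virtual than physical memory; the pi job in step 3.16 reports about 2.0 GB physical against 22.8 GB virtual memory across its tasks. A small sketch (hypothetical helpers `vmem_ratio` and `default_vmem_limit_mb`) for doing that arithmetic:

```shell
#!/usr/bin/env bash
# Ratio of virtual to physical memory, e.g. from the MapReduce counters
# "Physical memory (bytes) snapshot" and "Virtual memory (bytes) snapshot".
vmem_ratio() {
  awk -v p="$1" -v v="$2" 'BEGIN { printf "%.2f\n", v / p }'
}

# Virtual memory limit (MB) for a container of the given physical size (MB)
# under the default vmem-pmem-ratio of 2.1.
default_vmem_limit_mb() {
  awk -v mb="$1" 'BEGIN { printf "%.1f\n", mb * 2.1 }'
}
```

With the counters from step 3.16, `vmem_ratio 2012909568 22836789248` prints `11.35`, well above 2.1. Those counters are totals over all tasks, so the per-container ratio may differ somewhat.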

3.11 Configure slaves

[root@master ~]#

Command:

vi /usr/local/src/hadoop/etc/hadoop/slaves

Content (replace the default localhost entry; one hostname per line):

master

slave1

slave2

3.12 Distribute the files to slave1 and slave2

[root@master ~]#

Command:

scp -r /usr/local/src/hadoop slave1:/usr/local/src/

scp -r /usr/local/src/hadoop slave2:/usr/local/src/

scp /root/.bash_profile slave1:/root/

scp /root/.bash_profile slave2:/root/

3.13 Format the NameNode

[root@master ~]#

Command:

hdfs namenode -format

Output (last ten lines):

21/10/19 01:18:21 INFO namenode.FSImage: Allocated new BlockPoolId: BP-1164747633-192.168.222.201-1634577501149

21/10/19 01:18:21 INFO common.Storage: Storage directory /usr/local/src/hadoop/dfs/name has been successfully formatted.

21/10/19 01:18:21 INFO namenode.FSImageFormatProtobuf: Saving image file /usr/local/src/hadoop/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression

21/10/19 01:18:21 INFO namenode.FSImageFormatProtobuf: Image file /usr/local/src/hadoop/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 320 bytes saved in 0 seconds.

21/10/19 01:18:21 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

21/10/19 01:18:21 INFO util.ExitUtil: Exiting with status 0

21/10/19 01:18:21 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at master/192.168.222.201

************************************************************/
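Formatting should happen only once: re-running `hdfs namenode -format` gives the NameNode a new clusterID, and DataNodes that still hold the old data directory then fail with an incompatible clusterID. A hedged sketch of a guard (hypothetical `format_once`) that skips formatting when the name directory already contains `current/VERSION`:

```shell
#!/usr/bin/env bash
# Format the NameNode only if its name directory has not been formatted yet.
# The default path is dfs.namenode.name.dir from step 3.8.
format_once() {
  local name_dir=${1:-/usr/local/src/hadoop/dfs/name}
  if [ -f "$name_dir/current/VERSION" ]; then
    echo "already formatted: $name_dir"
    return 0
  fi
  hdfs namenode -format
}
```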

3.14 Start the cluster

[root@master ~]#

Command:

start-all.sh

Output:

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

Starting namenodes on [master]

master: starting namenode, logging to /usr/local/src/hadoop/logs/hadoop-root-namenode-master.out

slave2: starting datanode, logging to /usr/local/src/hadoop/logs/hadoop-root-datanode-slave2.out

slave1: starting datanode, logging to /usr/local/src/hadoop/logs/hadoop-root-datanode-slave1.out

master: starting datanode, logging to /usr/local/src/hadoop/logs/hadoop-root-datanode-master.out

Starting secondary namenodes [0.0.0.0]

The authenticity of host '0.0.0.0 (0.0.0.0)' can't be established.

ECDSA key fingerprint is d3:a9:ba:a4:63:70:24:88:37:25:a2:60:2e:e1:e9:31.

Are you sure you want to continue connecting (yes/no)? yes

0.0.0.0: Warning: Permanently added '0.0.0.0' (ECDSA) to the list of known hosts.

0.0.0.0: starting secondarynamenode, logging to /usr/local/src/hadoop/logs/hadoop-root-secondarynamenode-master.out

starting yarn daemons

starting resourcemanager, logging to /usr/local/src/hadoop/logs/yarn-root-resourcemanager-master.out

slave2: starting nodemanager, logging to /usr/local/src/hadoop/logs/yarn-root-nodemanager-slave2.out

slave1: starting nodemanager, logging to /usr/local/src/hadoop/logs/yarn-root-nodemanager-slave1.out

master: starting nodemanager, logging to /usr/local/src/hadoop/logs/yarn-root-nodemanager-master.out

3.15 Check the Java processes on each node

[root@master ~]# jps

4448 DataNode

4325 NameNode

4853 NodeManager

4599 SecondaryNameNode

4744 ResourceManager

5128 Jps

[root@slave1 ~]# jps

3474 DataNode

3570 NodeManager

3682 Jps

[root@slave2 ~]# jps

3418 DataNode

3514 NodeManager

3626 Jps
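The jps listings above can also be checked by script. A sketch with two hypothetical helpers: `missing_daemons` reads jps output on stdin and prints every expected process that is absent, and `live_datanodes` extracts N from the `Live datanodes (N):` line that `hdfs dfsadmin -report` prints in Hadoop 2.x (the exact line format is an assumption).

```shell
#!/usr/bin/env bash
# Print each expected daemon name that does not appear in jps output (stdin).
missing_daemons() {
  local jps_out daemon
  jps_out=$(awk '{ print $2 }')          # keep only the process names
  for daemon in "$@"; do
    printf '%s\n' "$jps_out" | grep -qx "$daemon" || echo "$daemon"
  done
}

# Print N from a "Live datanodes (N):" line of `hdfs dfsadmin -report` (stdin).
live_datanodes() {
  sed -n 's/^Live datanodes (\([0-9]*\)):.*/\1/p'
}
```

On master, `jps | missing_daemons NameNode SecondaryNameNode ResourceManager DataNode NodeManager` should print nothing; on the slaves, check `DataNode NodeManager`; and `hdfs dfsadmin -report | live_datanodes` should print 3.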

3.16 Test the cluster with the pi example

[root@master ~]#

Command:

hadoop jar /usr/local/src/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.7.jar pi 10 10

Output:

Number of Maps = 10

Samples per Map = 10

Wrote input for Map #0

Wrote input for Map #1

Wrote input for Map #2

Wrote input for Map #3

Wrote input for Map #4

Wrote input for Map #5

Wrote input for Map #6

Wrote input for Map #7

Wrote input for Map #8

Wrote input for Map #9

Starting Job

21/10/19 01:22:10 INFO client.RMProxy: Connecting to ResourceManager at master/192.168.222.201:8032

21/10/19 01:22:11 INFO input.FileInputFormat: Total input paths to process : 10

21/10/19 01:22:11 INFO mapreduce.JobSubmitter: number of splits:10

21/10/19 01:22:11 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1634577560934_0001

21/10/19 01:22:11 INFO impl.YarnClientImpl: Submitted application application_1634577560934_0001

21/10/19 01:22:11 INFO mapreduce.Job: The url to track the job: http://master:8088/proxy/application_1634577560934_0001/

21/10/19 01:22:11 INFO mapreduce.Job: Running job: job_1634577560934_0001

21/10/19 01:22:20 INFO mapreduce.Job: Job job_1634577560934_0001 running in uber mode : false

21/10/19 01:22:20 INFO mapreduce.Job: map 0% reduce 0%

21/10/19 01:22:31 INFO mapreduce.Job: map 20% reduce 0%

21/10/19 01:22:41 INFO mapreduce.Job: map 20% reduce 7%

21/10/19 01:22:50 INFO mapreduce.Job: map 40% reduce 7%

21/10/19 01:22:51 INFO mapreduce.Job: map 100% reduce 7%

21/10/19 01:22:52 INFO mapreduce.Job: map 100% reduce 100%

21/10/19 01:22:52 INFO mapreduce.Job: Job job_1634577560934_0001 completed successfully

21/10/19 01:22:52 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=226

FILE: Number of bytes written=1352945

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=2600

HDFS: Number of bytes written=215

HDFS: Number of read operations=43

HDFS: Number of large read operations=0

HDFS: Number of write operations=3

Job Counters

Launched map tasks=10

Launched reduce tasks=1

Data-local map tasks=10

Total time spent by all maps in occupied slots (ms)=242528

Total time spent by all reduces in occupied slots (ms)=19189

Total time spent by all map tasks (ms)=242528

Total time spent by all reduce tasks (ms)=19189

Total vcore-milliseconds taken by all map tasks=242528

Total vcore-milliseconds taken by all reduce tasks=19189

Total megabyte-milliseconds taken by all map tasks=248348672

Total megabyte-milliseconds taken by all reduce tasks=19649536

Map-Reduce Framework

Map input records=10

Map output records=20

Map output bytes=180

Map output materialized bytes=280

Input split bytes=1420

Combine input records=0

Combine output records=0

Reduce input groups=2

Reduce shuffle bytes=280

Reduce input records=20

Reduce output records=0

Spilled Records=40

Shuffled Maps =10

Failed Shuffles=0

Merged Map outputs=10

GC time elapsed (ms)=3019

CPU time spent (ms)=5370

Physical memory (bytes) snapshot=2012909568

Virtual memory (bytes) snapshot=22836789248

Total committed heap usage (bytes)=1383833600

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=1180

File Output Format Counters

Bytes Written=97

Job Finished in 41.825 seconds

Estimated value of Pi is 3.20000000000000000000

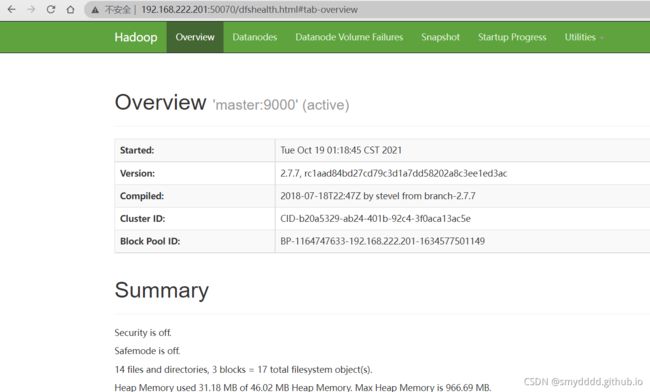
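The estimate is coarse because `pi 10 10` uses only 10 maps × 10 samples = 100 points. The example's quasi-Monte Carlo estimator (Hadoop's `QuasiMonteCarlo` class) takes four times the fraction of points in the unit square that fall inside the inscribed circle, so the reported 3.2 means 80 of the 100 points landed inside:

```latex
\hat{\pi} = 4 \cdot \frac{N_{\text{inside}}}{N_{\text{maps}} \times N_{\text{samples}}}
          = 4 \cdot \frac{80}{10 \times 10} = 3.2
```

Raising the second argument (samples per map) increases the number of points and typically brings the estimate closer to π.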

3.17 View the web UI
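With the default Hadoop 2.7 ports, the HDFS NameNode web UI listens on port 50070 and the YARN ResourceManager UI on port 8088 (the job tracking URL printed in step 3.16 already uses `http://master:8088`). A sketch to print these URLs and probe them with curl; `web_ui_urls` and `probe_ui` are hypothetical helpers, and a browser on another machine needs `master` to resolve, for example through its own hosts file.

```shell
#!/usr/bin/env bash
# Default Hadoop 2.7.x web UI addresses for a given host.
web_ui_urls() {
  local host=${1:-master}
  printf 'http://%s:50070/\n' "$host"   # HDFS NameNode
  printf 'http://%s:8088/\n' "$host"    # YARN ResourceManager
}

# Print the HTTP status code returned by each UI (000 means no connection).
probe_ui() {
  local url
  for url in $(web_ui_urls "$@"); do
    curl -s -o /dev/null -w '%{http_code} %{url_effective}\n' "$url"
  done
}
```

Running `probe_ui master` on the cluster should report a 2xx or 3xx code for both URLs.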

4. Deploy Hive

4.1 Extract the Hive archive

[root@master ~]#

Command:

tar -xzvf /chinaskills/apache-hive-2.3.4-bin.tar.gz -C /usr/local/src/

4.2 Rename the directory to hive

[root@master ~]#

Command:

mv /usr/local/src/apache-hive-2.3.4-bin /usr/local/src/hive

4.3 Configure the Hive environment variables (applies to the current user only)

[root@master ~]#

Command:

vi /root/.bash_profile

Content to append:

export HIVE_HOME=/usr/local/src/hive

export PATH=$PATH:$HIVE_HOME/bin

4.4 Configure hive-site.xml

[root@master ~]#

Command:

cp /usr/local/src/hive/conf/hive-default.xml.template /usr/local/src/hive/conf/hive-site.xml

vi /usr/local/src/hive/conf/hive-site.xml

Content (the template already defines all five properties, so edit the existing entries; the `&` in the URL is escaped as `&amp;` because this is XML):

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://master:3306/hive?createDatabaseIfNotExist=true&amp;useSSL=false</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>password</value>

</property>

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

</property>
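Instead of editing the large template, a minimal hive-site.xml containing only these five properties could be written directly. This is a sketch under the assumption that every other setting may stay at Hive's built-in default; `write_hive_site` is a hypothetical helper, and the JDBC URL keeps the `&amp;` escape that XML requires.

```shell
#!/usr/bin/env bash
# Write a minimal hive-site.xml with the MySQL metastore settings from 4.4.
# Overwrites the file; the directory argument defaults to $HIVE_HOME/conf.
write_hive_site() {
  local conf_dir=${1:-$HIVE_HOME/conf}
  cat > "$conf_dir/hive-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://master:3306/hive?createDatabaseIfNotExist=true&amp;useSSL=false</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>root</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>password</value>
  </property>
  <property>
    <name>hive.metastore.schema.verification</name>
    <value>false</value>
  </property>
</configuration>
EOF
}
```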

4.5 Add the MySQL JDBC driver

[root@master ~]#

Command:

tar -xzvf /chinaskills/mysql-connector-java-5.1.47.tar.gz -C /chinaskills/

cp /chinaskills/mysql-connector-java-5.1.47/mysql-connector-java-5.1.47.jar /usr/local/src/hive/lib/

4.6 Load the environment variables

[root@master ~]#

Command:

source /root/.bash_profile

4.7 Initialize the Hive metastore schema

[root@master ~]#

Command:

schematool -dbType mysql -initSchema

Output:

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/src/hive/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/src/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Metastore connection URL: jdbc:mysql://master:3306/hive?createDatabaseIfNotExist=true&useSSL=false

Metastore Connection Driver : com.mysql.jdbc.Driver

Metastore connection User: root

Starting metastore schema initialization to 2.3.0

Initialization script hive-schema-2.3.0.mysql.sql

Initialization script completed

schemaTool completed

4.8 Enter the Hive shell

[root@master ~]# hive

which: no hbase in (/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin:/usr/local/mysql/bin:/usr/local/mysql/bin:/root/bin:/usr/local/src/java/bin:/root/bin:/usr/local/src/java/bin:/usr/local/src/hadoop/bin:/usr/local/src/hadoop/sbin:/root/bin:/usr/local/src/java/bin:/usr/local/src/hadoop/bin:/usr/local/src/hadoop/sbin:/usr/local/src/hive/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/src/hive/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/src/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Logging initialized using configuration in jar:file:/usr/local/src/hive/lib/hive-common-2.3.4.jar!/hive-log4j2.properties Async: true

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

hive> show databases;

OK

default

Time taken: 3.985 seconds, Fetched: 1 row(s)
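For scripted checks, the same query can run without the interactive shell. A sketch using the Hive CLI's `-S` (silent) and `-e` (execute a quoted query) options; `hive_has_db` is a hypothetical helper that succeeds when the named database is listed.

```shell
#!/usr/bin/env bash
# Succeed if `show databases;` lists the given database (default: "default").
# Log and SLF4J noise on stderr is discarded.
hive_has_db() {
  local db=${1:-default}
  hive -S -e 'show databases;' 2>/dev/null | grep -qx "$db"
}
```

Usage: `hive_has_db default && echo "metastore OK"`.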