MySQL Project: A Highly Available MGR Cluster Based on ProxySQL + keepalived

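Table of Contents

- Project Name
- Project Description
- Project Architecture Diagram
- Read/Write Request Forwarding Flowchart
- Project Environment
- Project Steps
- Detailed Steps
  - I. Configure IP addresses, set hostnames, and add host alias mappings on the three DBS nodes
  - II. Configure Ansible
    - 1. Install MySQL
    - 2. Install the exporters
  - III. Configure Group Replication
    - 1. Edit the MySQL configuration file `/etc/my.cnf` on each DBS
    - 2. Start DBS1 and bootstrap the group
    - 3. Add DBS2 and DBS3 to the replication group
  - IV. Configure ProxySQL
    - 1. Install ProxySQL on both PS hosts
    - 2. Add the backend nodes DBS1, DBS2, and DBS3 to the `mysql_servers` table
    - 3. Monitor the backend nodes
    - 4. Create the system view `sys.gr_member_routing_candidate_status`
    - 5. Insert a record into `mysql_group_replication_hostgroups`
    - 6. Configure mysql_users
    - 7. Configure read/write splitting rules for testing
    - 8. Test MGR failover
  - V. Configure keepalived
    - 1. Install keepalived on both PS hosts
    - 2. Edit the configuration file
    - 3. Simulate a PS outage and test VIP failover
    - 4. Query the backend MySQL data remotely through the VIP
    - 5. Health check + notify to stop the service automatically
  - VI. Configure Prometheus
    - 1. Install Prometheus on Prom
    - 2. Run Prometheus as a service
    - 3. Configure and run node_exporter on both PS hosts
    - 4. Configure mysqld_exporter on the three DBS nodes
    - 5. Configure Prometheus to scrape the exporters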

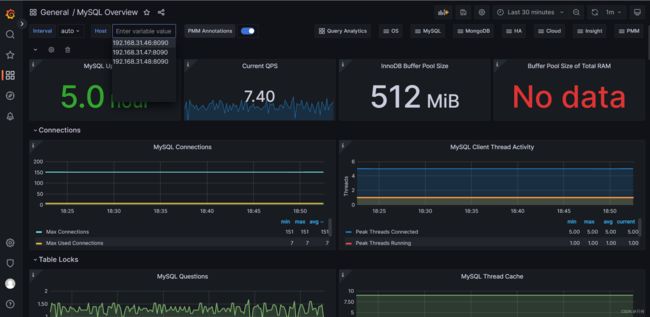

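  - VII. Configure Grafana
    - 1. Install
    - 2. Start Grafana
    - 3. Access the service and set up the data source
    - 4. Configure dashboards
  - VIII. Configure the DNS server
    - 1. Install bind
    - 2. Start the named service and enable it at boot
    - 3. Edit the DNS server address configuration file
    - 4. Edit the main named configuration file `/etc/named.conf`
    - 5. Edit the zone configuration file so that named resolves zhihe.com
    - 6. Create the `zhihe.com.zone` data file in the data directory
    - 7. Configure the `zhihe.com.zone` data file
    - 8. Validate the configuration files
  - IX. OLTP benchmarking
    - sysbench
    - tpcc
- Details Worth Noting
  - I. A cloning mistake while preparing the environment
  - II. ProxySQL's admin user cannot log in remotely by default
  - III. If a group member has purged its logs, a new node cannot get complete data from the donor
  - IV. During failover simulation, a restarted server could not start group replication normally
  - V. Voluntary vs. involuntary departure from the group
- Problems Encountered
  - I. A new node stays in RECOVERING when joining the group
  - II. Joining fails because the new node contains extra GTID transactions
- Routine Cluster Maintenance
  - Startup
  - Shutdown
  - Common day-to-day commands
- Optimization
  - Dynamic read/write splitting
  - Multi-site active-active
  - MHA for failover
  - ProxySQL Cluster
- Summary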

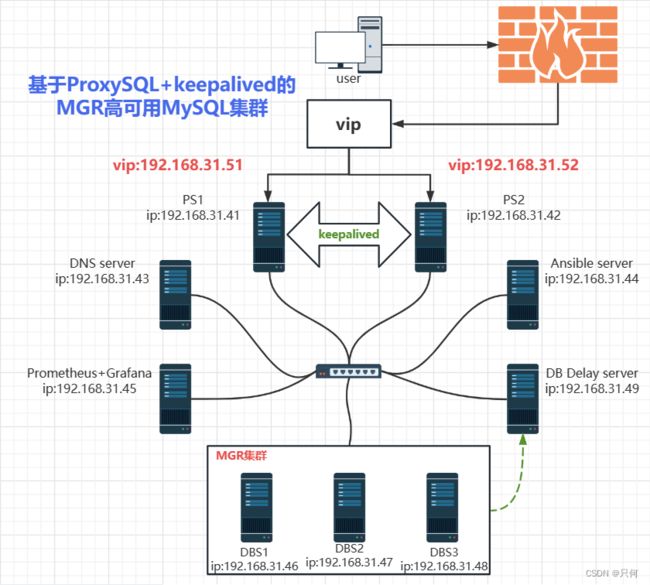

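Project Name

A Highly Available MySQL MGR Cluster Based on ProxySQL + keepalived

Project Description

This project builds a highly available cluster. ProxySQL handles proxy forwarding and read/write splitting, keepalived with dual VIPs keeps the proxy layer available, and single-primary Group Replication provides strong data consistency. Ansible is used for fast deployment, Prometheus + Grafana provide a readable monitoring interface, and a delayed backup server is used for disaster recovery. Finally, a sysbench test machine resolves a domain name through the configured DNS server, which round-robins across the two VIPs, and runs OLTP mixed read/write and write-only benchmarks.

Project Architecture Diagram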

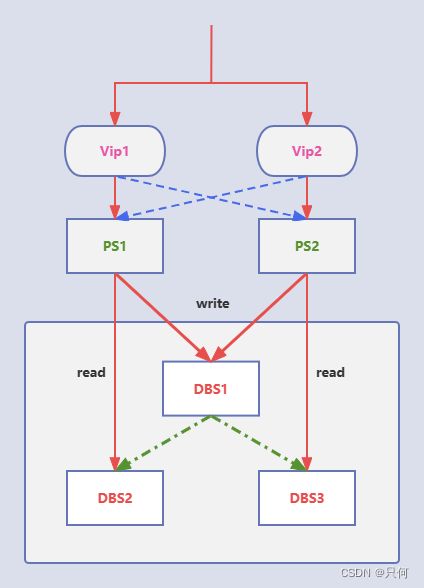

Read/Write Request Forwarding Flowchart

Project Environment

Virtualization software: VMware Workstation 15.5.0

OS: CentOS 7.5.1804 (10 VMs, 1 core and 2 GB RAM each)

Software: mysql 5.7.40, proxysql-1.4.16-1.x86_64, ansible 2.9.27, keepalived v1.3.5, prometheus-2.43.0, node_exporter 1.5.0, mysqld_exporter 0.15.0-rc.0, grafana-9.4.7, sysbench 1.0.17, bind-9.11.4-26.P2.el7_9.13.x86_64

IP plan:

| Host | IP address |
|---|---|
| PS1 | 192.168.31.41, VIP1: 192.168.31.51 |
| PS2 | 192.168.31.42, VIP2: 192.168.31.52 |
| DNS | 192.168.31.43 |
| Ansible | 192.168.31.44 |
| Prom | 192.168.31.45 |
| DBS1 | 192.168.31.46 |
| DBS2 | 192.168.31.47 |
| DBS3 | 192.168.31.48 |
| Delay backup | 192.168.31.49 |
| Stress test machine | 192.168.31.50 |

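Project Steps

0. Draw the project topology and plan the IP addresses.

1. Prepare 10 fresh CentOS 7.5 Linux machines; configure IP addresses, set hostnames, and add host alias mappings on the three group replication machines.

2. Set up the Ansible server, generate a key pair on it, and establish passwordless SSH to the MGR cluster, the proxy machines, dns, delay_backup, and prom.

3. Write a one-click MySQL installation script.

4. Write the Ansible inventory and a playbook that batch-installs MySQL from the binary tarball (3+2 hosts) and the exporters ⭐

5. Configure replication on the DBS nodes: enable GTID, turn on group replication, and set the related parameters ⭐

6. Configure ProxySQL and test it ⭐

7. Configure keepalived for dual VIPs, then test access and VIP failover.

8. Configure the DNS service to round-robin the two VIPs, and test it.

9. Configure Prom to monitor the PS machines and the MGR cluster, and chart the data with Grafana.

10. Tune the kernel and MySQL parameters.

11. Use sysbench on the test machine to stress-test the whole MySQL cluster.

Detailed Steps

I. Configure IP addresses, set hostnames, and add host alias mappings on the three DBS nodes

Edit the network configuration file: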

$ vi /etc/sysconfig/network-scripts/ifcfg-ens33

BOOTPROTO=static

IPADDR=192.168.31.110

NETMASK=255.255.255.0

GATEWAY=192.168.31.1

DNS1=114.114.114.114

DEFROUTE=yes

NAME=ens33

DEVICE=ens33

ONBOOT=yes

Set the hostname:

hostnamectl set-hostname dbs1

Edit /etc/hosts on the three DBS nodes:

[root@dbs1 mysql]# vim /etc/hosts

192.168.31.46 dbs1

192.168.31.47 dbs2

192.168.31.48 dbs3
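If the host mappings are added by a script rather than by hand, it helps to make the step repeatable. The following is a minimal sketch, not part of the original setup: `add_host_entry` is a hypothetical helper name, and it assumes that an alias already present in the file should be left untouched.

```shell
#!/bin/bash
# add_host_entry FILE IP NAME
# Appends "IP NAME" to FILE unless NAME is already present as a whole word.
# (Hypothetical helper for illustration; the article edits /etc/hosts with vim.)
add_host_entry() {
    local file=$1 ip=$2 name=$3
    # -w matches whole words only, so "dbs1" does not match "dbs10"
    if ! grep -qw -- "$name" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Example usage on a DBS node (run as root):
#   add_host_entry /etc/hosts 192.168.31.46 dbs1
#   add_host_entry /etc/hosts 192.168.31.47 dbs2
#   add_host_entry /etc/hosts 192.168.31.48 dbs3
```

Because the function checks before appending, running it twice does not duplicate entries.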

II. Configure Ansible

Distribute the SSH key from the Ansible host:

[root@ans ~]# ssh-keygen # generate the key pair

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa): # press Enter to accept the default path

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase): # no passphrase, just press Enter

Enter same passphrase again: # press Enter again

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:F6D2e20IAtoO46rwfyvwbPioWvRfUi4CJu+s5Ape9Uc root@web1

The key's randomart image is:

+---[RSA 2048]----+

| . |

| . . |

| . o . |

| o o . . |

|. B ... SE. |

| *.*. .+.+ o |

|o.+*+ o.+.o o |

|**o.=o.+.. . |

|@==+ooo. |

+----[SHA256]-----+

[root@ans ~]# cd /root/.ssh/

[root@ans .ssh]# ls # the public and private key files

id_rsa id_rsa.pub

[root@ans .ssh]# ssh-copy-id -i id_rsa.pub root@192.168.31.41 # distribute the public key

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "id_rsa.pub"

The authenticity of host '192.168.31.41 (192.168.31.41)' can't be established.

ECDSA key fingerprint is SHA256:ttyWNvrI38R3W95ovlB/4fseSAdmIql3cxM9jA1HxOE.

ECDSA key fingerprint is MD5:83:50:45:4e:7e:76:eb:8c:d1:9d:25:a7:cd:ca:76:a0.

Are you sure you want to continue connecting (yes/no)? yes # confirm

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.31.41's password: # enter the password of the target server

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@192.168.31.41'"

and check to make sure that only the key(s) you wanted were added.

# Distribute the key in the same way to every server that Ansible will manage
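Repeating `ssh-copy-id` for every managed host can be scripted. The sketch below loops over the managed addresses from the IP plan; the `DRY_RUN` variable and the `distribute_key` function name are assumptions introduced here for illustration. In dry-run mode (the default) it only prints the commands it would run.

```shell
#!/bin/bash
# Managed hosts taken from the IP plan: PS1, PS2, DNS, Prom, DBS1-3, delay backup.
HOSTS="192.168.31.41 192.168.31.42 192.168.31.43 192.168.31.45 192.168.31.46 192.168.31.47 192.168.31.48 192.168.31.49"

# distribute_key: copy root's public key to every host in HOSTS.
# DRY_RUN (hypothetical switch) defaults to 1, which only prints the commands.
distribute_key() {
    local host
    for host in $HOSTS; do
        if [ "${DRY_RUN:-1}" = 1 ]; then
            echo "ssh-copy-id -i /root/.ssh/id_rsa.pub root@${host}"
        else
            # Prompts for each server's root password once
            ssh-copy-id -i /root/.ssh/id_rsa.pub "root@${host}"
        fi
    done
}

# Example usage:
#   distribute_key              # preview the commands
#   DRY_RUN=0 distribute_key    # actually copy the key
```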

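1. Install MySQL

Write a one-click MySQL installation script; feel free to reuse the one below. Note that the tar and mv commands refer to the package in your current directory: upload the package to the virtual machine beforehand and change the file names to match yours. You also need to set your own root password, which can be done with the following command:

[root@localhost mysql]# sed -i 's/{passwd}/your_password/' one_key_install_mysql.sh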

#!/bin/bash

# Install the dependencies

yum install cmake ncurses-devel gcc gcc-c++ vim lsof bzip2 openssl-devel ncurses-compat-libs -y

# Unpack the MySQL binary tarball

tar xf mysql-5.7.40-linux-glibc2.12-x86_64.tar.gz

# Move the unpacked directory to /usr/local and rename it to mysql

mv mysql-5.7.40-linux-glibc2.12-x86_64 /usr/local/mysql

# Create the mysql group and user

groupadd mysql

# The mysql user has /bin/false as its shell and belongs to the mysql group

useradd -r -g mysql -s /bin/false mysql

# Stop the firewalld service and disable it at boot

service firewalld stop

systemctl disable firewalld

# Disable SELinux for the current session

setenforce 0

# Disable SELinux permanently

sed -i '/^SELINUX=/ s/enforcing/disabled/' /etc/selinux/config

# Create the data directory

mkdir /data/mysql -p

# Give /data/mysql to the mysql user and group so that mysql can read and write it

chown mysql:mysql /data/mysql/

# Allow access only for the mysql user and the mysql group

chmod 750 /data/mysql/

# Change to /usr/local/mysql/bin

cd /usr/local/mysql/bin/

# Initialize MySQL

./mysqld --initialize --user=mysql --basedir=/usr/local/mysql/ --datadir=/data/mysql &>passwd.txt

# Generate the SSL/RSA files so that MySQL supports SSL logins

./mysql_ssl_rsa_setup --datadir=/data/mysql/

# Extract the temporary root password

tem_passwd=$(grep "temporary" passwd.txt | awk '{print $NF}')

# Add the MySQL bin directory to PATH

# Temporary change: it only reaches the calling shell if this script is run with source

export PATH=/usr/local/mysql/bin/:$PATH

# Permanent change, also effective after a reboot; if the script was not sourced, run bash or su - root to pick up the new PATH

echo 'PATH=/usr/local/mysql/bin:$PATH' >>/root/.bashrc

# Copy support-files/mysql.server to /etc/init.d/ as mysqld

cp ../support-files/mysql.server /etc/init.d/mysqld

# Set the datadir value in the /etc/init.d/mysqld script

sed -i '70c datadir=/data/mysql' /etc/init.d/mysqld

# Generate the /etc/my.cnf configuration file

cat >/etc/my.cnf <<EOF

[mysqld_safe]

[client]

socket=/data/mysql/mysql.sock

[mysqld]

socket=/data/mysql/mysql.sock

port = 3306

open_files_limit = 8192

innodb_buffer_pool_size = 512M

character-set-server=utf8mb4

[mysql]

auto-rehash

prompt=\\u@\\d \\R:\\m mysql>

EOF

# Raise the open-file limit for the current shell

ulimit -n 65535

# Make the limit persistent across reboots

# Set both the soft and the hard limit

echo "* soft nofile 65535" >>/etc/security/limits.conf

echo "* hard nofile 65535" >>/etc/security/limits.conf

# Start the mysqld process

service mysqld start

# Register mysqld with the system service manager

/sbin/chkconfig --add mysqld

# Enable mysqld at boot

/sbin/chkconfig mysqld on

# The first password change requires the --connect-expired-password option

# -e runs the given statement inside mysql

# set password changes the root password; choose your own

# The temporary password is quoted because it may contain shell special characters

mysql -uroot -p"$tem_passwd" --connect-expired-password -e "set password='{passwd}';"

# Verify the password change: if the databases are listed, it succeeded

mysql -uroot -p'{passwd}' -e "show databases;"
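The temporary-password step of the script can be checked in isolation before running the whole installation. The sketch below wraps the same idea (take the last field of the line that announces the temporary password) in a function; `extract_temp_password` is a hypothetical name, and it assumes the MySQL 5.7 log wording "A temporary password is generated for root@localhost: ...".

```shell
#!/bin/bash
# extract_temp_password LOGFILE
# Prints the last whitespace-separated field of the line that announces
# the temporary root password written by "mysqld --initialize".
# (Hypothetical helper; the install script does this inline with grep and awk.)
extract_temp_password() {
    awk '/temporary password/ {print $NF}' "$1"
}

# Example usage inside the install script:
#   tem_passwd=$(extract_temp_password passwd.txt)
```

Matching on the full phrase "temporary password" rather than just "temporary" is slightly stricter than the original script, which reduces the chance of picking up an unrelated log line.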

Write the inventory:

[dbserver]

192.168.31.46

192.168.31.47

192.168.31.48

[proxyServer]

192.168.31.41

192.168.31.42

[dns]

192.168.31.43

[Prometheus]

192.168.31.45

[delayBackupServer]

192.168.31.49

Write the playbook (the MySQL package and the one-click installation script must be placed in the playbooks directory beforehand):

- hosts: dbserver proxyServer

  remote_user: root

  tasks:

    - name: copy mysql.tar

      copy: src=/etc/ansible/playbooks/mysql-5.7.40-linux-glibc2.12-x86_64.tar.gz dest=/root/

    - name: copy script

      copy: src=/etc/ansible/playbooks/one_key_install_mysql.sh dest=/root/

    - name: install mysql

      script: /etc/ansible/playbooks/one_key_install_mysql.sh

    - name: rm mysql.tar

      shell: rm -f /root/mysql-5.7.40-linux-glibc2.12-x86_64.tar.gz

The copy script task is unnecessary and can be removed; I only realized this afterwards, so I left it in.

Check the syntax:

[root@ansible playbooks]# ansible-playbook --syntax-check onekey_install.yaml

playbook: onekey_install.yaml # syntax OK

List the target hosts:

[root@ansible playbooks]# ansible-playbook --list-hosts onekey_install.yaml

playbook: onekey_install.yaml

play #1 (dbserver proxyServer): dbserver proxyServer TAGS: []

pattern: [u'dbserver proxyServer']

hosts (5):

192.168.31.48

192.168.31.41

192.168.31.42

192.168.31.46

192.168.31.47

Dry run (check mode):

[root@ansible playbooks]# ansible-playbook --check onekey_install.yaml

PLAY [dbserver proxyServer] ********************************************************

TASK [Gathering Facts] *************************************************************

ok: [192.168.31.41]

ok: [192.168.31.46]

ok: [192.168.31.42]

ok: [192.168.31.48]

ok: [192.168.31.47]

TASK [copy mysql.tar] **************************************************************

changed: [192.168.31.42]

changed: [192.168.31.41]

changed: [192.168.31.46]

changed: [192.168.31.47]

changed: [192.168.31.48]

TASK [copy script] *****************************************************************

changed: [192.168.31.48]

changed: [192.168.31.47]

changed: [192.168.31.46]

changed: [192.168.31.42]

changed: [192.168.31.41]

TASK [install mysql] ***************************************************************

changed: [192.168.31.46]

changed: [192.168.31.47]

changed: [192.168.31.48]

changed: [192.168.31.41]

changed: [192.168.31.42]

PLAY RECAP *************************************************************************

192.168.31.41 : ok=4 changed=3 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.31.42 : ok=4 changed=3 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.31.46 : ok=4 changed=3 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.31.47 : ok=4 changed=3 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.31.48 : ok=4 changed=3 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

The dry run reported no errors, so run the playbook:

[root@ansible playbooks]# ansible-playbook onekey_install.yaml

PLAY [dbserver proxyServer] ********************************************************

TASK [Gathering Facts] *************************************************************

ok: [192.168.31.47]

ok: [192.168.31.42]

ok: [192.168.31.48]

ok: [192.168.31.46]

ok: [192.168.31.41]

TASK [copy mysql.tar] **************************************************************

changed: [192.168.31.42]

changed: [192.168.31.46]

changed: [192.168.31.41]

changed: [192.168.31.47]

changed: [192.168.31.48]

TASK [copy script] *****************************************************************

changed: [192.168.31.46]

changed: [192.168.31.48]

changed: [192.168.31.41]

changed: [192.168.31.42]

changed: [192.168.31.47]

TASK [install mysql] ***************************************************************

changed: [192.168.31.42]

changed: [192.168.31.48]

changed: [192.168.31.46]

changed: [192.168.31.47]

changed: [192.168.31.41]

PLAY RECAP *************************************************************************

192.168.31.41 : ok=4 changed=3 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.31.42 : ok=4 changed=3 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.31.46 : ok=4 changed=3 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.31.47 : ok=4 changed=3 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.31.48 : ok=4 changed=3 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

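I made a small mistake here: I assumed that Ansible's script module makes the remote host run a script stored on the remote host itself, so I also copied the script over. That is not needed. The script module takes the path of a script on the control node, transfers it to the remote host over SSH, and executes it there.

After the playbook finishes, check on a remote host: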

[root@ps2 ~]# su

[root@ps2 ~]# mysql --version

mysql Ver 14.14 Distrib 5.7.40, for linux-glibc2.12 (x86_64) using EditLine wrapper

[root@ps2 ~]# ps aux|grep mysql

root 2522 0.0 0.0 11820 1612 ? S 11:31 0:00 /bin/sh /usr/local/mysql/bin/mysqld_safe --datadir=/data/mysql --pid-file=/data/mysql/ps2.pid

mysql 2676 0.4 11.1 1544672 207120 ? Sl 11:31 0:00 /usr/local/mysql/bin/mysqld --basedir=/usr/local/mysql --datadir=/data/mysql --plugin-dir=/usr/local/mysql/lib/plugin --user=mysql --log-error=ps2.err --open-files-limit=8192 --pid-file=/data/mysql/ps2.pid --socket=/data/mysql/mysql.sock --port=3306

root 2757 0.0 0.0 112824 984 pts/0 S+ 11:33 0:00 grep --color=auto mysql

Both the mysql command and the mysqld process are working.

2. Install the exporters

Install node_exporter on the two PS hosts and mysqld_exporter on the three DBS nodes.

Write the playbook:

- hosts: dbserver

  remote_user: root

  tasks:

    - name: copy mysqld_exporter.tar

      copy: src=/etc/ansible/playbooks/mysqld_exporter-0.15.0-rc.0.linux-amd64.tar.gz dest=/root/

    - name: install mysqld_exporter

      script: /etc/ansible/playbooks/mysqld_exporter_install.sh

    - name: rm mysqld_exporter.tar

      shell: rm -f /root/mysqld_exporter-0.15.0-rc.0.linux-amd64.tar.gz

- hosts: proxyServer

  remote_user: root

  tasks:

    - name: copy node_exporter.tar

      copy: src=/etc/ansible/playbooks/node_exporter-1.5.0.linux-amd64.tar.gz dest=/root/

    - name: install node_exporter

      script: /etc/ansible/playbooks/node_exporter_install.sh

    - name: rm node_exporter.tar

      shell: rm -f /root/node_exporter-1.5.0.linux-amd64.tar.gz

Write the exporter installation scripts:

[root@ansible playbooks]# cat mysqld_exporter_install.sh

tar xf /root/mysqld_exporter-0.15.0-rc.0.linux-amd64.tar.gz -C /root

mv /root/mysqld_exporter-0.15.0-rc.0.linux-amd64 /mysqld_exporter

echo 'PATH=$PATH:/mysqld_exporter/' >>/root/.bashrc

[root@ansible playbooks]# cat node_exporter_install.sh

tar xf /root/node_exporter-1.5.0.linux-amd64.tar.gz -C /root

mv /root/node_exporter-1.5.0.linux-amd64 /node_exporter

echo 'PATH=$PATH:/node_exporter/' >>/root/.bashrc

Check the syntax:

[root@ansible playbooks]# ansible-playbook --syntax-check exporter_install.yaml

playbook: exporter_install.yaml

List the target hosts:

[root@ansible playbooks]# ansible-playbook --list-hosts exporter_install.yaml

playbook: exporter_install.yaml

play #1 (dbserver): dbserver TAGS: []

pattern: [u'dbserver']

hosts (3):

192.168.31.48

192.168.31.46

192.168.31.47

play #2 (proxyServer): proxyServer TAGS: []

pattern: [u'proxyServer']

hosts (2):

192.168.31.41

192.168.31.42

Dry run (check mode):

[root@ansible playbooks]# ansible-playbook --check exporter_install.yaml

PLAY [dbserver] ********************************************************************

TASK [Gathering Facts] *************************************************************

ok: [192.168.31.48]

ok: [192.168.31.46]

ok: [192.168.31.47]

TASK [copy mysqld_exporter.tar] ****************************************************

changed: [192.168.31.46]

changed: [192.168.31.47]

changed: [192.168.31.48]

TASK [install mysqld_exporter] *****************************************************

changed: [192.168.31.46]

changed: [192.168.31.47]

changed: [192.168.31.48]

TASK [rm mysqld_exporter.tar] ******************************************************

skipping: [192.168.31.47]

skipping: [192.168.31.46]

skipping: [192.168.31.48]

PLAY [proxyServer] *****************************************************************

TASK [Gathering Facts] *************************************************************

ok: [192.168.31.42]

ok: [192.168.31.41]

TASK [copy node_exporter.tar] ******************************************************

changed: [192.168.31.41]

changed: [192.168.31.42]

TASK [install node_exporter] *******************************************************

changed: [192.168.31.42]

changed: [192.168.31.41]

TASK [rm node_exporter.tar] ********************************************************

skipping: [192.168.31.41]

skipping: [192.168.31.42]

PLAY RECAP *************************************************************************

192.168.31.41 : ok=3 changed=2 unreachable=0 failed=0 skipped=1 rescued=0 ignored=0

192.168.31.42 : ok=3 changed=2 unreachable=0 failed=0 skipped=1 rescued=0 ignored=0

192.168.31.46 : ok=3 changed=2 unreachable=0 failed=0 skipped=1 rescued=0 ignored=0

192.168.31.47 : ok=3 changed=2 unreachable=0 failed=0 skipped=1 rescued=0 ignored=0

192.168.31.48 : ok=3 changed=2 unreachable=0 failed=0 skipped=1 rescued=0 ignored=0

Run the playbook:

[root@ansible playbooks]# ansible-playbook exporter_install.yaml

PLAY [dbserver] ********************************************************************

TASK [Gathering Facts] *************************************************************

ok: [192.168.31.48]

ok: [192.168.31.46]

ok: [192.168.31.47]

TASK [copy mysqld_exporter.tar] ****************************************************

changed: [192.168.31.48]

changed: [192.168.31.47]

changed: [192.168.31.46]

TASK [install mysqld_exporter] *****************************************************

changed: [192.168.31.46]

changed: [192.168.31.48]

changed: [192.168.31.47]

TASK [rm mysqld_exporter.tar] ******************************************************

[WARNING]: Consider using the file module with state=absent rather than running

'rm'. If you need to use command because file is insufficient you can add 'warn:

false' to this command task or set 'command_warnings=False' in ansible.cfg to get

rid of this message.

changed: [192.168.31.48]

changed: [192.168.31.46]

changed: [192.168.31.47]

PLAY [proxyServer] *****************************************************************

TASK [Gathering Facts] *************************************************************

ok: [192.168.31.41]

ok: [192.168.31.42]

TASK [copy node_exporter.tar] ******************************************************

changed: [192.168.31.42]

changed: [192.168.31.41]

TASK [install node_exporter] *******************************************************

changed: [192.168.31.42]

changed: [192.168.31.41]

TASK [rm node_exporter.tar] ********************************************************

changed: [192.168.31.42]

changed: [192.168.31.41]

PLAY RECAP *************************************************************************

192.168.31.41 : ok=4 changed=3 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.31.42 : ok=4 changed=3 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.31.46 : ok=4 changed=3 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.31.47 : ok=4 changed=3 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

192.168.31.48 : ok=4 changed=3 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

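The run printed a warning because I used the shell module to delete the exporter tarballs, whereas Ansible recommends the file module for removing files.

The file module is used like this:

[root@ansible ~]# ansible all -m file -a "path=/var/tmp/hello.txt state=absent" # delete a file

Test-run the exporters: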

[root@ps2 /]# node_exporter

ts=2023-05-15T09:59:36.545Z caller=node_exporter.go:180 level=info msg="Starting node_exporter" version="(version=1.5.0, branch=HEAD, revision=1b48970ffcf5630534fb00bb0687d73c66d1c959)"

……

ts=2023-05-15T09:59:36.548Z caller=tls_config.go:232 level=info msg="Listening on" address=[::]:9100

ts=2023-05-15T09:59:36.548Z caller=tls_config.go:235 level=info msg="TLS is disabled." http2=false address=[::]:9100

^C

[root@dbs1 mysqld_exporter]# mysqld_exporter

ts=2023-05-15T10:01:02.601Z caller=mysqld_exporter.go:216 level=info msg="Starting mysqld_exporter" version="(version=0.15.0-rc.0, branch=HEAD, revision=bdb0fed3dee0fc0164cde86d13d50cc11ca65067)"

ts=2023-05-15T10:01:02.602Z caller=mysqld_exporter.go:217 level=info msg="Build context" build_context="(go=go1.20.3, platform=linux/amd64, user=root@4e0558944a6c, date=20230414-18:19:06, tags=netgo)"

ts=2023-05-15T10:01:02.602Z caller=config.go:150 level=error msg="failed to validate config" section=client err="no user specified in section or parent"

ts=2023-05-15T10:01:02.602Z caller=mysqld_exporter.go:221 level=info msg="Error parsing host config" file=/root/.my.cnf err="no configuration found"

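mysqld_exporter needs connection settings before it can start. They are covered in detail in the Prometheus monitoring section; for now, installing it is enough.

III. Configure Group Replication

First stop the MySQL service on all three DBS nodes:

service mysqld stop

1. Edit the MySQL configuration file /etc/my.cnf on each DBS

DBS1: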

[mysqld_safe]

[client]

socket=/data/mysql/mysql.sock

[mysqld]

#datadir=/data/mysql # already specified at installation time

socket=/data/mysql/mysql.sock

port = 3306

open_files_limit = 8192

innodb_buffer_pool_size = 512M

character-set-server=utf8mb4

server-id=1 # unique ID of each MySQL server

gtid_mode=on # required: group replication is built on GTIDs

enforce_gtid_consistency=on # required, for the same reason

log-bin=/data/mysql/master-bin # required: binary logging must be on; group replication collects information from the binary log

binlog_format=row # required: row format guarantees data consistency

binlog_checksum=none # required: group replication in 5.7 cannot handle binlog event checksums

master_info_repository=TABLE # required: the master metadata is stored in mysql.slave_master_info

relay_log_info_repository=TABLE # required: the relay log metadata is stored in mysql.slave_relay_log_info

relay_log=/data/mysql/relay-log # relay log base name; if omitted, the default value is used

log_slave_updates=ON # required: lets a joining node pick any member as donor for asynchronous recovery

sync-binlog=1 # recommended: flush the binlog to disk on every commit so that no log is lost on failure

log-error=/data/mysql/error.log

pid-file=/data/mysql/mysqld.pid

transaction_write_set_extraction=XXHASH64 # required: write sets are hashed with the XXHASH64 algorithm

loose-group_replication_group_name="aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa" # must be a valid UUID; generate one with uuidgen, or use all a's

loose-group_replication_start_on_boot=off # OFF is recommended: group replication does not start automatically with the MySQL instance

loose-group_replication_member_weight=40 # optional: a higher weight gives higher priority in automatic primary election

loose-group_replication_local_address="192.168.31.46:20001" # required: address and port this node uses to communicate with the other group members

loose-group_replication_group_seeds="192.168.31.46:20001,192.168.31.47:20002,192.168.31.48:20003" # seed member list; a joining node only contacts the seeds, so listing every group member is recommended

[mysql]

auto-rehash

prompt=\u@\d \R:\m mysql>

DBS2 (only the lines that differ between nodes are listed):

server-id=2

loose-group_replication_member_weight=30

loose-group_replication_local_address="192.168.31.47:20002"

DBS3:

server-id=3

loose-group_replication_member_weight=20

loose-group_replication_local_address="192.168.31.48:20003"
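Since only three lines differ per node, they can be generated from the node index instead of being typed by hand. This is a sketch under the numbering used in this article (node 1 gets weight 40 and port 20001, node 2 gets 30 and 20002, node 3 gets 20 and 20003); `node_overrides` is a hypothetical helper name, and the option is spelled `group_replication_member_weight`, which is the actual MySQL variable name.

```shell
#!/bin/bash
# node_overrides INDEX IP
# Prints the per-node my.cnf lines for DBS<INDEX>.
# Weight and port follow this article's scheme: weight = 50 - 10*INDEX,
# port = 20000 + INDEX. (Hypothetical helper for illustration.)
node_overrides() {
    local idx=$1 ip=$2
    local weight=$(( 50 - idx * 10 ))
    local port=$(( 20000 + idx ))
    printf 'server-id=%d\n' "$idx"
    printf 'loose-group_replication_member_weight=%d\n' "$weight"
    printf 'loose-group_replication_local_address="%s:%d"\n' "$ip" "$port"
}

# Example usage: print the overrides for DBS2
#   node_overrides 2 192.168.31.47
```

The output can be reviewed and then pasted into the [mysqld] section of the node's /etc/my.cnf in place of the DBS1 values.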

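2. Start DBS1 and bootstrap the group

Start the MySQL service on DBS1 first.

service mysqld start

Connect to DBS1 and create the replication user. Here the user is repl with the password Zh_000000.

mysql -uroot -p'Zh_000000'

create user repl@'192.168.31.%' identified by 'Zh_000000';

grant replication slave on *.* to repl@'192.168.31.%';

Configure the recovery channel on DBS1.

change master to

master_user='repl',

master_password='Zh_000000'

for channel 'group_replication_recovery';

Install the group replication plugin.

install plugin group_replication soname 'group_replication.so';

Bootstrap and start group replication. Only the first node bootstraps the group, and the bootstrap flag is switched off again right afterwards so that a later restart does not create a second group.

set @@global.group_replication_bootstrap_group=on;

start group_replication;

set @@global.group_replication_bootstrap_group=off;

Check whether DBS1 is ONLINE.

select * from performance_schema.replication_group_members\G

3. Add DBS2 and DBS3 to the replication group

Start the MySQL service on DBS2 and DBS3 first:

service mysqld start

Connect to MySQL on DBS2 and DBS3 and configure the recovery channel, which is used to recover data.

change master to

master_user='repl',

master_password='Zh_000000'

for channel 'group_replication_recovery';

Finally, install the group replication plugin on DBS2 and DBS3 and start group replication.

install plugin group_replication soname 'group_replication.so';

start group_replication;

On any node, check whether DBS1, DBS2, and DBS3 are all ONLINE.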

root@(none) 21:41 mysql>select * from performance_schema.replication_group_members\G

*************************** 1. row ***************************

CHANNEL_NAME: group_replication_applier

MEMBER_ID: a4364011-f2d1-11ed-91c7-000c293331c1

MEMBER_HOST: dbs3

MEMBER_PORT: 3306

MEMBER_STATE: ONLINE

*************************** 2. row ***************************

CHANNEL_NAME: group_replication_applier

MEMBER_ID: cb3246da-f2d1-11ed-82e0-000c29f590e6

MEMBER_HOST: dbs1

MEMBER_PORT: 3306

MEMBER_STATE: ONLINE

*************************** 3. row ***************************

CHANNEL_NAME: group_replication_applier

MEMBER_ID: cbbf471b-f2d1-11ed-97bd-000c2939c1eb

MEMBER_HOST: dbs2

MEMBER_PORT: 3306

MEMBER_STATE: ONLINE

3 rows in set (0.00 sec)
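The ONLINE check can also be automated, for example before moving on to the ProxySQL steps. The sketch below counts `MEMBER_STATE: ONLINE` lines in the `\G` output read from standard input; `count_online` and `group_is_healthy` are hypothetical helper names, and the expected member count is passed in as an argument.

```shell
#!/bin/bash
# count_online: reads \G-formatted output of
#   select * from performance_schema.replication_group_members\G
# from stdin and prints how many members report MEMBER_STATE: ONLINE.
count_online() {
    # grep -c prints 0 but exits non-zero when nothing matches; ignore that
    grep -c 'MEMBER_STATE: ONLINE' || true
}

# group_is_healthy EXPECTED: succeeds only if exactly EXPECTED members are ONLINE.
group_is_healthy() {
    local expected=$1 online
    online=$(count_online)
    [ "$online" -eq "$expected" ]
}

# Example usage on any DBS node (password as used in this article):
#   mysql -uroot -p'Zh_000000' \
#     -e 'select * from performance_schema.replication_group_members\G' \
#     | group_is_healthy 3 && echo "all members ONLINE"
```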

Create a database and a table on DBS1 as a test:

root@(none) 21:52 mysql>create database test;

Query OK, 1 row affected (0.00 sec)

root@(none) 21:53 mysql>use test;

Database changed

root@test 21:54 mysql>create table student(id int,name varchar(20),age smallint);

Query OK, 0 rows affected (0.01 sec)

Check on DBS2 and DBS3:

root@(none) 21:53 mysql>show databases

-> ;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| performance_schema |

| sys |

| test |

+--------------------+

5 rows in set (0.01 sec)

root@(none) 21:54 mysql>use test

Database changed

root@test 21:54 mysql>show tables;

+----------------+

| Tables_in_test |

+----------------+

| student |

+----------------+

1 row in set (0.00 sec)

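Replication works!

At this point the three-node, single-primary group replication setup of DBS1, DBS2, and DBS3 is complete. Next, configure ProxySQL.

IV. Configure ProxySQL

Note: keepalived will later be configured to make ProxySQL highly available, so every ProxySQL configuration step below is performed on both PS hosts.

1. Install ProxySQL on both PS hosts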

cat <<EOF | tee /etc/yum.repos.d/proxysql.repo

[proxysql_repo]

name= ProxySQL

baseurl=http://repo.proxysql.com/ProxySQL/proxysql-1.4.x/centos/\$releasever

gpgcheck=1

gpgkey=http://repo.proxysql.com/ProxySQL/repo_pub_key

EOF

yum -y install proxysql

After installation, start proxysql and connect to the ProxySQL admin interface:

[root@ps1 ~]# service proxysql start

Starting ProxySQL: 2023-05-16 13:44:06 [INFO] Using config file /etc/proxysql.cnf

DONE!

[root@ps1 ~]# mysql -uadmin -padmin -h127.0.0.1 -P6032

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 1

Server version: 5.5.30 (ProxySQL Admin Module)

Copyright (c) 2000, 2022, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

admin@(none) 13:44 mysql>

2. Add the backend nodes DBS1, DBS2, and DBS3 to the mysql_servers table

use main;

delete from mysql_servers;

insert into mysql_servers(hostgroup_id,hostname,port)

values(10,'192.168.31.46',3306),

(10,'192.168.31.47',3306),

(10,'192.168.31.48',3306);

load mysql servers to runtime;

save mysql servers to disk;

Check whether all three nodes are ONLINE:

admin@main 14:34 mysql>select hostgroup_id,hostname,port,status,weight from mysql_servers;

+--------------+---------------+------+--------+--------+

| hostgroup_id | hostname | port | status | weight |

+--------------+---------------+------+--------+--------+

| 10 | 192.168.31.46 | 3306 | ONLINE | 1 |

| 10 | 192.168.31.47 | 3306 | ONLINE | 1 |

| 10 | 192.168.31.48 | 3306 | ONLINE | 1 |

+--------------+---------------+------+--------+--------+

3 rows in set (0.00 sec)

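3. Monitor the backend nodes

First, create the user that ProxySQL uses for monitoring on DBS1. Note that the required privileges differ from those needed when ProxySQL proxies ordinary MySQL instances: when proxying group replication, ProxySQL reads its monitoring metrics from the view sys.gr_member_routing_candidate_status (created in step 4), so the monitoring user is granted SELECT on the sys schema that contains it.

# Run on DBS1:

mysql> create user monitor@'192.168.31.%' identified by 'Zh_000000';

mysql> grant select on sys.* to monitor@'192.168.31.%';

Then go back to ProxySQL and configure monitoring.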

set mysql-monitor_username='monitor';

set mysql-monitor_password='Zh_000000';

load mysql variables to runtime;

save mysql variables to disk;

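4. Create the system view sys.gr_member_routing_candidate_status

On DBS1, create the view sys.gr_member_routing_candidate_status, which provides ProxySQL with the group replication status metrics it monitors.

I saved the officially provided view-creation statements as the script addition_to_sys.sql; import it into MySQL with the following command.

mysql -uroot -pZh_000000 < addition_to_sys.sql

The contents of addition_to_sys.sql: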

USE sys;

DELIMITER $$

CREATE FUNCTION IFZERO(a INT, b INT)

RETURNS INT

DETERMINISTIC

RETURN IF(a = 0, b, a)$$

CREATE FUNCTION LOCATE2(needle TEXT(10000), haystack TEXT(10000), offset INT)

RETURNS INT

DETERMINISTIC

RETURN IFZERO(LOCATE(needle, haystack, offset), LENGTH(haystack) + 1)$$

CREATE FUNCTION GTID_NORMALIZE(g TEXT(10000))

RETURNS TEXT(10000)

DETERMINISTIC

RETURN GTID_SUBTRACT(g, '')$$

CREATE FUNCTION GTID_COUNT(gtid_set TEXT(10000))

RETURNS INT

DETERMINISTIC

BEGIN

DECLARE result BIGINT DEFAULT 0;

DECLARE colon_pos INT;

DECLARE next_dash_pos INT;

DECLARE next_colon_pos INT;

DECLARE next_comma_pos INT;

SET gtid_set = GTID_NORMALIZE(gtid_set);

SET colon_pos = LOCATE2(':', gtid_set, 1);

WHILE colon_pos != LENGTH(gtid_set) + 1 DO

SET next_dash_pos = LOCATE2('-', gtid_set, colon_pos + 1);

SET next_colon_pos = LOCATE2(':', gtid_set, colon_pos + 1);

SET next_comma_pos = LOCATE2(',', gtid_set, colon_pos + 1);

IF next_dash_pos < next_colon_pos AND next_dash_pos < next_comma_pos THEN

SET result = result +

SUBSTR(gtid_set, next_dash_pos + 1,

LEAST(next_colon_pos, next_comma_pos) - (next_dash_pos + 1)) -

SUBSTR(gtid_set, colon_pos + 1, next_dash_pos - (colon_pos + 1)) + 1;

ELSE

SET result = result + 1;

END IF;

SET colon_pos = next_colon_pos;

END WHILE;

RETURN result;

END$$

CREATE FUNCTION gr_applier_queue_length()

RETURNS INT

DETERMINISTIC

BEGIN

RETURN (SELECT sys.gtid_count( GTID_SUBTRACT( (SELECT

Received_transaction_set FROM performance_schema.replication_connection_status

WHERE Channel_name = 'group_replication_applier' ), (SELECT

@@global.GTID_EXECUTED) )));

END$$

CREATE FUNCTION gr_member_in_primary_partition()

RETURNS VARCHAR(3)

DETERMINISTIC

BEGIN

RETURN (SELECT IF( MEMBER_STATE='ONLINE' AND ((SELECT COUNT(*) FROM

performance_schema.replication_group_members WHERE MEMBER_STATE != 'ONLINE') >=

((SELECT COUNT(*) FROM performance_schema.replication_group_members)/2) = 0),

'YES', 'NO' ) FROM performance_schema.replication_group_members JOIN

performance_schema.replication_group_member_stats USING(member_id));

END$$

CREATE VIEW gr_member_routing_candidate_status AS SELECT

sys.gr_member_in_primary_partition() as viable_candidate,

IF( (SELECT (SELECT GROUP_CONCAT(variable_value) FROM

performance_schema.global_variables WHERE variable_name IN ('read_only',

'super_read_only')) != 'OFF,OFF'), 'YES', 'NO') as read_only,

sys.gr_applier_queue_length() as transactions_behind, Count_Transactions_in_queue as 'transactions_to_cert' from performance_schema.replication_group_member_stats;$$

DELIMITER ;

After the view is created, you can query it:

On DBS1:

root@(none) 14:40 mysql>select * from sys.gr_member_routing_candidate_status;

+------------------+-----------+---------------------+----------------------+

| viable_candidate | read_only | transactions_behind | transactions_to_cert |

+------------------+-----------+---------------------+----------------------+

| YES | NO | 0 | 0 |

+------------------+-----------+---------------------+----------------------+

On DBS2:

root@(none) 14:41 mysql>select * from sys.gr_member_routing_candidate_status;

+------------------+-----------+---------------------+----------------------+

| viable_candidate | read_only | transactions_behind | transactions_to_cert |

+------------------+-----------+---------------------+----------------------+

| YES | YES | 0 | 0 |

+------------------+-----------+---------------------+----------------------+

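5. Insert a record into mysql_group_replication_hostgroups

When ProxySQL proxies single-primary group replication and should automatically move nodes between the reader and writer groups, read_only monitoring must be enabled and a record must be inserted into the mysql_group_replication_hostgroups table.

The four hostgroup_id values used here are:

Writer group --> hg=10

Backup writer group --> hg=20

Reader group --> hg=30

Offline group --> hg=40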

delete from mysql_group_replication_hostgroups;

insert into mysql_group_replication_hostgroups(writer_hostgroup,backup_writer_hostgroup,reader_hostgroup,offline_hostgroup,active,max_writers,writer_is_also_reader,max_transactions_behind)

values(10,20,30,40,1,1,0,0);

load mysql servers to runtime;

save mysql servers to disk;

In the configuration above, writer_is_also_reader is set to 0 (false), so the primary only handles writes.

admin@main 15:02 mysql>select * from mysql_group_replication_hostgroups\G

*************************** 1. row ***************************

writer_hostgroup: 10

backup_writer_hostgroup: 20

reader_hostgroup: 30

offline_hostgroup: 40

active: 1

max_writers: 1

writer_is_also_reader: 0

max_transactions_behind: 0

comment: NULL

Now look at how the nodes were reassigned to hostgroups:

admin@main 15:06 mysql>select hostgroup_id, hostname, port,status from runtime_mysql_servers;

+--------------+---------------+------+--------------+

| hostgroup_id | hostname | port | status |

+--------------+---------------+------+--------------+

| 10 | 192.168.31.46 | 3306 | ONLINE |

| 30 | 192.168.31.48 | 3306 | ONLINE |

| 30 | 192.168.31.47 | 3306 | ONLINE |

| 20 | 192.168.31.47 | 3306 | OFFLINE_HARD |

+--------------+---------------+------+--------------+

Check the monitoring metrics for MGR.

admin@main 15:14 mysql>select hostname,

-> port,

-> viable_candidate,

-> read_only,

-> transactions_behind,

-> error

-> from mysql_server_group_replication_log

-> order by time_start_us desc

-> limit 6;

+---------------+------+------------------+-----------+---------------------+-------+

| hostname | port | viable_candidate | read_only | transactions_behind | error |

+---------------+------+------------------+-----------+---------------------+-------+

| 192.168.31.48 | 3306 | YES | YES | 0 | NULL |

| 192.168.31.47 | 3306 | YES | YES | 0 | NULL |

| 192.168.31.46 | 3306 | YES | NO | 0 | NULL |

| 192.168.31.48 | 3306 | YES | YES | 0 | NULL |

| 192.168.31.47 | 3306 | YES | YES | 0 | NULL |

| 192.168.31.46 | 3306 | YES | NO | 0 | NULL |

+---------------+------+------------------+-----------+---------------------+-------+
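The regrouping seen above can be pictured with a small sketch. This is a simplified model for illustration, not ProxySQL's actual implementation: the function name `classify_node` is hypothetical, and it only covers this article's settings (max_writers=1, writer_is_also_reader=0), ignoring transactions_behind.

```shell
#!/usr/bin/env bash
# Simplified, illustrative model of the hostgroup decision; NOT ProxySQL's real code.
# Hostgroup ids follow this article: 10 = writer, 30 = reader, 40 = offline.
# classify_node VIABLE_CANDIDATE READ_ONLY   (both values are YES or NO)
classify_node() {
    local viable_candidate="$1" read_only="$2"
    if [ "$viable_candidate" != "YES" ]; then
        echo 40    # not a healthy group member: offline hostgroup
    elif [ "$read_only" = "NO" ]; then
        echo 10    # writable primary: writer hostgroup
    else
        echo 30    # read-only secondary: reader hostgroup
    fi
}

classify_node YES NO     # the row reported for 192.168.31.46
classify_node YES YES    # the rows reported for 192.168.31.47 and 192.168.31.48
```

The two calls print 10 and 30, which matches the hostgroups shown in runtime_mysql_servers for the primary and the secondaries.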

6. Configure mysql_users

Run on DBS1:

grant all on *.* to root@'192.168.31.%' identified by 'Zh_000000';

Back on ProxySQL, insert a record into the mysql_users table:

delete from mysql_users;

insert into mysql_users(username,password,default_hostgroup,transaction_persistent)

values('root','Zh_000000',10,1);

load mysql users to runtime;

save mysql users to disk;

7. Configure read/write splitting rules for testing

delete from mysql_query_rules;

insert into mysql_query_rules(rule_id,active,match_digest,destination_hostgroup,apply)

VALUES (1,1,'^SELECT.*FOR UPDATE$',10,1),

(2,1,'^SELECT',30,1);

load mysql query rules to runtime;

save mysql query rules to disk;

Test whether reads and writes are split as expected:

mysql -uroot -pZh_000000 -h192.168.31.41 -P6033 -e 'create database gr_test;'

mysql -uroot -pZh_000000 -h192.168.31.41 -P6033 -e 'select user,host from mysql.user;'

mysql -uroot -pZh_000000 -h192.168.31.41 -P6033 -e 'show databases;'

Check how the statements were routed:

admin@(none) 18:28 mysql>select hostgroup,digest_text from stats_mysql_query_digest;

+-----------+----------------------------------+

| hostgroup | digest_text |

+-----------+----------------------------------+

| 10 | show databases |

| 30 | select user,host from mysql.user |

| 10 | create database gr_test |

| 10 | select @@version_comment limit ? |

+-----------+----------------------------------+

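The select statement was routed to the reader hostgroup hg=30, the show statement matched no rule and went to the user's default hostgroup hg=10, and the create statement was routed to the writer hostgroup hg=10.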
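The effect of the two rules can be reproduced offline with a minimal sketch. The function `route_query` is hypothetical and only mimics the two match_digest patterns plus the user's default_hostgroup; the `-i` flag mirrors the case-insensitive matching observed above, where a lowercase select was matched by `^SELECT`.

```shell
#!/usr/bin/env bash
# Illustrative re-implementation of the two query rules from this article.
# route_query SQL  -> prints the destination hostgroup id
route_query() {
    local sql="$1"
    if printf '%s\n' "$sql" | grep -Eiq '^SELECT.*FOR UPDATE$'; then
        echo 10    # rule 1: locking reads go to the writer hostgroup
    elif printf '%s\n' "$sql" | grep -Eiq '^SELECT'; then
        echo 30    # rule 2: all other SELECTs go to the reader hostgroup
    else
        echo 10    # no rule matched: default_hostgroup of the user
    fi
}

route_query "select user,host from mysql.user"
route_query "show databases"
route_query "SELECT * FROM t WHERE id = 1 FOR UPDATE"
```

Rule order matters here: if the `^SELECT` rule were checked first, a `SELECT ... FOR UPDATE` would be sent to a read-only node.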

8. Test MGR failover

Stop one of the MGR nodes, for example by shutting down the mysql service on DBS1, the current primary.

Run on DBS1:

[root@dbs1 ~]# service mysqld stop

Shutting down MySQL............. SUCCESS!

Then check the node status on ProxySQL:

admin@(none) 18:29 mysql>select hostgroup_id, hostname, port,status from runtime_mysql_servers;

+--------------+---------------+------+---------+

| hostgroup_id | hostname | port | status |

+--------------+---------------+------+---------+

| 10 | 192.168.31.48 | 3306 | ONLINE |

| 40 | 192.168.31.46 | 3306 | SHUNNED |

| 30 | 192.168.31.47 | 3306 | ONLINE |

+--------------+---------------+------+---------+

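The result shows DBS1 in status SHUNNED, meaning ProxySQL is avoiding that node. DBS3 (192.168.31.48) has moved to hostgroup hg=10, which means it was elected as the new primary.

Now add DBS1 back to the group. Run on DBS1: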

shell> service mysqld start

mysql> start group_replication;

Then check the node status on ProxySQL again:

admin@(none) 19:17 mysql>select hostgroup_id, hostname, port,status from runtime_mysql_servers;

+--------------+---------------+------+--------+

| hostgroup_id | hostname | port | status |

+--------------+---------------+------+--------+

| 10 | 192.168.31.48 | 3306 | ONLINE |

| 30 | 192.168.31.46 | 3306 | ONLINE |

| 30 | 192.168.31.47 | 3306 | ONLINE |

+--------------+---------------+------+--------+

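DBS1 is ONLINE again, and DBS3 (192.168.31.48) remains in hostgroup hg=10.

This completes the configuration of the MGR cluster with read/write splitting behind ProxySQL. The next step is to configure keepalived on the ProxySQL servers for high availability.

V. Configure keepalived

1. Install keepalived on both PS machines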

yum install keepalived -y

2. Edit the configuration file

PS1:

[root@ps1 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

# keep all default options

}

vrrp_instance VI_1 {

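state MASTER # PS1 should be the master of this instance

interface ens33 # the NIC is ens33

virtual_router_id 51 # the default id 51 is fine

priority 120 # higher priority, so this node wins the election

advert_int 1 # advertisement interval, default 1s, unchanged

authentication { # the defaults are fine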

auth_type PASS

auth_pass 1111

}

virtual_ipaddress { # keep a single VIP; first make sure the address is not already in use

192.168.31.51

}

}

vrrp_instance VI_2 { # add a second instance

state BACKUP # PS1 is the backup in instance 2

interface ens33

virtual_router_id 52 # must differ from the id of the first instance

priority 100 # lower priority

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.31.52 # the VIP must also differ from the one in the first instance

}

}

PS2:

[root@ps2 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

# keep all default options

}

vrrp_instance VI_1 {

state BACKUP # PS2 should be the backup of this instance

interface ens33

virtual_router_id 51 # same as on PS1

priority 100 # lower than on PS1, so this node stays backup

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress { # again a single VIP, same as on PS1

192.168.31.51

}

}

vrrp_instance VI_2 { # PS2 also gets a second instance

state MASTER # PS2 is the master in instance 2

interface ens33

virtual_router_id 52 # same as on PS1

priority 120 # higher than on PS1

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.31.52 # same as on PS1

}

}

Start the service, then check the processes and IP addresses:

[root@ps1 ~]# service keepalived start

Redirecting to /bin/systemctl start keepalived.service

[root@ps1 ~]# ps aux|grep keep

root 3105 0.0 0.0 118712 1380 ? Ss 15:31 0:00 /usr/sbin/keepalived -D

root 3106 0.0 0.1 129640 3304 ? S 15:31 0:00 /usr/sbin/keepalived -D

root 3107 0.0 0.1 133812 2928 ? S 15:31 0:05 /usr/sbin/keepalived -D

root 3211 0.0 0.0 112824 984 pts/0 R+ 19:35 0:00 grep --color=auto keep

Check the IP addresses:

[root@ps1 ~]# ip ad

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:f4:71:d3 brd ff:ff:ff:ff:ff:ff

inet 192.168.31.41/24 brd 192.168.31.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.31.51/32 scope global ens33

valid_lft forever preferred_lft forever

[root@ps2 ~]# ip ad

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:b8:cf:8e brd ff:ff:ff:ff:ff:ff

inet 192.168.31.42/24 brd 192.168.31.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.31.52/32 scope global ens33

valid_lft forever preferred_lft forever

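PS1 and PS2 each hold the master role for one instance, so each acts as the backup of the other.

3. Simulate a PS failure and test VIP failover

Stop the keepalived service on PS1: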

[root@ps1 ~]# service keepalived stop

Redirecting to /bin/systemctl stop keepalived.service

[root@ps1 ~]# ip ad

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:f4:71:d3 brd ff:ff:ff:ff:ff:ff

inet 192.168.31.41/24 brd 192.168.31.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

The VIP is gone from PS1. Now check the IP addresses on PS2:

[root@ps2 ~]# ip ad

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:b8:cf:8e brd ff:ff:ff:ff:ff:ff

inet 192.168.31.42/24 brd 192.168.31.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.31.52/32 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.31.51/32 scope global ens33

valid_lft forever preferred_lft forever

The VIP has moved to PS2, so the ProxySQL service remains highly available.
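Checking the `ip ad` output by eye works for two machines; a small helper makes the same check scriptable. This is a minimal sketch: the function name `has_vip` is hypothetical, and the sample text is taken from the PS2 output above.

```shell
#!/usr/bin/env bash
# has_vip LISTING VIP
# LISTING is the text printed by `ip ad`; succeeds when VIP is configured on the host.
has_vip() {
    local listing="$1" vip="$2"
    # -F treats the dots in the address literally instead of as regex wildcards
    printf '%s\n' "$listing" | grep -Fq "inet ${vip}/"
}

# Sample: the inet lines PS2 showed after keepalived was stopped on PS1.
sample='inet 192.168.31.42/24 brd 192.168.31.255 scope global noprefixroute ens33
inet 192.168.31.52/32 scope global ens33
inet 192.168.31.51/32 scope global ens33'

for vip in 192.168.31.51 192.168.31.52; do
    if has_vip "$sample" "$vip"; then
        echo "$vip present"
    else
        echo "$vip missing"
    fi
done
```

On a live machine the listing would come from the command itself, for example `has_vip "$(ip ad)" 192.168.31.51`.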

4. Query the backend mysql data remotely through the VIP

[root@ps1 ~]# mysql -uroot -pZh_000000 -h192.168.31.52 -P6033 -e 'create database free;'

[root@ps1 ~]# mysql -uroot -pZh_000000 -h192.168.31.52 -P6033 -e 'show databases;'

+--------------------+

| Database |

+--------------------+

| information_schema |

| free |

| gr_test |

| mysql |

| performance_schema |

| sys |

| test |

+--------------------+

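Both statements succeed.

5. Health check + notify to stop the service automatically

Approach:

- Add a vrrp_script block to the configuration file and reference it from the vrrp instance through a track_script block. The vrrp_script block runs a script once per second that checks whether the proxysql service is healthy. When the script exits with a non-zero status (for example 1), the priority of the vrrp instance is lowered by the configured weight so that the instance becomes backup, and notify_backup then runs a command or script that stops keepalived.

- The script that checks the proxysql service can use netstat to see whether ports 6032 and 6033 are listening, use ps to see whether the proxysql process exists, or use service proxysql status.

- notify_master can additionally be configured to start the proxysql service, in which case proxysql does not need to be enabled at boot.

Readers who are interested can configure this themselves.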
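The approach above could look roughly like the following. This is a sketch under stated assumptions, not a tested production setup: the script path `/etc/keepalived/check_proxysql.sh`, the function name `ports_listening`, the script name `chk_proxysql` and the weight of -30 are all choices made for illustration. The keepalived keywords themselves (vrrp_script, script, interval, weight, track_script, notify_backup) are standard.

```shell
#!/usr/bin/env bash
# Hypothetical health check, e.g. saved as /etc/keepalived/check_proxysql.sh.

# ports_listening LISTING PORT...
# LISTING is the text printed by `ss -lnt` (or `netstat -lnt`).
# Succeeds only when every PORT shows up as a listening local port.
ports_listening() {
    local listing="$1" port
    shift
    for port in "$@"; do
        # ":PORT" followed by whitespace marks the end of the local-address column
        printf '%s\n' "$listing" | grep -Eq ":${port}[[:space:]]" || return 1
    done
    return 0
}

# Entry point for keepalived: exit status 0 = healthy, non-zero = failed.
check_proxysql() {
    ports_listening "$(ss -lnt)" 6032 6033
}

# Matching keepalived.conf fragment (a sketch; names, path and weight are assumptions):
#   vrrp_script chk_proxysql {
#       script "/etc/keepalived/check_proxysql.sh"
#       interval 1        # run the check every second
#       weight -30        # 120 - 30 = 90, below the peer's priority of 100
#   }
#   vrrp_instance VI_1 {
#       ...
#       track_script {
#           chk_proxysql
#       }
#       notify_backup "/usr/bin/systemctl stop keepalived"
#   }
```

To use it as the tracked script, the file would end with a call to `check_proxysql` so that the script's exit status reflects the check. Passing the listing in as an argument keeps `ports_listening` testable without a running ProxySQL.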

This completes the keepalived configuration. The next step is to set up Prometheus monitoring.

VI. Configure Prometheus

1. Install Prometheus on Prom

[root@prom ~]# mkdir /prom

[root@prom ~]# cd /prom

# upload the tarball first

[root@prom prom]# ls

prometheus-2.43.0.linux-amd64.tar.gz

[root@prom prom]# tar xf prometheus-2.43.0.linux-amd64.tar.gz

[root@prom prom]# ls

prometheus-2.43.0.linux-amd64 prometheus-2.43.0.linux-amd64.tar.gz

[root@prom prom]# mv prometheus-2.43.0.linux-amd64 prometheus-2.43.0

[root@prom prom]# cd prometheus-2.43.0

[root@prom prometheus-2.43.0]# ls

console_libraries LICENSE prometheus promtool

consoles NOTICE prometheus.yml

[root@prom prometheus-2.43.0]# PATH=$PATH:/prom/prometheus-2.43.0 # add this directory to PATH for the current shell

[root@prom prometheus-2.43.0]# echo 'export PATH=$PATH:/prom/prometheus-2.43.0' >>/root/.bashrc # single quotes keep $PATH unexpanded, so it is evaluated at every login

[root@prom prometheus-2.43.0]# service firewalld stop # stop the firewall

Redirecting to /bin/systemctl stop firewalld.service

[root@prom prometheus-2.43.0]# systemctl disable firewalld # do not start the firewall at boot

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@prom prometheus-2.43.0]# setenforce 0 # switch SELinux to permissive mode for the current boot

[root@prom prometheus-2.43.0]# sed -i '/^SELINUX=/ s/enforcing/disabled/' /etc/selinux/config # disable SELinux permanently (takes effect after a reboot)

2. Run Prometheus as a service

[root@prom prometheus-2.43.0]# vi /usr/lib/systemd/system/prometheus.service # a unit file placed in this directory registers the service

[root@prom prometheus-2.43.0]# systemctl daemon-reload # reload the unit files

[root@prom prometheus-2.43.0]# service prometheus start # start it through the service command

Redirecting to /bin/systemctl start prometheus.service

[root@prom prometheus-2.43.0]# ps aux |grep prom

root 1628 1.4 2.0 798700 39088 ? Ssl 15:57 0:00 /prom/prometheus-2.43.0/prometheus --config.file=/prom/prometheus-2.43.0/prometheus.yml

root 1635 0.0 0.0 112720 984 pts/0 R+ 15:57 0:00 grep --color=auto prom

[root@prom ~]# systemctl enable prometheus # start at boot

Created symlink from /etc/systemd/system/multi-user.target.wants/prometheus.service to /usr/lib/systemd/system/prometheus.service.
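The content of prometheus.service is not shown above. A minimal unit file consistent with the process line and the enable output could be generated as follows. This is a sketch, not the author's actual file: the function name `render_prometheus_unit`, the Description text and the Restart policy are assumptions; the ExecStart line and the multi-user.target dependency follow the output shown above.

```shell
#!/usr/bin/env bash
# render_prometheus_unit [INSTALL_DIR]
# Prints a minimal systemd unit for Prometheus to stdout.
render_prometheus_unit() {
    local home="${1:-/prom/prometheus-2.43.0}"
    cat <<EOF
[Unit]
Description=Prometheus monitoring server
After=network.target

[Service]
ExecStart=${home}/prometheus --config.file=${home}/prometheus.yml
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
}

render_prometheus_unit
```

Redirecting the output to /usr/lib/systemd/system/prometheus.service and running systemctl daemon-reload would register the service. Since neither a WorkingDirectory nor --storage.tsdb.path is set, Prometheus keeps its data in a data/ directory relative to the service's working directory.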

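Check the result

In a browser on Windows, open ip:9090 to reach the web UI that ships with Prometheus, then pick a metric in the search box to see it graphed. The built-in graphs are fairly basic; Grafana, set up later, gives much better results.

Until exporters are configured for other servers, Prometheus only monitors itself. Exporter configuration is covered next.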

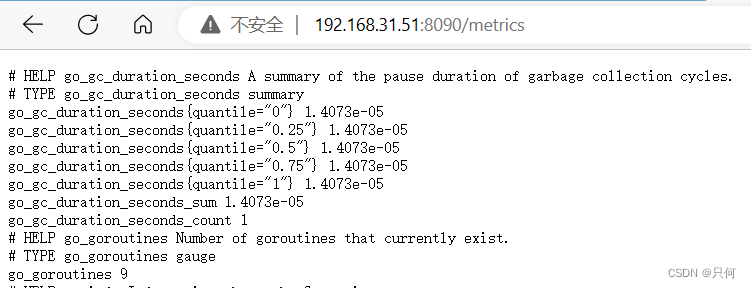

3. Configure and run node_exporter on both PS machines

node_exporter was already installed on the PS machines through ansible, so it only needs to be started:

[root@ps1 ~]# nohup node_exporter --web.listen-address 0.0.0.0:8090 &

[root@ps1 ~]# ps aux|grep exporter

root 3134 0.5 0.7 724656 13096 pts/0 Sl 16:10 0:00 node_exporter --web.listen-address 0.0.0.0:8090

root 3138 0.0 0.0 112824 988 pts/0 R+ 16:11 0:00 grep --color=auto exporter

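Check the result

The exporter's built-in web service exposes the collected metrics.

In a browser on Windows, open port 8090 of the exporter server's IP (the port is whatever you chose).

The page shows a Metrics link; clicking it displays the data.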

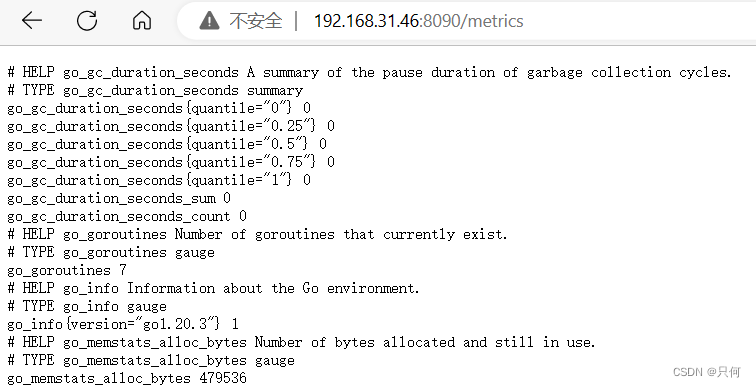

4. Configure mysqld_exporter on the three DBS machines

On DBS1, create the account that mysqld_exporter will use to connect:

grant all on *.* to 'mysqld_exporter'@'%' identified by '123456';

In the previously unpacked mysqld_exporter directory, create the configuration file for connecting to the local database:

[root@dbs1 mysqld_exporter]# pwd

/mysqld_exporter

[root@dbs1 mysqld_exporter]# ls

LICENSE mysqld_exporter NOTICE

[root@dbs1 mysqld_exporter]# vim my.cnf

[client]

user=mysqld_exporter

password=123456

Start it in the background:

[root@dbs1 mysqld_exporter]# nohup mysqld_exporter --config.my-cnf=/mysqld_exporter/my.cnf --web.listen-address 0.0.0.0:8090 &

[root@dbs1 mysqld_exporter]# ps aux|grep exporter

root 1915 0.2 0.4 719528 7976 pts/0 Sl 17:18 0:00 mysqld_exporter --config.my-cnf=/mysqld_exporter/my.cnf --web.listen-address 0.0.0.0:8090

root 1919 0.0 0.0 112824 988 pts/0 R+ 17:18 0:00 grep --color=auto exporter

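Check the result:

In a browser on Windows, open port 8090 of the mysqld_exporter server's IP (the port is whatever you chose).

5. Configure Prometheus to scrape the exporters

[root@prom prometheus-2.43.0]# vi prometheus.yml # append the following at the end of the file, under scrape_configs

# add the exporters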

  - job_name: "PS1"

    static_configs:

      - targets: ["192.168.31.41:8090"]

  - job_name: "PS2"

    static_configs:

      - targets: ["192.168.31.42:8090"]

  - job_name: "DBS1"

    static_configs:

      - targets: ["192.168.31.46:8090"]

  - job_name: "DBS2"

    static_configs:

      - targets: ["192.168.31.47:8090"]

  - job_name: "DBS3"

    static_configs:

      - targets: ["192.168.31.48:8090"]

[root@prom prometheus-2.43.0]# service prometheus restart # restart the prometheus service

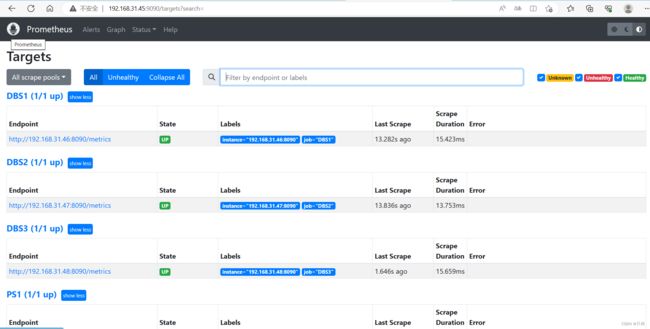

Check the result

In the Prometheus web UI, open Status and then Targets to see the monitored nodes.
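Because YAML is indentation-sensitive, the scrape entries are easy to get wrong when typed by hand. As a sketch, the five jobs above could be generated instead; the function name `render_scrape_jobs` and its NAME=IP argument format are hypothetical, while the indentation assumes the entries sit under the top-level scrape_configs key of the stock prometheus.yml.

```shell
#!/usr/bin/env bash
# render_scrape_jobs PORT NAME=IP...
# Prints one scrape job per NAME=IP pair, indented for use under scrape_configs.
render_scrape_jobs() {
    local port="$1" pair name ip
    shift
    for pair in "$@"; do
        name="${pair%%=*}"    # text before the first '='
        ip="${pair#*=}"       # text after the first '='
        printf '  - job_name: "%s"\n' "$name"
        printf '    static_configs:\n'
        printf '      - targets: ["%s:%s"]\n' "$ip" "$port"
    done
}

render_scrape_jobs 8090 \
    PS1=192.168.31.41 PS2=192.168.31.42 \
    DBS1=192.168.31.46 DBS2=192.168.31.47 DBS3=192.168.31.48
```

The promtool binary that ships in the same tarball can validate the edited file before the restart, with `promtool check config prometheus.yml`.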

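This completes the Prometheus monitoring and exporter setup. Next comes the Grafana deployment, which gives much better visualization.

VII. Configure Grafana

1. Installation

Install it on the Prom server: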

wget https://dl.grafana.com/enterprise/release/grafana-enterprise-9.4.7-1.x86_64.rpm # very slow to download without a proxy

[root@prom /]# cd grafana/ # here I uploaded the package instead

[root@prom grafana]# ls

grafana-enterprise-9.4.7-1.x86_64.rpm

[root@prom grafana]# yum install grafana-enterprise-9.4.7-1.x86_64.rpm -y

2. Start Grafana

[root@prom grafana]# service grafana-server start

Starting grafana-server (via systemctl): [ 确定 ]

[root@prom grafana]# ps aux |grep graf

grafana 2144 25.1 5.5 1160540 104000 ? Ssl 17:55 0:02 /usr/share/grafana/bin/grafana server --config=/etc/grafana/grafana.ini --pidfile=/var/run/grafana/grafana-server.pid --packaging=rpm cfg:default.paths.logs=/var/log/grafana cfg:default.paths.data=/var/lib/grafana cfg:default.paths.plugins=/var/lib/grafana/plugins cfg:default.paths.provisioning=/etc/grafana/provisioning

root 2152 0.0 0.0 112720 980 pts/0 S+ 17:55 0:00 grep --color=auto graf

[root@prom grafana]# netstat -anplut |grep graf

tcp 0 0 192.168.31.45:41200 34.120.177.193:443 ESTABLISHED 2144/grafana

tcp 0 283 192.168.31.45:58284 185.199.110.133:443 ESTABLISHED 2144/grafana

tcp6 0 0 :::3000 :::* LISTEN 2144/grafana

[root@prom grafana]# systemctl enable grafana-server # start at boot

As shown, grafana listens on port 3000 by default.

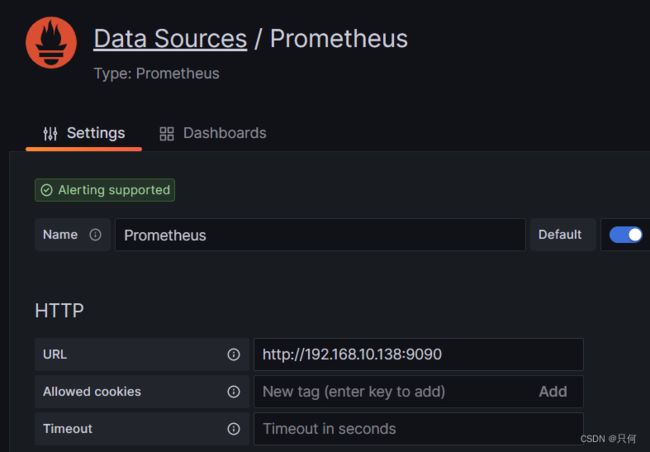

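3. Open the service and set up the data source

In a browser on Windows, open port 3000 of the server's IP. Log in with username admin and password admin, set a new password, and the Grafana home page opens.

Set up the data source

Click add Data source, then click Prometheus.

In the URL field, enter the Prom server's IP address and port.

Scroll to the bottom and click save & test; the check mark shown below means it worked.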

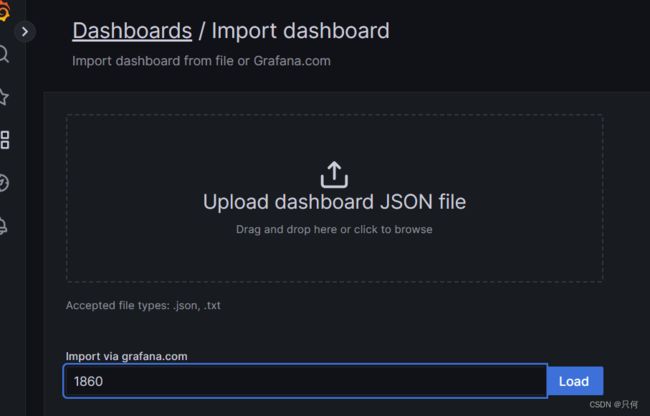

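4. Configure dashboards

A dashboard is a page that displays data, built from PromQL queries and Grafana panel settings.

Free official dashboards: Dashboards | Grafana Labs

The four-square icon in the left sidebar is the Dashboards menu. Click import to import a template, either by uploading a JSON file or by entering a template ID.

After entering the template ID, click load.

The graphs are now visible. The template I imported, 1860, is a dashboard for node_exporter data, so a second dashboard for mysqld_exporter is still needed, for example 7362:

This completes the Grafana configuration, and the cluster as a whole is essentially built. Next, a DNS server is set up to provide name resolution for the test machine.

VIII. Configure the DNS server

1. Install bind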

yum install bind -y

yum install bind-utils -y # provides the DNS lookup commands such as dig and nslookup

2. Start the named service and enable it at boot

[root@dns ~]# service named start

Redirecting to /bin/systemctl start named.service

[root@dns ~]# systemctl enable named

Created symlink from /etc/systemd/system/multi-user.target.wants/named.service to /usr/lib/systemd/system/named.service.

[root@dns ~]# ps aux |grep named

named 1796 0.1 3.0 167280 57496 ? Ssl 19:13 0:00 /usr/sbin/named -u named -c /etc/named.conf

root 1819 0.0 0.0 112720 984 pts/0 S+ 19:13 0:00 grep --color=auto named

[root@dns ~]# netstat -anplut |grep named

tcp 0 0 127.0.0.1:53 0.0.0.0:* LISTEN 1796/named

tcp 0 0 127.0.0.1:953 0.0.0.0:* LISTEN 1796/named

tcp6 0 0 ::1:53 :::* LISTEN 1796/named

tcp6 0 0 ::1:953 :::* LISTEN 1796/named

udp 0 0 127.0.0.1:53 0.0.0.0:* 1796/named

udp6 0 0 ::1:53 :::* 1796/named

[root@dns named]# setenforce 0 # switch SELinux to permissive mode for the current boot

[root@dns named]# sed -i '/^SELINUX=/ s/enforcing/disabled/' /etc/selinux/config # disable SELinux permanently (takes effect after a reboot)

[root@dns named]# systemctl disable firewalld # do not start the firewall at boot

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@dns named]# service firewalld stop # stop the firewall

Redirecting to /bin/systemctl stop firewalld.service

By default named uses port 53 and serves only the local machine (it listens on port 53 of the loopback addresses only).

3. Point the resolver configuration at the DNS server

[root@dns ~]# vi /etc/resolv.conf

# Generated by NetworkManager

#nameserver 114.114.114.114

nameserver 127.0.0.1

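This makes the machine use itself, 127.0.0.1, as its DNS server.

Changes to resolv.conf are temporary and are lost on restart. To make the change permanent, set a static IP and DNS in the ifcfg-ens33 file, or change the DNS address handed out by the DHCP server.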

[root@dns ~]# vi /etc/sysconfig/network-scripts/ifcfg-ens33

DNS1=127.0.0.1

Try resolving qq.com:

[root@dns ~]# cat /etc/resolv.conf

# Generated by NetworkManager

nameserver 127.0.0.1

[root@dns ~]# nslookup qq.com

Server: 127.0.0.1

Address: 127.0.0.1#53

Non-authoritative answer:

Name: qq.com

Address: 123.151.137.18

Name: qq.com

Address: 61.129.7.47

Name: qq.com

Address: 183.3.226.35

4. Edit the main named configuration file /etc/named.conf

[root@dns ~]# vi /etc/named.conf

options {

listen-on port 53 { any; }; # changed from 127.0.0.1 to any

listen-on-v6 port 53 { ::1; }; # IPv6 is rarely needed here; change it to any as well if required

directory "/var/named";

dump-file "/var/named/data/cache_dump.db";

statistics-file "/var/named/data/named_stats.txt";

memstatistics-file "/var/named/data/named_mem_stats.txt";

recursing-file "/var/named/data/named.recursing";

secroots-file "/var/named/data/named.secroots";

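allow-query { any; }; # changed from localhost to any

[root@dns ~]# service named restart # restart the service

5. Edit the zone configuration file so that named serves the zhihe.com domain

[root@dns ~]# vi /etc/named.rfc1912.zones # insert a new zone as shown below, preferably right after the localhost zone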

zone "zhihe.com" IN {

type master;

file "zhihe.com.zone";

allow-update { none; };

};

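6. Create the zhihe.com.zone data file in the zone data directory

[root@dns ~]# cd /var/named

[root@dns named]# cp -a named.localhost zhihe.com.zone

# Note the -a option: it preserves the attributes of the original file. Without it the group becomes root and named cannot read the file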

[root@dns named]# ls

data named.ca named.localhost slaves dynamic named.empty named.loopback zhihe.com.zone

7. Edit the zhihe.com.zone data file

[root@dns named]# vi zhihe.com.zone

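$TTL 1D ; default TTL for the records: 1 day

@ IN SOA @ rname.invalid. (

        0 ; serial: increase it on every change so secondaries notice the update

        1D ; refresh: how often a secondary checks the primary for changes

        1H ; retry: how long a secondary waits before retrying a failed refresh

        1W ; expire: how long a secondary keeps serving the zone without reaching the primary

        3H ) ; minimum: how long negative (no such name) answers are cached

        NS @ ; @ stands for the zhihe.com zone itself

        A 127.0.0.1

www IN A 192.168.31.51 ; the VIP normally held by PS1

www IN A 192.168.31.52 ; the VIP normally held by PS2; two A records for one name are usually served round-robin, which spreads the load

* IN A 192.168.31.51 ; wildcard record: answers names not matched above, usually placed last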

8. Validate the configuration files

[root@dns named]# named-checkconf /etc/named.rfc1912.zones

[root@dns named]# named-checkzone zhihe.com /var/named/zhihe.com.zone

zone zhihe.com/IN: loaded serial 0

OK

Client test

Before testing, change the DNS server on the client:

[root@ansible ~]# vi /etc/resolv.conf

nameserver 192.168.31.43

Ping www.baidu.com to check that ordinary resolution still works, then ping www.zhihe.com:

[root@ansible src]# ping www.baidu.com

PING www.a.shifen.com (14.119.104.189) 56(84) bytes of data.

64 bytes from 14.119.104.189 (14.119.104.189): icmp_seq=1 ttl=54 time=25.2 ms

64 bytes from 14.119.104.189 (14.119.104.189): icmp_seq=2 ttl=54 time=28.0 ms

[root@ansible src]# ping www.zhihe.com

PING www.zhihe.com (192.168.31.52) 56(84) bytes of data.

64 bytes from 192.168.31.52 (192.168.31.52): icmp_seq=1 ttl=64 time=0.652 ms

64 bytes from 192.168.31.52 (192.168.31.52): icmp_seq=2 ttl=64 time=1.47 ms
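A single ping only shows one of the two addresses. To confirm that the name really resolves to both VIPs, the answers from dig can be counted. This is a small sketch; the function name `count_addresses` is hypothetical, and the DNS server address 192.168.31.43 is the one used in resolv.conf above.

```shell
#!/usr/bin/env bash
# count_addresses
# Reads `dig +short` output on stdin and prints the number of distinct answers.
count_addresses() {
    sort -u | grep -c .
}

# Offline example with the two records from zhihe.com.zone:
printf '192.168.31.52\n192.168.31.51\n' | count_addresses

# Against the live server (requires dig from bind-utils):
#   dig +short www.zhihe.com A @192.168.31.43 | count_addresses
```

A result of 2 means both www records are being served; repeating the dig query a few times should also show the order of the answers changing when round-robin is in effect.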

IX. OLTP benchmark

Before testing, raise the shell resource limits (ulimit) on the test machine:

ulimit -n 100000 # open files

ulimit -u 100000 # max user processes

ulimit -s 100000 # stack size

sysbench

Install with yum:

yum install sysbench -y

[root@ansible ~]# sysbench --version

sysbench 1.0.17

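We mainly run the OLTP mixed read/write test (with range selects) and a write-only test. The commands are listed first; the results follow.

OLTP mixed read/write (range select) test:

# prepare the data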

sysbench --threads=1000 --report-interval=5 --db-driver=mysql --mysql-user=root --mysql-password=Zh_000000 --mysql-port=6033 --mysql-host=www.zhihe.com --mysql-db=test --table_size=6000 --tables=2 oltp_read_write prepare

# run the test

sysbench --threads=1000 --time=60 --report-interval=5 --db-driver=mysql --mysql-user=root --mysql-password=Zh_000000 --mysql-port=6033 --mysql-host=www.zhihe.com --mysql-db=test --table_size=6000 --tables=2 oltp_read_write run

# clean up the data

sysbench --threads=8 --time=30 --report-interval=5 --db-driver=mysql --mysql-user=root --mysql-password=Zh_000000 --mysql-port=6033 --mysql-host=www.zhihe.com --mysql-db=test --table_size=6000 --tables=2 oltp_read_write cleanup

OLTP write-only test:

# prepare the data

sysbench --threads=100 --report-interval=5 --db-driver=mysql --mysql-user=root --mysql-password=Zh_000000 --mysql-port=6033 --mysql-host=www.zhihe.com --mysql-db=test --table_size=6000 --tables=2 oltp_insert prepare

# run the test

sysbench --threads=64 --time=30 --report-interval=5 --db-driver=mysql --mysql-user=root --mysql-password=Zh_000000 --mysql-port=6033 --mysql-host=www.zhihe.com --mysql-db=test --table_size=6000 --tables=2 oltp_insert run

# clean up the data

sysbench --threads=32 --time=30 --report-interval=5 --db-driver=mysql --mysql-user=root --mysql-password=Zh_000000 --mysql-port=6033 --mysql-host=www.zhihe.com --mysql-db=test --table_size=6000 --tables=2 oltp_insert cleanup

Mixed read/write test result:

[root@ansible sysbench]# sysbench --threads=8 --time=30 --report-interval=5 --db-driver=mysql --mysql-user=root --mysql-password=Zh_000000 --mysql-port=6033 --mysql-host=www.zhihe.com --mysql-db=test --table_size=6000 --tables=2 oltp_read_write run

sysbench 1.0.17 (using system LuaJIT 2.0.4)

Running the test with following options:

Number of threads: 8

Report intermediate results every 5 second(s)

Initializing random number generator from current time

Initializing worker threads...

Threads started!

[ 5s ] thds: 8 tps: 180.92 qps: 3632.95 (r/w/o: 2544.64/691.29/397.02) lat (ms,95%): 55.82 err/s: 0.00 reconn/s: 0.00

[ 10s ] thds: 8 tps: 182.82 qps: 3658.53 (r/w/o: 2562.23/694.26/402.04) lat (ms,95%): 58.92 err/s: 0.00 reconn/s: 0.00

[ 15s ] thds: 8 tps: 174.59 qps: 3488.93 (r/w/o: 2440.81/666.75/381.37) lat (ms,95%): 62.19 err/s: 0.00 reconn/s: 0.00

[ 20s ] thds: 8 tps: 181.01 qps: 3620.13 (r/w/o: 2534.69/689.63/395.81) lat (ms,95%): 58.92 err/s: 0.20 reconn/s: 0.00

[ 25s ] thds: 8 tps: 182.40 qps: 3662.09 (r/w/o: 2565.06/700.62/396.41) lat (ms,95%): 56.84 err/s: 0.00 reconn/s: 0.00

[ 30s ] thds: 8 tps: 140.35 qps: 2800.60 (r/w/o: 1959.30/535.21/306.09) lat (ms,95%): 90.78 err/s: 0.00 reconn/s: 0.00

SQL statistics:

queries performed:

read: 73080

write: 19912

other: 11406

total: 104398

transactions: 5219 (173.53 per sec.)

queries: 104398 (3471.10 per sec.)

ignored errors: 1 (0.03 per sec.)

reconnects: 0 (0.00 per sec.)

General statistics:

total time: 30.0731s

total number of events: 5219

Latency (ms):

min: 28.76

avg: 46.03

max: 126.84

95th percentile: 69.29

sum: 240249.01

Threads fairness:

events (avg/stddev): 652.3750/15.15

execution time (avg/stddev): 30.0311/0.02

Write-only test result:

[root@ansible sysbench]# sysbench --threads=64 --time=30 --report-interval=5 --db-driver=mysql --mysql-user=root --mysql-password=Zh_000000 --mysql-port=6033 --mysql-host=www.zhihe.com --mysql-db=test --table_size=6000 --tables=2 oltp_insert run

sysbench 1.0.17 (using system LuaJIT 2.0.4)

Running the test with following options:

Number of threads: 64

Report intermediate results every 5 second(s)

Initializing random number generator from current time

Initializing worker threads...

Threads started!

[ 5s ] thds: 64 tps: 2160.12 qps: 2160.12 (r/w/o: 0.00/2160.12/0.00) lat (ms,95%): 51.94 err/s: 0.00 reconn/s: 0.00

[ 10s ] thds: 64 tps: 2151.38 qps: 2151.38 (r/w/o: 0.00/2151.38/0.00) lat (ms,95%): 50.11 err/s: 0.00 reconn/s: 0.00

[ 15s ] thds: 64 tps: 2116.18 qps: 2116.18 (r/w/o: 0.00/2116.18/0.00) lat (ms,95%): 48.34 err/s: 0.00 reconn/s: 0.00

[ 20s ] thds: 64 tps: 2067.17 qps: 2067.17 (r/w/o: 0.00/2067.17/0.00) lat (ms,95%): 53.85 err/s: 0.00 reconn/s: 0.00

[ 25s ] thds: 64 tps: 1241.23 qps: 1241.23 (r/w/o: 0.00/1241.23/0.00) lat (ms,95%): 70.55 err/s: 0.00 reconn/s: 0.00

[ 30s ] thds: 64 tps: 1336.96 qps: 1336.96 (r/w/o: 0.00/1336.96/0.00) lat (ms,95%): 80.03 err/s: 0.00 reconn/s: 0.00

SQL statistics:

queries performed:

read: 0

write: 55434

other: 0

total: 55434

transactions: 55434 (1827.12 per sec.)

queries: 55434 (1827.12 per sec.)

ignored errors: 0 (0.00 per sec.)

reconnects: 0 (0.00 per sec.)

General statistics:

total time: 30.3379s

total number of events: 55434

Latency (ms):

min: 8.29

avg: 34.99

max: 605.31

95th percentile: 55.82

sum: 1939771.01

Threads fairness:

events (avg/stddev): 866.1562/5.81

execution time (avg/stddev): 30.3089/0.01

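The tests suggest that for write-only load the cluster performs best at 64 concurrent connections, with throughput dropping at both 128 and 32. Under mixed read/write load the cluster sustained at most 8 concurrent connections. The hardware is limited, so these results are for reference only.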
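Comparing thread counts by hand means reading the transactions line out of each report. A sketch of how the sweep could be automated is shown below; the function names `extract_tps` and `sweep_threads` are hypothetical, and the sysbench options are the ones used for the write-only run above.

```shell
#!/usr/bin/env bash
# extract_tps
# Reads a sysbench report on stdin and prints the per-second figure
# from its "transactions:" summary line.
extract_tps() {
    sed -n 's/^[[:space:]]*transactions:[[:space:]]*[0-9]*[[:space:]]*(\([0-9.]*\) per sec\.).*/\1/p'
}

# sweep_threads COUNT...
# Runs the write-only test once per thread count and prints "<threads> threads: <tps>".
# Needs sysbench and the prepared tables, so it is only defined here, not called.
sweep_threads() {
    local t
    for t in "$@"; do
        printf '%s threads: ' "$t"
        sysbench --threads="$t" --time=30 --db-driver=mysql --mysql-user=root \
            --mysql-password=Zh_000000 --mysql-port=6033 --mysql-host=www.zhihe.com \
            --mysql-db=test --table_size=6000 --tables=2 oltp_insert run | extract_tps
    done
}

# Offline example using the summary line from the mixed read/write report above:
printf '    transactions:   5219   (173.53 per sec.)\n' | extract_tps
```

On the test machine, `sweep_threads 32 64 128` would produce one line per thread count for the comparison described above.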

tpcc

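This did not work: the tables created by tpcc do not meet the requirements of Group Replication (which needs InnoDB tables with a primary key), so inserting data fails with: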

3098, HY000, The table does not comply with the requirements by an external plugin.

Retrying ...

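The steps below are for reference only:

The tpcc commands depend on the mysql client libraries, so the test against the whole cluster is run from PS2.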

unzip tpcc-mysql-master.zip

mv tpcc-mysql-master /tpcc

cd /tpcc

[root@ps2 tpcc-mysql-master]# ls

add_fkey_idx.sql load.sh count.sql README.md create_table.sql schema2 Dockerfile scripts drop_cons.sql src

load_multi_schema.sh

[root@ps2 tpcc-mysql-master]# cd src

[root@ps2 src]# make

[root@ps2 src]# cd ..

[root@ps2 tpcc-mysql-master]# ls

add_fkey_idx.sql README.md

count.sql schema2

create_table.sql scripts

Dockerfile src

drop_cons.sql tpcc_load

load_multi_schema.sh tpcc_start

load.sh

[root@ps2 tpcc-mysql-master]# ls

add_fkey_idx.sql create_table.sql drop_cons.sql load.sh schema2 src tpcc_start

count.sql Dockerfile load_multi_schema.sh README.md scripts tpcc_load

# compiling produces the tpcc_load and tpcc_start commands and load_multi_schema.sh

Create the test database and tables on DBS1:

# copy create_table.sql and add_fkey_idx.sql to DBS1 first

mysql> create database tpcc_test;

mysql> use tpcc_test

mysql> source /root/create_table.sql;

……

mysql> show tables;

+---------------------+

| Tables_in_tpcc_test |

+---------------------+

| customer |

| district |

| history |

| item |

| new_orders |

| order_line |

| orders |

| stock |

| warehouse |

+---------------------+

9 rows in set (0.00 sec)

Load data into the test tables from the test machine:

$> ./tpcc_load -h www.zhihe.com -P 6033 -d tpcc_test -u root -p Zh_000000 -w 4 # takes a long time

If it fails with:

./tpcc_load: error while loading shared libraries: libmysqlclient.so.20: cannot open shared object file: No such file or directory

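# run the following command

export LD_LIBRARY_PATH=/usr/local/mysql/lib:$LD_LIBRARY_PATH # adds the lib directory of the MySQL client installation to the shared library search path

Add the indexes on DBS1 (always load the data first and add the indexes afterwards; with the indexes already in place the load is even slower):

mysql> source /root/add_fkey_idx.sql;

Run the test:

./tpcc_start -h www.zhihe.com -P 6033 -d tpcc_test -u root -p Zh_000000 -w 4 -c 128 -r 120 -l 200

With that, the project is essentially complete.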

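Details worth noting

I. A cloning mistake while preparing the environment

All machines in this project use bridged networking, but the template VM I cloned from used NAT mode, so every clone had to be switched afterwards, which was tedious. It also pays to prepare a near-final IP configuration file on the template before cloning; editing the machines one by one in VMware is really inconvenient /(ㄒoㄒ)/~~

II. The proxysql admin user cannot log in remotely by default

So a mysql client still has to be installed on the ProxySQL machine itself. Changing the configuration file before the first start might also work (I did not look into it).

III. If a node in the group has purged its logs, a new node cannot fetch the complete data from that donor

In that case the recovery process on the new node picks the next donor. It is still likely to fail, because in practice logs purged on one node have probably been purged on all the others too. (Note that purge is not an event and is not written to the binlog, so it is not replicated to other nodes. In other words, after a purge on one node its binlog differs from the binlogs of the other nodes.)

So before a new node joins the group, first restore it from a backup taken on one of the group members, and only then let it join. The join is then very fast and cannot fail because of purged logs. A tool such as mysqldump can be used for the backup and restore.

In my lab environment all nodes are freshly installed instances with little data and no purged logs, so they can join the group directly.

IV. While simulating failover, the restarted server could not start Group Replication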

root@(none) 18:34 mysql>start group_replication;

ERROR 3092 (HY000): The server is not configured properly to be an active member of the group. Please see more details on error log.

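The cause was a wrong port for group communication in my.cnf on the other two servers. The restarted server could not reach the other group members, so starting Group Replication failed.

loose-group_replication_local_address="192.168.31.46:20001" # address and port this node uses to communicate with the other group members

After correcting the port on the other two servers, restarting their mysql service and starting Group Replication again, the problem was solved.

V. Voluntary and involuntary leaves

Voluntary leave: running stop group_replication

Involuntary leave: anything other than a voluntary leave

Every mysql server in the group holds one vote, and an operation needs a majority of the votes to proceed. A voluntary leave reduces the total number of votes; an involuntary leave does not.

For example, a Group Replication cluster of 5 servers has 5 votes, and an operation needs at least 3 of them. When two servers leave voluntarily, the total drops by two to 3, and 2 votes are then enough.

If those two servers leave involuntarily instead, the total stays at 5, so all 3 remaining servers must agree; otherwise the operation blocks.

You can verify that no matter how many nodes leave voluntarily, the group does not block as long as at least one node remains.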
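The arithmetic in the example can be written down as a tiny sketch. The function names `majority` and `can_progress` are hypothetical; the rule they encode is the one described above, a majority being more than half of the current vote total.

```shell
#!/usr/bin/env bash
# majority TOTAL_VOTES
# Prints the smallest number of votes that is more than half of TOTAL_VOTES.
majority() {
    echo $(( $1 / 2 + 1 ))
}

# can_progress TOTAL_VOTES REACHABLE_MEMBERS
# Succeeds when the reachable members can still form a majority.
can_progress() {
    [ "$2" -ge "$(majority "$1")" ]
}

majority 5    # five members: 3 votes needed
majority 3    # after two voluntary leaves the total is 3: 2 votes needed

# Two involuntary leaves: the total stays at 5 and all 3 survivors are needed.
can_progress 5 3 && echo "5 votes, 3 reachable: can proceed"
# Three involuntary leaves: 2 survivors cannot reach 3 votes.
can_progress 5 2 || echo "5 votes, 2 reachable: blocked"
```

The last case is the one to watch for in a 3-node group like the one in this article: losing two nodes involuntarily leaves 1 of 3 votes, which is not a majority.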

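Problems encountered

I. A new node stays in recovering while joining the group?

A new node is added and everything is configured correctly, yet it simply does not synchronize any data, even a trivial statement hangs for a long time, and the performance_schema.replication_group_members table shows the new node stuck in the recovering state.

In this situation, check the error log of the new node. Here is one line I extracted: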

[root@xuexi ~]# tail /data/error.log

2023-05-14T17:41:22.314085Z 10 [ERROR] Plugin group_replication reported: 'There was an error when connecting to the donor server. Please check that group_replication_recovery channel credentials and all MEMBER_HOST column values of performance_schema.replication_group_members table are correct and DNS resolvable.'

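Clearly the connection to the donor failed. The message asks us to check the channel credentials and whether the host names in the member_host column resolve correctly. With everything else configured correctly the channel credentials are fine; the problem lies in the member_host host names.

When the channel connection to the donor is set up, the donor's address is first resolved from the host name in the member_host column. By default this is the operating system's host name, not an IP address. DNS or the /etc/hosts file must therefore map each member_host name to the donor's IP address.

The same error occurs when all nodes have the same host name, because the new node then resolves that name to itself.

In my case the error appeared because I had not added the mappings to the hosts file at the beginning.

Edit the hosts file on the three DBS machines: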

[root@dbs1 mysql]# vim /etc/hosts

192.168.31.46 dbs1

192.168.31.47 dbs2

192.168.31.48 dbs3

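II. Joining fails because the new node contains extra GTID transactions

If the new node contains extra data, for example one additional user, creating that user generated a GTID transaction, and the node reports an error when it tries to join the group. The error.log contains: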

2023-05-14T12:56:29.300453Z 0 [ERROR] Plugin group_replication reported: 'This member has more executed transactions than those present in the gro

up. Local transactions: 48f1d8aa-7798-11e8-bf9a-000c29296408:1-2 > Group transactions: 481024ff-7798-11e8-89da-000c29ff1054:1-4,

bbbbbbbb-bbbb-bbbb-bbbb-bbbbbbbbbbbb:1-6'

2023-05-14T12:56:29.300536Z 0 [ERROR] Plugin group_replication reported: 'The member contains transactions not present in the group. The member wi

ll now exit the group.'

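The error states that the transactions on the new node do not match those of the group: the node has extra transactions, so the join fails.

This only matters if you are not sure about them. If you know that the data from these extra transactions cannot affect the group, such as an extra user that may never be used or a few databases unrelated to Group Replication, they can be discarded.

Discarding these GTIDs is harder than it sounds, though. Purging the logs does not work, deleting the log files after stopping MySQL does not work, and turning the binlog off and on again does not work: in every case the earlier transactions are still recorded in Previous_gtids. What does work is emptying the global variable gtid_executed, which is done with:

mysql> reset master;

Then join the group again.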

Routine cluster maintenance

Startup

DBS:

mysql starts at boot

# start Group Replication

mysql -uroot -pZh_000000

DBS1:

set @@global.group_replication_bootstrap_group=on;

start group_replication;

set @@global.group_replication_bootstrap_group=off;

DBS2、DBS3:

start group_replication;

# check that all members are ONLINE:

select * from performance_schema.replication_group_members\G

# start mysqld_exporter

nohup mysqld_exporter --config.my-cnf=/mysqld_exporter/my.cnf --web.listen-address 0.0.0.0:8090 &

PS:

mysql and proxysql start at boot

# start the keepalived service

$> service keepalived start

# check how the nodes are grouped and whether they are online:

$> mysql -uadmin -padmin -h127.0.0.1 -P6032

mysql> select hostgroup_id, hostname, port,status from runtime_mysql_servers;

# start node_exporter

nohup node_exporter --web.listen-address 0.0.0.0:8090 &

Prom:

prometheus and grafana start at boot

service prometheus start # only if it is not already running

service grafana-server start

DNS:

the named service starts at boot

Shutdown

PS:

service proxysql stop

service keepalived stop

DBS:

stop group_replication;

Prom:

service prometheus stop

service grafana-server stop

Commonly used commands

PS:

Check the MGR monitoring metrics:

select hostname,

-> port,

-> viable_candidate,

-> read_only,

-> transactions_behind,

-> error

-> from mysql_server_group_replication_log

-> order by time_start_us desc

-> limit 6;

Check how statements were routed:

select hostgroup,digest_text from stats_mysql_query_digest;

DNS:

Reload the DNS server configuration without interrupting in-flight DNS requests:

service named reload

Test machine:

Show the details of a name lookup:

dig @192.168.31.43 zhihe.com A

; <<>> DiG 9.11.4-P2-RedHat-9.11.4-26.P2.el7_9.13 <<>> @192.168.31.43 zhihe.com A

; (1 server found)

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 23979

;; flags: qr aa rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 1, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;zhihe.com. IN A

;; ANSWER SECTION:

zhihe.com. 86400 IN A 127.0.0.1

;; AUTHORITY SECTION:

zhihe.com. 86400 IN NS zhihe.com.

;; Query time: 0 msec

;; SERVER: 192.168.31.43#53(192.168.31.43)

;; WHEN: 三 5月 17 20:35:27 CST 2023

;; MSG SIZE rcvd: 68

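Optimizations

Dynamic read/write splitting

ProxySQL makes it possible to adjust the share of read requests dynamically according to the load on the primary.

One way to do this:

- Configure the primary and the secondaries in ProxySQL as backend database instances.

- Periodically collect connection counts, query response times and similar metrics for the primary and the secondaries from ProxySQL's stats tables, and use them to assess the load.

- Based on the load on the primary, adjust ProxySQL's read routing so that the split of read requests between the primary and the secondaries changes.

- When the primary is heavily loaded (connections close to the limit, response times rising), lower the share of reads sent to the primary and raise the share sent to the secondaries to relieve the primary.

- When the load on the primary drops, gradually raise the share of reads sent to the primary and lower the share sent to the secondaries, so that resources are used sensibly.

The adjustment itself is done by running SQL commands on the ProxySQL admin interface (admin port 6032); ProxySQL loads the changes at runtime. Periodically measuring the load and then adjusting the routing therefore makes it fairly easy to distribute reads according to the load on the primary.

Compared with a static configuration, this monitoring-based approach is more precise and adapts to changing conditions, which makes good use of the resources of both the primary and the secondaries. ProxySQL is a good fit for this kind of dynamic read/write splitting.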
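One possible shape of the adjustment step is sketched below. Everything here is an assumption made for illustration: the function names, the linear mapping from connection usage to weight, and the idea of steering reads through the weight column of mysql_servers. It also presumes the primary is present in the reader hostgroup, which in this article's setup would require writer_is_also_reader to be enabled.

```shell
#!/usr/bin/env bash
# primary_read_weight USED_CONNECTIONS MAX_CONNECTIONS
# Maps connection usage on the primary to a read weight between 1 and 100:
# the busier the primary, the smaller its share of reads.
primary_read_weight() {
    local used="$1" max="$2" pct weight
    pct=$(( used * 100 / max ))
    weight=$(( 100 - pct ))
    if [ "$weight" -lt 1 ]; then
        weight=1    # never drop to 0, so the primary stays selectable
    fi
    echo "$weight"
}

# weight_update_sql HOSTNAME WEIGHT
# Prints the admin statements that would apply the new weight in the reader hostgroup (hg=30).
weight_update_sql() {
    local host="$1" weight="$2"
    printf "UPDATE mysql_servers SET weight=%s WHERE hostgroup_id=30 AND hostname='%s';\n" "$weight" "$host"
    printf 'LOAD MYSQL SERVERS TO RUNTIME;\n'
}

# Example: the primary uses 900 of 1000 allowed connections.
weight_update_sql 192.168.31.46 "$(primary_read_weight 900 1000)"
```

The printed statements could be piped into the admin interface, for example with `mysql -uadmin -padmin -h127.0.0.1 -P6032`. The usage figure itself could be read from the ConnUsed column of stats_mysql_connection_pool.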

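Multi-site active-active deployment

In a multi-site setup the primary and the secondaries are deployed in different data centers, so that when one site becomes unavailable the service can switch quickly to the other site and the business keeps running. The main steps are:

- The primary database replicates to the remote secondary database so that the secondary stays up to date.

- The application connects to both the primary and the secondary, but sends read and write requests to the primary only while the primary is available.

- A heartbeat mechanism checks the availability of the primary. When the primary becomes unavailable, the application switches to the secondary, which is promoted to primary.

- When the original primary recovers, the data is synchronized back to it incrementally, and the application then switches back.

The advantage of this approach is that high availability is achieved at the database layer, which reduces switching logic in the application and is more transparent and efficient. It also adds complexity and brings more technical challenges to solve.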

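Failover with MHA

Group Replication is itself designed for high availability and uses automatic failover to keep the data available. Its failure detection, however, judges a member by whether it still responds within the group, so a node that is only cut off by a connection or network problem looks the same as a node that has crashed.

When that kind of failure occurs, Group Replication may decide that a node is gone and carry out a failover even though the node has not really crashed and was only interrupted briefly. A tool such as MHA checks the master from more than one vantage point before switching, which can reduce unnecessary failovers.

MHA also offers convenient features such as automated master switchover and quick re-pointing of slaves. Note that MHA was built for traditional asynchronous or semi-synchronous master-slave replication, so whether and how it can be combined with Group Replication needs to be evaluated before adopting it.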

ProxySQL Cluster

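The native clustering feature (ProxySQL Cluster) is described here in terms of master, candidate master and slave: the master and candidate masters vote, accept writes and configuration changes, and synchronize them to the other nodes in the cluster. If the master fails, a new master can be elected from the candidate masters. These properties provide availability, scalability and data consistency for the ProxySQL cluster, which makes it a better choice than relying on third-party tools.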

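Summary

That wraps up the project. Thank you for your interest and support.

I gained a lot of valuable experience and skills from this project. First, I learned how to use ProxySQL for MySQL proxying and read/write splitting, and deepened my understanding of keepalived with two VIPs. Second, I improved my ability to automate deployment with ansible. I also became familiar with monitoring database performance with prometheus+grafana and with using a delayed backup server for disaster recovery. Finally, I practiced testing with sysbench and tpcc, which further deepened my understanding of MySQL clusters.

In short, this project brought not only technical gains but also practice in collaboration and project management. I believe this experience will have a positive effect on my career and help me handle future challenges.

Although the project achieved some small successes, there is still a lot of technology left to learn and improve. I would very much welcome your suggestions and guidance, and I hope to keep learning and growing to be well prepared for future projects. Thank you for reading; I look forward to discussing more technical topics with you.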