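Low-cost instruction dataset construction: reading notes on "Self-Instruct: Aligning Language Model with Self Generated Instructions"

I was recently curious about how instruction datasets are built, so I read the SELF-INSTRUCT paper.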

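Introduction

Abstract: Large "instruction-tuned" language models (i.e., models fine-tuned to respond to instructions) have shown a remarkable ability to generalize zero-shot to new tasks. However, they depend heavily on human-written instruction data, which is often limited in quantity, diversity, and creativity, and this hinders the generality of the tuned model. We introduce SELF-INSTRUCT, a framework for improving the instruction-following capabilities of pretrained language models by bootstrapping off their own generations. Our pipeline generates instructions, input, and output samples from a language model, then filters out invalid or similar ones before using them to fine-tune the original model. Applying our method to vanilla GPT3, we demonstrate a 33% absolute improvement over the original model on SUPER-NATURALINSTRUCTIONS, on par with the performance of $\text{InstructGPT}_{001}$, which was trained with private user data and human annotations. For further evaluation, we curate a set of expert-written instructions for novel tasks, and show through human evaluation that tuning GPT3 with SELF-INSTRUCT outperforms using existing public instruction datasets by a large margin, leaving only a 5% absolute gap behind $\text{InstructGPT}_{001}$. SELF-INSTRUCT provides an almost annotation-free method for aligning pretrained language models with instructions, and we release our large synthetic dataset to facilitate future studies on instruction tuning.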

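Recent NLP literature has seen a surge of work on building models that follow natural-language instructions. This work rests on two key components: large pretrained language models and human-written instruction datasets (e.g., PROMPTSOURCE and SUPER-NATURALINSTRUCTIONS). Collecting such human-written instruction data has several drawbacks:

- It is costly

- It lacks diversity

The paper proposes a semi-automatic method to address these shortcomings of human-written instruction datasets. Its contributions are: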

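- It proposes a framework, SELF-INSTRUCT, that generates a large amount of instruction-tuning data with minimal human annotation;

- It validates the effectiveness of the method through several experiments;

- It releases a dataset of 52K instructions generated with SELF-INSTRUCT, together with a manually written set of novel tasks for building and evaluating instruction-tuned models.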

Method

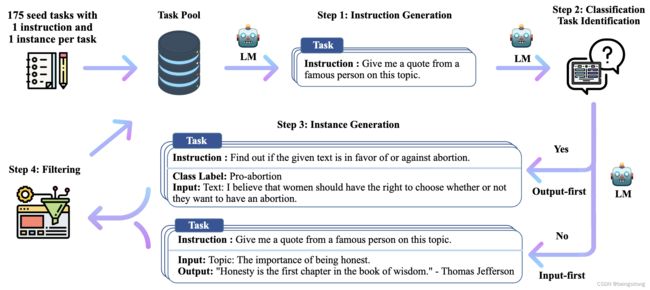

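The SELF-INSTRUCT pipeline is shown in the figure above and consists of four main steps.

Before going through each step, let us look at how instruction data is defined.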

The instruction data we want to generate contains a set of instructions $\{I_t\}$, each of which defines a task $t$ in natural language. Task $t$ has $n_t \ge 1$ input-output instances $\{(X_{t,i}, Y_{t,i})\}_{i=1}^{n_t}$. A model $M$ is expected to produce the output, given the task instruction and the corresponding input: $M(I_t, X_{t,i}) = Y_{t,i}$, for $i \in \{1, \cdots, n_t\}$.

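Each instruction data record consists of three parts: instruction, input, and output. Note that there is no strict boundary between instruction and input. The two examples below express the same task and differ only in form.

# Example 1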

instruction: "write an essay about school safety"

input:""

output:"...."

# Example 2

instruction: "write an essay about the following topic"

input:"school safety"

output:"...."
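To make the equivalence concrete, here is a minimal sketch. The `Instance` record and the `render_prompt` helper are hypothetical names introduced only for illustration, and the "Instruction:/Input:" layout is an assumption, not a format prescribed by the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instance:
    """One instruction-data record: (instruction, input, output)."""
    instruction: str
    input: str
    output: str


def render_prompt(inst: Instance) -> str:
    """Join the instruction and the optional input into one model prompt.

    Hypothetical layout for illustration only.
    """
    if inst.input == "":
        return f"Instruction: {inst.instruction}"
    return f"Instruction: {inst.instruction}\nInput: {inst.input}"


# The two examples from the text: same task, different split between fields.
example_1 = Instance("write an essay about school safety", "", "....")
example_2 = Instance("write an essay about the following topic", "school safety", "....")

print(render_prompt(example_1))
print(render_prompt(example_2))
```

Both records ask for the same essay; the only difference is whether the topic lives in the instruction or in the input field.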

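Step 1: Instruction generation

The authors wrote 175 seed tasks (each with one instruction and one instance) and used them to initialize the task pool. At every step, 8 tasks are sampled from the pool as in-context examples for the LLM: 6 come from the 175 human-written tasks and 2 come from tasks the model generated in earlier steps. (At the very beginning, when there is no model-generated data yet, all 8 tasks are human-written.)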
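The sampling rule above can be sketched as follows. This is an illustration under stated assumptions, not the repository's code: the function name `sample_in_context_tasks` and its parameters are hypothetical, and the fallback to human-written tasks when the generated pool is too small is my reading of the note in parentheses.

```python
import random


def sample_in_context_tasks(seed_tasks, generated_tasks, n_total=8, n_generated=2, rng=random):
    """Pick in-context examples: mostly human-written, a few model-generated."""
    # Early on there are no (or too few) generated tasks, so take what exists.
    k_generated = min(n_generated, len(generated_tasks))
    picked = rng.sample(generated_tasks, k_generated)
    # Fill the remaining slots with human-written seed tasks.
    picked += rng.sample(seed_tasks, n_total - k_generated)
    rng.shuffle(picked)
    return picked


seed_pool = [f"seed-{i}" for i in range(175)]
first_round = sample_in_context_tasks(seed_pool, [])
later_round = sample_in_context_tasks(seed_pool, ["gen-0", "gen-1", "gen-2"])
```

In the first round all 8 examples come from the seed pool; once generated tasks exist, 2 of the 8 slots are taken from them.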

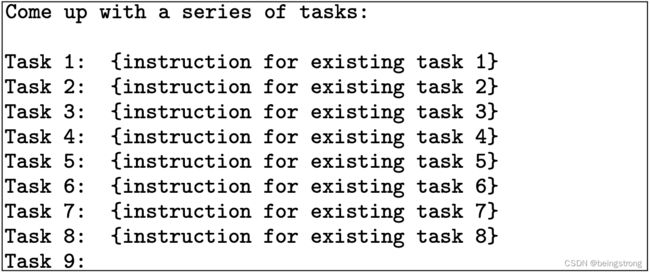

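The prompt template for the request is shown in the figure (Table 5 in the paper).

Note: Stanford Alpaca uses the text-davinci-003 model with the prompt template below (the numbering in the original has a problem, which is explained in an issue):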

You are asked to come up with a set of 30 diverse task instructions. These task instructions will be given to a GPT model and we will evaluate the GPT model for completing the instructions.

Here are the requirements:

1. Try not to repeat the verb for each instruction to maximize diversity.

2. The language used for the instruction also should be diverse. For example, you should combine questions with imperative instrucitons.

3. The type of instructions should be diverse. The list should include diverse types of tasks like open-ended generation, classification, editing, etc.

4. A GPT language model should be able to complete the instruction. For example, do not ask the assistant to create any visual or audio output. For another example, do not ask the assistant to wake you up at 5pm or set a reminder because it cannot perform any action.

5. The instructions should be in English.

6. The instructions should be 1 to 2 sentences long. Either an imperative sentence or a question is permitted.

7. You should generate an appropriate input to the instruction. The input field should contain a specific example provided for the instruction. It should involve realistic data and should not contain simple placeholders. The input should provide substantial content to make the instruction challenging but should ideally not exceed 100 words.

8. Not all instructions require input. For example, when a instruction asks about some general information, "what is the highest peak in the world", it is not necssary to provide a specific context. In this case, we simply put "" in the input field.

9. The output should be an appropriate response to the instruction and the input. Make sure the output is less than 100 words.

10. Make sure the output is gramatically correct with punctuation if needed.

List of 30 tasks:

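Step 2: Classification task identification

This step decides whether a generated instruction is a classification task, defined as a task whose output labels form a small, finite label set.

The decision is also made by the model, in a few-shot manner, using 12 classification instructions and 19 non-classification instructions from the seed tasks as few-shot examples. The template is as follows (Table 6 in the paper):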

Can the following task be regarded as a classification task with finite output labels?

Task: Given my personality and the job, tell me if I would be suitable.

Is it classification? Yes

Task: Give me an example of a time when you had to use your sense of humor.

Is it classification? No

Task: Replace the placeholders in the given text with appropriate named entities.

Is it classification? No

Task: Fact checking - tell me if the statement is true, false, or unknown, based on your knowledge and common sense.

Is it classification? Yes

Task: Return the SSN number for the person.

Is it classification? No

Task: Detect if the Reddit thread contains hate speech.

Is it classification? Yes

Task: Analyze the sentences below to identify biases.

Is it classification? No

Task: Select the longest sentence in terms of the number of words in the paragraph, output the sentence index.

Is it classification? Yes

Task: Find out the toxic word or phrase in the sentence.

Is it classification? No

Task: Rank these countries by their population.

Is it classification? No

Task: You are provided with a news article, and you need to identify all the categories that this article belongs to. Possible categories include: Music, Sports, Politics, Tech, Finance, Basketball, Soccer, Tennis, Entertainment, Digital Game, World News. Output its categories one by one, seperated by comma.

Is it classification? Yes

Task: Given the name of an exercise, explain how to do it.

Is it classification? No

Task: Select the oldest person from the list.

Is it classification? Yes

Task: Find the four smallest perfect numbers.

Is it classification? No

Task: Does the information in the document supports the claim? You can answer "Support" or "Unsupport".

Is it classification? Yes

Task: Create a detailed budget for the given hypothetical trip.

Is it classification? No

Task: Given a sentence, detect if there is any potential stereotype in it. If so, you should explain the stereotype. Else, output no.

Is it classification? No

Task: Explain the following idiom to me, and try to give me some examples.

Is it classification? No

Task: Is there anything I can eat for a breakfast that doesn't include eggs, yet includes protein, and has roughly 700-1000 calories?

Is it classification? No

Task: Answer the following multiple choice question. Select A, B, C, or D for the final answer.

Is it classification? Yes

Task: Decide whether the syllogism is logically sound.

Is it classification? Yes

Task: How can individuals and organizations reduce unconscious bias?

Is it classification? No

Task: What are some things you can do to de-stress?

Is it classification? No

Task: Find out the largest one from a set of numbers. Output the number directly.

Is it classification? Yes

Task: Replace the token in the text with proper words that are consistent with the context. You can use multiple words for each token.

Is it classification? No

Task: Write a cover letter based on the given facts.

Is it classification? No

Task: Identify the pos tag of the word in the given sentence.

Is it classification? Yes

Task: Write a program to compute the sum of integers from k to n.

Is it classification? No

Task: In this task, you need to compare the meaning of the two sentences and tell if they are the same. Output yes or no.

Is it classification? Yes

Task: To make the pairs have the same analogy, write the fourth word.

Is it classification? No

Task: Given a set of numbers, find all possible subsets that sum to a given number.

Is it classification? No

Task: {instruction for the target task}
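A minimal sketch of how such a few-shot prompt could be assembled and how the answer could be read back. Only two of the demonstrations above are included to keep it short. The names `build_classification_prompt` and `parse_is_classification` are hypothetical, and ending the prompt with a trailing "Is it classification?" line for the target task is an assumption about how the template is completed.

```python
PROMPT_HEADER = "Can the following task be regarded as a classification task with finite output labels?"

# Two demonstrations copied from the template above; the real prompt uses 31.
FEW_SHOT = [
    ("Given my personality and the job, tell me if I would be suitable.", True),
    ("Give me an example of a time when you had to use your sense of humor.", False),
]


def build_classification_prompt(instruction, examples=FEW_SHOT):
    """Assemble the few-shot prompt that asks the model for a Yes/No answer."""
    lines = [PROMPT_HEADER, ""]
    for task, is_clf in examples:
        lines.append(f"Task: {task}")
        lines.append(f"Is it classification? {'Yes' if is_clf else 'No'}")
        lines.append("")
    lines.append(f"Task: {instruction}")
    lines.append("Is it classification?")  # assumed final cue for the model
    return "\n".join(lines)


def parse_is_classification(completion):
    """Treat any completion that does not start with 'yes' as a No."""
    return completion.strip().lower().startswith("yes")


prompt = build_classification_prompt("Detect if the email is spam.")
```

The model's short completion (for example " Yes") is then mapped to a boolean that selects the instance-generation template in the next step.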

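Step 3: Instance generation

Given an instruction and its task type, instances are generated for each instruction. Different prompt templates are used depending on whether the instruction is a classification task.

- For non-classification tasks, the paper uses the Input-first Approach: the LLM first generates an input for the instruction and then the corresponding output (if the task needs no input, it generates the result directly). The prompt template is as follows (Table 7 in the paper):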

Come up with examples for the following tasks. Try to generate multiple examples when possible. If the task doesn't require additional input, you can generate the output directly.

Task: Which exercises are best for reducing belly fat at home?

Output:

- Lying Leg Raises

- Leg In And Out

- Plank

- Side Plank

- Sit-ups

Task: Extract all the country names in the paragraph, list them separated by commas.

Example 1

Paragraph: Dr. No is the sixth novel by the English author Ian Fleming to feature his British Secret Service agent James Bond. Written at Fleming's Goldeneye estate in Jamaica, it was first published in the United Kingdom by Jonathan Cape in 1958. In the novel Bond looks into the disappearance in Jamaica of two fellow MI6 operatives who had been investigating Doctor No. Bond travels to No's Caribbean island and meets Honeychile Rider, who is there to collect shells. They are captured and taken to a luxurious facility carved into a mountain. The character of Doctor No, the son of a German missionary and a Chinese woman, was influenced by Sax Rohmer's Fu Manchu stories. Dr. No was the first of Fleming's novels to face widespread negative reviews in Britain, but it was received more favourably in the United States.

Output: English, British, Jamaica, the United Kingdom, German, Chinese, Britain, the United States.

Task: Converting 85 F to Celsius.

Output: 85°F = 29.44°C

Task: Sort the given list ascendingly.

Example 1

List: [10, 92, 2, 5, -4, 92, 5, 101]

Output: [-4, 2, 5, 5, 10, 92, 92, 101]

Example 2

Input 2 - List: [9.99, 10, -5, -1000, 5e6, 999]

Output: [-1000, -5, 9.99, 10, 999, 5e6]

Task: Suggest a better and more professional rephrasing of the following sentence.

Example 1

Sentence: This house is surprisingly not constructed very well, and you probably need more money to fix it after you buy it. If you ask me, I would suggest you to consider other candidates.

Output: This house does not seem to be constructed well, so you may need to spend more money to fix it after you purchase it. I would suggest that you look at other properties.

Example 2

Sentence: Just so you know, we did an experiment last week and found really surprising results - language model can improve itself!

Output: Our experiments last week demonstrated surprising results, proving that the language model can improve itself.

Task: Read the following paragraph and answer a math question about the paragraph. You need to write out the calculation for getting the final answer.

Example 1

Paragraph: Gun violence in the United States results in tens of thousands of deaths and injuries annually, and was the leading cause of death for children 19 and younger in 2020. In 2018, the most recent year for which data are available as of 2021, the Centers for Disease Control and Prevention's (CDC) National Center for Health Statistics reports 38,390 deaths by firearm, of which 24,432 were by suicide. The rate of firearm deaths per 100,000 people rose from 10.3 per 100,000 in 1999 to 12 per 100,000 in 2017, with 109 people dying per day or about 14,542 homicides in total, being 11.9 per 100,000 in 2018. In 2010, there were 19,392 firearm-related suicides, and 11,078 firearm-related homicides in the U.S. In 2010, 358 murders were reported involving a rifle while 6,009 were reported involving a handgun; another 1,939 were reported with an unspecified type of firearm. In 2011, a total of 478,400 fatal and nonfatal violent crimes were committed with a firearm.

Question: How many more firearm-related deaths were there in 2018 compared to 2010?

Output: 38390 - (19392 + 11078) = 38390 - 30470 = 7920. So, in 2018, there were 7920 more deaths by firearm than in 2010.

Task: Write Python code to solve this leetcode problem.

Example 1

Problem: You are given two non-empty linked lists representing two non-negative integers. The digits are stored in reverse order, and each of their nodes contains a single digit. Add the two numbers and return the sum as a linked list. You may assume the two numbers do not contain any leading zero, except the number 0 itself.

Output:

class Solution(object):
    def addTwoNumbers(self, l1, l2):
        carry = 0
        root = n = ListNode(0)
        while l1 or l2 or carry:
            v1 = v2 = 0
            if l1:
                v1 = l1.val
                l1 = l1.next
            if l2:
                v2 = l2.val
                l2 = l2.next
            carry, val = divmod(v1+v2+carry, 10)
            n.next = ListNode(val)
            n = n.next
        return root.next

Task: Solve the equation and find the value of X. Show your steps.

Example 1

Equation: 10X + 5 = 10

Output: 10X = 5, X = 0.5

Example 2

Equation: X + Y + 120 = 100

Output: X + Y = -20, X = -20 - Y

Task: Write a program to compute the sum of integers from k to n.

Output:

def sum(k, n):
    sum = 0
    for i in range(k, n+1):
        sum += i
    return sum

Task: Select the oldest person from the given list.

Example 1

List: George Washington, Confucius, Michael Jordan, Michelangelo

Output: Confucious

Example 2

List: Alan Turing, Geoffrey Hinton, Yann LeCun, Yoshua Bengio

Output: Alan Turing

Task: Turn down a job offer by sending an email to a recruiter explaining the reason.

Output: Hi [Recruiter],

Thank you so much for the generous offer to join your team. As we discussed, I’ve admired the company for a number of years, and am a proud endorser of its products. However, after further consideration of where I currently am in my career, I’ve decided to accept an offer at another company.

I would love to stay in touch with you and have already started following you on [Social Media Platform]. Again, thank you so much for your time and consideration.

Thanks again,

[Your Name]

Task: {Instruction for the target task}

- For classification tasks, the paper uses the Output-first Approach: a possible class label is generated first, and the input is then generated conditioned on that label. The prompt template is as follows (Table 8 in the paper):

Given the classification task definition and the class labels, generate an input that corresponds to each of the class labels. If the task doesn't require input, just generate possible class labels.

Task: Classify the sentiment of the sentence into positive, negative, or mixed.

Class label: mixed

Sentence: I enjoy the flavor of the restaurant but their service is too slow.

Class label: Positive

Sentence: I had a great day today. The weather was beautiful and I spent time with friends and family.

Class label: Negative

Sentence: I was really disappointed by the latest superhero movie. I would not recommend it to anyone.

Task: Given a dialogue, classify whether the user is satisfied with the service. You should respond with "Satisfied" or "Unsatisfied".

Class label: Satisfied

Dialogue:

- Agent: Thank you for your feedback. We will work to improve our service in the future.

- Customer: I am happy with the service you provided. Thank you for your help.

Class label: Unsatisfied

Dialogue:

- Agent: I am sorry we will cancel that order for you, and you will get a refund within 7 business days.

- Customer: oh that takes too long. I want you to take quicker action on this.

Task: Given some political opinions, classify whether the person belongs to Democrats or Republicans.

Class label: Democrats

Opinion: I believe that everyone should have access to quality healthcare regardless of their income level.

Class label: Republicans

Opinion: I believe that people should be able to keep more of their hard-earned money and should not be taxed at high rates.

Task: Tell me if the following email is a promotion email or not.

Class label: Promotion

Email: Check out our amazing new sale! We've got discounts on all of your favorite products.

Class label: Not Promotion

Email: We hope you are doing well. Let us know if you need any help.

Task: Detect if the Reddit thread contains hate speech.

Class label: Hate Speech

Thread: All people of color are stupid and should not be allowed to vote.

Class label: Not Hate Speech

Thread: The best way to cook a steak on the grill.

Task: Does the information in the document supports the claim? You can answer "Support" or "Unsupport".

Class label: Unsupport

Document: After a record-breaking run that saw mortgage rates plunge to all-time lows and home prices soar to new highs, the U.S. housing market finally is slowing. While demand and price gains are cooling, any correction is likely to be a modest one, housing economists and analysts say. No one expects price drops on the scale of the declines experienced during the Great Recession.

Claim: The US housing market is going to crash soon.

Class label: Support

Document: The U.S. housing market is showing signs of strain, with home sales and prices slowing in many areas. Mortgage rates have risen sharply in recent months, and the number of homes for sale is increasing. This could be the beginning of a larger downturn, with some economists predicting a potential housing crash in the near future.

Claim: The US housing market is going to crash soon.

Task: Answer the following multiple-choice question. Select A, B, C, or D for the final answer.

Class label: C

Question: What is the capital of Germany?

A. London

B. Paris

C. Berlin

D. Rome

Class label: D

Question: What is the largest planet in our solar system?

A) Earth

B) Saturn

C) Mars

D) Jupiter

Class label: A

Question: What is the process by which plants make their own food through photosynthesis?

A) Respiration

B) Fermentation

C) Digestion

D) Metabolism

Class label: B

Question: Who wrote the novel "The Great Gatsby"?

A) Ernest Hemingway

B) F. Scott Fitzgerald

C) J.D. Salinger

D) Mark Twain

Task: You need to read a code and detect if there is a syntax error or not. Output true if there is an error, output false if there is not.

Class label: true

Code:

def quick_sort(arr):
    if len(arr) < 2
        return arr

Class label: False

Code:

def calculate_average(numbers):
    total = 0
    for number in numbers:
        total += number
    return total / len(numbers)

Task: You are provided with a news article, and you need to identify all the categories that this article belongs to. Possible categories include Sports and Politics. Output its categories one by one, separated by a comma.

Class label: Sports

Article: The Golden State Warriors have won the NBA championship for the second year in a row.

Class label: Politics

Article: The United States has withdrawn from the Paris Climate Agreement.

Class label: Politics, Sports

Article: The government has proposed cutting funding for youth sports programs.

Task: Given a credit card statement, the cardholder's spending habits, and the account balance, classify whether the cardholder is at risk of defaulting on their payments or not.

Class label: At risk

Credit card statement: Purchases at high-end clothing stores and luxury hotels.

Cardholder's spending habits: Frequent purchases at luxury brands and high-end establishments.

Account balance: Over the credit limit and multiple missed payments.

Class label: Not at risk

Credit card statement: Purchases at grocery stores and gas stations.

Cardholder's spending habits: Regular purchases for necessary expenses and occasional dining out.

Account balance: Slightly below the credit limit and no missed payments.

Task: Given a social media post, the hashtags used, and a topic. classify whether the post is relevant to the topic or not.

Class label: Relevant

Post: I can't believe the government is still not taking action on climate change. It's time for us to take matters into our own hands.

Hashtags: #climatechange #actnow

Topic: Climate change

Class label: Not relevant

Post: I just bought the new iPhone and it is amazing!

Hashtags: #apple #technology

Topic: Travel

Task: The answer will be 'yes' if the provided sentence contains an explicit mention that answers the given question. Otherwise, answer 'no'.

Class label: Yes

Sentence: Jack played basketball for an hour after school.

Question: How long did Jack play basketball?

Class label: No

Sentence: The leaders of the Department of Homeland Security now appear before 88 committees and subcommittees of Congress.

Question: How often are they required to appear?

Task: Tell me what's the second largest city by population in Canada.

Class label: Montreal

Task: Classifying different types of mathematical equations, such as linear, and quadratic equations, based on the coefficients and terms in the equation.

Class label: Linear equation

Equation: y = 2x + 5

Class label: Quadratic equation

Equation: y = x^2 - 4x + 3

Task: Tell me the first number of the given list.

Class label: 1

List: 1, 2, 3

Class label: 2

List: 2, 9, 10

Task: Which of the following is not an input type? (a) number (b) date (c) phone number (d) email address (e) all of these are valid inputs.

Class label: (e)

Task: {Instruction for the target task}
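The choice between the two templates can be sketched as a small dispatch on the result of step 2. This is an illustration only: `build_instance_prompt` is a hypothetical name, and the headers below are just the opening sentences of the two templates quoted above, with the few-shot demonstrations left out.

```python
# Opening sentences of the two templates; demonstrations are omitted in this sketch.
INPUT_FIRST_HEADER = (
    "Come up with examples for the following tasks. "
    "Try to generate multiple examples when possible. "
    "If the task doesn't require additional input, you can generate the output directly."
)
OUTPUT_FIRST_HEADER = (
    "Given the classification task definition and the class labels, "
    "generate an input that corresponds to each of the class labels. "
    "If the task doesn't require input, just generate possible class labels."
)


def build_instance_prompt(instruction, is_classification):
    """Use the output-first template for classification tasks, input-first otherwise."""
    header = OUTPUT_FIRST_HEADER if is_classification else INPUT_FIRST_HEADER
    return f"{header}\n\nTask: {instruction.strip()}\n"


clf_prompt = build_instance_prompt("Detect if the Reddit thread contains hate speech.", True)
gen_prompt = build_instance_prompt("Write a cover letter based on the given facts.", False)
```

Generating the label first for classification tasks gives the prompt a slot for each class label, whereas the input-first order lets the model write a free-form input before the output.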

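Step 4: Filtering and post-processing

To keep the instructions diverse, a new instruction is added to the task pool only if its ROUGE-L similarity with every instruction already in the pool is below 0.7. Generated instructions containing certain keywords (e.g., image, picture, graph) are also filtered out, because a language model usually cannot process them. (Note: although filtering is the fourth step in the paper, the code filters out unqualified instructions already during instruction generation in step 1, and instruction generation stops once the task pool reaches the target size, e.g., 52K.)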
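The ROUGE-L check can be sketched in plain Python as below. This is a simplified approximation under stated assumptions: tokens are obtained by lowercasing and splitting on whitespace, and the score is the F-measure over the longest common subsequence. A real ROUGE implementation tokenizes differently, so scores will not match exactly. The names `lcs_length`, `rouge_l_f1`, and `is_novel` are hypothetical.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two token sequences."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(candidate, reference):
    """Simplified ROUGE-L F-measure on whitespace tokens (assumed tokenization)."""
    c, r = candidate.lower().split(), reference.lower().split()
    if not c or not r:
        return 0.0
    lcs = lcs_length(c, r)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(c), lcs / len(r)
    return 2 * precision * recall / (precision + recall)


def is_novel(instruction, pool, threshold=0.7):
    """Keep an instruction only if it scores below the threshold against every pooled one."""
    return all(rouge_l_f1(instruction, existing) < threshold for existing in pool)


pool = ["Write an essay about school safety."]
keep_new = is_novel("Translate the sentence into French.", pool)
keep_dup = is_novel("Write an essay about school safety.", pool)
```

An exact repeat scores 1.0 and is rejected, while an instruction that shares no tokens in order with the pool scores 0.0 and is kept.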

## Open-source code: https://github.com/yizhongw/self-instruct/blob/main/self_instruct/bootstrap_instructions.py#L41C1-L71C1

import re
import string

# find_word_in_string is a helper defined elsewhere in the repository.

def post_process_gpt3_response(response):
    if response is None or response["choices"][0]["finish_reason"] == "length":
        return []
    # split the completion into items on the "\n<number>. " list markers
    raw_instructions = re.split(r"\n\d+\s?\. ", response["choices"][0]["text"])
    instructions = []
    for inst in raw_instructions:
        inst = re.sub(r"\s+", " ", inst).strip()
        inst = inst.strip().capitalize()
        if inst == "":
            continue
        # filter out too short or too long instructions
        if len(inst.split()) <= 3 or len(inst.split()) > 150:
            continue
        # filter based on keywords that are not suitable for language models.
        if any(find_word_in_string(word, inst) for word in ["image", "images", "graph", "graphs", "picture", "pictures", "file", "files", "map", "maps", "draw", "plot", "go to"]):
            continue
        # We found that the model tends to add "write a program" to some existing instructions, which lead to a lot of such instructions.
        # And it's a bit comfusing whether the model need to write a program or directly output the result.
        # Here we filter them out.
        # Note this is not a comprehensive filtering for all programming instructions.
        if inst.startswith("Write a program"):
            continue
        # filter those starting with punctuation
        if inst[0] in string.punctuation:
            continue
        # filter those starting with non-english character
        if not inst[0].isascii():
            continue
        instructions.append(inst)
    return instructions
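The excerpt of `post_process_gpt3_response` calls `find_word_in_string` without showing it. Below is a sketch of what such a helper could look like, assuming it performs a case-insensitive whole-word match (check the repository for the actual definition), followed by a made-up completion that shows how the numbered-list split behaves.

```python
import re


def find_word_in_string(word, text):
    """Case-insensitive whole-word search (assumed behaviour of the helper)."""
    return re.search(r"\b{}\b".format(re.escape(word)), text, flags=re.IGNORECASE)


# A made-up completion continuing a numbered list; not real model output.
sample_text = (
    " Describe a sunset.\n"
    "10. Draw a map of Europe.\n"
    "11. Summarize the given article in one sentence."
)

# Same split pattern as in the excerpt: newline, item number, optional space, ". ".
pieces = re.split(r"\n\d+\s?\. ", sample_text)

# Instructions mentioning a blocked keyword would be dropped by the filter.
blocked = [p for p in pieces if find_word_in_string("map", p)]
```

Because the match is on whole words, "map" does not fire on "roadmap", which is presumably why the keyword list spells out both singular and plural forms.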

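For the generated instances, exact duplicates are removed, as are instances that share the same input but have different outputs.

Heuristics are also used to identify and filter out some instances, e.g., ones that are too long or too short, or whose output merely repeats the input.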

## Open-source code from the paper: https://github.com/yizhongw/self-instruct/blob/main/self_instruct/prepare_for_finetuning.py#L110C1-L192C1

import re

# parse_input_output is a helper defined elsewhere in the repository.

def filter_duplicate_instances(instances):
    # if the instances have same non-empty input, but different output, we will not use such instances
    same_input_diff_output = False
    for i in range(1, len(instances)):
        for j in range(0, i):
            if instances[i][1] == "":
                continue
            if instances[i][1] == instances[j][1] and instances[i][2] != instances[j][2]:
                same_input_diff_output = True
                break
    if same_input_diff_output:
        return []
    # remove duplicate instances
    instances = list(set(instances))
    return instances

def filter_invalid_instances(instances):
    filtered_instances = []
    for instance in instances:
        # if input and output are the same, we will not use such instances
        if instance[1] == instance[2]:
            continue
        # if output is empty, we will not use such instances
        if instance[2] == "":
            continue
        # if input or output ends with a colon, these are usually imcomplete generation. We will not use such instances
        if instance[1].strip().endswith(":") or instance[2].strip().endswith(":"):
            continue
        filtered_instances.append(instance)
    return filtered_instances

def parse_instances_for_generation_task(raw_text, instruction, response_metadata):
    instances = []
    raw_text = raw_text.strip()
    if re.findall(r"Example\s?\d*\.?", raw_text):
        instance_texts = re.split(r"Example\s?\d*\.?", raw_text)
        instance_texts = [it.strip() for it in instance_texts if it.strip() != ""]
        for instance_text in instance_texts:
            inst_input, inst_output = parse_input_output(instance_text)
            instances.append((instruction.strip(), inst_input.strip(), inst_output.strip()))
    elif re.findall(r"Output\s*\d*\s*:", raw_text):
        # we assume only one input/output pair in this case
        inst_input, inst_output = parse_input_output(raw_text)
        instances.append((instruction.strip(), inst_input.strip(), inst_output.strip()))
    else:
        return []
    # if the generation stops because of length, we remove the last instance
    if response_metadata["response"]["choices"][0]["finish_reason"] == "length":
        instances = instances[:-1]
    instances = filter_invalid_instances(instances)
    instances = filter_duplicate_instances(instances)
    return instances

def parse_instances_for_classification_task(raw_text, instruction, response_metadata):
    instances = []
    if "Class label:" not in raw_text:
        return []
    instance_texts = raw_text.split("Class label:")[1:]
    for instance_text in instance_texts:
        instance_text = instance_text.strip()
        fields = instance_text.split("\n", 1)
        if len(fields) == 2:
            # the first field split by \n is the class label
            class_label = fields[0].strip()
            # the rest is the input
            input_text = fields[1].strip()
        elif len(fields) == 1:
            # only one field: it is the class label, and the input is empty
            class_label = fields[0].strip()
            input_text = ""
        else:
            raise ValueError("Invalid instance text: {}".format(instance_text))
        instances.append((instruction.strip(), input_text.strip(), class_label.strip()))
    # if the generation stops because of length, we remove the last instance
    if response_metadata["response"]["choices"][0]["finish_reason"] == "length":
        instances = instances[:-1]
    instances = filter_invalid_instances(instances)
    instances = filter_duplicate_instances(instances)
    return instances
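To see what the splitting step in `parse_instances_for_classification_task` produces, here is a condensed, self-contained walk-through on a made-up completion. It mirrors only the "Class label:" split and uses `str.partition` instead of `split("\n", 1)`. The sample text and the instruction are invented for illustration, and the length and validity filters are left out.

```python
# A made-up output-first completion; not real model output.
raw_text = (
    "Class label: Positive\n"
    "Sentence: I had a great day today.\n"
    "Class label: Negative\n"
    "Sentence: The movie was a letdown.\n"
)
instruction = "Classify the sentiment of the sentence."

instances = []
# Everything before the first "Class label:" is discarded, hence the [1:].
for chunk in raw_text.split("Class label:")[1:]:
    # First line is the class label; whatever follows is the input.
    label, _, input_text = chunk.strip().partition("\n")
    instances.append((instruction, input_text.strip(), label.strip()))

for inst in instances:
    print(inst)
```

Each tuple is ordered (instruction, input, output), so the class label that was generated first ends up in the output position.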

Data generated with GPT3 + SELF-INSTRUCT

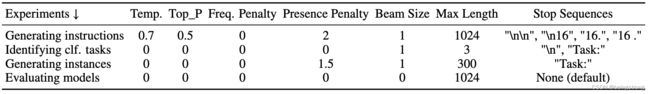

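GPT3 (OpenAI's "davinci" engine) serves as the experimental LLM for the proposed self-instruct method. When the experiments were run in December 2022, the GPT3 API was priced at $0.02 per 1,000 tokens, and generating the whole dataset cost about 600 US dollars. The hyperparameters used for the API calls are listed in Table 4 of the paper.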
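As a back-of-the-envelope check, the two numbers above imply a rough token budget. The sketch assumes the whole cost was billed at the flat per-token rate quoted here; the variable names are mine.

```python
price_per_1k_tokens = 0.02  # USD per 1,000 tokens, as quoted above
total_cost = 600            # USD, approximate figure quoted above

# Implied number of billed tokens (prompt plus completion) for the whole run.
approx_tokens = total_cost / price_per_1k_tokens * 1000
print(f"about {approx_tokens:,.0f} tokens")
```

That is on the order of 30 million tokens for the full dataset, which gives a sense of scale for anyone budgeting a similar run at a different price.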

Statistics of the generated data are given in Table 1 of the paper.

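Instruction diversity:

- After parsing the generated instructions, the authors plot the 20 most common verbs and, for each, its 4 most common direct noun objects (Figure 3 in the paper). These account for 14% of the whole dataset.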

-

比较生成的数据与种子指令数据的相似性,对于每一个生成的指令,计算与175个种子指令的最大ROUGE-L,绘制结果如下图所示(论文Figure 4)。

-

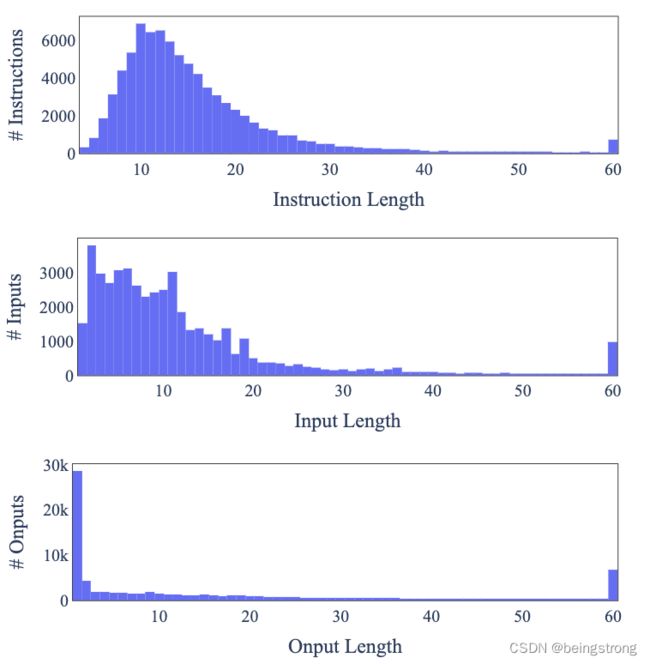

指令长度、input长度、output长度的分布如下图(论文Figure 5)

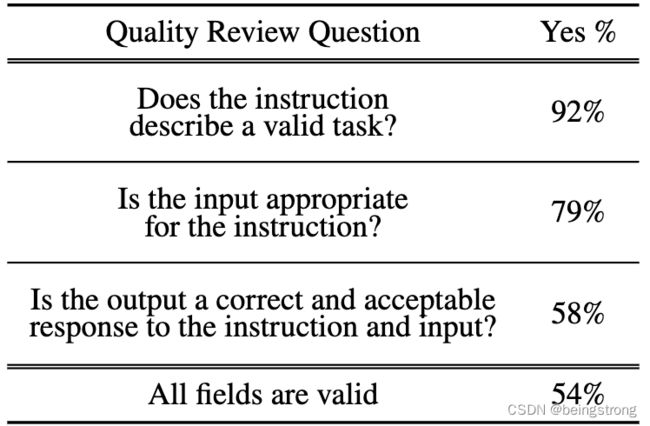

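Data quality:

Experiments

The authors ran several experiments to verify that the generated instruction data helps improve model performance. Since the instruction dataset was generated with GPT3, the model being trained is also GPT3, fine-tuned through OpenAI's fine-tuning API. (I am not focusing on the experiments for now, so I do not record them in detail.)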

References

- Open-source code: https://github.com/yizhongw/self-instruct

- Paper: Wang, Yizhong, Yeganeh Kordi, Swaroop Mishra, Alisa Liu, Noah A. Smith, Daniel Khashabi, and Hannaneh Hajishirzi. 2022. "Self-Instruct: Aligning Language Model with Self Generated Instructions." December 2022.

- Stanford Alpaca: https://github.com/tatsu-lab/stanford_alpaca