Kubernetes Quick Start

Kubernetes Introduction

Kubernetes is an open source container orchestration engine for automating deployment, scaling, and management of containerized applications. The open source project is hosted by the Cloud Native Computing Foundation (CNCF).

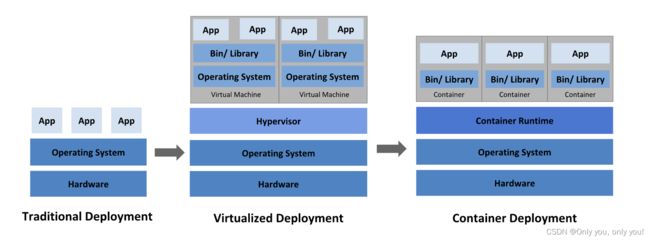

Going back in time

Traditional deployment era: Early on, organizations ran applications on physical servers. There was no way to define resource boundaries for applications in a physical server, and this caused resource allocation issues. For example, if multiple applications run on a physical server, there can be instances where one application would take up most of the resources, and as a result, the other applications would underperform. A solution for this would be to run each application on a different physical server. But this did not scale as resources were underutilized, and it was expensive for organizations to maintain many physical servers.

Virtualized deployment era: As a solution, virtualization was introduced. It allows you to run multiple Virtual Machines (VMs) on a single physical server’s CPU. Virtualization allows applications to be isolated between VMs and provides a level of security as the information of one application cannot be freely accessed by another application.

Virtualization allows better utilization of the resources in a physical server and better scalability, because applications can be added or updated easily. It also reduces hardware costs, among other benefits. With virtualization you can present a set of physical resources as a cluster of disposable virtual machines.

Each VM is a full machine running all the components, including its own operating system, on top of the virtualized hardware.

Container deployment era: Containers are similar to VMs, but they have relaxed isolation properties to share the Operating System (OS) among the applications. Therefore, containers are considered lightweight. Similar to a VM, a container has its own filesystem, share of CPU, memory, process space, and more. As they are decoupled from the underlying infrastructure, they are portable across clouds and OS distributions.

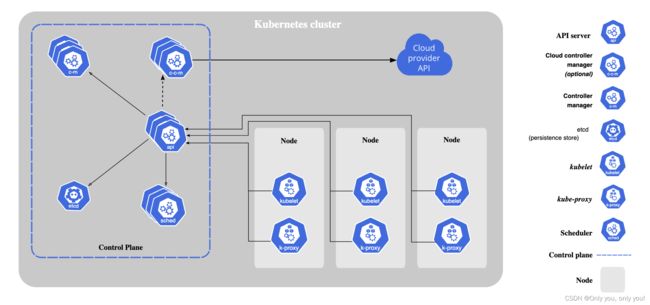

Kubernetes Components

A Kubernetes cluster consists of a set of worker machines, called nodes, that run containerized applications. Every cluster has at least one worker node.

The worker node(s) host the Pods that are the components of the application workload. The control plane manages the worker nodes and the Pods in the cluster. In production environments, the control plane usually runs across multiple computers and a cluster usually runs multiple nodes, providing fault-tolerance and high availability.

Control Plane Components

The control plane’s components make global decisions about the cluster (for example, scheduling), as well as detecting and responding to cluster events (for example, starting up a new pod when a deployment’s replicas field is unsatisfied).

Control plane components can be run on any machine in the cluster. However, for simplicity, setup scripts typically start all control plane components on the same machine, and do not run user containers on this machine. See Creating Highly Available clusters with kubeadm for an example control plane setup that runs across multiple machines.

kube-apiserver

The API server is a component of the Kubernetes control plane that exposes the Kubernetes API. The API server is the front end for the Kubernetes control plane.

The main implementation of a Kubernetes API server is kube-apiserver. kube-apiserver is designed to scale horizontally—that is, it scales by deploying more instances. You can run several instances of kube-apiserver and balance traffic between those instances.

etcd

Consistent and highly-available key value store used as Kubernetes’ backing store for all cluster data.

If your Kubernetes cluster uses etcd as its backing store, make sure you have a backup plan for that data.

You can find in-depth information about etcd in the official documentation.

kube-scheduler

Control plane component that watches for newly created Pods with no assigned node, and selects a node for them to run on.

Factors taken into account for scheduling decisions include: individual and collective resource requirements, hardware/software/policy constraints, affinity and anti-affinity specifications, data locality, inter-workload interference, and deadlines.
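Two of the factors above, resource requirements and affinity, can be expressed directly in a Pod spec. The following is a minimal sketch, not a recommended configuration: the Pod name, the request sizes, and the `disktype=ssd` node label are all assumptions chosen for illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: scheduling-demo          # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx:1.17.1
    resources:
      requests:                  # the scheduler only considers nodes with this much unreserved capacity
        cpu: "250m"
        memory: "64Mi"
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: disktype        # assumed node label; nodes without it are filtered out
            operator: In
            values:
            - ssd
```

If no node satisfies both the requests and the affinity rule, the Pod stays in the Pending phase until one does.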

kube-controller-manager

Control plane component that runs controller processes.

Logically, each controller is a separate process, but to reduce complexity, they are all compiled into a single binary and run in a single process.

Some types of these controllers are:

- Node controller: Responsible for noticing and responding when nodes go down.

- Job controller: Watches for Job objects that represent one-off tasks, then creates Pods to run those tasks to completion.

- EndpointSlice controller: Populates EndpointSlice objects (to provide a link between Services and Pods).

- ServiceAccount controller: Creates default ServiceAccounts for new namespaces.
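As an illustration of what the Job controller in the list above acts on, here is a small Job manifest. It is only a sketch: the name, the command, and the `backoffLimit` value are assumptions.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: one-off-task             # hypothetical name
spec:
  backoffLimit: 4                # assumed value: give up after 4 failed retries
  template:
    spec:
      restartPolicy: Never       # a Job's Pod template must use Never or OnFailure
      containers:
      - name: busybox
        image: busybox:1.28
        command: ["sh", "-c", "echo task finished"]
```

After this is applied, the Job controller creates a Pod from the template and marks the Job complete once the container exits successfully.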

cloud-controller-manager

A Kubernetes control plane component that embeds cloud-specific control logic. The cloud controller manager lets you link your cluster into your cloud provider’s API, and separates out the components that interact with that cloud platform from components that only interact with your cluster.

The cloud-controller-manager only runs controllers that are specific to your cloud provider. If you are running Kubernetes on your own premises, or in a learning environment inside your own PC, the cluster does not have a cloud controller manager.

As with the kube-controller-manager, the cloud-controller-manager combines several logically independent control loops into a single binary that you run as a single process. You can scale horizontally (run more than one copy) to improve performance or to help tolerate failures.

The following controllers can have cloud provider dependencies:

- Node controller: For checking the cloud provider to determine if a node has been deleted in the cloud after it stops responding

- Route controller: For setting up routes in the underlying cloud infrastructure

- Service controller: For creating, updating and deleting cloud provider load balancers

Node Components

Node components run on every node, maintaining running pods and providing the Kubernetes runtime environment.

kubelet

An agent that runs on each node in the cluster. It makes sure that containers are running in a Pod.

The kubelet takes a set of PodSpecs that are provided through various mechanisms and ensures that the containers described in those PodSpecs are running and healthy. The kubelet doesn’t manage containers which were not created by Kubernetes.

kube-proxy

kube-proxy is a network proxy that runs on each node in your cluster, implementing part of the Kubernetes Service concept.

kube-proxy maintains network rules on nodes. These network rules allow network communication to your Pods from network sessions inside or outside of your cluster.

kube-proxy uses the operating system packet filtering layer if there is one and it’s available. Otherwise, kube-proxy forwards the traffic itself.

Container runtime

The container runtime is the software that is responsible for running containers.

Kubernetes supports container runtimes such as containerd, CRI-O, and any other implementation of the Kubernetes CRI (Container Runtime Interface).

Addons

Addons use Kubernetes resources (DaemonSet, Deployment, etc.) to implement cluster features. Because these provide cluster-level features, namespaced resources for addons belong within the kube-system namespace.

Selected addons are described below; for an extended list of available addons, please see Addons.

DNS

While the other addons are not strictly required, all Kubernetes clusters should have cluster DNS, as many examples rely on it.

Cluster DNS is a DNS server, in addition to the other DNS server(s) in your environment, which serves DNS records for Kubernetes services.

Containers started by Kubernetes automatically include this DNS server in their DNS searches.
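One way to see cluster DNS at work is a throwaway Pod that resolves a Service name. The sketch below assumes a working cluster DNS addon; the Pod name is hypothetical, and `kubernetes.default` refers to the API server Service that a standard cluster creates in the `default` namespace.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: dns-check                # hypothetical name
spec:
  restartPolicy: Never           # run the lookup once and stop
  containers:
  - name: busybox
    image: busybox:1.28
    # The short name resolves because the kubelet injects the cluster DNS
    # server and search domains into the container's resolver configuration.
    command: ["nslookup", "kubernetes.default"]
```

The result of the lookup can be read with `kubectl logs dns-check`.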

Web UI (Dashboard)

Dashboard is a general purpose, web-based UI for Kubernetes clusters. It allows users to manage and troubleshoot applications running in the cluster, as well as the cluster itself.

Container Resource Monitoring

Container Resource Monitoring records generic time-series metrics about containers in a central database, and provides a UI for browsing that data.

Cluster-level Logging

A cluster-level logging mechanism is responsible for saving container logs to a central log store with a search/browsing interface.

YAML Syntax

apiVersion: v1
kind: Pod
metadata:
  name: busybox-sleep
spec:
  containers:
  - name: busybox
    image: busybox:1.28
    args:
    - sleep
    - "1000000"
---
apiVersion: v1
kind: Pod
metadata:
  name: busybox-sleep-less
spec:
  containers:
  - name: busybox
    image: busybox:1.28
    args:
    - sleep
    - "1000"

kubectl

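You can use the kubectl tool to operate on Kubernetes objects and resources; see the kubectl command reference.

/*
command: the operation to perform on one or more resources, for example create, get, describe, delete.
TYPE: the resource type. Resource types are case-insensitive, and you can use the singular, plural, or abbreviated form.
NAME: the name of the resource. Names are case-sensitive. If the name is omitted, details for all resources are displayed.
flags: optional flags. For example, use -s or --server to specify the address and port of the Kubernetes API server.
*/
kubectl [command] [TYPE] [NAME] [flags]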

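# Create/delete/view a pod and its controller from the command line
kubectl create ns dev
kubectl create deployment nginx --image=nginx:1.17.1 --port=80 -n myns   # nginx is the name of the Deployment that manages the pod (kubectl run only creates a bare pod in current kubectl versions)
kubectl get deployment -n myns
kubectl delete pod nginx-xxx -n myns   # the Deployment starts a replacement pod automatically; nginx-xxx is the pod name
kubectl delete deployment nginx -n myns   # deleting the Deployment means no replacement pod is started
kubectl get pod nginx-xxx -o wide -n myns   # show pod information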

# Create/delete resources from the definitions in nginx.yaml
kubectl apply -f nginx.yaml
kubectl delete -f nginx.yaml

# Show a detailed description of a pod; useful for troubleshooting (includes the pod's events)
kubectl describe pod mypod -n myns
kubectl describe pod/mypod -n myns

# Edit/modify a resource's YAML configuration
kubectl edit svc/docker-registry -n myns

# Open an interactive shell in a container; defaults to the first container, use -c to choose another
kubectl exec -ti <pod-name> -n myns -- /bin/bash

# Generate YAML
kubectl create deployment nginx --image=nginx:1.17.1 --dry-run=client -n dev -o yaml > test.yaml

# Add/update/remove/view/filter labels (https://kubernetes.io/docs/concepts/overview/working-with-objects/labels/)
kubectl label pods my-pod new-label=awesome -n myns
kubectl label pods my-pod new-label=awesome2 -n myns --overwrite
kubectl label pods my-pod new-label- -n myns
kubectl get pods -n myns --show-labels
kubectl get pods -l "new-label=awesome" -n myns --show-labels

# Expose a Service (type=ClusterIP is reachable inside the cluster; type=NodePort is also reachable from outside the cluster)
kubectl expose deployment nginx --name=svc-nginx1 --type=ClusterIP --port=80 --target-port=80 -n test
kubectl get service -n test
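For comparison, a NodePort Service can also be written declaratively. This is a sketch under assumptions: the Service name and the `nodePort` value are made up, and the selector assumes the Deployment's Pods carry the label `app: nginx`, which is what `kubectl create deployment nginx` sets by default.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: svc-nginx2               # hypothetical name
  namespace: test
spec:
  type: NodePort                 # opens the same port on every node for traffic from outside the cluster
  selector:
    app: nginx                   # assumed Pod label; adjust to match your Pods
  ports:
  - port: 80                     # port of the Service inside the cluster
    targetPort: 80               # container port that receives the traffic
    nodePort: 30080              # assumed value; must fall in the cluster's NodePort range (30000-32767 by default)
    protocol: TCP
```

With this applied, the application should be reachable at `<any-node-IP>:30080`, provided no firewall blocks that port.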

Pod(Label)

apiVersion: v1
kind: Pod
metadata:
  name: nginx
  namespace: dev
  labels:
    version: "3.0"
    env: "test"
spec:
  containers:
  - image: nginx:1.17.1
    imagePullPolicy: IfNotPresent
    name: pod
    ports:
    - name: nginx-port
      containerPort: 80
      protocol: TCP

Deployment

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  namespace: dev
spec:
  replicas: 3
  selector:
    matchLabels:
      run: nginx
  template:
    metadata:
      labels:
        run: nginx
    spec:
      containers:
      - image: nginx:1.17.1
        name: nginx
        ports:
        - containerPort: 80
          protocol: TCP

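Service: A Deployment can create a group of Pods that together provide a highly available service, but a Pod's IP is a virtual IP that is visible only inside the cluster and changes whenever the Pod is recreated, so it cannot be reached from outside. A Service solves these problems: its own IP stays fixed for callers to use, and it generates the rules that route traffic to each Pod. It also provides service discovery and load balancing.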

apiVersion: v1
kind: Service
metadata:
  name: svc-nginx
  namespace: dev
spec:
  clusterIP: 10.109.179.231
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    run: nginx
  type: ClusterIP

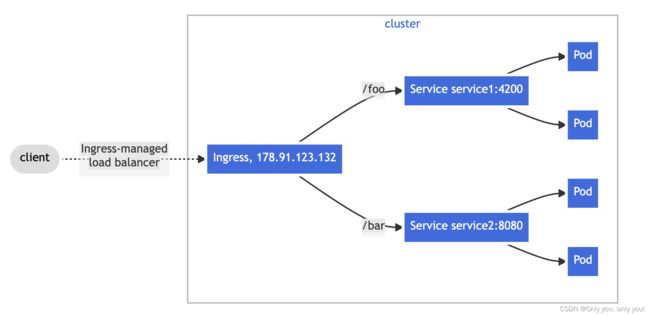

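Ingress acts like a layer-7 load balancer and is Kubernetes' abstraction of a reverse proxy. It works much like Nginx: an Ingress defines a set of mapping rules, and the Ingress Controller watches those rules, converts them into Nginx reverse-proxy configuration, and then serves external traffic.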
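A mapping rule of this kind might look like the sketch below, which routes one host name to the `svc-nginx` Service defined earlier. The Ingress name, the host `nginx.example.com`, and the `nginx` ingress class are assumptions; the manifest has no effect unless an Ingress controller for that class is installed.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-nginx-demo       # hypothetical name
  namespace: dev
spec:
  ingressClassName: nginx        # assumes an NGINX Ingress controller registered under this class
  rules:
  - host: nginx.example.com      # assumed host name
    http:
      paths:
      - path: /
        pathType: Prefix         # match every request path under /
        backend:
          service:
            name: svc-nginx      # the ClusterIP Service from the example above
            port:
              number: 80
```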

Pod

Pods are the smallest deployable units of computing that you can create and manage in Kubernetes.

# Show how to write the YAML for a resource
kubectl explain pod
kubectl explain pod.metadata

# List all API versions/resource types
kubectl api-versions
kubectl api-resources

# Image pull policy

https://kubernetes.io/docs/concepts/containers/images/

# Resource quotas

https://kubernetes.io/docs/concepts/policy/resource-quotas/
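A minimal ResourceQuota for the `dev` namespace could look like this. The quota name and every limit value are assumptions chosen only to show the shape of the object.

```yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: dev-quota                # hypothetical name
  namespace: dev
spec:
  hard:
    pods: "10"                   # at most 10 Pods in the namespace
    requests.cpu: "2"            # sum of CPU requests across all Pods
    requests.memory: 2Gi
    limits.cpu: "4"              # sum of CPU limits across all Pods
    limits.memory: 4Gi
```

Once a quota on CPU or memory is active, Pods in that namespace generally have to declare the corresponding requests and limits, or they are rejected at creation time.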

# Lifecycle

https://kubernetes.io/docs/concepts/workloads/pods/pod-lifecycle/#pod-phase

# Readiness/liveness probes

https://kubernetes.io/docs/concepts/workloads/pods/pod-lifecycle/#container-probes
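The sketch below attaches both probe types to the nginx container used throughout these notes. The Pod name and all timing values are assumptions, not tuned recommendations.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-probes             # hypothetical name
  namespace: dev
spec:
  containers:
  - name: nginx
    image: nginx:1.17.1
    ports:
    - containerPort: 80
    readinessProbe:              # failing: the Pod is removed from Service endpoints
      httpGet:
        path: /
        port: 80
      initialDelaySeconds: 5
      periodSeconds: 10
    livenessProbe:               # failing: the kubelet restarts the container
      tcpSocket:
        port: 80
      initialDelaySeconds: 15
      periodSeconds: 20
```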

# Restart policy

https://kubernetes.io/docs/concepts/workloads/pods/pod-lifecycle/#restart-policy

# init containers

https://kubernetes.io/docs/concepts/workloads/pods/init-containers/
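As a sketch of the init container pattern, the Pod below waits until a Service name resolves before the main container starts. The Pod name is hypothetical, and the example assumes the `svc-nginx` Service from earlier exists in the `dev` namespace.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: init-demo                # hypothetical name
  namespace: dev
spec:
  initContainers:                # run one at a time, each to completion, before any app container
  - name: wait-for-svc
    image: busybox:1.28
    command: ["sh", "-c", "until nslookup svc-nginx; do echo waiting for svc-nginx; sleep 2; done"]
  containers:
  - name: nginx
    image: nginx:1.17.1
```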

# Lifecycle hooks

https://kubernetes.io/docs/concepts/containers/container-lifecycle-hooks/
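The two container hooks, `postStart` and `preStop`, are declared under `lifecycle`. The following is an illustrative sketch; the Pod name and both commands are assumptions.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: lifecycle-demo           # hypothetical name
  namespace: dev
spec:
  containers:
  - name: nginx
    image: nginx:1.17.1
    lifecycle:
      postStart:                 # runs right after the container is created
        exec:
          command: ["/bin/sh", "-c", "echo started > /usr/share/nginx/html/started.txt"]
      preStop:                   # runs before the container receives its termination signal
        exec:
          command: ["/bin/sh", "-c", "nginx -s quit"]
```

Note that `postStart` is not guaranteed to run before the container's entrypoint, so it should not be used for setup the main process depends on.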

# Scheduling

https://kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/

# Taints

https://kubernetes.io/docs/concepts/scheduling-eviction/taint-and-toleration/
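A taint on a node repels Pods unless they declare a matching toleration. The sketch below assumes a node was tainted with `kubectl taint nodes <node-name> dedicated=gpu:NoSchedule`; the key, the value, and the Pod name are hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: toleration-demo          # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx:1.17.1
  tolerations:
  - key: "dedicated"             # must match the taint's key
    operator: "Equal"
    value: "gpu"                 # must match the taint's value
    effect: "NoSchedule"         # must match the taint's effect
```

A toleration only permits scheduling onto the tainted node; it does not force the Pod there. Pairing it with node affinity is the usual way to pin a workload.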

# controller

https://kubernetes.io/docs/concepts/workloads/controllers/

# HorizontalPodAutoscaler: automatic scaling for a Deployment or StatefulSet

https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale/
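An autoscaler targeting the `nginx` Deployment from earlier might be declared as follows. This is a sketch: the HPA name, the replica bounds, and the 50% CPU target are assumptions, and it only works if a metrics source such as metrics-server is installed and the containers declare CPU requests.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: nginx-hpa                # hypothetical name
  namespace: dev
spec:
  scaleTargetRef:                # the workload whose replica count is adjusted
    apiVersion: apps/v1
    kind: Deployment
    name: nginx
  minReplicas: 1
  maxReplicas: 5
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 50   # assumed target: average 50% of requested CPU
```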

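# Recreate update / rolling update / canary release

Delete all old Pods and then recreate them / replace Pods a few at a time in a rolling fashion / keep the old Pods, create one new Pod, and route a share of traffic to it for observation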
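The first two strategies are selected through the Deployment's `strategy` field. The sketch below reuses the nginx Deployment from earlier; the `maxSurge` and `maxUnavailable` values are assumptions. A canary release is not a built-in strategy type and is usually approximated with a second, smaller Deployment behind the same Service.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  namespace: dev
spec:
  replicas: 3
  strategy:
    type: RollingUpdate          # set to Recreate to delete all old Pods before creating new ones
    rollingUpdate:
      maxSurge: 1                # at most 1 extra Pod above the desired count during the update
      maxUnavailable: 1          # at most 1 Pod may be unavailable during the update
  selector:
    matchLabels:
      run: nginx
  template:
    metadata:
      labels:
        run: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.17.1
```

Progress of an update can be followed with `kubectl rollout status deployment/nginx -n dev`.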

Storage

# emptyDir

https://kubernetes.io/docs/concepts/storage/volumes/#emptydir
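An `emptyDir` volume lives as long as the Pod does and is a simple way for containers in one Pod to share files. In this sketch the Pod name, container names, and paths are all hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: emptydir-demo            # hypothetical name
  namespace: dev
spec:
  containers:
  - name: writer                 # appends a timestamp to the shared file every 5 seconds
    image: busybox:1.28
    command: ["sh", "-c", "while true; do date >> /data/out.log; sleep 5; done"]
    volumeMounts:
    - name: scratch
      mountPath: /data
  - name: reader                 # follows the same file through its own mount
    image: busybox:1.28
    command: ["sh", "-c", "touch /data/out.log; tail -f /data/out.log"]
    volumeMounts:
    - name: scratch
      mountPath: /data
  volumes:
  - name: scratch
    emptyDir: {}                 # created empty when the Pod starts, deleted with the Pod
```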

# hostPath

https://kubernetes.io/docs/concepts/storage/volumes/#hostpath

# nfs

https://kubernetes.io/docs/concepts/storage/volumes/#nfs

# pv/pvc

https://kubernetes.io/docs/concepts/storage/persistent-volumes/
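The PV/PVC split can be sketched with a statically provisioned volume and a claim that binds to it. All names, the `manual` storage class, the size, and the `hostPath` location are assumptions; a `hostPath` backing is only sensible on a single-node test cluster.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-demo                  # hypothetical name; PVs are cluster-scoped, so no namespace
spec:
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: manual       # assumed class name used to pair the PV with the claim
  hostPath:
    path: /tmp/pv-demo           # assumed directory on the node
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-demo                 # hypothetical name
  namespace: dev
spec:
  accessModes:
  - ReadWriteOnce
  storageClassName: manual
  resources:
    requests:
      storage: 1Gi               # binds to a PV of the same class with at least this capacity
```

A Pod then uses the storage by listing a volume with `persistentVolumeClaim.claimName: pvc-demo` and mounting it like any other volume.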

Kubernetes Clients

Reference

[1] Kubernetes official documentation

[2] https://www.yuque.com/fairy-era/yg511q/szg74m