Troubleshooting a loguru Error: A Debugging Record

While load-testing our personalized recommendation API, the system crashed once concurrency reached a certain level.

Error log:

```
Traceback (most recent call last):
  File "<string>", line 1, in <module>
  File "/Users/zhanglei/opt/anaconda3/lib/python3.9/multiprocessing/spawn.py", line 116, in spawn_main
  ...
  File "/Users/zhanglei/PycharmProjects/AIHead_RS/main.py", line 15, in <module>
    logger = Logings().setup_logger()
  File "/Users/zhanglei/PycharmProjects/AIHead_RS/AIHead_component/utils/singletion.py", line 34, in __call__
    self._instance[self.cls] = self.cls(*args, **kwargs)
  File "/Users/zhanglei/PycharmProjects/AIHead_RS/AIHead_component/utils/log_uru.py", line 45, in __init__
    self.add_config(trace_path, level="TRACE")
  File "/Users/zhanglei/PycharmProjects/AIHead_RS/AIHead_component/utils/log_uru.py", line 65, in add_config
    loguru.logger.add(log_path,  # target file
  File "/Users/zhanglei/opt/anaconda3/lib/python3.9/site-packages/loguru/_logger.py", line 961, in add
    handler = Handler(
  File "/Users/zhanglei/opt/anaconda3/lib/python3.9/site-packages/loguru/_handler.py", line 90, in __init__
    self._confirmation_event = multiprocessing.Event()
  File "/Users/zhanglei/opt/anaconda3/lib/python3.9/multiprocessing/context.py", line 93, in Event
    return Event(ctx=self.get_context())
  File "/Users/zhanglei/opt/anaconda3/lib/python3.9/multiprocessing/synchronize.py", line 324, in __init__
    self._cond = ctx.Condition(ctx.Lock())
  File "/Users/zhanglei/opt/anaconda3/lib/python3.9/multiprocessing/context.py", line 78, in Condition
    return Condition(lock, ctx=self.get_context())
  File "/Users/zhanglei/opt/anaconda3/lib/python3.9/multiprocessing/synchronize.py", line 215, in __init__
    self._woken_count = ctx.Semaphore(0)
  File "/Users/zhanglei/opt/anaconda3/lib/python3.9/multiprocessing/context.py", line 83, in Semaphore
    return Semaphore(value, ctx=self.get_context())
  File "/Users/zhanglei/opt/anaconda3/lib/python3.9/multiprocessing/synchronize.py", line 126, in __init__
    SemLock.__init__(self, SEMAPHORE, value, SEM_VALUE_MAX, ctx=ctx)
  File "/Users/zhanglei/opt/anaconda3/lib/python3.9/multiprocessing/synchronize.py", line 57, in __init__
    sl = self._semlock = _multiprocessing.SemLock(
OSError: [Errno 28] No space left on device
```

After that, the multiprocess ClickHouse queries started failing:

```
2023-09-12 15:44:28 | MainProcess | Thread-500 | controller.personal_rec_exec:138 | ERROR: Traceback (most recent call last):
  File "/Users/zhanglei/PycharmProjects/AIHead_RS/service/controller.py", line 131, in personal_rec_exec
    ret = pipe.run_plugin(thd_data)
  File "/Users/zhanglei/PycharmProjects/AIHead_RS/AIHead_component/utils/log_utils.py", line 28, in timed
    result = method(*args, **kw)
  File "/Users/zhanglei/PycharmProjects/AIHead_RS/pipelines/online_pipe/personal_rec.py", line 48, in run_plugin
    PreSetRecall().run_strategy(thd_data)
  File "/Users/zhanglei/PycharmProjects/AIHead_RS/AIHead_component/utils/log_utils.py", line 28, in timed
    result = method(*args, **kw)
  File "/Users/zhanglei/PycharmProjects/AIHead_RS/plugin/personal_rec/PreSetRecall.py", line 44, in run_strategy
    pre_recall_set = self.filter(query_id, pre_recall_set, ck)
  File "/Users/zhanglei/PycharmProjects/AIHead_RS/plugin/personal_rec/PreSetRecall.py", line 265, in filter
    row_data = ck.query(keys=[query_id], tb_name=CK_WHITELIST_TABLE, columns=None, primary_key='uid')
  File "/Users/zhanglei/PycharmProjects/AIHead_RS/AIHead_component/data_source/clickhouse_data.py", line 288, in query
    return self.__class__.query_batch_parallel(
  File "/Users/zhanglei/PycharmProjects/AIHead_RS/AIHead_component/data_source/base_data_source.py", line 105, in query_batch_parallel
    result_dfs.append(future.result())
  File "/Users/zhanglei/opt/anaconda3/lib/python3.9/concurrent/futures/_base.py", line 438, in result
    return self.__get_result()
  File "/Users/zhanglei/opt/anaconda3/lib/python3.9/concurrent/futures/_base.py", line 390, in __get_result
    raise self._exception
concurrent.futures.process.BrokenProcessPool: A process in the process pool was terminated abruptly while the future was running or pending.
```

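At first I kept digging into the ClickHouse multiprocessing error. I could see the number of spawned processes growing steadily, but changing the multiprocessing code brought no improvement. I then turned the spotlight on the earlier error, that is, the singleton logging system.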

The key frames:

```
  File "/Users/zhanglei/PycharmProjects/AIHead_RS/main.py", line 15, in <module>
    logger = Logings().setup_logger()
  File "/Users/zhanglei/PycharmProjects/AIHead_RS/AIHead_component/utils/singletion.py", line 34, in __call__
    self._instance[self.cls] = self.cls(*args, **kwargs)
  File "/Users/zhanglei/PycharmProjects/AIHead_RS/AIHead_component/utils/log_uru.py", line 45, in __init__
    self.add_config(trace_path, level="TRACE")
  File "/Users/zhanglei/PycharmProjects/AIHead_RS/AIHead_component/utils/log_uru.py", line 65, in add_config
    loguru.logger.add(log_path,  # target file
  File "/Users/zhanglei/opt/anaconda3/lib/python3.9/site-packages/loguru/_logger.py", line 961, in add
    handler = Handler(
  File "/Users/zhanglei/opt/anaconda3/lib/python3.9/site-packages/loguru/_handler.py", line 90, in __init__
    self._confirmation_event = multiprocessing.Event()
```

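In loguru's logger.add(), the enqueue parameter is a boolean that controls whether log messages are written to the sink asynchronously.

- When enqueue=True: messages are first put on a queue and then written to the sink (for example, a file or standard output) by a separate worker thread. This makes logging calls non-blocking, which helps most when writing to the sink is slow (for example, writing to disk or to a remote server). Passing messages through a multiprocess-safe queue also helps avoid conflicts and potential deadlocks when several threads or processes write to the same sink.

- When enqueue=False: messages are written to the sink synchronously, so a slow sink adds latency to every logging call.

Overall, enqueue lets you choose between synchronous and asynchronous writes. In most cases enqueue=True gives better performance and fewer problems, especially in highly concurrent applications.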
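To make the difference concrete, here is a simplified model of what enqueue=True does. QueuedSink is a hypothetical class written for illustration, not loguru's implementation: the caller only puts the message on a multiprocessing.SimpleQueue, and a daemon thread drains the queue into the real sink. With enqueue=False the caller would simply call the sink's write function itself.

```python
import multiprocessing
import threading


class QueuedSink:
    """Hypothetical model of an enqueue=True sink (not loguru's actual code)."""

    def __init__(self, write):
        self._write = write                            # the real, possibly slow, sink
        self._queue = multiprocessing.SimpleQueue()    # IPC queue: a pipe plus semaphore-backed locks
        self._thread = threading.Thread(target=self._worker, daemon=True)
        self._thread.start()

    def _worker(self):
        # Drain the queue until the None sentinel arrives
        while True:
            message = self._queue.get()
            if message is None:
                break
            self._write(message)

    def emit(self, message):
        # The caller only enqueues; the actual write happens on the worker thread
        self._queue.put(message)

    def stop(self):
        # Send the sentinel and wait until every queued message has been written
        self._queue.put(None)
        self._thread.join()
```

Note that every QueuedSink allocates its own SimpleQueue, so creating many of them also creates many OS-level IPC objects.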

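When enqueue is True, this asynchronous logging relies on inter-process communication (IPC): loguru passes log messages from the logging call to the writer thread through a queue from the multiprocessing module, together with other multiprocessing synchronization objects (the multiprocessing.Event() in the traceback is one of them). So every call to logger.add() with enqueue=True allocates new IPC resources, in particular semaphores, as the final _multiprocessing.SemLock frame shows. Note also that the failure happens inside spawn_main: under the spawn start method (the default on macOS since Python 3.8), each child process re-imports main.py, so the module-level Logings().setup_logger() call runs again in every child. The singleton only holds within one process, so each new child adds its handlers again. Once these resources accumulate past the system limit, creating another one fails with "No space left on device".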
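One rough way to see how many semaphores a single enqueued handler costs is to count the SemLock objects created while allocating the same kinds of objects. This sketch assumes that a loguru 0.7.x handler with enqueue=True creates a multiprocessing.SimpleQueue, an Event and a Lock (the Event is visible in the traceback; the other two are our reading of that version's source and may differ elsewhere). It temporarily wraps multiprocessing.synchronize.SemLock.__init__, which is a CPython implementation detail, so treat it as a diagnostic sketch only.

```python
import multiprocessing
import multiprocessing.synchronize as mp_sync


def enqueued_handler_ipc_objects():
    """Allocate the multiprocessing objects we assume one enqueue=True handler creates."""
    # Assumption (reading of loguru 0.7.x): one SimpleQueue, one Event, one Lock per handler
    return (
        multiprocessing.SimpleQueue(),
        multiprocessing.Event(),
        multiprocessing.Lock(),
    )


def count_semlocks(factory):
    """Return how many multiprocessing SemLock objects are created while factory() runs."""
    created = 0
    original_init = mp_sync.SemLock.__init__

    def counting_init(self, *args, **kwargs):
        nonlocal created
        created += 1
        original_init(self, *args, **kwargs)

    # Wrap the CPython-internal SemLock constructor for the duration of the call
    mp_sync.SemLock.__init__ = counting_init
    try:
        factory()
    finally:
        mp_sync.SemLock.__init__ = original_init  # always restore the original
    return created
```

Calling count_semlocks(enqueued_handler_ipc_objects) gives the per-handler cost; the total grows linearly with the number of handlers, which is why repeatedly calling logger.add(..., enqueue=True) can eventually exhaust the system's semaphore limit.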

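The later concurrent.futures.process.BrokenProcessPool error was also caused by exhausted IPC resources: even after restarting the program, a single query still triggered it, and the problem only disappeared after rebooting the machine. This is consistent with leaked named semaphores: a POSIX named semaphore that is never unlinked (for example, because its process was killed abruptly) outlives the process that created it, so it is likely only reclaimed by a reboot.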

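The reason: when concurrent.futures.ProcessPoolExecutor runs work in multiple processes, it relies on the multiprocessing module to create and manage the child processes. Communication between the children and the main process goes through IPC resources such as pipes and semaphores. If these resources are insufficient or exhausted, a child may fail to start or fail to communicate with the main process, which surfaces as a BrokenProcessPool error.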
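The parent-side symptom can be reproduced without exhausting any resources. The sketch below simulates a worker that dies without cleanup: submitting os._exit kills the worker immediately, and the parent then sees the same BrokenProcessPool error as in the log. It assumes a POSIX system where the "fork" start method is available, and it only reproduces the symptom, not the semaphore exhaustion itself.

```python
import multiprocessing
import os
from concurrent.futures import ProcessPoolExecutor
from concurrent.futures.process import BrokenProcessPool


def trigger_broken_pool():
    """Run a task that kills its worker and return what the parent observes."""
    # Assumption: POSIX system; "fork" avoids re-importing the main module in the child
    ctx = multiprocessing.get_context("fork")
    with ProcessPoolExecutor(max_workers=1, mp_context=ctx) as pool:
        # os._exit terminates the worker at once: no cleanup, no result sent back
        future = pool.submit(os._exit, 1)
        try:
            future.result(timeout=30)
        except BrokenProcessPool as exc:
            return f"{type(exc).__name__}: {exc}"
    return "no error"
```

Calling trigger_broken_pool() returns a string starting with "BrokenProcessPool"; once a pool is broken, it cannot accept further work and has to be recreated.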

Background knowledge

IPC(InterProcess Communication)

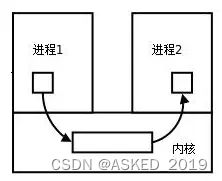

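Each process has its own user address space, and a global variable in one process is invisible to every other process. To exchange data, processes therefore have to go through the kernel: the kernel sets up a buffer, process 1 copies data from its user space into that kernel buffer, and process 2 reads the data out of it. This kernel-provided mechanism is called inter-process communication (IPC).

The main IPC mechanisms and resources include:

- Pipes: one of the earliest forms of IPC, typically used between a parent process and its child.

- Named pipes: similar to ordinary pipes, but they have a name, so unrelated processes can use them.

- Signals: a simple mechanism for notifying a process that an event has occurred.

- Message queues: let processes send messages to a queue, which other processes can read from or write to.

- Shared memory: lets multiple processes access the same region of memory.

- Semaphores: a synchronization tool for preventing race conditions when multiple processes access a shared resource.

- Sockets: usually used for communication between processes on different machines, but also usable between processes on the same machine.

- Files and file locks: files can serve as a medium between processes, and file locks handle concurrent access.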
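Python's multiprocessing module wraps several of these mechanisms. The sketch below (hypothetical function names, assuming a POSIX system with the "fork" start method) sends a message over a Pipe, which on Unix is a connected socket pair when duplex, and increments an integer in shared memory under a semaphore-backed Lock.

```python
import multiprocessing


def child(conn, counter, lock):
    # Connection: read the parent's message and send a reply on the same connection
    message = conn.recv()
    conn.send(message.upper())
    conn.close()
    # Shared memory guarded by a lock (the lock itself is built on a semaphore)
    with lock:
        counter.value += 1


def demo_ipc():
    # Assumption: POSIX system where the "fork" start method is available
    ctx = multiprocessing.get_context("fork")
    parent_conn, child_conn = ctx.Pipe()  # two connected endpoints (a socketpair on Unix)
    counter = ctx.Value("i", 0)           # an int placed in shared memory
    lock = ctx.Lock()                     # semaphore-backed lock
    worker = ctx.Process(target=child, args=(child_conn, counter, lock))
    worker.start()
    parent_conn.send("hello")
    reply = parent_conn.recv()
    worker.join()
    return reply, counter.value
```

demo_ipc() returns ("HELLO", 1): the reply travelled over the connection, and the increment made in the child is visible in the parent because the integer lives in shared memory.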

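The OSError: [Errno 28] No space left on device error here is mainly related to semaphores, as the final _multiprocessing.SemLock frame of the traceback shows. This error can occur when the system's semaphore resources are exhausted. A semaphore is a counter used to control how multiple processes access a shared resource.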
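The "counter" behaviour is easy to see in isolation. In this small sketch (semaphore_demo is a hypothetical helper), a multiprocessing.Semaphore with two slots allows two non-blocking acquisitions, refuses the third, and accepts one again after a release. Each multiprocessing.Semaphore is backed by an OS-level semaphore (the _multiprocessing.SemLock in the traceback), and that allocation is the step that fails with Errno 28 once the limit is reached.

```python
import multiprocessing


def semaphore_demo(slots=2):
    """Show that a semaphore is a counter: only `slots` acquisitions succeed until one is released."""
    sem = multiprocessing.Semaphore(slots)  # allocates one OS-level semaphore
    # Non-blocking attempts: True while the counter is positive, False once it reaches zero
    grabbed = [sem.acquire(block=False) for _ in range(slots + 1)]
    sem.release()                           # give one slot back
    regrabbed = sem.acquire(block=False)
    return grabbed, regrabbed
```

With the default of two slots, grabbed is [True, True, False] and regrabbed is True.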

How to monitor IPC

On Unix-like systems, use
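Python's multiprocessing uses POSIX named semaphores (sem_open) on Linux and macOS. As one hedged option on Linux, where glibc backs each named semaphore that is still linked with a file named sem.* under /dev/shm, the current count can be watched from Python. The helper name count_named_semaphores is hypothetical, and the approach does not apply to macOS, which has no /dev/shm; semaphores that were already unlinked also do not show up.

```python
import glob
import os
import sys


def count_named_semaphores(shm_dir="/dev/shm"):
    """Count POSIX named semaphores visible as sem.* files (Linux/glibc only).

    Returns None where this approach does not apply, e.g. macOS or a missing directory.
    """
    if not sys.platform.startswith("linux") or not os.path.isdir(shm_dir):
        return None
    return len(glob.glob(os.path.join(shm_dir, "sem.*")))
```

Calling it periodically during a load test and checking whether the number keeps climbing is one way to spot a semaphore leak early.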

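How to fix it

The simplest approaches:

- Set the enqueue parameter to False

- Avoid multiprocessing

Both are simple, but setting enqueue to False gives up the performance benefit of asynchronous writes, and multiprocessing is hard to avoid in practice, so a more comprehensive approach is needed. The idea is to record the ID returned by every logger.add() call, add handlers only when none have been added yet, and remove exactly those handlers when closing: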

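```python
import loguru

from AIHead_component.utils.singletion import Singleton  # project-local decorator (see the traceback)


@Singleton
class Logings:
    def __init__(self, log_path=None):
        loguru.logger.remove()
        # New attribute: store the handler IDs returned by logger.add()
        self.handler_ids = []
        ...  # remaining setup omitted in the original

    def add_config(self, log_path, level="INFO"):
        # Hypothetical body: the original add_config is not shown. The essential part
        # is recording the returned handler ID so the handler can be removed later.
        handler_id = loguru.logger.add(log_path, level=level, enqueue=True)
        self.handler_ids.append(handler_id)

    def setup_logger(self, file_path=None, level="INFO"):
        # Add a handler only if none exists yet, so repeated calls do not
        # allocate new enqueue=True IPC resources
        if not self.handler_ids and file_path:
            self.add_config(file_path, level=level)
        return loguru.logger

    def reset(self):
        self.close()
        self.__init__()

    def close(self):
        # Remove only the handlers this class added, then forget their IDs
        for handler_id in self.handler_ids:
            loguru.logger.remove(handler_id)
        self.handler_ids.clear()
```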
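To try the same pattern without loguru or the project's Singleton decorator, here is a standard-library logging analogue. LoggerSetup is a hypothetical class for illustration: it records the handlers it adds (the counterpart of handler_ids), turns repeated setup calls into no-ops, and removes exactly those handlers on close.

```python
import logging


class LoggerSetup:
    """Stdlib sketch of the pattern: add handlers once, remember them, remove them on close."""

    def __init__(self, name="app"):
        self.logger = logging.getLogger(name)
        self.handlers = []  # handlers added by this object (analogue of handler_ids)

    def setup_logger(self, handler=None, level=logging.INFO):
        # Configure only on the first call; later calls reuse the existing handlers
        if not self.handlers and handler is not None:
            handler.setLevel(level)
            self.logger.addHandler(handler)
            self.handlers.append(handler)
        return self.logger

    def close(self):
        # Detach and close only the handlers this object added
        for handler in self.handlers:
            self.logger.removeHandler(handler)
            handler.close()
        self.handlers.clear()
```

The design choice is the same as in the loguru version: tracking what was added makes setup idempotent and lets cleanup leave other handlers untouched.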

REF:

Inter-process communication: IPC (InterProcess Communication)