[WebRTC] VCMSessionInfo: assembling a decodable frame

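- A summary from an expert on Zhihu:

- VCMFrameBuffer wraps the VCMSessionInfo processing: for VPx and H.264 (where NALUs are parsed), it filters all packets that belong to the same frame and assembles them into a complete frame, which is then handed to the decoder downstream.

- Its output feeds the decoder.

- So everything that gets inserted is a VCMPacket.

- frame_buffer points to the start address of one frame's data. Incoming packets are first inserted into the list in order, sorted by sequence number,

- then the session is checked for completeness; once it is complete, frame_buffer holds the data of this frame.

- 零声 has a lecture that covers this.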

The largest decodable frame is about 2 MB.

1400 packets at roughly 1,400 to 1,500 bytes each come to about 2 MB.

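The base() method of the reverse iterator ReversePacketIterator

- base() converts a reverse iterator into a forward iterator; the forward iterator refers to the element immediately after the one the reverse iterator points at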
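The reverse-scan-then-insert pattern can be tried in isolation. The following is a minimal sketch on a plain `std::list<int>`; `InsertSorted` is a hypothetical helper, not a WebRTC function, and it compares with `<` instead of the wraparound-aware sequence-number comparison.

```cpp
#include <cassert>
#include <list>

// Hypothetical helper: inserts `value` into an ascending list by scanning
// from the back, the same way InsertPacket scans packets_.
std::list<int>::iterator InsertSorted(std::list<int>& values, int value) {
  auto rit = values.rbegin();
  for (; rit != values.rend(); ++rit) {
    if (*rit < value) break;  // first element, seen from the back, below value
  }
  // rit.base() refers to the element right after *rit in forward order, so
  // inserting before it places `value` immediately after *rit. If the loop
  // ran to rend(), base() equals begin() and `value` goes to the front.
  return values.insert(rit.base(), value);
}
```

Scanning from the back fits this use case because packets mostly arrive in order, so the insert position is usually found after one comparison.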

int VCMSessionInfo::InsertPacket(const VCMPacket& packet,

uint8_t* frame_buffer,

const FrameData& frame_data) {

The video header carries the frame type; one possible value is the empty frame

if (packet.video_header.frame_type == VideoFrameType::kEmptyFrame) {

For an empty packet only the sequence number is updated; it is not a media packet

// Update sequence number of an empty packet.

// Only media packets are inserted into the packet list.

InformOfEmptyPacket(packet.seqNum);

return 0;

}

A frame accepts at most 1400 packets (kMaxPacketsInSession)

if (packets_.size() == kMaxPacketsInSession) {

RTC_LOG(LS_ERROR) << "Max number of packets per frame has been reached.";

return -1;

}

Reverse traversal: find this packet's position in the packet list, then insert it there.

The list is walked from the back.

// Find the position of this packet in the packet list in sequence number

// order and insert it. Loop over the list in reverse order.

ReversePacketIterator rit = packets_.rbegin();

for (; rit != packets_.rend(); ++rit)

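Scanning from back to front, compare sequence numbers: once the incoming packet is newer than the current one, the insert position has been found, so break out of the for loop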

if (LatestSequenceNumber(packet.seqNum, (*rit).seqNum) == packet.seqNum)

break;

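Check for a duplicate packet:

If the scan stopped at a packet with the same sequence number and that packet already holds data, the insert fails with error -2.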

// Check for duplicate packets.

if (rit != packets_.rend() && (*rit).seqNum == packet.seqNum &&

(*rit).sizeBytes > 0)

return -2;

if (packet.codec() == kVideoCodecH264) {

frame_type_ = packet.video_header.frame_type;

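If the incoming packet is the first packet of the frame, and either no first sequence number has been recorded yet or the recorded one is newer than the incoming packet's, record the incoming packet as the first packet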

if (packet.is_first_packet_in_frame() &&

(first_packet_seq_num_ == -1 ||

IsNewerSequenceNumber(first_packet_seq_num_, packet.seqNum))) {

first_packet_seq_num_ = packet.seqNum;

}

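If the incoming packet is the last packet of the frame (marker bit set), and either no last sequence number has been recorded yet or the incoming sequence number is newer than the recorded one, update the recorded last sequence number to this packet's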

if (packet.markerBit &&

(last_packet_seq_num_ == -1 ||

IsNewerSequenceNumber(packet.seqNum, last_packet_seq_num_))) {

last_packet_seq_num_ = packet.seqNum;

}

#ifdef OWT_ENABLE_H265

} else if (packet.codec() == kVideoCodecH265) {

frame_type_ = packet.video_header.frame_type;

if (packet.is_first_packet_in_frame() &&

(first_packet_seq_num_ == -1 ||

IsNewerSequenceNumber(first_packet_seq_num_, packet.seqNum))) {

first_packet_seq_num_ = packet.seqNum;

}

if (packet.markerBit &&

(last_packet_seq_num_ == -1 ||

IsNewerSequenceNumber(packet.seqNum, last_packet_seq_num_))) {

last_packet_seq_num_ = packet.seqNum;

}

#else

} else {

#endif

Packets may only be inserted between the first and the last packet:

// Only insert media packets between first and last packets (when

// available).

// Placing check here, as to properly account for duplicate packets.

// Check if this is first packet (only valid for some codecs)

// Should only be set for one packet per session.

if (packet.is_first_packet_in_frame() && first_packet_seq_num_ == -1) {

// The first packet in a frame signals the frame type.

frame_type_ = packet.video_header.frame_type;

// Store the sequence number for the first packet.

first_packet_seq_num_ = static_cast<int>(packet.seqNum);

} else if (first_packet_seq_num_ != -1 &&

// A first sequence number is already recorded, yet the incoming packet's sequence number is older than it: return -3

IsNewerSequenceNumber(first_packet_seq_num_, packet.seqNum)) {

RTC_LOG(LS_WARNING)

<< "Received packet with a sequence number which is out "

"of frame boundaries";

return -3;

} else if (frame_type_ == VideoFrameType::kEmptyFrame &&

packet.video_header.frame_type != VideoFrameType::kEmptyFrame) {

// Update the frame type with the type of the first media packet.

// TODO(mikhal): Can this trigger?

frame_type_ = packet.video_header.frame_type;

}

// Track the marker bit, should only be set for one packet per session.

if (packet.markerBit && last_packet_seq_num_ == -1) {

last_packet_seq_num_ = static_cast<int>(packet.seqNum);

} else if (last_packet_seq_num_ != -1 &&

IsNewerSequenceNumber(packet.seqNum, last_packet_seq_num_)) {

RTC_LOG(LS_WARNING)

<< "Received packet with a sequence number which is out "

"of frame boundaries";

return -3;

}

}

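Insert the packet at the position found:

After the insert, rit must not be used any more (the code comment treats it as invalidated)

base() converts a reverse iterator into a forward iterator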

// The insert operation invalidates the iterator |rit|.

This inserts packet right after the element rit refers to in forward order, that is, before rit.base()

PacketIterator packet_list_it = packets_.insert(rit.base(), packet);

size_t returnLength = InsertBuffer(frame_buffer, packet_list_it);

UpdateCompleteSession();

return static_cast<int>(returnLength);

}
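The comparisons in InsertPacket only work if "newer" accounts for 16-bit wraparound of RTP sequence numbers. Below is a minimal sketch of such a check; `IsNewerSeq` and `LatestSeq` are hypothetical stand-ins written for illustration, and the assumption is that the WebRTC helpers `IsNewerSequenceNumber` and `LatestSequenceNumber` behave along these lines.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in: true if `value` is newer than `prev`, treating the
// 16-bit sequence space as a circle. A value counts as newer when it is less
// than half the range ahead of `prev`.
bool IsNewerSeq(uint16_t value, uint16_t prev) {
  const uint16_t diff = static_cast<uint16_t>(value - prev);
  if (diff == 0x8000) return value > prev;  // exactly half the range: tie-break
  return diff != 0 && diff < 0x8000;
}

// Hypothetical stand-in: returns whichever of the two numbers is newer.
uint16_t LatestSeq(uint16_t a, uint16_t b) {
  return IsNewerSeq(a, b) ? a : b;
}
```

With this definition, sequence number 2 is newer than 65534, which is what keeps a frame that straddles the wrap point in the right order.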

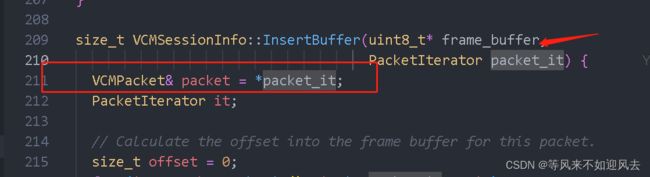

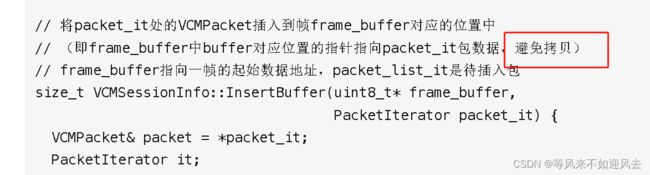
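The return values of InsertPacket seen above are 0 for an empty packet, -1 when the packet limit is reached, -2 for a duplicate, -3 for a sequence number outside the frame boundaries, and otherwise the number of bytes written. A caller could interpret them roughly as follows; `DescribeInsertResult` is a hypothetical helper for illustration only, not part of WebRTC.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper: turns an InsertPacket return value into a message.
std::string DescribeInsertResult(int result) {
  switch (result) {
    case -1: return "too many packets in this frame";
    case -2: return "duplicate packet";
    case -3: return "sequence number outside frame boundaries";
    default: break;
  }
  if (result < 0) return "unknown error";
  // Zero covers the empty-packet case: nothing was written to the buffer.
  return "inserted " + std::to_string(result) + " bytes";
}
```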

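- A forward iterator to the packet is passed in:

Is InsertBuffer meant to avoid memory copies? Not quite: each payload is still copied once into frame_buffer (through Insert), and the packet's dataPtr is then repointed at that copy.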

size_t VCMSessionInfo::InsertBuffer(uint8_t* frame_buffer,

PacketIterator packet_it) {

VCMPacket& packet = *packet_it; // fetch the packet that was just inserted

PacketIterator it; // forward iterator

// Calculate the offset into the frame buffer for this packet.

size_t offset = 0;

// walk the list forward up to the inserted packet

for (it = packets_.begin(); it != packet_it; ++it)

// sum the sizes of all preceding packets to get this packet's offset

offset += (*it).sizeBytes;

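// frame_buffer collects the payloads of all packets back to back, so the decoder gets one contiguous buffer; the payload itself is still copied in by Insert() further down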

// Set the data pointer to pointing to the start of this packet in the

// frame buffer.

const uint8_t* packet_buffer = packet.dataPtr;

packet.dataPtr = frame_buffer + offset;

H.264 STAP-A packets are handled specially: the 2-byte length field in front of each NAL unit is removed and, where needed, a start code is added

// We handle H.264 STAP-A packets in a special way as we need to remove the

// two length bytes between each NAL unit, and potentially add start codes.

// TODO(pbos): Remove H264 parsing from this step and use a fragmentation

// header supplied by the H264 depacketizer.

const size_t kH264NALHeaderLengthInBytes = 1;

#ifdef OWT_ENABLE_H265

const size_t kH265NALHeaderLengthInBytes = 2;

const auto* h265 =

absl::get_if<RTPVideoHeaderH265>(&packet.video_header.video_type_header);

#endif

const size_t kLengthFieldLength = 2;

const auto* h264 =

absl::get_if<RTPVideoHeaderH264>(&packet.video_header.video_type_header);

if (h264 && h264->packetization_type == kH264StapA) {

size_t required_length = 0;

const uint8_t* nalu_ptr = packet_buffer + kH264NALHeaderLengthInBytes;

while (nalu_ptr < packet_buffer + packet.sizeBytes) {

size_t length = BufferToUWord16(nalu_ptr);

required_length +=

length + (packet.insertStartCode ? kH264StartCodeLengthBytes : 0);

nalu_ptr += kLengthFieldLength + length;

}

ShiftSubsequentPackets(packet_it, required_length);

nalu_ptr = packet_buffer + kH264NALHeaderLengthInBytes;

uint8_t* frame_buffer_ptr = frame_buffer + offset;

while (nalu_ptr < packet_buffer + packet.sizeBytes) {

size_t length = BufferToUWord16(nalu_ptr);

nalu_ptr += kLengthFieldLength;

frame_buffer_ptr += Insert(nalu_ptr, length, packet.insertStartCode,

const_cast<uint8_t*>(frame_buffer_ptr));

nalu_ptr += length;

}

packet.sizeBytes = required_length;

return packet.sizeBytes;

}

#ifdef OWT_ENABLE_H265

else if (h265 && h265->packetization_type == kH265AP) {

// Similar to H264, for H265 aggregation packets, we rely on jitter buffer

// to remove the two length bytes between each NAL unit, and potentially add

// start codes.

size_t required_length = 0;

const uint8_t* nalu_ptr =

packet_buffer + kH265NALHeaderLengthInBytes; // skip payloadhdr

while (nalu_ptr < packet_buffer + packet.sizeBytes) {

size_t length = BufferToUWord16(nalu_ptr);

required_length +=

length + (packet.insertStartCode ? kH265StartCodeLengthBytes : 0);

nalu_ptr += kLengthFieldLength + length;

}

ShiftSubsequentPackets(packet_it, required_length);

nalu_ptr = packet_buffer + kH265NALHeaderLengthInBytes;

uint8_t* frame_buffer_ptr = frame_buffer + offset;

while (nalu_ptr < packet_buffer + packet.sizeBytes) {

size_t length = BufferToUWord16(nalu_ptr);

nalu_ptr += kLengthFieldLength;

// since H265 shares the same start code as H264, use the same Insert

// function to handle start code.

frame_buffer_ptr += Insert(nalu_ptr, length, packet.insertStartCode,

const_cast<uint8_t*>(frame_buffer_ptr));

nalu_ptr += length;

}

packet.sizeBytes = required_length;

return packet.sizeBytes;

}

#endif

ShiftSubsequentPackets(

packet_it, packet.sizeBytes +

(packet.insertStartCode ? kH264StartCodeLengthBytes : 0));

packet.sizeBytes =

Insert(packet_buffer, packet.sizeBytes, packet.insertStartCode,

const_cast<uint8_t*>(packet.dataPtr));

return packet.sizeBytes;

}
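The STAP-A branch is easier to follow on a small standalone example. The sketch below converts a STAP-A payload (1-byte STAP-A header, then repeated 2-byte big-endian length plus NAL unit) into start-code-delimited form. `StapAToAnnexB` is a hypothetical helper: it writes into a fresh `std::vector` rather than into the shared frame buffer, always inserts a 4-byte start code, and adds bounds checks that the code quoted above does not show.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: rewrites [hdr][len][NALU][len][NALU]... as
// [00 00 00 01][NALU][00 00 00 01][NALU]...
std::vector<uint8_t> StapAToAnnexB(const std::vector<uint8_t>& payload) {
  static const uint8_t kStartCode[] = {0, 0, 0, 1};
  const size_t kNalHeaderLength = 1;    // STAP-A header byte to skip
  const size_t kLengthFieldLength = 2;  // big-endian NAL unit size
  std::vector<uint8_t> out;
  size_t pos = kNalHeaderLength;
  while (pos + kLengthFieldLength <= payload.size()) {
    const size_t length =
        (static_cast<size_t>(payload[pos]) << 8) | payload[pos + 1];
    pos += kLengthFieldLength;
    if (length > payload.size() - pos) break;  // truncated NAL unit: stop
    out.insert(out.end(), kStartCode, kStartCode + sizeof(kStartCode));
    out.insert(out.end(), payload.data() + pos, payload.data() + pos + length);
    pos += length;
  }
  return out;
}
```

Each NAL unit loses its 2-byte length field and gains a 4-byte start code, so the output is usually longer than the input. That is why the original code computes required_length first and shifts the following packets before writing.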

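Checking whether the frame is complete: UpdateCompleteSession

- The session must have both the first and the last packet,

- If InSequence returns false for any adjacent pair, the frame is incomplete.

- In other words, InSequence must return true for every adjacent pair: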

void VCMSessionInfo::UpdateCompleteSession() {

if (HaveFirstPacket() && HaveLastPacket()) {

// Do we have all the packets in this session?

bool complete_session = true;

PacketIterator it = packets_.begin();

PacketIterator prev_it = it;

++it;

for (; it != packets_.end(); ++it) {

// On the first iteration, it is the second packet and prev_it is the first:

if (!InSequence(it, prev_it)) {

complete_session = false;

break;

}

// prev_it advances to where it is; after the first iteration it is the second packet

prev_it = it;

}

complete_ = complete_session;

}

}

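InSequence returning true means the two packets are consecutive

// Determine and update whether the frame is complete (assembly finished), i.e. check whether there are holes (discontinuities) between the entries in packets_

- Why does equality also count as consecutive?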

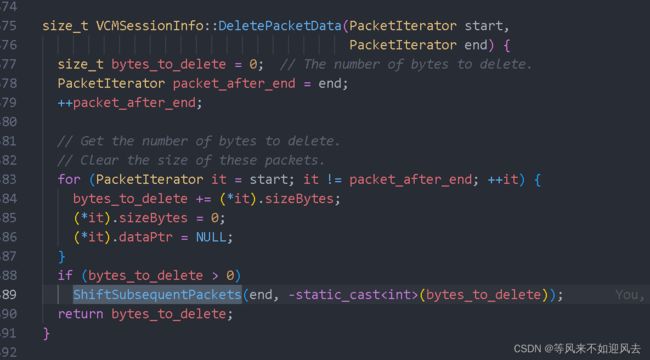
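The gap check can be sketched on bare sequence numbers. `Follows` and `HasNoGaps` below are hypothetical helpers, not WebRTC code. On the equality question, my reading (an assumption, not something the code quoted here confirms) is that the equality case compares the two iterators, so a packet is trivially in sequence with itself; the sketch sidesteps that case because a list with a single entry has no adjacent pair to compare.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// True when `seq` directly follows `prev`, with 16-bit wraparound
// (65535 is followed by 0).
bool Follows(uint16_t prev, uint16_t seq) {
  return static_cast<uint16_t>(prev + 1) == seq;
}

// Hypothetical completeness check: `seq_nums` is assumed to be sorted in
// sequence order already, as packets_ is after InsertPacket.
bool HasNoGaps(const std::vector<uint16_t>& seq_nums) {
  for (size_t i = 1; i < seq_nums.size(); ++i) {
    if (!Follows(seq_nums[i - 1], seq_nums[i])) return false;  // hole found
  }
  return true;
}
```

In the original function this check only runs once both the first and the last packet are known, so a gap-free list then really means a complete frame.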

ShiftSubsequentPackets moves the packets that follow:

void VCMSessionInfo::ShiftSubsequentPackets(PacketIterator it,

int steps_to_shift) {

// start from the packet right after it

++it;

if (it == packets_.end()) // nothing follows the inserted packet, so there is nothing to shift

return;

// the next packet is the first one to move; take the pointer to its payload

uint8_t* first_packet_ptr = const_cast<uint8_t*>((*it).dataPtr);

int shift_length = 0; // total number of bytes to move

Compute the total length to move, and advance the data pointers beforehand

// Calculate the total move length and move the data pointers in advance.

// walk all packets after the inserted one

for (; it != packets_.end(); ++it) {

// accumulate each packet's size into the total

shift_length += (*it).sizeBytes;

// repoint each packet's data pointer: every following payload moves back by steps_to_shift bytes

if ((*it).dataPtr != NULL)

(*it).dataPtr += steps_to_shift;

}

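// move the payload bytes of all following packets (shift_length bytes starting at first_packet_ptr) so that they start at first_packet_ptr + steps_to_shift; memmove is used because the two ranges overlap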

memmove(first_packet_ptr + steps_to_shift, first_packet_ptr, shift_length);

}
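The effect of the shift is easiest to see on a tiny buffer. This is a minimal sketch, assuming the payloads sit back to back in one `std::vector`; `ShiftTail` is a hypothetical helper that models only the memmove step (not the per-packet pointer updates) and adds a capacity check that the original function does not perform.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical model: the bytes in [tail_offset, tail_offset + tail_length)
// belong to the packets after the insertion point. Moves them `steps` bytes
// toward the end of `buffer` to open a gap for the new payload.
// Returns false if the buffer is too small to hold the shifted tail.
bool ShiftTail(std::vector<uint8_t>& buffer, size_t tail_offset,
               size_t tail_length, size_t steps) {
  if (tail_offset + tail_length + steps > buffer.size()) return false;
  // memmove rather than memcpy: source and destination ranges overlap.
  std::memmove(buffer.data() + tail_offset + steps,
               buffer.data() + tail_offset, tail_length);
  return true;
}
```

After the call, the bytes at `tail_offset` through `tail_offset + steps - 1` are free to be overwritten with the newly inserted packet's payload.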