cs224w_colab5.py 代码精读

精读完代码后的感悟:

First let's take a look at the general structure of a heterogeneous GNN layer by working through an example:

Let's assume we have a graph G, which contains two node types a and b, and three message types m1=(a,r1,a), m2=(a,r2,b) and m3=(a,r3,b). Note: during message passing we view each message as (src, relation, dst), where messages "flow" from src to dst node types. For example, during message passing, updating node type b relies on two different message types m2 and m3.(本实验对应['paper', 'author', 'paper']及"paper", "subject", "paper")

如:m2-(hetero_graph.edge_index['paper', 'author', 'paper'])

m3-(hetero_graph.edge_index['paper', 'subject', 'paper'])

由此,是否可以简单理解为依赖两种不同的类型的边训练网络,以此区别于之前的单一类型边的同构网络。

When applying message passing in heterogenous graphs

class HeteroGNNConv(pyg_nn.MessagePassing):

def forward(

return self.propagate(edge_index, size=size, node_feature_src=node_feature_src,

node_feature_dst=node_feature_dst)#, res_n_id=res_n_id #消息传递

we seperately apply message passing over each message type.

for message_key, message_type in edge_indices.items():

src_type, edge_type, dst_type = message_key

node_feature_src = node_features[src_type]

node_feature_dst = node_features[dst_type]

edge_index = edge_indices[message_key]

message_type_emb[message_key] = (

self.convs[message_key]( #HeteroGNNConv(),{('paper', 'author', 'paper'): HeteroGNNConv(), ('paper', 'subject', 'paper'): HeteroGNNConv()}

node_feature_src,

node_feature_dst,

edge_index,

)

)Therefore, for the graph G, a heterogeneous GNN layer contains three seperate Heterogeneous Message Passing layers (HeteroGNNConv in this Colab), where each HeteroGNNConv layer performs message passing and aggregation with respect to only one message type. Since a message type is viewed as (src, relation, dst) and messages "flow" from src to dst, each HeteroGNNConv layer only computes embeddings for the dst nodes of a given message type. For example, the HeteroGNNConv layer for message type m2 outputs updated embedding representations only for node's with type b(本实验中仅有一种节点类型node:'paper').

自己写是不可能了,只能抄完答案看看为啥这么写,代码参考cs224w(图机器学习)2021冬季课程学习笔记18 Colab 4:异质图_cs224w - colab4_诸神缄默不语的博客-CSDN博客

args = {

'device': torch.device('cuda' if torch.cuda.is_available() else 'cpu'),

'hidden_size': 64,

'epochs': 100,

'weight_decay': 1e-5, #weight_decay (float, optional) – weight decay (L2 penalty) (default: 0) ??

# https://pytorch.org/docs/1.2.0/optim.html?highlight=torch%20optim%20adam#torch.optim.Adam

'lr': 0.003,

'attn_size': 32, #HeteroGNNWrapperConv(deepsnap.hetero_gnn.HeteroConv):

# 5. The first linear layer should have out_features as args['attn_size']第一个线性层的 out_features 应为 args['attn_size']。 ???

}HAN:( Heterogeneous Graph Attention Network)中关于ACM.mat的说明如下:

We extract papers published in KDD, SIGMOD, SIGCOMM, MobiCOMM, and VLDB and divide the papers into three classes (Database, Wireless Communication, Data Mining). Then we construct a heterogeneous graph that comprises 3025 papers (P), 5835 authors (A) and 56 subjects (S). Paper features correspond to elements of a bag-of-words represented of keywords. We employ the meta-path set {PAP, PSP} to perform experiments. Here we label the papers according to the conference they published

colab5中关于数据集的说明:

We will use the ACM(3025) dataset in our node property prediction task, which is proposed in HAN (Wang et al. (2019)) and our dataset is extracted from DGL's ACM.mat.

The original ACM dataset has three node types and two edge (relation) types. For simplicity, we simplify the heterogeneous graph to one node type and two edge types (shown below). This means that in our heterogeneous graph, we have one node type (paper) and two message types (paper, author, paper) and (paper, subject, paper).

# Load the data

data = torch.load("acm.pkl")

# Message types

message_type_1 = ("paper", "author", "paper") #pap

message_type_2 = ("paper", "subject", "paper") #psp

# Dictionary of edge indices

edge_index = {}

edge_index[message_type_1] = data['pap']

edge_index[message_type_2] = data['psp']

# Dictionary of node features

node_feature = {}

node_feature["paper"] = data['feature']

# Dictionary of node labels

node_label = {}

node_label["paper"] = data['label']

# Load the train, validation and test indices

train_idx = {"paper": data['train_idx'].to(args['device'])}

val_idx = {"paper": data['val_idx'].to(args['device'])}

test_idx = {"paper": data['test_idx'].to(args['device'])}data['pap']

Out[3]:

tensor([[ 0, 0, 0, ..., 3024, 3024, 3024],

[ 8, 20, 51, ..., 2948, 2983, 2991]])

data['pap'].shape

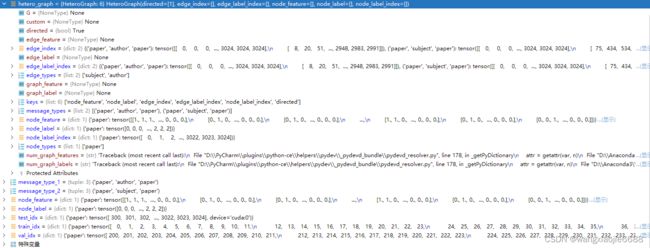

Out[4]: torch.Size([2, 26256]) #这个2代表两行,列代表的是文章与文章之间的引用 hetero_graph = HeteroGraph(# 塞进去后生成异构图

node_feature=node_feature,

node_label=node_label,

edge_index=edge_index,

directed=True

)

print(f"ACM heterogeneous graph: {hetero_graph.num_nodes()} nodes, {hetero_graph.num_edges()} edges")

ACM heterogeneous graph: {'paper': 3025} nodes, {('paper', 'author', 'paper'): 26256, ('paper', 'subject', 'paper'): 2207736} edges

for key in hetero_graph.edge_index:

edge_index = hetero_graph.edge_index[key]

adj = SparseTensor(row=edge_index[0], col=edge_index[1], sparse_sizes=(hetero_graph.num_nodes('paper'), hetero_graph.num_nodes('paper'))) #稀疏矩阵转换

hetero_graph.edge_index[key] = adj.t().to(args['device'])

adj

Out[12]:

SparseTensor(row=tensor([ 0, 0, 0, ..., 3024, 3024, 3024]),

col=tensor([ 8, 20, 51, ..., 2948, 2983, 2991]),

size=(3025, 3025), nnz=26256, density=0.29%)model = HeteroGNN(hetero_graph, args, aggr="mean").to(args['device']) #生成模型

class HeteroGNN(torch.nn.Module):

def __init__(self, hetero_graph, args, aggr="mean"):

convs1 = generate_convs(hetero_graph, HeteroGNNConv, self.hidden_size, first_layer=True) #先实例化 HeteroGNNConv 随后再将卷积层传入

class HeteroGNNConv(pyg_nn.MessagePassing):

def __init__(self, in_channels_src, in_channels_dst, out_channels):

super(HeteroGNNConv, self).__init__(aggr="mean")

self.in_channels_src = in_channels_src #这里对应两组输入src,dst 输出为一组 对应上文中的两组类型的特征输入进 ????

self.in_channels_dst = in_channels_dst

self.out_channels = out_channels

# To simplify implementation, please initialize both self.lin_dst

# and self.lin_src out_features to out_channels

## 1. Initialize the 3 linear layers.

## 2. Think through the connection between the mathematical

## definition of the update rule and torch linear layers!

self.lin_dst = nn.Linear(in_channels_dst, out_channels) # W_d^{(l)[m]}

self.lin_src = nn.Linear(in_channels_src, out_channels) # W_s^{(l)[m]}

self.lin_update = nn.Linear(out_channels * 2, out_channels) # W^{(l)[m]} #未涉及到卷积,只有线性层

def forward(

self,

node_feature_src,

node_feature_dst,

edge_index,

size=None

):

## 1. Unlike Colabs 3 and 4, we just need to call self.propagate with proper/custom arguments.

return self.propagate(edge_index, size=size, #消息传递

node_feature_src=node_feature_src,

node_feature_dst=node_feature_dst)#, res_n_id=res_n_iddef generate_convs(hetero_graph, conv, hidden_size, first_layer=False):

# TODO: Implement this function that returns a dictionary of `HeteroGNNConv`

# layers where the keys are message types. `hetero_graph` is deepsnap `HeteroGraph`

# object and the `conv` is the `HeteroGNNConv`.

############# Your code here #############

## (~9 lines of code)

## Note:

## 1. See the hints above!

## 2. conv is of type `HeteroGNNConv`

for message_type in hetero_graph.message_types: #[('paper', 'author', 'paper'), ('paper', 'subject', 'paper')]

if first_layer is True:

src_type = message_type[0]

dst_type = message_type[2]

src_size = hetero_graph.num_node_features(src_type)

dst_size = hetero_graph.num_node_features(dst_type)

convs[message_type] = conv(src_size, dst_size, hidden_size) #参数传递给卷积层,构建卷积层

else:

convs[message_type] = conv(hidden_size, hidden_size, hidden_size)

return convs # HeteroGNNConv

print(convs)

{('paper', 'author', 'paper'): HeteroGNNConv(), ('paper', 'subject', 'paper'): HeteroGNNConv()}

class HeteroGNN(torch.nn.Module):

def __init__(self, hetero_graph, args, aggr="mean"):

super(HeteroGNN, self).__init__()

.................................................

convs1 = generate_convs(hetero_graph, HeteroGNNConv, self.hidden_size, first_layer=True)

convs2 = generate_convs(hetero_graph, HeteroGNNConv, self.hidden_size)

self.convs1 = HeteroGNNWrapperConv(convs1, args, aggr=self.aggr)

self.convs2 = HeteroGNNWrapperConv(convs2, args, aggr=self.aggr)

self.aggr

Out[5]: 'mean' 本次没用到 if self.aggr == "attn":

class HeteroGNNWrapperConv(deepsnap.hetero_gnn.HeteroConv):

def __init__(self, convs, args, aggr="mean"):

super(HeteroGNNWrapperConv, self).__init__(convs, None)

if self.aggr == "attn":

## Note:

## 1. Initialize self.attn_proj, where self.attn_proj should include

## two linear layers. Note, make sure you understand

## which part of the equation self.attn_proj captures.

## 2. You should use nn.Sequential for self.attn_proj

## 3. nn.Linear and nn.Tanh are useful.

## 4. You can model a weight vector (rather than matrix) by using:

## nn.Linear(some_size, 1, bias=False).

## 5. The first linear layer should have out_features as args['attn_size']

## 6. You can assume we only have one "head" for the attention.

## 7. We recommend you to implement the mean aggregation first. After

## the mean aggregation works well in the training, then you can

## implement this part.

if self.aggr == "attn": #

self.attn_proj = nn.Sequential(

nn.Linear(args['hidden_size'], args['attn_size']),

nn.Tanh(), #https://pytorch.org/docs/stable/generated/torch.nn.Tanh.html#torch.nn.Tanh

nn.Linear(args['attn_size'], 1, bias=False),

)class HeteroGNN(torch.nn.Module):

def __init__(self, hetero_graph, args, aggr="mean"):

super(HeteroGNN, self).__init__()

.................................................

self.convs1 = HeteroGNNWrapperConv(convs1, args, aggr=self.aggr)

self.convs2 = HeteroGNNWrapperConv(convs2, args, aggr=self.aggr)

for node_type in hetero_graph.node_types:

self.bns1[node_type] = torch.nn.BatchNorm1d(self.hidden_size, eps=1)

self.bns2[node_type] = torch.nn.BatchNorm1d(self.hidden_size, eps=1)

self.post_mps[node_type] = nn.Linear(self.hidden_size, hetero_graph.num_node_labels(node_type))

self.relus1[node_type] = nn.LeakyReLU()

self.relus2[node_type] = nn.LeakyReLU()

torch.nn.BatchNorm1d(self.hidden_size, eps=1)

r"""Applies Batch Normalization over a 2D or 3D input as described in the paper `Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift `__ .

#num_features: number of features or channels :math:`C` of the input

#eps: a value added to the denominator for numerical stability. Default: 1e-5 if 'IS_GRADESCOPE_ENV' not in os.environ:

...........................................

model = HeteroGNN(hetero_graph, args, aggr="mean").to(args['device'])

optimizer = torch.optim.Adam(model.parameters(), lr=args['lr'], weight_decay=args['weight_decay'])

for epoch in range(args['epochs']):

loss = train(model, optimizer, hetero_graph, train_idx)

#执行model(HeteroGNN)的反向传播函数 如下

accs, best_model, best_val = test(model, hetero_graph, [train_idx, val_idx, test_idx], best_model, best_val)

print(

f"Epoch {epoch + 1}: loss {round(loss, 5)}, "

f"train micro {round(accs[0][0] * 100, 2)}%, train macro {round(accs[0][1] * 100, 2)}%, "class HeteroGNN(torch.nn.Module):

.................................................

def forward(self, node_feature, edge_index):

# TODO: Implement the forward function. Notice that `node_feature` is

# a dictionary of tensors where keys are node types and values are

# corresponding feature tensors. The `edge_index` is a dictionary of

# tensors where keys are message types and values are corresponding

# edge index tensors (with respect to each message type)

x = node_feature

## 1. `deepsnap.hetero_gnn.forward_op` can be helpful.

x = self.convs1(x, edge_index)# 特征及边 HeteroGNNWrapperConv 每次执行卷积操作都会执行forward函数

x = forward_op(x, self.bns1)

x = forward_op(x, self.relus1)

x = self.convs2(x, edge_index)

x = forward_op(x, self.bns2)

x = forward_op(x, self.relus2)

x = forward_op(x, self.post_mps)

return x

self.convs1

Out[2]:

HeteroGNNWrapperConv(

(modules): ModuleList(

(0): HeteroGNNConv()

(1): HeteroGNNConv()

)

)

print(edge_index)

{('paper', 'author', 'paper'): SparseTensor(row=tensor([ 0, 0, 0, ..., 3024, 3024, 3024], device='cuda:0'),

col=tensor([ 8, 20, 51, ..., 2948, 2983, 2991], device='cuda:0'),

size=(3025, 3025), nnz=26256, density=0.29%), ('paper', 'subject', 'paper'): SparseTensor(row=tensor([ 0, 0, 0, ..., 3024, 3024, 3024], device='cuda:0'),

col=tensor([ 75, 434, 534, ..., 3020, 3021, 3022], device='cuda:0'),

size=(3025, 3025), nnz=2207736, density=24.13%)}class HeteroGNNWrapperConv(deepsnap.hetero_gnn.HeteroConv):

........................................

def forward(self, node_features, edge_indices):

message_type_emb = {}

for message_key, message_type in edge_indices.items():

src_type, edge_type, dst_type = message_key

node_feature_src = node_features[src_type]

node_feature_dst = node_features[dst_type]

edge_index = edge_indices[message_key]

message_type_emb[message_key] = (

self.convs[message_key]( #{('paper', 'author', 'paper'): HeteroGNNConv(), ('paper', 'subject', 'paper'): HeteroGNNConv()}

node_feature_src,

node_feature_dst,

edge_index,

)

)

node_emb = {dst: [] for _, _, dst in message_type_emb.keys()}

mapping = {}

for (src, edge_type, dst), item in message_type_emb.items():

mapping[len(node_emb[dst])] = (src, edge_type, dst)

node_emb[dst].append(item)

self.mapping = mapping

for node_type, embs in node_emb.items():

if len(embs) == 1:

node_emb[node_type] = embs[0]

else:

node_emb[node_type] = self.aggregate(embs) #由此聚集信息,如下部分的代码段

return node_emb

message_type_emb[message_key].shape # message_key ('paper', 'subject', 'paper')

Out[12]: torch.Size([3025, 64])

mapping

Out[40]: {0: ('paper', 'author', 'paper'), 1: ('paper', 'subject', 'paper')}

def aggregate(self, xs):

# TODO: Implement this function that aggregates all message type results.

# Here, xs is a list of tensors (embeddings) with respect to message

# type aggregation results.

if self.aggr == "mean":

## Note:

## 1. Explore the function parameter `xs`!

if self.aggr == "mean":

x = torch.stack(xs, dim=-1) #torch.Size([3025, 64, 2]) 在维度上连接(concatenate)若干个张量。(这些张量形状相同)。

return x.mean(dim=-1)

elif self.aggr == "attn":

N = xs[0].shape[0] # Number of nodes for that node type

M = len(xs) # Number of message types for that node type

x = torch.cat(xs, dim=0).view(M, N, -1) # M * N * D

z = self.attn_proj(x).view(M, N) # M * N * 1

z = z.mean(1) # M * 1

alpha = torch.softmax(z, dim=0) # M * 1

# Store the attention result to self.alpha as np array

self.alpha = alpha.view(-1).data.cpu().numpy()

alpha = alpha.view(M, 1, 1)

x = x * alpha

return x.sum(dim=0)torch.stack()和torch.cat()都是用于将多个张量合并成一个张量的函数,但它们有一些区别。

1. 维度要求:

- torch.stack()要求输入的张量序列具有相同的形状,并且会在新的维度上进行堆叠。例如,如果输入是形状为(2, 3)的张量序列,那么输出将是形状为(3, 2, 3)的张量。

- torch.cat()要求输入的张量在除了指定的拼接维度之外的所有维度上具有相同的形状。它会在指定的维度上进行拼接。例如,如果输入是形状为(2, 3)的张量序列,且在维度0上进行拼接,那么输出将是形状为(4, 3)的张量。

2. 新维度的添加:

- torch.stack()会在新的维度上进行堆叠,新维度的大小就是输入张量的数量。

- torch.cat()不会添加新的维度,它只是在指定的维度上进行拼接。

3. 使用场景:

- torch.stack()通常用于将多个张量堆叠成一个更高维度的张量,例如将一批样本堆叠成一个批次输入神经网络。

- torch.cat()通常用于在指定维度上拼接张量,例如将多个特征张量拼接成一个更大的特征张量。

总之,torch.stack()和torch.cat()在合并张量时有一些细微的区别,具体使用哪个函数取决于你的需求和输入张量的形状。

class HeteroGNN(torch.nn.Module):

def forward(self, node_feature, edge_index):

# TODO: Implement the forward function. Notice that `node_feature` is

# a dictionary of tensors where keys are node types and values are

# corresponding feature tensors. The `edge_index` is a dictionary of

# tensors where keys are message types and values are corresponding

# edge index tensors (with respect to each message type)

x = node_feature

## 1. `deepsnap.hetero_gnn.forward_op` can be helpful.

x = self.convs1(x, edge_index)

x = forward_op(x, self.bns1)

x = forward_op(x, self.relus1)

x = self.convs2(x, edge_index) #torch.Size([3025, 64])

x = forward_op(x, self.bns2) #torch.Size([3025, 64])

x = forward_op(x, self.relus2)#

x = forward_op(x, self.post_mps)

return x #最终预测得到的张量集合 preds = model(hetero_graph.node_feature, hetero_graph.edge_index)def forward_op(x, module_dict, **kwargs):

#对字典里的张量进行操作,这里由于存在key值,不能直接进行relu及bns

r"""A helper function for the heterogeneous operations. Given a dictionary input

`x`, it will return a dictionary with the same keys and the values applied by the

corresponding values of the `module_dict` with specified parameters. The keys in `x`

are same as the keys in the `module_dict`. If `module_dict` is not a dictionary,

it is assumed to be a single value.

Args:

x (Dict[str, Tensor]): A dictionary that the value of each item is a tensor.

module_dict (:class:`torch.nn.ModuleDict`): The value of the `module_dict`

will be fed with each value in `x`.

**kwargs (optional): Parameters that will be passed into each value of the

`module_dict`.

"""

if not isinstance(x, dict):

raise ValueError("The input x should be a dictionary.")

res = {}

if not isinstance(module_dict, dict) and not isinstance(module_dict, nn.ModuleDict):

for key in x:

res[key] = module_dict(x[key], **kwargs)

else:

for key in x:

res[key] = module_dict[key](x[key], **kwargs)

return res

def train(model, optimizer, hetero_graph, train_idx):

model.train()

optimizer.zero_grad()

preds = model(hetero_graph.node_feature, hetero_graph.edge_index)

#然后计算损失梯度等

############# Your code here #############

## Note:

## 1. Compute the loss here

## 2. `deepsnap.hetero_graph.HeteroGraph.node_label` is useful

loss = model.loss(preds, hetero_graph.node_label, train_idx)

##########################################

loss.backward()

optimizer.step()

return loss.item()额,有些抽象,一个类里面包含了多个函数及卷积等等操作,反复横跳,调用卷积时又跳到对应的forward函数

大致运行流程如下:

args = {

'device': torch.device('cuda' if torch.cuda.is_available() else 'cpu'),

'hidden_size': 64,

'epochs': 100,

'weight_decay': 1e-5, #weight_decay (float, optional) – weight decay (L2 penalty) (default: 0) ??

# https://pytorch.org/docs/1.2.0/optim.html?highlight=torch%20optim%20adam#torch.optim.Adam

'lr': 0.003,

'attn_size': 32, #HeteroGNNWrapperConv(deepsnap.hetero_gnn.HeteroConv):

# 5. The first linear layer should have out_features as args['attn_size']第一个线性层的 out_features 应为 args['attn_size']。 ???

}

data = torch.load("acm.pkl")

model = HeteroGNN(hetero_graph, args, aggr="mean").to(args['device'])

hetero_graph = HeteroGraph( # 塞进去后生成异构图

node_feature=node_feature,

node_label=node_label,

edge_index=edge_index,

directed=True

)

class HeteroGNN(torch.nn.Module):

convs1 = generate_convs(hetero_graph, HeteroGNNConv, self.hidden_size, first_layer=True)

def generate_convs(hetero_graph, conv, hidden_size, first_layer=False):

convs[message_type] = conv(hidden_size, hidden_size, hidden_size)

class HeteroGNNConv(pyg_nn.MessagePassing):

self.convs1 = HeteroGNNWrapperConv(convs1, args, aggr=self.aggr)

class HeteroGNNWrapperConv(deepsnap.hetero_gnn.HeteroConv):

optimizer = torch.optim.Adam(model.parameters(), lr=args['lr'], weight_decay=args['weight_decay'])

for epoch in range(args['epochs']):

loss = train(model, optimizer, hetero_graph, train_idx)

def train(model, optimizer, hetero_graph, train_idx):

model.train()##训练模式

preds = model(hetero_graph.node_feature, hetero_graph.edge_index)#model = HeteroGNN(hetero_graph, args, aggr="mean").to(args['device'])

class HeteroGNN(torch.nn.Module):

def forward(self, node_feature, edge_index):

x = self.convs1(x, edge_index) # self.convs1 = HeteroGNNWrapperConv(convs1, args, aggr=self.aggr)

class HeteroGNNWrapperConv(deepsnap.hetero_gnn.HeteroConv):

def forward(self, node_features, edge_indices):

....................................

message_type_emb[message_key] = (

self.convs[message_key]( #self.convs = HeteroGNNConv(),

# {('paper', 'author', 'paper'): HeteroGNNConv(), ('paper', 'subject', 'paper'): HeteroGNNConv()}

node_feature_src,

node_feature_dst,

edge_index,

)

)

class HeteroGNNConv(pyg_nn.MessagePassing):

def forward(self,node_feature_src,node_feature_dst,edge_index,size=None):

return self.propagate(edge_index, size=size, # 消息传递 传给 message_type_emb[message_key]

node_feature_src=node_feature_src,

node_feature_dst=node_feature_dst) # , res_n_id=res_n_id

....................................................

node_emb[node_type] = self.aggregate(embs)

def aggregate(self, xs):

x = torch.stack(xs, dim=-1)

return x.mean(dim=-1)

return node_emb # x = self.convs1(x, edge_index)

x = forward_op(x, self.bns1)

x = forward_op(x, self.bns1)

x = forward_op(x, self.relus1)

x = self.convs2(x, edge_index) # torch.Size([3025, 64])

x = forward_op(x, self.bns2) # torch.Size([3025, 64])

x = forward_op(x, self.relus2) # #对字典里的张量进行操作,这里由于存在key值,不能直接进行relu及bns

x = forward_op(x, self.post_mps)

return x # preds = model(hetero_graph.node_feature, hetero_graph.edge_index)

loss = model.loss(preds, hetero_graph.node_label, train_idx)

def loss(self, preds, y, indices):

loss_func = F.cross_entropy

for node_type in preds:

idx = indices[node_type]

loss += loss_func(preds[node_type][idx], y[node_type][idx])

return loss #

loss.backward()

optimizer.step()

return loss.item() #loss = train(model, optimizer, hetero_graph, train_idx) #执行model(HeteroGNN)的反向传播函数

#以上进行完一轮训练

大体架构: HeteroGNN 中定义HeteroGNNWrapperConv ,HeteroGNNWrapperConv 中定义HeteroGNNConv(卷积) 没毛病

class HeteroGNN(torch.nn.Module):---- class HeteroGNNWrapperConv(deepsnap.hetero_gnn.HeteroConv):---class HeteroGNNConv(pyg_nn.MessagePassing):

附件为精简后的colab5中第二部分实验代码,数据集,上述流程图.py文件