(II) Integrating Presto with Hive

I. Before the integration

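Before Presto can be integrated with Hive, Hive (specifically its metastore) must be running. Hive depends on HDFS, and in this environment its metadata is stored in MySQL, so HDFS and MySQL have to be started before Hive.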
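Because each service depends on the one before it, it can help to wait until a service's port accepts connections before starting the next one. The following is a minimal sketch with a hypothetical helper, `wait_for_port`. It relies on bash's `/dev/tcp` pseudo-device, so it needs bash rather than plain `sh`. In the usage comments, the NameNode port 9000 is an assumption (check `fs.defaultFS` in `core-site.xml`), 3306 is MySQL's default port, and 9083 is the metastore port used in this article.

```shell
# Minimal sketch (requires bash for /dev/tcp): wait until HOST:PORT accepts
# TCP connections, retrying once per second for up to LIMIT seconds.
# wait_for_port is a hypothetical helper, not part of Hadoop, Hive or Presto.
wait_for_port() {
  local host=$1 port=$2 limit=$3 elapsed=0
  while [ "$elapsed" -lt "$limit" ]; do
    # Opening /dev/tcp/HOST/PORT only succeeds if something is listening.
    if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
      return 0
    fi
    sleep 1
    elapsed=$((elapsed + 1))
  done
  return 1   # gave up: port never became reachable
}

# Hypothetical usage on master, in the startup order described above:
#   ./start-all.sh && wait_for_port master 9000 60    # NameNode RPC (assumed port)
#   wait_for_port master 3306 30                       # MySQL default port
#   nohup ./hive --service metastore -p 9083 > /dev/null &
#   wait_for_port master 9083 60                       # Hive metastore (thrift)
```

The helper returns 0 as soon as the port answers, so a script can chain the steps with `&&` and stop at the first service that fails to come up.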

1. Start HDFS on the master node

[root@master sbin]# pwd

/opt/softWare/hadoop/hadoop-2.7.3/sbin

[root@master sbin]# ./start-all.sh

(start-all.sh also starts YARN; in Hadoop 2.x, start-dfs.sh starts only HDFS.)

2. Start MySQL

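Make sure the MySQL service is running. Logging in with the client confirms that it is reachable:

[root@master ~]# mysql -u root -p

3. Start the Hive metastore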

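From Hive's bin directory, start the metastore in the background on port 9083, the port Presto will connect to:

[root@master bin]# nohup ./hive --service metastore -p 9083 > /dev/null &

4. Check the Hive databases from the Hive CLI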

[root@master bin]# ./hive

which: no hbase in (/opt/softWare/hive/apache-hive-2.1.1-bin/bin:/opt/softWare/hive/apache-hive-2.1.1-bin/conf:/opt/softWare/spark/spark-2.20/bin:/opt/softWare/scala/scala-2.13.1/bin:/opt/softWare/kafka/kafka_2.10-0.10.2.1/bin:/opt/softWare/zookeeper/zookeeper-3.5.2/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/opt/softWare/hadoop/hadoop-2.7.3/bin:/opt/softWare/java8_151/jdk1.8.0_151/bin:/opt/softWare/java8_151/jdk1.8.0_151/jre/bin :/opt/softWare/mysql/bin:/opt/softWare/kafkaEagle/kafka-eagle-bin-1.2.4/kafka-eagle/bin:/opt/softWare/sqoop/sqoop-1.4.7/bin:/root/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/softWare/hive/apache-hive-2.1.1-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/softWare/hadoop/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Logging initialized using configuration in jar:file:/opt/softWare/hive/apache-hive-2.1.1-bin/lib/hive-common-2.1.1.jar!/hive-log4j2.properties Async: true

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

hive> show databases;

OK

db_hive

db_hive_test

default

Time taken: 0.991 seconds, Fetched: 3 row(s)

II. Integrating Hive

1. Create the catalog directory

On every node, create a catalog directory under /opt/softWare/presto/ln_presto/etc/.

[root@master etc]# mkdir catalog

2. Create the hive.properties file

On every node, create a hive.properties file in /opt/softWare/presto/ln_presto/etc/catalog/ containing the two properties below.
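Because this file must be identical on every node, it can also be generated by a small script. Here is a minimal sketch using a hypothetical helper, `write_hive_catalog`. It creates the catalog directory and writes the same two properties used in this step. Presto takes the catalog name from the file name, which is why the CLI later connects with `--catalog=hive`.

```shell
# Minimal sketch: create ETC_DIR/catalog/hive.properties for the Hive connector.
# write_hive_catalog is a hypothetical helper name.
write_hive_catalog() {
  local etc_dir=$1 metastore_uri=$2
  mkdir -p "$etc_dir/catalog" || return 1
  # Overwrites any existing hive.properties with exactly these two lines.
  cat > "$etc_dir/catalog/hive.properties" <<EOF
connector.name=hive-hadoop2
hive.metastore.uri=$metastore_uri
EOF
}

# Usage on each node (paths and URI from this article):
#   write_hive_catalog /opt/softWare/presto/ln_presto/etc thrift://master:9083
```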

connector.name=hive-hadoop2

hive.metastore.uri=thrift://master:9083

3. Edit jvm.config

On every node, edit jvm.config in /opt/softWare/presto/ln_presto/etc and add the HDFS user (the last line of the file below).
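Since jvm.config already exists, the change amounts to appending one JVM option. Here is a minimal sketch using a hypothetical helper, `add_hadoop_user`. It appends `-DHADOOP_USER_NAME=<user>` only when no such line is present yet, which makes it safe to re-run on every node.

```shell
# Minimal sketch: append -DHADOOP_USER_NAME=<user> to an existing jvm.config,
# unless a HADOOP_USER_NAME line is already there.
# add_hadoop_user is a hypothetical helper name.
add_hadoop_user() {
  local config=$1 user=$2
  # Re-running is harmless: an existing setting is left untouched.
  if grep -q '^-DHADOOP_USER_NAME=' "$config"; then
    return 0
  fi
  # If the file lacks a trailing newline, add one so the new option lands
  # on its own line (jvm.config holds one JVM option per line).
  if [ -s "$config" ] && [ -n "$(tail -c 1 "$config")" ]; then
    echo >> "$config"
  fi
  printf '%s\n' "-DHADOOP_USER_NAME=$user" >> "$config"
}

# Usage on each node:
#   add_hadoop_user /opt/softWare/presto/ln_presto/etc/jvm.config presto
```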

-server

-Xmx3G

-XX:+UseG1GC

-XX:G1HeapRegionSize=32M

-XX:+UseGCOverheadLimit

-XX:+ExplicitGCInvokesConcurrent

-XX:+HeapDumpOnOutOfMemoryError

-XX:+ExitOnOutOfMemoryError

-DHADOOP_USER_NAME=presto

4. Create the HDFS user

Create the presto user referenced above on every node.
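This step, like the others marked "on every node", has to be repeated on each machine. A loop over ssh can save some typing. Below is a minimal sketch. The helper `run_on_all_nodes` and the node names in `NODES` are hypothetical (the queries later in this article report 3 nodes, but their host names are not given), and it assumes passwordless ssh as root to each node. Setting `DRY_RUN=1` prints the commands instead of running them.

```shell
# Minimal sketch: run one command on every Presto node over ssh.
# NODES is a hypothetical host list; override it with your own node names.
NODES=${NODES:-"master slave1 slave2"}

run_on_all_nodes() {
  local node
  # $NODES is left unquoted on purpose so it splits into host names.
  for node in $NODES; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "ssh root@$node $*"
    else
      ssh "root@$node" "$@" || { echo "failed on $node" >&2; return 1; }
    fi
  done
}

# Usage (skips nodes where the user already exists):
#   run_on_all_nodes 'id presto >/dev/null 2>&1 || useradd presto'
```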

[root@master etc]# useradd presto

5. Restart Presto on all nodes

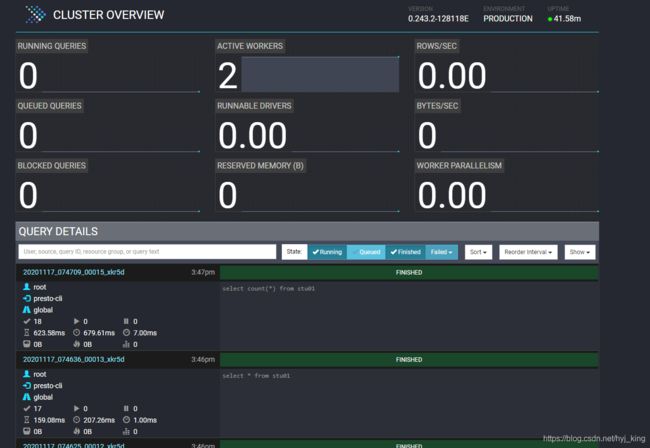

bin/launcher restart

III. Testing with the CLI

1. Move presto-cli-0.243.2-executable.jar into the bin directory, rename it, and make it executable

This only needs to be done on one node, because the CLI is just a client.
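The three commands in this step (move, rename, chmod) can be combined into one helper that fails cleanly if the jar is not where it is expected. Here is a minimal sketch; `install_presto_cli` is a hypothetical name.

```shell
# Minimal sketch: move the CLI jar into BIN_DIR as "presto" and make it
# executable for all users. install_presto_cli is a hypothetical helper name.
install_presto_cli() {
  local jar=$1 bin_dir=$2
  if [ ! -f "$jar" ]; then
    echo "not found: $jar" >&2
    return 1
  fi
  # Move and rename in a single step.
  mv "$jar" "$bin_dir/presto" || return 1
  chmod a+x "$bin_dir/presto"
}

# Usage, from /opt/softWare/presto on the client node:
#   install_presto_cli presto-cli-0.243.2-executable.jar ln_presto/bin
```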

[root@master presto]# mv presto-cli-0.243.2-executable.jar ln_presto/bin/

[root@master bin]# mv presto-cli-0.243.2-executable.jar presto

[root@master bin]# chmod a+x presto

2. Connect to Hive

[root@master bin]# ./presto --server 192.168.230.21:8099 --catalog=hive --schema=db_hive

presto:db_hive> show tables;

Table

-------

stu

stu01

(2 rows)

Query 20201117_072006_00008_xkr5d, FINISHED, 3 nodes

Splits: 36 total, 36 done (100.00%)

0:01 [2 rows, 42B] [3 rows/s, 82B/s]

presto:db_hive>

db_hive is a database that already exists in Hive.

presto:db_hive> select * from stu01;

id | name

----+------

(0 rows)

Query 20201117_074636_00013_xkr5d, FINISHED, 1 node

Splits: 17 total, 17 done (100.00%)

0:00 [0 rows, 0B] [0 rows/s, 0B/s]

presto:db_hive> select count(*) from stu01;

_col0

-------

0

(1 row)

Query 20201117_074709_00015_xkr5d, FINISHED, 1 node

Splits: 18 total, 18 done (100.00%)

0:01 [0 rows, 0B] [0 rows/s, 0B/s]

presto:db_hive>