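Deploying a Kubernetes Cluster with kubeadm (long post ahead)

K8s cluster deployment v1.17.2 (kubeadm)

There are two common ways to deploy a k8s cluster:

- With a batch automation tool (ansible/saltstack)

  This approach gives you the most control and makes later maintenance and management easier; it is the recommended way to deploy production k8s clusters.

- With kubeadm, the k8s project's own tool

  The advantage is that it is relatively simple to carry out.

  The drawback is that kubeadm performs many operations on your behalf, so it is hard to know exactly what it did, which can make later maintenance awkward.

  It works well for building test environments; for production, the more controllable approach is preferable.

This post deploys k8s 1.17.2. The latest version is deliberately not used because a cluster upgrade experiment will follow later.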

一:环境准备

1.1:服务器

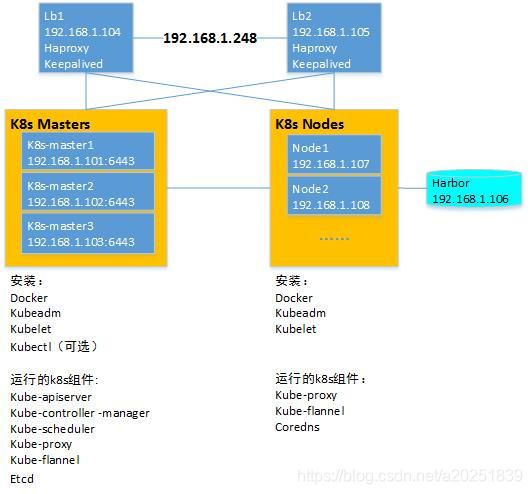

一个最基础的高可用k8s集群环境需要以下几个部分:

- 一套负载均衡:实现k8s管理端的高可用,以及node节点级别的业务负载均衡

- 至少3台master节点:3台master节点可以容错1台master节点的故障

- 若干个node节点:整个k8s集群中最需要高配置服务器的地方。

- 私有Docker镜像仓库:用于存储内部业务镜像和常用基础镜像。
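The "3 masters tolerate 1 failure" figure follows from majority quorum: in a kubeadm cluster with stacked etcd, a majority of members has to stay up. A minimal sketch of that arithmetic (the function names are illustrative and not part of the original post):

```shell
#!/bin/bash
# quorum N: smallest majority of N members
quorum() {
  echo $(( $1 / 2 + 1 ))
}

# fault_tolerance N: how many members can fail while a majority survives
fault_tolerance() {
  echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 1 2 3 4 5; do
  echo "masters=$n quorum=$(quorum "$n") tolerated_failures=$(fault_tolerance "$n")"
done
```

Note that 4 masters tolerate no more failures than 3, which is why odd member counts are the usual choice.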

The lab environment prepared for this post:

| Role/hostname | Physical network IP | Spec (CPU-RAM-disk) | OS |
|---|---|---|---|
| master1 | 192.168.1.101 | 2c-2g-50G | Ubuntu 18.04 |
| master2 | 192.168.1.102 | 2c-2g-50G | Ubuntu 18.04 |
| master3 | 192.168.1.103 | 2c-2g-50G | Ubuntu 18.04 |
| lb1 | 192.168.1.104 | 1c-1g-20G | Ubuntu 18.04 |
| lb2 | 192.168.1.105 | 1c-1g-20G | Ubuntu 18.04 |
| harbor | 192.168.1.106 | 1c-2g-100G | Ubuntu 18.04 |
| node1 | 192.168.1.107 | 4c-4g-100G | Ubuntu 18.04 |
| node2 | 192.168.1.108 | 4c-4g-100G | Ubuntu 18.04 |

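All servers have the necessary tools installed, hostnames set, and kernel parameters and resource limits configured. Pay particular attention to the following:

- Clocks must be synchronized;

- Hostnames must not be changed once they are set;

- Disable swap on all k8s master and worker nodes, or simply do not allocate swap when installing the OS.

For the detailed server preparation steps, see the blog posts 《centos系统初始化》 (CentOS system initialization) and 《ubuntu系统初始化》 (Ubuntu system initialization).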
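As a rough illustration of the swap requirement, the sketch below comments out swap entries in an fstab file so that swap stays off after a reboot. The helper name `comment_out_swap` is hypothetical, and the pattern assumes the usual fstab layout where the filesystem type field is the word `swap`.

```shell
# comment_out_swap FSTAB: comment out every active swap entry in the given fstab file
comment_out_swap() {
  sed -i -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}

# On a real node (as root), something like:
#   swapoff -a                      # turn swap off for the running system
#   comment_out_swap /etc/fstab     # keep it off after reboot
#   timedatectl status              # check that the clock is being synchronized
```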

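1.2: Network planning

The physical network needs no explanation. Within the k8s cluster, two networks have to be planned:

- Pod network: used to assign IPs to Pods

  Make this range generous so that there are enough addresses for pods.

- Service network: used to assign IPs to Services

  It can be smaller than the pod network, since there are far fewer services than pods.

The plan for this deployment:

| Network | CIDR |
|---|---|
| Physical network | 192.168.1.0/24 |
| Service network | 172.16.0.0/20 |
| Pod network | 10.10.0.0/16 |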
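To get a feel for how large these ranges are, the sketch below computes the address count of each block from its prefix length. The per-node /24 figure is an assumption (the default node CIDR mask size), not something stated in the original post.

```shell
# cidr_size PREFIX_LEN: number of IPv4 addresses in a block with that prefix length
cidr_size() {
  echo $(( 1 << (32 - $1) ))
}

echo "Service network 172.16.0.0/20: $(cidr_size 20) addresses"
echo "Pod network 10.10.0.0/16: $(cidr_size 16) addresses"
# Assumption: each node is allocated a /24 slice of the pod network
echo "Nodes that fit at /24 per node: $(( $(cidr_size 16) / $(cidr_size 24) ))"
```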

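2: Setting up the load balancer

| Role/hostname | Physical network IP | Spec (CPU-RAM-disk) | OS |
|---|---|---|---|
| lb1 | 192.168.1.104 | 1c-1g-20G | Ubuntu 18.04 |
| lb2 | 192.168.1.105 | 1c-1g-20G | Ubuntu 18.04 |

High-availability load balancing is implemented with Haproxy + Keepalived.

2.1: Keepalived configuration

Set lb1 as MASTER and lb2 as BACKUP, and add the VIP 192.168.1.248 as the access entry point for the k8s control plane.

lb1: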

vrrp_instance VIP {

state MASTER

interface eth0

garp_master_delay 10

smtp_alert

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.1.248 dev eth0 label eth0:1

}

}

lb2:

vrrp_instance VIP {

state BACKUP

interface eth0

garp_master_delay 10

smtp_alert

virtual_router_id 51

priority 80 # lower than lb1's 100 so that lb1 holds the VIP whenever it is up

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.1.248 dev eth0 label eth0:1

}

}

2.2: Haproxy configuration

Both Haproxy instances use the same configuration.

Listen on port 6443 of the VIP to proxy the k8s apiserver, with the 3 k8s masters as backend servers.

listen k8s-api-6443

bind 192.168.1.248:6443

mode tcp

server k8s-master1 192.168.1.101:6443 check inter 3s fall 3 rise 5

server k8s-master2 192.168.1.102:6443 check inter 3s fall 3 rise 5

server k8s-master3 192.168.1.103:6443 check inter 3s fall 3 rise 5
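Because both Haproxy instances bind to the VIP while only one lb holds it at a time, the instance on the lb without the VIP typically cannot bind unless the kernel allows non-local binds. A minimal sketch, assuming a hypothetical file name under /etc/sysctl.d (the helper `write_nonlocal_bind` is illustrative too):

```shell
# write_nonlocal_bind CONF_FILE: persist the sysctl that lets haproxy bind an IP
# the host does not currently own (the VIP lives on only one lb at a time)
write_nonlocal_bind() {
  echo 'net.ipv4.ip_nonlocal_bind = 1' > "$1"
}

# On lb1 and lb2 (as root), something like:
#   write_nonlocal_bind /etc/sysctl.d/99-haproxy-vip.conf   # hypothetical file name
#   sysctl --system                                         # reload sysctl settings
#   haproxy -c -f /etc/haproxy/haproxy.cfg                  # validate the config
#   systemctl restart haproxy
```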

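3: Deploying the Harbor image registry

| Role/hostname | Physical network IP | Spec (CPU-RAM-disk) | OS |
|---|---|---|---|
| harbor | 192.168.1.106 | 1c-2g-100G | Ubuntu 18.04 |

This post installs Harbor 1.7.5.

3.1: Installing Docker and docker-compose

docker-ce is installed from apt with a script.

Script contents: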

#!/bin/bash

# apt install docker-ce & docker-compose.

sudo apt-get update;

sudo apt-get -y install apt-transport-https ca-certificates curl software-properties-common;

curl -fsSL https://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -;

sudo add-apt-repository "deb [arch=amd64] https://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable";

sudo apt-get -y update;

sudo apt-get -y install docker-ce docker-ce-cli docker-compose

3.2: Deploying and configuring Harbor

Unpack the archive and create a symlink, which makes later version management easier.

root@harbor:/usr/local/src# tar xf harbor-offline-installer-v1.7.5.tgz

root@harbor:/usr/local/src# mv harbor harbor-v1.7.5

root@harbor:~# ln -sv /usr/local/src/harbor-v1.7.5/ /usr/local/harbor

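Configuration:

root@harbor:~# vim /usr/local/harbor/harbor.cfg

# address used to access Harbor
hostname = harbor.yqc.com

# password of the admin user
harbor_admin_password = 123456

Note: this lab environment has no private DNS service, so every server that needs to use Harbor must later get a static hosts entry:

192.168.1.106 harbor.yqc.com

Initialize the configuration: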

root@harbor:~# cd /usr/local/harbor

root@harbor:/usr/local/harbor# ./prepare

3.3: Harbor autostart unit

Add a service unit for Harbor, harbor.service, under /lib/systemd/system.

Adjust the docker-compose binary path and the Harbor install path to match your environment.

[Unit]

Description=Harbor

After=docker.service systemd-networkd.service systemd-resolved.service

Requires=docker.service

Documentation=http://github.com/vmware/harbor

[Service]

Type=simple

Restart=on-failure

RestartSec=5

ExecStart=/usr/bin/docker-compose -f /usr/local/harbor/docker-compose.yml up

ExecStop=/usr/bin/docker-compose -f /usr/local/harbor/docker-compose.yml down

[Install]

WantedBy=multi-user.target

Reload the systemd daemon:

systemctl daemon-reload

Start Harbor and enable it at boot:

systemctl start harbor

systemctl enable harbor

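3.4: Testing access to Harbor

Open http://harbor.yqc.com/ and log in with admin/123456.

4: Preparing the K8s nodes

| Role/hostname | Physical network IP | Spec (CPU-RAM-disk) | OS |
|---|---|---|---|
| master1 | 192.168.1.101 | 2c-2g-50G | Ubuntu 18.04 |
| master2 | 192.168.1.102 | 2c-2g-50G | Ubuntu 18.04 |
| master3 | 192.168.1.103 | 2c-2g-50G | Ubuntu 18.04 |
| node1 | 192.168.1.107 | 4c-4g-100G | Ubuntu 18.04 |
| node2 | 192.168.1.108 | 4c-4g-100G | Ubuntu 18.04 |

The K8s nodes need Docker, the cluster bootstrap tool kubeadm, and the node agent kubelet. kubectl is not required for creating the cluster.

Pay attention to program versions:

- Choose a Docker version that the k8s version being deployed supports;

- Install kubeadm, kubelet and kubectl at the same version as k8s.

4.1: Preparing the Docker environment

Prepare a Docker runtime on every master and worker node of the k8s cluster (including image pull configuration and tuning).

4.1.1: Choosing and installing the Docker version

To choose a Docker version, look up the changelog for your k8s version in the kubernetes project on GitHub, find the Docker versions it supports, and install one of them.

For example, the 1.17 changelog, https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.17.md#v1173, notes that Docker up to 19.03 is supported.

So Docker 19.03 is installed here: modify the apt install script for Docker to pin the docker-ce version, then run it.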

#!/bin/bash

# install docker on k8s nodes

sudo apt-get update;

sudo apt-get -y install apt-transport-https ca-certificates curl software-properties-common;

curl -fsSL https://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -;

sudo add-apt-repository "deb [arch=amd64] https://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable";

sudo apt-get -y update;

sudo apt-get -y install docker-ce=5:19.03.15~3-0~ubuntu-bionic docker-ce-cli=5:19.03.15~3-0~ubuntu-bionic;

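4.1.2: Configuring a Docker registry mirror

The mirror address below is Alibaba Cloud's image acceleration service; get your own address from your Alibaba Cloud account. JSON does not allow comments, so keep the file itself free of them.

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://xxxxxxxx.mirror.aliyuncs.com"]

}

EOF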

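4.1.3: Changing Docker's default cgroup driver

k8s recommends the systemd cgroup driver, while Docker defaults to cgroupfs, so change it.

Edit /etc/docker/daemon.json (create it if it does not exist) and add the option below. If the file already contains other keys such as registry-mirrors, add exec-opts as another key inside the same JSON object: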

{

"exec-opts": ["native.cgroupdriver=systemd"]

}

4.1.4: Adding an insecure registry

For Docker to pull images from and push images to the private Harbor, Harbor has to be added as an insecure registry.

In Docker's systemd unit /lib/systemd/system/docker.service, append the --insecure-registry option with your own Harbor address to the ExecStart command.

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --insecure-registry harbor.yqc.com

Also add a hosts entry in /etc/hosts (or an A record if you run your own DNS service):

192.168.1.106 harbor.yqc.com
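Since the mirror and cgroup driver settings both live in /etc/docker/daemon.json, it can be convenient to write them in one pass. The sketch below does that and, as an alternative to editing the systemd unit, also sets the `insecure-registries` key. The helper name `write_daemon_json` is hypothetical, and the mirror URL is the placeholder from above.

```shell
# write_daemon_json FILE MIRROR_URL REGISTRY: write one daemon.json holding all three settings
write_daemon_json() {
  cat > "$1" <<EOF
{
  "registry-mirrors": ["$2"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "insecure-registries": ["$3"]
}
EOF
}

# On a real node (as root), something like:
#   write_daemon_json /etc/docker/daemon.json https://xxxxxxxx.mirror.aliyuncs.com harbor.yqc.com
#   systemctl restart docker
#   docker info | grep -i 'cgroup driver'
```

Use either the daemon.json key or the --insecure-registry flag, not both: dockerd refuses to start when the same option is given in both places.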

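4.1.5: Restarting Docker

Once all configuration is done, reload systemd (the unit file was edited) and restart Docker.

systemctl daemon-reload && systemctl restart docker && systemctl enable docker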

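4.2: Installing kubeadm/kubelet/kubectl

Install the programs each node needs:

- kubeadm is the tool that creates the k8s cluster; install it on every node of the cluster;

- kubectl is the tool for controlling the k8s control plane (the masters); it is only needed on the management host, which can be a separate machine or any node in the cluster;

  Once authorized, kubectl has very broad permissions over the cluster, so keep its footprint small. Installing it on one master node is usually enough;

- kubelet is the only one of the three that runs as a service. It is the node agent that runs on every k8s node and is responsible for creating and managing containers.

In a kubeadm-built cluster, the remaining components, such as kube-proxy and etcd, run as containers.

4.2.1: Adding the kubernetes apt source

Version: https://github.com/kubernetes/kubernetes/releases/tag/v1.17.2

Add the kubernetes source on all nodes; the Alibaba Cloud mirror is used here.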

apt-get update && apt-get install -y apt-transport-https

curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

cat << EOF >/etc/apt/sources.list.d/kubernetes.list

deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main

EOF

apt-get update

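4.2.2: Installing kubeadm and kubelet on all nodes

Before installing, list the versions available in the kubernetes source to confirm how the version string is written.

apt-cache madison kubeadm

Install the pinned versions of kubeadm and kubelet.

apt install kubeadm=1.17.2-00 kubelet=1.17.2-00 -y

Note that kubelet cannot run successfully right after installation. It only starts properly on a node once the cluster has been initialized there or the node has joined the cluster.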

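5: Creating the k8s cluster

5.1: Initialization - kubeadm init

Official documentation for kubeadm init: https://kubernetes.io/zh/docs/reference/setup-tools/kubeadm/kubeadm-init/

Cluster initialization pulls the k8s component images for the chosen version from the specified image repository and starts the component containers according to the given cluster parameters.

Note:

- Run kubeadm init on any one master node, and only once.

- The remaining master nodes and the worker nodes join the cluster with kubeadm join after initialization has finished.

- Initialization pulls the component images over the network. To shorten it, the required images can be pulled beforehand on the master that will run the initialization;

  Of course, you can also just run the initialization directly.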

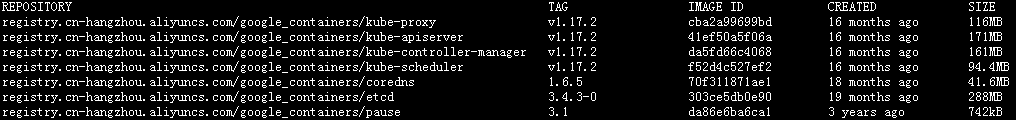

5.1.1: Pulling the component images

Run kubeadm config images list --kubernetes-version v1.17.2 to see which images k8s 1.17.2 needs:

k8s.gcr.io/kube-apiserver:v1.17.2

k8s.gcr.io/kube-controller-manager:v1.17.2

k8s.gcr.io/kube-scheduler:v1.17.2

k8s.gcr.io/kube-proxy:v1.17.2

k8s.gcr.io/pause:3.1

k8s.gcr.io/etcd:3.4.3-0

k8s.gcr.io/coredns:1.6.5

As shown, images are pulled from k8s.gcr.io by default. To speed things up, replace Google's registry with the Alibaba Cloud mirror and pull the images with docker pull:

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.17.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.17.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.17.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.17.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.3-0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.6.5
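The pull commands above can also be generated from kubeadm's own image list instead of being typed by hand. A minimal sketch, where the helper names `to_mirror` and `pull_all` are illustrative:

```shell
MIRROR=registry.cn-hangzhou.aliyuncs.com/google_containers

# to_mirror IMAGE: rewrite a k8s.gcr.io image reference to the mirror repository
to_mirror() {
  echo "${MIRROR}/${1#k8s.gcr.io/}"
}

# pull_all: pull every image kubeadm lists for v1.17.2 from the mirror
pull_all() {
  kubeadm config images list --kubernetes-version v1.17.2 |
    while read -r image; do
      docker pull "$(to_mirror "$image")"
    done
}

# Alternatively, kubeadm can do the pull itself:
#   kubeadm config images pull --image-repository "$MIRROR" --kubernetes-version v1.17.2
```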

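List the pulled images:

root@k8s-master1:~# docker image list

5.1.2: Running the initialization

There are two ways to initialize:

- Option 1: pass the parameters directly on the kubeadm init command line;

- Option 2: write the initialization parameters into a configuration file and point to it with kubeadm init --config CONFIG_FILE.

  This is the recommended way, because the saved configuration file lets you trace back later which parameters were used at initialization.

Option 1 - command-line parameters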

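# --apiserver-advertise-address: address the apiserver listens on (this host)
# --apiserver-bind-port: port the apiserver listens on
# --control-plane-endpoint: cluster management address (set to the fixed VIP)
# --ignore-preflight-errors: ignore the swap preflight check error
# --image-repository: image repository to pull from
# --kubernetes-version: k8s version to install
# --pod-network-cidr: pod network range (must match the network add-on's range)
# --service-cidr: service network range
# --service-dns-domain: service DNS domain (suffix of created service names)
kubeadm init \
  --apiserver-advertise-address=192.168.1.101 \
  --apiserver-bind-port=6443 \
  --control-plane-endpoint=192.168.1.248 \
  --ignore-preflight-errors=swap \
  --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers \
  --kubernetes-version=v1.17.2 \
  --pod-network-cidr=10.10.0.0/16 \
  --service-cidr=172.16.0.0/20 \
  --service-dns-domain=yqc.com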

Option 2 - configuration file (recommended)

First dump the default initialization parameters to a file:

kubeadm config print init-defaults > kubeadm-init-1.17.2.yml

Then modify the defaults:

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.1.101 # change to this host's address
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: k8s-master1.yqc.com
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: 192.168.1.248:6443 # added: management address and port of the k8s cluster
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers # changed to the Alibaba Cloud mirror
kind: ClusterConfiguration
kubernetesVersion: v1.17.2 # changed to the k8s version to install
networking:
  dnsDomain: yqc.com # changed to your own domain
  podSubnet: 10.10.0.0/16 # added: address range of the Pod network
  serviceSubnet: 172.16.0.0/20 # changed to the planned service network range
scheduler: {}

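Then run the initialization with that configuration file:

kubeadm init --config kubeadm-init-1.17.2.yml

5.1.3: Recording the output of a successful initialization

On success, kubeadm prints information that should be recorded. It is needed later to authorize kubectl, deploy the cluster network add-on, and add other nodes to the cluster.

The output looks like this: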

Your Kubernetes control-plane has initialized successfully!

# How to authorize kubectl for the current system user:

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

# How to deploy the network add-on:

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

# How to add other master nodes:

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join 192.168.1.248:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:2186235afb370fbc0eda9a241f2fc877f66cce4e76f4cae121e6bf686769d55d \

--control-plane

# How to add worker nodes:

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.1.248:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:2186235afb370fbc0eda9a241f2fc877f66cce4e76f4cae121e6bf686769d55d
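The bootstrap token above has a 24h TTL, so a join line recorded today may not work tomorrow. The hash part can be recomputed from the cluster CA certificate at any time. A minimal sketch, where the helper name `ca_cert_hash` is illustrative and the openssl pipeline is the one commonly given in the Kubernetes documentation:

```shell
# ca_cert_hash CERT: SHA-256 of the certificate's DER-encoded public key,
# i.e. the value kubeadm expects after "sha256:"
ca_cert_hash() {
  openssl x509 -pubkey -in "$1" |
    openssl rsa -pubin -outform der 2>/dev/null |
    openssl dgst -sha256 -hex |
    sed 's/^.* //'
}

# On a master (as root), something like:
#   echo "sha256:$(ca_cert_hash /etc/kubernetes/pki/ca.crt)"
#   kubeadm token create --print-join-command   # new token plus a ready-made join line
```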

5.2: Authorizing kubectl

Install kubectl:

apt install -y kubectl=1.17.2-00

Authorize kubectl for the current user (usually kubectl management rights are given to root):

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

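/etc/kubernetes/admin.conf holds the cluster management address and the credentials needed to authenticate as a cluster administrator. Once it is placed at $HOME/.kube/config for the current user, kubectl reads it on every invocation, authenticates against the corresponding k8s cluster, and can then manage it.

Try a kubectl command. If it runs, the setup succeeded and the current system user can manage the k8s cluster with kubectl: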

root@k8s-master2:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master2.yqc.com NotReady master 11m v1.17.2

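The NotReady status is expected at this point: the cluster's network add-on has not been deployed yet, so the kubelet cannot report the node as ready.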

5.3: Deploying the Pod network add-on (flannel)

flannel is used here as the Pod network add-on for the k8s cluster.

The flannel project on GitHub describes how to deploy flannel on k8s: https://github.com/flannel-io/flannel#deploying-flannel-manually

Deploying flannel manually

Flannel can be added to any existing Kubernetes cluster though it's simplest to add flannel before any pods using the pod network have been started.

For Kubernetes v1.17+

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

5.3.1: Editing flannel's yml file

Download kube-flannel.yml first and edit it: change the "Network" value under net-conf.json to the pod network range you planned (10.10.0.0/16 here).

---

apiVersion: policy/v1beta1

kind: PodSecurityPolicy

metadata:

name: psp.flannel.unprivileged

annotations:

seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default

seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default

apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default

apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default

spec:

privileged: false

volumes:

- configMap

- secret

- emptyDir

- hostPath

allowedHostPaths:

- pathPrefix: "/etc/cni/net.d"

- pathPrefix: "/etc/kube-flannel"

- pathPrefix: "/run/flannel"

readOnlyRootFilesystem: false

# Users and groups

runAsUser:

rule: RunAsAny

supplementalGroups:

rule: RunAsAny

fsGroup:

rule: RunAsAny

# Privilege Escalation

allowPrivilegeEscalation: false

defaultAllowPrivilegeEscalation: false

# Capabilities

allowedCapabilities: ['NET_ADMIN', 'NET_RAW']

defaultAddCapabilities: []

requiredDropCapabilities: []

# Host namespaces

hostPID: false

hostIPC: false

hostNetwork: true

hostPorts:

- min: 0

max: 65535

# SELinux

seLinux:

# SELinux is unused in CaaSP

rule: 'RunAsAny'

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: flannel

rules:

- apiGroups: ['extensions']

resources: ['podsecuritypolicies']

verbs: ['use']

resourceNames: ['psp.flannel.unprivileged']

- apiGroups:

- ""

resources:

- pods

verbs:

- get

- apiGroups:

- ""

resources:

- nodes

verbs:

- list

- watch

- apiGroups:

- ""

resources:

- nodes/status

verbs:

- patch

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: flannel

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: flannel

subjects:

- kind: ServiceAccount

name: flannel

namespace: kube-system

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: flannel

namespace: kube-system

---

kind: ConfigMap

apiVersion: v1

metadata:

name: kube-flannel-cfg

namespace: kube-system

labels:

tier: node

app: flannel

data:

cni-conf.json: |

{

"name": "cbr0",

"cniVersion": "0.3.1",

"plugins": [

{

"type": "flannel",

"delegate": {

"hairpinMode": true,

"isDefaultGateway": true

}

},

{

"type": "portmap",

"capabilities": {

"portMappings": true

}

}

]

}

net-conf.json: |

{

"Network": "10.10.0.0/16",

"Backend": {

"Type": "vxlan"

}

}

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/os

operator: In

values:

- linux

hostNetwork: true

priorityClassName: system-node-critical

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.14.0

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.14.0

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN", "NET_RAW"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run/flannel

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run/flannel

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

5.3.2: Running the deployment

kubectl apply -f kube-flannel.yml

flannel is managed by a DaemonSet, so every node added later will also run a flannel Pod.

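5.4: Creating the certificate-key

Joining additional master (control-plane) nodes to the initialized cluster requires a key passed with --certificate-key, so create it beforehand. The uploaded certificates expire after two hours; rerun this step if the key is no longer valid when you join a master.

Run:

kubeadm init phase upload-certs --upload-certs

Result: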

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

4c2a009a1367866d5b2525a82a02545f3c4cc8f4db370804eaab3f997d608d42

5.5: Adding master nodes

Make sure docker, kubeadm and kubelet are already installed on the node.

On each node to be added as a master, run:

kubeadm join 192.168.1.248:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:2186235afb370fbc0eda9a241f2fc877f66cce4e76f4cae121e6bf686769d55d \

--certificate-key 4c2a009a1367866d5b2525a82a02545f3c4cc8f4db370804eaab3f997d608d42 \

--control-plane

添加master节点的过程中也需要拉取所需的组件镜像,所以也可以提前拉取。

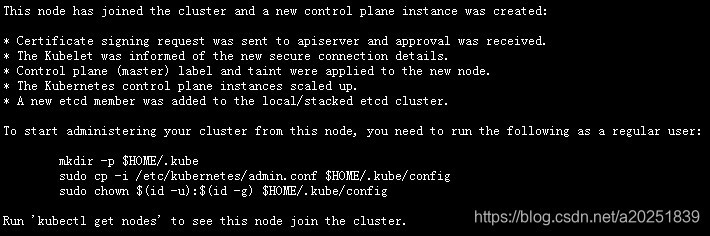

如果添加成功,会返回如下信息:

两台master节点上执行添加操作后,在master1上查看当前集群中的节点:

root@k8s-master1:~# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master1.yqc.com Ready master 32m v1.17.2

k8s-master2.yqc.com Ready master 28m v1.17.2

k8s-master3.yqc.com Ready master 24m v1.17.2

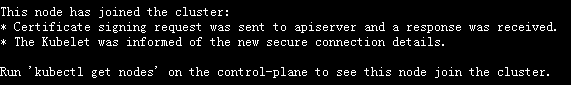

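5.6: Adding worker nodes

As before, make sure docker, kubeadm and kubelet are already installed on the node.

On each node to be added as a worker node, run the following (--certificate-key is only needed for control-plane joins, so it is omitted here):

kubeadm join 192.168.1.248:6443 --token abcdef.0123456789abcdef \
  --discovery-token-ca-cert-hash sha256:2186235afb370fbc0eda9a241f2fc877f66cce4e76f4cae121e6bf686769d55d

On success, kubeadm reports that the node has joined the cluster.

After both worker nodes have been added, list the nodes of the k8s cluster: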

root@k8s-master1:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master1.yqc.com Ready master 39m v1.17.2

k8s-master2.yqc.com Ready master 35m v1.17.2

k8s-master3.yqc.com Ready master 31m v1.17.2

k8s-node1.yqc.com Ready <none> 3m40s v1.17.2

k8s-node2.yqc.com Ready <none> 3m22s v1.17.2
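When adding several nodes, it can help to check readiness in a script rather than by eye. A minimal sketch that counts nodes whose STATUS column is anything other than exactly `Ready` (the helper name `not_ready_count` is illustrative):

```shell
# not_ready_count: read "kubectl get nodes --no-headers" output on stdin and
# print how many nodes have a STATUS other than exactly "Ready"
not_ready_count() {
  awk '$2 != "Ready" { n++ } END { print n + 0 }'
}

# On a host with kubectl access, something like:
#   kubectl get nodes --no-headers | not_ready_count
#   kubectl wait --for=condition=Ready node --all --timeout=300s
```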

六:Dashboard 安装

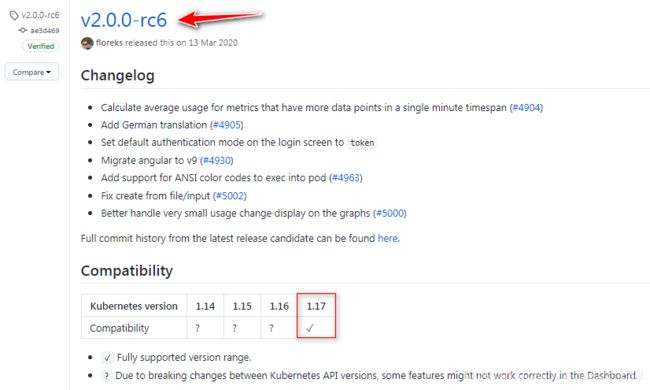

6.1:版本选择

github搜索kubernetes/dashboard项目,在releases中找到和1.17版本的k8s完全兼容的dashboard版本。

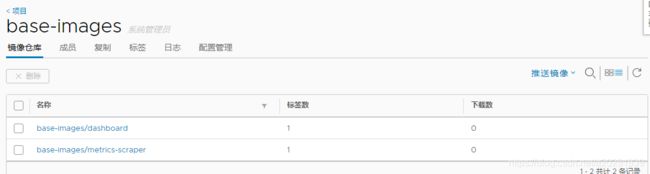

6.2:将相关镜像上传到本地 Harbor

最好在自己的harbor中上传一份当前所用的dashboard镜像,便于后期重建Pod。

把部署dashboard所需的两个镜像拉取下来,并上传到harbor(提前创建好相应的项目):

root@k8s-master1:~# docker pull kubernetesui/dashboard:v2.0.0-rc6

root@k8s-master1:~# docker pull kubernetesui/metrics-scraper:v1.0.3

root@k8s-master1:~# docker images | egrep '(scraper|dashboard)'

kubernetesui/dashboard v2.0.0-rc6 cdc71b5a8a0e 14 months ago 221MB

kubernetesui/metrics-scraper v1.0.3 3327f0dbcb4a 15 months ago 40.1MB

root@k8s-master1:~# docker tag cdc71b5a8a0e harbor.yqc.com/base-images/dashboard:v2.0.0-rc6

root@k8s-master1:~# docker tag 3327f0dbcb4a harbor.yqc.com/base-images/metrics-scraper:v1.0.3

root@k8s-master1:~# docker push harbor.yqc.com/base-images/dashboard:v2.0.0-rc6

root@k8s-master1:~# docker push harbor.yqc.com/base-images/metrics-scraper:v1.0.3

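6.3: Editing the yml file

Download the dashboard yml file from the kubernetes/dashboard project on GitHub.

The default yml file cannot be used as-is here, because it uses the default Service type (ClusterIP), which is not reachable from outside the cluster.

The point of deploying the Dashboard is to manage the k8s cluster from outside, so set the Service type to NodePort and give it a port (30002 here). Also change the images to the ones in your own Harbor to speed up pulls.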

# Copyright 2017 The Kubernetes Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

apiVersion: v1

kind: Namespace

metadata:

name: kubernetes-dashboard

---

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

type: NodePort # set the type to NodePort

ports:

- port: 443

targetPort: 8443

nodePort: 30002 # port the NodePort listens on

selector:

k8s-app: kubernetes-dashboard

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-certs

namespace: kubernetes-dashboard

type: Opaque

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-csrf

namespace: kubernetes-dashboard

type: Opaque

data:

csrf: ""

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-key-holder

namespace: kubernetes-dashboard

type: Opaque

---

kind: ConfigMap

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-settings

namespace: kubernetes-dashboard

---

kind: Role

apiVersion: rbac.authorization.k8s.io/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

rules:

# Allow Dashboard to get, update and delete Dashboard exclusive secrets.

- apiGroups: [""]

resources: ["secrets"]

resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]

verbs: ["get", "update", "delete"]

# Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.

- apiGroups: [""]

resources: ["configmaps"]

resourceNames: ["kubernetes-dashboard-settings"]

verbs: ["get", "update"]

# Allow Dashboard to get metrics.

- apiGroups: [""]

resources: ["services"]

resourceNames: ["heapster", "dashboard-metrics-scraper"]

verbs: ["proxy"]

- apiGroups: [""]

resources: ["services/proxy"]

resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]

verbs: ["get"]

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

rules:

# Allow Metrics Scraper to get metrics from the Metrics server

- apiGroups: ["metrics.k8s.io"]

resources: ["pods", "nodes"]

verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: kubernetes-dashboard

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: kubernetes-dashboard

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: kubernetes-dashboard

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

kind: Deployment

apiVersion: apps/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

k8s-app: kubernetes-dashboard

template:

metadata:

labels:

k8s-app: kubernetes-dashboard

spec:

containers:

- name: kubernetes-dashboard

image: harbor.yqc.com/base-images/dashboard:v2.0.0-rc6 # image from the private Harbor

imagePullPolicy: Always

ports:

- containerPort: 8443

protocol: TCP

args:

- --auto-generate-certificates

- --namespace=kubernetes-dashboard

# Uncomment the following line to manually specify Kubernetes API server Host

# If not specified, Dashboard will attempt to auto discover the API server and connect

# to it. Uncomment only if the default does not work.

# - --apiserver-host=http://my-address:port

volumeMounts:

- name: kubernetes-dashboard-certs

mountPath: /certs

# Create on-disk volume to store exec logs

- mountPath: /tmp

name: tmp-volume

livenessProbe:

httpGet:

scheme: HTTPS

path: /

port: 8443

initialDelaySeconds: 30

timeoutSeconds: 30

securityContext:

allowPrivilegeEscalation: false

readOnlyRootFilesystem: true

runAsUser: 1001

runAsGroup: 2001

volumes:

- name: kubernetes-dashboard-certs

secret:

secretName: kubernetes-dashboard-certs

- name: tmp-volume

emptyDir: {}

serviceAccountName: kubernetes-dashboard

nodeSelector:

"beta.kubernetes.io/os": linux

# Comment the following tolerations if Dashboard must not be deployed on master

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

---

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: dashboard-metrics-scraper

name: dashboard-metrics-scraper

namespace: kubernetes-dashboard

spec:

ports:

- port: 8000

targetPort: 8000

selector:

k8s-app: dashboard-metrics-scraper

---

kind: Deployment

apiVersion: apps/v1

metadata:

labels:

k8s-app: dashboard-metrics-scraper

name: dashboard-metrics-scraper

namespace: kubernetes-dashboard

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

k8s-app: dashboard-metrics-scraper

template:

metadata:

labels:

k8s-app: dashboard-metrics-scraper

annotations:

seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'

spec:

containers:

- name: dashboard-metrics-scraper

image: harbor.yqc.com/base-images/metrics-scraper:v1.0.3 # image from the private Harbor

ports:

- containerPort: 8000

protocol: TCP

livenessProbe:

httpGet:

scheme: HTTP

path: /

port: 8000

initialDelaySeconds: 30

timeoutSeconds: 30

volumeMounts:

- mountPath: /tmp

name: tmp-volume

securityContext:

allowPrivilegeEscalation: false

readOnlyRootFilesystem: true

runAsUser: 1001

runAsGroup: 2001

serviceAccountName: kubernetes-dashboard

nodeSelector:

"beta.kubernetes.io/os": linux

# Comment the following tolerations if Dashboard must not be deployed on master

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

volumes:

- name: tmp-volume

emptyDir: {}

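6.4: Creating admin-user.yml

This file grants management rights for Dashboard logins: it creates an admin-user ServiceAccount and binds it to the cluster-admin ClusterRole, so that a login with its token can manage the k8s cluster.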

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard

6.5: Running the deployment

root@k8s-master1:~/dashboard-v2.0.0-rc6# kubectl apply -f dashboard-v2.0.0-rc6.yml -f admin-user.yml

Verify that the pods are running:

root@k8s-master1:~# kubectl get pod -n kubernetes-dashboard

kubernetes-dashboard dashboard-metrics-scraper-6f86cf95f5-hlppq 1/1 Running 0 51s

kubernetes-dashboard kubernetes-dashboard-857797b456-7mtv5 1/1 Running 0 52s

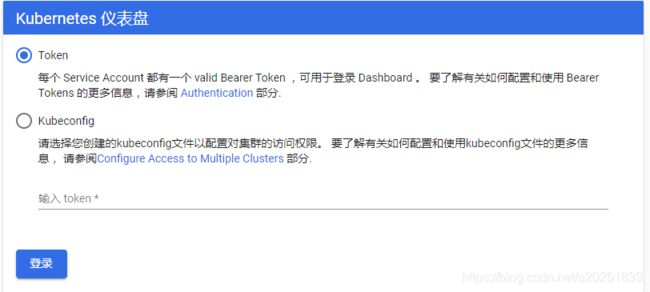

6.6: Logging in to the Dashboard

6.6.1: Opening the Dashboard

The Dashboard can be opened on port 30002 of any node, because a NodePort is listened on by every node:

node1:

root@k8s-node1:~# ss -tnlp | grep 30002

LISTEN 0 20480 *:30002 *:* users:(("kube-proxy",pid=89002,fd=8))

node2:

root@k8s-node2:~# ss -tnlp | grep 30002

LISTEN 0 20480 *:30002 *:* users:(("kube-proxy",pid=89653,fd=8))

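The Dashboard has to be accessed over https: https://192.168.1.107:30002/ or https://192.168.1.108:30002/

Authentication is required, and there are two ways to do it:

- Enter a token directly;

- Add the token to a kubeconfig file (based on /etc/kubernetes/admin.conf) and log in by importing that file.

6.6.2: Getting the token

Find the name of the admin-user secret: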

root@k8s-master1:~# kubectl get secret -A | grep admin-user

kubernetes-dashboard admin-user-token-5rcp6 kubernetes.io/service-account-token 3 9m40s

Describe this secret; its details include the token:

root@k8s-master1:~# kubectl describe secret admin-user-token-5rcp6 -n kubernetes-dashboard

Name: admin-user-token-5rcp6

Namespace: kubernetes-dashboard

Labels:

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: e59a6b13-e197-4538-b531-99e7bf738f7e

Type: kubernetes.io/service-account-token

Data

====

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6ImJmM1FSamgyYTBSVGxEdW96NzcxbzFqZDVaUkdRejZaaElKQ1h4eWFQTjQifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLTVyY3A2Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJlNTlhNmIxMy1lMTk3LTQ1MzgtYjUzMS05OWU3YmY3MzhmN2UiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.PjXK_RYVBctcGBg99vSzaAFo-xSD6Jw53R0WJKi75KpH-tibNS5attjj0Dny3FaCcH7MFL8yphidQFiff9xQ2T1Tb9vAus3zTJSw4yiKW9wqyXUvJmXZ7MfjSXmppar4M_zhxatkKxz8Hs6bJiKj2YHOGuxbwSisBP5lBRVzPSrP1Nms0QBSCOPU0JUyVDPJg8GNo6cEP1NX6cLJbFdaErD9hVpM9r8yaHxbemprgTlnBkwSexLKvA6W8E1FS9c7WMhNj_uALfzbuJT2HcUuQDqeEdzDHwM3M_lew_MX836EmFMb5FThHEIZkRZcNUp70VQGabGLzZXvrMmYa5VsJg

ca.crt: 1025 bytes

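6.6.3: Login option 1: entering the token directly

Paste the token into the login page and click sign in.

6.6.4: Login option 2: kubeconfig file (recommended)

A Dashboard session started with a token is valid for 15 minutes by default, and looking up the token again after every logout is tedious. There are three ways around this:

- Extend the session lifetime

- Record the token and paste it each time

- Use a kubeconfig file

Create the kubeconfig file: copy /etc/kubernetes/admin.conf, add a token field under the kubernetes-admin user, and paste the token value into it.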

root@k8s-master1:~# cp /root/.kube/config /opt/kubeconfig

root@k8s-master1:~# vim /opt/kubeconfig

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: xxxxxxxxxx

server: https://192.168.1.248:6443

name: kubernetes

contexts:

- context:

cluster: kubernetes

user: kubernetes-admin

name: kubernetes-admin@kubernetes

current-context: kubernetes-admin@kubernetes

kind: Config

preferences: {}

users:

- name: kubernetes-admin

user:

client-certificate-data: xxxxxxx

client-key-data: xxxxxxxxxxx

token: # paste the token here

Then copy the kubeconfig file to your PC:

root@k8s-master1:~# sz /opt/kubeconfig

From now on, choose the kubeconfig option at login and select this file to sign in.

6.7: Proxying the Dashboard through the load balancer

Finally, add a listener on port 30002 of the VIP in haproxy, with port 30002 of each worker node as the backend servers.

listen k8s-dashboard-30002

bind 192.168.1.248:30002

mode tcp

server 192.168.1.107 192.168.1.107:30002 check inter 3s fall 3 rise 5

server 192.168.1.108 192.168.1.108:30002 check inter 3s fall 3 rise 5

With this in place, adding a DNS or hosts entry that points a dashboard hostname at the VIP is enough to reach the dashboard by name.

192.168.1.248 dashboard.yqc.com

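7: Verifying that the K8s cluster works

Create a few test Pods to verify that the cluster runs workloads and that the network works.

Create test Pods from the alpine image, with 3 replicas: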

kubectl run net-test1 --image=alpine --replicas=3 sleep 360000

Check with kubectl get pod; the pods are running normally:

NAME READY STATUS RESTARTS AGE

net-test1-5fcc69db59-l96z8 1/1 Running 0 37s

net-test1-5fcc69db59-t666q 1/1 Running 0 37s

net-test1-5fcc69db59-xkg7n 1/1 Running 0 37s

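kubectl get pod -o wide shows that two of the pods were scheduled to node1 and one to node2.

Enter one of the containers and ping a pod on the same host, a pod on a different host, and external addresses. If all of them respond, the network is working: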

root@k8s-master1:~# kubectl exec -it net-test1-5fcc69db59-cjchg sh

/ # ping 10.10.4.2

PING 10.10.4.2 (10.10.4.2): 56 data bytes

64 bytes from 10.10.4.2: seq=0 ttl=64 time=0.205 ms

64 bytes from 10.10.4.2: seq=1 ttl=64 time=0.077 ms

^C

--- 10.10.4.2 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 0.077/0.141/0.205 ms

/ # ping 10.10.3.2

PING 10.10.3.2 (10.10.3.2): 56 data bytes

64 bytes from 10.10.3.2: seq=0 ttl=62 time=12.454 ms

64 bytes from 10.10.3.2: seq=1 ttl=62 time=0.779 ms

^C

--- 10.10.3.2 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 0.779/6.616/12.454 ms

/ # ping 192.168.1.1

PING 192.168.1.1 (192.168.1.1): 56 data bytes

64 bytes from 192.168.1.1: seq=0 ttl=127 time=0.199 ms

^C

--- 192.168.1.1 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.199/0.199/0.199 ms

/ # ping 223.6.6.6

PING 223.6.6.6 (223.6.6.6): 56 data bytes

64 bytes from 223.6.6.6: seq=0 ttl=127 time=17.979 ms

64 bytes from 223.6.6.6: seq=1 ttl=127 time=15.503 ms

^C

/ # ping www.baidu.com

PING www.baidu.com (110.242.68.4): 56 data bytes

64 bytes from 110.242.68.4: seq=0 ttl=127 time=16.309 ms

64 bytes from 110.242.68.4: seq=1 ttl=127 time=23.715 ms

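Things to note

- Certificates created by a kubeadm-installed k8s cluster are valid for 1 year by default and must be renewed before they expire.

  Alternatively, a longer validity can be obtained by building kubeadm from modified source.

  Look up the specific procedure yourself.

- If initialization fails for some reason outside your control, or adding a node fails, don't panic: run kubeadm reset on the affected node to restore the system state, confirm everything is in order, and then run the initialization or join again.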
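To keep an eye on the one-year certificate validity mentioned above, the expiry date can be read straight from the certificate files with openssl. A minimal sketch; the helper names are illustrative, and the kubeadm subcommands shown in the comments are the alpha forms used by kubeadm 1.17:

```shell
# cert_not_after CERT: print the certificate's expiry date (notAfter field)
cert_not_after() {
  openssl x509 -noout -enddate -in "$1" | cut -d= -f2
}

# cert_valid_for CERT SECONDS: succeed if the certificate is still valid that many seconds from now
cert_valid_for() {
  openssl x509 -noout -checkend "$2" -in "$1" >/dev/null
}

# On a master (as root), something like:
#   cert_not_after /etc/kubernetes/pki/apiserver.crt
#   cert_valid_for /etc/kubernetes/pki/apiserver.crt $((30 * 24 * 3600)) || echo "renew soon"
#   kubeadm alpha certs check-expiration    # subcommand name as of kubeadm 1.17
#   kubeadm alpha certs renew all
```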