Redis in Practice (6): Redis in a Project (5), Automatic Cache Updates: the Canal Pipeline, MySQL Configuration and Canal Installation, a Starter Example, and Canal in the Project

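Contents

- Introduction
- The Canal pipeline
  - What is canal?
- MySQL configuration
  - Check the binlog
  - Create the canal user
- Installing Canal (Docker)
  - 1. Find and pull the image
  - 2. Prepare the files to mount
  - 3. Edit the IP, file, position, username, and password
  - 4. Start the container with the mounts and privileged=true
    - Bug: add privileged=true
  - 5. Check the logs
- Canal starter example
  - 1. Add the dependency
  - 2. Example code from the official wiki
  - 3. Output
- Redis in a project (5): using Canal in the project
  - Automatic cache updates
  - Adapting the official example as a @Component
  - Running it from the main class via implements CommandLineRunner
  - Testing
- Summary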

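Introduction

1. Canal: parses MySQL's incremental log (the binlog) and provides incremental data subscription and consumption;

2. Using Canal: MySQL configuration and installing Canal with Docker;

3. Adding the canal.client dependency, the official example, and its output;

4. Project use: automatically updating the set of usernames used by the registration flow;

5. Adapting the official example into a @Component;

6. Having the main class implement CommandLineRunner.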

The Canal pipeline

What is canal?

https://github.com/alibaba/canal

https://kgithub.com/alibaba/canal/wiki/ClientExample

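Canal is an open-source component from Alibaba for incremental subscription and consumption based on the MySQL binlog. With it you can subscribe to a database's binlog and then consume the changes, for example for data mirroring, heterogeneous data stores, index building, or cache updates. Compared with publishing changes through a message queue, this mechanism makes it easier to keep the changes ordered and consistent.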


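canal [kə'næl] means waterway, pipe, or ditch. Its main purpose is to parse MySQL's incremental log and provide incremental data subscription and consumption.

In its early days, Alibaba ran data centers in both Hangzhou and the United States and needed to synchronize data across them. This was mainly implemented with business triggers that captured incremental changes. Starting in 2010, teams gradually switched to parsing database logs to obtain incremental changes for synchronization, which gave rise to a large number of incremental subscription and consumption use cases.

Use cases built on log-based incremental subscription and consumption include:

- Database mirroring

- Real-time database backup

- Index building and real-time maintenance (split heterogeneous indexes, inverted indexes, and so on)

- Refreshing business caches

- Incremental data processing that carries business logic

Canal currently supports MySQL source versions 5.1.x, 5.5.x, 5.6.x, 5.7.x, and 8.0.x.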

MySQL configuration

Check the binlog

Log in to MySQL and check whether the binlog is enabled.

SHOW VARIABLES LIKE 'log_%';

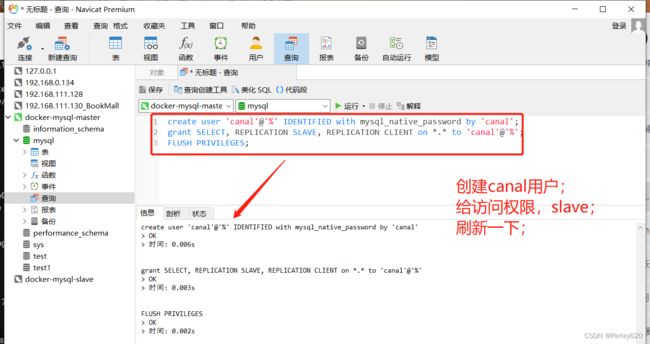

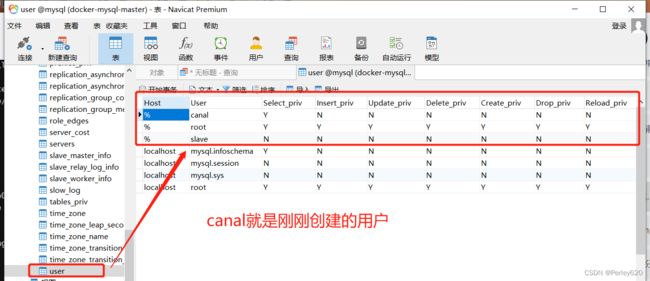
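Canal's own quick-start guide asks for the binlog to be enabled and written in ROW format. As a rough sketch, the check on those two variables could be expressed as below. The class `BinlogCheck`, its method, and the sample values are illustrative assumptions; in a real program the map would be filled from the query results, and `binlog_format` needs its own query (for example `SHOW VARIABLES LIKE 'binlog_format'`) because it does not match `log_%`.

```java
import java.util.Map;

/** Hypothetical helper: decides whether MySQL variables look ready for canal. */
class BinlogCheck {

    /**
     * @param variables variable name -> value, e.g. collected from SHOW VARIABLES
     * @return true only if log_bin is ON and binlog_format is ROW
     */
    static boolean readyForCanal(Map<String, String> variables) {
        boolean logBinOn = "ON".equalsIgnoreCase(variables.get("log_bin"));
        boolean rowFormat = "ROW".equalsIgnoreCase(variables.get("binlog_format"));
        return logBinOn && rowFormat;
    }

    public static void main(String[] args) {
        // Sample values only; real values come from the MySQL server.
        Map<String, String> vars = Map.of("log_bin", "ON", "binlog_format", "ROW");
        System.out.println("ready for canal: " + readyForCanal(vars));
    }
}
```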

Create the canal user

Create a user named canal in MySQL and grant it the replication privileges that canal needs:

create user 'canal'@'%' IDENTIFIED with mysql_native_password by 'canal';

grant SELECT, REPLICATION SLAVE, REPLICATION CLIENT on *.* to 'canal'@'%';

FLUSH PRIVILEGES;

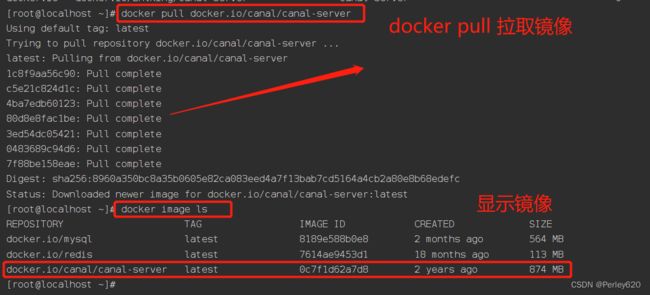

Installing Canal (Docker)

1. Find and pull the image

[root@localhost ~]# docker pull docker.io/canal/canal-server

Using default tag: latest

Trying to pull repository docker.io/canal/canal-server ...

latest: Pulling from docker.io/canal/canal-server

1c8f9aa56c90: Pull complete

c5e21c824d1c: Pull complete

4ba7edb60123: Pull complete

80d8e8fac1be: Pull complete

3ed54dc05421: Pull complete

0483689c94d6: Pull complete

7f88be158eae: Pull complete

Digest: sha256:8960a350bc8a35b0605e82ca083eed4a7f13bab7cd5164a4cb2a80e8b68edefc

Status: Downloaded newer image for docker.io/canal/canal-server:latest

[root@localhost ~]# docker image ls

REPOSITORY TAG IMAGE ID CREATED SIZE

docker.io/mysql latest 8189e588b0e8 2 months ago 564 MB

docker.io/redis latest 7614ae9453d1 18 months ago 113 MB

docker.io/canal/canal-server latest 0c7f1d62a7d8 2 years ago 874 MB

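2. Prepare the files to mount

Loosen the permissions on the files.

3. Edit the IP, file, position, username, and password

In instance.properties, set the MySQL address (IP), the binlog file name, and the binlog position.

Then set the username and password, for example the canal account created earlier.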
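For orientation, these five values usually correspond to the keys listed below in canal's instance.properties. The sketch parses a sample file with `java.util.Properties` and reports which of those keys are unset; it does not judge whether a blank value is acceptable. The address, binlog file name, and position are placeholder values rather than the ones used in this setup, and the class name `InstanceConfigCheck` is made up for illustration.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

class InstanceConfigCheck {

    /** The instance.properties keys edited in this step. */
    static final List<String> EDITED_KEYS = List.of(
            "canal.instance.master.address",       // MySQL ip:port
            "canal.instance.master.journal.name",  // binlog file name
            "canal.instance.master.position",      // binlog position
            "canal.instance.dbUsername",
            "canal.instance.dbPassword");

    /** Returns the edited keys that are absent or blank in the given properties text. */
    static List<String> unsetKeys(String propertiesText) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(propertiesText));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        List<String> unset = new ArrayList<>();
        for (String key : EDITED_KEYS) {
            String value = props.getProperty(key);
            if (value == null || value.trim().isEmpty()) {
                unset.add(key);
            }
        }
        return unset;
    }

    public static void main(String[] args) {
        // Placeholder values for illustration only.
        String sample = String.join("\n",
                "canal.instance.master.address=127.0.0.1:3306",
                "canal.instance.master.journal.name=binlog.000001",
                "canal.instance.master.position=4",
                "canal.instance.dbUsername=canal",
                "canal.instance.dbPassword=canal");
        System.out.println("unset keys: " + unsetKeys(sample));
    }
}
```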

4. Start the container with the mounts and privileged=true

Bug fix: add --privileged=true to the docker run command.

[root@localhost conf]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

docker.io/mysql latest 8189e588b0e8 2 months ago 564 MB

docker.io/redis latest 7614ae9453d1 18 months ago 113 MB

docker.io/canal/canal-server latest 0c7f1d62a7d8 2 years ago 874 MB

[root@localhost conf]# docker run -itd --name canal -p 11111:11111 --privileged=true -v /usr/local/software/canal/conf/instance.properties:/home/admin/canal-server/conf/example/instance.properties -v /usr/local/software/canal/conf/canal.properties:/home/admin/canal-server/conf/example/canal.properties canal/canal-server

2cd5ef5e0f4ecc1469ce5e920cde23148a1f228ee3611815d84f570d5628fd21

[root@localhost conf]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

2cd5ef5e0f4e canal/canal-server "/alidata/bin/main..." 30 seconds ago Up 29 seconds 9100/tcp, 11110/tcp, 11112/tcp, 0.0.0.0:11111->11111/tcp canal

5. Check the logs

[root@localhost conf]# docker logs canal

Canal starter example

https://kgithub.com/alibaba/canal/wiki/ClientExample

1. Add the dependency

<dependency>
    <groupId>com.alibaba.otter</groupId>
    <artifactId>canal.client</artifactId>
    <version>1.1.0</version>
</dependency>

2. Example code from the official wiki

Create the connection

// Create the connection

final InetSocketAddress HOST = new InetSocketAddress("192.168.111.130",11111);

CanalConnector connector = CanalConnectors.newSingleConnector(HOST, "example", "", "");

package com.tianju.redisDemo.canal;

import java.net.InetSocketAddress;
import java.util.List;

import com.alibaba.otter.canal.client.CanalConnector;
import com.alibaba.otter.canal.client.CanalConnectors;
import com.alibaba.otter.canal.protocol.CanalEntry.Column;
import com.alibaba.otter.canal.protocol.CanalEntry.Entry;
import com.alibaba.otter.canal.protocol.CanalEntry.EntryType;
import com.alibaba.otter.canal.protocol.CanalEntry.EventType;
import com.alibaba.otter.canal.protocol.CanalEntry.RowChange;
import com.alibaba.otter.canal.protocol.CanalEntry.RowData;
import com.alibaba.otter.canal.protocol.Message;

public class CanalDemo {

    public static void main(String[] args) {
        // Create the connection; "example" is the canal destination (instance) name
        final InetSocketAddress HOST = new InetSocketAddress("192.168.111.130", 11111);
        CanalConnector connector = CanalConnectors.newSingleConnector(HOST, "example", "", "");
        int batchSize = 1000;
        int emptyCount = 0;
        try {
            connector.connect();
            connector.subscribe(".*\\..*"); // every table in every schema
            connector.rollback();
            int totalEmptyCount = 120;
            // Stop after 120 consecutive empty polls (about two minutes of idle time)
            while (emptyCount < totalEmptyCount) {
                Message message = connector.getWithoutAck(batchSize); // fetch up to batchSize entries
                long batchId = message.getId();
                int size = message.getEntries().size();
                if (batchId == -1 || size == 0) {
                    emptyCount++;
                    System.out.println("empty count : " + emptyCount);
                    try {
                        Thread.sleep(1000);
                    } catch (InterruptedException e) {
                        // ignored: keep polling
                    }
                } else {
                    emptyCount = 0;
                    // System.out.printf("message[batchId=%s,size=%s] \n", batchId, size);
                    printEntry(message.getEntries());
                }

                connector.ack(batchId); // acknowledge the batch
                // connector.rollback(batchId); // on processing failure, roll the batch back
            }

            System.out.println("empty too many times, exit");
        } finally {
            connector.disconnect();
        }
    }

    private static void printEntry(List<Entry> entries) {
        for (Entry entry : entries) {
            // Skip transaction boundary markers; only row changes are of interest
            if (entry.getEntryType() == EntryType.TRANSACTIONBEGIN || entry.getEntryType() == EntryType.TRANSACTIONEND) {
                continue;
            }

            RowChange rowChange = null;
            try {
                rowChange = RowChange.parseFrom(entry.getStoreValue());
            } catch (Exception e) {
                throw new RuntimeException("ERROR ## parser of eromanga-event has an error , data:" + entry.toString(),
                        e);
            }

            EventType eventType = rowChange.getEventType();
            System.out.println(String.format("================> binlog[%s:%s] , name[%s,%s] , eventType : %s",
                    entry.getHeader().getLogfileName(), entry.getHeader().getLogfileOffset(),
                    entry.getHeader().getSchemaName(), entry.getHeader().getTableName(),
                    eventType));

            for (RowData rowData : rowChange.getRowDatasList()) {
                if (eventType == EventType.DELETE) {
                    printColumn(rowData.getBeforeColumnsList());
                } else if (eventType == EventType.INSERT) {
                    printColumn(rowData.getAfterColumnsList());
                } else {
                    System.out.println("-------> before");
                    printColumn(rowData.getBeforeColumnsList());
                    System.out.println("-------> after");
                    printColumn(rowData.getAfterColumnsList());
                }
            }
        }
    }

    private static void printColumn(List<Column> columns) {
        for (Column column : columns) {
            System.out.println(column.getName() + " : " + column.getValue() + " update=" + column.getUpdated());
        }
    }
}
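The argument passed to `subscribe` is a filter over `schema.table` names written as comma-separated regular expressions, so `.*\\..*` accepts every table in every schema. The sketch below mimics that matching with `java.util.regex` to show how a narrower filter would behave. It does not call canal, and the schema and table names (`redis_demo.user` and so on) are hypothetical.

```java
import java.util.Arrays;
import java.util.List;
import java.util.regex.Pattern;

class TableFilterSketch {

    /** Returns true if "schema.table" fully matches any of the comma-separated patterns. */
    static boolean accepts(String filter, String schema, String table) {
        String fullName = schema + "." + table;
        return Arrays.stream(filter.split(","))
                .map(String::trim)
                .anyMatch(pattern -> Pattern.matches(pattern, fullName));
    }

    public static void main(String[] args) {
        // Hypothetical tables, used only to compare the filters.
        List<String> tables = List.of("redis_demo.user", "redis_demo.orders", "mysql.db");
        List<String> filters = List.of(".*\\..*", "redis_demo\\..*", "redis_demo\\.user");
        for (String filter : filters) {
            for (String name : tables) {
                String[] parts = name.split("\\.", 2);
                System.out.println(filter + " accepts " + name + ": "
                        + accepts(filter, parts[0], parts[1]));
            }
        }
    }
}
```

A filter limited to the one table that feeds the cache would keep unrelated changes from reaching the client at all.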

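3. Output

Redis in a project (5): using Canal in the project

Automatic cache updates

When data in the database changes, the Redis cache should be updated automatically.

Adapting the official example as a @Component

Annotate the class with @Component so that Spring manages it and can inject StringRedisTemplate.

AutoUpdateRedis.java: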

package com.tianju.redisDemo.canal;

import java.net.InetSocketAddress;
import java.util.List;

import com.alibaba.otter.canal.client.CanalConnector;
import com.alibaba.otter.canal.client.CanalConnectors;
import com.alibaba.otter.canal.protocol.CanalEntry.Column;
import com.alibaba.otter.canal.protocol.CanalEntry.Entry;
import com.alibaba.otter.canal.protocol.CanalEntry.EntryType;
import com.alibaba.otter.canal.protocol.CanalEntry.EventType;
import com.alibaba.otter.canal.protocol.CanalEntry.RowChange;
import com.alibaba.otter.canal.protocol.CanalEntry.RowData;
import com.alibaba.otter.canal.protocol.Message;
import lombok.extern.slf4j.Slf4j;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.data.redis.core.StringRedisTemplate;
import org.springframework.stereotype.Component;

@Slf4j
@Component
public class AutoUpdateRedis {

    @Autowired
    private StringRedisTemplate stringRedisTemplate;

    public void run() {
        // Create the connection; "example" is the canal destination (instance) name
        final InetSocketAddress HOST = new InetSocketAddress("192.168.111.130", 11111);
        CanalConnector connector = CanalConnectors.newSingleConnector(HOST, "example", "", "");
        int batchSize = 1000;
        int emptyCount = 0;
        try {
            connector.connect();
            connector.subscribe(".*\\..*"); // every table in every schema
            connector.rollback();
            int totalEmptyCount = 120;
            // Stop after 120 consecutive empty polls (about two minutes of idle time)
            while (emptyCount < totalEmptyCount) {
                Message message = connector.getWithoutAck(batchSize); // fetch up to batchSize entries
                long batchId = message.getId();
                int size = message.getEntries().size();
                if (batchId == -1 || size == 0) {
                    emptyCount++;
                    System.out.println("empty count : " + emptyCount);
                    try {
                        Thread.sleep(1000);
                    } catch (InterruptedException e) {
                        // ignored: keep polling
                    }
                } else {
                    emptyCount = 0;
                    // System.out.printf("message[batchId=%s,size=%s] \n", batchId, size);
                    printEntry(message.getEntries());
                }

                connector.ack(batchId); // acknowledge the batch
                // connector.rollback(batchId); // on processing failure, roll the batch back
            }

            System.out.println("empty too many times, exit");
        } finally {
            connector.disconnect();
        }
    }

    private void printEntry(List<Entry> entries) {
        for (Entry entry : entries) {
            // Skip transaction boundary markers; only row changes are of interest
            if (entry.getEntryType() == EntryType.TRANSACTIONBEGIN || entry.getEntryType() == EntryType.TRANSACTIONEND) {
                continue;
            }

            RowChange rowChange = null;
            try {
                rowChange = RowChange.parseFrom(entry.getStoreValue());
            } catch (Exception e) {
                throw new RuntimeException("ERROR ## parser of eromanga-event has an error , data:" + entry.toString(),
                        e);
            }

            EventType eventType = rowChange.getEventType();
            System.out.println(String.format("================> binlog[%s:%s] , name[%s,%s] , eventType : %s",
                    entry.getHeader().getLogfileName(), entry.getHeader().getLogfileOffset(),
                    entry.getHeader().getSchemaName(), entry.getHeader().getTableName(),
                    eventType));

            for (RowData rowData : rowChange.getRowDatasList()) {
                if (eventType == EventType.DELETE) {
                    printColumn(rowData.getBeforeColumnsList()); // delete
                } else if (eventType == EventType.INSERT) {
                    printColumn(rowData.getAfterColumnsList()); // insert
                } else {
                    // update
                    log.debug("------- before update");
                    updateBefore(rowData.getBeforeColumnsList());
                    log.debug("------- after update");
                    updateAfter(rowData.getAfterColumnsList());
                }
            }
        }
    }

    private static void printColumn(List<Column> columns) {
        for (Column column : columns) {
            System.out.println(column.getName() + " : " + column.getValue() + " update=" + column.getUpdated());
        }
    }

    /**
     * Handles the row image from before the database update.
     *
     * @param columns the column values before the update
     */
    private void updateBefore(List<Column> columns) {
        for (Column column : columns) {
            System.out.println(column.getName() + " : " + column.getValue() + " update=" + column.getUpdated());
            if ("username".equals(column.getName())) {
                // Remove the pre-update username from the cached set
                stringRedisTemplate.opsForSet().remove("usernames", column.getValue());
                break;
            }
        }
    }

    /**
     * Handles the row image from after the database update.
     *
     * @param columns the column values after the update
     */
    private void updateAfter(List<Column> columns) {
        for (Column column : columns) {
            System.out.println(column.getName() + " : " + column.getValue() + " update=" + column.getUpdated());
            // Add the current username even when that column did not change:
            // updateBefore has already removed it, so checking getUpdated() here
            // would drop the username whenever another column of the row is updated.
            if ("username".equals(column.getName())) {
                stringRedisTemplate.opsForSet().add("usernames", column.getValue());
                break;
            }
        }
    }
}
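To see the intended effect on the `usernames` set without a running canal server or Redis, the update branch can be modeled with plain collections. This is only a sketch: a `HashSet` stands in for the Redis set, and `ColumnChange` is a hypothetical stand-in for canal's `Column` (name, value, updated flag). It re-adds the current username even when the flag is false, so a row update that does not touch `username` leaves the set unchanged.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Set;

class UsernameCacheSketch {

    /** Hypothetical stand-in for canal's Column: name, value, and the "updated" flag. */
    static final class ColumnChange {
        final String name;
        final String value;
        final boolean updated;

        ColumnChange(String name, String value, boolean updated) {
            this.name = name;
            this.value = value;
            this.updated = updated;
        }
    }

    // Plays the role of the Redis set "usernames".
    private final Set<String> usernames = new HashSet<>();

    static UsernameCacheSketch withUsers(String... names) {
        UsernameCacheSketch cache = new UsernameCacheSketch();
        cache.usernames.addAll(List.of(names));
        return cache;
    }

    /** Applies one UPDATE row: drop the old username, then add the current one. */
    void onUpdate(List<ColumnChange> before, List<ColumnChange> after) {
        for (ColumnChange column : before) {
            if ("username".equals(column.name)) {
                usernames.remove(column.value);
                break;
            }
        }
        for (ColumnChange column : after) {
            if ("username".equals(column.name)) {
                usernames.add(column.value);
                break;
            }
        }
    }

    Set<String> snapshot() {
        return Set.copyOf(usernames);
    }

    public static void main(String[] args) {
        UsernameCacheSketch cache = withUsers("alice", "bob");
        // Rename alice -> alicia: the username column is flagged as updated.
        cache.onUpdate(
                List.of(new ColumnChange("username", "alice", false)),
                List.of(new ColumnChange("username", "alicia", true)));
        // Change only bob's password: username is present but not flagged.
        cache.onUpdate(
                List.of(new ColumnChange("username", "bob", false),
                        new ColumnChange("password", "old", false)),
                List.of(new ColumnChange("username", "bob", false),
                        new ColumnChange("password", "new", true)));
        System.out.println(cache.snapshot());
    }
}
```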

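Running it from the main class via implements CommandLineRunner

Key points:

- The main class implements CommandLineRunner.

- Starting the main class also starts the canal client. It may look like a second thread, but Spring Boot calls CommandLineRunner.run on the main thread once the application context is ready, so the polling loop occupies that thread until it exits.

RedisApp.java: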

package com.tianju.redisDemo;

import com.tianju.redisDemo.canal.AutoUpdateRedis;
import lombok.extern.slf4j.Slf4j;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.CommandLineRunner;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.scheduling.annotation.EnableScheduling;

@SpringBootApplication
@EnableScheduling // enable scheduled tasks
@Slf4j
public class RedisApp implements CommandLineRunner {

    public static void main(String[] args) {
        SpringApplication.run(RedisApp.class, args);
    }

    @Autowired
    private AutoUpdateRedis autoUpdateRedis;

    // Called by Spring Boot once the application context has started
    @Override
    public void run(String... args) throws Exception {
        log.debug(">>>>> starting automatic cache update");
        autoUpdateRedis.run();
    }
}
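Because `autoUpdateRedis.run()` loops until it has seen 120 empty polls in a row, calling it directly keeps `CommandLineRunner.run` busy for at least that long. One option, sketched here with plain JDK classes and no Spring, is to hand the loop to a single background thread. `BackgroundPoller` and the stand-in task are hypothetical names; in the application the task would be something like `autoUpdateRedis::run`.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

class BackgroundPoller {

    // One dedicated thread, named so it is easy to spot in logs and thread dumps.
    private final ExecutorService executor = Executors.newSingleThreadExecutor(runnable -> {
        Thread thread = new Thread(runnable, "canal-poller");
        thread.setDaemon(true); // do not keep the JVM alive just for polling
        return thread;
    });

    /** Submits the long-running task and returns immediately. */
    void start(Runnable pollingLoop) {
        executor.submit(pollingLoop);
    }

    /** Interrupts the running task and stops accepting new work. */
    void stop() {
        executor.shutdownNow();
    }

    public static void main(String[] args) throws InterruptedException {
        CountDownLatch ran = new CountDownLatch(1);
        BackgroundPoller poller = new BackgroundPoller();
        // Stand-in for the real polling loop.
        poller.start(() -> {
            System.out.println("polling on " + Thread.currentThread().getName());
            ran.countDown();
        });
        System.out.println("caller continues on " + Thread.currentThread().getName());
        ran.await(5, TimeUnit.SECONDS);
        poller.stop();
    }
}
```

A daemon thread is used so the poller never blocks JVM shutdown; in an application with no other non-daemon threads, a non-daemon thread may be the better choice.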

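Testing

Summary

1. Canal: parses MySQL's incremental log (the binlog) and provides incremental data subscription and consumption;

2. Using Canal: MySQL configuration and installing Canal with Docker;

3. Adding the canal.client dependency, the official example, and its output;

4. Project use: automatically updating the set of usernames used by the registration flow;

5. Adapting the official example into a @Component;

6. Having the main class implement CommandLineRunner.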