Python: threading and multiprocessing

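threading has its own separate tutorial. The idea is to save time: split the data into segments, let one thread work on each segment, and start all the threads together so that the total computation time drops.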

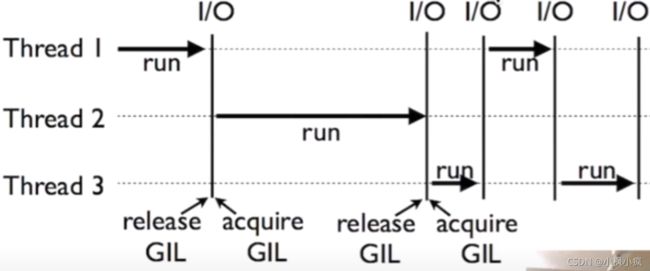

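Because of the GIL (global interpreter lock), threading saves only a limited amount of time. The result of the code below shows that it does not save much for pure computation. Threads are still a good fit for tasks that differ a lot from each other: in a chat program, for example, running the receiving and the sending side in separate threads does improve efficiency.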
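The point about tasks that mostly wait can be illustrated with a minimal sketch. It assumes that `time.sleep` is an acceptable stand-in for a blocking receive or send call (a real chat program would block on a socket instead), and the names `fake_io` and `run_threaded` are hypothetical. A sleeping thread releases the GIL, so the waits overlap instead of adding up.

```python
import threading
import time


def fake_io(delay, results, index):
    """Pretend to wait on the network, then record that the task finished."""
    time.sleep(delay)  # the GIL is released while the thread sleeps
    results[index] = "done"


def run_threaded(n_tasks, delay):
    """Run n_tasks waiting tasks in concurrent threads; return (results, elapsed)."""
    results = [None] * n_tasks
    threads = [
        threading.Thread(target=fake_io, args=(delay, results, i))
        for i in range(n_tasks)
    ]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results, time.perf_counter() - start


if __name__ == "__main__":
    results, elapsed = run_threaded(4, 0.2)
    # Four sequential 0.2 s waits would need about 0.8 s.
    print(results, f"{elapsed:.2f}s")
```

Run one after another, the four waits would take roughly four times the delay. With threads the elapsed time should stay close to a single delay.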

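# threading5: threads are not always more efficient
# GIL (global interpreter lock): the interpreter keeps switching between threads, so it only looks like multithreading
# the code below demonstrates this "fake" multithreading
# multithreading that divides up different kinds of work is still useful
# multi process

import threading
import time
from queue import Queue


def job(l, q):
    # print(l)
    q.put(sum(l))  # hand the partial sum back through the queue


def multithreading(data):
    q = Queue()
    threads = []
    # data=[[1,2,3],[3,4,5],[4,4,4],[5,5,5]]
    for i in range(4):
        # every thread sums the whole list, so the total matches normal(l*4)
        t = threading.Thread(target=job, args=(data, q))
        t.start()
        threads.append(t)
    for thread in threads:
        thread.join()  # wait until all four threads have finished
    results = []
    for _ in range(4):
        results.append(q.get())
    print('result:', sum(results))


def normal(l):
    total = sum(l)
    print(total)


if __name__ == '__main__':
    l = list(range(1000000))
    t1 = time.perf_counter()
    normal(l*4)
    t2 = time.perf_counter()
    print('normal:', t2-t1)
    t1 = time.perf_counter()
    multithreading(l)
    t2 = time.perf_counter()
    print('multithreading:', t2 - t1)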
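The idea of splitting the data into segments can be sketched as follows. This is only an illustration under assumptions: the helper names `split`, `chunk_sum` and `threaded_sum` are hypothetical, and each thread receives its own slice of the list instead of the whole list. For a pure computation like this, the GIL means the sketch is not expected to be much faster than a plain `sum`; it only shows the mechanics.

```python
import threading
from queue import Queue


def chunk_sum(chunk, q):
    """Sum one chunk and put the partial result on the queue."""
    q.put(sum(chunk))


def split(data, n_parts):
    """Split data into n_parts contiguous slices of nearly equal length."""
    size, extra = divmod(len(data), n_parts)
    parts, start = [], 0
    for i in range(n_parts):
        end = start + size + (1 if i < extra else 0)
        parts.append(data[start:end])
        start = end
    return parts


def threaded_sum(data, n_threads=4):
    """Sum data by giving each thread its own slice."""
    q = Queue()
    threads = [
        threading.Thread(target=chunk_sum, args=(part, q))
        for part in split(data, n_threads)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    # One partial sum per thread; arrival order does not matter for a sum.
    return sum(q.get() for _ in threads)


if __name__ == "__main__":
    data = list(range(1_000_000))
    print(threaded_sum(data) == sum(data))
```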

multi_threading [multithreading], a separate tutorial: join() makes the main thread wait until the child thread has finished.

import threading
import time


def thread_job():
    print("This is an added Thread, number is %s" % threading.current_thread())
    print('T1 start')
    for i in range(10):
        time.sleep(0.1)
    print("T1 finish")


# multithreading
def T2_job():
    print("T2 start\n")
    print("T2 finish\n")


def main():
    add_thread = threading.Thread(target=thread_job)
    thread2 = threading.Thread(target=T2_job)
    add_thread.start()
    thread2.start()
    # join() waits for the thread to finish before the following code runs
    # add_thread.join()
    thread2.join()  # controls whether the main function waits for this thread
    # add_thread is not joined, so "all done" can print before "T1 finish"
    print("all done")
    # print(threading.active_count())
    # print(threading.enumerate())
    # print(threading.current_thread())


if __name__ == '__main__':
    main()
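What join() guarantees can be made visible with a small deterministic sketch. It assumes a `threading.Event` as the start signal so that the outcome does not depend on timing. The names `worker` and `demo_join` are hypothetical.

```python
import threading


def worker(go, log):
    """Wait for the go signal, then record that the work is finished."""
    go.wait()
    log.append("worker finished")


def demo_join():
    """Return what the main thread observed before and after join()."""
    go = threading.Event()
    log = []
    t = threading.Thread(target=worker, args=(go, log))
    t.start()
    before = list(log)  # the worker is still blocked on go.wait()
    go.set()            # let the worker proceed
    t.join()            # the main thread blocks here until the worker ends
    after = list(log)
    return before, after, t.is_alive()


if __name__ == "__main__":
    print(demo_join())
```

Only after join() returns can the main thread rely on the worker's result being present.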

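Splitting work of the same kind into sub-tasks improves efficiency.

multi_process [multi-core]: using multiple processes

Pay attention to the queue's put() call: a process cannot return a value directly, so the result is passed back through the queue.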

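import multiprocessing as mp


def job(q):
    res = 0
    for i in range(1000):
        res += i + i**2 + i**3
    q.put(res)  # pass the result back to the parent process


if __name__ == '__main__':
    q = mp.Queue()  # created under the main guard so child processes do not recreate it on import
    p1 = mp.Process(target=job, args=(q,))
    p2 = mp.Process(target=job, args=(q,))
    p1.start()
    p2.start()
    p1.join()
    p2.join()  # the original joined p1 twice and never waited for p2
    res1 = q.get()
    res2 = q.get()
    print(res1+res2)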
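The role of put() can be sketched in a slightly more general form. This is a sketch under assumptions: `square_sum` and `run_workers` are hypothetical names, and the 30 second timeout is an arbitrary safety margin. Here the results are fetched before the processes are joined, which the multiprocessing documentation recommends when a child has put data on a queue.

```python
import multiprocessing as mp


def square_sum(n, q):
    """Compute a result and hand it back through the queue.

    Returning the value would not help: Process discards the target's
    return value, so put() is the channel back to the parent.
    """
    q.put(sum(i * i for i in range(n)))


def run_workers(sizes):
    """Start one process per size and collect one result per process."""
    q = mp.Queue()
    procs = [mp.Process(target=square_sum, args=(n, q)) for n in sizes]
    for p in procs:
        p.start()
    # Fetch results before joining; exactly one get() per put().
    results = [q.get(timeout=30) for _ in procs]
    for p in procs:
        p.join()
    return sorted(results)  # arrival order is not guaranteed


if __name__ == "__main__":
    print(run_workers([10, 100]))
```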

Timing the multiprocess version

import multiprocessing as mp
import threading as td
import time


def job(q):
    res = 0
    for i in range(10000000):
        res += i + i**2 + i**3
    q.put(res)


def multcore():
    q = mp.Queue()
    p1 = mp.Process(target=job, args=(q,))
    p2 = mp.Process(target=job, args=(q,))
    p1.start()
    p2.start()
    p1.join()
    p2.join()
    res1 = q.get()
    res2 = q.get()
    print(res1+res2)


def normal():
    res = 0
    for _ in range(2):  # the same amount of work as two jobs, done serially
        for i in range(10000000):
            res += i + i ** 2 + i ** 3
    print("normal:", res)


def multithread():
    q = mp.Queue()  # mp.Queue also works between threads; queue.Queue would be enough here
    t1 = td.Thread(target=job, args=(q,))
    t2 = td.Thread(target=job, args=(q,))
    t1.start()
    t2.start()
    t1.join()
    t2.join()
    res1 = q.get()
    res2 = q.get()
    print('multithread', res1+res2)


if __name__ == '__main__':
    t1 = time.perf_counter()
    multcore()
    t2 = time.perf_counter()
    print("multicore", t2-t1)
    s1 = time.perf_counter()
    normal()
    s2 = time.perf_counter()
    print("normal", s2-s1)
    m1 = time.perf_counter()
    multithread()
    m2 = time.perf_counter()
    print("multithread", m2-m1)

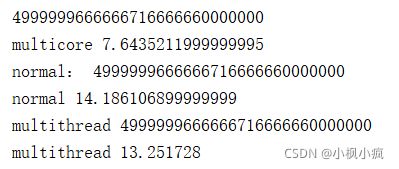

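The measured times are shown in the figure above.

Conclusion: when the amount of computation is large enough,

multiprocessing is faster than both multithreading and normal (serial) execution.

In this test, multithreading (threading) was only slightly faster than the normal run. For pure computation the GIL prevents threads from executing Python code in parallel, so the two are expected to stay close.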
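Single timing runs like the ones above are noisy, and the result depends on the machine and the number of cores. A minimal sketch of a reusable timing helper is given here. It assumes that the best of a few repeats is a reasonable figure to compare. The names `timed` and `cpu_job` are hypothetical, and `cpu_job` returns its value instead of using a queue.

```python
import time


def timed(fn, *args, repeats=3):
    """Call fn(*args) `repeats` times; return (last result, best elapsed seconds)."""
    best = float("inf")
    result = None
    for _ in range(repeats):
        start = time.perf_counter()
        result = fn(*args)
        best = min(best, time.perf_counter() - start)
    return result, best


def cpu_job(n):
    """Same arithmetic as the job in the test above, but returning the value."""
    res = 0
    for i in range(n):
        res += i + i ** 2 + i ** 3
    return res


if __name__ == "__main__":
    value, seconds = timed(cpu_job, 100_000)
    print(value, f"{seconds:.4f}s")
```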

Process pools and the concept of allocating shared memory

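import multiprocessing as mp

# a value shared between processes ('d' is a double)
value = mp.Value('d', 1)
# array=mp.Array('i',[1,2,3])


def job(x):
    return x*x


def multicore():
    # the with block shuts the pool down once all results have been collected
    with mp.Pool(processes=2) as pool:
        # unlike Process, where a result can only come back through put(), a pool job can return its value
        # map spreads the iterable over the worker processes and returns the results in order
        res = pool.map(job, range(10))
        print(res)
        # apply_async submits one call at a time, so iterating takes more work
        res = pool.apply_async(job, (2,))
        print(res.get())
        multi_res = [pool.apply_async(job, (i,)) for i in range(100)]
        print([r.get() for r in multi_res])


if __name__ == '__main__':
    multicore()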
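The shared-memory objects mentioned in this section can be explored without starting any process. This is a minimal sketch: `bump` is a hypothetical helper, and everything runs in the parent process only. To share the objects with a child, they would be passed in the `args` of `mp.Process`.

```python
import multiprocessing as mp

# Type codes follow the array module: 'd' is a C double, 'i' is a C int.
value = mp.Value("d", 1.0)
array = mp.Array("i", [1, 2, 3])


def bump(v, arr):
    """Modify the shared objects in place while holding their built-in locks."""
    with v.get_lock():
        v.value += 0.5
    with arr.get_lock():
        for i in range(len(arr)):
            arr[i] *= 2


if __name__ == "__main__":
    bump(value, array)
    print(value.value, array[:])
```

By default both `mp.Value` and `mp.Array` come with their own lock, reachable through `get_lock()`, so a separate `mp.Lock` is optional for simple cases.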

Shared memory and process locks

import time
import multiprocessing as mp


def job(v, num, l):
    l.acquire()  # hold the lock for the whole loop so the two processes do not interleave
    for _ in range(10):
        time.sleep(0.1)
        v.value += num
        print(v.value)
    l.release()


def multi_core():
    l = mp.Lock()
    v = mp.Value('i', 0)  # shared integer, starting at 0
    p1 = mp.Process(target=job, args=(v, 1, l))
    p2 = mp.Process(target=job, args=(v, 3, l))
    p1.start()
    p2.start()
    p1.join()
    p2.join()


if __name__ == '__main__':
    multi_core()
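The same locking idea can also be written with a `with` block, which releases the lock even if the body raises an exception. This is a sketch with a different design choice from the code above: it locks each single increment instead of the whole loop, so the two processes may take turns. The names `add` and `run` are hypothetical.

```python
import multiprocessing as mp


def add(v, num, lock, times):
    """Add num to the shared counter `times` times under the lock."""
    for _ in range(times):
        with lock:  # acquire on entry, release on exit, even on an exception
            v.value += num


def run(times=1000):
    """Let two processes update one counter; return the final value."""
    lock = mp.Lock()
    v = mp.Value("i", 0)
    procs = [
        mp.Process(target=add, args=(v, 1, lock, times)),
        mp.Process(target=add, args=(v, 3, lock, times)),
    ]
    for p in procs:
        p.start()
    for p in procs:
        p.join()
    return v.value


if __name__ == "__main__":
    # Each increment is protected by the lock, so no update is lost.
    print(run())
```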