client-go源码

注 本文源码基于分支release-1.19

client

restclient

RESTClient是最基础的,相当于的底层基础结构,封装底层http rest请求。可以直接通过 是RESTClient提供的RESTful方法如Get(),Put(),Post(),Delete()进行交互,同时支持Json 和 protobuf。支持所有原生资源和crd,为了更为优雅的处理,需要进一步封装,通过Clientset封装RESTClient,然后再对外提供接口和服务。

源码示例

type RESTClient struct {

// base is the root URL for all invocations of the client

base *url.URL

// versionedAPIPath is a path segment connecting the base URL to the resource root

versionedAPIPath string

// content describes how a RESTClient encodes and decodes responses.

content ClientContentConfig

// creates BackoffManager that is passed to requests.

createBackoffMgr func() BackoffManager

// rateLimiter is shared among all requests created by this client unless specifically

// overridden.

rateLimiter flowcontrol.RateLimiter

// warningHandler is shared among all requests created by this client.

// If not set, defaultWarningHandler is used.

warningHandler WarningHandler

// Set specific behavior of the client. If not set http.DefaultClient will be used.

Client *http.Client

}

func (c *RESTClient) Verb(verb string) *Request {

return NewRequest(c).Verb(verb)

}

// Post begins a POST request. Short for c.Verb("POST").

func (c *RESTClient) Post() *Request {

return c.Verb("POST")

}

// Put begins a PUT request. Short for c.Verb("PUT").

func (c *RESTClient) Put() *Request {

return c.Verb("PUT")

}

// Patch begins a PATCH request. Short for c.Verb("Patch").

func (c *RESTClient) Patch(pt types.PatchType) *Request {

return c.Verb("PATCH").SetHeader("Content-Type", string(pt))

}

// Get begins a GET request. Short for c.Verb("GET").

func (c *RESTClient) Get() *Request {

return c.Verb("GET")

}

// Delete begins a DELETE request. Short for c.Verb("DELETE").

func (c *RESTClient) Delete() *Request {

return c.Verb("DELETE")

}

clientset

Clientset是调用Kubernetes资源对象最常用的client,可以操作所有的资源对象,包含RESTClient。需要指定Group、指定Version,然后根据Resource获取,每种内置的gvk都有对应client-go中一个informer、clientset、list,在staging/src/k8s.io/client-go/路径中。自定义的资源的clientset需要通过codegen自动生成。最优雅的客户端方式。

使用示例:

func main() {

var kubeconfig *string

if home := homedir.HomeDir(); home != "" {

kubeconfig = flag.String("kubeconfig", filepath.Join(home, ".kube", "config"), "(optional) absolute path to the kubeconfig file")

} else {

kubeconfig = flag.String("kubeconfig", "", "absolute path to the kubeconfig file")

}

flag.Parse()

config, err := clientcmd.BuildConfigFromFlags("", *kubeconfig)

if err != nil {

panic(err)

}

clientset, err := kubernetes.NewForConfig(config)

if err != nil {

panic(err)

}

deploymentsClient := clientset.AppsV1().Deployments(apiv1.NamespaceDefault)

deployment := &appsv1.Deployment{

ObjectMeta: metav1.ObjectMeta{

Name: "demo-deployment",

},

Spec: appsv1.DeploymentSpec{

Replicas: int32Ptr(2),

Selector: &metav1.LabelSelector{

MatchLabels: map[string]string{

"app": "demo",

},

},

Template: apiv1.PodTemplateSpec{

ObjectMeta: metav1.ObjectMeta{

Labels: map[string]string{

"app": "demo",

},

},

Spec: apiv1.PodSpec{

Containers: []apiv1.Container{

{

Name: "web",

Image: "nginx:1.12",

Ports: []apiv1.ContainerPort{

{

Name: "http",

Protocol: apiv1.ProtocolTCP,

ContainerPort: 80,

},

},

},

},

},

},

},

}

// Create Deployment

fmt.Println("Creating deployment...")

result, err := deploymentsClient.Create(context.TODO(), deployment, metav1.CreateOptions{})

if err != nil {

panic(err)

}

fmt.Printf("Created deployment %q.\n", result.GetObjectMeta().GetName())

// Update Deployment

prompt()

fmt.Println("Updating deployment...")

retryErr := retry.RetryOnConflict(retry.DefaultRetry, func() error {

// Retrieve the latest version of Deployment before attempting update

// RetryOnConflict uses exponential backoff to avoid exhausting the apiserver

result, getErr := deploymentsClient.Get(context.TODO(), "demo-deployment", metav1.GetOptions{})

if getErr != nil {

panic(fmt.Errorf("Failed to get latest version of Deployment: %v", getErr))

}

result.Spec.Replicas = int32Ptr(1) // reduce replica count

result.Spec.Template.Spec.Containers[0].Image = "nginx:1.13" // change nginx version

_, updateErr := deploymentsClient.Update(context.TODO(), result, metav1.UpdateOptions{})

return updateErr

})

if retryErr != nil {

panic(fmt.Errorf("Update failed: %v", retryErr))

}

fmt.Println("Updated deployment...")

// List Deployments

prompt()

fmt.Printf("Listing deployments in namespace %q:\n", apiv1.NamespaceDefault)

list, err := deploymentsClient.List(context.TODO(), metav1.ListOptions{})

if err != nil {

panic(err)

}

for _, d := range list.Items {

fmt.Printf(" * %s (%d replicas)\n", d.Name, *d.Spec.Replicas)

}

// Delete Deployment

prompt()

fmt.Println("Deleting deployment...")

deletePolicy := metav1.DeletePropagationForeground

if err := deploymentsClient.Delete(context.TODO(), "demo-deployment", metav1.DeleteOptions{

PropagationPolicy: &deletePolicy,

}); err != nil {

panic(err)

}

fmt.Println("Deleted deployment.")

}

func prompt() {

fmt.Printf("-> Press Return key to continue.")

scanner := bufio.NewScanner(os.Stdin)

for scanner.Scan() {

break

}

if err := scanner.Err(); err != nil {

panic(err)

}

fmt.Println()

}

func int32Ptr(i int32) *int32 { return &i }

dynamicclient

Dynamic client 是一种动态的 客户端,它能处理 kubernetes 所有的资源包括内置资源和crd资源。不同于 clientset,dynamic client 返回的对象是一个 map[string]interface{}数据结构,如果一个 controller 中需要控制所有的 API,可以使用dynamic client,目前它在 garbage collector 和 namespace controller中被使用。只支持JSON。

使用示例:

func main() {

var kubeconfig *string

if home := homedir.HomeDir(); home != "" {

kubeconfig = flag.String("kubeconfig", filepath.Join(home, ".kube", "config"), "(optional) absolute path to the kubeconfig file")

} else {

kubeconfig = flag.String("kubeconfig", "", "absolute path to the kubeconfig file")

}

flag.Parse()

namespace := "default"

config, err := clientcmd.BuildConfigFromFlags("", *kubeconfig)

if err != nil {

panic(err)

}

client, err := dynamic.NewForConfig(config)

if err != nil {

panic(err)

}

deploymentRes := schema.GroupVersionResource{Group: "apps", Version: "v1", Resource: "deployments"}

deployment := &unstructured.Unstructured{

Object: map[string]interface{}{

"apiVersion": "apps/v1",

"kind": "Deployment",

"metadata": map[string]interface{}{

"name": "demo-deployment",

},

"spec": map[string]interface{}{

"replicas": 2,

"selector": map[string]interface{}{

"matchLabels": map[string]interface{}{

"app": "demo",

},

},

"template": map[string]interface{}{

"metadata": map[string]interface{}{

"labels": map[string]interface{}{

"app": "demo",

},

},

"spec": map[string]interface{}{

"containers": []map[string]interface{}{

{

"name": "web",

"image": "nginx:1.12",

"ports": []map[string]interface{}{

{

"name": "http",

"protocol": "TCP",

"containerPort": 80,

},

},

},

},

},

},

},

},

}

// Create Deployment

fmt.Println("Creating deployment...")

result, err := client.Resource(deploymentRes).Namespace(namespace).Create(context.TODO(), deployment, metav1.CreateOptions{})

if err != nil {

panic(err)

}

fmt.Printf("Created deployment %q.\n", result.GetName())

// Update Deployment

prompt()

fmt.Println("Updating deployment...")

retryErr := retry.RetryOnConflict(retry.DefaultRetry, func() error {

// Retrieve the latest version of Deployment before attempting update

// RetryOnConflict uses exponential backoff to avoid exhausting the apiserver

result, getErr := client.Resource(deploymentRes).Namespace(namespace).Get(context.TODO(), "demo-deployment", metav1.GetOptions{})

if getErr != nil {

panic(fmt.Errorf("failed to get latest version of Deployment: %v", getErr))

}

// update replicas to 1

if err := unstructured.SetNestedField(result.Object, int64(1), "spec", "replicas"); err != nil {

panic(fmt.Errorf("failed to set replica value: %v", err))

}

// extract spec containers

containers, found, err := unstructured.NestedSlice(result.Object, "spec", "template", "spec", "containers")

if err != nil || !found || containers == nil {

panic(fmt.Errorf("deployment containers not found or error in spec: %v", err))

}

// update container[0] image

if err := unstructured.SetNestedField(containers[0].(map[string]interface{}), "nginx:1.13", "image"); err != nil {

panic(err)

}

if err := unstructured.SetNestedField(result.Object, containers, "spec", "template", "spec", "containers"); err != nil {

panic(err)

}

_, updateErr := client.Resource(deploymentRes).Namespace(namespace).Update(context.TODO(), result, metav1.UpdateOptions{})

return updateErr

})

if retryErr != nil {

panic(fmt.Errorf("update failed: %v", retryErr))

}

fmt.Println("Updated deployment...")

// List Deployments

prompt()

fmt.Printf("Listing deployments in namespace %q:\n", apiv1.NamespaceDefault)

list, err := client.Resource(deploymentRes).Namespace(namespace).List(context.TODO(), metav1.ListOptions{})

if err != nil {

panic(err)

}

for _, d := range list.Items {

replicas, found, err := unstructured.NestedInt64(d.Object, "spec", "replicas")

if err != nil || !found {

fmt.Printf("Replicas not found for deployment %s: error=%s", d.GetName(), err)

continue

}

fmt.Printf(" * %s (%d replicas)\n", d.GetName(), replicas)

}

// Delete Deployment

prompt()

fmt.Println("Deleting deployment...")

deletePolicy := metav1.DeletePropagationForeground

deleteOptions := metav1.DeleteOptions{

PropagationPolicy: &deletePolicy,

}

if err := client.Resource(deploymentRes).Namespace(namespace).Delete(context.TODO(), "demo-deployment", deleteOptions); err != nil {

panic(err)

}

fmt.Println("Deleted deployment.")

}

func prompt() {

fmt.Printf("-> Press Return key to continue.")

scanner := bufio.NewScanner(os.Stdin)

for scanner.Scan() {

break

}

if err := scanner.Err(); err != nil {

panic(err)

}

fmt.Println()

}

discoveryclient

DiscoveryClient是发现客户端,主要用于发现Kubernetes API Server所支持的资源组、资源版本、资源信息gvk信息。除此之外,还可以将这些信息存储到本地磁盘,用户本地缓存cache memory,以减轻对Kubernetes API Server访问的压力。kubectl的api-versions和api-resources命令查看支持的api资源信息的输出是通过DiscoversyClient实现的。

源码位置

源码

目录

-

deprecated 废弃

-

discovery:gvk资源的客户端

-

dynamic:动态客户端,支持任何资源类型 map[string]interface{}

-

informers:内置 各种api资源的Informer接口

-

kubernetes: group下每种资源不同api资源的 clientset客户端

-

listers:每种资源的lister,在上述informers中使用

-

metadata

-

pkg

-

plugin

-

rest:最底层的restclient 封装http请求

-

restmapper

-

scale

-

tools:主要包括cache等。。。见下

-

transport

-

util:包括自定义控制器用的各种队列。。。见下

rest

-

fake

-

watch:

-

client.go:拼装restclient结构

-

config.go:配置包括:http请求、gv、tls等

-

plugin.go

-

request.go:封装http rest请求 restclient的底层调用

-

transport.go

-

url_utils.go

-

urlbackoff.go

-

warnings.go:警告 放到http header Warning

restclient的底层核心实现request.go

// POST PUT DELETE ...

func (r *Request) request(ctx context.Context, fn func(*http.Request, *http.Response)) error {

//Metrics for total request latency

start := time.Now()

defer func() {

metrics.RequestLatency.Observe(r.verb, r.finalURLTemplate(), time.Since(start))

}()

if r.err != nil {

klog.V(4).Infof("Error in request: %v", r.err)

return r.err

}

if err := r.requestPreflightCheck(); err != nil {

return err

}

client := r.c.Client

if client == nil {

client = http.DefaultClient

}

// Throttle the first try before setting up the timeout configured on the

// client. We don't want a throttled client to return timeouts to callers

// before it makes a single request.

if err := r.tryThrottle(ctx); err != nil {

return err

}

if r.timeout > 0 {

var cancel context.CancelFunc

ctx, cancel = context.WithTimeout(ctx, r.timeout)

defer cancel()

}

// Right now we make about ten retry attempts if we get a Retry-After response.

retries := 0

for {

url := r.URL().String()

req, err := http.NewRequest(r.verb, url, r.body)

if err != nil {

return err

}

req = req.WithContext(ctx)

req.Header = r.headers

r.backoff.Sleep(r.backoff.CalculateBackoff(r.URL()))

if retries > 0 {

// We are retrying the request that we already send to apiserver

// at least once before.

// This request should also be throttled with the client-internal rate limiter.

if err := r.tryThrottle(ctx); err != nil {

return err

}

}

resp, err := client.Do(req)

updateURLMetrics(r, resp, err)

if err != nil {

r.backoff.UpdateBackoff(r.URL(), err, 0)

} else {

r.backoff.UpdateBackoff(r.URL(), err, resp.StatusCode)

}

if err != nil {

// "Connection reset by peer" or "apiserver is shutting down" are usually a transient errors.

// Thus in case of "GET" operations, we simply retry it.

// We are not automatically retrying "write" operations, as

// they are not idempotent.

if r.verb != "GET" {

return err

}

// For connection errors and apiserver shutdown errors retry.

if net.IsConnectionReset(err) || net.IsProbableEOF(err) {

// For the purpose of retry, we set the artificial "retry-after" response.

// TODO: Should we clean the original response if it exists?

resp = &http.Response{

StatusCode: http.StatusInternalServerError,

Header: http.Header{"Retry-After": []string{"1"}},

Body: ioutil.NopCloser(bytes.NewReader([]byte{})),

}

} else {

return err

}

}

done := func() bool {

// Ensure the response body is fully read and closed

// before we reconnect, so that we reuse the same TCP

// connection.

defer func() {

const maxBodySlurpSize = 2 << 10

if resp.ContentLength <= maxBodySlurpSize {

io.Copy(ioutil.Discard, &io.LimitedReader{R: resp.Body, N: maxBodySlurpSize})

}

resp.Body.Close()

}()

retries++

if seconds, wait := checkWait(resp); wait && retries <= r.maxRetries {

if seeker, ok := r.body.(io.Seeker); ok && r.body != nil {

_, err := seeker.Seek(0, 0)

if err != nil {

klog.V(4).Infof("Could not retry request, can't Seek() back to beginning of body for %T", r.body)

fn(req, resp)

return true

}

}

klog.V(4).Infof("Got a Retry-After %ds response for attempt %d to %v", seconds, retries, url)

r.backoff.Sleep(time.Duration(seconds) * time.Second)

return false

}

fn(req, resp)

return true

}()

if done {

return nil

}

}

}

// watch

func (r *Request) Watch(ctx context.Context) (watch.Interface, error) {

// We specifically don't want to rate limit watches, so we

// don't use r.rateLimiter here.

if r.err != nil {

return nil, r.err

}

url := r.URL().String()

req, err := http.NewRequest(r.verb, url, r.body)

if err != nil {

return nil, err

}

req = req.WithContext(ctx)

req.Header = r.headers

client := r.c.Client

if client == nil {

client = http.DefaultClient

}

r.backoff.Sleep(r.backoff.CalculateBackoff(r.URL()))

resp, err := client.Do(req)

updateURLMetrics(r, resp, err)

if r.c.base != nil {

if err != nil {

r.backoff.UpdateBackoff(r.c.base, err, 0)

} else {

r.backoff.UpdateBackoff(r.c.base, err, resp.StatusCode)

}

}

if err != nil {

// The watch stream mechanism handles many common partial data errors, so closed

// connections can be retried in many cases.

if net.IsProbableEOF(err) || net.IsTimeout(err) {

return watch.NewEmptyWatch(), nil

}

return nil, err

}

if resp.StatusCode != http.StatusOK {

defer resp.Body.Close()

if result := r.transformResponse(resp, req); result.err != nil {

return nil, result.err

}

return nil, fmt.Errorf("for request %s, got status: %v", url, resp.StatusCode)

}

contentType := resp.Header.Get("Content-Type")

mediaType, params, err := mime.ParseMediaType(contentType)

if err != nil {

klog.V(4).Infof("Unexpected content type from the server: %q: %v", contentType, err)

}

objectDecoder, streamingSerializer, framer, err := r.c.content.Negotiator.StreamDecoder(mediaType, params)

if err != nil {

return nil, err

}

handleWarnings(resp.Header, r.warningHandler)

frameReader := framer.NewFrameReader(resp.Body)

watchEventDecoder := streaming.NewDecoder(frameReader, streamingSerializer)

return watch.NewStreamWatcher(

restclientwatch.NewDecoder(watchEventDecoder, objectDecoder),

// use 500 to indicate that the cause of the error is unknown - other error codes

// are more specific to HTTP interactions, and set a reason

errors.NewClientErrorReporter(http.StatusInternalServerError, r.verb, "ClientWatchDecoding"),

), nil

}

tools

-

auth

-

cache:informer的实现 见下

-

clientcmd

-

events

-

leaderelection

-

metrics:指标 度量告警性能?

-

pager:分页器

-

portforward

-

record

-

reference

-

remotecommand

-

watch

util

-

cert

-

certificate

-

connrotation

-

exec

-

flowcontrol

-

homedir

-

jsonpath

-

keyutil

-

retry

-

testing

-

workqueue 各种队列的实现 见下

workqueue

概念

client-go 中实现了多种队列,包括通用队列、延时队列、限速队列 。

-

default_rate_limiters.go

-

default_rate_limiters_test.go

-

delaying_queue.go

-

delaying_queue_test.go

-

doc.go

-

main_test.go

-

metrics.go

-

metrics_test.go

-

parallelizer.go

-

parallelizer_test.go

-

queue.go

-

queue_test.go

-

rate_limiting_queue.go

-

rate_limiting_queue_test.go

延时队列与优先级队列

延迟队列可以看成一个使用时间作为优先级的优先级队列。

优先级队列虽然也叫队列,但是和普通的队列还是有差别的。普通队列出队顺序只取决于入队顺序,而优先级队列的出队顺序总是按照元素自身的优先级。换句话说,优先级队列是一个自动排序的队列。元素自身的优先级可以根据入队时间,也可以根据其他因素来确定,因此非常灵活。优先级队列的内部实现可以通过有序数组、无序数组和堆等。

限速队列

限速队列是扩展的延迟队列 。用延迟队列的特性,延迟某个元素的插入时间来达到限速的目的。

延迟队列

引用:https://mp.weixin.qq.com/s?__biz=MzU4NjcwMTk1Ng==&mid=2247483864&idx=1&sn=2fe734d9913936c59e0b2dd0d6ad0403&chksm=fdf60c33ca8185253f9a9000dcc790bce459beaef96a49626489ffd8f34a7e6c0a4eaadbe3bb&scene=21#wechat_redirect

基础通用队列

// 通用队列接口定义

type Interface interface {

Add(item interface{}) // 向队列中添加一个元素

Len() int // 获取队列长度

Get() (item interface{}, shutdown bool) // 获取队列头部的元素,第二个返回值表示队列是否已经关闭

Done(item interface{}) // 标记队列中元素已经处理完

ShutDown() // 关闭队列

ShuttingDown() bool // 队列是否正在关闭

}

// New constructs a new work queue (see the package comment).

func New() *Type {

return NewNamed("")

}

func NewNamed(name string) *Type {

rc := clock.RealClock{}

return newQueue(

rc,

globalMetricsFactory.newQueueMetrics(name, rc),

defaultUnfinishedWorkUpdatePeriod,

)

}

func newQueue(c clock.Clock, metrics queueMetrics, updatePeriod time.Duration) *Type {

t := &Type{

clock: c,

dirty: set{},

processing: set{},

cond: sync.NewCond(&sync.Mutex{}),

metrics: metrics,

unfinishedWorkUpdatePeriod: updatePeriod,

}

go t.updateUnfinishedWorkLoop()

return t

}

const defaultUnfinishedWorkUpdatePeriod = 500 * time.Millisecond

// Type is a work queue (see the package comment).

type Type struct {

// queue defines the order in which we will work on items. Every

// element of queue should be in the dirty set and not in the

// processing set.

queue []t

// dirty defines all of the items that need to be processed.

dirty set

// Things that are currently being processed are in the processing set.

// These things may be simultaneously in the dirty set. When we finish

// processing something and remove it from this set, we'll check if

// it's in the dirty set, and if so, add it to the queue.

processing set

cond *sync.Cond

shuttingDown bool

metrics queueMetrics

unfinishedWorkUpdatePeriod time.Duration

clock clock.Clock

}

type empty struct{}

type t interface{}

type set map[t]empty

func (s set) has(item t) bool {

_, exists := s[item]

return exists

}

func (s set) insert(item t) {

s[item] = empty{}

}

func (s set) delete(item t) {

delete(s, item)

}

// Add marks item as needing processing.

func (q *Type) Add(item interface{}) {

q.cond.L.Lock()

defer q.cond.L.Unlock()

if q.shuttingDown {

return

}

if q.dirty.has(item) {

return

}

q.metrics.add(item)

q.dirty.insert(item)

if q.processing.has(item) {

return

}

q.queue = append(q.queue, item)

q.cond.Signal()

}

// Len returns the current queue length, for informational purposes only. You

// shouldn't e.g. gate a call to Add() or Get() on Len() being a particular

// value, that can't be synchronized properly.

func (q *Type) Len() int {

q.cond.L.Lock()

defer q.cond.L.Unlock()

return len(q.queue)

}

// Get blocks until it can return an item to be processed. If shutdown = true,

// the caller should end their goroutine. You must call Done with item when you

// have finished processing it.

func (q *Type) Get() (item interface{}, shutdown bool) {

q.cond.L.Lock()

defer q.cond.L.Unlock()

for len(q.queue) == 0 && !q.shuttingDown {

q.cond.Wait()

}

if len(q.queue) == 0 {

// We must be shutting down.

return nil, true

}

item, q.queue = q.queue[0], q.queue[1:]

q.metrics.get(item)

q.processing.insert(item)

q.dirty.delete(item)

return item, false

}

// Done marks item as done processing, and if it has been marked as dirty again

// while it was being processed, it will be re-added to the queue for

// re-processing.

func (q *Type) Done(item interface{}) {

q.cond.L.Lock()

defer q.cond.L.Unlock()

q.metrics.done(item)

q.processing.delete(item)

if q.dirty.has(item) {

q.queue = append(q.queue, item)

q.cond.Signal()

}

}

// ShutDown will cause q to ignore all new items added to it. As soon as the

// worker goroutines have drained the existing items in the queue, they will be

// instructed to exit.

func (q *Type) ShutDown() {

q.cond.L.Lock()

defer q.cond.L.Unlock()

q.shuttingDown = true

q.cond.Broadcast()

}

func (q *Type) ShuttingDown() bool {

q.cond.L.Lock()

defer q.cond.L.Unlock()

return q.shuttingDown

}

func (q *Type) updateUnfinishedWorkLoop() {

t := q.clock.NewTicker(q.unfinishedWorkUpdatePeriod)

defer t.Stop()

for range t.C() {

if !func() bool {

q.cond.L.Lock()

defer q.cond.L.Unlock()

if !q.shuttingDown {

q.metrics.updateUnfinishedWork()

return true

}

return false

}() {

return

}

}

}

延时队列实现

// DelayingInterface is an Interface that can Add an item at a later time. This makes it easier to

// requeue items after failures without ending up in a hot-loop.

type DelayingInterface interface {

Interface

// AddAfter adds an item to the workqueue after the indicated duration has passed

AddAfter(item interface{}, duration time.Duration)

}

// 对外暴露的实例化函数

// NewDelayingQueue constructs a new workqueue with delayed queuing ability

func NewDelayingQueue() DelayingInterface {

return NewDelayingQueueWithCustomClock(clock.RealClock{}, "")

}

// NewDelayingQueueWithCustomQueue constructs a new workqueue with ability to

// inject custom queue Interface instead of the default one

func NewDelayingQueueWithCustomQueue(q Interface, name string) DelayingInterface {

return newDelayingQueue(clock.RealClock{}, q, name)

}

// NewNamedDelayingQueue constructs a new named workqueue with delayed queuing ability

func NewNamedDelayingQueue(name string) DelayingInterface {

return NewDelayingQueueWithCustomClock(clock.RealClock{}, name)

}

// NewDelayingQueueWithCustomClock constructs a new named workqueue

// with ability to inject real or fake clock for testing purposes

func NewDelayingQueueWithCustomClock(clock clock.Clock, name string) DelayingInterface {

return newDelayingQueue(clock, NewNamed(name), name)

}

func newDelayingQueue(clock clock.Clock, q Interface, name string) *delayingType {

ret := &delayingType{

Interface: q,

clock: clock,

heartbeat: clock.NewTicker(maxWait),

stopCh: make(chan struct{}),

waitingForAddCh: make(chan *waitFor, 1000),

metrics: newRetryMetrics(name),

}

// 后台循环任务处理延时消息的消费和添加

go ret.waitingLoop()

return ret

}

// delayingType wraps an Interface and provides delayed re-enquing

type delayingType struct {

// 一个通用队列

Interface

// clock tracks time for delayed firing

clock clock.Clock

// stopCh lets us signal a shutdown to the waiting loop

stopCh chan struct{}

// stopOnce guarantees we only signal shutdown a single time

stopOnce sync.Once

// heartbeat ensures we wait no more than maxWait before firing

heartbeat clock.Ticker

// waitingForAddCh is a buffered channel that feeds waitingForAdd

//buffered channel,将延迟添加的元素封装成 waitFor 放到通道中,当到了指定的时间后就将元素添加到通用队列中去进行处理,还没有到时间的话就放到这个缓冲通道中

waitingForAddCh chan *waitFor

// metrics counts the number of retries

metrics retryMetrics

}

// waitFor holds the data to add and the time it should be added

type waitFor struct {

data t

readyAt time.Time

// index in the priority queue (heap)

index int

}

// waitForPriorityQueue implements a priority queue for waitFor items.

//

// waitForPriorityQueue implements heap.Interface. The item occurring next in

// time (i.e., the item with the smallest readyAt) is at the root (index 0).

// Peek returns this minimum item at index 0. Pop returns the minimum item after

// it has been removed from the queue and placed at index Len()-1 by

// container/heap. Push adds an item at index Len(), and container/heap

// percolates it into the correct location.

// 把需要延迟的元素放到一个队列中,然后在队列中按照元素的延时添加时间(readyAt)从小到大排序

// 其实这个优先级队列就是实现的 go内置的 container/heap/heap.go 中的 Interface 接口。heap.Init时会根据实现的函数排序。最终实现的队列就是 waitForPriorityQueue 这个集合是有序的,按照时间从小到大进行排列

type waitForPriorityQueue []*waitFor

func (pq waitForPriorityQueue) Len() int {

return len(pq)

}

func (pq waitForPriorityQueue) Less(i, j int) bool {

return pq[i].readyAt.Before(pq[j].readyAt)

}

func (pq waitForPriorityQueue) Swap(i, j int) {

pq[i], pq[j] = pq[j], pq[i]

pq[i].index = i

pq[j].index = j

}

// Push adds an item to the queue. Push should not be called directly; instead,

// use `heap.Push`.

func (pq *waitForPriorityQueue) Push(x interface{}) {

n := len(*pq)

item := x.(*waitFor)

item.index = n

*pq = append(*pq, item)

}

// Pop removes an item from the queue. Pop should not be called directly;

// instead, use `heap.Pop`.

func (pq *waitForPriorityQueue) Pop() interface{} {

n := len(*pq)

item := (*pq)[n-1]

item.index = -1

*pq = (*pq)[0:(n - 1)]

return item

}

// Peek returns the item at the beginning of the queue, without removing the

// item or otherwise mutating the queue. It is safe to call directly.

func (pq waitForPriorityQueue) Peek() interface{} {

return pq[0]

}

// ShutDown stops the queue. After the queue drains, the returned shutdown bool

// on Get() will be true. This method may be invoked more than once.

func (q *delayingType) ShutDown() {

q.stopOnce.Do(func() {

q.Interface.ShutDown()

close(q.stopCh)

q.heartbeat.Stop()

})

}

// AddAfter adds the given item to the work queue after the given delay

func (q *delayingType) AddAfter(item interface{}, duration time.Duration) {

// don't add if we're already shutting down

if q.ShuttingDown() {

return

}

q.metrics.retry()

// 延时时间小于0直接放到通用队列中

if duration <= 0 {

q.Add(item)

return

}

select {

case <-q.stopCh:

// unblock if ShutDown() is called

// 把元素封装成 waitFor 传给 waitingForAddCh

case q.waitingForAddCh <- &waitFor{data: item, readyAt: q.clock.Now().Add(duration)}:

}

}

// maxWait keeps a max bound on the wait time. It's just insurance against weird things happening.

// Checking the queue every 10 seconds isn't expensive and we know that we'll never end up with an

// expired item sitting for more than 10 seconds.

const maxWait = 10 * time.Second

// waitingLoop runs until the workqueue is shutdown and keeps a check on the list of items to be added.

func (q *delayingType) waitingLoop() {

defer utilruntime.HandleCrash()

// Make a placeholder channel to use when there are no items in our list

never := make(<-chan time.Time)

// Make a timer that expires when the item at the head of the waiting queue is ready

var nextReadyAtTimer clock.Timer

// 构造一个优先级队列

waitingForQueue := &waitForPriorityQueue{}

// container/heap堆实现优先级队列

heap.Init(waitingForQueue)

// 用来避免元素重复添加,如果重复添加了就只更新时间

waitingEntryByData := map[t]*waitFor{}

// 死循环

for {

if q.Interface.ShuttingDown() {

return

}

now := q.clock.Now()

// 如果优先队列中有元素的话

for waitingForQueue.Len() > 0 {

// 获取第一个元素

entry := waitingForQueue.Peek().(*waitFor)

// 如果第一个元素指定的时间还没到时间,则跳出当前循环 因为第一个元素是时间最小的。直到优先队列中元素都需要等待

if entry.readyAt.After(now) {

break

}

// 时间已经过了,那就把它从优先队列中拿出来放入通用队列中

// 同时要把元素从上面提到的 map 中删除,因为不用再判断重复添加了

entry = heap.Pop(waitingForQueue).(*waitFor)

q.Add(entry.data)

delete(waitingEntryByData, entry.data)

}

// 如果优先队列中还有元素,那就用第一个元素指定的时间减去当前时间作为等待时间

// 因为优先队列是用时间排序的,后面的元素需要等待的时间更长,所以先处理排序靠前面的元素

nextReadyAt := never

if waitingForQueue.Len() > 0 {

if nextReadyAtTimer != nil {

nextReadyAtTimer.Stop()

}

entry := waitingForQueue.Peek().(*waitFor)

// 第一个元素的时间减去当前时间作为等待时间

nextReadyAtTimer = q.clock.NewTimer(entry.readyAt.Sub(now))

nextReadyAt = nextReadyAtTimer.C()

}

select {

case <-q.stopCh:

return

// 定时器,每过一段时间没有任何数据,那就再执行一次大循环

case <-q.heartbeat.C():

// continue the loop, which will add ready items

// 上面的等待时间信号,时间到了就有信号 激活这个case,然后继续循环

case <-nextReadyAt:

// continue the loop, which will add ready items

// AddAfter 函数中放入到通道中的元素,这里从通道中获取数据

case waitEntry := <-q.waitingForAddCh:

// 如果时间已经过了就直接放入通用队列,没过就插入到有序队列

if waitEntry.readyAt.After(q.clock.Now()) {

insert(waitingForQueue, waitingEntryByData, waitEntry)

} else {

q.Add(waitEntry.data)

}

// 下面就是把channel里面的元素全部取出来 如果没有数据了就直接退出

drained := false

for !drained {

select {

case waitEntry := <-q.waitingForAddCh:

if waitEntry.readyAt.After(q.clock.Now()) {

insert(waitingForQueue, waitingEntryByData, waitEntry)

} else {

q.Add(waitEntry.data)

}

default:

drained = true

}

}

}

}

}

// 插入元素到有序队列,如果已经存在了则更新时间

func insert(q *waitForPriorityQueue, knownEntries map[t]*waitFor, entry *waitFor) {

// if the entry already exists, update the time only if it would cause the item to be queued sooner

existing, exists := knownEntries[entry.data]

if exists {

if existing.readyAt.After(entry.readyAt) {

existing.readyAt = entry.readyAt

heap.Fix(q, existing.index)

}

return

}

heap.Push(q, entry)

knownEntries[entry.data] = entry

}

延时队列原理:

优先级有序队列+通用队列。按照时间的先后顺序来构造一个优先级队列,优先级队列中的元素时间到了的话就把这个元素放到通用队列中去进行正常的处理就行,没到则继续等待。

限速队列

引用:https://cloud.tencent.com/developer/article/1709073

限速队列实现

// RateLimitingInterface is an interface that rate limits items being added to the queue.

type RateLimitingInterface interface {

DelayingInterface

// 在限速器说ok后,将元素item添加到工作队列中

AddRateLimited(item interface{})

// Forget indicates that an item is finished being retried. Doesn't matter whether it's for perm failing

// or for success, we'll stop the rate limiter from tracking it. This only clears the `rateLimiter`, you

// still have to call `Done` on the queue.

// 丢弃指定的元素

Forget(item interface{})

// NumRequeues returns back how many times the item was requeued

// 查询元素放入队列的次数

NumRequeues(item interface{}) int

}

// NewRateLimitingQueue constructs a new workqueue with rateLimited queuing ability

// Remember to call Forget! If you don't, you may end up tracking failures forever.

// 限速器 + 延时队列

func NewRateLimitingQueue(rateLimiter RateLimiter) RateLimitingInterface {

return &rateLimitingType{

DelayingInterface: NewDelayingQueue(),

rateLimiter: rateLimiter,

}

}

func NewNamedRateLimitingQueue(rateLimiter RateLimiter, name string) RateLimitingInterface {

return &rateLimitingType{

DelayingInterface: NewNamedDelayingQueue(name),

rateLimiter: rateLimiter,

}

}

// rateLimitingType wraps an Interface and provides rateLimited re-enquing

type rateLimitingType struct {

DelayingInterface

rateLimiter RateLimiter

}

// 通过限速器获取延迟时间,然后加入到延时队列

func (q *rateLimitingType) AddRateLimited(item interface{}) {

q.DelayingInterface.AddAfter(item, q.rateLimiter.When(item))

}

// 直接通过限速器获取元素放入队列的次数

func (q *rateLimitingType) NumRequeues(item interface{}) int {

return q.rateLimiter.NumRequeues(item)

}

// 直接通过限速器丢弃指定的元素

func (q *rateLimitingType) Forget(item interface{}) {

q.rateLimiter.Forget(item)

}

不同限速器实现

限流器类型

- BucketRateLimiter

- ItemExponentialFailureRateLimiter

- ItemFastSlowRateLimiter

- MaxOfRateLimiter

1 BucketRateLimiter

BucketRateLimiter(令牌桶限速器),这是一个固定速率(qps)的限速器,该限速器是利用

golang.org/x/time/rate库来实现的,令牌桶算法内部实现了一个存放 token(令牌)的“桶”,初始时“桶”是空的,token 会以固定速率往“桶”里填充,直到将其填满为止,多余的 token 会被丢弃。每个元素都会从令牌桶得到一个 token,只有得到 token 的元素才允许通过,而没有得到 token 的元素处于等待状态。令牌桶算法通过控制发放 token 来达到限速目的。令牌桶是有一个固定大小的桶,系统会以恒定的速率向桶中放 Token,桶满了就暂时不放了,而用户则从桶中取 Token,如果有剩余的 Token 就可以一直取,如果没有剩余的 Token,则需要等到系统中放置了 Token 才行。比如抽奖、抢优惠、投票、报名……等场景,在面对突然到来的上百倍流量峰值,除了消息队列预留容量以外,可以考虑做峰值限流。因为对于大部分营销类活动,消息限流(对被限流的消息直接丢弃并直接回复:“系统繁忙,请稍后再试。”)并不会对营销的结果有太大影响。

2 ItemExponentialFailureRateLimiter

ItemExponentialFailureRateLimiter(指数增长限速器) 是比较常用的限速器,从字面意思解释是元素错误次数指数递增限速器,他会根据元素错误次数逐渐累加等待时间。

3 ItemFastSlowRateLimiter

ItemFastSlowRateLimiter (快慢限速器)和 ItemExponentialFailureRateLimiter 很像,都是用于错误尝试的,但是 ItemFastSlowRateLimiter 的限速策略是尝试次数超过阈值用长延迟,否则用短延迟,不过该限速器很少使用。

4 MaxOfRateLimiter

MaxOfRateLimiter 也可以叫混合限速器,他内部有多个限速器,选择所有限速器中速度最慢(延迟最大)的一种方案。比如内部有三个限速器,When() 接口返回的就是三个限速器里面延迟最大的。在 Kubernetes 中默认的控制器限速器初始化就是使用的混合限速器:

type RateLimiter interface {

// When gets an item and gets to decide how long that item should wait

When(item interface{}) time.Duration

// Forget indicates that an item is finished being retried. Doesn't matter whether its for perm failing

// or for success, we'll stop tracking it

Forget(item interface{})

// NumRequeues returns back how many failures the item has had

NumRequeues(item interface{}) int

}

// DefaultControllerRateLimiter is a no-arg constructor for a default rate limiter for a workqueue. It has

// both overall and per-item rate limiting. The overall is a token bucket and the per-item is exponential

func DefaultControllerRateLimiter() RateLimiter {

return NewMaxOfRateLimiter(

NewItemExponentialFailureRateLimiter(5*time.Millisecond, 1000*time.Second),

// 10 qps, 100 bucket size. This is only for retry speed and its only the overall factor (not per item)

&BucketRateLimiter{Limiter: rate.NewLimiter(rate.Limit(10), 100)},

)

}

// BucketRateLimiter adapts a standard bucket to the workqueue ratelimiter API

type BucketRateLimiter struct {

*rate.Limiter

}

var _ RateLimiter = &BucketRateLimiter{}

func (r *BucketRateLimiter) When(item interface{}) time.Duration {

// 获取需要等待的时间(延迟),而且这个延迟是一个相对固定的周期

return r.Limiter.Reserve().Delay()

}

func (r *BucketRateLimiter) NumRequeues(item interface{}) int {

return 0

}

func (r *BucketRateLimiter) Forget(item interface{}) {

}

// ItemExponentialFailureRateLimiter does a simple baseDelay*2^ limit

// dealing with max failures and expiration are up to the caller

type ItemExponentialFailureRateLimiter struct {

failuresLock sync.Mutex

failures map[interface{}]int

baseDelay time.Duration

maxDelay time.Duration

}

var _ RateLimiter = &ItemExponentialFailureRateLimiter{}

func NewItemExponentialFailureRateLimiter(baseDelay time.Duration, maxDelay time.Duration) RateLimiter {

return &ItemExponentialFailureRateLimiter{

failures: map[interface{}]int{},

baseDelay: baseDelay,

maxDelay: maxDelay,

}

}

func DefaultItemBasedRateLimiter() RateLimiter {

return NewItemExponentialFailureRateLimiter(time.Millisecond, 1000*time.Second)

}

func (r *ItemExponentialFailureRateLimiter) When(item interface{}) time.Duration {

r.failuresLock.Lock()

defer r.failuresLock.Unlock()

exp := r.failures[item]

r.failures[item] = r.failures[item] + 1

// The backoff is capped such that 'calculated' value never overflows.

backoff := float64(r.baseDelay.Nanoseconds()) * math.Pow(2, float64(exp))

if backoff > math.MaxInt64 {

return r.maxDelay

}

calculated := time.Duration(backoff)

if calculated > r.maxDelay {

return r.maxDelay

}

return calculated

}

func (r *ItemExponentialFailureRateLimiter) NumRequeues(item interface{}) int {

r.failuresLock.Lock()

defer r.failuresLock.Unlock()

return r.failures[item]

}

func (r *ItemExponentialFailureRateLimiter) Forget(item interface{}) {

r.failuresLock.Lock()

defer r.failuresLock.Unlock()

delete(r.failures, item)

}

// ItemFastSlowRateLimiter does a quick retry for a certain number of attempts, then a slow retry after that

type ItemFastSlowRateLimiter struct {

failuresLock sync.Mutex

failures map[interface{}]int

maxFastAttempts int

fastDelay time.Duration

slowDelay time.Duration

}

var _ RateLimiter = &ItemFastSlowRateLimiter{}

func NewItemFastSlowRateLimiter(fastDelay, slowDelay time.Duration, maxFastAttempts int) RateLimiter {

return &ItemFastSlowRateLimiter{

failures: map[interface{}]int{},

fastDelay: fastDelay,

slowDelay: slowDelay,

maxFastAttempts: maxFastAttempts,

}

}

func (r *ItemFastSlowRateLimiter) When(item interface{}) time.Duration {

r.failuresLock.Lock()

defer r.failuresLock.Unlock()

r.failures[item] = r.failures[item] + 1

if r.failures[item] <= r.maxFastAttempts {

return r.fastDelay

}

return r.slowDelay

}

func (r *ItemFastSlowRateLimiter) NumRequeues(item interface{}) int {

r.failuresLock.Lock()

defer r.failuresLock.Unlock()

return r.failures[item]

}

func (r *ItemFastSlowRateLimiter) Forget(item interface{}) {

r.failuresLock.Lock()

defer r.failuresLock.Unlock()

delete(r.failures, item)

}

// MaxOfRateLimiter calls every RateLimiter and returns the worst case response

// When used with a token bucket limiter, the burst could be apparently exceeded in cases where particular items

// were separately delayed a longer time.

type MaxOfRateLimiter struct {

limiters []RateLimiter

}

func (r *MaxOfRateLimiter) When(item interface{}) time.Duration {

ret := time.Duration(0)

for _, limiter := range r.limiters {

curr := limiter.When(item)

if curr > ret {

ret = curr

}

}

return ret

}

func NewMaxOfRateLimiter(limiters ...RateLimiter) RateLimiter {

return &MaxOfRateLimiter{limiters: limiters}

}

func (r *MaxOfRateLimiter) NumRequeues(item interface{}) int {

ret := 0

for _, limiter := range r.limiters {

curr := limiter.NumRequeues(item)

if curr > ret {

ret = curr

}

}

return ret

}

func (r *MaxOfRateLimiter) Forget(item interface{}) {

for _, limiter := range r.limiters {

limiter.Forget(item)

}

}

应用

informer 自定义控制器 workqueue应用

func NewController(queue workqueue.RateLimitingInterface, indexer cache.Indexer, informer cache.Controller) *Controller {

return &Controller{

informer: informer,

indexer: indexer,

queue: queue,

}

}

func (c *Controller) processNextItem() bool {

// Wait until there is a new item in the working queue

key, quit := c.queue.Get()

if quit {

return false

}

// Tell the queue that we are done with processing this key. This unblocks the key for other workers

// This allows safe parallel processing because two pods with the same key are never processed in

// parallel.

defer c.queue.Done(key)

// Invoke the method containing the business logic

err := c.syncToStdout(key.(string))

// Handle the error if something went wrong during the execution of the business logic

c.handleErr(err, key)

return true

}

// syncToStdout is the business logic of the controller. In this controller it simply prints

// information about the pod to stdout. In case an error happened, it has to simply return the error.

// The retry logic should not be part of the business logic.

func (c *Controller) syncToStdout(key string) error {

obj, exists, err := c.indexer.GetByKey(key)

if err != nil {

klog.Errorf("Fetching object with key %s from store failed with %v", key, err)

return err

}

if !exists {

// Below we will warm up our cache with a Pod, so that we will see a delete for one pod

fmt.Printf("Pod %s does not exist anymore\n", key)

} else {

// Note that you also have to check the uid if you have a local controlled resource, which

// is dependent on the actual instance, to detect that a Pod was recreated with the same name

fmt.Printf("Sync/Add/Update for Pod %s\n", obj.(*v1.Pod).GetName())

}

return nil

}

// handleErr checks if an error happened and makes sure we will retry later.

func (c *Controller) handleErr(err error, key interface{}) {

if err == nil {

// Forget about the #AddRateLimited history of the key on every successful synchronization.

// This ensures that future processing of updates for this key is not delayed because of

// an outdated error history.

c.queue.Forget(key)

return

}

// This controller retries 5 times if something goes wrong. After that, it stops trying.

if c.queue.NumRequeues(key) < 5 {

klog.Infof("Error syncing pod %v: %v", key, err)

// Re-enqueue the key rate limited. Based on the rate limiter on the

// queue and the re-enqueue history, the key will be processed later again.

c.queue.AddRateLimited(key)

return

}

c.queue.Forget(key)

// Report to an external entity that, even after several retries, we could not successfully process this key

runtime.HandleError(err)

klog.Infof("Dropping pod %q out of the queue: %v", key, err)

}

func (c *Controller) Run(threadiness int, stopCh chan struct{}) {

defer runtime.HandleCrash()

// Let the workers stop when we are done

defer c.queue.ShutDown()

klog.Info("Starting Pod controller")

go c.informer.Run(stopCh)

// Wait for all involved caches to be synced, before processing items from the queue is started

if !cache.WaitForCacheSync(stopCh, c.informer.HasSynced) {

runtime.HandleError(fmt.Errorf("Timed out waiting for caches to sync"))

return

}

for i := 0; i < threadiness; i++ {

go wait.Until(c.runWorker, time.Second, stopCh)

}

<-stopCh

klog.Info("Stopping Pod controller")

}

func (c *Controller) runWorker() {

for c.processNextItem() {

}

}

func main() {

var kubeconfig string

var master string

flag.StringVar(&kubeconfig, "kubeconfig", "", "absolute path to the kubeconfig file")

flag.StringVar(&master, "master", "", "master url")

flag.Parse()

// creates the connection

config, err := clientcmd.BuildConfigFromFlags(master, kubeconfig)

if err != nil {

klog.Fatal(err)

}

// creates the clientset

clientset, err := kubernetes.NewForConfig(config)

if err != nil {

klog.Fatal(err)

}

// create the pod watcher

podListWatcher := cache.NewListWatchFromClient(clientset.CoreV1().RESTClient(), "pods", v1.NamespaceDefault, fields.Everything())

// create the workqueue

// 默认的限速队列

queue := workqueue.NewRateLimitingQueue(workqueue.DefaultControllerRateLimiter())

// Bind the workqueue to a cache with the help of an informer. This way we make sure that

// whenever the cache is updated, the pod key is added to the workqueue.

// Note that when we finally process the item from the workqueue, we might see a newer version

// of the Pod than the version which was responsible for triggering the update.

indexer, informer := cache.NewIndexerInformer(podListWatcher, &v1.Pod{}, 0, cache.ResourceEventHandlerFuncs{

AddFunc: func(obj interface{}) {

key, err := cache.MetaNamespaceKeyFunc(obj)

if err == nil {

queue.Add(key)

}

},

UpdateFunc: func(old interface{}, new interface{}) {

key, err := cache.MetaNamespaceKeyFunc(new)

if err == nil {

queue.Add(key)

}

},

DeleteFunc: func(obj interface{}) {

// IndexerInformer uses a delta queue, therefore for deletes we have to use this

// key function.

key, err := cache.DeletionHandlingMetaNamespaceKeyFunc(obj)

if err == nil {

queue.Add(key)

}

},

}, cache.Indexers{})

controller := NewController(queue, indexer, informer)

// We can now warm up the cache for initial synchronization.

// Let's suppose that we knew about a pod "mypod" on our last run, therefore add it to the cache.

// If this pod is not there anymore, the controller will be notified about the removal after the

// cache has synchronized.

indexer.Add(&v1.Pod{

ObjectMeta: meta_v1.ObjectMeta{

Name: "mypod",

Namespace: v1.NamespaceDefault,

},

})

// Now let's start the controller

stop := make(chan struct{})

defer close(stop)

go controller.Run(1, stop)

// Wait forever

select {}

}

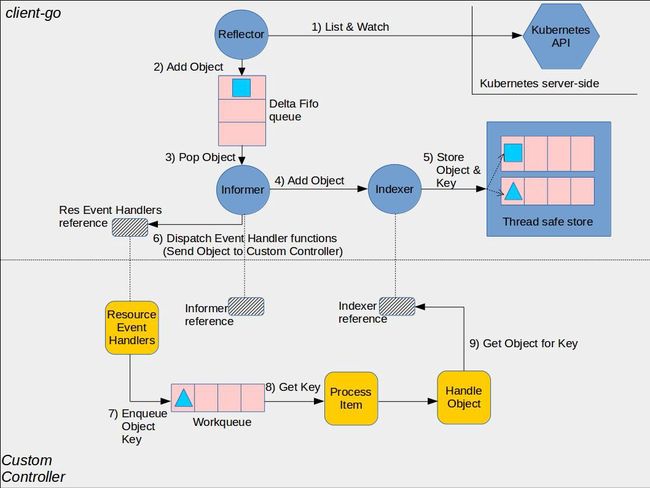

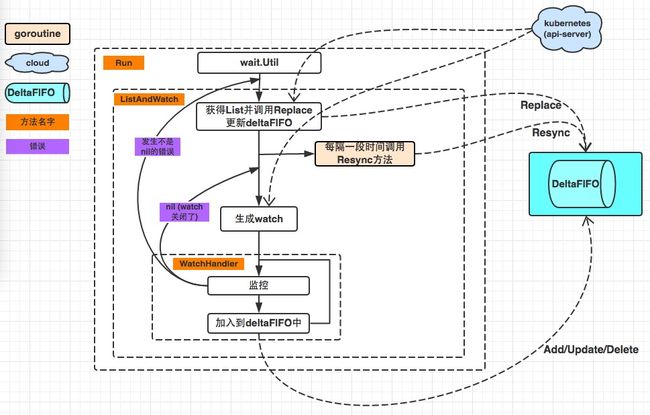

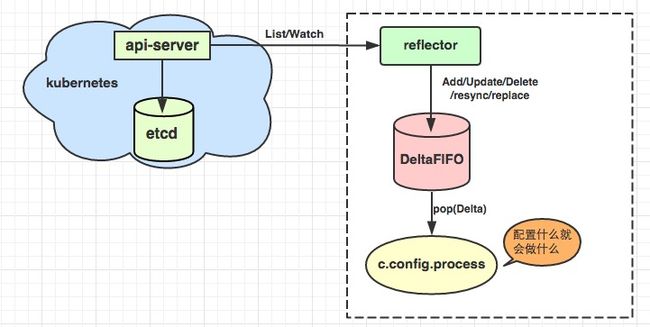

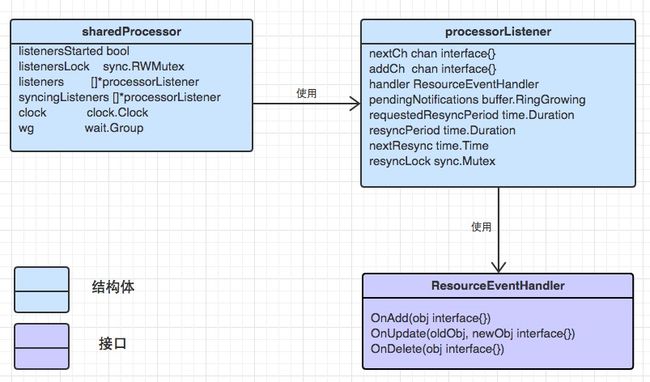

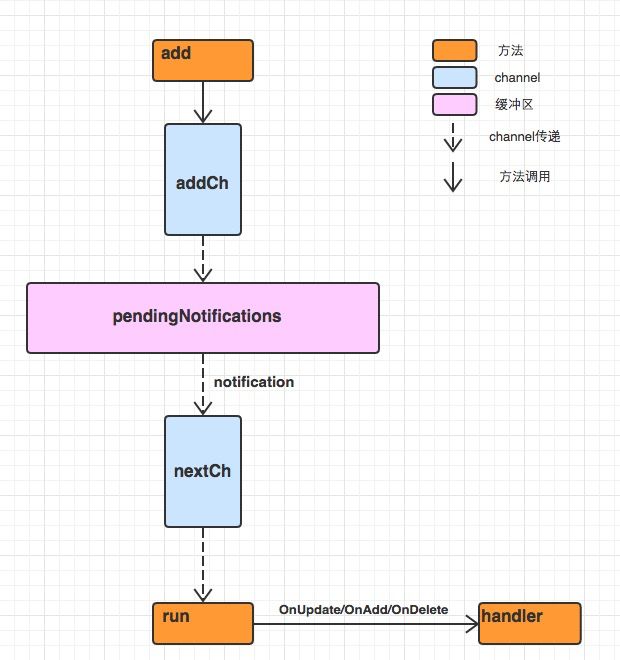

informer

Informer模块是Kubernetes中的基础组件,负责各组件与Apiserver的资源与事件同步。List/Watch机制是Kubernetes中实现集群控制模块最核心的设计之一,它采用统一的异步消息处理机制,保证了消息的实时性、可靠性、顺序性和性能等,为声明式风格的API奠定了良好的基础。

应用

引用:https://www.jianshu.com/p/61f2d1d884a9

pod的informer

package main

import (

"fmt"

clientset "k8s.io/client-go/kubernetes"

"k8s.io/client-go/rest"

"k8s.io/client-go/informers"

"k8s.io/client-go/tools/cache"

"k8s.io/api/core/v1"

"k8s.io/apimachinery/pkg/labels"

"time"

)

func main() {

config := &rest.Config{

Host: "http://172.21.0.16:8080",

}

client := clientset.NewForConfigOrDie(config)

// 生成一个SharedInformerFactory

// 工厂方法

factory := informers.NewSharedInformerFactory(client, 5 * time.Second)

// 生成一个PodInformer

// staging/src/k8s.io/client-go/informers

// staging/src/k8s.io/client-go/listers

podInformer := factory.Core().V1().Pods()

// 获得一个cache.SharedIndexInformer 单例模式

sharedInformer := podInformer.Informer()

// 用户自定义控制器逻辑

sharedInformer.AddEventHandler(cache.ResourceEventHandlerFuncs{

AddFunc: func(obj interface{}) {fmt.Printf("add: %v\n", obj.(*v1.Pod).Name)},

UpdateFunc: func(oldObj, newObj interface{}) {fmt.Printf("update: %v\n", newObj.(*v1.Pod).Name)},

DeleteFunc: func(obj interface{}){fmt.Printf("delete: %v\n", obj.(*v1.Pod).Name)},

})

stopCh := make(chan struct{})

// 第一种方式

// 可以这样启动 也可以按照下面的方式启动

// go sharedInformer.Run(stopCh)

// 需要等待2秒钟才调用list方法, 因为如果不sleep, 有可能获得的是空的.

// time.Sleep(2 * time.Second)

// 第二种方式

factory.Start(stopCh)

// 该方法是等待所有已经启动的informers完成同步. 因为不等到同步完成的时候, 本地缓存中是没有数据的, 如果直接就运行逻辑代码, 有些调用list方法就会获取不到, 因为服务器端是有数据的, 所以就会产生一定的偏差, 因此一般都是等到服务器端数据同步到本地缓存完了才开始运行用户自己的逻辑.

factory.WaitForCacheSync(stopCh)

// Lister()就是从本地缓存中取数据, 而不是直接去服务器端(k8s)上获得数据.

pods, _ := podInformer.Lister().Pods("default").List(labels.Everything())

for _, p := range pods {

fmt.Printf("list pods: %v\n", p.Name)

}

<- stopCh

}

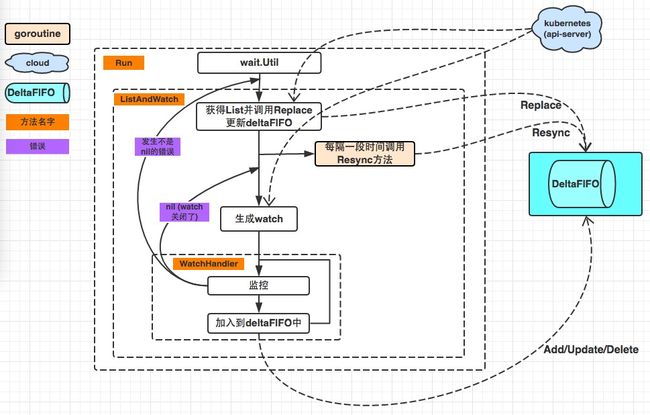

原理图

尽在图中

目录

-

controller.go

-

expiration_cache.go

-

heap.go

-

index.go

-

listers.go:从本地缓存中结合index读取资源的列表数据

-

listwatch.go:listwatch接口的定义,底层对应不同资源的restclient实现list watch

-

mutation_cache.go

-

mutation_detector.go

-

reflector.go list watch apiserver将事件放到delta queue中

-

reflector_metrics.go

-

shared_informer.go

-

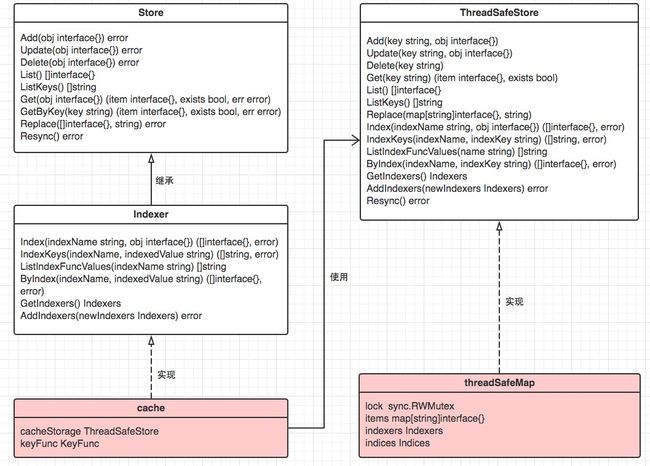

store.go:fifo.go中的Queue接口继承store.go中的Store接口,deltafifo、fifo实现了接口queue;store.go中的cache结构体,装配ThreadSafeStore本地缓存实现Store接口。其中的cache是本地缓存的实现结构,cache中通过ThreadSafeStore实现具体的逻辑。

-

fifo.go

-

delta_fifo.go 一级队列缓存

-

thread_safe_store.go 二级本地缓存

-

undelta_store.go

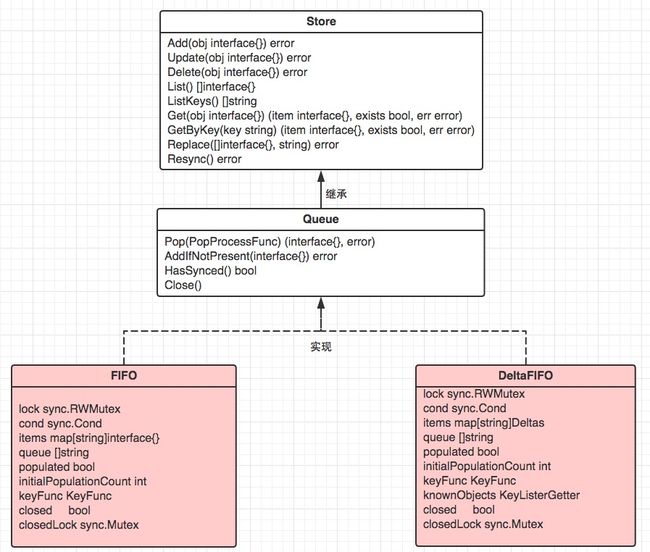

queue

作为一级缓存,reflector listwatch的缓存后端。

- fifo队列

- deltafifo:有具体增删改查事件动作的队列

引用:https://www.jianshu.com/p/095de3ee5f7b

实现接口与实现关系:

fifo

引用 https://www.jianshu.com/p/9e75976a9f3c

源码

// PopProcessFunc is passed to Pop() method of Queue interface.

// It is supposed to process the accumulator popped from the queue.

type PopProcessFunc func(interface{}) error

// ErrRequeue may be returned by a PopProcessFunc to safely requeue

// the current item. The value of Err will be returned from Pop.

type ErrRequeue struct {

// Err is returned by the Pop function

Err error

}

// ErrFIFOClosed used when FIFO is closed

var ErrFIFOClosed = errors.New("DeltaFIFO: manipulating with closed queue")

func (e ErrRequeue) Error() string {

if e.Err == nil {

return "the popped item should be requeued without returning an error"

}

return e.Err.Error()

}

// Queue extends Store with a collection of Store keys to "process".

// Every Add, Update, or Delete may put the object's key in that collection.

// A Queue has a way to derive the corresponding key given an accumulator.

// A Queue can be accessed concurrently from multiple goroutines.

// A Queue can be "closed", after which Pop operations return an error.

type Queue interface {

// 继承

Store

// Pop blocks until there is at least one key to process or the

// Queue is closed. In the latter case Pop returns with an error.

// In the former case Pop atomically picks one key to process,

// removes that (key, accumulator) association from the Store, and

// processes the accumulator. Pop returns the accumulator that

// was processed and the result of processing. The PopProcessFunc

// may return an ErrRequeue{inner} and in this case Pop will (a)

// return that (key, accumulator) association to the Queue as part

// of the atomic processing and (b) return the inner error from

// Pop. 从队列弹出元素

Pop(PopProcessFunc) (interface{}, error)

// AddIfNotPresent puts the given accumulator into the Queue (in

// association with the accumulator's key) if and only if that key

// is not already associated with a non-empty accumulator.

//不存在添加

AddIfNotPresent(interface{}) error

// HasSynced returns true if the first batch of keys have all been

// popped. The first batch of keys are those of the first Replace

// operation if that happened before any Add, AddIfNotPresent,

// Update, or Delete; otherwise the first batch is empty.

HasSynced() bool

// Close the queue

Close()

}

// Pop is helper function for popping from Queue.

// WARNING: Do NOT use this function in non-test code to avoid races

// unless you really really really really know what you are doing.

func Pop(queue Queue) interface{} {

var result interface{}

queue.Pop(func(obj interface{}) error {

result = obj

return nil

})

return result

}

type FIFO struct {

//队列的增删改锁

lock sync.RWMutex

//队列pop时没有元素,等待增改改的广播消息

cond sync.Cond

// We depend on the property that every key in `items` is also in `queue`

// 1个map存id元素 一个切片存id

items map[string]interface{}

queue []string

// populated is true if the first batch of items inserted by Replace() has been populated

// or Delete/Add/Update was called first.

populated bool

// initialPopulationCount is the number of items inserted by the first call of Replace()

// 最初replace时 队列中元素个数

initialPopulationCount int

// keyFunc is used to make the key used for queued item insertion and retrieval, and

// should be deterministic.

// 对obj哈希函数取的id

keyFunc KeyFunc

// Indication the queue is closed.

// Used to indicate a queue is closed so a control loop can exit when a queue is empty.

// Currently, not used to gate any of CRED operations.

closed bool

}

var (

// if impl interface Queue

_ = Queue(&FIFO{}) // FIFO is a Queue

)

// Close the queue.

func (f *FIFO) Close() {

f.lock.Lock()

defer f.lock.Unlock()

f.closed = true

f.cond.Broadcast()

}

// HasSynced returns true if an Add/Update/Delete/AddIfNotPresent are called first,

// or the first batch of items inserted by Replace() has been popped.

// !!!!!!! 队列中的元素个数为0+已经调用了Replace;场景:队列中的元素已经被消息完了,即已经同步完成

// 假设此时FIFQ刚刚初始化.

//1. 如果啥方法都没有调用, 那么HasSynced返回false, 因为populated=false.

//2. 如果先调用Add/Update/AddIfNotPresent/Delete后(后面调用什么函数都不用管了), 那么HasSynced返回true, 因为populated=true并且initialPopulationCount == 0.

//3. 如果先调用Replace(后面调用什么函数都不用管了), 那么必须要等待该replace方法加入元素的个数全部pop之后, HasSynced才会返回true, 因为只有全部pop完了之后initialPopulationCount才减为0

func (f *FIFO) HasSynced() bool {

f.lock.Lock()

defer f.lock.Unlock()

return f.populated && f.initialPopulationCount == 0

}

// Add inserts an item, and puts it in the queue. The item is only enqueued

// if it doesn't already exist in the set.

func (f *FIFO) Add(obj interface{}) error {

id, err := f.keyFunc(obj)

if err != nil {

return KeyError{obj, err}

}

f.lock.Lock()

defer f.lock.Unlock()

f.populated = true

if _, exists := f.items[id]; !exists {

f.queue = append(f.queue, id)

}

f.items[id] = obj

f.cond.Broadcast()

return nil

}

// AddIfNotPresent inserts an item, and puts it in the queue. If the item is already

// present in the set, it is neither enqueued nor added to the set.

//

// This is useful in a single producer/consumer scenario so that the consumer can

// safely retry items without contending with the producer and potentially enqueueing

// stale items.

func (f *FIFO) AddIfNotPresent(obj interface{}) error {

id, err := f.keyFunc(obj)

if err != nil {

return KeyError{obj, err}

}

f.lock.Lock()

defer f.lock.Unlock()

f.addIfNotPresent(id, obj)

return nil

}

// addIfNotPresent assumes the fifo lock is already held and adds the provided

// item to the queue under id if it does not already exist.

func (f *FIFO) addIfNotPresent(id string, obj interface{}) {

f.populated = true

if _, exists := f.items[id]; exists {

return

}

f.queue = append(f.queue, id)

f.items[id] = obj

f.cond.Broadcast()

}

// Update is the same as Add in this implementation.

func (f *FIFO) Update(obj interface{}) error {

return f.Add(obj)

}

// Delete removes an item. It doesn't add it to the queue, because

// this implementation assumes the consumer only cares about the objects,

// not the order in which they were created/added.

//Delete方法可以看到只是从items中删除, 并没有从queue中删除该obj的key., 不过这不会有影响, 在pop方法的时候, 如果从queue里面出来的key在items中找不到, 就认为该obj已经删除了, 就不做处理了. 所以items里面的数据是安全的, queue里面的数据有可能是已经被删除了的.

func (f *FIFO) Delete(obj interface{}) error {

id, err := f.keyFunc(obj)

if err != nil {

return KeyError{obj, err}

}

f.lock.Lock()

defer f.lock.Unlock()

f.populated = true

delete(f.items, id)

return err

}

// List returns a list of all the items.

func (f *FIFO) List() []interface{} {

f.lock.RLock()

defer f.lock.RUnlock()

list := make([]interface{}, 0, len(f.items))

for _, item := range f.items {

list = append(list, item)

}

return list

}

// ListKeys returns a list of all the keys of the objects currently

// in the FIFO.

func (f *FIFO) ListKeys() []string {

f.lock.RLock()

defer f.lock.RUnlock()

list := make([]string, 0, len(f.items))

for key := range f.items {

list = append(list, key)

}

return list

}

// Get returns the requested item, or sets exists=false.

func (f *FIFO) Get(obj interface{}) (item interface{}, exists bool, err error) {

key, err := f.keyFunc(obj)

if err != nil {

return nil, false, KeyError{obj, err}

}

return f.GetByKey(key)

}

// GetByKey returns the requested item, or sets exists=false.

func (f *FIFO) GetByKey(key string) (item interface{}, exists bool, err error) {

f.lock.RLock()

defer f.lock.RUnlock()

item, exists = f.items[key]

return item, exists, nil

}

// IsClosed checks if the queue is closed

func (f *FIFO) IsClosed() bool {

f.lock.Lock()

defer f.lock.Unlock()

if f.closed {

return true

}

return false

}

// Pop waits until an item is ready and processes it. If multiple items are

// ready, they are returned in the order in which they were added/updated.

// The item is removed from the queue (and the store) before it is processed,

// so if you don't successfully process it, it should be added back with

// AddIfNotPresent(). process function is called under lock, so it is safe

// update data structures in it that need to be in sync with the queue.

func (f *FIFO) Pop(process PopProcessFunc) (interface{}, error) {

f.lock.Lock()

defer f.lock.Unlock()

for {

for len(f.queue) == 0 {

// When the queue is empty, invocation of Pop() is blocked until new item is enqueued.

// When Close() is called, the f.closed is set and the condition is broadcasted.

// Which causes this loop to continue and return from the Pop().

// 如果队列已经关闭 则直接返回错误

if f.IsClosed() {

return nil, ErrFIFOClosed

}

// 等待 有元素了之后会通知

f.cond.Wait()

}

id := f.queue[0]

f.queue = f.queue[1:]

// 如果initialPopulationCount > 0 表明Replace是比Add/Update/AddIfNotPresent/Delete先调用 然后设置了initialPopulationCount

if f.initialPopulationCount > 0 {

f.initialPopulationCount--

}

item, ok := f.items[id]

if !ok {

// 如果已经删除了 不做处理

// Item may have been deleted subsequently.

continue

}

// 从items中删除id

delete(f.items, id)

// 消息处理

err := process(item)

if e, ok := err.(ErrRequeue); ok {

// 如果用户处理逻辑返回错误是ErrRequeue

// 那么表明需要重新加回到queue里面去

f.addIfNotPresent(id, item)

err = e.Err

}

return item, err

}

}

// Replace will delete the contents of 'f', using instead the given map.

// 'f' takes ownership of the map, you should not reference the map again

// after calling this function. f's queue is reset, too; upon return, it

// will contain the items in the map, in no particular order.

//informer list的时候会调用这个接口,刷新队列中的所有元素和资源版本

func (f *FIFO) Replace(list []interface{}, resourceVersion string) error {

items := make(map[string]interface{}, len(list))

for _, item := range list {

key, err := f.keyFunc(item)

if err != nil {

return KeyError{item, err}

}

items[key] = item

}

f.lock.Lock()

defer f.lock.Unlock()

if !f.populated {

f.populated = true

// 最初的元素数量

f.initialPopulationCount = len(items)

}

f.items = items

f.queue = f.queue[:0]

for id := range items {

f.queue = append(f.queue, id)

}

if len(f.queue) > 0 {

f.cond.Broadcast()

}

return nil

}

// Resync will ensure that every object in the Store has its key in the queue.

// This should be a no-op, because that property is maintained by all operations.

// id切片列表去重

// id切片列表增加 元素map中没有的id

func (f *FIFO) Resync() error {

f.lock.Lock()

defer f.lock.Unlock()

inQueue := sets.NewString()

for _, id := range f.queue {

inQueue.Insert(id)

}

for id := range f.items {

if !inQueue.Has(id) {

f.queue = append(f.queue, id)

}

}

if len(f.queue) > 0 {

f.cond.Broadcast()

}

return nil

}

// NewFIFO returns a Store which can be used to queue up items to

// process.

func NewFIFO(keyFunc KeyFunc) *FIFO {

f := &FIFO{

items: map[string]interface{}{},

queue: []string{},

keyFunc: keyFunc,

}

f.cond.L = &f.lock

return f

}

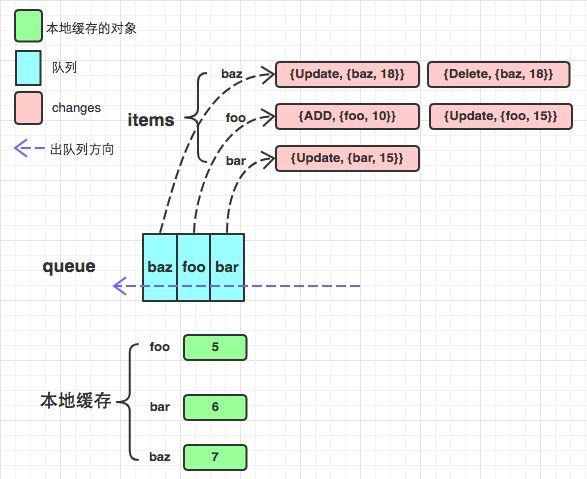

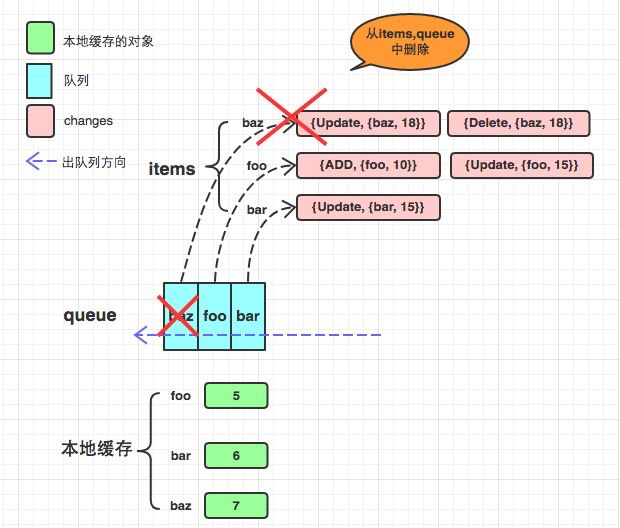

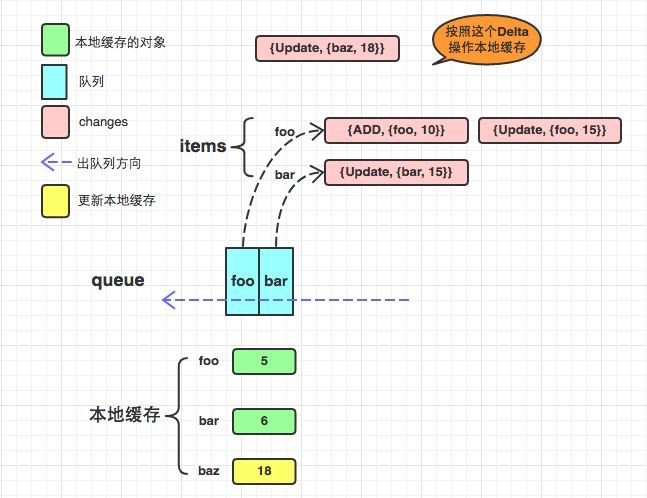

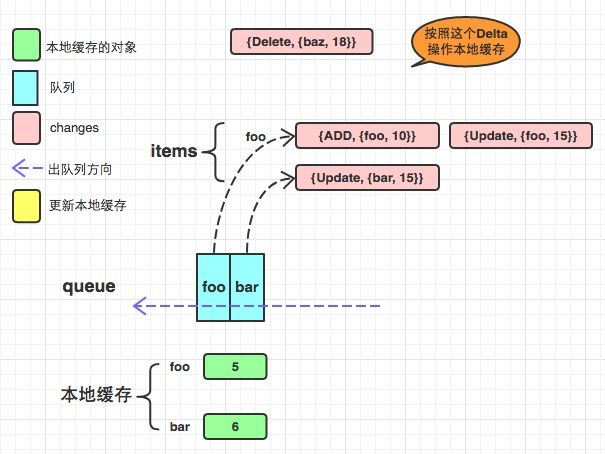

deltafifo

引用:https://www.jianshu.com/p/095de3ee5f7b

与FIFO相比, 主要有以下几点不同:

- items中的value不再只存着该key对应的obj, 而是obj的一系列变化, 用一个数组来表示. 包括添加/更新/删除等等. 因此衍生出来了很多结构体和方法, 包括Deltas, Delta等等.

- 增加了本地缓存knownObjects KeyListerGetter, KeyListerGetter提供了两个方法分别是从本地缓存中获得所有的key和根据key找到对应的obj. 当程序中错过了某些event, 比如deletion event, 会造成服务器数据库中没有该obj, 而本地缓存中有该obj, 从而造成数据不一致, 那么在同步的过程中会有所操作. (其实KeyListerGetter在informers体系中是一个Indexer. )或许有人会疑惑会为什么需要用另外一个属性来缓存呢? items属性不就可以当做缓存了吗? 理由是: items只是暂时性存储, 当调用pop的时候对应的数据就会从items中删除了, 而knownObjects会维护本地缓存.

- DeletedFinalStateUnknown: 当一个obj被删除了, 但是这个程序这边由于某种原因miss了这次deletion event, 那么假如在做同步操作时, 从服务器获取的列表中已经没有了这个obj, 因为该程序没有接收到deletion event, 所以该obj在本地缓存中依然存在, 所以此时会给这个obj构造成这个DeletedFinalStateUnknown类型.

源码

// 对象的hash key函数 如获取对象的id

// 对象的二级本地缓存

// replace的是sync还是replace事件?

func NewDeltaFIFO(keyFunc KeyFunc, knownObjects KeyListerGetter) *DeltaFIFO {

return NewDeltaFIFOWithOptions(DeltaFIFOOptions{

KeyFunction: keyFunc,

KnownObjects: knownObjects,

})

}

// DeltaFIFOOptions is the configuration parameters for DeltaFIFO. All are

// optional.

type DeltaFIFOOptions struct {

// KeyFunction is used to figure out what key an object should have. (It's

// exposed in the returned DeltaFIFO's KeyOf() method, with additional

// handling around deleted objects and queue state).

// Optional, the default is MetaNamespaceKeyFunc.

KeyFunction KeyFunc

// KnownObjects is expected to return a list of keys that the consumer of

// this queue "knows about". It is used to decide which items are missing

// when Replace() is called; 'Deleted' deltas are produced for the missing items.

// KnownObjects may be nil if you can tolerate missing deletions on Replace().

KnownObjects KeyListerGetter

// EmitDeltaTypeReplaced indicates that the queue consumer

// understands the Replaced DeltaType. Before the `Replaced` event type was

// added, calls to Replace() were handled the same as Sync(). For

// backwards-compatibility purposes, this is false by default.

// When true, `Replaced` events will be sent for items passed to a Replace() call.

// When false, `Sync` events will be sent instead.

EmitDeltaTypeReplaced bool

}

// NewDeltaFIFOWithOptions returns a Queue which can be used to process changes to

// items. See also the comment on DeltaFIFO.

func NewDeltaFIFOWithOptions(opts DeltaFIFOOptions) *DeltaFIFO {

if opts.KeyFunction == nil {

opts.KeyFunction = MetaNamespaceKeyFunc

}

f := &DeltaFIFO{

items: map[string]Deltas{},

queue: []string{},

keyFunc: opts.KeyFunction,

knownObjects: opts.KnownObjects,

emitDeltaTypeReplaced: opts.EmitDeltaTypeReplaced,

}

f.cond.L = &f.lock

return f

}

type DeltaFIFO struct {

// lock/cond protects access to 'items' and 'queue'.

lock sync.RWMutex

cond sync.Cond

// `items` maps keys to Deltas.

// `queue` maintains FIFO order of keys for consumption in Pop().

// We maintain the property that keys in the `items` and `queue` are

// strictly 1:1 mapping, and that all Deltas in `items` should have

// at least one Delta.

// items里面存的是key 以及该key对应的pod的变化

// queue中存的是key 即出队列的顺序。消息的顺序性保证

items map[string]Deltas

queue []string

// populated is true if the first batch of items inserted by Replace() has been populated

// or Delete/Add/Update/AddIfNotPresent was called first.

populated bool

// initialPopulationCount is the number of items inserted by the first call of Replace()

initialPopulationCount int

// keyFunc is used to make the key used for queued item

// insertion and retrieval, and should be deterministic.

keyFunc KeyFunc

// knownObjects list keys that are "known" --- affecting Delete(),

// Replace(), and Resync()

// 说白了就是本地缓存 是一个indexer

knownObjects KeyListerGetter

// Used to indicate a queue is closed so a control loop can exit when a queue is empty.

// Currently, not used to gate any of CRED operations.

closed bool

// emitDeltaTypeReplaced is whether to emit the Replaced or Sync

// DeltaType when Replace() is called (to preserve backwards compat).

emitDeltaTypeReplaced bool

}

// 用于判断DeltaFIFO是否实现了接口Queue,若没有实现则编译失败

var (

_ = Queue(&DeltaFIFO{}) // DeltaFIFO is a Queue

)

var (

// ErrZeroLengthDeltasObject is returned in a KeyError if a Deltas

// object with zero length is encountered (should be impossible,

// but included for completeness).

ErrZeroLengthDeltasObject = errors.New("0 length Deltas object; can't get key")

)

// Close the queue.

func (f *DeltaFIFO) Close() {

f.lock.Lock()

defer f.lock.Unlock()

f.closed = true

f.cond.Broadcast()

}

// KeyOf exposes f's keyFunc, but also detects the key of a Deltas object or

// DeletedFinalStateUnknown objects.

func (f *DeltaFIFO) KeyOf(obj interface{}) (string, error) {

if d, ok := obj.(Deltas); ok {

if len(d) == 0 {

return "", KeyError{obj, ErrZeroLengthDeltasObject}

}

obj = d.Newest().Object

}

if d, ok := obj.(DeletedFinalStateUnknown); ok {

return d.Key, nil

}

return f.keyFunc(obj)

}

// HasSynced returns true if an Add/Update/Delete/AddIfNotPresent are called first,

// or the first batch of items inserted by Replace() has been popped.

// 1. 如果啥方法都没有调用, 那么HasSynced返回false, 因为populated=false.

//2. 如果先调用Add/Update/AddIfNotPresent/Delete后(后面调用什么函数都不用管了), 那么HasSynced返回true, 因为populated=true并且initialPopulationCount == 0.

//3. 如果先调用Replace(后面调用什么函数都不用管了), 那么必须要等待该replace方法加入元素的个数和DeletedFinalStateUnknown(也就是那些本地缓存上有服务器上没有的元素)全部pop之后, HasSynced才会返回true, 因为只有全部pop完了之后initialPopulationCount才减为0.

func (f *DeltaFIFO) HasSynced() bool {

f.lock.Lock()

defer f.lock.Unlock()

return f.populated && f.initialPopulationCount == 0

}

// Add inserts an item, and puts it in the queue. The item is only enqueued

// if it doesn't already exist in the set.

func (f *DeltaFIFO) Add(obj interface{}) error {

f.lock.Lock()

defer f.lock.Unlock()

f.populated = true

return f.queueActionLocked(Added, obj)

}

// Update is just like Add, but makes an Updated Delta.

func (f *DeltaFIFO) Update(obj interface{}) error {

f.lock.Lock()

defer f.lock.Unlock()

f.populated = true

return f.queueActionLocked(Updated, obj)

}

// Delete is just like Add, but makes a Deleted Delta. If the given

// object does not already exist, it will be ignored. (It may have

// already been deleted by a Replace (re-list), for example.) In this

// method `f.knownObjects`, if not nil, provides (via GetByKey)

// _additional_ objects that are considered to already exist.

func (f *DeltaFIFO) Delete(obj interface{}) error {

id, err := f.KeyOf(obj)

if err != nil {

return KeyError{obj, err}

}

f.lock.Lock()

defer f.lock.Unlock()

f.populated = true

if f.knownObjects == nil {

if _, exists := f.items[id]; !exists {

// Presumably, this was deleted when a relist happened.

// Don't provide a second report of the same deletion.

return nil

}

} else {

// We only want to skip the "deletion" action if the object doesn't

// exist in knownObjects and it doesn't have corresponding item in items.

// Note that even if there is a "deletion" action in items, we can ignore it,

// because it will be deduped automatically in "queueActionLocked"

_, exists, err := f.knownObjects.GetByKey(id)

_, itemsExist := f.items[id]

if err == nil && !exists && !itemsExist {

// Presumably, this was deleted when a relist happened.

// Don't provide a second report of the same deletion.

return nil

}

}

// exist in items and/or KnownObjects

return f.queueActionLocked(Deleted, obj)

}

// AddIfNotPresent inserts an item, and puts it in the queue. If the item is already

// present in the set, it is neither enqueued nor added to the set.

//

// This is useful in a single producer/consumer scenario so that the consumer can

// safely retry items without contending with the producer and potentially enqueueing

// stale items.

//

// Important: obj must be a Deltas (the output of the Pop() function). Yes, this is

// different from the Add/Update/Delete functions.

func (f *DeltaFIFO) AddIfNotPresent(obj interface{}) error {

deltas, ok := obj.(Deltas)

if !ok {

return fmt.Errorf("object must be of type deltas, but got: %#v", obj)

}

id, err := f.KeyOf(deltas.Newest().Object)

if err != nil {

return KeyError{obj, err}

}

f.lock.Lock()

defer f.lock.Unlock()

f.addIfNotPresent(id, deltas)

return nil

}

// addIfNotPresent inserts deltas under id if it does not exist, and assumes the caller

// already holds the fifo lock.

func (f *DeltaFIFO) addIfNotPresent(id string, deltas Deltas) {

f.populated = true

if _, exists := f.items[id]; exists {

return

}

f.queue = append(f.queue, id)

f.items[id] = deltas

f.cond.Broadcast()

}

// re-listing and watching can deliver the same update multiple times in any

// order. This will combine the most recent two deltas if they are the same.

// 后两位去重?

func dedupDeltas(deltas Deltas) Deltas {

n := len(deltas)

if n < 2 {

return deltas

}

a := &deltas[n-1]

b := &deltas[n-2]

if out := isDup(a, b); out != nil {

// `a` and `b` are duplicates. Only keep the one returned from isDup().

// TODO: This extra array allocation and copy seems unnecessary if

// all we do to dedup is compare the new delta with the last element

// in `items`, which could be done by mutating `items` directly.

// Might be worth profiling and investigating if it is safe to optimize.

d := append(Deltas{}, deltas[:n-2]...)

return append(d, *out)

}

return deltas

}

// If a & b represent the same event, returns the delta that ought to be kept.

// Otherwise, returns nil.

// TODO: is there anything other than deletions that need deduping?

func isDup(a, b *Delta) *Delta {

if out := isDeletionDup(a, b); out != nil {

return out

}

// TODO: Detect other duplicate situations? Are there any?

return nil

}

// keep the one with the most information if both are deletions.

func isDeletionDup(a, b *Delta) *Delta {

if b.Type != Deleted || a.Type != Deleted {

return nil

}

// Do more sophisticated checks, or is this sufficient?

if _, ok := b.Object.(DeletedFinalStateUnknown); ok {

return a

}

return b

}

// queueActionLocked appends to the delta list for the object.

// Caller must lock first.

// 增删改 同步替换事件入队

func (f *DeltaFIFO) queueActionLocked(actionType DeltaType, obj interface{}) error {

id, err := f.KeyOf(obj)

if err != nil {

return KeyError{obj, err}

}

newDeltas := append(f.items[id], Delta{actionType, obj})

// 判断最后两个元素是不是都是delete, 如果是则将前面去掉只保留最后两个其一

newDeltas = dedupDeltas(newDeltas)

if len(newDeltas) > 0 {

if _, exists := f.items[id]; !exists {

f.queue = append(f.queue, id)

}

f.items[id] = newDeltas

f.cond.Broadcast()

} else {

// This never happens, because dedupDeltas never returns an empty list

// when given a non-empty list (as it is here).

// But if somehow it ever does return an empty list, then

// We need to remove this from our map (extra items in the queue are

// ignored if they are not in the map).

delete(f.items, id)

}

return nil

}

// List returns a list of all the items; it returns the object

// from the most recent Delta.

// You should treat the items returned inside the deltas as immutable.

func (f *DeltaFIFO) List() []interface{} {

f.lock.RLock()

defer f.lock.RUnlock()

return f.listLocked()

}

func (f *DeltaFIFO) listLocked() []interface{} {

list := make([]interface{}, 0, len(f.items))

for _, item := range f.items {

list = append(list, item.Newest().Object)

}

return list

}

// ListKeys returns a list of all the keys of the objects currently

// in the FIFO.

func (f *DeltaFIFO) ListKeys() []string {

f.lock.RLock()

defer f.lock.RUnlock()

list := make([]string, 0, len(f.items))

for key := range f.items {

list = append(list, key)

}

return list

}

// Get returns the complete list of deltas for the requested item,

// or sets exists=false.

// You should treat the items returned inside the deltas as immutable.

func (f *DeltaFIFO) Get(obj interface{}) (item interface{}, exists bool, err error) {

key, err := f.KeyOf(obj)

if err != nil {

return nil, false, KeyError{obj, err}