Controlling distributed transactions with RocketMQ and Seata

1. Concepts

The following article explains the concepts clearly and simply, so I won't go into detail here:

https://blog.csdn.net/weixin_38305440/article/details/107384969

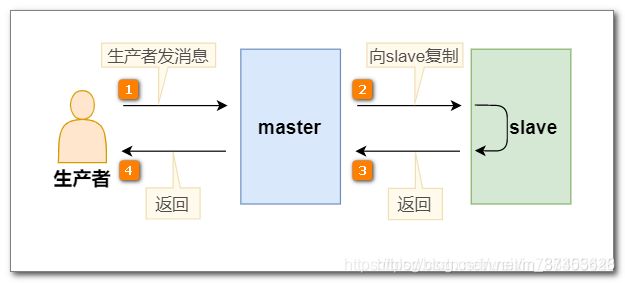

RocketMQ can send messages in two ways, synchronously and asynchronously, roughly as follows:

Synchronous messages:

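1.1 What is a transaction

A database transaction (transaction for short) is a logical unit of work in database execution. It consists of a finite sequence of database operations, in practice the several different operations that make up one piece of business logic.

A transaction has the following four properties, known as ACID:

Atomicity: the transaction is executed as a whole. Either all of its database operations are executed, or none of them are.

Consistency: a transaction must move the database from one consistent state to another, where a consistent state is one in which the data satisfies all integrity constraints. Consistency also has a second meaning: the intermediate states of a transaction must not be observable.

Isolation: when several transactions run concurrently, one transaction must not affect the others. Each behaves as if it were the only operation the database is executing.

Durability: changes made by a committed transaction are permanently stored in the database. Once the transaction has ended, its effects cannot be reversed.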

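1.2 The CAP theorem

The CAP theorem is also known as Brewer's theorem. For an architect designing distributed systems (not only distributed transactions), CAP is the entry-level theory.

**C (Consistency):** for a given client, a read returns the result of the most recent write.

Consider data spread across several nodes and updated on one of them. If every other node can then read the latest value, the system is strongly consistent. If some node cannot read it, the distributed data is inconsistent.

**A (Availability):** a non-failed node returns a reasonable response within a reasonable time. A reasonable response is not an error or a timeout. The two key points are reasonable time and reasonable response.

Reasonable time means a request must not block indefinitely; the system should answer within a reasonable time. Reasonable response means the system returns an explicit and correct result. For example, if the answer should be 50, the system returns 50, not 40.

**P (Partition tolerance):** the system keeps working when a network partition occurs. For example, if one machine in a cluster loses its network connection, the cluster as a whole still works normally.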

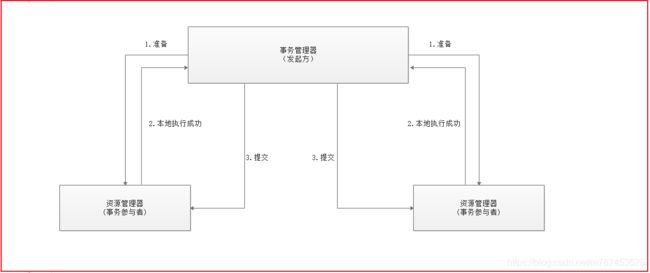

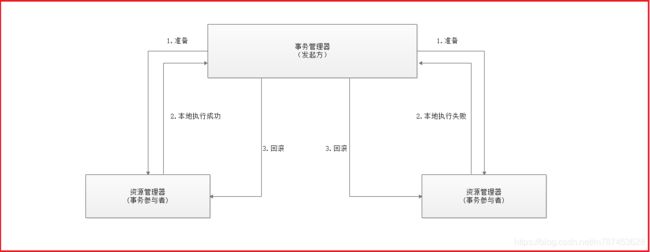

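1.3 2PC: two-phase commit

One transaction coordinator: coordinates the participants' voting and the final commit (or rollback).

Multiple transaction participants: the executors of the local transactions.

Processing happens in two steps:

(1) Voting phase: the coordinator tells the participants to prepare to commit or abort, and voting begins. Each participant tells the coordinator its decision:
- Agree: its local transaction executed successfully but is not yet committed.
- Abort: its local transaction failed.

(2) Commit phase: after receiving the votes, the coordinator notifies each participant whether to commit or roll back.

Pros: keeps the data as strongly consistent as possible. This suits critical domains with strict consistency requirements.

Cons: sacrifices availability and costs a lot of performance, because resources stay locked until phase 2 finishes. It is not suitable for high-concurrency, high-performance scenarios.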
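To make the two phases concrete, here is a minimal in-memory sketch in Java.

The names `Participant`, `Coordinator` and `FakeParticipant` are hypothetical and exist only for illustration. A real coordinator also needs a transaction log, timeouts and crash recovery, all of which are omitted here.

```java
import java.util.List;

// Hypothetical participant contract, for illustration only (not a real library API).
interface Participant {
    boolean prepare();  // phase 1: run the local tx without committing, then vote yes/no
    void commit();      // phase 2: commit the prepared local tx
    void rollback();    // phase 2: roll back (must tolerate "nothing was prepared")
}

class Coordinator {
    /** Runs both phases and returns true if the global transaction committed. */
    static boolean run(List<Participant> participants) {
        // Phase 1 (voting): every participant must vote yes
        boolean allYes = true;
        for (Participant p : participants) {
            if (!p.prepare()) {
                allYes = false;
                break; // a single "no" vote is enough to abort
            }
        }
        // Phase 2 (commit/rollback): broadcast the same decision to everyone
        for (Participant p : participants) {
            if (allYes) {
                p.commit();
            } else {
                p.rollback();
            }
        }
        return allYes;
    }
}

// Test double that records its last state
class FakeParticipant implements Participant {
    final boolean vote;
    String state = "INIT";

    FakeParticipant(boolean vote) { this.vote = vote; }

    public boolean prepare() { state = vote ? "PREPARED" : "FAILED"; return vote; }
    public void commit() { state = "COMMITTED"; }
    public void rollback() { state = "ROLLED_BACK"; }
}
```

Between `prepare()` and the phase-2 call, a real participant keeps its local transaction open and its rows locked. That open window is where the availability and performance cost comes from.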

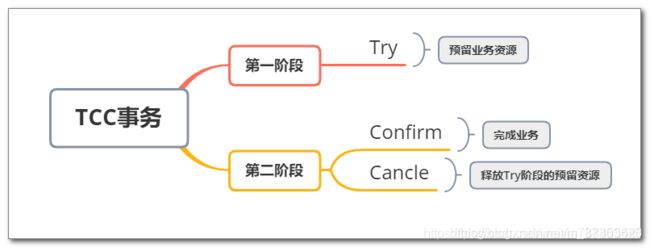

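1.4 TCC: compensating transactions

TCC splits a commit into three operations: Try (method1), Confirm (method2) and Cancel (method3). It resembles two-phase commit: Try is the first phase, and Confirm/Cancel form the second. In effect it is a two-phase commit implemented in the application layer, which makes it intrusive to business code.

| Operation | Meaning |
| --- | --- |
| Try | Reserve business resources / validate data |
| Confirm | Execute the business operation and actually commit the data, with no further business checks. If Try succeeded, Confirm must succeed; it must be idempotent |
| Cancel | Cancel the business operation and actually roll back the data; it must be idempotent |

The core idea is to split the business operation into two steps. TCC does not rely on the RM supporting distributed transactions. Instead, it achieves the distributed transaction by decomposing the business logic.

Pros: compared with 2PC, the implementation and flow are somewhat simpler. Data consistency is also somewhat weaker than with 2PC.

Cons: the drawbacks are fairly obvious. Both step 2 (Confirm) and step 3 (Cancel) can fail. Because TCC is an application-level compensation mechanism, programmers have to write a lot of extra compensation code. In some scenarios, a business flow is hard to define and handle with TCC.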
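A minimal sketch of the three TCC operations for an account balance follows. It assumes a simple in-memory account.

`TccAccount`, its `frozen` field and the XID-keyed map are hypothetical, not part of any framework. The operations behave as follows:
- Try checks the balance and reserves the funds.
- Confirm consumes the reservation.
- Cancel releases the reservation.

Both second-phase methods are idempotent.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical account used only to illustrate Try / Confirm / Cancel.
class TccAccount {
    long balance; // funds still available
    long frozen;  // funds reserved by Try but not yet confirmed
    // xid -> amount reserved by Try; the entry also serves as the idempotency marker
    private final Map<String, Long> reserved = new HashMap<>();

    TccAccount(long balance) { this.balance = balance; }

    /** Try: business check plus resource reservation. */
    boolean tryDecrease(String xid, long amount) {
        if (reserved.containsKey(xid)) return true; // repeated Try for the same xid
        if (balance < amount) return false;         // business check fails
        balance -= amount;
        frozen += amount;
        reserved.put(xid, amount);
        return true;
    }

    /** Confirm: consume the reservation; no business checks, idempotent. */
    void confirm(String xid) {
        Long amount = reserved.remove(xid);
        if (amount == null) return; // already confirmed (or never reserved)
        frozen -= amount;           // the reserved funds leave the account for good
    }

    /** Cancel: release the reservation; idempotent and safe if Try never ran. */
    void cancel(String xid) {
        Long amount = reserved.remove(xid);
        if (amount == null) return; // nothing reserved, or already cancelled
        frozen -= amount;
        balance += amount;
    }
}
```

The sketch does not handle a Try that arrives after its Cancel (usually called suspension). Production TCC code typically records cancelled XIDs so that a late Try can be refused.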

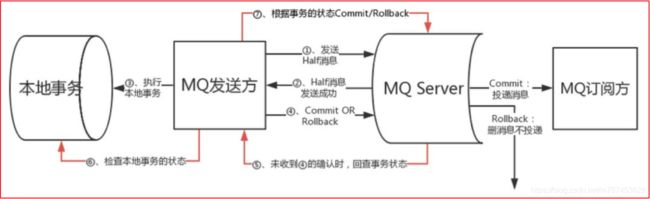

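1.5 MQ transactional messages

Some MQs support transactional messages, RocketMQ for example. They implement them in a way similar to two-phase commit. Many mainstream MQs do not offer this kind of transactional message, however. RabbitMQ does not. Kafka's transactions provide atomic writes across partitions, but not a half message with check-back.

Taking Alibaba's RocketMQ as an example, the idea is roughly:

Phase 1 sends a Prepared (half) message and obtains the message's address.

Phase 2 executes the local transaction. Phase 3 uses the address obtained in phase 1 to access the message and update its status.

In other words, the business method sends two requests to the message queue: one to send the message and one to confirm it. If the confirmation fails, RocketMQ periodically scans the transactional messages in the cluster. When it finds a Prepared message, it asks the sender for confirmation, so the producer must implement a check interface. Based on the sender's answer, RocketMQ decides whether to roll back or to deliver the message. This ensures that sending the message and the local transaction either both succeed or both fail.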
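The three-phase flow and the check-back can be simulated in memory as shown below.

`FakeBroker`, `TxProducer` and `LocalTxState` are hypothetical. The three states only mirror the commit / rollback / unknown answers a RocketMQ producer can give; this is not the RocketMQ client API.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;
import java.util.function.Supplier;

// Hypothetical states mirroring the commit / rollback / unknown answers of a producer.
enum LocalTxState { COMMIT, ROLLBACK, UNKNOWN }

class FakeBroker {
    private final Map<String, String> halfMessages = new HashMap<>(); // id -> body, invisible to consumers
    final List<String> deliverable = new ArrayList<>();              // bodies consumers can see

    void sendHalf(String id, String body) { halfMessages.put(id, body); }

    /** Second request from the producer: commit or roll back the half message. */
    void end(String id, LocalTxState state) {
        String body = halfMessages.get(id);
        if (body == null || state == LocalTxState.UNKNOWN) return; // stays pending
        halfMessages.remove(id);
        if (state == LocalTxState.COMMIT) deliverable.add(body);   // ROLLBACK simply drops it
    }

    /** Periodic scan: ask the producer's check callback about every pending half message. */
    void checkBack(Function<String, LocalTxState> producerCheck) {
        for (String id : new ArrayList<>(halfMessages.keySet())) {
            end(id, producerCheck.apply(id));
        }
    }

    int pending() { return halfMessages.size(); }
}

class TxProducer {
    static void send(FakeBroker broker, String id, String body, Supplier<LocalTxState> localTx) {
        broker.sendHalf(id, body);          // phase 1: half (prepared) message
        LocalTxState state = localTx.get(); // phase 2: run the local transaction
        broker.end(id, state);              // phase 3: confirm; if this is lost, checkBack recovers it
    }
}
```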

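Pros: achieves eventual consistency without a distributed transaction spanning several databases.

Cons: among mainstream MQs, RocketMQ is currently essentially the only one that supports this kind of transactional message.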

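1.6 Seata's AT mode

Seata is a distributed transaction middleware, and its AT mode evolved from XA transactions. XA is a database-level distributed transaction protocol. It is essentially two-phase commit and requires support from the database. MySQL 5.6 and later support XA, and other databases such as Oracle and DB2 also implement the XA interface. AT mode itself only needs ordinary local transactions plus the undo_log table, as described below.

Terminology:

Transaction Coordinator (TC): maintains the state of global transactions, and coordinates and drives their global commit or rollback.

Transaction Manager (TM): defines the boundary of a global transaction. It begins the global transaction and finally issues the decision to commit or roll it back globally.

Resource Manager (RM): manages branch transactions. It registers branches, reports their status, and receives instructions from the TC to drive the commit or rollback of branch (local) transactions.

Phase 1

Seata's JDBC data source proxy parses the business SQL and organizes the before and after images of the business data into a rollback log. It then relies on the ACID properties of the local transaction: the business update and the rollback-log write are committed in the same local transaction.

This guarantees that every committed update of business data has a corresponding rollback log.

This is where Seata differs from XA. In two-phase commit, resources usually stay locked until the actual commit or rollback in phase 2. With the rollback log, the local locks can be released at the end of phase 1, which narrows the locking scope and improves efficiency. Seata still keeps a global lock on the modified rows in the TC. If phase 2 needs to roll back, Seata only has to find the corresponding record in undo_log and turn it back into SQL.

Because Seata uses a proxied data source to turn the executed business SQL into undo_log records, those records are stored together with the business data. This is what makes the mode non-intrusive to business code.

Phase 2

If the decision is a global commit, the branch transactions have already been committed. No synchronous coordination is needed, only asynchronous cleanup of the rollback logs, so phase 2 completes very quickly.

If the decision is a global rollback, the RM receives the rollback request from the TC. It finds the corresponding rollback log records by XID and Branch ID, generates reverse update SQL from them, and executes it to complete the branch rollback.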
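The undo-log mechanism can be sketched in memory. The sketch assumes a single table of product id → stock and only one changed column per row.

`AtBranch` and `UndoRecord` are hypothetical simplifications. Real undo records hold full row images serialized into `rollback_info`, and a real rollback executes SQL.

Before restoring the before image, the sketch checks that the row still equals the after image. That is the kind of check the client's `undo.dataValidation` option controls.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Simplified undo record: before/after images of a single column of one row.
class UndoRecord {
    final String xid;
    final long branchId;
    final long pk;
    final int before;
    final int after;

    UndoRecord(String xid, long branchId, long pk, int before, int after) {
        this.xid = xid; this.branchId = branchId; this.pk = pk;
        this.before = before; this.after = after;
    }
}

class AtBranch {
    final Map<Long, Integer> stock = new HashMap<>();  // the "business table"
    final List<UndoRecord> undoLog = new ArrayList<>(); // the "undo_log table"

    /** Phase 1: business update and undo record are written together (one local tx). */
    void decrease(String xid, long branchId, long pk, int count) {
        int before = stock.get(pk);
        int after = before - count;
        stock.put(pk, after);
        undoLog.add(new UndoRecord(xid, branchId, pk, before, after));
    }

    /** Phase 2 commit: data is already committed, only the undo records are cleaned up. */
    void commit(String xid, long branchId) {
        undoLog.removeIf(r -> r.xid.equals(xid) && r.branchId == branchId);
    }

    /** Phase 2 rollback: locate records by XID + branch ID, undo them newest first. */
    void rollback(String xid, long branchId) {
        for (int i = undoLog.size() - 1; i >= 0; i--) {
            UndoRecord r = undoLog.get(i);
            if (!r.xid.equals(xid) || r.branchId != branchId) continue;
            if (stock.get(r.pk) != r.after) {
                // The row was changed outside this global tx: refuse to overwrite it blindly
                throw new IllegalStateException("dirty write on pk " + r.pk);
            }
            stock.put(r.pk, r.before); // reverse update, e.g. UPDATE ... SET count = before WHERE id = pk
            undoLog.remove(i);
        }
    }
}
```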

2. AT mode

2.1 POM file

```xml
<properties>
    <mybatis-plus.version>3.3.2</mybatis-plus.version>
    <druid-spring-boot-starter.version>1.1.23</druid-spring-boot-starter.version>
    <seata.version>1.3.0</seata.version>
    <spring-cloud-alibaba-seata.version>2.0.0.RELEASE</spring-cloud-alibaba-seata.version>
    <spring-cloud.version>Hoxton.SR6</spring-cloud.version>
</properties>

<dependencies>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.cloud</groupId>
        <artifactId>spring-cloud-starter-netflix-eureka-client</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.cloud</groupId>
        <artifactId>spring-cloud-starter-openfeign</artifactId>
    </dependency>
    <dependency>
        <groupId>com.baomidou</groupId>
        <artifactId>mybatis-plus-boot-starter</artifactId>
        <version>${mybatis-plus.version}</version>
    </dependency>
    <dependency>
        <groupId>mysql</groupId>
        <artifactId>mysql-connector-java</artifactId>
    </dependency>
    <dependency>
        <groupId>com.alibaba</groupId>
        <artifactId>druid-spring-boot-starter</artifactId>
        <version>${druid-spring-boot-starter.version}</version>
    </dependency>
    <!-- Exclude the seata-all bundled with the starter and declare ${seata.version} explicitly below -->
    <dependency>
        <groupId>com.alibaba.cloud</groupId>
        <artifactId>spring-cloud-alibaba-seata</artifactId>
        <version>${spring-cloud-alibaba-seata.version}</version>
        <exclusions>
            <exclusion>
                <artifactId>seata-all</artifactId>
                <groupId>io.seata</groupId>
            </exclusion>
        </exclusions>
    </dependency>
    <dependency>
        <groupId>io.seata</groupId>
        <artifactId>seata-all</artifactId>
        <version>${seata.version}</version>
    </dependency>
    <dependency>
        <groupId>org.projectlombok</groupId>
        <artifactId>lombok</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-test</artifactId>
        <scope>test</scope>
        <exclusions>
            <exclusion>
                <groupId>org.junit.vintage</groupId>
                <artifactId>junit-vintage-engine</artifactId>
            </exclusion>
        </exclusions>
    </dependency>
</dependencies>

<dependencyManagement>
    <dependencies>
        <dependency>
            <groupId>org.springframework.cloud</groupId>
            <artifactId>spring-cloud-dependencies</artifactId>
            <version>${spring-cloud.version}</version>
            <type>pom</type>
            <scope>import</scope>
        </dependency>
    </dependencies>
</dependencyManagement>

<build>
    <plugins>
        <plugin>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-maven-plugin</artifactId>
        </plugin>
    </plugins>
</build>
```

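2.2 SQL required by Seata

Seata needs a separate database of its own for the TC. In addition, every business database needs an undo_log table to record rollback logs.

SQL for the seata database:

https://github.com/seata/seata/tree/develop/script/server/db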

```sql
drop database if exists `seata`;
CREATE DATABASE `seata` CHARSET utf8;
use `seata`;

-- -------------------------------- The script used when storeMode is 'db' --------------------------------
-- the table to store GlobalSession data
CREATE TABLE IF NOT EXISTS `global_table`
(
    `xid` VARCHAR(128) NOT NULL,
    `transaction_id` BIGINT,
    `status` TINYINT NOT NULL,
    `application_id` VARCHAR(32),
    `transaction_service_group` VARCHAR(32),
    `transaction_name` VARCHAR(128),
    `timeout` INT,
    `begin_time` BIGINT,
    `application_data` VARCHAR(2000),
    `gmt_create` DATETIME,
    `gmt_modified` DATETIME,
    PRIMARY KEY (`xid`),
    KEY `idx_gmt_modified_status` (`gmt_modified`, `status`),
    KEY `idx_transaction_id` (`transaction_id`)
) ENGINE = InnoDB
  DEFAULT CHARSET = utf8;

-- the table to store BranchSession data
CREATE TABLE IF NOT EXISTS `branch_table`
(
    `branch_id` BIGINT NOT NULL,
    `xid` VARCHAR(128) NOT NULL,
    `transaction_id` BIGINT,
    `resource_group_id` VARCHAR(32),
    `resource_id` VARCHAR(256),
    `branch_type` VARCHAR(8),
    `status` TINYINT,
    `client_id` VARCHAR(64),
    `application_data` VARCHAR(2000),
    `gmt_create` DATETIME(6),
    `gmt_modified` DATETIME(6),
    PRIMARY KEY (`branch_id`),
    KEY `idx_xid` (`xid`)
) ENGINE = InnoDB
  DEFAULT CHARSET = utf8;

-- the table to store lock data
CREATE TABLE IF NOT EXISTS `lock_table`
(
    `row_key` VARCHAR(128) NOT NULL,
    `xid` VARCHAR(96),
    `transaction_id` BIGINT,
    `branch_id` BIGINT NOT NULL,
    `resource_id` VARCHAR(256),
    `table_name` VARCHAR(32),
    `pk` VARCHAR(36),
    `gmt_create` DATETIME,
    `gmt_modified` DATETIME,
    PRIMARY KEY (`row_key`),
    KEY `idx_branch_id` (`branch_id`)
) ENGINE = InnoDB
  DEFAULT CHARSET = utf8;
```

SQL for the undo_log table (run it in every business database):

https://github.com/seata/seata/tree/develop/script/client/at/db

```sql
-- for AT mode you must init this sql for your business database. the seata server does not need it.
CREATE TABLE IF NOT EXISTS `undo_log`
(
    `branch_id` BIGINT(20) NOT NULL COMMENT 'branch transaction id',
    `xid` VARCHAR(100) NOT NULL COMMENT 'global transaction id',
    `context` VARCHAR(128) NOT NULL COMMENT 'undo_log context,such as serialization',
    `rollback_info` LONGBLOB NOT NULL COMMENT 'rollback info',
    `log_status` INT(11) NOT NULL COMMENT '0:normal status,1:defense status',
    `log_created` DATETIME(6) NOT NULL COMMENT 'create datetime',
    `log_modified` DATETIME(6) NOT NULL COMMENT 'modify datetime',
    UNIQUE KEY `ux_undo_log` (`xid`, `branch_id`)
) ENGINE = InnoDB
  AUTO_INCREMENT = 1
  DEFAULT CHARSET = utf8 COMMENT ='AT transaction mode undo table';
```

2.3 Eureka registry

Omitted.

2.4 Seata Server: the TC (global transaction coordinator)

https://github.com/seata/seata/releases

Seata Server has two configuration files. The first is:

seata/conf/registry.conf

Set the registry type to eureka:

```
registry {
  # file, nacos, eureka, redis, zk, consul, etcd3, sofa
  type = "eureka"

  nacos {
    serverAddr = "localhost"
    namespace = ""
    cluster = "default"
  }
  eureka {
    # Eureka server address
    serviceUrl = "http://localhost:8761/eureka"
    # service ID the TC registers under
    application = "seata-server"
    weight = "1"
  }
  redis {
    serverAddr = "localhost:6379"
    db = "0"
    password = ""
    cluster = "default"
    timeout = "0"
  }
  zk {
    cluster = "default"
    serverAddr = "127.0.0.1:2181"
    session.timeout = 6000
    connect.timeout = 2000
    username = ""
    password = ""
  }
  consul {
    cluster = "default"
    serverAddr = "127.0.0.1:8500"
  }
  etcd3 {
    cluster = "default"
    serverAddr = "http://localhost:2379"
  }
  sofa {
    serverAddr = "127.0.0.1:9603"
    application = "default"
    region = "DEFAULT_ZONE"
    datacenter = "DefaultDataCenter"
    cluster = "default"
    group = "SEATA_GROUP"
    addressWaitTime = "3000"
  }
  file {
    name = "file.conf"
  }
}
```

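Seata must store global transaction data, branch transaction data and global lock data. Where is this data stored?

The storage configuration can live either in a configuration center or in a local file. The configuration centers Seata Server supports are nacos, apollo, zk, consul and etcd3.

We choose the simplest option, a local file, which is specified in registry.conf: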

```
config {
  # file, nacos, apollo, zk, consul, etcd3, springCloudConfig
  type = "file"

  nacos {
    serverAddr = "localhost"
    namespace = ""
    group = "SEATA_GROUP"
  }
  consul {
    serverAddr = "127.0.0.1:8500"
  }
  apollo {
    app.id = "seata-server"
    apollo.meta = "http://192.168.1.204:8801"
    namespace = "application"
  }
  zk {
    serverAddr = "127.0.0.1:2181"
    session.timeout = 6000
    connect.timeout = 2000
    username = ""
    password = ""
  }
  etcd3 {
    serverAddr = "http://localhost:2379"
  }
  file {
    name = "file.conf"
  }
}
```

file.conf configures where the transaction data is stored. The supported stores are file, db and redis.

Here we choose the database as the store, which is configured in file.conf:

seata/conf/file.conf

```
## transaction log store, only used in seata-server
store {
  ## store mode: file, db, redis
  mode = "db"

  ## file store property
  file {
    ## store location dir
    dir = "sessionStore"
    # branch session size, if exceeded first try compress lockkey, still exceeded throws exceptions
    maxBranchSessionSize = 16384
    # global session size, if exceeded throws exceptions
    maxGlobalSessionSize = 512
    # file buffer size, if exceeded allocate new buffer
    fileWriteBufferCacheSize = 16384
    # when recover batch read size
    sessionReloadReadSize = 100
    # async, sync
    flushDiskMode = async
  }

  ## database store property
  db {
    ## the implement of javax.sql.DataSource, such as DruidDataSource(druid)/BasicDataSource(dbcp)/HikariDataSource(hikari) etc.
    datasource = "druid"
    ## mysql/oracle/postgresql/h2/oceanbase etc.
    dbType = "mysql"
    driverClassName = "com.mysql.jdbc.Driver"
    # database connection settings
    url = "jdbc:mysql://192.168.140.213:3306/seata?useUnicode=true&characterEncoding=UTF-8&serverTimezone=GMT%2B8"
    user = "root"
    password = "root"
    minConn = 5
    maxConn = 30
    # names of the transaction log tables
    globalTable = "global_table"
    branchTable = "branch_table"
    lockTable = "lock_table"
    queryLimit = 100
    maxWait = 5000
  }

  ## redis store property
  redis {
    host = "127.0.0.1"
    port = "6379"
    password = ""
    database = "0"
    minConn = 1
    maxConn = 10
    queryLimit = 100
  }
}
```

2.5 Client

2.5.1 application.yml

```yaml
spring:
  ......
  cloud:
    alibaba:
      seata:
        tx-service-group: order_tx_group
```

2.5.2 registry.conf

The client uses registry.conf to look up the TC's address in the registry, so the registry address is configured here:

registry.conf

```
registry {
  # file, nacos, eureka, redis, zk, consul, etcd3, sofa
  type = "eureka"

  nacos {
    serverAddr = "localhost"
    namespace = ""
    cluster = "default"
  }
  eureka {
    serviceUrl = "http://localhost:8761/eureka"
    # application = "default"
    # weight = "1"
  }
  redis {
    serverAddr = "localhost:6379"
    db = "0"
    password = ""
    cluster = "default"
    timeout = "0"
  }
  zk {
    cluster = "default"
    serverAddr = "127.0.0.1:2181"
    session.timeout = 6000
    connect.timeout = 2000
    username = ""
    password = ""
  }
  consul {
    cluster = "default"
    serverAddr = "127.0.0.1:8500"
  }
  etcd3 {
    cluster = "default"
    serverAddr = "http://localhost:2379"
  }
  sofa {
    serverAddr = "127.0.0.1:9603"
    application = "default"
    region = "DEFAULT_ZONE"
    datacenter = "DefaultDataCenter"
    cluster = "default"
    group = "SEATA_GROUP"
    addressWaitTime = "3000"
  }
  file {
    name = "file.conf"
  }
}

config {
  # file, nacos, apollo, zk, consul, etcd3, springCloudConfig
  type = "file"

  nacos {
    serverAddr = "localhost"
    namespace = ""
    group = "SEATA_GROUP"
  }
  consul {
    serverAddr = "127.0.0.1:8500"
  }
  apollo {
    app.id = "seata-server"
    apollo.meta = "http://192.168.1.204:8801"
    namespace = "application"
  }
  zk {
    serverAddr = "127.0.0.1:2181"
    session.timeout = 6000
    connect.timeout = 2000
    username = ""
    password = ""
  }
  etcd3 {
    serverAddr = "http://localhost:2379"
  }
  file {
    name = "file.conf"
  }
}
```

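Here we map the transaction group to the TC's service ID, seata-server:

vgroupMapping.order_tx_group = "seata-server"

order_tx_group is the transaction group name configured in application.yml.

This mapping is set in the client's file.conf below. The name on the right must match the service ID the TC registered under, which is application = "seata-server" in the server's registry.conf.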

file.conf

```
transport {
  # tcp, udt, unix-domain-socket
  type = "TCP"
  # NIO, NATIVE
  server = "NIO"
  # enable heartbeat
  heartbeat = true
  # the client batch send request enable
  enableClientBatchSendRequest = true
  # thread factory for netty
  threadFactory {
    bossThreadPrefix = "NettyBoss"
    workerThreadPrefix = "NettyServerNIOWorker"
    serverExecutorThread-prefix = "NettyServerBizHandler"
    shareBossWorker = false
    clientSelectorThreadPrefix = "NettyClientSelector"
    clientSelectorThreadSize = 1
    clientWorkerThreadPrefix = "NettyClientWorkerThread"
    # netty boss thread size, will not be used for UDT
    bossThreadSize = 1
    # auto default pin or 8
    workerThreadSize = "default"
  }
  shutdown {
    # when destroy server, wait seconds
    wait = 3
  }
  serialization = "seata"
  compressor = "none"
}

service {
  # transaction service group mapping
  # order_tx_group must match "tx-service-group: order_tx_group" in application.yml
  # "seata-server" must match the name the TC server registered under
  # The client gets seata-server's address from Eureka, then registers itself with seata-server under this group
  vgroupMapping.order_tx_group = "seata-server"
  # only support when registry.type=file, please don't set multiple addresses
  order_tx_group.grouplist = "127.0.0.1:8091"
  # degrade, current not support
  enableDegrade = false
  # disable seata
  disableGlobalTransaction = false
}

client {
  rm {
    asyncCommitBufferLimit = 10000
    lock {
      retryInterval = 10
      retryTimes = 30
      retryPolicyBranchRollbackOnConflict = true
    }
    reportRetryCount = 5
    tableMetaCheckEnable = false
    reportSuccessEnable = false
  }
  tm {
    commitRetryCount = 5
    rollbackRetryCount = 5
  }
  undo {
    dataValidation = true
    logSerialization = "jackson"
    logTable = "undo_log"
  }
  log {
    exceptionRate = 100
  }
}
```
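The lookup chain described above runs in four steps:
1. tx-service-group in application.yml
2. vgroupMapping in file.conf
3. the service ID in the registry
4. the TC addresses

This chain can be modelled with two maps. `TcAddressLookup` is a hypothetical illustration, not one of Seata's internal classes. The `registry` map stands in for what Eureka would return.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical model of the client-side TC lookup; not Seata's actual implementation.
class TcAddressLookup {
    // file.conf: vgroupMapping.<tx-service-group> = "<TC service ID>"
    final Map<String, String> vgroupMapping = new HashMap<>();
    // registry (e.g. Eureka): service ID -> registered instance addresses
    final Map<String, List<String>> registry = new HashMap<>();

    List<String> resolve(String txServiceGroup) {
        String serviceId = vgroupMapping.get(txServiceGroup);
        if (serviceId == null) {
            // A typo in tx-service-group or vgroupMapping typically surfaces as "no available service"
            throw new IllegalStateException("no vgroupMapping for " + txServiceGroup);
        }
        return registry.getOrDefault(serviceId, Collections.emptyList());
    }
}
```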

2.6 Create the Seata data source proxy

```java
import com.alibaba.druid.pool.DruidDataSource;
import io.seata.rm.datasource.DataSourceProxy;
import org.springframework.boot.context.properties.ConfigurationProperties;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.context.annotation.Primary;

import javax.sql.DataSource;

@Configuration
public class DatasourceConfiguration {

    // The real connection pool, bound to the spring.datasource.* properties
    @Bean
    @ConfigurationProperties(prefix = "spring.datasource")
    public DataSource druidDataSource() {
        DruidDataSource druidDataSource = new DruidDataSource();
        return druidDataSource;
    }

    // @Primary acts as a default: when several beans of the same type exist and none is
    // named explicitly, the @Primary one is injected, so MyBatis uses the Seata proxy
    @Primary
    @Bean("dataSource")
    public DataSourceProxy dataSourceProxy(DataSource druidDataSource) {
        return new DataSourceProxy(druidDataSource);
    }
}
```

In the main class, exclude Spring Boot's default data source auto-configuration:

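```java
import org.mybatis.spring.annotation.MapperScan;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.boot.autoconfigure.jdbc.DataSourceAutoConfiguration;
import org.springframework.cloud.openfeign.EnableFeignClients;

@MapperScan("cn.tedu.order.mapper")
@EnableFeignClients
// Exclude the auto-configured DataSource so the proxy defined above is used instead
@SpringBootApplication(exclude = DataSourceAutoConfiguration.class)
public class OrderApplication {
    public static void main(String[] args) {
        SpringApplication.run(OrderApplication.class, args);
    }
}
```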

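2.7 Adding @GlobalTransactional

The Seata AT-mode setup is now complete. Add the @GlobalTransactional annotation to the method that starts the global transaction; it is used in much the same way as @Transactional. This mode is only minimally intrusive to the source code.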
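Conceptually, the method annotated with @GlobalTransactional plays the TM role:
1. It begins a global transaction.
2. It runs the business code, whose branches register under the XID.
3. It decides on commit or rollback depending on whether an exception escapes.

The sketch below models only that decision logic. `FakeTm` is hypothetical; Seata actually does this in an AOP interceptor that talks to the TC.

```java
import java.util.UUID;
import java.util.function.Consumer;

// Hypothetical stand-in for the TM role; records the global decision it would send to the TC.
class FakeTm {
    String lastDecision;

    void inGlobalTx(Consumer<String> business) {
        String xid = UUID.randomUUID().toString(); // begin: in Seata the TC issues the XID
        try {
            business.accept(xid);                  // branch transactions register under this XID
            lastDecision = "COMMIT";               // method returned normally: global commit
        } catch (RuntimeException e) {
            lastDecision = "ROLLBACK";             // exception escaped: global rollback
            throw e;
        }
    }
}
```

A practical consequence follows. If create() catches the exception from a failed downstream call and returns normally, the TM sees success and commits globally. Let the exception propagate out of the annotated method, or rethrow it.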

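3. TCC

Like Seata AT, TCC is a two-phase transaction. Its main differences from AT are:

TCC is highly intrusive to business code

The data operations of each phase must be coded by hand; the transaction framework cannot handle them automatically.

TCC is more efficient

It does not need global locks on the data, so multiple transactions can operate on the same data at the same time.

AT mode mainly adds a table to record rollback logs, whereas TCC mode adds fields to freeze the data being operated on.

The preparation is the same as for AT mode up to and including section 2.5. There is no need to switch to the Seata data source proxy.

3.6 Modify the service implementation

This step starts once step 2.5 of the AT mode has been completed.

Here is the modified service implementation.

The original database operation is removed and replaced by an injected implementation of the new XXTCCAction interface (OrderTccAction here):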

```java
import io.seata.spring.annotation.GlobalTransactional;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Service;

@Service
public class OrderServiceImpl implements OrderService {
    @Autowired
    private OrderMapper orderMapper;
    @Autowired
    private EasyIdGeneratorClient easyIdGeneratorClient;
    @Autowired
    private AccountClient accountClient;
    @Autowired
    private StorageClient storageClient;
    @Autowired
    private OrderTccAction orderTccAction;

    @GlobalTransactional
    @Override
    public void create(Order order) {
        // Get an id from the global unique-id generator
        Long orderId = easyIdGeneratorClient.nextId("order_business");
        order.setId(orderId);

        // orderMapper.create(order);
        // The official docs advise against passing the Order object itself; pass simple values.
        // The BusinessActionContext argument is passed as null; Seata fills it in.
        orderTccAction.prepareCreateOrder(
                null,
                order.getId(),
                order.getUserId(),
                order.getProductId(),
                order.getCount(),
                order.getMoney()
        );

        // Decrease the stock
        storageClient.decrease(order.getProductId(), order.getCount());
        // Decrease the account balance
        accountClient.decrease(order.getUserId(), order.getMoney());
    }
}
```

3.7 Add the TCC interface

```java
import io.seata.rm.tcc.api.BusinessActionContext;
import io.seata.rm.tcc.api.BusinessActionContextParameter;
import io.seata.rm.tcc.api.LocalTCC;
import io.seata.rm.tcc.api.TwoPhaseBusinessAction;

import java.math.BigDecimal;

@LocalTCC
public interface OrderTccAction {
    /*
     * Phase 1 method.
     * The annotation names the two phase-2 methods.
     * BusinessActionContext is the context object that carries data between the two phases.
     * Arguments annotated with @BusinessActionContextParameter are stored in the BusinessActionContext.
     */
    @TwoPhaseBusinessAction(name = "orderTccAction", commitMethod = "commit", rollbackMethod = "rollback")
    boolean prepareCreateOrder(BusinessActionContext businessActionContext,
                               @BusinessActionContextParameter(paramName = "orderId") Long orderId,
                               @BusinessActionContextParameter(paramName = "userId") Long userId,
                               @BusinessActionContextParameter(paramName = "productId") Long productId,
                               @BusinessActionContextParameter(paramName = "count") Integer count,
                               @BusinessActionContextParameter(paramName = "money") BigDecimal money);

    // Phase 2: commit
    boolean commit(BusinessActionContext businessActionContext);

    // Phase 2: rollback
    boolean rollback(BusinessActionContext businessActionContext);
}
```

3.8 Implementation of the TCC interface

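```java
import io.seata.rm.tcc.api.BusinessActionContext;
import lombok.extern.slf4j.Slf4j;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Component;
import org.springframework.transaction.annotation.Transactional;

import java.math.BigDecimal;

@Component
@Slf4j
public class OrderTccActionImpl implements OrderTccAction {
    @Autowired
    private OrderMapper orderMapper;

    @Transactional
    @Override
    public boolean prepareCreateOrder(BusinessActionContext businessActionContext, Long orderId, Long userId,
                                      Long productId, Integer count, BigDecimal money) {
        log.info("Phase 1: create the order in frozen state - {}", businessActionContext.getXid());
        // Status 0 = frozen
        Order order = new Order(orderId, userId, productId, count, money, 0);
        orderMapper.create(order);
        // Phase 1 succeeded: save a marker for phase 2 to check
        // (use the same key class, OrderTccAction.class, in every ResultHolder call)
        ResultHolder.setResult(OrderTccAction.class, businessActionContext.getXid(), "p");
        return true;
    }

    @Transactional
    @Override
    public boolean commit(BusinessActionContext businessActionContext) {
        log.info("Create order, phase 2 commit: set status to 1 (unfrozen) - {}", businessActionContext.getXid());
        // Idempotency check: the RM may run phase 2 more than once, so skip if the marker is gone
        if (ResultHolder.getResult(OrderTccAction.class, businessActionContext.getXid()) == null) {
            return true;
        }
        // Seata converts the context to JSON in phase 1, so casting straight to Long throws
        Long orderId = Long.parseLong(businessActionContext.getActionContext("orderId").toString());
        orderMapper.updateStatus(orderId, 1);
        // Remove the marker once the commit has succeeded
        ResultHolder.removeResult(OrderTccAction.class, businessActionContext.getXid());
        return true;
    }

    @Transactional
    @Override
    public boolean rollback(BusinessActionContext businessActionContext) {
        log.info("Create order, phase 2 rollback: delete the order - {}", businessActionContext.getXid());
        // If phase 1 did not complete there is nothing to roll back:
        // phase 1 runs in a local transaction, which was already rolled back when it failed.
        // If phase 1 succeeded but another participant of the global transaction failed, we roll back here.
        // Idempotency: if the rollback has already been done, return immediately.
        if (ResultHolder.getResult(OrderTccAction.class, businessActionContext.getXid()) == null) {
            return true;
        }
        Long orderId = Long.parseLong(businessActionContext.getActionContext("orderId").toString());
        orderMapper.deleteById(orderId);
        // Remove the marker when the rollback is finished
        ResultHolder.removeResult(OrderTccAction.class, businessActionContext.getXid());
        return true;
    }
}
```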
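The Long.parseLong workaround in commit() and rollback() exists because the action context goes through JSON. A value that fits in an int typically comes back as Integer, so casting it to Long throws ClassCastException.

A small helper that accepts any Number or String avoids the problem. `ContextValues.toLong` is a hypothetical name, not a Seata API.

```java
import java.util.HashMap;
import java.util.Map;

class ContextValues {
    /** Converts a context value to Long whether it arrives as Integer, Long or String. */
    static Long toLong(Object value) {
        if (value == null) return null;
        if (value instanceof Number) return ((Number) value).longValue();
        return Long.parseLong(value.toString());
    }

    public static void main(String[] args) {
        Map<String, Object> ctx = new HashMap<>();
        ctx.put("orderId", 42); // after a JSON round trip a small number is an Integer
        try {
            Long orderId = (Long) ctx.get("orderId"); // fails: Integer cannot be cast to Long
            System.out.println(orderId);
        } catch (ClassCastException e) {
            System.out.println("direct cast failed");
        }
        System.out.println(toLong(ctx.get("orderId"))); // safe conversion
    }
}
```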

Idempotency was analysed in an earlier blog post, so I won't explain it again here. The TCC mode is now basically complete.
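ResultHolder itself is not shown in this article. For readers without the earlier post, a minimal in-memory version matching the three calls used above might look like this. It is hypothetical, and the author's own implementation may differ.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical helper: per action class, maps xid -> marker set in phase 1.
class ResultHolder {
    private static final Map<Class<?>, Map<String, String>> MAP = new ConcurrentHashMap<>();

    static void setResult(Class<?> actionClass, String xid, String v) {
        MAP.computeIfAbsent(actionClass, k -> new ConcurrentHashMap<>()).put(xid, v);
    }

    static String getResult(Class<?> actionClass, String xid) {
        Map<String, String> results = MAP.get(actionClass);
        return results == null ? null : results.get(xid);
    }

    static void removeResult(Class<?> actionClass, String xid) {
        Map<String, String> results = MAP.get(actionClass);
        if (results != null) {
            results.remove(xid);
        }
    }
}
```

Two caveats apply:
- The class passed as the key must be identical in setResult, getResult and removeResult. If one call passes OrderTccAction.class and another passes getClass() (the implementation class), they never match.
- An in-memory map is lost on restart and is not shared between service instances. Production code usually stores the marker in a table written in the same local transaction as phase 1.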

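4. RocketMQ

For RocketMQ basics, see:

https://blog.csdn.net/weixin_38305440/category_10137513.html

After RocketMQ receives a transactional message, it waits for the producer to commit or roll it back. If it receives neither instruction, it actively asks the producer for the message's status. This is called a check-back.

For RocketMQ to check back the transaction status in the order project, the status has to be recorded somewhere. We therefore add a table that records the transaction status.

Seata AT mode needs the undo_log table, whereas RocketMQ needs a separate table in which the local transaction status can be looked up.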

```sql
CREATE TABLE tx_table(
    `xid` char(32) PRIMARY KEY COMMENT 'transaction id',
    `status` int COMMENT '0-commit, 1-rollback, 2-unknown',
    `created_at` BIGINT UNSIGNED NOT NULL COMMENT 'creation time'
);
```
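With tx_table in place, the producer's check-back handler only has to map the stored status to an answer.

The sketch below simulates tx_table with a map. `TxTableCheck` and `CheckResult` are hypothetical; CheckResult merely mirrors RocketMQ's commit / rollback / unknown answers.

A missing row is answered with UNKNOWN so that the broker asks again later. Answering ROLLBACK instead is an equally valid design choice.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical answers mirroring RocketMQ's commit / rollback / unknown check-back states.
enum CheckResult { COMMIT, ROLLBACK, UNKNOWN }

class TxTableCheck {
    // Simulated tx_table: xid -> status (0 = commit, 1 = rollback, 2 = unknown)
    final Map<String, Integer> txTable = new HashMap<>();

    CheckResult check(String xid) {
        Integer status = txTable.get(xid);
        if (status == null) {
            // No row yet: the local transaction may still be running, so ask again later
            return CheckResult.UNKNOWN;
        }
        switch (status) {
            case 0:
                return CheckResult.COMMIT;
            case 1:
                return CheckResult.ROLLBACK;
            default:
                return CheckResult.UNKNOWN;
        }
    }
}
```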

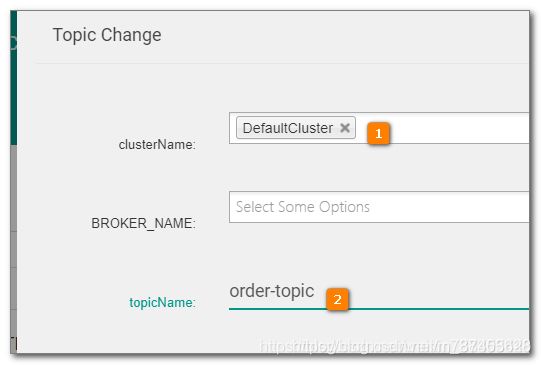

4.1 Add a topic in RocketMQ

4.2 Add the dependency

```xml
<dependency>
    <groupId>org.apache.rocketmq</groupId>
    <artifactId>rocketmq-spring-boot-starter</artifactId>
    <version>2.1.0</version>
</dependency>
```

4.3 application.yml

```yaml
rocketmq:
  name-server: 192.168.64.151:9876;192.168.64.152:9876
  producer:
    group: order-group
```

4.4 Entity, mapper interface and XML for tx_table

Entity class

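```java
package cn.tedu.order.tx;

import lombok.AllArgsConstructor;
import lombok.Data;
import lombok.NoArgsConstructor;

@Data
@NoArgsConstructor
@AllArgsConstructor
public class TxInfo {
    private String xid;
    private long created; // mapped to the created_at column in the XML below
    private int status;   // 0-commit, 1-rollback, 2-unknown
}
```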

Mapper interface

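```java
package cn.tedu.order.mapper;

import cn.tedu.order.tx.TxInfo;
import com.baomidou.mybatisplus.core.mapper.BaseMapper;

public interface TxMapper extends BaseMapper<TxInfo> {
    // True if a row with this xid exists in tx_table
    Boolean exists(String xid);
}
```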

Mapper XML

```xml
<?xml version="1.0" encoding="UTF-8" ?>
<!DOCTYPE mapper PUBLIC "-//mybatis.org//DTD Mapper 3.0//EN" "http://mybatis.org/dtd/mybatis-3-mapper.dtd" >
<mapper namespace="cn.tedu.order.mapper.TxMapper" >
    <resultMap id="BaseResultMap" type="cn.tedu.order.tx.TxInfo" >
        <id column="xid" property="xid" jdbcType="CHAR" />
        <result column="created_at" property="created" jdbcType="BIGINT" />
        <result column="status" property="status" jdbcType="INTEGER"/>
    </resultMap>

    <insert id="insert">
        INSERT INTO `tx_table`(`xid`,`created_at`,`status`) VALUES(#{xid},#{created},#{status});
    </insert>

    <select id="exists" resultType="boolean">
        SELECT COUNT(1) FROM tx_table WHERE xid=#{xid};
    </select>

    <select id="selectById" resultMap="BaseResultMap">
        SELECT `xid`,`created_at`,`status` FROM tx_table WHERE xid=#{xid};
    </select>
</mapper>
```

4.5 JSON utility class

package cn.tedu.order.util;

import java.io.File;

import java.io.FileWriter;

import java.io.IOException;

import java.io.InputStream;

import java.io.InputStreamReader;

import java.io.Writer;

import java.math.BigDecimal;

import java.math.BigInteger;

import java.net.URL;

import java.nio.charset.StandardCharsets;

import java.text.SimpleDateFormat;

import java.util.ArrayList;

import java.util.List;

import org.apache.commons.lang3.StringUtils;

import com.fasterxml.jackson.annotation.JsonInclude;

import com.fasterxml.jackson.core.JsonGenerator;

import com.fasterxml.jackson.core.JsonParser;

import com.fasterxml.jackson.core.JsonProcessingException;

import com.fasterxml.jackson.core.type.TypeReference;

import com.fasterxml.jackson.databind.DeserializationFeature;

import com.fasterxml.jackson.databind.JsonNode;

import com.fasterxml.jackson.databind.ObjectMapper;

import com.fasterxml.jackson.databind.SerializationFeature;

import com.fasterxml.jackson.databind.node.ObjectNode;

import com.fasterxml.jackson.datatype.jdk8.Jdk8Module;

import com.fasterxml.jackson.datatype.jsr310.JavaTimeModule;

import com.fasterxml.jackson.module.paramnames.ParameterNamesModule;

import lombok.extern.slf4j.Slf4j;

@Slf4j

public class JsonUtil {

private static ObjectMapper mapper;

private static JsonInclude.Include DEFAULT_PROPERTY_INCLUSION = JsonInclude.Include.NON_DEFAULT;

private static boolean IS_ENABLE_INDENT_OUTPUT = false;

private static String CSV_DEFAULT_COLUMN_SEPARATOR = ",";

static {

try {

initMapper();

configPropertyInclusion();

configIndentOutput();

configCommon();

} catch (Exception e) {

log.error("jackson config error", e);

}

}

private static void initMapper() {

mapper = new ObjectMapper();

}

private static void configCommon() {

config(mapper);

}

private static void configPropertyInclusion() {

mapper.setSerializationInclusion(DEFAULT_PROPERTY_INCLUSION);

}

private static void configIndentOutput() {

mapper.configure(SerializationFeature.INDENT_OUTPUT, IS_ENABLE_INDENT_OUTPUT);

}

private static void config(ObjectMapper objectMapper) {

objectMapper.enable(JsonGenerator.Feature.WRITE_BIGDECIMAL_AS_PLAIN);

objectMapper.enable(DeserializationFeature.ACCEPT_EMPTY_STRING_AS_NULL_OBJECT);

objectMapper.enable(DeserializationFeature.ACCEPT_SINGLE_VALUE_AS_ARRAY);

objectMapper.enable(DeserializationFeature.FAIL_ON_READING_DUP_TREE_KEY);

objectMapper.enable(DeserializationFeature.FAIL_ON_NUMBERS_FOR_ENUMS);

objectMapper.disable(DeserializationFeature.FAIL_ON_UNKNOWN_PROPERTIES);

objectMapper.disable(DeserializationFeature.FAIL_ON_NULL_FOR_PRIMITIVES);

objectMapper.disable(SerializationFeature.FAIL_ON_EMPTY_BEANS);

objectMapper.enable(JsonParser.Feature.ALLOW_COMMENTS);

objectMapper.disable(JsonGenerator.Feature.ESCAPE_NON_ASCII);

objectMapper.enable(JsonGenerator.Feature.IGNORE_UNKNOWN);

objectMapper.enable(JsonParser.Feature.ALLOW_UNQUOTED_FIELD_NAMES);

objectMapper.disable(SerializationFeature.WRITE_DATES_AS_TIMESTAMPS);

objectMapper.setDateFormat(new SimpleDateFormat("yyyy-MM-dd HH:mm:ss"));

objectMapper.enable(JsonParser.Feature.ALLOW_SINGLE_QUOTES);

objectMapper.registerModule(new ParameterNamesModule());

objectMapper.registerModule(new Jdk8Module());

objectMapper.registerModule(new JavaTimeModule());

}

public static void setSerializationInclusion(JsonInclude.Include inclusion) {

DEFAULT_PROPERTY_INCLUSION = inclusion;

configPropertyInclusion();

}

public static void setIndentOutput(boolean isEnable) {

IS_ENABLE_INDENT_OUTPUT = isEnable;

configIndentOutput();

}

public static <V> V from(URL url, Class<V> c) {

try {

return mapper.readValue(url, c);

} catch (IOException e) {

log.error("jackson from error, url: {}, type: {}", url.getPath(), c, e);

return null;

}

}

public static <V> V from(InputStream inputStream, Class<V> c) {

try {

return mapper.readValue(inputStream, c);

} catch (IOException e) {

log.error("jackson from error, type: {}", c, e);

return null;

}

}

public static <V> V from(File file, Class<V> c) {

try {

return mapper.readValue(file, c);

} catch (IOException e) {

log.error("jackson from error, file path: {}, type: {}", file.getPath(), c, e);

return null;

}

}

public static <V> V from(Object jsonObj, Class<V> c) {

try {

return mapper.readValue(jsonObj.toString(), c);

} catch (IOException e) {

log.error("jackson from error, json: {}, type: {}", jsonObj.toString(), c, e);

return null;

}

}

public static <V> V from(String json, Class<V> c) {

try {

return mapper.readValue(json, c);

} catch (IOException e) {

log.error("jackson from error, json: {}, type: {}", json, c, e);

return null;

}

}

public static <V> V from(URL url, TypeReference<V> type) {

try {

return mapper.readValue(url, type);

} catch (IOException e) {

log.error("jackson from error, url: {}, type: {}", url.getPath(), type, e);

return null;

}

}

public static <V> V from(InputStream inputStream, TypeReference<V> type) {

try {

return mapper.readValue(inputStream, type);

} catch (IOException e) {

log.error("jackson from error, type: {}", type, e);

return null;

}

}

public static <V> V from(File file, TypeReference<V> type) {

try {

return mapper.readValue(file, type);

} catch (IOException e) {

log.error("jackson from error, file path: {}, type: {}", file.getPath(), type, e);

return null;

}

}

public static <V> V from(Object jsonObj, TypeReference<V> type) {

try {

return mapper.readValue(jsonObj.toString(), type);

} catch (IOException e) {

log.error("jackson from error, json: {}, type: {}", jsonObj.toString(), type, e);

return null;

}

}

public static <V> V from(String json, TypeReference<V> type) {

try {

return mapper.readValue(json, type);

} catch (IOException e) {

log.error("jackson from error, json: {}, type: {}", json, type, e);

return null;

}

}

public static <V> String to(List<V> list) {

try {

return mapper.writeValueAsString(list);

} catch (JsonProcessingException e) {

log.error("jackson to error, obj: {}", list, e);

return null;

}

}

public static <V> String to(V v) {

try {

return mapper.writeValueAsString(v);

} catch (JsonProcessingException e) {

log.error("jackson to error, obj: {}", v, e);

return null;

}

}

public static <V> void toFile(String path, List<V> list) {

try (Writer writer = new FileWriter(new File(path), true)) {

mapper.writer().writeValues(writer).writeAll(list);

writer.flush();

} catch (Exception e) {

log.error("jackson to file error, path: {}, list: {}", path, list, e);

}

}

public static <V> void toFile(String path, V v) {

try (Writer writer = new FileWriter(new File(path), true)) {

mapper.writer().writeValues(writer).write(v);

writer.flush();

} catch (Exception e) {

log.error("jackson to file error, path: {}, obj: {}", path, v, e);

}

}

public static String getString(String json, String key) {

if (StringUtils.isEmpty(json)) {

return null;

}

try {

JsonNode node = mapper.readTree(json);

if (null != node) {

return node.get(key).asText();

} else {

return null;

}

} catch (IOException e) {

log.error("jackson get string error, json: {}, key: {}", json, key, e);

return null;

}

}

public static Integer getInt(String json, String key) {

if (StringUtils.isEmpty(json)) {

return null;

}

try {

JsonNode node = mapper.readTree(json);

if (null != node) {

return node.get(key).intValue();

} else {

return null;

}

} catch (IOException e) {

log.error("jackson get int error, json: {}, key: {}", json, key, e);

return null;

}

}

public static Long getLong(String json, String key) {

if (StringUtils.isEmpty(json)) {

return null;

}

try {

JsonNode node = mapper.readTree(json);

if (null != node) {

return node.get(key).longValue();

} else {

return null;

}

} catch (IOException e) {

log.error("jackson get long error, json: {}, key: {}", json, key, e);

return null;

}

}

public static Double getDouble(String json, String key) {

if (StringUtils.isEmpty(json)) {

return null;

}

try {

JsonNode node = mapper.readTree(json);

if (null != node) {

return node.get(key).doubleValue();

} else {

return null;

}

} catch (IOException e) {

log.error("jackson get double error, json: {}, key: {}", json, key, e);

return null;

}

}

public static BigInteger getBigInteger(String json, String key) {

if (StringUtils.isEmpty(json)) {

return BigInteger.ZERO; // new BigInteger("0.0") would throw NumberFormatException

}

try {

JsonNode node = mapper.readTree(json);

if (null != node) {

return node.get(key).bigIntegerValue();

} else {

return null;

}

} catch (IOException e) {

log.error("jackson get biginteger error, json: {}, key: {}", json, key, e);

return null;

}

}

public static BigDecimal getBigDecimal(String json, String key) {

if (StringUtils.isEmpty(json)) {

return null;

}

try {

JsonNode node = mapper.readTree(json);

if (null != node) {

return node.get(key).decimalValue();

} else {

return null;

}

} catch (IOException e) {

log.error("jackson get bigdecimal error, json: {}, key: {}", json, key, e);

return null;

}

}

public static boolean getBoolean(String json, String key) {

if (StringUtils.isEmpty(json)) {

return false;

}

try {

JsonNode node = mapper.readTree(json);

if (null != node) {

return node.get(key).booleanValue();

} else {

return false;

}

} catch (IOException e) {

log.error("jackson get boolean error, json: {}, key: {}", json, key, e);

return false;

}

}

public static byte[] getByte(String json, String key) {

if (StringUtils.isEmpty(json)) {

return null;

}

try {

JsonNode node = mapper.readTree(json);

if (null != node) {

return node.get(key).binaryValue();

} else {

return null;

}

} catch (IOException e) {

log.error("jackson get byte error, json: {}, key: {}", json, key, e);

return null;

}

}

public static <T> ArrayList<T> getList(String json, String key) {

if (StringUtils.isEmpty(json)) {

return null;

}

String string = getString(json, key);

return from(string, new TypeReference<ArrayList<T>>() {});

}

public static <T> String add(String json, String key, T value) {

try {

JsonNode node = mapper.readTree(json);

add(node, key, value);

return node.toString();

} catch (IOException e) {

log.error("jackson add error, json: {}, key: {}, value: {}", json, key, value, e);

return json;

}

}

private static <T> void add(JsonNode jsonNode, String key, T value) {

if (value instanceof String) {

((ObjectNode) jsonNode).put(key, (String) value);

} else if (value instanceof Short) {

((ObjectNode) jsonNode).put(key, (Short) value);

} else if (value instanceof Integer) {

((ObjectNode) jsonNode).put(key, (Integer) value);

} else if (value instanceof Long) {

((ObjectNode) jsonNode).put(key, (Long) value);

} else if (value instanceof Float) {

((ObjectNode) jsonNode).put(key, (Float) value);

} else if (value instanceof Double) {

((ObjectNode) jsonNode).put(key, (Double) value);

} else if (value instanceof BigDecimal) {

((ObjectNode) jsonNode).put(key, (BigDecimal) value);

} else if (value instanceof BigInteger) {

((ObjectNode) jsonNode).put(key, (BigInteger) value);

} else if (value instanceof Boolean) {

((ObjectNode) jsonNode).put(key, (Boolean) value);

} else if (value instanceof byte[]) {

((ObjectNode) jsonNode).put(key, (byte[]) value);

} else {

// Store other objects as nested JSON rather than as an escaped JSON string

((ObjectNode) jsonNode).set(key, mapper.valueToTree(value));

}

}

public static String remove(String json, String key) {

try {

JsonNode node = mapper.readTree(json);

((ObjectNode) node).remove(key);

return node.toString();

} catch (IOException e) {

log.error("jackson remove error, json: {}, key: {}", json, key, e);

return json;

}

}

public static <T> String update(String json, String key, T value) {

try {

JsonNode node = mapper.readTree(json);

((ObjectNode) node).remove(key);

add(node, key, value);

return node.toString();

} catch (IOException e) {

log.error("jackson update error, json: {}, key: {}, value: {}", json, key, value, e);

return json;

}

}

public static String format(String json) {

try {

JsonNode node = mapper.readTree(json);

return mapper.writerWithDefaultPrettyPrinter().writeValueAsString(node);

} catch (IOException e) {

log.error("jackson format json error, json: {}", json, e);

return json;

}

}

public static boolean isJson(String json) {

try {

mapper.readTree(json);

return true;

} catch (Exception e) {

log.error("jackson check json error, json: {}", json, e);

return false;

}

}

private static InputStream getResourceStream(String name) {

return JsonUtil.class.getClassLoader().getResourceAsStream(name);

}

private static InputStreamReader getResourceReader(InputStream inputStream) {

if (null == inputStream) {

return null;

}

return new InputStreamReader(inputStream, StandardCharsets.UTF_8);

}

}

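4.6 RocketMQ message entity class

TxAccountMessage wraps the data sent to the account service: the user id and the amount to deduct, plus the transaction id (xid).

```java
import java.math.BigDecimal;

import lombok.AllArgsConstructor;
import lombok.Data;
import lombok.NoArgsConstructor;

@Data
@NoArgsConstructor
@AllArgsConstructor
public class TxAccountMessage {
    private Long userId;      // user whose balance is deducted
    private BigDecimal money; // amount to deduct
    private String xid;       // transaction id, also the key of the tx log record
}
```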

4.7 Create the interface implementation class

```java
@Slf4j
@Primary
@Service
public class TxOrderService implements OrderService {

    @Autowired
    private RocketMQTemplate rocketMQTemplate;

    @Autowired
    private OrderMapper orderMapper;

    @Autowired
    private TxMapper txMapper;

    @Autowired
    private EasyIdGeneratorClient easyIdGeneratorClient;

    /*
     * Business method for creating an order.
     * Changed so that it only sends a transactional message to RocketMQ;
     * the order itself is saved by the transaction listener through doCreate().
     */
    @Override
    public void create(Order order) {
        // Generate the transaction id
        String xid = UUID.randomUUID().toString().replace("-", "");
        // Wrap the transaction data and convert it to a JSON string
        TxAccountMessage sMsg = new TxAccountMessage(order.getUserId(), order.getMoney(), xid);
        String json = JsonUtil.to(sMsg);
        // Wrap the JSON string in a Spring Message
        Message<String> msg = MessageBuilder.withPayload(json).build();
        // Send the transactional message: destination "order-topic:account" is topic order-topic, tag account.
        // The order is passed as the extra argument and handed to executeLocalTransaction()
        rocketMQTemplate.sendMessageInTransaction("order-topic:account", msg, order);
        log.info("Transactional message sent");
    }

    // Local transaction: save the order.
    // Called from the transaction listener
    @Transactional
    public void doCreate(Order order, String xid) {
        log.info("Executing local transaction, saving order");
        // Get an id from the global unique id generator
        Long orderId = easyIdGeneratorClient.nextId("order_business");
        order.setId(orderId);
        orderMapper.create(order);
        // Write the tx log in the same local transaction as the order, so that
        // checkLocalTransaction() finds status 0 exactly when the order was committed
        txMapper.insert(new TxInfo(xid, System.currentTimeMillis(), 0));
        log.info("Order saved! Transaction log saved");
    }
}
```

4.8 Message listener

Sending the transactional message triggers the transaction listener.

The transaction listener has two methods:

executeLocalTransaction(): executes the local transaction

checkLocalTransaction(): answers the RocketMQ broker's transaction check-back, which the broker issues when it has not received a definite COMMIT or ROLLBACK for a half message

Listener class:

```java
package cn.tedu.order.tx;

import cn.tedu.order.entity.Order;
import cn.tedu.order.mapper.TxMapper;
import cn.tedu.order.util.JsonUtil;
import lombok.extern.slf4j.Slf4j;
import org.apache.rocketmq.spring.annotation.RocketMQTransactionListener;
import org.apache.rocketmq.spring.core.RocketMQLocalTransactionListener;
import org.apache.rocketmq.spring.core.RocketMQLocalTransactionState;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.messaging.Message;
import org.springframework.stereotype.Component;

import java.nio.charset.StandardCharsets;

@Slf4j
@Component
@RocketMQTransactionListener
public class TxListener implements RocketMQLocalTransactionListener {

    @Autowired
    private TxOrderService orderService;

    @Autowired
    private TxMapper txMapper;

    @Override
    public RocketMQLocalTransactionState executeLocalTransaction(Message message, Object o) {
        log.info("Tx listener - start executing local transaction");
        // In the listener the message payload is a byte[]
        String json = new String((byte[]) message.getPayload(), StandardCharsets.UTF_8);
        String xid = JsonUtil.getString(json, "xid");
        log.info("Tx listener - payload: {}, xid: {}", json, xid);
        // The order passed as the extra argument of sendMessageInTransaction()
        Order order = (Order) o;
        try {
            // Saves the order and its tx log record (status 0) in one local transaction
            orderService.doCreate(order, xid);
            log.info("Local transaction succeeded, committing message");
            return RocketMQLocalTransactionState.COMMIT;
        } catch (Exception e) {
            log.error("Local transaction failed, rolling back message", e);
            // Record the failure (status 1) so that a later check-back answers ROLLBACK
            txMapper.insert(new TxInfo(xid, System.currentTimeMillis(), 1));
            return RocketMQLocalTransactionState.ROLLBACK;
        }
    }

    @Override
    public RocketMQLocalTransactionState checkLocalTransaction(Message message) {
        log.info("Tx listener - checking transaction state");
        // In the listener the message payload is a byte[]
        String json = new String((byte[]) message.getPayload(), StandardCharsets.UTF_8);
        String xid = JsonUtil.getString(json, "xid");
        TxInfo txInfo = txMapper.selectById(xid);
        if (txInfo == null) {
            // No record yet: the local transaction may still be running, so let the broker check again later
            log.info("Tx listener - check - transaction not found: {}", xid);
            return RocketMQLocalTransactionState.UNKNOWN;
        }
        log.info("Tx listener - check - status: {}", txInfo.getStatus());
        switch (txInfo.getStatus()) {
            case 0: return RocketMQLocalTransactionState.COMMIT;
            case 1: return RocketMQLocalTransactionState.ROLLBACK;
            default: return RocketMQLocalTransactionState.UNKNOWN;
        }
    }
}
```
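To see why the listener needs both methods, here is a minimal, self-contained simulation of the half-message handshake in plain Java. It is a sketch under simplifying assumptions, not RocketMQ's implementation: the class `HalfMessageDemo`, the `TxState` enum (standing in for `RocketMQLocalTransactionState`), the in-memory `txLog` map (standing in for the tx log table) and the `maxChecks` limit are all hypothetical, and a real broker checks back asynchronously after a timeout rather than in a tight loop.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

public class HalfMessageDemo {

    // Hypothetical stand-in for RocketMQLocalTransactionState
    public enum TxState { COMMIT, ROLLBACK, UNKNOWN }

    // Hypothetical stand-in for the tx log table: xid -> status (0 = committed, 1 = rolled back)
    public static final Map<String, Integer> txLog = new HashMap<>();

    // Same mapping as checkLocalTransaction(): no record or unexpected status -> UNKNOWN
    public static TxState check(String xid) {
        Integer status = txLog.get(xid);
        if (status == null) {
            return TxState.UNKNOWN;
        }
        switch (status) {
            case 0: return TxState.COMMIT;
            case 1: return TxState.ROLLBACK;
            default: return TxState.UNKNOWN;
        }
    }

    /**
     * Simulated broker. The half message stays invisible to consumers until the
     * producer answers COMMIT; an UNKNOWN answer triggers up to maxChecks check-backs.
     * Returns true if the message was delivered to consumers.
     */
    public static boolean sendInTransaction(String xid, Function<String, TxState> localTx,
                                            int maxChecks, List<String> delivered) {
        TxState state = localTx.apply(xid);      // plays the role of executeLocalTransaction()
        for (int i = 0; i < maxChecks && state == TxState.UNKNOWN; i++) {
            state = check(xid);                  // plays the role of checkLocalTransaction()
        }
        if (state == TxState.COMMIT) {
            delivered.add(xid);                  // message becomes visible to consumers
            return true;
        }
        return false;                            // ROLLBACK, or still UNKNOWN: discarded in this sketch
    }

    public static void main(String[] args) {
        List<String> delivered = new ArrayList<>();
        // Local transaction succeeds and records status 0
        sendInTransaction("tx-ok", xid -> { txLog.put(xid, 0); return TxState.COMMIT; }, 3, delivered);
        // Local transaction fails and records status 1
        sendInTransaction("tx-fail", xid -> { txLog.put(xid, 1); return TxState.ROLLBACK; }, 3, delivered);
        // Local transaction commits, but the answer is lost (UNKNOWN); the check-back recovers it
        sendInTransaction("tx-lost-reply", xid -> { txLog.put(xid, 0); return TxState.UNKNOWN; }, 3, delivered);
        System.out.println(delivered); // [tx-ok, tx-lost-reply]
    }
}
```

The third case is the one `checkLocalTransaction()` exists for: the local transaction committed, but the producer's answer never reached the broker, so the broker asks again and the tx log record answers COMMIT. This only works if the tx log record is written in the same local transaction as the business data.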

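4.9 Consumer

The message entity class is omitted here (it has the same fields as TxAccountMessage in 4.6).

TxConsumer listens for the messages and, on receipt, deducts the amount from the account: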

```java
package cn.tedu.account.tx;

import cn.tedu.account.service.AccountService;
import cn.tedu.account.util.JsonUtil;
import com.fasterxml.jackson.core.type.TypeReference;
import lombok.extern.slf4j.Slf4j;
import org.apache.rocketmq.spring.annotation.RocketMQMessageListener;
import org.apache.rocketmq.spring.core.RocketMQListener;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Component;

@Slf4j
@Component
// Subscribes to topic order-topic and receives only messages tagged "account"
@RocketMQMessageListener(consumerGroup = "account-consumer-group", topic = "order-topic", selectorExpression = "account")
public class TxConsumer implements RocketMQListener<String> {

    @Autowired
    private AccountService accountService;

    @Override
    public void onMessage(String msg) {
        TxAccountMessage txAccountMessage = JsonUtil.from(msg, new TypeReference<TxAccountMessage>() {});
        log.info("Received message: {}", txAccountMessage);
        // If this throws, the message is consumed again later
        accountService.decrease(txAccountMessage.getUserId(), txAccountMessage.getMoney());
    }
}
```
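RocketMQ delivers messages at least once, so `onMessage()` can see the same message more than once (for example after a failed consumption is retried). Unless `decrease()` guards against that, the balance would be deducted twice. A common remedy is to make consumption idempotent by recording the xid that every `TxAccountMessage` carries. The sketch below shows the idea with in-memory collections; `IdempotentDecreaseDemo` and its methods are hypothetical, and a real account service would keep processed xids in a table updated in the same database transaction as the balance.

```java
import java.math.BigDecimal;
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

public class IdempotentDecreaseDemo {

    // Hypothetical stand-ins for the account table and a "processed xid" table.
    // In a real service both would be updated in one database transaction.
    private final Map<Long, BigDecimal> balances = new HashMap<>();
    private final Set<String> processedXids = new HashSet<>();

    public void deposit(long userId, BigDecimal amount) {
        balances.merge(userId, amount, BigDecimal::add);
    }

    public BigDecimal balanceOf(long userId) {
        return balances.getOrDefault(userId, BigDecimal.ZERO);
    }

    /** Deducts money at most once per xid; returns false for a redelivered message. */
    public boolean onMessage(String xid, long userId, BigDecimal money) {
        if (!processedXids.add(xid)) {
            return false; // xid already handled: skip the duplicate
        }
        balances.merge(userId, money.negate(), BigDecimal::add);
        return true;
    }

    public static void main(String[] args) {
        IdempotentDecreaseDemo account = new IdempotentDecreaseDemo();
        account.deposit(1L, new BigDecimal("100"));
        account.onMessage("xid-1", 1L, new BigDecimal("30"));
        account.onMessage("xid-1", 1L, new BigDecimal("30")); // redelivery, ignored
        System.out.println(account.balanceOf(1L)); // 70
    }
}
```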

This essentially completes the RocketMQ distributed transaction example; the notes end here.