【k8s Study 2】Installing Kubernetes from Binaries: Deploying the Kubernetes Master

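Previous article: 【k8s Study 2】Installing Kubernetes from Binaries: etcd Cluster Deployment (温殿飞's blog, CSDN)

If you have already deployed etcd as described there, continue with this article.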

Goal: deploy a secure, highly available Kubernetes master cluster.

(1) Download Kubernetes

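Search for kubernetes on GitHub and open the kubernetes/kubernetes project.

Go to the releases page:

https://github.com/kubernetes/kubernetes/releases?page=1

Click CHANGELOG for the release you want. It opens the page below, where 1.19 is the minor version; you can also change it directly in the URL.

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.19.md

Scroll down to your version and click its "Server Binaries" link. This should jump to that version's download section, but it did not for me, so I simply scrolled down to the version.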

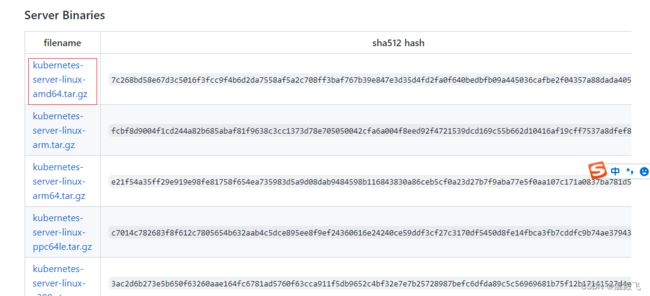

Scroll further down to Server Binaries to see the download links:

```shell
wget https://dl.k8s.io/v1.19.0/kubernetes-server-linux-amd64.tar.gz
# Optional: the server tarball already contains kubectl
wget https://dl.k8s.io/v1.19.0/kubernetes-client-linux-amd64.tar.gz
wget https://dl.k8s.io/v1.19.0/kubernetes-node-linux-amd64.tar.gz
```

Extract kubernetes-server-linux-amd64.tar.gz:

```shell
tar -zxvf kubernetes-server-linux-amd64.tar.gz
cd kubernetes/server/bin/
ls
```

```
apiextensions-apiserver kube-apiserver kube-controller-manager kubectl kube-proxy.docker_tag kube-scheduler.docker_tag
kubeadm kube-apiserver.docker_tag kube-controller-manager.docker_tag kubelet kube-proxy.tar kube-scheduler.tar
kube-aggregator kube-apiserver.tar kube-controller-manager.tar kube-proxy kube-scheduler mounter
```

Move the executables to /usr/bin/:

```shell
mv apiextensions-apiserver kubeadm kube-aggregator kube-apiserver kube-controller-manager kubectl kubelet kube-proxy kube-scheduler /usr/bin/
```
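As a rough sketch, not the author's procedure, the copy step can be wrapped in a function that installs only the executables and leaves the Docker image artifacts (`*.tar`, `*.docker_tag`) behind. The function name `install_k8s_server_bins` and its arguments are hypothetical. It uses `install -m 0755` instead of `mv` so the file mode is set explicitly.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy the server executables from the extracted
# kubernetes/server/bin directory into DEST (default /usr/bin),
# skipping the *.tar and *.docker_tag image artifacts.
install_k8s_server_bins() {
  local src="$1" dest="${2:-/usr/bin}" b
  local bins=(apiextensions-apiserver kubeadm kube-aggregator kube-apiserver
              kube-controller-manager kubectl kubelet kube-proxy kube-scheduler)
  for b in "${bins[@]}"; do
    if [ ! -f "$src/$b" ]; then
      echo "missing: $src/$b" >&2
      return 1
    fi
    # install sets the permission bits explicitly, unlike mv
    install -m 0755 "$src/$b" "$dest/$b" || return 1
  done
}
```

Usage, as root: `install_k8s_server_bins kubernetes/server/bin /usr/bin`.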

| File | Description |
| --- | --- |
| kube-apiserver | kube-apiserver main program |
| kube-apiserver.docker_tag | Tag of the kube-apiserver Docker image |
| kube-apiserver.tar | kube-apiserver Docker image file |
| kube-controller-manager | kube-controller-manager main program |
| kube-controller-manager.docker_tag | Tag of the kube-controller-manager Docker image |
| kube-controller-manager.tar | kube-controller-manager Docker image file |
| kube-scheduler | kube-scheduler main program |
| kube-scheduler.docker_tag | Tag of the kube-scheduler Docker image |
| kube-scheduler.tar | kube-scheduler Docker image file |
| kubelet | kubelet main program |
| kube-proxy | kube-proxy main program |
| kube-proxy.docker_tag | Tag of the kube-proxy Docker image |
| kube-proxy.tar | kube-proxy Docker image file |
| kubectl | Command-line client tool |
| kubeadm | Command-line tool for installing Kubernetes clusters |
| apiextensions-apiserver | Extension API server that implements custom resource objects |
| kube-aggregator | API aggregation server |

Next, create a systemd unit file for each service under /usr/lib/systemd/system/. That completes the installation of the services.

(2) Deploy the kube-apiserver service

(2.1) Create the CA-signed certificate for kube-apiserver

```shell
mkdir k8s_ssl
cd k8s_ssl/
vim master_ssl.cnf
```

Contents of master_ssl.cnf:

```ini
[req]
req_extensions = v3_req
distinguished_name = req_distinguished_name

[req_distinguished_name]

[v3_req]
basicConstraints = CA:FALSE
keyUsage = nonRepudiation,digitalSignature,keyEncipherment
subjectAltName = @alt_names

[alt_names]
# The first four are the virtual service names of the kubernetes Service
DNS.1 = kubernetes
DNS.2 = kubernetes.default
DNS.3 = kubernetes.default.svc
DNS.4 = kubernetes.default.svc.cluster.local
# Master host names
DNS.5 = master01
DNS.6 = master02
# ClusterIP of the kubernetes master Service (first address of --service-cluster-ip-range)
IP.1 = 169.169.0.1
# Master host IPs
IP.2 = 192.168.52.21
IP.3 = 192.168.52.22
# VIP managed by keepalived
IP.4 = 192.168.52.100
```

Create the certificate, signing it with the CA key pair in /etc/kubernetes/pki/:

```shell
openssl genrsa -out apiserver.key 2048
openssl req -new -key apiserver.key -config master_ssl.cnf -subj "/CN=192.168.52.21" -out apiserver.csr
openssl x509 -req -in apiserver.csr -CA /etc/kubernetes/pki/ca.crt -CAkey /etc/kubernetes/pki/ca.key -CAcreateserial -days 36500 -extensions v3_req -extfile master_ssl.cnf -out apiserver.crt
mv apiserver.crt apiserver.key /etc/kubernetes/pki/
```
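To check the SAN list before copying the certificate into place, you can rehearse the same commands against a throwaway CA in a temporary directory. This is a sketch under stated assumptions. The test CA (`test-ca`, 1-day validity, built from an extra `v3_ca` section) stands in for /etc/kubernetes/pki/ca.crt and ca.key, and the comments of master_ssl.cnf are omitted.

```shell
#!/usr/bin/env bash
set -e
# Scratch directory: nothing under /etc/kubernetes is touched
work=$(mktemp -d)
cd "$work"

# Same extensions as master_ssl.cnf, plus a v3_ca section that exists
# only to build the throwaway test CA (assumption, not in the article)
cat > master_ssl.cnf <<'EOF'
[req]
req_extensions = v3_req
distinguished_name = req_distinguished_name

[req_distinguished_name]

[v3_req]
basicConstraints = CA:FALSE
keyUsage = nonRepudiation,digitalSignature,keyEncipherment
subjectAltName = @alt_names

[alt_names]
DNS.1 = kubernetes
DNS.2 = kubernetes.default
DNS.3 = kubernetes.default.svc
DNS.4 = kubernetes.default.svc.cluster.local
DNS.5 = master01
DNS.6 = master02
IP.1 = 169.169.0.1
IP.2 = 192.168.52.21
IP.3 = 192.168.52.22
IP.4 = 192.168.52.100

[v3_ca]
basicConstraints = critical,CA:TRUE
keyUsage = critical,keyCertSign,cRLSign
EOF

# Throwaway CA standing in for /etc/kubernetes/pki/ca.crt and ca.key
openssl genrsa -out ca.key 2048 2>/dev/null
openssl req -x509 -new -key ca.key -subj "/CN=test-ca" -days 1 \
  -config master_ssl.cnf -extensions v3_ca -out ca.crt

# The article's three commands, pointed at the test CA
openssl genrsa -out apiserver.key 2048 2>/dev/null
openssl req -new -key apiserver.key -config master_ssl.cnf -subj "/CN=192.168.52.21" -out apiserver.csr
openssl x509 -req -in apiserver.csr -CA ca.crt -CAkey ca.key -CAcreateserial -days 1 \
  -extensions v3_req -extfile master_ssl.cnf -out apiserver.crt 2>/dev/null

# The certificate must chain to the CA and list every DNS name/IP clients will use
openssl verify -CAfile ca.crt apiserver.crt
openssl x509 -in apiserver.crt -noout -text | grep -A1 "Subject Alternative Name"
```

If a name or IP that clients use (for example the VIP) is missing from the printed SAN line, TLS verification against kube-apiserver will fail for that address.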

(2.2) Create a systemd service for kube-apiserver

Unit file /usr/lib/systemd/system/kube-apiserver.service:

```ini
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/etc/kubernetes/apiserver
ExecStart=/usr/bin/kube-apiserver $KUBE_API_ARGS
Restart=always

[Install]
WantedBy=multi-user.target
```

The configuration file /etc/kubernetes/apiserver sets all kube-apiserver startup parameters through the environment variable KUBE_API_ARGS. Flags may differ in newer Kubernetes versions; this example targets v1.19.

```
KUBE_API_ARGS="--secure-port=6443 \
--tls-cert-file=/etc/kubernetes/pki/apiserver.crt \
--tls-private-key-file=/etc/kubernetes/pki/apiserver.key \
--client-ca-file=/etc/kubernetes/pki/ca.crt \
--apiserver-count=2 \
--endpoint-reconciler-type=master-count \
--etcd-servers=https://192.168.52.21:2379,https://192.168.52.22:2379 \
--etcd-cafile=/etc/kubernetes/pki/ca.crt \
--etcd-certfile=/etc/etcd/pki/etcd_client.crt \
--etcd-keyfile=/etc/etcd/pki/etcd_client.key \
--service-cluster-ip-range=169.169.0.0/16 \
--service-node-port-range=30000-32767 \
--allow-privileged=true \
--v=0"
```

With --endpoint-reconciler-type=master-count, --apiserver-count must equal the number of running kube-apiserver instances. That is 2 here because both masters run one.
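A typo in a certificate path only shows up when the service fails to start, so a small pre-flight check can help. This sketch assumes the `KUBE_API_ARGS="..."` format shown above. Bash can source that format because a backslash-newline inside double quotes is a line continuation, and systemd joins backslash-terminated lines in EnvironmentFile the same way. `check_flag_files` is a hypothetical helper, and it only inspects flags whose names end in `file`.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check: report every --*file=PATH flag in an
# EnvironmentFile variable whose PATH does not exist; returns 1 if any is missing.
check_flag_files() {
  local envfile="$1" var="$2" value arg missing=0
  local -a args
  # Source the file in a subshell and print only the requested variable
  value=$(. "$envfile" && printf '%s' "${!var}")
  # Split on whitespace (including any newlines), like systemd does for $VAR
  read -r -d '' -a args <<<"$value"
  for arg in "${args[@]}"; do
    case "$arg" in
      --*file=*)
        if [ ! -f "${arg#*=}" ]; then
          echo "missing: $arg" >&2
          missing=1
        fi
        ;;
    esac
  done
  return "$missing"
}
```

Usage: `check_flag_files /etc/kubernetes/apiserver KUBE_API_ARGS && systemctl restart kube-apiserver`.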

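Start the service (run `systemctl daemon-reload` after creating or editing a unit file):

```shell
systemctl daemon-reload
systemctl restart kube-apiserver && systemctl enable kube-apiserver
systemctl status kube-apiserver
```

It started without errors. Configure the other master the same way, including copying apiserver.crt and apiserver.key into its /etc/kubernetes/pki/. The certificate already lists both masters.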

(2.3) Create the client certificate

kube-controller-manager, kube-scheduler, kubelet and kube-proxy are all clients of kube-apiserver. Create a client certificate with openssl, place it in /etc/kubernetes/pki/, and copy it to the other servers in the cluster.

```shell
openssl genrsa -out client.key 2048
openssl req -new -key client.key -subj "/CN=admin" -out client.csr
openssl x509 -req -in client.csr -CA /etc/kubernetes/pki/ca.crt -CAkey /etc/kubernetes/pki/ca.key -CAcreateserial -out client.crt -days 36500
cp client.key client.crt /etc/kubernetes/pki/
```
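The article uses one `CN=admin` certificate for every component. If more client identities are needed later, the three commands can be wrapped in a function. This is a minimal sketch: `make_client_cert` is a hypothetical name, the CA paths default to the ones used above, and the final `openssl verify` is an added check rather than part of the original steps.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create client.key/.csr/.crt for identity CN in OUTDIR,
# signed by the given CA (defaults are this article's paths).
make_client_cert() {
  local cn="$1" out="$2"
  local ca_crt="${3:-/etc/kubernetes/pki/ca.crt}" ca_key="${4:-/etc/kubernetes/pki/ca.key}"
  mkdir -p "$out" || return 1
  openssl genrsa -out "$out/client.key" 2048 2>/dev/null || return 1
  openssl req -new -key "$out/client.key" -subj "/CN=${cn}" -out "$out/client.csr" || return 1
  openssl x509 -req -in "$out/client.csr" -CA "$ca_crt" -CAkey "$ca_key" \
    -CAcreateserial -days 36500 -out "$out/client.crt" 2>/dev/null || return 1
  # Added check: the certificate must chain to the CA before it is copied out
  openssl verify -CAfile "$ca_crt" "$out/client.crt" >/dev/null
}
```

Usage: `make_client_cert admin /etc/kubernetes/pki` reproduces the certificate created above.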

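(2.4) Create the kubeconfig file that clients use to connect to kube-apiserver

Deploy it on both masters. kube-controller-manager, kube-scheduler, kubelet, kube-proxy and kubectl all use this one file to connect to kube-apiserver. Save it as /etc/kubernetes/kubeconfig, the path passed to --kubeconfig below. The server address is the VIP plus the HAProxy port (192.168.52.100:9443), not a single apiserver.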

```yaml
apiVersion: v1
kind: Config
clusters:
- name: default
  cluster:
    server: https://192.168.52.100:9443
    certificate-authority: /etc/kubernetes/pki/ca.crt
users:
- name: admin
  user:
    client-certificate: /etc/kubernetes/pki/client.crt
    client-key: /etc/kubernetes/pki/client.key
contexts:
- context:
    cluster: default
    user: admin
  name: default
current-context: default
```
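Writing this YAML by hand is fragile, because a lost indent breaks the file. The same kubeconfig can be produced with the `kubectl config` subcommands instead. This is a sketch that assumes the kubectl binary from step (1) is on PATH. `make_kubeconfig` is a hypothetical wrapper, and certificate paths are stored as file references, not embedded.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: write the kubeconfig shown above into OUT using
# "kubectl config" subcommands (paths are stored, certificates are not embedded).
make_kubeconfig() {
  local out="$1" server="${2:-https://192.168.52.100:9443}" pki="${3:-/etc/kubernetes/pki}"
  kubectl config set-cluster default --server="$server" \
      --certificate-authority="$pki/ca.crt" --kubeconfig="$out" &&
    kubectl config set-credentials admin --client-certificate="$pki/client.crt" \
      --client-key="$pki/client.key" --kubeconfig="$out" &&
    kubectl config set-context default --cluster=default --user=admin --kubeconfig="$out" &&
    kubectl config use-context default --kubeconfig="$out"
}
```

Usage: `make_kubeconfig /etc/kubernetes/kubeconfig`.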

(2.5) Deploy the kube-controller-manager service

Deploy it on both masters. Create the systemd unit file /usr/lib/systemd/system/kube-controller-manager.service:

```ini
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/etc/kubernetes/controller-manager
ExecStart=/usr/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_ARGS
Restart=always

[Install]
WantedBy=multi-user.target
```

The configuration file /etc/kubernetes/controller-manager sets all kube-controller-manager startup parameters through the environment variable KUBE_CONTROLLER_MANAGER_ARGS. An example that includes the CA security settings:

```
KUBE_CONTROLLER_MANAGER_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig \
--leader-elect=true \
--service-cluster-ip-range=169.169.0.0/16 \
--service-account-private-key-file=/etc/kubernetes/pki/apiserver.key \
--root-ca-file=/etc/kubernetes/pki/ca.crt \
--v=0"
```

kube-controller-manager runs on both masters, so leader election must be enabled (--leader-elect=true, which is also the default). Only one instance is then active at a time.

When systemd starts the service, it effectively runs this command:

```shell
/usr/bin/kube-controller-manager --kubeconfig=/etc/kubernetes/kubeconfig --leader-elect=true --service-cluster-ip-range=169.169.0.0/16 --service-account-private-key-file=/etc/kubernetes/pki/apiserver.key --root-ca-file=/etc/kubernetes/pki/ca.crt --v=0
```

Then start the service:

```shell
systemctl restart kube-controller-manager
systemctl enable kube-controller-manager
```
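The unit uses the unbraced form `$KUBE_CONTROLLER_MANAGER_ARGS`. systemd splits that form at whitespace into separate arguments, whereas the braced `${...}` form would pass the whole string as a single argument. To confirm what the running process actually received, you can read its argument vector from /proc. This is a minimal sketch; `proc_cmdline` is a hypothetical helper and assumes Linux procfs.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the argv of process PID on one line.
# /proc/<pid>/cmdline separates arguments with NUL bytes; turn them into spaces.
proc_cmdline() {
  tr '\0' ' ' < "/proc/$1/cmdline"
  echo
}
```

Usage: `proc_cmdline "$(systemctl show -p MainPID kube-controller-manager | cut -d= -f2)"` should print the same command line as the expanded command shown above.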

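(2.6) Deploy the kube-scheduler service

Deploy it on both masters. Create the systemd unit file /usr/lib/systemd/system/kube-scheduler.service:

```ini
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/etc/kubernetes/scheduler
ExecStart=/usr/bin/kube-scheduler $KUBE_SCHEDULER_ARGS
Restart=always

[Install]
WantedBy=multi-user.target
```

The configuration file /etc/kubernetes/scheduler sets all of the service's parameters through the environment variable KUBE_SCHEDULER_ARGS:

```
KUBE_SCHEDULER_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig \
--leader-elect=true \
--v=0"
```

As with kube-controller-manager, leader election keeps only one of the two scheduler instances active.

Start the service:

```shell
systemctl restart kube-scheduler
systemctl enable kube-scheduler
```

(2.7) Deploy a highly available load balancer with HAProxy and keepalived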

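Both run as Docker containers. To install Docker with yum, see my earlier article:

Docker Study Notes 1: Installing Docker on a CentOS Server (温殿飞's blog, CSDN)

Docker can also be installed from the static binaries:

```shell
wget https://download.docker.com/linux/static/stable/x86_64/docker-18.03.1-ce.tgz
tar -xf docker-18.03.1-ce.tgz
cp docker/* /usr/bin/
```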

Create the Docker systemd unit file /usr/lib/systemd/system/docker.service:

```ini
[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
ExecStart=/usr/bin/dockerd
ExecReload=/bin/kill -s HUP $MAINPID
LimitNOFILE=infinity
LimitNPROC=infinity
TimeoutStartSec=0
Delegate=yes
KillMode=process
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target
```

```shell
systemctl daemon-reload
systemctl start docker
systemctl enable docker
```

Next, run HAProxy with Docker. Create the HAProxy configuration file /etc/kubernetes/haproxy.cfg:

```
global
    log 127.0.0.1 local2
    chroot /var/lib/haproxy
    pidfile /var/run/haproxy.pid
    maxconn 4096
    user haproxy
    group haproxy
    daemon
    stats socket /var/lib/haproxy/stats

defaults
    mode http
    log global
    option httplog
    option dontlognull
    option http-server-close
    option forwardfor except 127.0.0.0/8
    option redispatch
    retries 3
    timeout http-request 10s
    timeout queue 1m
    timeout connect 10s
    timeout client 1m
    timeout server 1m
    timeout http-keep-alive 10s
    timeout check 10s
    maxconn 3000

frontend kube-apiserver
    mode tcp
    bind *:9443
    option tcplog
    default_backend kube-apiserver

listen stats
    mode http
    bind *:8888
    stats auth admin:password
    stats refresh 5s
    stats realm HAProxy\ Statistics
    stats uri /stats
    log 127.0.0.1 local3 err

backend kube-apiserver
    mode tcp
    balance roundrobin
    server master01 192.168.52.21:6443 check
    server master02 192.168.52.22:6443 check
```

然后执行命令启动容器,并将文件挂载到容器

docker run -d --name k8s-haproxy --net=host --restart=always -v ${PWD}/haproxy.cfg:/usr/local/etc/haproxy/haproxy.cfg:ro haproxytech/haproxy-debian

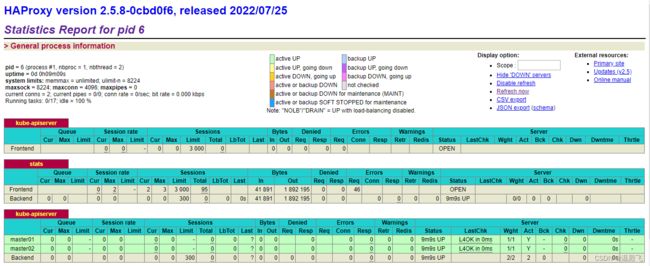

然后浏览器打开,输入用户admin 密码password

http://192.168.52.21:8888/stats

另外一台也部署上hapeoxy服务。
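The stats page shows whether HAProxy's health checks reach the two kube-apiserver backends. You can probe the same reachability from a shell with bash's built-in /dev/tcp redirection. This is a sketch: `port_open` is a hypothetical helper, and it needs bash plus the coreutils `timeout` command.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a TCP connection to HOST:PORT opens
# within SECS seconds (default 2), using bash's /dev/tcp pseudo-device.
port_open() {
  local host="$1" port="$2" secs="${3:-2}"
  timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null
}
```

Usage: `for hp in 192.168.52.21:6443 192.168.52.22:6443 192.168.52.21:9443; do port_open "${hp%:*}" "${hp#*:}" && echo "$hp up" || echo "$hp down"; done` checks both apiservers and the local HAProxy frontend.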

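(2.8) Use keepalived to make the VIP highly available. For this lab everything runs on these two servers; in production the load balancer can run on separate servers.

Create the keepalived configuration file keepalived.conf. Put it in the directory you will run the docker run command below from, since that command mounts ${PWD}/keepalived.conf:

```
! Configuration File for keepalived
global_defs {
    router_id LVS_1
}

vrrp_script checkhaproxy {
    script "/usr/bin/check-haproxy.sh"
    interval 2
    weight -30
}

vrrp_instance VI_1 {
    state MASTER            # MASTER on exactly one node; BACKUP on the others (there can be several)
    interface ens33         # your server's NIC name
    virtual_router_id 51
    priority 100
    advert_int 1
    virtual_ipaddress {
        192.168.52.100/24 dev ens33   # VIP
    }
    authentication {
        auth_type PASS
        auth_pass password
    }
    track_script {
        checkhaproxy
    }
}
```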
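The other master needs the same file with `state BACKUP` and a lower priority. One way to derive it is with sed. This is a sketch; the values `router_id LVS_2` and `priority 90` are assumptions, not from the article. They are chosen so that 90 > 100 - 30: if HAProxy dies on master01, `weight -30` drops its priority to 70, so master02 wins the VRRP election and takes over the VIP. `make_backup_conf` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: derive a BACKUP keepalived.conf from the MASTER copy.
# Defaults (assumptions): priority 90, router_id LVS_2.
make_backup_conf() {
  local src="$1" dst="$2" prio="${3:-90}" rid="${4:-LVS_2}"
  sed -E \
    -e "s/^([[:space:]]*router_id)[[:space:]]+.*/\1 ${rid}/" \
    -e "s/^([[:space:]]*state)[[:space:]]+MASTER.*/\1 BACKUP/" \
    -e "s/^([[:space:]]*priority)[[:space:]]+[0-9]+.*/\1 ${prio}/" \
    "$src" > "$dst"
}
```

Usage: `make_backup_conf keepalived.conf keepalived-master02.conf`, then copy the result to master02 as its keepalived.conf. `virtual_router_id` is left unchanged because both nodes must share it.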

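Create the HAProxy port-check script check-haproxy.sh. keepalived uses it to decide whether to move the VIP. The docker run command below mounts ${PWD}/check-haproxy.sh at /usr/bin/check-haproxy.sh inside the container, so create the script in the directory you run that command from and make it executable (`chmod +x check-haproxy.sh`).

```shell
#!/bin/bash
# Program:
#   check health: exit 0 if something is listening on port 9443 (HAProxy), else 1
# History:
#   2022/01/14 wendianfei version:0.0.1
PATH=/bin:/sbin:/usr/bin:/usr/sbin:/usr/local/bin:/usr/local/sbin:~/bin
export PATH

# Count only listening TCP sockets on port 9443
count=$(netstat -lnt | grep -c ':9443 ')
if [ "${count}" -gt 0 ]; then
    exit 0
else
    exit 1
fi
```

Create the keepalived service with Docker.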

```shell
docker run -d --name k8s-keepalived --restart=always --net=host --cap-add=NET_ADMIN --cap-add=NET_BROADCAST --cap-add=NET_RAW -v ${PWD}/keepalived.conf:/container/service/keepalived/assets/keepalived.conf -v ${PWD}/check-haproxy.sh:/usr/bin/check-haproxy.sh osixia/keepalived:2.0.20 --copy-service
```

Run `docker ps` to confirm the container is running.

Run `ip addr show ens33` to confirm the VIP 192.168.52.100 is bound to the NIC on the MASTER node.
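The check script depends on netstat being available inside the keepalived container. If it is not, the track script fails on every node and lowers every priority by 30, so the VIP no longer follows HAProxy. As a hedged alternative, not the article's method, the check can read the kernel's socket tables in /proc/net/tcp and /proc/net/tcp6 directly; with `--net=host` these show the host's sockets. `listening_on` is a hypothetical function name.

```shell
#!/bin/bash
# Alternative check sketch: succeed if a TCP socket is in LISTEN state on PORT,
# reading /proc/net/tcp and /proc/net/tcp6 instead of calling netstat.
# In those tables column 2 is local_address as HEXIP:HEXPORT (uppercase hex)
# and column 4 is the state, where 0A means LISTEN.
listening_on() {
  local port_hex
  port_hex=$(printf '%04X' "$1")
  cat /proc/net/tcp /proc/net/tcp6 2>/dev/null |
    awk -v p=":${port_hex}\$" '$2 ~ p && $4 == "0A" { found = 1 } END { exit (found ? 0 : 1) }'
}
# Used as check-haproxy.sh, the script would end with:  listening_on 9443
```

The exit status of the script's last command becomes the script's exit status, which is what keepalived's `vrrp_script` evaluates.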

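If both URLs below respond in a browser, the keepalived + HAProxy load balancer is working, and the master deployment is complete. Port 9443 passes TLS straight through to kube-apiserver, so a plain-HTTP request to it typically gets the reply "Client sent an HTTP request to an HTTPS server." instead of a web page. That reply still shows the request reached an apiserver.

http://192.168.52.100:9443/

http://192.168.52.100:8888/stats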
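A more direct end-to-end test than the browser is to request kube-apiserver's /healthz endpoint through the VIP, using the client certificate from step (2.3). A healthy apiserver answers with the body `ok`. This is only a sketch: `apiserver_healthz` and the `PKI_DIR` override are hypothetical names.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if GET URL/healthz returns the body "ok",
# authenticating with the client certificate created in step (2.3).
apiserver_healthz() {
  local url="${1:-https://192.168.52.100:9443}"
  local pki="${PKI_DIR:-/etc/kubernetes/pki}"
  local body
  body=$(curl -sS --max-time 5 --cacert "$pki/ca.crt" \
    --cert "$pki/client.crt" --key "$pki/client.key" "$url/healthz") || return 1
  [ "$body" = "ok" ]
}
```

Usage: `apiserver_healthz && echo "apiserver reachable through the VIP"`. Running it again with `https://192.168.52.21:6443` and `https://192.168.52.22:6443` checks each master directly, bypassing HAProxy.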