Quickly deploying a single-node Ceph cluster and the RGW service on Ubuntu

Tags: ubuntu, ceph cluster, single node, rgw

1. Background:

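I recently started learning about Ceph clusters. As a beginner, a single-node cluster is enough for learning purposes; below is my record of the deployment.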

2. Deployment:

Ubuntu version:

root@slot-54141:/home/ceph-cluster# cat /proc/version

Linux version 5.15.0-69-generic (buildd@lcy02-amd64-071) (gcc (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0, GNU ld (GNU Binutils for Ubuntu) 2.34) #76~20.04.1-Ubuntu SMP Mon Mar 20 15:54:19 UTC 2023

Note: all of the following steps are performed as the root user.

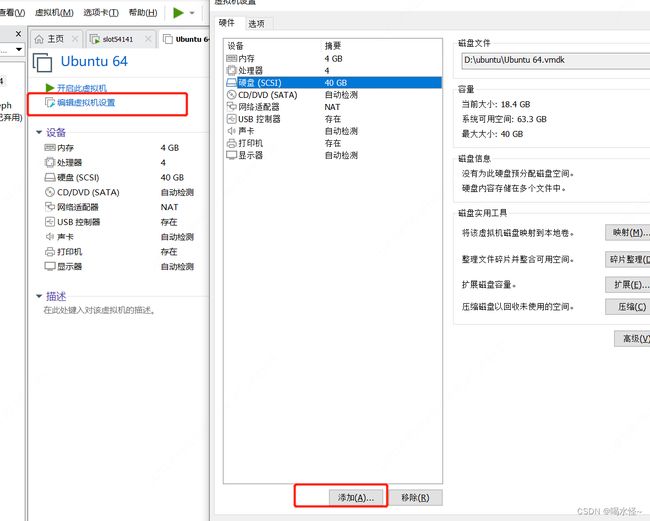

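First check the disks already on the VM. Only sda is present, so after a new disk is added its block device name will be easy to identify: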

heshuiguai@ubuntu:~/Desktop$ ls /dev/sd*

sda sda1 sda2 sda3 sda5

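Add a new disk to the VM, partition it, create a VG and LVs, and mount the LVs on directories; the LVs will later be used to create OSDs. The steps are as follows: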

Reboot the VM and check the device name of the new disk:

root@slot-54141:/home/chenyue# ls /dev/sd*

sda sda1 sda2 sda5 sdb sdb1 //sdb is the newly added disk


fdisk /dev/sdb //create a partition on the newly added 20G disk

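Enter: n (create a new partition)

Enter: p (create a primary partition; p = primary, e = extended)

Partition number (1-4, default 1): press Enter

First sector (accept the default): press Enter

Last sector (accept the default): press Enter

Write the partition table to disk: w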
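The same answers can be piped into fdisk instead of typed. A minimal Python sketch, assuming the new disk is /dev/sdb, that it has no partitions yet, and that your fdisk asks the questions in the order listed above; `fdisk_answers` and `partition_disk` are hypothetical helper names. By default it is a dry run that only prints the keystrokes.

```python
import subprocess


def fdisk_answers(part_type: str = "p") -> str:
    """Keystrokes for one partition spanning the whole disk."""
    # n = new partition, then the partition type (p = primary, e = extended);
    # the three empty answers accept the default partition number,
    # first sector and last sector; w writes the table and exits.
    return "\n".join(["n", part_type, "", "", "", "w"]) + "\n"


def partition_disk(device: str, dry_run: bool = True) -> None:
    answers = fdisk_answers()
    if dry_run:
        # Only show what would be sent to fdisk on stdin.
        print(f"fdisk {device} <- {answers!r}")
        return
    # WARNING: this rewrites the partition table of `device`.
    subprocess.run(["fdisk", device], input=answers, text=True, check=True)
```

`partition_disk("/dev/sdb")` only prints; `partition_disk("/dev/sdb", dry_run=False)` actually writes the partition table, so double-check the device name first.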

vgcreate testvg /dev/sdb1 //create a VG on the partition

vgdisplay //show the created VG

//create lv1, lv2 and lv3, 5G each, one for each of the 3 OSDs added later; testvg is the VG created above

lvcreate -L 5G -n lv1 testvg

lvcreate -L 5G -n lv2 testvg

lvcreate -L 5G -n lv3 testvg

lvdisplay //show the created LVs

//format the LVs with ext4 so that they can store data

mkfs -t ext4 /dev/testvg/lv1

mkfs -t ext4 /dev/testvg/lv2

mkfs -t ext4 /dev/testvg/lv3
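The vgcreate/lvcreate/mkfs commands above differ only in the LV index, so they can be generated in a loop. A sketch that builds the same command lines as argument lists (the form `subprocess.run` expects) and only prints them, executing nothing; `lvm_commands` is a hypothetical name.

```python
import shlex
from typing import List


def lvm_commands(vg: str, pv: str, count: int, size: str) -> List[List[str]]:
    """vgcreate, then one lvcreate and one mkfs per LV (lv1..lvN)."""
    cmds = [["vgcreate", vg, pv]]
    for i in range(1, count + 1):
        cmds.append(["lvcreate", "-L", size, "-n", f"lv{i}", vg])
    for i in range(1, count + 1):
        cmds.append(["mkfs", "-t", "ext4", f"/dev/{vg}/lv{i}"])
    return cmds


# Print the commands for this post's layout; nothing is executed.
for cmd in lvm_commands("testvg", "/dev/sdb1", 3, "5G"):
    print(shlex.join(cmd))
```

Changing `count` or `size` adapts the sequence for more or larger OSDs.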

//the created LVs are under this directory:

root@slot-54141:/home/ceph-cluster# cd /dev/testvg/

root@slot-54141:/dev/testvg# ls

lv1 lv2 lv3

//create the following directories: /src/ceph/osd.0 etc. are the mount points for the LVs, the rest are needed later in the cluster deployment

mkdir -p /src/ceph/{osd.0,osd.1,osd.2,mon0,mds0}

//edit /etc/fstab and append the following lines so that the LVs are mounted on the directories above at boot:

/dev/testvg/lv1 /src/ceph/osd.0 ext4 defaults 1 1

/dev/testvg/lv2 /src/ceph/osd.1 ext4 defaults 1 1

/dev/testvg/lv3 /src/ceph/osd.2 ext4 defaults 1 1
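Each fstab line has six fields: device, mount point, filesystem type, mount options, dump flag and fsck pass number. A small sketch that generates the three lines above using this post's lvN → osd.(N-1) mapping; `fstab_lines` is a hypothetical helper.

```python
from typing import List


def fstab_lines(vg: str, mount_root: str, count: int, fstype: str = "ext4",
                options: str = "defaults", dump: int = 1, passno: int = 1) -> List[str]:
    """One fstab line per LV: lvN is mounted on <mount_root>/osd.(N-1)."""
    lines = []
    for i in range(count):
        device = f"/dev/{vg}/lv{i + 1}"
        mountpoint = f"{mount_root}/osd.{i}"
        # fields: device, mount point, fs type, options, dump, fsck pass
        lines.append(f"{device} {mountpoint} {fstype} {options} {dump} {passno}")
    return lines


print("\n".join(fstab_lines("testvg", "/src/ceph", 3)))
```

fstab(5) reserves fsck pass 1 for the root filesystem and suggests 2 for the others; the sketch keeps the `1 1` used above, and `passno=2` can be passed instead.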

//reboot and check whether the LVs were mounted automatically; lsblk output similar to the following means the mounts succeeded

root@slot-54141:/dev/testvg# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sdb 8:16 0 20G 0 disk

└─sdb1 8:17 0 20G 0 part

├─testvg-lv1 253:0 0 5G 0 lvm /src/ceph/osd.0

├─testvg-lv2 253:1 0 5G 0 lvm /src/ceph/osd.1

└─testvg-lv3 253:2 0 5G 0 lvm /src/ceph/osd.2

//set up the hostname: check /etc/hostname and the host IP, then map them in /etc/hosts:

root@slot-54141:/dev/testvg# cat /etc/hostname

slot-54141

root@slot-54141:/dev/testvg# ifconfig

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.29.129 netmask 255.255.255.0 broadcast 192.168.29.255

inet6 fe80::78a6:d2ff:58c9:7b0c prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:97:ea:ec txqueuelen 1000 (Ethernet)

RX packets 55887 bytes 75316103 (75.3 MB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 9915 bytes 633848 (633.8 KB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 58996 bytes 72794800 (72.7 MB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 58996 bytes 72794800 (72.7 MB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

root@slot-54141:/dev/testvg# cat /etc/hosts

127.0.0.1 localhost

192.168.29.129 slot-54141 //map the host IP to the hostname; leave the other entries unchanged

# The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

root@slot-54141:/dev/testvg#
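The hosts entry makes slot-54141 resolve to the ens33 address 192.168.29.129 rather than a loopback address, which matters because the ceph-deploy commands below refer to the node by that name. A quick check, sketched under the assumption that /etc/hosts uses the usual `IP name [aliases...]` layout; `lookup_host` is a hypothetical helper.

```python
from typing import Optional


def lookup_host(hosts_text: str, hostname: str) -> Optional[str]:
    """Return the first IP mapped to `hostname` in hosts-file text, or None."""
    for raw in hosts_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        ip, *names = line.split()
        if hostname in names:
            return ip
    return None
```

On the node, `lookup_host(open("/etc/hosts").read(), socket.gethostname())` should return 192.168.29.129 for the file above.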

//install the ceph-deploy tool to deploy the cluster quickly

apt install ceph-deploy

//start creating the cluster

cd /home

mkdir ceph-cluster //create the working directory

cd ceph-cluster

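ceph-deploy new slot-54141 //create a new cluster and write CLUSTER.conf (ceph.conf here), the keyring, etc.

//since we are working on a single node, the configuration file needs a few changes: "osd crush chooseleaf type = 0" lets CRUSH place replicas on different OSDs instead of different hosts, "osd pool default size = 1" keeps a single copy of each object, and "osd journal size" (in MB) only matters for FileStore OSDs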

echo "osd crush chooseleaf type = 0" >> ceph.conf

echo "osd pool default size = 1" >> ceph.conf

echo "osd journal size = 100" >> ceph.conf
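Appending with `echo >>` works because the ceph.conf written by `ceph-deploy new` contains only a [global] section, but running the commands twice appends duplicate lines. An idempotent alternative, sketched with Python's standard configparser; it assumes the options are spelled with spaces as above, and note that configparser drops comments when it rewrites the file. `apply_single_node` is a hypothetical name.

```python
import configparser
import io

# Same three options as the echo commands above.
SINGLE_NODE_OPTIONS = {
    "osd crush chooseleaf type": "0",  # CRUSH separates replicas by OSD, not by host
    "osd pool default size": "1",      # one copy of each object by default
    "osd journal size": "100",         # in MB; only used by FileStore OSDs
}


def apply_single_node(conf_text: str) -> str:
    """Return ceph.conf text with the single-node options set under [global]."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(conf_text)
    if not parser.has_section("global"):
        parser.add_section("global")
    for key, value in SINGLE_NODE_OPTIONS.items():
        parser.set("global", key, value)  # overwrites instead of appending twice
    out = io.StringIO()
    parser.write(out)
    return out.getvalue()
```

Read /home/ceph-cluster/ceph.conf into a string, pass it through `apply_single_node`, and write the result back before running `ceph-deploy install`.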

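//install the base packages (ceph, ceph-common, ceph-fs-common, ceph-mds)

ceph-deploy install slot-54141 //run in the working directory created above, /home/ceph-cluster

//create a cluster monitor (mon); this node acts as the primary mon node

ceph-deploy mon create slot-54141 //also run in the working directory

//gather the keys from the remote node into the current directory

ceph-deploy gatherkeys slot-54141 //run in the working directory

//add OSDs: lv1, lv2 and lv3 become osd.0, osd.1 and osd.2; the cluster can later be expanded by adding OSDs the same way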

ceph-deploy osd create slot-54141 --data /dev/testvg/lv1

ceph-deploy osd create slot-54141 --data /dev/testvg/lv2

ceph-deploy osd create slot-54141 --data /dev/testvg/lv3

//check the OSD status

root@slot-54141:/home/ceph-cluster# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 0.01469 root default

-3 0.01469 host slot-54141

0 hdd 0.00490 osd.0 up 1.00000 1.00000

1 hdd 0.00490 osd.1 up 1.00000 1.00000

2 hdd 0.00490 osd.2 up 1.00000 1.00000

root@slot-54141:/home/ceph-cluster#
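To check from a script that every OSD is up, the plain-text tree above can be parsed: each OSD row carries its `osd.N` name followed by its STATUS. A sketch that assumes that column order (the `--format json` output of `ceph osd tree` is a sturdier basis for real scripts); `osd_status` is a hypothetical helper and SAMPLE is copied from the output above.

```python
from typing import Dict


def osd_status(tree_text: str) -> Dict[str, str]:
    """Map each 'osd.N' row of plain-text `ceph osd tree` output to its STATUS."""
    status = {}
    for line in tree_text.splitlines():
        fields = line.split()
        for i, field in enumerate(fields[:-1]):
            if field.startswith("osd."):
                status[field] = fields[i + 1]  # STATUS follows NAME
                break
    return status


SAMPLE = """\
ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF
-1 0.01469 root default
-3 0.01469 host slot-54141
0 hdd 0.00490 osd.0 up 1.00000 1.00000
1 hdd 0.00490 osd.1 up 1.00000 1.00000
2 hdd 0.00490 osd.2 up 1.00000 1.00000
"""

states = osd_status(SAMPLE)
print(states, all(s == "up" for s in states.values()))
```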

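//check the cluster status; other components still need to be added next: an mgr (ceph-deploy mgr create slot-54141, run in the working directory), rules, and pools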

root@slot-54141:/home/ceph-cluster# ceph -s

cluster:

id: 7ed5c75c-1310-42a3-a565-29a624574310

health: HEALTH_WARN

mon is allowing insecure global_id reclaim

no active mgr

services:

mon: 1 daemons, quorum slot-54141 (age 2h)

mgr: no daemons active

osd: 3 osds: 3 up (since 13m), 3 in (since 2h)

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

root@slot-54141:/home/ceph-cluster#

//create the rgw gateway

ceph-deploy rgw create slot-54141 //run in /home/ceph-cluster

//create a user

radosgw-admin user create --uid chenyue --display-name chenyue --access-key 111 --secret-key 222

//show the created user's information

root@slot-54141:/var/run/ceph# radosgw-admin user info --uid chenyue

{

"user_id": "chenyue",

"display_name": "chenyue",

"email": "",

"suspended": 0,

"max_buckets": 1000,

"subusers": [],

"keys": [

{

"user": "chenyue",

"access_key": "111",

"secret_key": "222"

}

],

"swift_keys": [],

"caps": [],

"op_mask": "read, write, delete",

"default_placement": "",

"default_storage_class": "",

"placement_tags": [],

"bucket_quota": {

"enabled": false,

"check_on_raw": false,

"max_size": -1,

"max_size_kb": 0,

"max_objects": -1

},

"user_quota": {

"enabled": false,

"check_on_raw": false,

"max_size": -1,

"max_size_kb": 0,

"max_objects": -1

},

"temp_url_keys": [],

"type": "rgw",

"mfa_ids": []

}
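The access/secret key pair that s3cmd and boto3 use later sits in the `keys` array of this JSON. A sketch that extracts the pairs with the standard json module; `s3_keys` and `fetch_user_info` are hypothetical helpers, and the latter assumes radosgw-admin can be run on this node with the admin keyring, as in the session above.

```python
import json
import subprocess
from typing import List, Tuple


def s3_keys(user_info_json: str) -> List[Tuple[str, str]]:
    """(access_key, secret_key) pairs from `radosgw-admin user info` JSON."""
    info = json.loads(user_info_json)
    return [(k["access_key"], k["secret_key"]) for k in info.get("keys", [])]


def fetch_user_info(uid: str) -> str:
    # Runs the same command as above; needs radosgw-admin and the admin keyring.
    result = subprocess.run(["radosgw-admin", "user", "info", "--uid", uid],
                            capture_output=True, text=True, check=True)
    return result.stdout
```

For the user created above, `s3_keys(fetch_user_info("chenyue"))` should give `[("111", "222")]`.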

//check the port the rgw gateway listens on: 7480

root@slot-54141:/home/ceph-cluster# ps -aux | grep rgw

ceph 1110 0.2 1.4 6092592 58384 ? Ssl 05:38 0:16 /usr/bin/radosgw -f --cluster ceph --name client.rgw.slot-54141 --setuser ceph --setgroup ceph

root 15750 0.0 0.0 9040 720 pts/0 S+ 07:40 0:00 grep --color=auto rgw

root@slot-54141:/home/ceph-cluster# netstat -nap | grep 1110

tcp 0 0 0.0.0.0:7480 0.0.0.0:* LISTEN 1110/radosgw

tcp 0 0 192.168.29.129:51530 192.168.29.129:6824 ESTABLISHED 1110/radosgw

tcp 0 0 192.168.29.129:50250 192.168.29.129:3300 ESTABLISHED 1110/radosgw

tcp 0 0 192.168.29.129:51518 192.168.29.129:6824 ESTABLISHED 1110/radosgw

tcp 0 0 192.168.29.129:54990 192.168.29.129:6808 ESTABLISHED 1110/radosgw

tcp 0 0 192.168.29.129:55004 192.168.29.129:6808 ESTABLISHED 1110/radosgw

tcp 0 0 192.168.29.129:59986 192.168.29.129:6816 ESTABLISHED 1110/radosgw

tcp 0 0 192.168.29.129:39206 192.168.29.129:3300 ESTABLISHED 1110/radosgw

tcp 0 0 192.168.29.129:59976 192.168.29.129:6816 ESTABLISHED 1110/radosgw

tcp 0 0 192.168.29.129:51566 192.168.29.129:6800 ESTABLISHED 1110/radosgw

tcp 0 0 192.168.29.129:51562 192.168.29.129:6800 ESTABLISHED 1110/radosgw

tcp6 0 0 :::7480 :::* LISTEN 1110/radosgw

unix 2 [ ACC ] STREAM LISTENING 53845 1110/radosgw /var/run/ceph/ceph-client.rgw.slot-54141.1110.94773595740728.asok

unix 3 [ ] STREAM CONNECTED 62310 1110/radosgw

unix 3 [ ] STREAM CONNECTED 62318 1110/radosgw

unix 3 [ ] STREAM CONNECTED 53849 1110/radosgw

unix 3 [ ] STREAM CONNECTED 62319 1110/radosgw

unix 3 [ ] STREAM CONNECTED 50456 1110/radosgw

unix 3 [ ] STREAM CONNECTED 62312 1110/radosgw

unix 3 [ ] STREAM CONNECTED 62309 1110/radosgw

unix 3 [ ] STREAM CONNECTED 62313 1110/radosgw

unix 3 [ ] STREAM CONNECTED 53850 1110/radosgw
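Besides ps and netstat, a quick way to confirm the gateway accepts connections on 7480 is a plain TCP connect. A stdlib sketch; `port_open` is a hypothetical helper, and a successful connect only shows that something is listening, not that S3 requests will succeed.

```python
import socket


def port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, timed out, ...
        return False
```

For this node: `port_open("192.168.29.129", 7480)`.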

//install s3cmd, a command-line client for managing rgw (S3)

apt install s3cmd //on Ubuntu

//show the full help

s3cmd -h

//configure s3cmd

root@slot-54141:~# s3cmd --configure

Enter new values or accept defaults in brackets with Enter.

Refer to user manual for detailed description of all options.

Access key and Secret key are your identifiers for Amazon S3. Leave them empty for using the env variables.

Access Key [111]: 111

Secret Key [222]: 222

Default Region [US]:

Use "s3.amazonaws.com" for S3 Endpoint and not modify it to the target Amazon S3.

S3 Endpoint [192.168.29.129:7480]: 192.168.29.129:7480

Use "%(bucket)s.s3.amazonaws.com" to the target Amazon S3. "%(bucket)s" and "%(location)s" vars can be used

if the target S3 system supports dns based buckets.

DNS-style bucket+hostname:port template for accessing a bucket [192.168.29.129:7480/%(bucket)]: 192.168.29.129:7480/%(bucket)

Encryption password is used to protect your files from reading

by unauthorized persons while in transfer to S3

Encryption password:

Path to GPG program [/usr/bin/gpg]:

When using secure HTTPS protocol all communication with Amazon S3

servers is protected from 3rd party eavesdropping. This method is

slower than plain HTTP, and can only be proxied with Python 2.7 or newer

Use HTTPS protocol [No]: no

On some networks all internet access must go through a HTTP proxy.

Try setting it here if you can't connect to S3 directly

HTTP Proxy server name:

New settings:

Access Key: 111

Secret Key: 222

Default Region: US

S3 Endpoint: 192.168.29.129:7480

DNS-style bucket+hostname:port template for accessing a bucket: 192.168.29.129:7480/%(bucket)

Encryption password:

Path to GPG program: /usr/bin/gpg

Use HTTPS protocol: False

HTTP Proxy server name:

HTTP Proxy server port: 0

Test access with supplied credentials? [Y/n] y

Please wait, attempting to list all buckets...

Success. Your access key and secret key worked fine :-)

Now verifying that encryption works...

Not configured. Never mind.

Save settings? [y/N] y

Configuration saved to '/root/.s3cfg'

root@slot-54141:~#
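`s3cmd --configure` saves these answers to /root/.s3cfg, an INI-style file, so for repeatable setups the relevant part can be generated instead. A minimal sketch assuming s3cmd's option names `access_key`, `secret_key`, `host_base`, `host_bucket` and `use_https`, and that s3cmd falls back to defaults for options left out; giving `host_bucket` the same value as `host_base` (no bucket placeholder) is an assumption meant to keep requests path-style (http://host:port/bucket), which suits an RGW without wildcard DNS. `s3cfg_text` is a hypothetical name.

```python
import configparser
import io


def s3cfg_text(access_key: str, secret_key: str, endpoint: str,
               use_https: bool = False) -> str:
    """Minimal .s3cfg [default] section for an RGW endpoint such as host:7480."""
    cfg = configparser.ConfigParser(interpolation=None)  # values may contain '%'
    cfg["default"] = {
        "access_key": access_key,
        "secret_key": secret_key,
        "host_base": endpoint,
        # Assumption: without a %(bucket)s placeholder s3cmd uses path-style URLs.
        "host_bucket": endpoint,
        "use_https": "True" if use_https else "False",
    }
    out = io.StringIO()
    cfg.write(out)
    return out.getvalue()


print(s3cfg_text("111", "222", "192.168.29.129:7480"))
```

The output can be saved to a separate file and used with `s3cmd -c FILE ls`, leaving /root/.s3cfg untouched.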

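//use the s3cmd command line to create buckets and upload objects; each user can create multiple buckets

root@slot-54141:~# s3cmd mb s3://buck //create a bucket

Bucket 's3://buck/' created

root@slot-54141:~# s3cmd ls //list all buckets of the user configured with s3cmd --configure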

2023-04-23 14:59 s3://buck

root@slot-54141:~# cd /home/ceph-cluster/

root@slot-54141:/home/ceph-cluster# ls

ceph.bootstrap-mds.keyring ceph.bootstrap-osd.keyring ceph.client.admin.keyring ceph-deploy-ceph.log

ceph.bootstrap-mgr.keyring ceph.bootstrap-rgw.keyring ceph.conf ceph.mon.keyring

root@slot-54141:/home/ceph-cluster#


root@slot-54141:/home/ceph-cluster# stat ceph.conf

File: ceph.conf

Size: 281 Blocks: 8 IO Block: 4096 regular file

Device: 805h/2053d Inode: 669374 Links: 1

Access: (0644/-rw-r--r--) Uid: ( 0/ root) Gid: ( 0/ root)

Access: 2023-04-22 08:38:15.631242349 -0700

Modify: 2023-04-22 08:35:06.165967530 -0700

Change: 2023-04-22 08:35:06.169967922 -0700

Birth: -

root@slot-54141:/home/ceph-cluster# s3cmd put ceph.conf s3://buck //upload the file to the bucket

upload: 'ceph.conf' -> 's3://buck/ceph.conf' [1 of 1]

281 of 281 100% in 1s 213.52 B/s done

root@slot-54141:/home/ceph-cluster#

//list the files in the buckets (in rgw object storage they are called objects); s3cmd la lists the objects in all buckets

root@slot-54141:/home/ceph-cluster# s3cmd la

2023-04-23 15:01 281 s3://buck/ceph.conf

root@slot-54141:/home/ceph-cluster#

//the native ceph df and rados commands show that the uploaded object is stored in the default.rgw.buckets.data pool

root@slot-54141:/home/ceph-cluster# ceph df

--- RAW STORAGE ---

CLASS SIZE AVAIL USED RAW USED %RAW USED

hdd 15 GiB 12 GiB 263 MiB 3.3 GiB 21.71

TOTAL 15 GiB 12 GiB 263 MiB 3.3 GiB 21.71

--- POOLS ---

POOL ID PGS STORED OBJECTS USED %USED MAX AVAIL

device_health_metrics 1 1 0 B 0 0 B 0 11 GiB

repool 2 32 0 B 0 0 B 0 3.7 GiB

.rgw.root 3 32 1.3 KiB 4 256 KiB 0 11 GiB

default.rgw.log 4 32 3.4 KiB 207 2 MiB 0.02 11 GiB

default.rgw.control 5 32 0 B 8 0 B 0 11 GiB

default.rgw.meta 6 8 682 B 5 256 KiB 0 11 GiB

default.rgw.buckets.index 7 8 0 B 11 0 B 0 3.7 GiB

default.rgw.buckets.data 8 32 281 B 1 192 KiB 0 3.7 GiB

root@slot-54141:/home/ceph-cluster#

root@slot-54141:/home/ceph-cluster# rados -p default.rgw.buckets.data ls

4ef24692-9293-4a77-9a5a-d891d5e354a3.14126.1_ceph.conf //the uploaded file
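In the listing above, the RADOS object name is the bucket marker (4ef24692-9293-4a77-9a5a-d891d5e354a3.14126.1), an underscore, and then the S3 object key. A sketch that splits such a name, assuming the marker contains no underscore and the name belongs to a plain head object (multipart and shadow objects use longer names); `split_rados_name` is a hypothetical helper.

```python
from typing import Tuple


def split_rados_name(rados_name: str) -> Tuple[str, str]:
    """Split '<bucket marker>_<object key>' at the first underscore."""
    marker, sep, key = rados_name.partition("_")
    if not sep:
        raise ValueError(f"no '_' separator in {rados_name!r}")
    return marker, key
```

The marker part should match the "marker" field reported by `radosgw-admin bucket stats --bucket buck`.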

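//show the details of the uploaded object

root@slot-54141:/home/ceph-cluster# radosgw-admin object stat --bucket buck --object ceph.conf //buck is the bucket name, ceph.conf the object name

//install the boto3 library on the VM to access the cluster through the SDK:

apt install python3-pip

pip3 install boto3 //wait for the installation to finish

//boto3 S3 SDK reference: https://boto3.amazonaws.com/v1/documentation/api/latest/reference/services/s3.html

//e.g. write a Python test script that calls the boto3 S3 SDK to list all buckets of a given user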

root@slot-54141:/home/chenyue# cat s3_test.py

#!/usr/bin/env python3
# coding=utf-8

import boto3


def main():
    access_key = '111'  # access key (ak) of the rgw user
    secret_key = '222'  # secret key (sk) of the rgw user
    s3_host = 'http://192.168.29.129:7480'  # rgw instance endpoint
    bucket_name = 'buck'

    s3client = boto3.client('s3',
                            aws_secret_access_key=secret_key,
                            aws_access_key_id=access_key,
                            endpoint_url=s3_host)

    response = s3client.list_buckets()  # call the boto3 S3 list-buckets API
    print(response)


if __name__ == '__main__':
    main()

root@slot-54141:/home/chenyue#

root@slot-54141:/home/chenyue# python3 s3_test.py

{'ResponseMetadata': {'RequestId': 'tx00000715ff1f45e8dcab6-0064473113-12176-default', 'HostId': '', 'HTTPStatusCode': 200, 'HTTPHeaders': {'transfer-encoding': 'chunked', 'x-amz-request-id': 'tx00000715ff1f45e8dcab6-0064473113-12176-default', 'content-type': 'application/xml', 'date': 'Tue, 25 Apr 2023 01:46:59 GMT', 'connection': 'Keep-Alive'}, 'RetryAttempts': 0}, 'Buckets': [{'Name': 'buck', 'CreationDate': datetime.datetime(2023, 4, 23, 14, 59, 35, 809000, tzinfo=tzutc())}], 'Owner': {'DisplayName': 'chenyue', 'ID': 'chenyue'}}

root@slot-54141:/home/chenyue#
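The response printed above is a plain Python dict, so the bucket names can be read from its `Buckets` list. A sketch; `bucket_names` is a hypothetical helper that only needs the dict, so it can be tried without a live cluster.

```python
from typing import Any, Dict, List


def bucket_names(response: Dict[str, Any]) -> List[str]:
    """Bucket names from a boto3 `list_buckets()` response dict."""
    status = response.get("ResponseMetadata", {}).get("HTTPStatusCode")
    if status != 200:
        raise RuntimeError(f"list_buckets returned HTTP status {status}")
    return [bucket["Name"] for bucket in response.get("Buckets", [])]
```

In the script above, `print(bucket_names(s3client.list_buckets()))` would print `['buck']`.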

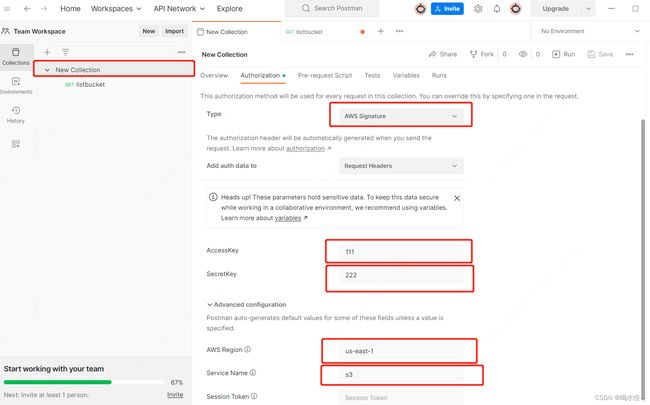

RGW can also be accessed through its RESTful API:

using the Postman tool, download: https://www.postman.com/downloads/

Usage after installation:
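Every REST request to RGW must be signed with the user's keys (Postman offers an "AWS Signature" authorization type for this). To show what a signature involves, here is a stdlib-only sketch of the older AWS Signature Version 2 for a simple request with no Content-MD5, Content-Type or x-amz-* headers; it is an illustration under those assumptions, and Signature Version 4, which current SDKs use by default, is more involved. `sigv2_headers` is a hypothetical helper.

```python
import base64
import hashlib
import hmac
from email.utils import formatdate
from typing import Dict, Optional


def sigv2_headers(access_key: str, secret_key: str, method: str = "GET",
                  resource: str = "/", date: Optional[str] = None) -> Dict[str, str]:
    """Date and Authorization headers for a V2-signed request without a body."""
    # RFC 1123 date in GMT, e.g. "Tue, 25 Apr 2023 01:46:59 GMT"
    date = date or formatdate(usegmt=True)
    # StringToSign = Verb \n Content-MD5 \n Content-Type \n Date \n Resource
    # (MD5 and type are empty, and there are no x-amz-* headers to add)
    string_to_sign = f"{method}\n\n\n{date}\n{resource}"
    digest = hmac.new(secret_key.encode(), string_to_sign.encode(), hashlib.sha1).digest()
    signature = base64.b64encode(digest).decode()
    return {"Date": date, "Authorization": f"AWS {access_key}:{signature}"}
```

With resource "/" this signs a list-buckets request; the headers can be passed to `urllib.request.Request("http://192.168.29.129:7480/", headers=...)` or copied into Postman as plain headers.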

Deployment reference blog: https://www.jianshu.com/p/e9e331d53bb9